Environment setup (Colab)

from google.colab import drive

drive.mount('/content/gdrive')

Set the folder path

workspace_path = '/content/gdrive/경로'  # 경로 ("path") is a placeholder for your own Drive folder

Load the required packages

import cv2

import os

import torch

import random

import numpy as np

import torch.nn as nn

import torch.optim as optim

import matplotlib.pyplot as plt

import torch.nn.functional as F

from torchvision import datasets, transforms

from PIL import Image

Object Detection (YOLOv3)

Download the YOLOv3 code (Darknet)

!git clone https://github.com/AlexeyAB/darknet.git

Enable GPU (CUDA, cuDNN) and OpenCV support for Darknet in Colab

%cd darknet

!sed -i 's/GPU=0/GPU=1/' Makefile

!sed -i 's/CUDNN=0/CUDNN=1/' Makefile

!sed -i 's/OPENCV=0/OPENCV=1/' Makefile
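The three `sed -i` calls above edit Darknet's Makefile in place. Before `make` runs, they switch the `GPU` (CUDA), `CUDNN`, and `OPENCV` build flags from 0 to 1.

The snippet below is a minimal sketch of the same substitution in Python. It rewrites only whole `FLAG=0` lines. The sample Makefile text is a hypothetical excerpt, not copied from the repository.

```python
import re


def enable_flags(makefile_text, flags):
    """Turn each whole-line FLAG=0 into FLAG=1, like `sed -i 's/FLAG=0/FLAG=1/'`."""
    for flag in flags:
        # ^ and $ with re.MULTILINE restrict the match to a line that is exactly FLAG=0
        pattern = rf"^{re.escape(flag)}=0$"
        makefile_text = re.sub(pattern, f"{flag}=1", makefile_text, flags=re.MULTILINE)
    return makefile_text


# Hypothetical excerpt of the build switches at the top of a Darknet Makefile
sample = "GPU=0\nCUDNN=0\nCUDNN_HALF=0\nOPENCV=0\nAVX=0\n"
print(enable_flags(sample, ["GPU", "CUDNN", "OPENCV"]))
```

Flags that are not listed, such as `CUDNN_HALF` here, are left unchanged.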

!make

Download the pretrained YOLOv3 weights

!wget https://pjreddie.com/media/files/yolov3.weights

Run inference on dog.jpg

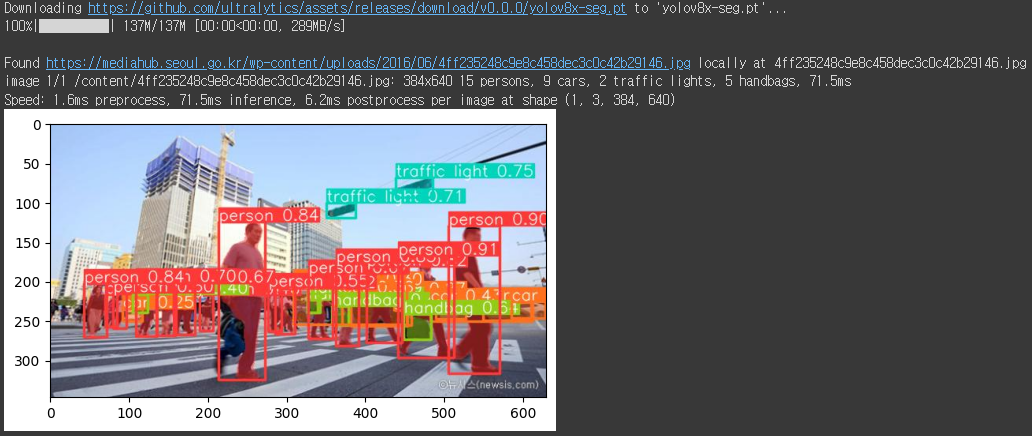

!./darknet detect cfg/yolov3.cfg yolov3.weights data/dog.jpg

Object Detection (YOLOv8)

%cd /content

!pip install ultralytics

from ultralytics import YOLO

import io ## https://github.com/ultralytics/ultralytics

from urllib import request

test_url = 'https://mediahub.seoul.go.kr/wp-content/uploads/2016/06/4ff235248c9e8c458dec3c0c42b29146.jpg'

img = Image.open(io.BytesIO(request.urlopen(test_url).read()))

plt.imshow(img)

plt.show()

model = YOLO('yolov8m.pt')

results = model.predict(source=test_url)

#results = model.track(source=test_url) ## Tracking
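`model.predict` returns a list with one `Results` object per image. The loop below stores `r.boxes` but never reads it. The box data is available as follows:

- corners: `r.boxes.xyxy`
- confidences: `r.boxes.conf`
- class ids: `r.boxes.cls`
- class names: `r.names`

The sketch below turns those values into readable rows. It works on plain lists, so it runs without a model. The 0.5 cutoff and the sample values are assumptions.

```python
def summarize_detections(xyxy, conf, cls, names, min_conf=0.5):
    """Return (label, confidence, box) tuples at or above min_conf, most confident first."""
    rows = []
    for box, score, class_id in zip(xyxy, conf, cls):
        if score >= min_conf:
            label = names[int(class_id)]  # class ids arrive as floats
            rows.append((label, round(float(score), 2), [round(v, 1) for v in box]))
    # Sort by confidence, highest first
    return sorted(rows, key=lambda row: row[1], reverse=True)


# Hypothetical values standing in for one image's predictions
names = {0: "person", 2: "car"}
xyxy = [[10.0, 20.0, 110.0, 220.0], [300.0, 50.0, 420.0, 160.0], [5.0, 5.0, 15.0, 15.0]]
conf = [0.91, 0.78, 0.30]
cls = [0.0, 2.0, 0.0]

for row in summarize_detections(xyxy, conf, cls, names):
    print(row)
```

In the notebook, the inputs for each `r` in `results` would be:

- `r.boxes.xyxy.tolist()`
- `r.boxes.conf.tolist()`
- `r.boxes.cls.tolist()`
- `r.names`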

for r in results:
    box = r.boxes  # Boxes object (xyxy, conf, cls); not used further here
    im_array = r.plot()  # plot a BGR numpy array of predictions
    im = Image.fromarray(im_array[..., ::-1])  # RGB PIL image
    im.save('results.jpg')
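`r.plot()` returns the annotated image as a NumPy array in BGR channel order, the OpenCV convention. `Image.fromarray` treats a 3-channel array as RGB. That mismatch is why the loop applies the `[..., ::-1]` slice, which reverses the last (channel) axis.

Here is a tiny illustration on a hypothetical two-pixel image:

```python
import numpy as np

# Hypothetical 1x2 image in BGR order: a pure-blue pixel and a pure-red pixel
bgr = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)

# Reverse the last (channel) axis: B, G, R -> R, G, B
rgb = bgr[..., ::-1]

print(rgb[0, 0].tolist())  # blue pixel in RGB order: [0, 0, 255]
print(rgb[0, 1].tolist())  # red pixel in RGB order: [255, 0, 0]

# The slice is a view with a negative stride; make a contiguous copy if a library needs one
rgb_copy = np.ascontiguousarray(rgb)
```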

predict_img = Image.open('results.jpg')

plt.imshow(predict_img)

plt.show()

Result:

- Besides object detection, YOLOv8 supports classification, instance segmentation, pose estimation, and tracking.
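Each task is served by its own checkpoint, chosen by file name. This notebook uses `yolov8m.pt` for detection and `yolov8x-seg.pt` for segmentation.

The sketch below captures that naming pattern: a size letter (`n`/`s`/`m`/`l`/`x`) plus a task suffix. The suffixes are assumed from the YOLOv8 release names. Tracking is assumed to have no checkpoint of its own; it instead calls `model.track()` on a detection, segmentation, or pose model.

```python
# Assumed suffix Ultralytics appends to YOLOv8 weight names for each task
TASK_SUFFIX = {
    "detect": "",
    "segment": "-seg",
    "classify": "-cls",
    "pose": "-pose",
}
SIZES = ("n", "s", "m", "l", "x")  # nano, small, medium, large, extra-large


def yolov8_weights(task, size="n"):
    """Build a YOLOv8 checkpoint file name such as 'yolov8x-seg.pt'."""
    if task not in TASK_SUFFIX:
        raise ValueError(f"unknown task {task!r}; expected one of {sorted(TASK_SUFFIX)}")
    if size not in SIZES:
        raise ValueError(f"size must be one of {SIZES}")
    return f"yolov8{size}{TASK_SUFFIX[task]}.pt"


print(yolov8_weights("detect", "m"))   # the detection weights used above
print(yolov8_weights("segment", "x"))  # the segmentation weights used below
print(yolov8_weights("pose"))
```

Passing the returned name to `YOLO(...)` loads that checkpoint, downloading it on first use, as `YOLO('yolov8m.pt')` does above.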

YOLOv8 Seg (instance segmentation)

model = YOLO('yolov8x-seg.pt')

results = model.predict(source=test_url)

for r in results:
    im_array = r.plot()  # plot a BGR numpy array of predictions (boxes and masks)
    im = Image.fromarray(im_array[..., ::-1])  # RGB PIL image
    im.save('seg_results.jpg')
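For segmentation, each `Results` object also carries per-instance masks. `r.masks.data` is an N×H×W tensor for N instances, and `r.masks` is `None` when nothing is found. The masks may be at the model's inference resolution rather than the original image size.

The sketch below measures how much of the mask frame each instance covers. It is written on plain nested lists, and the masks are hypothetical.

```python
def mask_areas(masks):
    """Count foreground pixels in each binary mask (a list of 2-D 0/1 grids)."""
    return [sum(sum(1 for v in row if v) for row in mask) for mask in masks]


def area_fractions(masks):
    """Fraction of the mask frame (H * W) covered by each instance."""
    fractions = []
    for mask, area in zip(masks, mask_areas(masks)):
        total = len(mask) * len(mask[0])  # H * W
        fractions.append(area / total)
    return fractions


# Two hypothetical 3x4 instance masks
masks = [
    [[0, 1, 1, 0],
     [0, 1, 1, 0],
     [0, 0, 0, 0]],
    [[1, 1, 1, 1],
     [1, 1, 1, 1],
     [0, 0, 0, 0]],
]
print(mask_areas(masks))
print(area_fractions(masks))
```

With a real result, you could pass in `r.masks.data.cpu().numpy()`. Alternatively, `r.masks.data.sum(dim=(1, 2))` counts the pixels directly on the tensor.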

predict_img = Image.open('seg_results.jpg')

plt.imshow(predict_img)

plt.show()

Result:

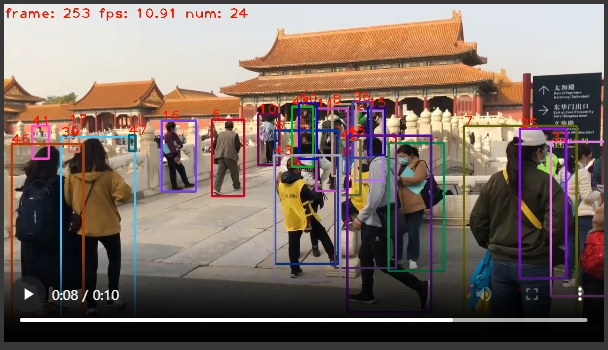

Object Tracking (ByteTrack)
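ByteTrack's core idea (BYTE) is to keep low-confidence detection boxes instead of discarding them. Association runs in two stages:

1. Existing tracks are matched to high-score detections.
2. Tracks still unmatched are matched to low-score detections, which often correspond to occluded objects.

The sketch below is simplified and labeled as such. It uses greedy IoU matching rather than solving a linear assignment problem; the real tracker does the latter, presumably one reason `lap` is installed below. It also omits Kalman-filter motion prediction. The thresholds are assumed values for illustration.

```python
def iou(a, b):
    """Intersection over union of two [x1, y1, x2, y2] boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(track_boxes, det_boxes, min_iou):
    """Greedily pair track and detection indices by descending IoU."""
    candidates = sorted(
        ((iou(t, d), ti, di)
         for ti, t in enumerate(track_boxes)
         for di, d in enumerate(det_boxes)),
        reverse=True,
    )
    used_tracks, used_dets, matches = set(), set(), []
    for overlap, ti, di in candidates:
        if overlap < min_iou:
            break  # remaining candidates overlap even less
        if ti not in used_tracks and di not in used_dets:
            used_tracks.add(ti)
            used_dets.add(di)
            matches.append((ti, di))
    return matches


def byte_associate(tracks, dets, high=0.5, low=0.1, min_iou=0.3):
    """Two-stage BYTE-style association (simplified).

    tracks: {track_id: box}; dets: [(box, score)]. Returns {track_id: det_index}.
    """
    high_idx = [i for i, (_, s) in enumerate(dets) if s >= high]
    low_idx = [i for i, (_, s) in enumerate(dets) if low <= s < high]
    ids = list(tracks)
    result = {}
    # Stage 1: all tracks vs. high-score detections
    for ti, k in greedy_match([tracks[i] for i in ids], [dets[i][0] for i in high_idx], min_iou):
        result[ids[ti]] = high_idx[k]
    # Stage 2: still-unmatched tracks vs. low-score detections
    rest = [i for i in ids if i not in result]
    for ti, k in greedy_match([tracks[i] for i in rest], [dets[i][0] for i in low_idx], min_iou):
        result[rest[ti]] = low_idx[k]
    return result


# Hypothetical state: two existing tracks and three detections in the new frame
tracks = {1: [0, 0, 10, 10], 2: [20, 20, 30, 30]}
dets = [
    ([1, 1, 11, 11], 0.9),     # confident detection near track 1
    ([21, 21, 31, 31], 0.2),   # low-score detection near track 2 (e.g. partly occluded)
    ([50, 50, 60, 60], 0.05),  # below the low threshold: ignored
]
print(byte_associate(tracks, dets))  # {1: 0, 2: 1}
```

Two further steps of the real tracker are left out here:

- Leftover high-score detections start new tracks.
- Unmatched tracks are kept as lost for a number of frames.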

Download the ByteTrack code

# == Download the repo content and install dependencies ==

%cd /content

!git clone https://github.com/ifzhang/ByteTrack.git

%cd /content/ByteTrack/

%mkdir pretrained

%cd pretrained

# == Download pretrained X model weights ==

!gdown --id "1P4mY0Yyd3PPTybgZkjMYhFri88nTmJX5"

Download the COCO API and install packages

# == Install dependencies ==

!pip3 install cython

!pip3 install 'git+https://github.com/cocodataset/cocoapi.git#subdirectory=PythonAPI'

!pip3 install cython_bbox

!pip3 install loguru

!pip3 install thop

!pip3 install lap

%cd /content/ByteTrack/

!pip3 install -r requirements.txt

# == Install ByteTrack ==

!python3 setup.py develop

Run the tracking code

# Run the inference demo (can be slow on Colab). The cell output is redirected to 'log.txt' so the next cell can find the saved result video; remove the redirect to see the output directly

%cd /content/ByteTrack

!python3 tools/demo_track.py video -f exps/example/mot/yolox_x_mix_det.py -c pretrained/bytetrack_x_mot17.pth.tar --fp16 --fuse --save_result &> log.txt

# == Get rendered result video file path ==

import re

%cd /content/ByteTrack

with open('log.txt', 'r') as file:
    text = file.read().replace('\n', '')  # join wrapped lines so the regex sees one string

m = re.search(r'video save_path is \./(.+?)\.mp4', text)  # find the path of the saved .mp4 file

print(m)
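The lookup above joins the log into one string. It then captures everything between `video save_path is ./` and `.mp4`.

Wrapped in a function, the same logic can be checked against a sample line. The log line below is hypothetical; the real path presumably depends on the experiment's output folder and the run's timestamp.

```python
import re

SAVE_PATH_RE = re.compile(r"video save_path is \./(.+?)\.mp4")


def find_saved_video(log_text, root="/content/ByteTrack/"):
    """Return the absolute .mp4 path reported in the log, or None if absent."""
    m = SAVE_PATH_RE.search(log_text.replace("\n", ""))
    return root + m.group(1) + ".mp4" if m else None


# Hypothetical log content
sample_log = "INFO | video save_path is ./YOLOX_outputs/example/track_vis/2024_01_01_00_00_00/palace.mp4\n"
print(find_saved_video(sample_log))
print(find_saved_video("no video was written"))
```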

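if m:
    found = '/content/ByteTrack/' + m.group(1) + ".mp4"
else:
    raise RuntimeError('No "video save_path" line found in log.txt; check the log for errors')

found

Convert the MP4 to WebM so the video can be played in Colab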

# == Convert mp4 to webm video file for proper display on colab ==

%cd /content/ByteTrack

!ffmpeg -i $found -vcodec vp9 out.webm

Play the WebM file

# == Display results ==

from IPython.display import HTML

from base64 import b64encode

video_path = '/content/ByteTrack/out.webm'

with open(video_path, "rb") as f:
    mp4 = f.read()  # raw bytes of out.webm (variable name kept from the MP4 version)

data_url = "data:video/webm;base64," + b64encode(mp4).decode()
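`data_url` embeds the whole video in the notebook output as text. It consists of the MIME type, then `;base64,`, then the base64-encoded bytes.

Base64 emits 4 characters for every 3 input bytes. The embedded video is therefore about a third larger than the file, which is worth keeping in mind for long clips.

Here is a minimal helper that does the same construction:

```python
from base64 import b64encode


def to_data_url(payload: bytes, mime: str) -> str:
    """Embed raw bytes in a data: URL that a <video> or <img> tag can load."""
    return f"data:{mime};base64," + b64encode(payload).decode("ascii")


print(to_data_url(b"abc", "video/webm"))  # data:video/webm;base64,YWJj
```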

HTML(f"""

<video width=600 controls>

<source src="{data_url}" type="video/webm">

</video>

""")

Result: