[ABSA-CL] Learning Implicit Sentiment in ABSA with Supervised Contrastive Pre-training

Training Methodology

Supervised Contrastive Pre-training

a. Transformer Encoder backbone

x_i : each review sentence

y_i : label of each sentence

D={x_1, ..., x_n}

I_i=[CLS]+x_i+[SEP]

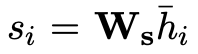

h_i bar=TransEnc(I_i)

b. Supervised Contrastive Learning

h_i bar : sentence representation of x_i

W_s : trainable sentiment perceptron

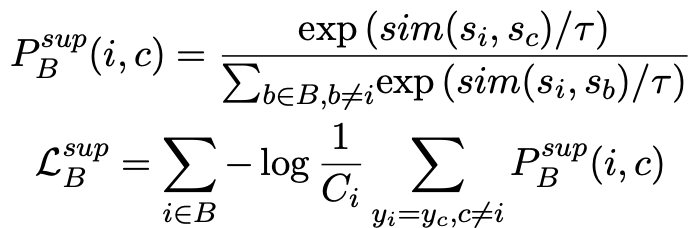

: likelihood that s_c is most similar to s_i

tau : temperature of softmax

: similarity metric

: Supervised contrastive loss

: number of samples in the same category y_i in B

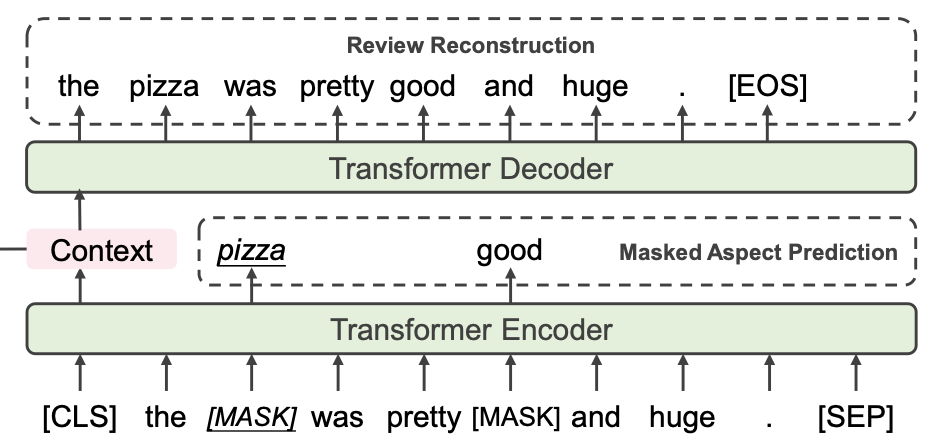
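The batch-level loss defined above can be sketched in plain Python. This is a minimal sketch, not the paper's implementation. It assumes cosine similarity as the similarity metric, and it assumes the loss for each anchor is the negative log of the mean likelihood over the same-label samples in B; the exact formula is the one in the figure. The names `cosine`, `sup_con_loss` and the toy vectors are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def sup_con_loss(reps, labels, tau=0.1):
    """Supervised contrastive loss summed over a batch.

    reps   : list of sentiment representations s_i (lists of floats)
    labels : list of sentiment labels y_i
    tau    : softmax temperature
    """
    total = 0.0
    n = len(reps)
    for i in range(n):
        # Temperature-scaled similarity of anchor i to every other sample.
        logits = {b: cosine(reps[i], reps[b]) / tau for b in range(n) if b != i}
        denom = sum(math.exp(v) for v in logits.values())
        positives = [c for c in logits if labels[c] == labels[i]]
        if not positives:
            # Assumption: anchors with no same-label partner are skipped.
            continue
        # Mean likelihood that a same-label sample is the most similar one.
        p_mean = sum(math.exp(logits[c]) / denom for c in positives) / len(positives)
        total += -math.log(p_mean)
    return total

# Toy batch: two clusters of representations.
reps = [[1.0, 0.0], [0.9, 0.1], [-1.0, 0.0], [-0.9, -0.1]]
aligned = sup_con_loss(reps, ["pos", "pos", "neg", "neg"])
shuffled = sup_con_loss(reps, ["pos", "neg", "pos", "neg"])
```

When the labels agree with the clusters (`aligned`), the loss is smaller than when they cut across them (`shuffled`), which is the behavior the pre-training objective relies on.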

c. Review Reconstruction

- Inspired by the denoising autoencoder.

d. Masked Aspect Prediction

- The model learns to predict the masked aspect from a corrupted version of each review.

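- Aspect Span Masking (ASM)

Each token in an aspect span is masked with 80% probability or replaced with a random token with 10% probability.

- Random Masking

If the proportion of masked tokens after ASM is below 15%, additional tokens are masked to reach that proportion.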

- Difference from other MLM (Devlin et al., 2019), sentiment masking (Tian et al., 2020), and masked aspect prediction approaches: ours focuses more on modeling aspect-related context information.
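The two masking steps can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' code: the remaining 10% of aspect tokens are assumed to stay unchanged (as in BERT), the 15% threshold is counted over all positions selected for prediction, and the top-up step simply replaces extra tokens with `[MASK]`. The function `mask_aspects` and its parameters are hypothetical names.

```python
import math
import random

MASK = "[MASK]"

def mask_aspects(tokens, aspect_spans, vocab, min_ratio=0.15, rng=None):
    """Corrupt a tokenized review for masked aspect prediction.

    tokens       : list of tokens
    aspect_spans : list of (start, end) index pairs, end exclusive
    vocab        : tokens to draw random replacements from
    Returns (corrupted tokens, sorted positions the model must predict).
    """
    rng = rng or random.Random()
    corrupted = list(tokens)
    targets = set()

    # Aspect span masking: 80% [MASK], 10% random token.
    # Assumption: the remaining 10% keep the original token.
    for start, end in aspect_spans:
        for pos in range(start, end):
            targets.add(pos)
            r = rng.random()
            if r < 0.8:
                corrupted[pos] = MASK
            elif r < 0.9:
                corrupted[pos] = rng.choice(vocab)

    # Random masking: top up until at least min_ratio of tokens are selected.
    needed = math.ceil(min_ratio * len(tokens)) - len(targets)
    others = [p for p in range(len(tokens)) if p not in targets]
    rng.shuffle(others)
    for pos in others[:max(0, needed)]:
        targets.add(pos)
        corrupted[pos] = MASK

    return corrupted, sorted(targets)

tokens = "the battery life is great but the screen is dim".split()
corrupted, targets = mask_aspects(
    tokens, [(1, 3), (7, 8)], vocab=["food", "price"], rng=random.Random(0)
)
```

Here the three aspect tokens already exceed 15% of the ten tokens, so no extra random masking is applied.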

e. Joint Training

Aspect-Aware Fine-tuning

x_ab: sentences in D_ab

w_a: one of the aspects appearing in x_ab

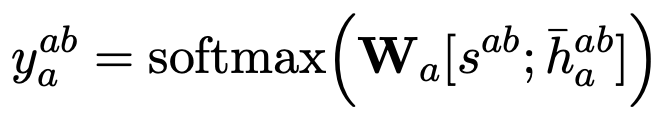

y_ab: the sentiment the model predicts for that aspect

h^ab_a bar: aspect-based representation

s^ab: sentiment representation

a. Aspect-based Representation

- Ethayarajh (2019): research on pre-trained contextualized word representations showed that they can capture context information related to the word.

-> The final hidden states corresponding to each word of w_a are collected to extract the aspect-based representation h^ab_a bar.

b. Representation Combination

- The same sentiment perceptron W_s is used to obtain the sentiment representation s^ab from the sentence representation. Then s^ab and the aspect-based representation h^ab_a bar are concatenated to predict the aspect-level sentiment polarity.

- W_a: trainable parameter matrix.
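The combination step can be sketched in plain Python. This is a minimal sketch under several assumptions that the notes do not state: the sentence representation is taken to be the `[CLS]` hidden state, the aspect hidden states are "collected" by mean pooling, and the prediction is a softmax over W_a applied to the concatenated vector. All helper names and the toy weights are hypothetical.

```python
import math

def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def softmax(z):
    """Numerically stable softmax."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def mean_pool(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def predict_polarity(hidden, aspect_positions, W_s, W_a):
    """hidden: final hidden states, one vector per token; hidden[0] is [CLS]."""
    # Assumption: the sentence representation is the [CLS] state.
    s_ab = matvec(W_s, hidden[0])
    # Assumption: aspect token states are combined by mean pooling.
    h_ab = mean_pool([hidden[p] for p in aspect_positions])
    # Concatenate s^ab and the aspect-based representation, then classify.
    return softmax(matvec(W_a, s_ab + h_ab))

# Toy example: 4 tokens, hidden size 2, 3 polarity classes.
hidden = [[0.2, -0.1], [0.5, 0.3], [0.1, 0.9], [-0.4, 0.2]]
W_s = [[1.0, 0.0], [0.0, 1.0]]
W_a = [[0.3, -0.2, 0.5, 0.1], [-0.1, 0.4, 0.0, 0.2], [0.2, 0.2, -0.3, 0.6]]
probs = predict_polarity(hidden, aspect_positions=[1, 2], W_s=W_s, W_a=W_a)
```

Because W_s is shared with pre-training, only W_a is new at this stage in this sketch.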

Experimental Settings

a. ABSA Dataset

b. Retrieved External Corpora

- Only 5-star and 1-star reviews are collected from Yelp/Amazon and split into sentences. The polarity of the aspects in each sentence follows the review rating.
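The labeling rule above can be sketched as follows. This is a minimal sketch, assuming a naive regex sentence splitter and the label names `positive` / `negative`; neither is taken from the paper, and `label_review_sentences` is a hypothetical name.

```python
import re

# Only the extreme ratings are kept; everything else is discarded.
STAR_TO_POLARITY = {5: "positive", 1: "negative"}

def label_review_sentences(review_text, stars):
    """Split a review into sentences and give each the review-level polarity.

    Returns an empty list for reviews that are not 1-star or 5-star.
    """
    polarity = STAR_TO_POLARITY.get(stars)
    if polarity is None:
        return []
    # Assumption: split on ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", review_text.strip())
    return [(s, polarity) for s in sentences if s]

labeled = label_review_sentences("Great food. Slow service!", stars=5)
skipped = label_review_sentences("It was fine.", stars=3)
```

In the toy review, "Slow service!" inherits the positive label from the 5-star rating, which shows that labels obtained this way are noisy at the sentence level.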