Using the article below as a reference, I try out Cluster API on a Docker-based setup.

Installing clusterctl

linux

❯ curl -L https://github.com/kubernetes-sigs/cluster-api/releases/download/v1.1.2/clusterctl-linux-amd64 -o clusterctl

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 661 100 661 0 0 2111 0 --:--:-- --:--:-- --:--:-- 2105

100 59.0M 100 59.0M 0 0 461k 0 0:02:10 0:02:10 --:--:-- 152k

❯ ls

clusterctl

❯ chmod +x ./clusterctl

❯ sudo mv ./clusterctl /usr/local/bin/clusterctl
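The download URL above is hard-coded for linux/amd64. The following is a minimal sketch for choosing the right asset on other machines. It assumes that release assets follow the same `clusterctl-<os>-<arch>` pattern as that URL, so check the release page for the combinations that actually exist. `clusterctl_url` is a hypothetical helper name.

```shell
# Hypothetical helper: build the clusterctl download URL for a version, OS and CPU.
# Assumes release assets are named clusterctl-<os>-<arch>, like the linux/amd64 URL above.
clusterctl_url() {
  version="$1"  # release tag, e.g. v1.1.2
  os="$2"       # output of `uname -s`, e.g. Linux or Darwin
  machine="$3"  # output of `uname -m`, e.g. x86_64 or arm64

  # Map uname's OS name to the lowercase name used in asset file names.
  case "$os" in
    Linux)  os=linux ;;
    Darwin) os=darwin ;;
    *) echo "unsupported OS: $os" >&2; return 1 ;;
  esac

  # Map uname's machine name to Go-style architecture names.
  case "$machine" in
    x86_64|amd64)  arch=amd64 ;;
    aarch64|arm64) arch=arm64 ;;
    *) echo "unsupported CPU: $machine" >&2; return 1 ;;
  esac

  echo "https://github.com/kubernetes-sigs/cluster-api/releases/download/${version}/clusterctl-${os}-${arch}"
}
```

For example, `curl -L "$(clusterctl_url v1.1.2 "$(uname -s)" "$(uname -m)")" -o clusterctl` reproduces the download above on a Linux x86_64 host.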

❯ clusterctl version

clusterctl version: &version.Info{Major:"1", Minor:"1", GitVersion:"v1.1.2", GitCommit:"3433f7b769b4e7f5cb899b2742a5a8a1a9f51b3e", GitTreeState:"clean", BuildDate:"2022-02-17T19:14:59Z", GoVersion:"go1.17.3", Compiler:"gc", Platform:"linux/amd64"}

macOS

❯ brew install clusterctl

❯ clusterctl version

Initialization for common providers

❯ clusterctl init --infrastructure docker

Fetching providers

Installing cert-manager Version="v1.5.3"

Waiting for cert-manager to be available...

Installing Provider="cluster-api" Version="v1.1.2" TargetNamespace="capi-system"

Installing Provider="bootstrap-kubeadm" Version="v1.1.2" TargetNamespace="capi-kubeadm-bootstrap-system"

Installing Provider="control-plane-kubeadm" Version="v1.1.2" TargetNamespace="capi-kubeadm-control-plane-system"

Installing Provider="infrastructure-docker" Version="v1.1.2" TargetNamespace="capd-system"

Your management cluster has been initialized successfully!

You can now create your first workload cluster by running the following:

clusterctl generate cluster [name] --kubernetes-version [version] | kubectl apply -f -

❯ docker ps --format "table {{.Names}} {{.Command}}"

NAMES COMMAND

k8s_manager_capd-controller-manager-786f8f5898-hws5s_capd-system_146bb014-b9ce-4768-92ca-5e77a98bf164_0 "/manager --leader-e…"

k8s_manager_capi-kubeadm-control-plane-controller-manager-9dd9b5b88-xqltw_capi-kubeadm-control-plane-system_1fa2d017-9ae7-453f-9b6a-5d25fc11f452_0 "/manager --leader-e…"

k8s_manager_capi-kubeadm-bootstrap-controller-manager-b7cc9cb-bhpmx_capi-kubeadm-bootstrap-system_b0b03d86-bfb2-4460-810e-3a7981718604_0 "/manager --leader-e…"

k8s_manager_capi-controller-manager-75cd44fb4c-9mbwf_capi-system_939c9be7-f0a4-4c9a-b1e6-b0ab5301e5e4_0 "/manager --leader-e…"

k8s_cert-manager_cert-manager-webhook-7c9588c76-gcshl_cert-manager_67e45b35-3a6b-4676-b1a8-44f5c8b83b9f_0 "/app/cmd/webhook/we…"

k8s_cert-manager_cert-manager-848f547974-rzpfl_cert-manager_abf380b2-0a27-4f45-8892-0dafb016cdac_0 "/app/cmd/controller…"

k8s_cert-manager_cert-manager-cainjector-54f4cc6b5-8clhz_cert-manager_f645ded3-731c-4f19-81b5-45cbc943f6d7_0 "/app/cmd/cainjector…"

❯ docker ps --format "table {{.Names}} {{.Command}}" --last=10

NAMES COMMAND

k8s_manager_capd-controller-manager-786f8f5898-hws5s_capd-system_146bb014-b9ce-4768-92ca-5e77a98bf164_0 "/manager --leader-e…"

k8s_manager_capi-kubeadm-control-plane-controller-manager-9dd9b5b88-xqltw_capi-kubeadm-control-plane-system_1fa2d017-9ae7-453f-9b6a-5d25fc11f452_0 "/manager --leader-e…"

k8s_manager_capi-kubeadm-bootstrap-controller-manager-b7cc9cb-bhpmx_capi-kubeadm-bootstrap-system_b0b03d86-bfb2-4460-810e-3a7981718604_0 "/manager --leader-e…"

k8s_manager_capi-controller-manager-75cd44fb4c-9mbwf_capi-system_939c9be7-f0a4-4c9a-b1e6-b0ab5301e5e4_0 "/manager --leader-e…"

k8s_cert-manager_cert-manager-webhook-7c9588c76-gcshl_cert-manager_67e45b35-3a6b-4676-b1a8-44f5c8b83b9f_0 "/app/cmd/webhook/we…"

Create Workload Cluster YAML

❯ clusterctl generate cluster capi-quickstart --flavor development \
  --kubernetes-version v1.22.5 \
  --control-plane-machine-count=1 \
  --worker-machine-count=1 \
  > capi-quickstart.yaml

This writes capi-quickstart.yaml to the current directory.
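Before applying the template, you can check what it contains. The following is a small sketch that prints the top-level kind of every object in the generated file. `list_kinds` is a hypothetical helper name. The sketch assumes that top-level `kind:` lines are unindented, which is how the generated YAML is laid out. Nested references such as `infrastructureRef` are indented, so they are skipped.

```shell
# Hypothetical helper: print the kind of every top-level object in a
# multi-document YAML file (or stdin). Only unindented "kind:" lines count,
# so nested references such as infrastructureRef.kind are skipped.
list_kinds() {
  awk '/^kind:/ { print $2 }' "$@"
}
```

`list_kinds capi-quickstart.yaml` should list the same object types that kubectl apply reports creating below: Cluster, DockerCluster, KubeadmControlPlane, DockerMachineTemplate (twice), KubeadmConfigTemplate and MachineDeployment.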

Apply it. In the commands below, k is a shell alias for kubectl and cc is an alias for clusterctl.

❯ kubectl apply -f capi-quickstart.yaml

cluster.cluster.x-k8s.io/capi-quickstart created

dockercluster.infrastructure.cluster.x-k8s.io/capi-quickstart created

kubeadmcontrolplane.controlplane.cluster.x-k8s.io/capi-quickstart-control-plane created

dockermachinetemplate.infrastructure.cluster.x-k8s.io/capi-quickstart-control-plane created

dockermachinetemplate.infrastructure.cluster.x-k8s.io/capi-quickstart-md-0 created

kubeadmconfigtemplate.bootstrap.cluster.x-k8s.io/capi-quickstart-md-0 created

machinedeployment.cluster.x-k8s.io/capi-quickstart-md-0 created

❯ k get cluster

NAME PHASE AGE VERSION

capi-quickstart Provisioning 34s

❯ docker ps --format "table {{.Names}} {{.Command}}" --last=1

NAMES COMMAND

capi-quickstart-lb "haproxy -sf 7 -W -d…"

But something is wrong.

❯ cc describe cluster capi-quickstart

NAME READY SEVERITY REASON SINCE MESSAGE

Cluster/capi-quickstart False Warning LoadBalancerProvisioningFailed 3m57s 0 of 1 completed

├─ClusterInfrastructure - DockerCluster/capi-quickstart False Warning LoadBalancerProvisioningFailed 3m57s 0 of 1 completed

├─ControlPlane - KubeadmControlPlane/capi-quickstart-control-plane

└─Workers

└─MachineDeployment/capi-quickstart-md-0 False Warning WaitingForAvailableMachines 4m4s Minimum availability requires 1 replicas, current 0 available

└─Machine/capi-quickstart-md-0-6cfcc9c46d-m7hzc False Info WaitingForClusterInfrastructure 4m4s 0 of 2 completed

Nothing else ever shows up...
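When the tree gets long, it helps to look only at the rows that are not ready. The following sketch keeps the rows whose READY column is False. `not_ready` is a hypothetical helper name. The sketch assumes the whitespace-separated layout shown above.

```shell
# Hypothetical helper: keep only the rows of `clusterctl describe cluster`
# output (read from stdin) whose READY column is False. Field 1 is always part
# of the object name, so the loop starts at field 2 and looks for an exact "False".
not_ready() {
  awk '{ for (i = 2; i <= NF; i++) if ($i == "False") { print; next } }'
}
```

`clusterctl describe cluster capi-quickstart | not_ready` would print only the Cluster, DockerCluster, MachineDeployment and Machine rows above. The ControlPlane row carries no status yet, so it is dropped.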

Installing on kind instead of Docker Desktop

❯ clusterctl init --infrastructure docker

Fetching providers

Installing cert-manager Version="v1.5.3"

Waiting for cert-manager to be available...

Installing Provider="cluster-api" Version="v1.1.2" TargetNamespace="capi-system"

Installing Provider="bootstrap-kubeadm" Version="v1.1.2" TargetNamespace="capi-kubeadm-bootstrap-system"

Installing Provider="control-plane-kubeadm" Version="v1.1.2" TargetNamespace="capi-kubeadm-control-plane-system"

I0306 04:02:21.429257 58201 request.go:665] Waited for 1.033401344s due to client-side throttling, not priority and fairness, request: GET:https://127.0.0.1:38527/apis/scheduling.k8s.io/v1beta1?timeout=30s

Installing Provider="infrastructure-docker" Version="v1.1.2" TargetNamespace="capd-system"

Your management cluster has been initialized successfully!

You can now create your first workload cluster by running the following:

clusterctl generate cluster [name] --kubernetes-version [version] | kubectl apply -f -

Note) When the GitHub API rate limit has been used up

- GitHub token

Please wait one hour or get a personal API token and assign it to the GITHUB_TOKEN environment variable

❯ clusterctl generate cluster capi-quickstart --flavor development \ ...

Error: failed to get repository client for the InfrastructureProvider with name docker: error creating the GitHub repository client: failed to get GitHub latest version: failed to get repository versions: failed to get repository versions: rate limit for github api has been reached. Please wait one hour or get a personal API token and assign it to the GITHUB_TOKEN environment variable

# Issue a personal access token from your GitHub account

❯ export GITHUB_TOKEN=ghp_###
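Before re-running generate, a small guard can stop early when the token is missing. Per the error above, clusterctl uses GITHUB_TOKEN to get past the GitHub API rate limit. `require_github_token` is a hypothetical helper name.

```shell
# Hypothetical helper: fail early when GITHUB_TOKEN is empty or unset,
# because clusterctl needs it to get past the GitHub API rate limit.
require_github_token() {
  if [ -z "${GITHUB_TOKEN:-}" ]; then
    echo "GITHUB_TOKEN is not set; clusterctl may hit the GitHub API rate limit" >&2
    return 1
  fi
}
```

`require_github_token && clusterctl generate cluster ...` runs generate only when the variable is set.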

❯ clusterctl generate cluster capi-quickstart --flavor development \
  --kubernetes-version v1.22.5 \
  --control-plane-machine-count=1 \
  --worker-machine-count=1 \
  > capi-test.yaml

The deployment failed again. It looks like a problem with the Docker environment.

Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

❯ k logs -fn capd-system capd-controller-manager-786f8f5898-v7tbl

I0305 19:24:38.090946 1 dockermachine_controller.go:140] controller/dockermachine "msg"="Waiting for DockerCluster Controller to create cluster infrastructure" "cluster"="capi-quickstart" "docker-cluster"="capi-quickstart" "machine"="capi-quickstart-md-0-6cfcc9c46d-8xkg8" "name"="capi-quickstart-md-0-q84tw" "namespace"="default" "reconciler group"="infrastructure.cluster.x-k8s.io" "reconciler kind"="DockerMachine"

E0305 19:31:18.065784 1 controller.go:317] controller/dockercluster "msg"="Reconciler error" "error"="failed to create helper for managing the externalLoadBalancer: failed to list containers: failed to list containers: failed to list containers: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?" "name"="capi-quickstart" "namespace"="default" "reconciler group"="infrastructure.cluster.x-k8s.io" "reconciler kind"="DockerCluster"

I0305 19:31:26.632838 1 dockermachine_controller.go:140] controller/dockermachine "msg"="Waiting for DockerCluster Controller to create cluster infrastructure" "cluster"="capi-quickstart" "docker-cluster"="capi-quickstart" "machine"="capi-quickstart-md-0-6cfcc9c46d-8xkg8" "name"="capi-quickstart-md-0-q84tw" "namespace"="default" "reconciler group"="infrastructure.cluster.x-k8s.io" "reconciler kind"="DockerMachine"

Resolution

Create the kind cluster from a YAML config that adds the extra settings:

export KIND_EXPERIMENTAL_DOCKER_NETWORK=bridge

cat > kind-cluster-api.yaml <<EOF
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  image: kindest/node:v1.22.5@sha256:d409e1b1b04d3290195e0263e12606e1b83d5289e1f80e54914f60cd1237499d
  extraMounts:
    - hostPath: /var/run/docker.sock
      containerPath: /var/run/docker.sock
EOF

kind create cluster --config ./kind-cluster-api.yaml

clusterctl init --infrastructure docker
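As far as I can tell, the CAPD controller runs as a pod inside the kind node and drives Docker through /var/run/docker.sock. That is why the extraMounts entry fixes the "Cannot connect to the Docker daemon" error above. The following is a sketch of a pre-flight check on the config file. `has_docker_sock_mount` is a hypothetical helper name.

```shell
# Hypothetical helper: succeed only if the kind config file mounts the host
# Docker socket into the node at the same path, which the CAPD controller
# running inside the node uses to create workload-cluster containers.
has_docker_sock_mount() {
  grep -q 'hostPath: /var/run/docker.sock' "$1" &&
    grep -q 'containerPath: /var/run/docker.sock' "$1"
}
```

`has_docker_sock_mount kind-cluster-api.yaml || echo "missing docker.sock mount"` flags a config without the mount. For a cluster that is already running, `docker exec kind-control-plane test -S /var/run/docker.sock` checks the node itself. This assumes the default kind cluster name, whose node container is kind-control-plane.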

❯ clusterctl generate cluster capi-test --flavor development \
  --kubernetes-version v1.22.5 \
  --control-plane-machine-count=1 \
  --worker-machine-count=1 \
  > capi-test.yaml

❯ k apply -f ./capi-test.yaml

cluster.cluster.x-k8s.io/capi-test created

dockercluster.infrastructure.cluster.x-k8s.io/capi-test created

kubeadmcontrolplane.controlplane.cluster.x-k8s.io/capi-test-control-plane created

dockermachinetemplate.infrastructure.cluster.x-k8s.io/capi-test-control-plane created

dockermachinetemplate.infrastructure.cluster.x-k8s.io/capi-test-md-0 created

kubeadmconfigtemplate.bootstrap.cluster.x-k8s.io/capi-test-md-0 created

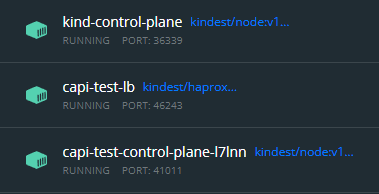

machinedeployment.cluster.x-k8s.io/capi-test-md-0 created상태가 달라졌다.

❯ kubectl get kubeadmcontrolplane

NAME CLUSTER INITIALIZED API SERVER AVAILABLE REPLICAS READY UPDATED UNAVAILABLE AGE VERSION

capi-test-control-plane capi-test 1 1 1 25s v1.22.5

State 1

❯ cc describe cluster capi-test

NAME READY SEVERITY REASON SINCE MESSAGE

Cluster/capi-test False Info Bootstrapping @ Machine/capi-test-control-plane-l7lnn 9s 1 of 2 completed

├─ClusterInfrastructure - DockerCluster/capi-test True 60s

├─ControlPlane - KubeadmControlPlane/capi-test-control-plane False Info Bootstrapping @ Machine/capi-test-control-plane-l7lnn 9s 1 of 2 completed

│ └─Machine/capi-test-control-plane-l7lnn True 21s

└─Workers

└─MachineDeployment/capi-test-md-0 False Warning WaitingForAvailableMachines 62s Minimum availability requires 1 replicas, current 0 available

└─Machine/capi-test-md-0-7d478cc799-mql69 False Info WaitingForControlPlaneAvailable 60s 0 of 2 completed

State 2. This is where it got stuck again.

❯ cc describe cluster capi-test

NAME READY SEVERITY REASON SINCE MESSAGE

Cluster/capi-test False Info WaitingForKubeadmInit 6s

├─ClusterInfrastructure - DockerCluster/capi-test True 77s

├─ControlPlane - KubeadmControlPlane/capi-test-control-plane False Info WaitingForKubeadmInit 6s

│ └─Machine/capi-test-control-plane-l7lnn True 38s

└─Workers

└─MachineDeployment/capi-test-md-0 False Warning WaitingForAvailableMachines 79s Minimum availability requires 1 replicas, current 0 available

└─Machine/capi-test-md-0-7d478cc799-mql69 False Info WaitingForControlPlaneAvailable 77s 0 of 2 completed

Adding a CNI

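The master node is up, so the workload cluster's kubeconfig can now be retrieved. The commands further down read it from ./capi-test.kubeconfig, so save the output to that file (for example, by appending > capi-test.kubeconfig).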

❯ cc get kubeconfig capi-test

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: ###
    server: https://172.18.0.2:6443
  name: capi-test
contexts:
- context:
    cluster: capi-test
    user: capi-test-admin
  name: capi-test-admin@capi-test
current-context: capi-test-admin@capi-test
kind: Config
preferences: {}
users:
- name: capi-test-admin
  user:
    client-certificate-data:
...

The address in the server field has to be changed.

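- Because this runs on Docker Desktop, the container address on port 6443 (https://172.18.0.2:6443) is not reachable from the host.

- In Docker Desktop, inspect the capi-test-control-plane container and use the host port that is published for its port 6443 instead.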
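You can also script this change instead of editing the file by hand. The following is a sketch that uses a hypothetical helper, `point_kubeconfig_at`. It takes the output of `docker port <container> 6443/tcp` (for example `0.0.0.0:41011`), replaces 0.0.0.0 with 127.0.0.1, and rewrites the `server:` line of a kubeconfig read from stdin.

Which container to query is an assumption. The port used here is the one published by the capi-test-control-plane container. The cluster's load balancer container (capi-quickstart-lb earlier) is likely another candidate.

```shell
# Hypothetical helper: rewrite the "server:" line of a kubeconfig read from
# stdin so that it points at a port published on the host.
# $1 is the output of `docker port <container> 6443/tcp`, e.g. "0.0.0.0:41011";
# only its first line is used (Docker may also print an IPv6 mapping).
point_kubeconfig_at() {
  hostport=$(printf '%s\n' "$1" | head -n 1 | sed 's/^0\.0\.0\.0:/127.0.0.1:/')
  # Commented-out lines such as "# server: ..." do not match the pattern.
  sed -E "s#^([[:space:]]*server:[[:space:]]*).*#\1https://${hostport}#"
}
```

Usage: `cc get kubeconfig capi-test | point_kubeconfig_at "$(docker port <container> 6443/tcp)" > capi-test.kubeconfig`. Replace `<container>` with the actual container name from docker ps.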

- cluster:
    certificate-authority-data: ^___^
    # server: https://172.18.0.2:6443
    server: https://127.0.0.1:41011 # host port that Docker Desktop publishes for 6443 of capi-test-control-plane
  name: capi-test

Install the Calico CNI.

❯ k --kubeconfig=./capi-test.kubeconfig \
  apply -f https://docs.projectcalico.org/v3.21/manifests/calico.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget

poddisruptionbudget.policy/calico-kube-controllers created

Summary of the symptoms

The cluster still shows WaitingForKubeadmInit. The master is up, but there is no worker, so a test pod stays stuck in Pending.

❯ k --kubeconfig=./capi-test.kubeconfig \
  get no

NAME STATUS ROLES AGE VERSION

capi-test-control-plane-l7lnn Ready control-plane,master 13m v1.22.5

# Try running a pod on the cluster created with CAPI

❯ k --kubeconfig=./capi-test.kubeconfig \
  apply -f https://k8s.io/examples/pods/simple-pod.yaml

pod/nginx created

# Pending because there is no worker

❯ k --kubeconfig=./capi-test.kubeconfig \
  get all

NAME READY STATUS RESTARTS AGE

pod/nginx 0/1 Pending 0 63s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.128.0.1 <none> 443/TCP 17m

Why?
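This explains part of the behavior, at least for the nginx pod. On Kubernetes v1.22, kubeadm taints control-plane nodes with `node-role.kubernetes.io/master:NoSchedule`. A pod without a matching toleration therefore cannot be scheduled there and stays Pending until a worker node joins. The following sketch lists the nodes that carry no taints at all. `untainted_nodes` is a hypothetical helper name.

```shell
# Hypothetical helper: read the output of
#   kubectl get nodes -o 'custom-columns=NAME:.metadata.name,TAINTS:.spec.taints[*].key'
# on stdin and print the nodes that carry no taints at all (kubectl prints
# <none> for an empty column). Rough check: taint effects are ignored.
untainted_nodes() {
  awk 'NR > 1 && $2 == "<none>" { print $1 }'
}
```

Usage: `k --kubeconfig=./capi-test.kubeconfig get nodes -o 'custom-columns=NAME:.metadata.name,TAINTS:.spec.taints[*].key' | untainted_nodes`. This prints nothing while the tainted control-plane node is the only node. The quotes stop zsh from treating `[*]` as a glob.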

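For cleanup, deleting with k delete -f <yaml> is not recommended. Delete the Cluster object instead, so that Cluster API tears down the machines and infrastructure that belong to it.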

❯ k delete cluster capi-test

cluster.cluster.x-k8s.io "capi-test" deleted
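To confirm the cleanup, you can look for containers that survived the deletion. The following sketch assumes that CAPD names its containers with the cluster name as a prefix, as with the capi-quickstart-lb load balancer above. `leftover_containers` is a hypothetical helper name.

```shell
# Hypothetical helper: read container names (one per line) on stdin and print
# those that start with "<cluster>-", i.e. containers that would belong to
# that workload cluster if CAPD prefixes names with the cluster name.
leftover_containers() {
  awk -v prefix="$1-" 'index($0, prefix) == 1'
}
```

`docker ps -a --format '{{.Names}}' | leftover_containers capi-test` should print nothing once the deletion has finished.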