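When I applied the file generated by `cc generate` all at once, the worker never came up.

So I split that file into three parts (Cluster | Master | Worker) and applied them one at a time. In the transcripts below, `cc` is an alias for `clusterctl` and `k` is an alias for `kubectl`.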
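
Here is a minimal sketch of that split. It assumes the generated manifest is one multi-document YAML file and that the objects keep the default template names (`capi-one`, `capi-one-control-plane`, `capi-one-md-0`), so each document can be routed by the suffix of its top-level `metadata.name`. The function `split_capi_manifest` and that routing rule are my own assumptions, not a clusterctl feature.

```sh
# split_capi_manifest INPUT [OUTDIR]
# Hypothetical helper: routes every document of a clusterctl-generated
# manifest into cluster-def.yaml, master.yaml or worker.yaml, based on the
# suffix of its top-level metadata.name (default template naming assumed).
split_capi_manifest() {
  in=$1
  out=${2:-.}
  # Truncate the outputs so that re-running does not append duplicates.
  : > "$out/cluster-def.yaml"
  : > "$out/master.yaml"
  : > "$out/worker.yaml"
  awk -v out="$out" '
    # Append the buffered document to the file chosen by its name.
    function flush(target) {
      if (doc == "") return
      if (name ~ /-control-plane$/) target = out "/master.yaml"
      else if (name ~ /-md-[0-9]+$/) target = out "/worker.yaml"
      else target = out "/cluster-def.yaml"
      printf("---\n%s", doc) >> target
      doc = ""; name = ""; inmeta = 0
    }
    /^---/ { flush(); next }
    # Only "  name:" directly under the top-level metadata block counts.
    /^metadata:/ { inmeta = 1 }
    /^[A-Za-z]/ && !/^metadata:/ { inmeta = 0 }
    inmeta && name == "" && /^  name:/ { name = $2 }
    { doc = doc $0 "\n" }
    END { flush() }
  ' "$in"
}

# Usage: split_capi_manifest capi-one.yaml .
```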

cc init

```
❯ cc init
Fetching providers
Installing cert-manager Version="v1.5.3"
Waiting for cert-manager to be available...
Installing Provider="cluster-api" Version="v1.1.3" TargetNamespace="capi-system"
Installing Provider="bootstrap-kubeadm" Version="v1.1.3" TargetNamespace="capi-kubeadm-bootstrap-system"
Installing Provider="control-plane-kubeadm" Version="v1.1.3" TargetNamespace="capi-kubeadm-control-plane-system"

Your management cluster has been initialized successfully!

You can now create your first workload cluster by running the following:

  clusterctl generate cluster [name] --kubernetes-version [version] | kubectl apply -f -

❯ cc init --infrastructure docker
Fetching providers
Skipping installing cert-manager as it is already installed
Installing Provider="infrastructure-docker" Version="v1.1.3" TargetNamespace="capd-system"

❯ k get ns
NAME                                STATUS   AGE
capd-system                         Active   150m   # docker
capi-kubeadm-bootstrap-system       Active   152m
capi-kubeadm-control-plane-system   Active   152m
capi-system                         Active   152m
cert-manager                        Active   153m
default                             Active   154m
kube-node-lease                     Active   154m
kube-public                         Active   154m
kube-system                         Active   154m
local-path-storage                  Active   154m
```

Creating the Cluster (Docker)

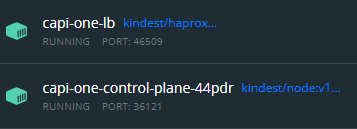

- A load balancer container comes up.

```
❯ k apply -f cluster-def.yaml
cluster.cluster.x-k8s.io/capi-one created
dockercluster.infrastructure.cluster.x-k8s.io/capi-one created

❯ k get dockercluster
NAME       CLUSTER    AGE
capi-one   capi-one   56s

❯ cc describe cluster capi-one
NAME                                              READY  SEVERITY  REASON  SINCE  MESSAGE
Cluster/capi-one                                  True                     32s
└─ClusterInfrastructure - DockerCluster/capi-one  True                     32s

# check that the LB was created
❯ docker ps --format "table {{.Names}} {{.Command}}"
NAMES COMMAND
capi-one-lb "haproxy -sf 7 -W -d…"
kind-control-plane "/usr/local/bin/entr…"
```

Port mappings:
- capi-one-lb: 0.0.0.0:46509->6443/tcp
- capi-control-plane: 127.0.0.1:36121->6443/tcp
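
The LB's host port (46509 in this run) is how the host reaches the workload cluster's API server. Below is a small sketch that turns `docker port` output into an endpoint usable in a kubeconfig. It assumes the LB container is `capi-one-lb` as above, and the helper names are hypothetical. Rewriting `0.0.0.0` to `127.0.0.1` is similar to the Docker Desktop workaround in the Cluster API quick start.

```sh
# to_local_endpoint: read `docker port` output on stdin and print the first
# mapping with 0.0.0.0 replaced by 127.0.0.1, e.g. "127.0.0.1:46509".
to_local_endpoint() {
  # Docker may also print an IPv6 line such as "[::]:46509"; keep the first.
  head -n 1 | sed 's/^0\.0\.0\.0:/127.0.0.1:/'
}

# lb_endpoint [CONTAINER]: hypothetical wrapper around `docker port`;
# the container name defaults to the LB created for capi-one.
lb_endpoint() {
  docker port "${1:-capi-one-lb}" 6443/tcp | to_local_endpoint
}
```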

Creating the Master

- The workload cluster becomes reachable once the kubeconfig's `server` points at the LB port.

```
# replicas=1
❯ k apply -f master.yaml
kubeadmcontrolplane.controlplane.cluster.x-k8s.io/capi-one-control-plane created
dockermachinetemplate.infrastructure.cluster.x-k8s.io/capi-one-control-plane created

❯ cc describe cluster capi-one
NAME                                                         READY  SEVERITY  REASON  SINCE  MESSAGE
Cluster/capi-one                                             True                     47s
├─ClusterInfrastructure - DockerCluster/capi-one             True                     3m34s
└─ControlPlane - KubeadmControlPlane/capi-one-control-plane  True                     47s
  └─Machine/capi-one-control-plane-44pdr                     True                     49s

# check that it was created
❯ docker ps --format "table {{.Names}} {{.Command}}"
NAMES COMMAND
capi-one-control-plane-44pdr "/usr/local/bin/entr…"
capi-one-lb "haproxy -sf 7 -W -d…"
kind-control-plane "/usr/local/bin/entr…"
```

```
# secrets
❯ k get secret
NAME                           TYPE                                  DATA   AGE
capi-one-ca                    cluster.x-k8s.io/secret               2      43s
capi-one-control-plane-5jplg   cluster.x-k8s.io/secret               2      43s
capi-one-etcd                  cluster.x-k8s.io/secret               2      43s
capi-one-kubeconfig            cluster.x-k8s.io/secret               1      43s
capi-one-proxy                 cluster.x-k8s.io/secret               2      43s
capi-one-sa                    cluster.x-k8s.io/secret               2      43s
default-token-8kqnr            kubernetes.io/service-account-token   3      7m20s
```

```
# extract the kubeconfig
❯ k get secret/capi-one-kubeconfig -o json \
  | jq -r '.data.value' \
  | base64 --decode \
  > ./capi-kconfig.yaml
```
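
The same extraction can be done without `jq`, as in the sketch below. It relies only on what the secret list above shows: a secret named `<cluster>-kubeconfig` whose `value` key holds the base64-encoded kubeconfig. The function name is hypothetical. `clusterctl get kubeconfig capi-one > ./capi-kconfig.yaml` should give the same result with less typing.

```sh
# get_capi_kubeconfig CLUSTER [OUTFILE]
# Hypothetical helper: decode the kubeconfig stored in the
# "<cluster>-kubeconfig" secret (key "value") into OUTFILE.
get_capi_kubeconfig() {
  cluster=$1
  outfile=${2:-./$cluster.kubeconfig}
  kubectl get secret "$cluster-kubeconfig" -o jsonpath='{.data.value}' \
    | base64 --decode > "$outfile"
}

# Usage: get_capi_kubeconfig capi-one ./capi-kconfig.yaml
```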

Then change the server port in the kubeconfig. Changing it to the LB port also works.
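
Here is a sketch of that port change. It assumes the kubeconfig has a single cluster entry and that the LB host port is already known (Docker assigns it per container; it was 46509 in this run). It writes through a temp file instead of using `sed -i`, because GNU and BSD sed disagree on the `-i` syntax. The function name is hypothetical.

```sh
# set_kubeconfig_server FILE ENDPOINT
# Hypothetical helper: replace the value of every "server:" line in FILE
# with https://ENDPOINT, keeping the original indentation.
set_kubeconfig_server() {
  file=$1
  endpoint=$2
  sed "s#^\([[:space:]]*server:\).*#\1 https://$endpoint#" "$file" > "$file.tmp" \
    && mv "$file.tmp" "$file"
}

# Usage: set_kubeconfig_server ./capi-kconfig.yaml 127.0.0.1:46509
```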

```
❯ k --kubeconfig=./capi-kconfig.yaml get no
NAME                           STATUS     ROLES                  AGE   VERSION
capi-one-control-plane-44pdr   NotReady   control-plane,master   16m   v1.22.5
```
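
The node stays NotReady until a CNI plugin is installed, because without one the kubelet reports the node network as not ready. These notes don't record which CNI was used (the Cluster API quick start uses Calico as its example). Below is a small readiness check over `kubectl get no --no-headers` output. The function name is hypothetical.

```sh
# count_not_ready: read `kubectl get no --no-headers` lines on stdin and
# print how many nodes are not exactly "Ready" in the STATUS column
# (so "NotReady" and "Ready,SchedulingDisabled" both count).
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Usage (should eventually print 0 once the CNI is running):
#   k --kubeconfig=./capi-kconfig.yaml get no --no-headers | count_not_ready
```

If blocking is preferable to counting, `kubectl wait --for=condition=Ready nodes --all --timeout=5m` is the built-in alternative.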

```
# after installing a CNI
❯ k --kubeconfig=./capi-kconfig.yaml get no
NAME                           STATUS   ROLES                  AGE   VERSION
capi-one-control-plane-44pdr   Ready    control-plane,master   19m   v1.22.5
```

Adding a Worker

```
# replicas=1
❯ k apply -f worker.yaml
dockermachinetemplate.infrastructure.cluster.x-k8s.io/capi-one-md-0 created
kubeadmconfigtemplate.bootstrap.cluster.x-k8s.io/capi-one-md-0 created
machinedeployment.cluster.x-k8s.io/capi-one-md-0 created
```

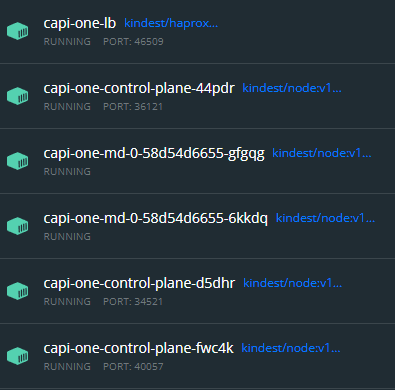

```
# capi-one-md is the worker node
❯ docker ps --format "table {{.Names}} {{.Command}}"
NAMES COMMAND
capi-one-md-0-58d54d6655-gfgqg "/usr/local/bin/entr…"
capi-one-control-plane-44pdr "/usr/local/bin/entr…"
capi-one-lb "haproxy -sf 7 -W -d…"
kind-control-plane "/usr/local/bin/entr…"
```
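
Worker machine names follow the same scheme as Deployment, ReplicaSet and Pod names. Each name is the MachineDeployment name (`capi-one-md-0`), then the MachineSet hash (`58d54d6655`), then a random suffix (`gfgqg`). The sketch below recovers the owning objects from a machine name, assuming that layout. The helper names are hypothetical.

```sh
# machineset_of MACHINE: drop the random suffix,
# e.g. capi-one-md-0-58d54d6655-gfgqg -> capi-one-md-0-58d54d6655
machineset_of() {
  printf '%s\n' "${1%-*}"
}

# machinedeployment_of MACHINE: drop the suffix and the MachineSet hash,
# e.g. capi-one-md-0-58d54d6655-gfgqg -> capi-one-md-0
machinedeployment_of() {
  ms=${1%-*}
  printf '%s\n' "${ms%-*}"
}
```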

```
# the node has joined the capi-one cluster
❯ k --kubeconfig=./capi-kconfig.yaml get no
NAME                             STATUS   ROLES                  AGE   VERSION
capi-one-control-plane-44pdr     Ready    control-plane,master   23m   v1.22.5
capi-one-md-0-58d54d6655-gfgqg   Ready    <none>                 72s   v1.22.5
```

```
# create a pod
❯ k --kubeconfig=./capi-kconfig.yaml \
  apply -f https://k8s.io/examples/pods/simple-pod.yaml
pod/nginx created
```
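
The pod should land on the worker because kubeadm taints control-plane nodes. On v1.22 the taint is `node-role.kubernetes.io/master:NoSchedule`, and `simple-pod.yaml` has no toleration for it. Below is a sketch that checks a node for that taint. The helper name is hypothetical, and it defaults to the kubeconfig path used above.

```sh
# has_master_taint NODE [KUBECONFIG]
# Hypothetical helper: succeed if NODE carries the kubeadm control-plane
# taint used in Kubernetes v1.22 (key node-role.kubernetes.io/master).
has_master_taint() {
  kubectl --kubeconfig="${2:-./capi-kconfig.yaml}" get node "$1" \
    -o jsonpath='{range .spec.taints[*]}{.key}={.effect}{"\n"}{end}' \
    | grep -qx 'node-role.kubernetes.io/master=NoSchedule'
}

# Usage:
#   has_master_taint capi-one-control-plane-44pdr && echo "no regular pods here"
```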

```
# it was scheduled on the worker node
❯ k --kubeconfig=./capi-kconfig.yaml get po -o wide
NAME    READY   STATUS    RESTARTS   AGE   IP                NODE                             NOMINATED NODE   READINESS GATES
nginx   1/1     Running   0          94s   192.168.195.129   capi-one-md-0-58d54d6655-gfgqg   <none>           <none>
```

Adding one more worker node

```
# replicas=2
❯ k apply -f worker.yaml
dockermachinetemplate.infrastructure.cluster.x-k8s.io/capi-one-md-0 unchanged
kubeadmconfigtemplate.bootstrap.cluster.x-k8s.io/capi-one-md-0 unchanged
machinedeployment.cluster.x-k8s.io/capi-one-md-0 configured
```
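
Instead of editing `replicas` in worker.yaml, you can use `kubectl scale`, because MachineDeployment (and KubeadmControlPlane) support the scale subresource. Here is a sketch. Keep in mind that a later `k apply -f worker.yaml` resets the count to whatever the file says. The function name is hypothetical.

```sh
# capi_scale RESOURCE REPLICAS
# Hypothetical helper, e.g.:
#   capi_scale machinedeployment/capi-one-md-0 2
#   capi_scale kubeadmcontrolplane/capi-one-control-plane 3
capi_scale() {
  case $2 in
    ''|*[!0-9]*) echo "replicas must be a non-negative integer" >&2; return 1 ;;
  esac
  kubectl scale "$1" --replicas="$2"
}
```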

```
# show the most recently created container
❯ docker ps --format "table {{.Names}} {{.Command}}" --latest
NAMES COMMAND
capi-one-md-0-58d54d6655-6kkdq "/usr/local/bin/entr…"

# there are now two worker nodes
❯ docker ps --format "table {{.Names}} {{.Command}}"
NAMES COMMAND
capi-one-md-0-58d54d6655-6kkdq "/usr/local/bin/entr…"
capi-one-md-0-58d54d6655-gfgqg "/usr/local/bin/entr…"
capi-one-control-plane-44pdr "/usr/local/bin/entr…"
capi-one-lb "haproxy -sf 7 -W -d…"
kind-control-plane "/usr/local/bin/entr…"

# check the node list with kubectl
❯ k --kubeconfig=./capi-kconfig.yaml get no
NAME                             STATUS   ROLES                  AGE     VERSION
capi-one-control-plane-44pdr     Ready    control-plane,master   28m     v1.22.5
capi-one-md-0-58d54d6655-6kkdq   Ready    <none>                 97s     v1.22.5
capi-one-md-0-58d54d6655-gfgqg   Ready    <none>                 6m54s   v1.22.5
```

Scaling the masters to 3

```
# replicas=3
❯ k apply -f master.yaml
kubeadmcontrolplane.controlplane.cluster.x-k8s.io/capi-one-control-plane configured
dockermachinetemplate.infrastructure.cluster.x-k8s.io/capi-one-control-plane unchanged
```
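
Going from 1 to 3 rather than 2 matters with stacked etcd. A 2-member etcd needs both members for quorum, so it tolerates no more failures than a single member, while 3 members tolerate one. As far as I know, the KubeadmControlPlane webhook rejects even `replicas` unless external etcd is configured. Below is a pre-check sketch. The function name is hypothetical.

```sh
# check_cp_replicas N
# Succeed only for a positive odd N. Stacked etcd quorum is floor(n/2) + 1:
#   members  quorum  failures tolerated
#   1        1       0
#   2        2       0
#   3        2       1
check_cp_replicas() {
  case $1 in
    ''|*[!0-9]*) return 1 ;;   # not a plain non-negative integer
  esac
  [ "$1" -gt 0 ] && [ $(( $1 % 2 )) -eq 1 ]
}

# Usage: check_cp_replicas 3 && k apply -f master.yaml
```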

```
# the new masters come up one at a time (d5dhr)
❯ docker ps --format "table {{.Names}} {{.Command}}" --latest
NAMES COMMAND
capi-one-control-plane-d5dhr "/usr/local/bin/entr…"

# once that one is up, the next node starts (fwc4k)
❯ docker ps --format "table {{.Names}} {{.Command}}" --latest
NAMES COMMAND
capi-one-control-plane-fwc4k "/usr/local/bin/entr…"
```
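
As the transcript shows, KCP adds control-plane machines one at a time and waits for each to come up before starting the next, so scaling to 3 takes a few minutes. Below is a polling sketch that waits until the ready count matches the desired count. The function name, the 10-second interval and the 600-second default timeout are arbitrary assumptions.

```sh
# wait_kcp_ready NAME [TIMEOUT_SECONDS]
# Hypothetical helper: poll a KubeadmControlPlane until
# .status.readyReplicas equals .spec.replicas, or give up after the timeout.
wait_kcp_ready() {
  name=$1
  deadline=$(( $(date +%s) + ${2:-600} ))
  while :; do
    # e.g. "3 2"; readyReplicas is empty while no machine is ready yet.
    status=$(kubectl get kubeadmcontrolplane "$name" \
      -o jsonpath='{.spec.replicas} {.status.readyReplicas}')
    want=$(printf '%s\n' "$status" | awk '{ print $1 }')
    ready=$(printf '%s\n' "$status" | awk '{ print ($2 == "" ? 0 : $2) }')
    if [ -n "$want" ] && [ "$ready" = "$want" ]; then
      echo "$name: $ready/$want ready"
      return 0
    fi
    if [ "$(date +%s)" -ge "$deadline" ]; then
      echo "$name: timed out at $ready/${want:-?} ready" >&2
      return 1
    fi
    sleep 10
  done
}

# Usage: wait_kcp_ready capi-one-control-plane 900
```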

```
# confirm that the master nodes were added
❯ docker ps --format "table {{.Names}} {{.Command}} {{.RunningFor}}"
NAMES COMMAND CREATED
capi-one-control-plane-fwc4k "/usr/local/bin/entr…" 3 minutes ago
capi-one-control-plane-d5dhr "/usr/local/bin/entr…" 4 minutes ago
capi-one-md-0-58d54d6655-6kkdq "/usr/local/bin/entr…" 7 minutes ago
capi-one-md-0-58d54d6655-gfgqg "/usr/local/bin/entr…" 12 minutes ago
capi-one-control-plane-44pdr "/usr/local/bin/entr…" 34 minutes ago
capi-one-lb "haproxy -sf 7 -W -d…" 36 minutes ago
kind-control-plane "/usr/local/bin/entr…" 42 minutes ago

# check the node list with kubectl
❯ k --kubeconfig=./capi-kconfig.yaml get no
NAME                             STATUS   ROLES                  AGE     VERSION
capi-one-control-plane-44pdr     Ready    control-plane,master   34m     v1.22.5
capi-one-control-plane-d5dhr     Ready    control-plane,master   4m32s   v1.22.5
capi-one-control-plane-fwc4k     Ready    control-plane,master   3m19s   v1.22.5
capi-one-md-0-58d54d6655-6kkdq   Ready    <none>                 7m32s   v1.22.5
capi-one-md-0-58d54d6655-gfgqg   Ready    <none>                 12m     v1.22.5
```

Checking the current state

- LB: the cluster load balancer (HAProxy in front of the control-plane nodes' API servers)
- control-plane: master nodes
- md: worker nodes (md = MachineDeployment)
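
The sketch below applies the same mapping to container names. It assumes the naming seen in this run: the `-lb`, `-control-plane-` and `-md-` patterns are read off the container names above, and CAPD does not guarantee them. The function name is hypothetical.

```sh
# capi_role CONTAINER_NAME: classify a CAPD container by its name.
# kind-control-plane (the management cluster) has no trailing "-<id>",
# so it falls through to "other".
capi_role() {
  case $1 in
    *-lb) echo "load balancer" ;;
    *-control-plane-*) echo "control plane (master)" ;;
    *-md-*) echo "worker (MachineDeployment)" ;;
    *) echo "other" ;;
  esac
}

# Usage:
#   docker ps --format '{{.Names}}' | while read -r n; do
#     printf '%s\t%s\n' "$n" "$(capi_role "$n")"
#   done
```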

```
# check the current state with clusterctl
❯ cc describe cluster capi-one
NAME                                                         READY  SEVERITY  REASON  SINCE  MESSAGE
Cluster/capi-one                                             True                     46m
├─ClusterInfrastructure - DockerCluster/capi-one             True                     80m
├─ControlPlane - KubeadmControlPlane/capi-one-control-plane  True                     46m
│ └─3 Machines...                                            True                     78m    See capi-one-control-plane-44pdr, capi-one-control-plane-d5dhr, ...
└─Workers
  └─MachineDeployment/capi-one-md-0                          True                     50m
    └─2 Machines...                                          True                     56m    See capi-one-md-0-58d54d6655-6kkdq, capi-one-md-0-58d54d6655-gfgqg
```

master node scale down

```
# replicas=1
❯ k apply -f master.yaml
kubeadmcontrolplane.controlplane.cluster.x-k8s.io/capi-one-control-plane configured
dockermachinetemplate.infrastructure.cluster.x-k8s.io/capi-one-control-plane unchanged
```

```
# removal takes a while
❯ k --kubeconfig=./capi-kconfig.yaml get no
NAME                             STATUS   ROLES                  AGE    VERSION
capi-one-control-plane-44pdr     Ready    control-plane,master   117m   v1.22.5
capi-one-control-plane-d5dhr     Ready    control-plane,master   87m    v1.22.5
capi-one-control-plane-fwc4k     Ready    control-plane,master   86m    v1.22.5
capi-one-md-0-58d54d6655-6kkdq   Ready    <none>                 90m    v1.22.5
capi-one-md-0-58d54d6655-gfgqg   Ready    <none>                 96m    v1.22.5

❯ k get kubeadmcontrolplane
NAME                     CLUSTER    INITIALIZED   API SERVER AVAILABLE   REPLICAS   READY   UPDATED   UNAVAILABLE   AGE    VERSION
capi-one-control-plane   capi-one   true          true                   3          3       3         0             120m   v1.22.5

❯ cc describe cluster capi-one
NAME                                                         READY  SEVERITY  REASON       SINCE  MESSAGE
Cluster/capi-one                                             False  Warning   ScalingDown  71s    Scaling down control plane to 1 replicas (actual 3)
├─ClusterInfrastructure - DockerCluster/capi-one             True                          121m
├─ControlPlane - KubeadmControlPlane/capi-one-control-plane  False  Warning   ScalingDown  72s    Scaling down control plane to 1 replicas (actual 3)
│ └─3 Machines...                                            True                          118m   See capi-one-control-plane-44pdr, capi-one-control-plane-d5dhr, ...
└─Workers
  └─MachineDeployment/capi-one-md-0                          True                          91m
    └─2 Machines...                                          True                          97m    See capi-one-md-0-58d54d6655-6kkdq, capi-one-md-0-58d54d6655-gfgqg

❯ cc describe cluster capi-one
NAME                                                         READY  SEVERITY  REASON       SINCE  MESSAGE
Cluster/capi-one                                             False  Warning   ScalingDown  13s    Scaling down control plane to 1 replicas (actual 2)
├─ClusterInfrastructure - DockerCluster/capi-one             True                          122m
├─ControlPlane - KubeadmControlPlane/capi-one-control-plane  False  Warning   ScalingDown  13s    Scaling down control plane to 1 replicas (actual 2)
│ └─Machine/capi-one-control-plane-fwc4k                     True                          88m
└─Workers
  └─MachineDeployment/capi-one-md-0                          True                          92m
    └─2 Machines...                                          True                          97m    See capi-one-md-0-58d54d6655-6kkdq, capi-one-md-0-58d54d6655-gfgqg

# removed
❯ k get kubeadmcontrolplane
NAME                     CLUSTER    INITIALIZED   API SERVER AVAILABLE   REPLICAS   READY   UPDATED   UNAVAILABLE   AGE    VERSION
capi-one-control-plane   capi-one   true          true                   1          1       1         0             123m   v1.22.5

❯ cc describe cluster capi-one
NAME                                                         READY  SEVERITY  REASON  SINCE  MESSAGE
Cluster/capi-one                                             True                     48s
├─ClusterInfrastructure - DockerCluster/capi-one             True                     123m
├─ControlPlane - KubeadmControlPlane/capi-one-control-plane  True                     48s
│ └─Machine/capi-one-control-plane-fwc4k                     True                     89m
└─Workers
  └─MachineDeployment/capi-one-md-0                          True                     93m
    └─2 Machines...                                          True                     98m    See capi-one-md-0-58d54d6655-6kkdq, capi-one-md-0-58d54d6655-gfgqg
```

worker node scale down

```
# replicas=1
❯ k apply -f worker.yaml
dockermachinetemplate.infrastructure.cluster.x-k8s.io/capi-one-md-0 unchanged
kubeadmconfigtemplate.bootstrap.cluster.x-k8s.io/capi-one-md-0 unchanged
machinedeployment.cluster.x-k8s.io/capi-one-md-0 configured
```
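
The MachineSet delete policy (Random by default) decides which worker goes away on scale-down. In the node list that follows, `gfgqg` is removed and `6kkdq` stays. To pick the machine yourself, note that Cluster API deletes Machines annotated with `cluster.x-k8s.io/delete-machine` first. Here is a sketch with a hypothetical helper name. Keep `replicas` in worker.yaml in sync afterwards, or the next apply will undo it.

```sh
# scale_down_machine MACHINE MACHINEDEPLOYMENT REPLICAS
# Hypothetical helper: mark MACHINE to be removed first, then shrink the
# MachineDeployment so that its MachineSet deletes the annotated Machine.
scale_down_machine() {
  kubectl annotate machine "$1" cluster.x-k8s.io/delete-machine=yes --overwrite &&
    kubectl scale machinedeployment "$2" --replicas="$3"
}

# Usage: scale_down_machine capi-one-md-0-58d54d6655-gfgqg capi-one-md-0 1
```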

```
❯ k --kubeconfig=./capi-kconfig.yaml get no
NAME                             STATUS   ROLES                  AGE    VERSION
capi-one-control-plane-fwc4k     Ready    control-plane,master   103m   v1.22.5
capi-one-md-0-58d54d6655-6kkdq   Ready    <none>                 107m   v1.22.5
```

Deleting the cluster step by step using the files

- This works, but the documentation says it is safer to delete the Cluster object instead.
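
The documentation recommends deleting only the Cluster object and letting the controllers tear down the control plane, workers and infrastructure. Deleting the whole manifest at once can leave resources that need manual cleanup. `kubectl delete` waits for finalizers by default, so it returns once the cluster is gone. Here is a sketch; the function name and the 10-minute timeout are assumptions.

```sh
# delete_capi_cluster NAME [TIMEOUT]
# Hypothetical helper: delete only the Cluster object; the CAPI controllers
# then remove the control plane, MachineDeployments and infrastructure.
# kubectl waits for the finalizers to finish (--wait defaults to true).
delete_capi_cluster() {
  kubectl delete cluster "$1" --timeout="${2:-10m}"
}

# Usage: delete_capi_cluster capi-one
```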

```
# delete the worker
❯ k delete -f worker.yaml
dockermachinetemplate.infrastructure.cluster.x-k8s.io "capi-one-md-0" deleted
kubeadmconfigtemplate.bootstrap.cluster.x-k8s.io "capi-one-md-0" deleted
machinedeployment.cluster.x-k8s.io "capi-one-md-0" deleted

❯ cc describe cluster capi-one
NAME                                                         READY  SEVERITY  REASON  SINCE  MESSAGE
Cluster/capi-one                                             True                     16m
├─ClusterInfrastructure - DockerCluster/capi-one             True                     139m
└─ControlPlane - KubeadmControlPlane/capi-one-control-plane  True                     16m
  └─Machine/capi-one-control-plane-fwc4k                     True                     104m

# delete the master
❯ k delete -f master.yaml
kubeadmcontrolplane.controlplane.cluster.x-k8s.io "capi-one-control-plane" deleted
dockermachinetemplate.infrastructure.cluster.x-k8s.io "capi-one-control-plane" deleted

❯ cc describe cluster capi-one
NAME                                              READY  SEVERITY  REASON  SINCE  MESSAGE
Cluster/capi-one                                  True                     18m
└─ClusterInfrastructure - DockerCluster/capi-one  True                     140m

# delete the cluster
❯ k get cluster
NAME       PHASE         AGE    VERSION
capi-one   Provisioned   143m

❯ k delete -f cluster-def.yaml
cluster.cluster.x-k8s.io "capi-one" deleted
dockercluster.infrastructure.cluster.x-k8s.io "capi-one" deleted

❯ k get cluster
No resources found in default namespace.

❯ cc describe cluster capi-one
Error: clusters.cluster.x-k8s.io "capi-one" not found

# the capi-one cluster is gone; only the management cluster remains
❯ docker ps --format "table {{.Names}} {{.Command}}"
NAMES COMMAND
kind-control-plane "/usr/local/bin/entr…"
```
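
`docker ps` without `-a` only lists running containers. The sketch below checks that nothing named after the workload cluster is left, including stopped containers. Matching on the `capi-one-` prefix is an assumption based on the container names above, and the helper name is hypothetical.

```sh
# leftover_containers CLUSTER
# Print containers (running or stopped) whose names start with "CLUSTER-".
# Empty output means no CAPD containers for that cluster remain.
leftover_containers() {
  docker ps -a --format '{{.Names}}' | grep "^$1-" || true
}

# Usage: leftover_containers capi-one
```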