1. Introduction

-

Discrete Entities are embedded to dense real-valued vectors

- word embedding for LLM

- recommender system

-

The embedding vector can be used as input to other models

-

Also, they can provide a data-driven notion of similarity between entities

-

Cosine Similarity has become a very popular measure of semantic similarity

- the norm of the embedding vectors is not as important as the directional alignment between the embedding vectors

- unnormalized dot-product is not worked

-

Cosine similarity of the learned embeddings can in fact yield arbitrary results

- learned embeddings have a DoF that can render arbitrary cosine-similarities even though their dot-products are well-defined and unique

-

propose linear Matrix Factorization models as analytical solutions

2. Matrix Factorization Models

-

focus on linear models as they follow for closed-form solutions

-

matrix , conatining data points and features

-

the goal is to estimate a low-rank matrix where with such that is a good approximation of

-

is a user-item matrix

- the row of : item-embeddings (-dimensional)

- the row of : user-embeddings

- the embedding of user is the sum of the item-embeddings that the user has consumed

-

this is defined in terms of the unnormalized dot-product between two embeddings

-

-

once it has been learned, it is common to consider

- two items cosine similarity

- two users cosine similarity

- user-item cosine similarity

-

this can lead to arbitrary results and they may not even be unique

-

2.1 Training

-

the key factor affecting the utility of cosine similarity is the regularization employed when learning the embeddings in

-

First one applies to their product

- in Linear models, this L2-regularization is equivalent to learning with denoising (drop-out in the input layer)

- the resulting prediction accuracy os test data was superior to the second objective

- denoising/drop-out is better than weight decay (second one)

-

Second one is equivalent to the usual matrix factorization objective

- regularizing and separately is similar to weight decay in deep learning

-

if are solutions of objective, for an arbitrary rotation matrix are the solutions as well

-

cosine similarity is invariant under rotation

-

only the first objetive is invariant to rescalings of the columns of and (different latent dimensions of the embeddings)

-

if is the solution of the first objective, for an arbitrary diagonal matrix , is the solution also

-

Then devine a new solution as a function of as

-

this diagonal matrix affects the normalization of the learned user and item embeddings (rows)

where is appropriate diagonal matrices to normalize each learned embedding to unit Euclidian norm

-

a different choice for cannot be compensated by the

-

they depend on so they can be shown as

-

cosing similarities of the embeddings depend on this arbitrary matrix

-

-

the cosine similarity becomes

- item - item

- user-user

- user-item

- item - item

-

These cosine similarities all depend on arbitrary matrix

-

user-user and item-item is directly depend on while user-item is indirectly depend on due to its effect to normalizing matrices

2.2 Details on First Objective

-

The closed-form solution of the first objective is and is truncated matrices of rank

-

Sine is arbitrary, w.l.o.g. we may define

-

when we think of the special case of a full-rank MF model, this would be two cases

-

choose

- given the matrix of normalized singular vectors , the normalization

- Then

- Cosine similarity between any pair of item-embedding is zero

- the only difference in user-item embeddings is the normalization the same ranking ( is irrelevant)

-

choose

- similar to previous case

- for user-user similarities, it is based on the raw data-matrix

- it doesn't uses the learned embeddings

- normalizes the rows of but this is again the same rankings

- this is very different from the previous choice

-

Hence, the different choice of result in different cosine-similarities even though the learned model is invariant to

-

the results of cosine-similarity are arbitrary and not unique for this model

-

2.3 Details on Second Objective

-

The solution of the second objective is

-

If we use usual notation of MF and ,

- we get

- this diagonal matrix is same for user and item embeddings due to its symmetry in the L2-norm regularization

- this solution is unique there is no way to choose arbitrary matrix

-

In this case, the cosine-similarity yields unique results

-

is this matrix the best possible semantic similarities?

- comparing this case with 2.2 gives the arbitrary diagonal matrix in 2.2 may be chosen as

3. Remedies and Alternatives to Cosine-Similarity

- when a model is trained w.r.t. the dot-product, its effect on cosine-similarity can be opaque and sometimes not even unique

-

train model on cosine-similarity use layer normalization

-

project the embedding back into the original space cosine-similarity works

- view as the raw data's smoothed version and the rows of as the users' embeddings in the original space

-

- in cosine-similarity, normalization is applied after the embeddings have been learned

- this can reduce the result similarities compare to applying some normalization or reduction of popularity bias before of during learning

- To resolve this,

-

standardize data (zero mean, unit variance)

-

negative sampling, inverse propensity scaling to account for the different item popularities

- word2vec is trained by sampling negatives with a probability proportional to their frequency

-

4. Experiments

-

illustrate these findings for low-rank embeddings

-

Not aware of a good metric for semantic similarity experiments on simulated data ground-truths are known (clustered items data)

-

generated interactions between 20000 users and 1000 items assigned to 5 clusters with probability

-

sampled the powerlaw-exponent for each cluster ,

- where

-

assigned a baseline popularity to each item according to the powerlaw

-

then generated the items that each user had interacted with

- firstly, randomly sampled user-cluster preferences

- compute the user-item probabilities

- sampled the number of items for this user (used and sampled items with )

-

Learned the matrices with two training objective ( and )

- low-rank constraint , to complement the analytical result for the full-rank case above

-

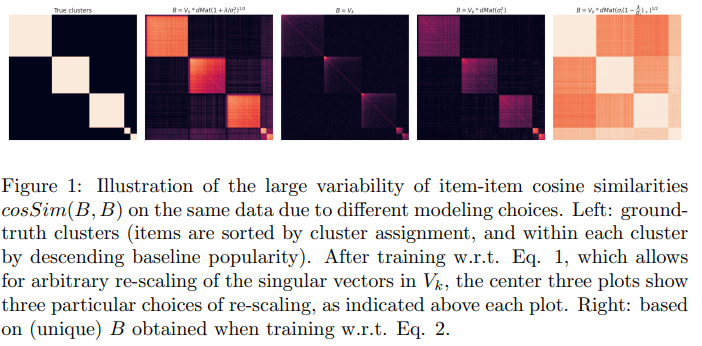

Left one is ground-truth item-item similarities

-

training with first objective and chose three re-scaling of the singular vectors in (middle three)

-

Right one is trained with second objective unique solution

-

see how vastly different the resulting cosine-similarities can be even for reasonable choice of re-scaling (not used extreme case)

5. Conclusions

-

cosine similarities are heavily dependent on the method and regularization technique

-

in some cases, it can be rendered even meaningless

-

cosine-similarity with the embeddings in deep models to be plagued by similar problems

- deep model's different layers may be subject to different regularization may affect

6. Comment

- 맹목적으로 사용하는 코사인 유사도에 대한 고찰. 하지만 너무 제한적인 상황에서 테스트를 해본 것 같지만 그냥 의심을 한번 해보자는 취지에서는 괜찮았던 것 같음.