Let's build a real neural network, a deep learning model, by stacking perceptrons in depth.

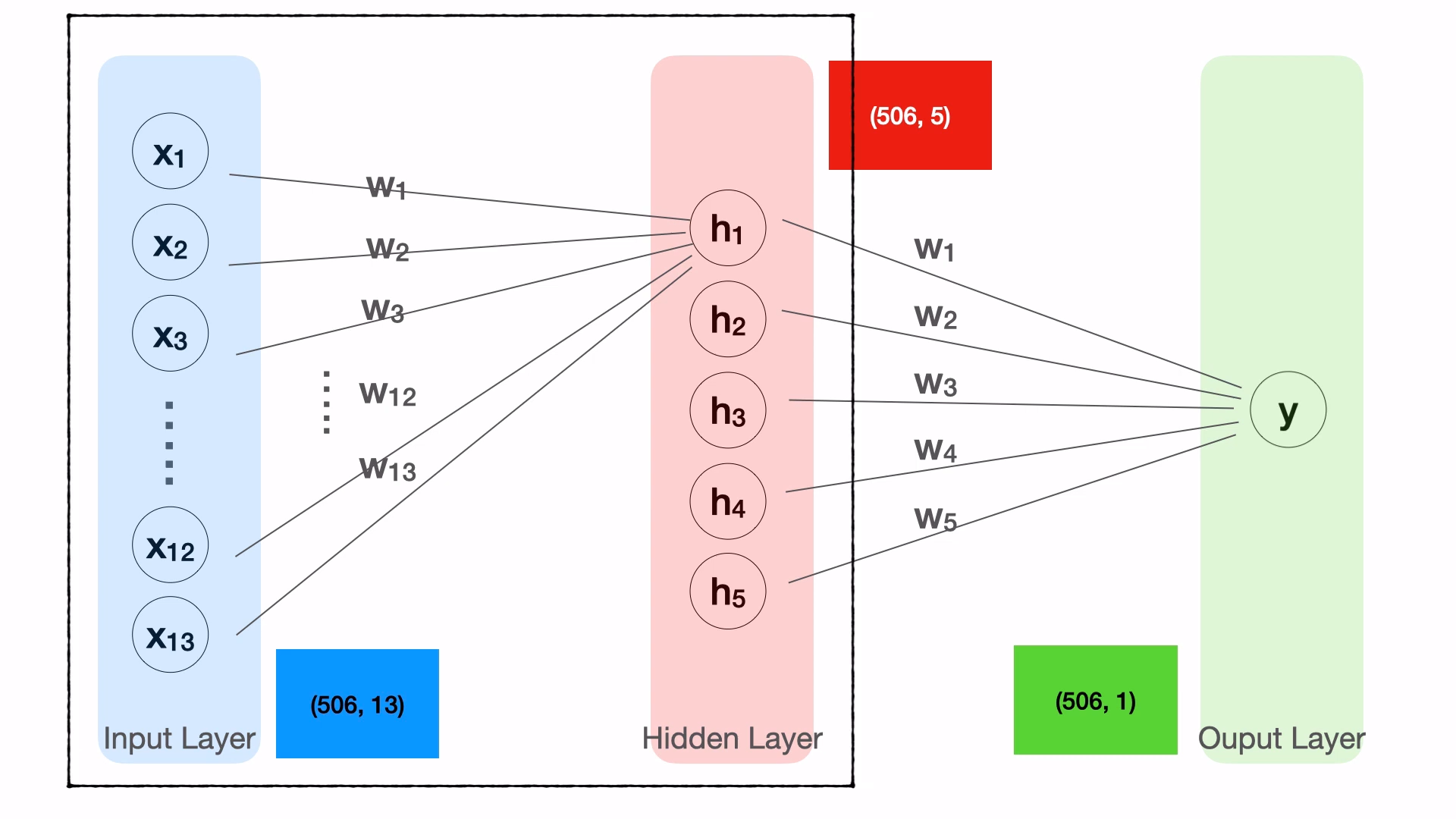

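Deep learning, i.e. an artificial neural network, is what you get when you add hidden layers between the input layer and the output layer and chain the individual models one after another, as shown above, into one large network.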

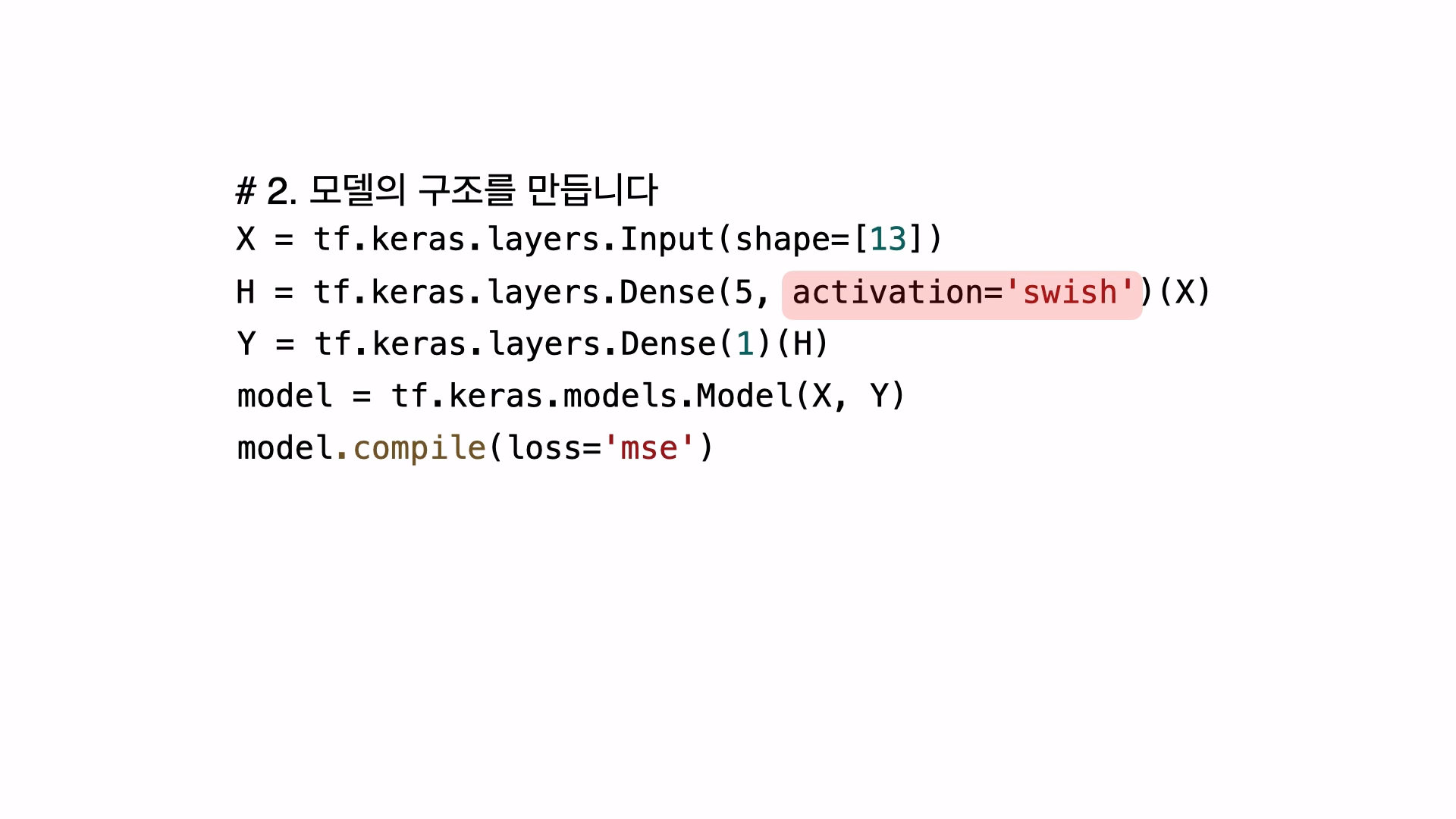

On top of the previous code, we added a line that uses the 'swish' activation function.
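As a rough illustration of what 'swish' computes, here is a minimal sketch using only the standard library. It assumes the common definition swish(x) = x * sigmoid(x); the helper names `sigmoid` and `swish` are ours, not part of the original code.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid: 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))


def swish(x: float) -> float:
    """Swish activation, assumed here to be x * sigmoid(x)."""
    return x * sigmoid(x)


# Negative inputs are damped toward 0, positive inputs pass through almost unchanged.
for x in (-2.0, 0.0, 2.0):
    print(x, swish(x))
```

Unlike a hard cutoff, the curve is smooth everywhere, and small negative inputs still produce a small negative output.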

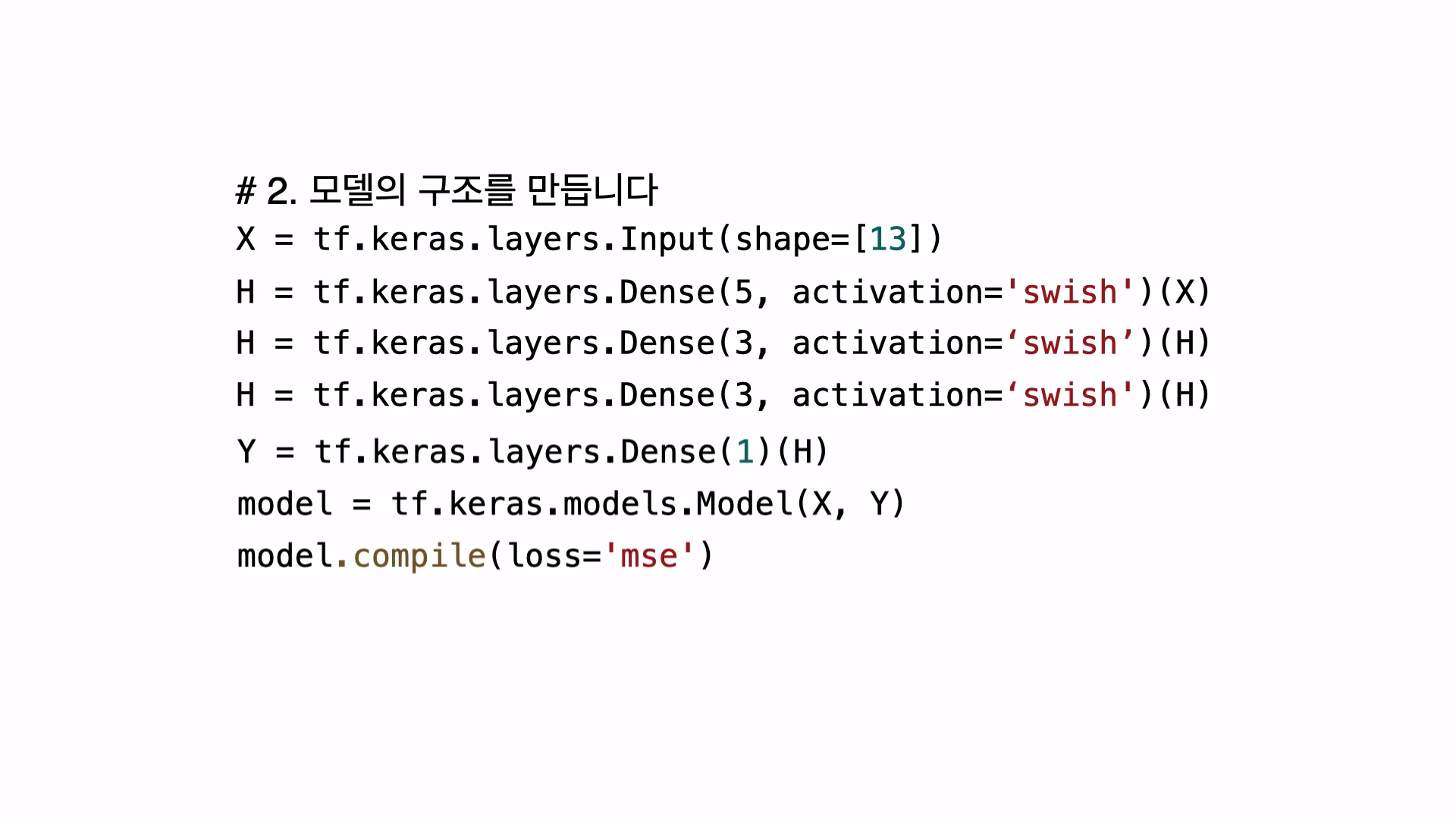

As shown above, you can also stack several hidden layers. Here the first hidden layer has 5 nodes, and the remaining two hidden layers have 3 nodes each.
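To make the idea of stacked layers concrete, here is a small pure-Python sketch of a forward pass through hidden layers of 5, 3, and 3 nodes. The input size (4), the sample values, and the random weights are all made up for illustration, and the helpers `dense` and `random_layer` are hypothetical; this is not how Keras is implemented internally.

```python
import math
import random


def swish(x):
    """Swish activation, x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))


def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: every node sums all inputs times its weights, plus a bias."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        total = sum(w * v for w, v in zip(node_weights, inputs)) + bias
        outputs.append(activation(total) if activation else total)
    return outputs


def random_layer(n_in, n_out, rng):
    """Random weights and zero biases for a layer with n_in inputs and n_out nodes."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


rng = random.Random(0)
layer_sizes = [5, 3, 3]        # hidden layer widths from the text
x = [0.5, -1.2, 3.0, 0.1]      # made-up input with 4 features (assumption)

values = x
widths = []
for n_nodes in layer_sizes:
    w, b = random_layer(len(values), n_nodes, rng)
    values = dense(values, w, b, activation=swish)
    widths.append(len(values))

# Output layer with a single node and no activation.
w, b = random_layer(len(values), 1, rng)
y = dense(values, w, b)

print(widths, len(y))
```

Each layer's output becomes the next layer's input, which is all that "connecting models in sequence" means here.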

Practice

1. Boston house price prediction

Import the libraries

import tensorflow as tf

import pandas as pd

1. Prepare the past data.

파일경로 = '/content/boston.csv'

보스턴 = pd.read_csv(파일경로)

Dependent and independent variables

독립 = 보스턴[['crim', 'zn', 'indus', 'chas', 'nox', 'rm', 'age', 'dis', 'rad', 'tax',
            'ptratio', 'b', 'lstat']]

종속 = 보스턴[['medv']]

print(독립.shape, 종속.shape)

Output

(506, 13) (506, 1)

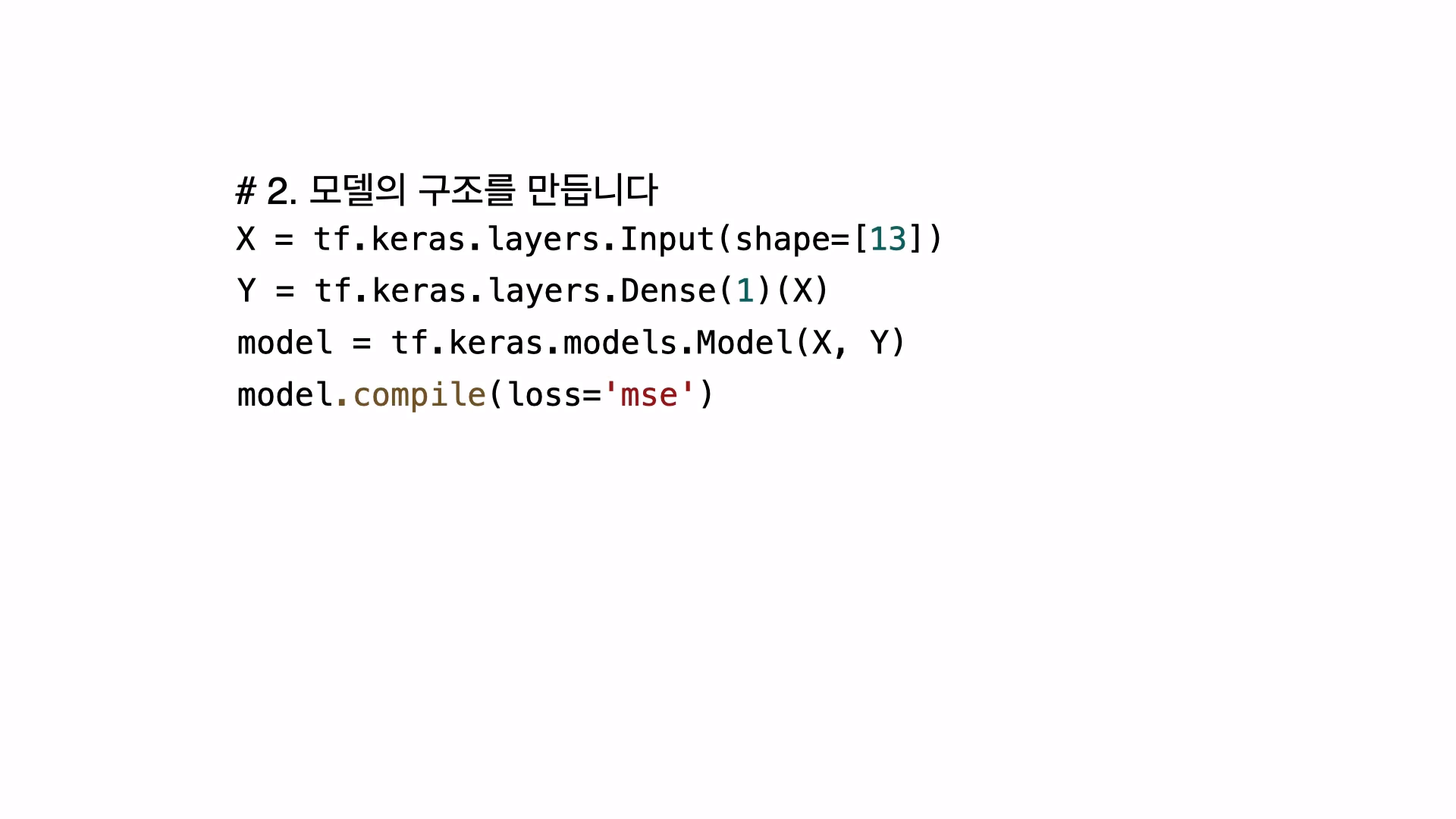

2. Build the model structure.

X = tf.keras.layers.Input(shape=[13])

H = tf.keras.layers.Dense(10, activation='swish')(X)

Y = tf.keras.layers.Dense(1)(H)

model = tf.keras.models.Model(X, Y)

model.compile(loss='mse')

Check the model structure

model.summary()

Output

Model: "model"

| Layer (type) | Output Shape | Param # |
| --- | --- | --- |
| input_1 (InputLayer) | [(None, 13)] | 0 |
| dense (Dense) | (None, 10) | 140 |
| dense_1 (Dense) | (None, 1) | 11 |

Total params: 151

Trainable params: 151

Non-trainable params: 0

3. Train (fit) the model on the data.

model.fit(독립, 종속, epochs=10)

Output

Epoch 1/10 16/16 [==============================] - 0s 1ms/step - loss: 23.9763

Epoch 2/10 16/16 [==============================] - 0s 1ms/step - loss: 26.1203

Epoch 3/10 16/16 [==============================] - 0s 1ms/step - loss: 23.9813

Epoch 4/10 16/16 [==============================] - 0s 2ms/step - loss: 24.7751

Epoch 5/10 16/16 [==============================] - 0s 2ms/step - loss: 25.1300

Epoch 6/10 16/16 [==============================] - 0s 1ms/step - loss: 24.4888

Epoch 7/10 16/16 [==============================] - 0s 1ms/step - loss: 25.1748

Epoch 8/10 16/16 [==============================] - 0s 1ms/step - loss: 24.1443

Epoch 9/10 16/16 [==============================] - 0s 1ms/step - loss: 25.6121

Epoch 10/10 16/16 [==============================] - 0s 1ms/step - loss: 24.3392

<tensorflow.python.keras.callbacks.History at 0x7f0a84c756a0>

4. Use the model.

print(model.predict(독립[:5]))

print(종속[:5])

Output

[[29.875679]
 [24.5941  ]
 [31.143375]
 [29.89589 ]
 [29.418259]]

| | medv |
| --- | --- |
| 0 | 24.0 |
| 1 | 21.6 |
| 2 | 34.7 |
| 3 | 33.4 |
| 4 | 36.2 |
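To put a number on how far these five predictions are from the actual `medv` values, we can compute the mean squared error by hand. This is the same kind of quantity as the `'mse'` loss passed to `model.compile`, though here it covers only the five rows printed above, so it will not match the training loss exactly. The helper name `mean_squared_error` is ours.

```python
# Predictions and actual values copied from the output above.
predicted = [29.875679, 24.5941, 31.143375, 29.89589, 29.418259]
actual = [24.0, 21.6, 34.7, 33.4, 36.2]


def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


mse = mean_squared_error(actual, predicted)
print(round(mse, 2))
```

The result lands in the same range as the loss values in the training log, which suggests these five rows are fairly typical of the data.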

2. Iris species classification

1. Prepare the past data.

파일경로 = '/content/iris.csv'

아이리스 = pd.read_csv(파일경로)

One-hot encoding

인코딩 = pd.get_dummies(아이리스)

Dependent and independent variables

독립 = 인코딩[['꽃잎길이', '꽃잎폭', '꽃받침길이', '꽃받침폭']]

종속 = 인코딩[['품종_setosa', '품종_versicolor', '품종_virginica']]

print(독립.shape, 종속.shape)

Output

(150, 4) (150, 3)
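The dependent variable has three columns because one-hot encoding turns the single species column into one 0/1 column per species. The sketch below imitates that idea in plain Python. The `one_hot` helper and the four-row sample are hypothetical, and it only roughly mirrors what `pd.get_dummies` does for a text column.

```python
def one_hot(labels, prefix):
    """Turn a list of category labels into one 0/1 column per category."""
    categories = sorted(set(labels))
    columns = [f"{prefix}_{c}" for c in categories]
    rows = [[1 if label == c else 0 for c in categories] for label in labels]
    return columns, rows


# Made-up sample of the species column (assumption, not the real file).
species = ["setosa", "versicolor", "virginica", "setosa"]
columns, rows = one_hot(species, "품종")

print(columns)
for row in rows:
    print(row)
```

Each row contains exactly one 1, marking which species that flower belongs to.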

2. Build the model structure.

X = tf.keras.layers.Input(shape=[4])

H = tf.keras.layers.Dense(8, activation='swish')(X)

H = tf.keras.layers.Dense(8, activation='swish')(H)

H = tf.keras.layers.Dense(8, activation='swish')(H)

Y = tf.keras.layers.Dense(3, activation='softmax')(H)

model = tf.keras.models.Model(X, Y)

model.compile(loss='categorical_crossentropy', metrics=['accuracy'])

Check the model structure

model.summary()

Output

Model: "model_2"

| Layer (type) | Output Shape | Param # |
| --- | --- | --- |
| input_3 (InputLayer) | [(None, 4)] | 0 |
| dense_6 (Dense) | (None, 8) | 40 |
| dense_7 (Dense) | (None, 8) | 72 |
| dense_8 (Dense) | (None, 8) | 72 |
| dense_9 (Dense) | (None, 3) | 27 |

Total params: 211

Trainable params: 211

Non-trainable params: 0
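The Param # values in the summary can be checked by hand: a Dense layer has one weight per (input, node) pair plus one bias per node. The sketch below recomputes the counts for this model and for the Boston model earlier; the helper names `dense_params` and `count_params` are ours.

```python
def dense_params(n_inputs, n_nodes):
    """Weights (n_inputs * n_nodes) plus one bias per node."""
    return n_inputs * n_nodes + n_nodes


def count_params(n_inputs, layer_sizes):
    """Parameter count of each Dense layer when the layers are chained in order."""
    counts = []
    for n_nodes in layer_sizes:
        counts.append(dense_params(n_inputs, n_nodes))
        n_inputs = n_nodes  # this layer's output feeds the next layer
    return counts


iris_counts = count_params(4, [8, 8, 8, 3])
boston_counts = count_params(13, [10, 1])

print(iris_counts, sum(iris_counts))      # [40, 72, 72, 27] 211
print(boston_counts, sum(boston_counts))  # [140, 11] 151
```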

3. Train (fit) the model on the data.

model.fit(독립, 종속, epochs=1000, verbose=0)

model.fit(독립, 종속, epochs=10)

Output

Epoch 1/10 5/5 [==============================] - 0s 2ms/step - loss: 0.0439 - accuracy: 0.9800

Epoch 2/10 5/5 [==============================] - 0s 2ms/step - loss: 0.0453 - accuracy: 0.9800

Epoch 3/10 5/5 [==============================] - 0s 2ms/step - loss: 0.0447 - accuracy: 0.9733

Epoch 4/10 5/5 [==============================] - 0s 2ms/step - loss: 0.0463 - accuracy: 0.9733

Epoch 5/10 5/5 [==============================] - 0s 2ms/step - loss: 0.0471 - accuracy: 0.9733

Epoch 6/10 5/5 [==============================] - 0s 2ms/step - loss: 0.0417 - accuracy: 0.9867

Epoch 7/10 5/5 [==============================] - 0s 2ms/step - loss: 0.0467 - accuracy: 0.9800

Epoch 8/10 5/5 [==============================] - 0s 2ms/step - loss: 0.0479 - accuracy: 0.9800

Epoch 9/10 5/5 [==============================] - 0s 4ms/step - loss: 0.0461 - accuracy: 0.9800

Epoch 10/10 5/5 [==============================] - 0s 2ms/step - loss: 0.0407 - accuracy: 0.9867

<tensorflow.python.keras.callbacks.History at 0x7fd1680626d8>
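The loss in this log is categorical cross-entropy, computed on the softmax output of the last layer. Here is a rough sketch of both pieces for a single sample. The raw scores in `logits` are made up, and the small `eps` guard against log(0) is our assumption rather than a statement about how Keras handles it.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    largest = max(logits)
    exps = [math.exp(z - largest) for z in logits]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Negative log of the probability assigned to the true class (one-hot target)."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


logits = [2.0, 0.5, -1.0]   # made-up raw scores for the three species
probs = softmax(logits)
target = [1, 0, 0]          # one-hot target: the true class is the first one
loss = categorical_crossentropy(target, probs)

print(probs)
print(loss)
```

The more probability the model puts on the correct class, the closer the loss gets to 0, which is why a loss around 0.04 goes together with an accuracy near 98%.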

4. Use the model.

print(model.predict(독립[:5]))

print(종속[:5])

Output

[[1.0000000e+00 5.6188866e-11 5.0029675e-15]
 [1.0000000e+00 1.4186266e-08 9.0516131e-13]
 [1.0000000e+00 8.4865925e-10 7.9909718e-14]
 [1.0000000e+00 2.7057682e-08 8.9389092e-13]
 [1.0000000e+00 2.6075730e-11 2.2111196e-15]]

| | 품종_setosa | 품종_versicolor | 품종_virginica |
| --- | --- | --- | --- |
| 0 | 1 | 0 | 0 |
| 1 | 1 | 0 | 0 |
| 2 | 1 | 0 | 0 |
| 3 | 1 | 0 | 0 |
| 4 | 1 | 0 | 0 |
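Each prediction row is a set of three probabilities, one per species column. To turn a row into a single answer, pick the column with the highest probability. The sketch below does that for the five rows printed above; the `predicted_label` helper is ours.

```python
# Column names and prediction rows copied from the output above.
columns = ["품종_setosa", "품종_versicolor", "품종_virginica"]
predictions = [
    [1.0000000e+00, 5.6188866e-11, 5.0029675e-15],
    [1.0000000e+00, 1.4186266e-08, 9.0516131e-13],
    [1.0000000e+00, 8.4865925e-10, 7.9909718e-14],
    [1.0000000e+00, 2.7057682e-08, 8.9389092e-13],
    [1.0000000e+00, 2.6075730e-11, 2.2111196e-15],
]


def predicted_label(probabilities, names):
    """Return the name of the column with the highest probability (argmax)."""
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return names[best]


labels = [predicted_label(row, columns) for row in predictions]
print(labels)
```

All five rows come out as 품종_setosa, which matches the one-hot targets printed for the same rows.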