Naver API

Registering for the Naver API

- Naver Developers center
  - https://developers.naver.com/main/
- Application
  - Register an application
    - Application name: ds_study
    - APIs used
      - Search
      - DataLab (Search Trend)
      - DataLab (Shopping Insight)
    - Add environment
      - WEB settings
        - http://localhost
- Client ID: -
- Client Secret: -

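Using the Naver API

- https://developers.naver.com/docs/serviceapi/search/blog/blog.md#python (the developer guide provides sample code)

- urllib: package for handling requests to and responses from a server over HTTP

- urllib.request: module for opening URLs and sending client requests

- urllib.parse: module for parsing and encoding URLs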
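To illustrate what urllib.parse contributes here: a query containing Korean text has to be percent-encoded before it can be placed in a URL. The short sketch below uses only standard-library calls; the parameter values are just examples.

```python
import urllib.parse

# Percent-encode a Korean search term (encoded as UTF-8 bytes).
encoded = urllib.parse.quote("파이썬")
print(encoded)  # %ED%8C%8C%EC%9D%B4%EC%8D%AC

# urlencode builds a whole query string and encodes each value.
query_string = urllib.parse.urlencode({"query": "파이썬", "display": 5})
print(query_string)  # query=%ED%8C%8C%EC%9D%B4%EC%8D%AC&display=5

# unquote reverses the encoding.
print(urllib.parse.unquote(encoded))  # 파이썬
```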

검색

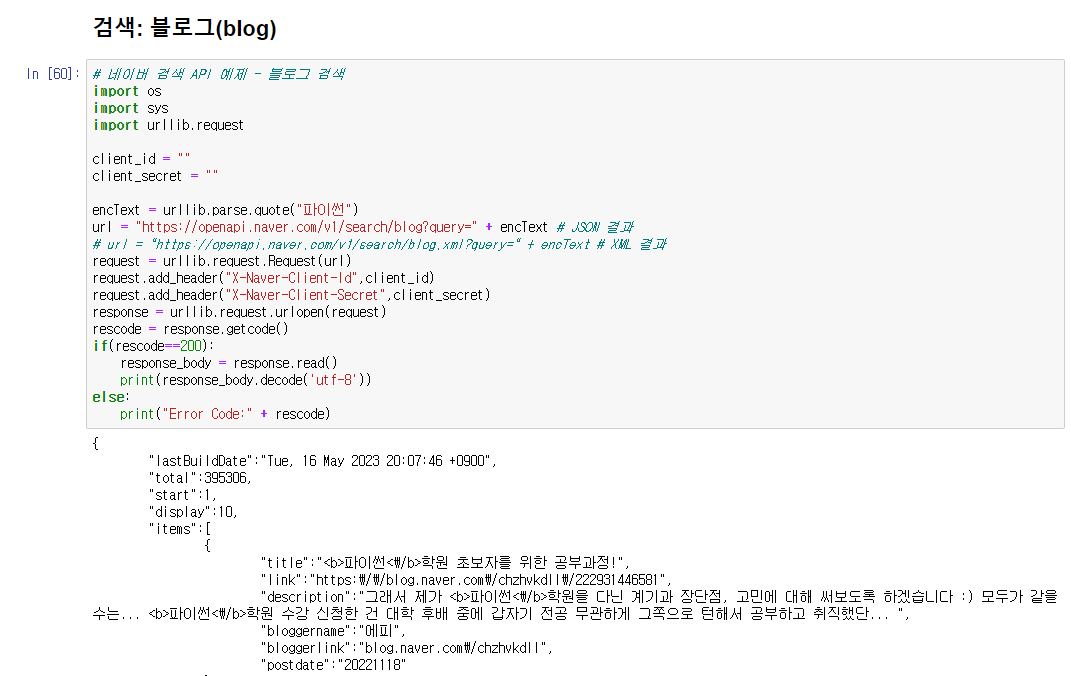

- 검색: 블로그(blog)

네이버 블로그에서 파이썬을 검색한 결과를 가져온다.

    # Naver Search API example - blog search
    import urllib.parse
    import urllib.request

    client_id = ""      # Client ID issued for the registered application
    client_secret = ""  # Client Secret issued for the registered application

    encText = urllib.parse.quote("파이썬")  # percent-encode the query
    url = "https://openapi.naver.com/v1/search/blog?query=" + encText  # JSON result
    # url = "https://openapi.naver.com/v1/search/blog.xml?query=" + encText  # XML result

    request = urllib.request.Request(url)
    request.add_header("X-Naver-Client-Id", client_id)
    request.add_header("X-Naver-Client-Secret", client_secret)

    response = urllib.request.urlopen(request)
    rescode = response.getcode()

    if rescode == 200:
        response_body = response.read()
        print(response_body.decode("utf-8"))
    else:
        print("Error Code:" + str(rescode))  # rescode is an int, so convert before concatenating

- Search: book

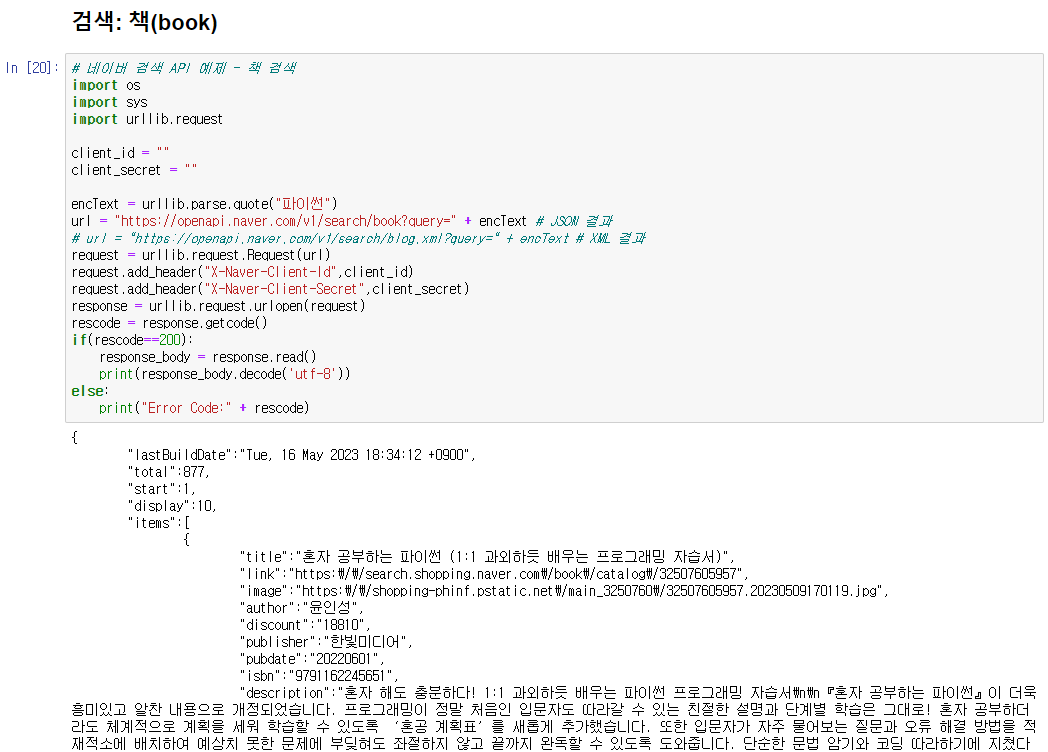

네이버 책사이트에서 파이썬을 검색한 결과를 가져온다.

블로그 검색에서 url만 책 사이트로 변경

# 네이버 검색 API 예제 - 책 검색

import os

import sys

import urllib.request

client_id = ""

client_secret = ""

encText = urllib.parse.quote("파이썬")

url = "https://openapi.naver.com/v1/search/book?query=" + encText # JSON 결과

# url = "https://openapi.naver.com/v1/search/blog.xml?query=" + encText # XML 결과

request = urllib.request.Request(url)

request.add_header("X-Naver-Client-Id",client_id)

request.add_header("X-Naver-Client-Secret",client_secret)

response = urllib.request.urlopen(request)

rescode = response.getcode()

if(rescode==200):

response_body = response.read()

print(response_body.decode('utf-8'))

else:

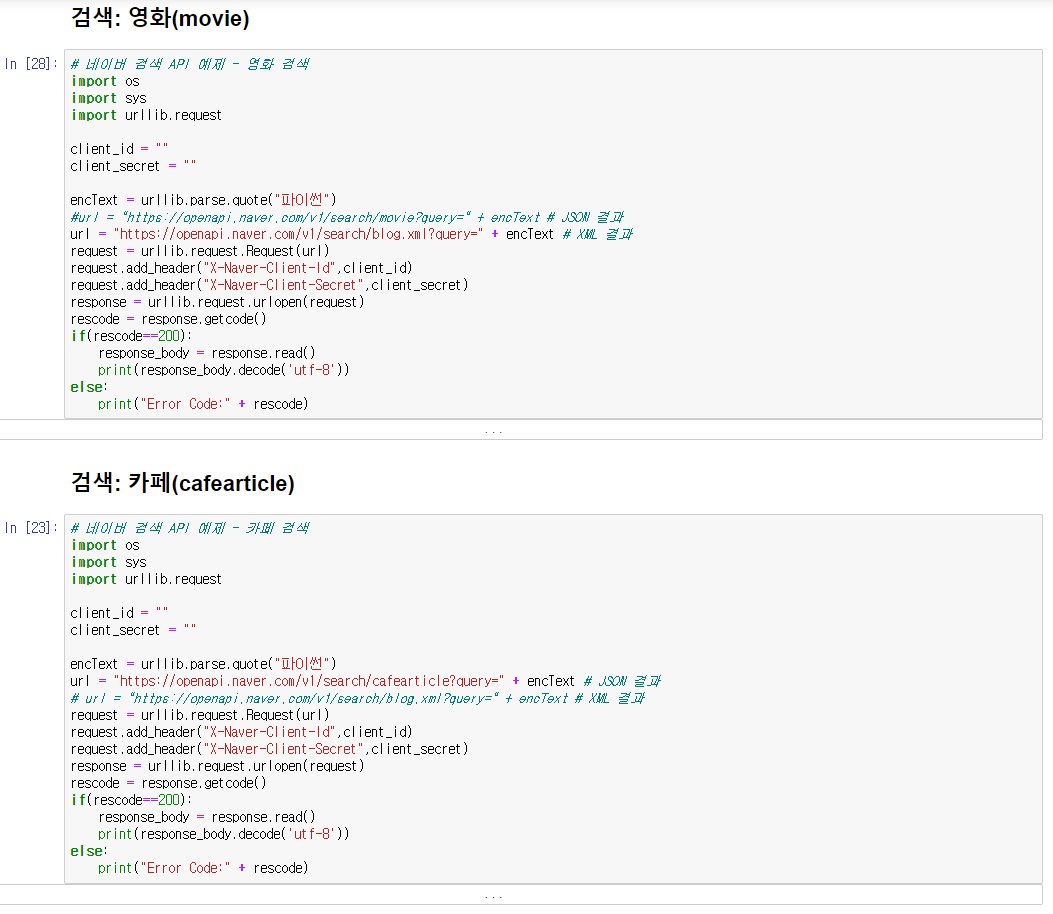

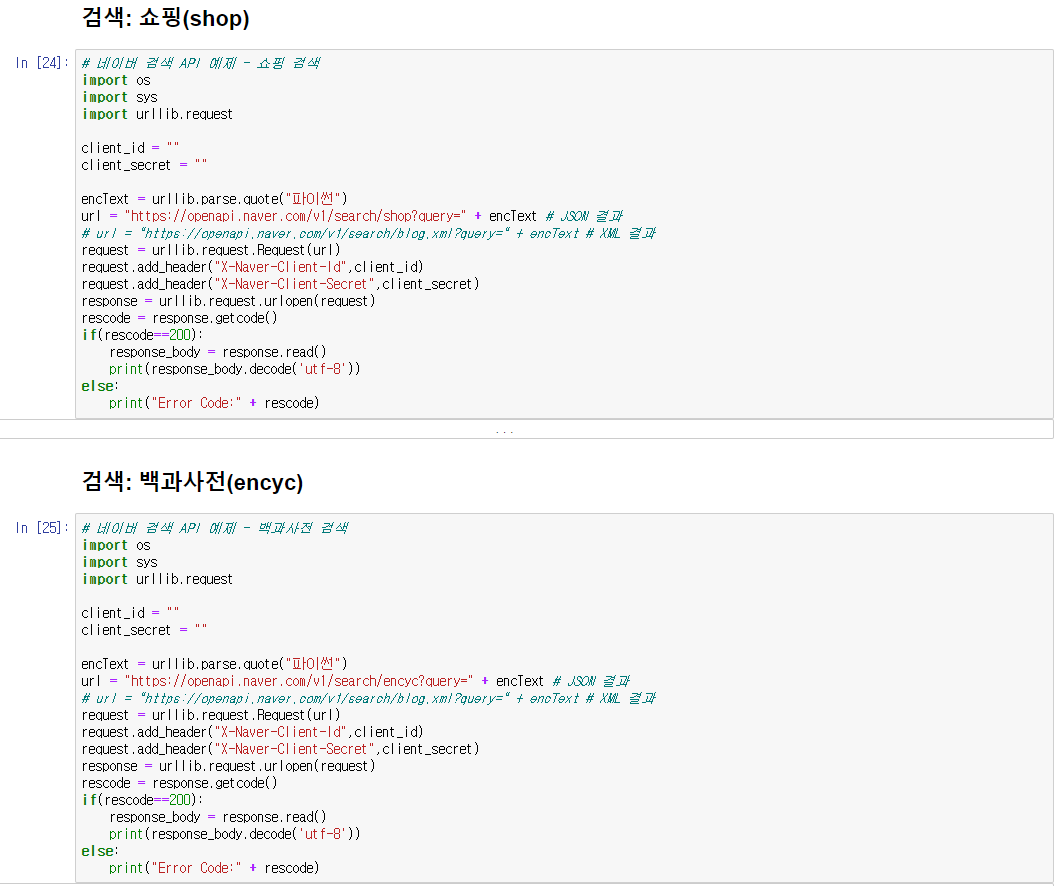

print("Error Code:" + rescode)- 영화, 카페, 쇼핑, 백과사전

Beyond blogs and books, changing only the URL lets the same code query the other search services that Naver exposes through its API.

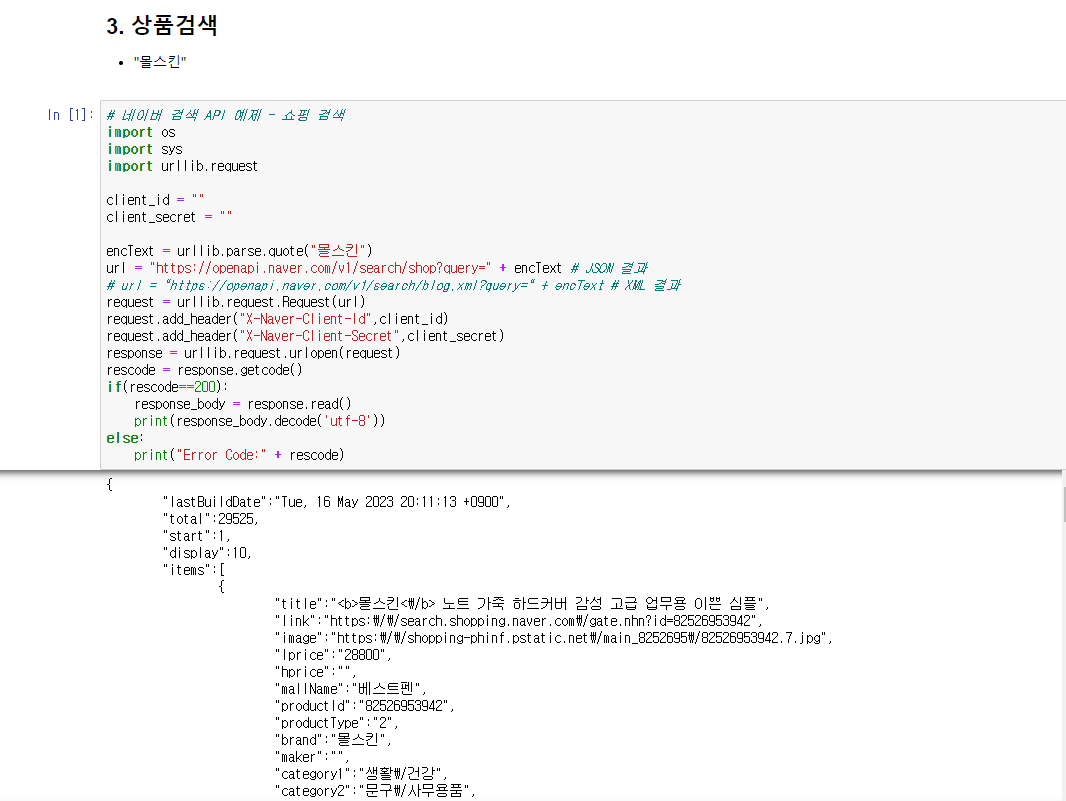
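As a minimal sketch of "only the URL changes", the helper below builds the request URL from a service node name. The function name build_search_url is hypothetical. The blog, book, and shop nodes appear in this post; the other node names are assumptions that should be checked against the Naver developer guide.

```python
import urllib.parse

BASE_URL = "https://openapi.naver.com/v1/search/"

def build_search_url(node, query):
    """Return the JSON search URL for one service node (hypothetical helper)."""
    return BASE_URL + node + "?query=" + urllib.parse.quote(query)

# blog, book, and shop are the nodes used in this post;
# "cafearticle" and "encyc" are assumed names for cafe and encyclopedia search.
for node in ["blog", "book", "shop", "cafearticle", "encyc"]:
    print(build_search_url(node, "파이썬"))
```

The request headers and response handling stay the same as in the blog and book examples; only the node segment differs.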

Product search project

Search Naver Shopping for "몰스킨" (Moleskine) and organize the results into a DataFrame.

    # Naver Search API example - shopping search
    import urllib.parse
    import urllib.request

    client_id = ""
    client_secret = ""

    encText = urllib.parse.quote("몰스킨")
    url = "https://openapi.naver.com/v1/search/shop?query=" + encText  # JSON result
    # url = "https://openapi.naver.com/v1/search/shop.xml?query=" + encText  # XML result

    request = urllib.request.Request(url)
    request.add_header("X-Naver-Client-Id", client_id)
    request.add_header("X-Naver-Client-Secret", client_secret)

    response = urllib.request.urlopen(request)
    rescode = response.getcode()

    if rescode == 200:
        response_body = response.read()
        print(response_body.decode("utf-8"))
    else:
        print("Error Code:" + str(rescode))

URL generation function

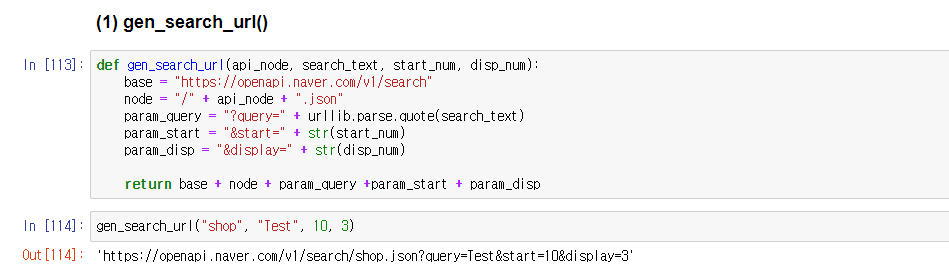

    def gen_search_url(api_node, search_text, start_num, disp_num):
        base = "https://openapi.naver.com/v1/search"
        node = "/" + api_node + ".json"
        param_query = "?query=" + urllib.parse.quote(search_text)
        param_start = "&start=" + str(start_num)
        param_disp = "&display=" + str(disp_num)
        return base + node + param_query + param_start + param_disp

Search function

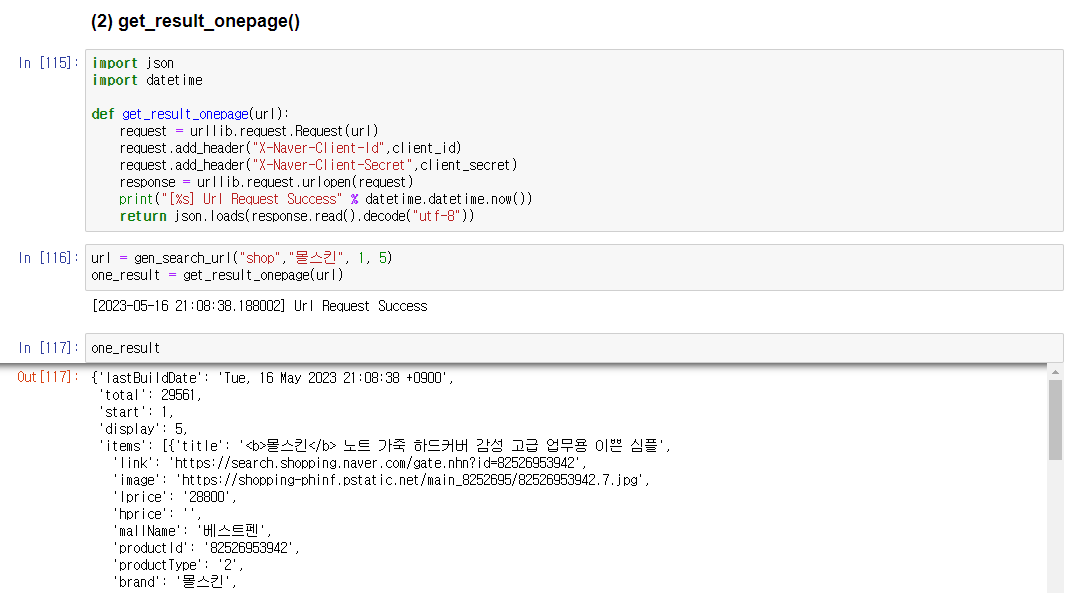

Use the URL built by the function above to query the Naver API and extract the information we need.

    import json
    import datetime

    def get_result_onepage(url):
        request = urllib.request.Request(url)
        request.add_header("X-Naver-Client-Id", client_id)
        request.add_header("X-Naver-Client-Secret", client_secret)
        response = urllib.request.urlopen(request)
        print("[%s] Url Request Success" % datetime.datetime.now())
        # Parse the JSON body into a Python dict
        return json.loads(response.read().decode("utf-8"))

    url = gen_search_url("shop", "몰스킨", 1, 5)
    one_result = get_result_onepage(url)

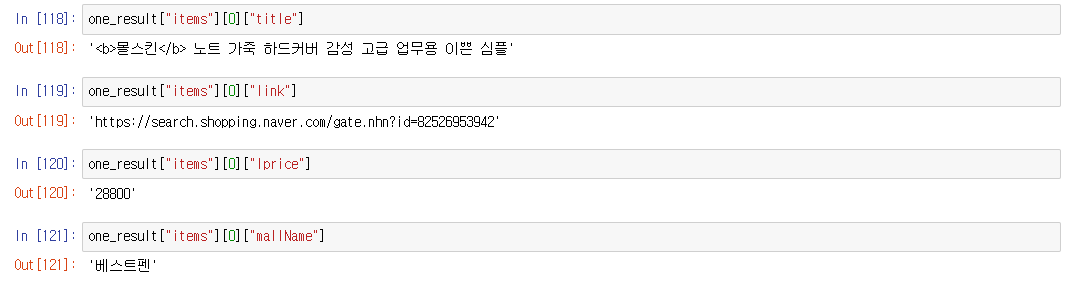
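get_result_onepage assumes the request succeeds. urllib.request.urlopen raises urllib.error.HTTPError for 4xx and 5xx responses, for example when the Client ID or Secret is rejected, so one option is to catch that error and return None. The sketch below is an assumption about how this could look, not part of the original lecture code. The name get_result_safe and the opener parameter (added so the function can be exercised without a network call) are hypothetical.

```python
import json
import urllib.error
import urllib.request

def get_result_safe(url, client_id, client_secret, opener=urllib.request.urlopen):
    """Return the parsed JSON response, or None if the server reports an HTTP error."""
    request = urllib.request.Request(url)
    request.add_header("X-Naver-Client-Id", client_id)
    request.add_header("X-Naver-Client-Secret", client_secret)
    try:
        response = opener(request)
    except urllib.error.HTTPError as err:
        # err.code holds the HTTP status of the failed request.
        print("Request failed with HTTP status", err.code)
        return None
    return json.loads(response.read().decode("utf-8"))
```

Callers can then skip a failed page with a simple `if result is None` check instead of letting the whole run stop.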

    one_result["items"][0]["title"]
    one_result["items"][0]["link"]
    one_result["items"][0]["lprice"]
    one_result["items"][0]["mallName"]

DataFrame creation function

import pandas as pd

def get_fields(json_data):

title = [each["title"] for each in json_data["items"]]

link = [each["link"] for each in json_data["items"]]

lprice = [each["lprice"] for each in json_data["items"]]

mall_name = [each["mallName"] for each in json_data["items"]]

result_pd = pd.DataFrame({

"title": title,

"link": link,

"lprice" : lprice,

"mall": mall_name}, columns = ["title", "lprice", "link", "mall"])

return result_pd

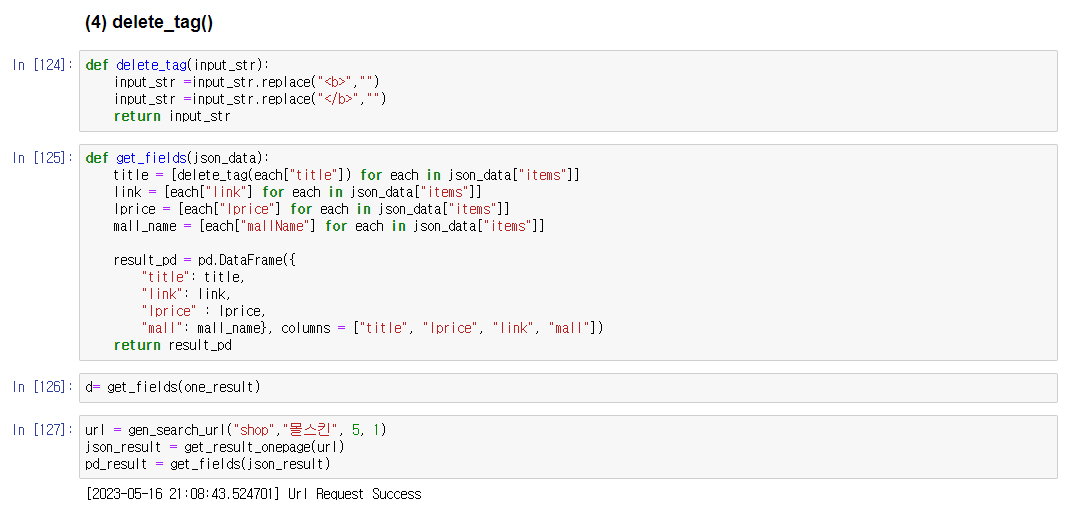

get_fields(one_result)태그 삭제하여 데이터프레임 재생성 함수

    def delete_tag(input_str):
        # Remove the <b> tags that wrap the matched keyword in titles
        input_str = input_str.replace("<b>", "")
        input_str = input_str.replace("</b>", "")
        return input_str
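delete_tag removes only the literal `<b>` and `</b>` strings. If titles could also contain other markup or HTML entities such as `&amp;`, a more general variant might combine a regular expression with html.unescape. This is a sketch under that assumption; the function name strip_markup and the sample title are made up for illustration.

```python
import html
import re

TAG_PATTERN = re.compile(r"<[^>]+>")  # matches any <...> tag

def strip_markup(text):
    """Remove HTML tags, then decode entities such as &amp; (hypothetical helper)."""
    return html.unescape(TAG_PATTERN.sub("", text))

print(strip_markup("<b>몰스킨</b> 클래식 노트 &amp; 펜"))  # 몰스킨 클래식 노트 & 펜
```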

    def get_fields(json_data):
        # Same as before, but titles are cleaned with delete_tag
        title = [delete_tag(each["title"]) for each in json_data["items"]]
        link = [each["link"] for each in json_data["items"]]
        lprice = [each["lprice"] for each in json_data["items"]]
        mall_name = [each["mallName"] for each in json_data["items"]]

        result_pd = pd.DataFrame({
            "title": title,
            "link": link,
            "lprice": lprice,
            "mall": mall_name}, columns=["title", "lprice", "link", "mall"])
        return result_pd

    get_fields(one_result)

Running the functions step by step

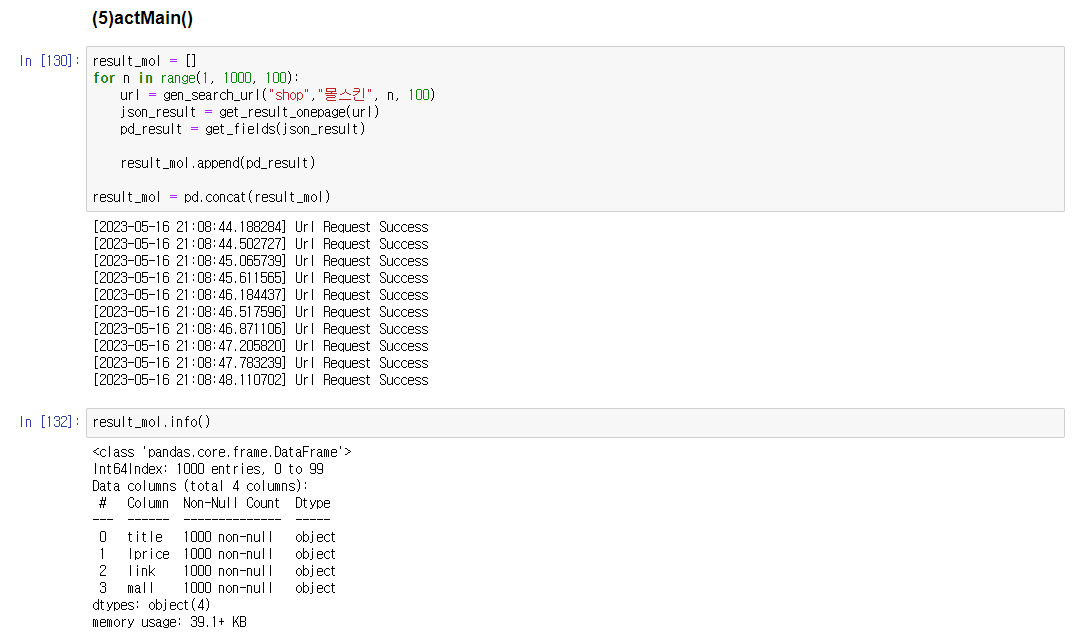

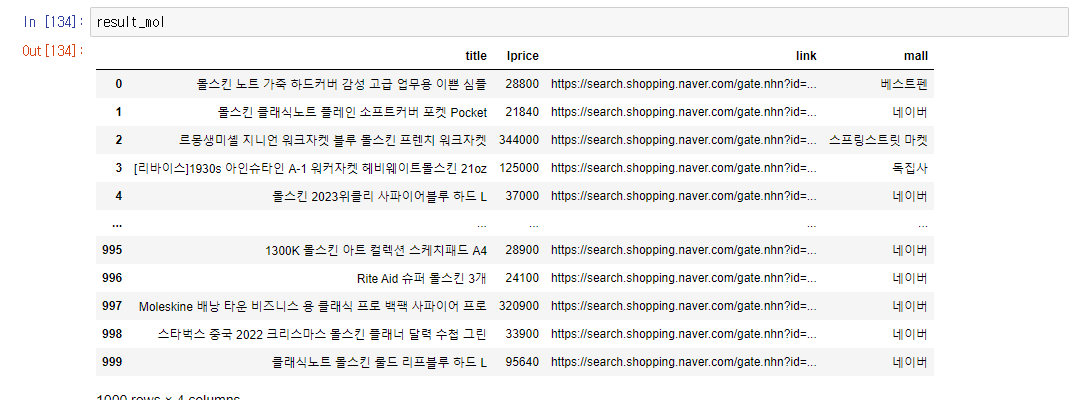

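Run the functions above in a loop: starting at item 1, request 100 items at a time until 1,000 items are collected, then combine the pages into a single DataFrame with concat.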
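To make the paging arithmetic concrete: with 100 items per request, the start values step from 1 to 901, which gives 10 requests and 1,000 items. The sketch below only computes the offsets. The function name page_starts is hypothetical, and the limits of 100 for display and 1000 for start are my understanding of the search API, to be confirmed in the developer guide.

```python
def page_starts(total, per_page):
    """Return the 1-based start offset of each page (hypothetical helper)."""
    return list(range(1, total + 1, per_page))

starts = page_starts(1000, 100)
print(starts)       # [1, 101, 201, 301, 401, 501, 601, 701, 801, 901]
print(len(starts))  # 10
```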

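    result_mol = []
    for n in range(1, 1000, 100):
        url = gen_search_url("shop", "몰스킨", n, 100)
        json_result = get_result_onepage(url)
        pd_result = get_fields(json_result)
        result_mol.append(pd_result)

    # ignore_index=True renumbers the rows 0..N-1 instead of repeating 0..99 for every page
    result_mol = pd.concat(result_mol, ignore_index=True)
    # Assumption: lprice arrives as a string, so convert it to a number for numeric formatting
    result_mol["lprice"] = pd.to_numeric(result_mol["lprice"], errors="coerce")

Saving the DataFrame to Excel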

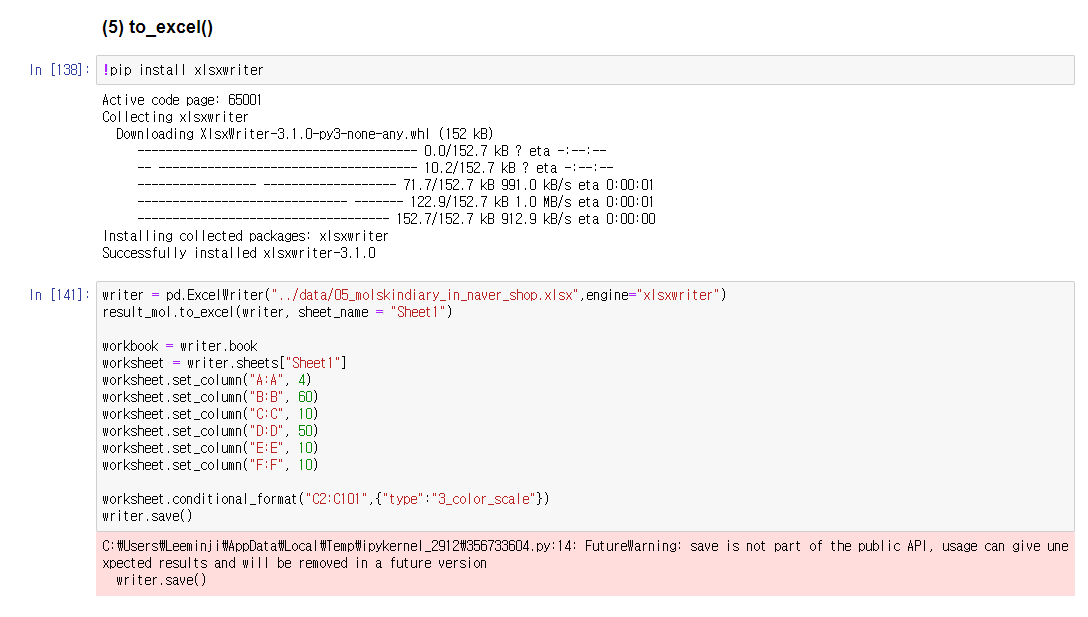

Working with Excel from Jupyter Notebook

    !pip install xlsxwriter

    writer = pd.ExcelWriter("../data/05_molskindiary_in_naver_shop.xlsx", engine="xlsxwriter")
    result_mol.to_excel(writer, sheet_name="Sheet1")

    workbook = writer.book
    worksheet = writer.sheets["Sheet1"]

    # Column widths: A is the index, B the title, C the price, D the link, E the mall
    worksheet.set_column("A:A", 4)
    worksheet.set_column("B:B", 60)
    worksheet.set_column("C:C", 10)
    worksheet.set_column("D:D", 50)
    worksheet.set_column("E:E", 10)
    worksheet.set_column("F:F", 10)

    # Apply a three-color scale to the first 100 prices
    worksheet.conditional_format("C2:C101", {"type": "3_color_scale"})
    writer.close()  # writer.save() was removed in pandas 2.0

Data visualization

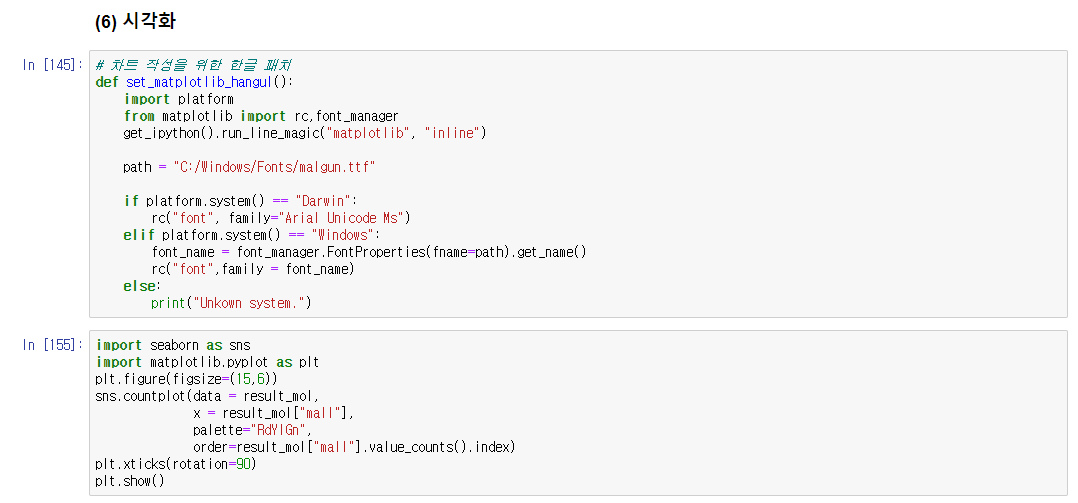

    # Font setup so that Korean text renders correctly in charts
    def set_matplotlib_hangul():
        import platform
        from matplotlib import rc, font_manager

        get_ipython().run_line_magic("matplotlib", "inline")
        path = "C:/Windows/Fonts/malgun.ttf"
        if platform.system() == "Darwin":
            rc("font", family="Arial Unicode MS")
        elif platform.system() == "Windows":
            font_name = font_manager.FontProperties(fname=path).get_name()
            rc("font", family=font_name)
        else:
            print("Unknown system.")

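    import seaborn as sns
    import matplotlib.pyplot as plt

    set_matplotlib_hangul()  # apply the Korean font before drawing

    plt.figure(figsize=(15, 6))
    # Count the listings per mall, most frequent first
    sns.countplot(
        data=result_mol,
        x="mall",
        palette="RdYlGn",
        order=result_mol["mall"].value_counts().index,
    )
    plt.xticks(rotation=90)
    plt.show()

This post was written from excerpts of the lecture materials of the Zerobase Data School (제로베이스 데이터 취업스쿨).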