Udemy Python Bootcamp Day 045

Beautiful Soup Module

Beautiful Soup is a Python library for pulling data out of HTML and XML files. HTML and XML are responsible for structuring data, such as the content of a website, using their tags.

from bs4 import BeautifulSoup

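# import lxml  # not required: to use the lxml parser instead, install lxml and pass "lxml" in place of "html.parser"

with open("website.html", encoding="utf-8") as file:
    contents = file.read()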

soup = BeautifulSoup(contents, "html.parser")

print(soup.title)

print(soup.title.name)

print(soup.title.string)

#output

<title>Awesomee's Personal Site</title>

title

Awesomee's Personal Site

print(soup) : prints the whole HTML file

print(soup.prettify()) : prints the whole HTML file, indented

print(soup.p) : prints the first <p> tag

print(soup.a) : prints the first <a> tag
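Since website.html isn't included in these notes, here is a small self-contained sketch of the same attribute-style navigation. The HTML string is a hypothetical stand-in for website.html; only standard Beautiful Soup attributes and methods are used.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML used in place of website.html so the example runs on its own
html = """
<html>
  <head><title>Example Page</title></head>
  <body>
    <p>First paragraph <a href="https://example.com/">Example</a></p>
    <p>Second paragraph</p>
  </body>
</html>
"""

soup = BeautifulSoup(html, "html.parser")

print(soup.title)          # the whole <title> tag
print(soup.title.name)     # the tag's name
print(soup.title.string)   # the text inside the tag
print(soup.p)              # only the FIRST <p> tag, even though there are two
print(soup.a.get("href"))  # an attribute of the first <a> tag

pretty = soup.prettify()   # prettify() returns a re-indented string with one tag per line
print(pretty)
```

Note that `soup.p` and `soup.a` never return more than one tag; to get every match you need `find_all()`.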

Finding and Selecting Particular Elements

all_anchor_tags = soup.find_all(name="a")

print(all_anchor_tags)

for tag in all_anchor_tags:
    print(tag.getText())

for tag in all_anchor_tags:
    print(tag.get("href"))

#output

[<a href="https://www.appbrewery.co/">The App Brewery</a>, <a href="https://angelabauer.github.io/cv/hobbies.html">My Hobbies</a>, <a href="https://angelabauer.github.io/cv/contact-me.html">Contact Me</a>]

The App Brewery

My Hobbies

Contact Me

https://www.appbrewery.co/

https://angelabauer.github.io/cv/hobbies.html

https://angelabauer.github.io/cv/contact-me.html

heading = soup.find(name="h1", id="name")

print(heading)

section_heading = soup.find(name="h3", class_="heading")

print(section_heading)

print(section_heading.getText())

print(section_heading.name)

print(section_heading.string)

print(section_heading.get("class"))

#output

<h1 id="name">Awesomee</h1>

<h3 class="heading">Books and Teaching</h3>

Books and Teaching

h3

Books and Teaching

['heading']

company_url = soup.select_one(selector="p a")

selector="p a" is a CSS selector: it matches an <a> tag nested anywhere inside a <p> tag. CSS selectors aren't limited to tag names; you can also select by class (e.g. ".heading") or by id (e.g. "#name").
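To compare the two styles side by side, here is a minimal sketch that locates the same elements once with `find()`/`find_all()` (tag name plus attribute filters) and once with `select_one()`/`select()` (CSS selector strings). The HTML snippet is hypothetical, loosely modeled on website.html.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML loosely modeled on website.html
html = """
<h1 id="name">Jane</h1>
<h3 class="heading">Books</h3>
<p>Visit <a href="https://example.com/">Example Co</a></p>
<h3 class="heading">Other Pages</h3>
"""

soup = BeautifulSoup(html, "html.parser")

# find()/find_all(): tag name plus attribute filters (class_ because "class" is a Python keyword)
by_find = soup.find(name="h1", id="name")
headings_find = soup.find_all(name="h3", class_="heading")

# select_one()/select(): CSS selector strings
by_select = soup.select_one("#name")       # id selector
headings_select = soup.select(".heading")  # class selector
link = soup.select_one("p a")              # <a> nested inside a <p>

print(by_find is by_select)                # both return the same Tag object from the tree
print([h.get_text() for h in headings_select])
print(link.get("href"))
```

Both styles return the same objects; CSS selectors mainly pay off when you need nesting (like "p a") in a single expression.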

name = soup.select_one(selector="#name")

print(name)

headings = soup.select(".heading")

print(headings)

#output

<h1 id="name">Awesomee</h1>

[<h3 class="heading">Books and Teaching</h3>, <h3 class="heading">Other Pages</h3>]

Scraping a Live Website

Print the title and link of the article with the largest number of votes.
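The task boils down to pairing each title with its own score and picking the pair with the highest score. Below is a minimal offline sketch of that idea; the HTML snippet, the `row`/`title`/`score` class names, and the one-row-per-article layout are all hypothetical and do not reflect Hacker News's real markup. Keeping each title and score together avoids the index misalignment that can happen when some entries have no score.

```python
from bs4 import BeautifulSoup

# Hypothetical markup: each <tr class="row"> holds a title link and, optionally, a score
html = """
<table>
  <tr class="row"><td><a class="title" href="https://a.example/">Post A</a></td><td><span class="score">120 points</span></td></tr>
  <tr class="row"><td><a class="title" href="https://b.example/">Job ad (no score)</a></td><td></td></tr>
  <tr class="row"><td><a class="title" href="https://c.example/">Post C</a></td><td><span class="score">348 points</span></td></tr>
</table>
"""

soup = BeautifulSoup(html, "html.parser")

articles = []
for row in soup.find_all(name="tr", class_="row"):
    link_tag = row.find(name="a", class_="title")
    score_tag = row.find(name="span", class_="score")
    # Treat a missing score as 0 instead of letting separate lists drift out of sync
    score = int(score_tag.get_text().split()[0]) if score_tag else 0
    articles.append((link_tag.get_text(), link_tag.get("href"), score))

# max() with a key picks the tuple with the highest score directly, no index lookup needed
best_title, best_link, best_score = max(articles, key=lambda article: article[2])
print(best_title)
print(best_link)
```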

from bs4 import BeautifulSoup

import requests

response = requests.get("https://news.ycombinator.com/news")

yc_web_page = response.text

soup = BeautifulSoup(yc_web_page, "html.parser")

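# Class name at the time these notes were written; Hacker News has changed its markup before, so check the current HTML if this returns an empty list
articles = soup.find_all(name="a", class_="titlelink")

article_texts = []
article_links = []

for article_tag in articles:
    text = article_tag.getText()
    article_texts.append(text)
    link = article_tag.get("href")
    article_links.append(link)

# Assumes every article has a score; an entry without one (e.g. a job posting) would shift the indices below
article_upvotes = [int(score.getText().split()[0]) for score in soup.find_all(name="span", class_="score")]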

largest_number = max(article_upvotes)

largest_index = article_upvotes.index(largest_number)

print(article_texts[largest_index])

print(article_links[largest_index])

Reverse the order in list

my_list[::-1]

reversed_list = [my_list[n] for n in range(len(my_list) - 1, -1, -1)]  # stop must be -1, otherwise index 0 is skipped
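For comparison, a quick sketch of the common ways to reverse a list in Python (`numbers` is just an example list). Slicing and `reversed()` leave the original untouched, while `list.reverse()` changes the list in place.

```python
numbers = [1, 2, 3, 4, 5]

sliced = numbers[::-1]                  # new list, original untouched
via_reversed = list(reversed(numbers))  # reversed() returns an iterator; list() materializes it
via_range = [numbers[n] for n in range(len(numbers) - 1, -1, -1)]  # stop=-1 so index 0 is included

print(sliced, via_reversed, via_range)

numbers.reverse()                       # reverses in place and returns None
print(numbers)
```

`[::-1]` is usually the most readable choice when you want a reversed copy, which is what the movie list below needs.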

Is Web Scraping Legal?

Remember to check what you can and can't do with a website. Before you scrape a website, always go to the root of its URL and check its robots.txt, and follow the ethical codes of conduct, especially when you're trying to commercialize a project.
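Python's standard library can interpret robots.txt rules for you. This sketch parses a hypothetical robots.txt from a string so it runs offline; against a real site you would point `set_url()` at `https://<site>/robots.txt` and call `read()` instead of `parse()`. The domain `example.com` and the rules are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents
robots_txt = """
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# can_fetch(user_agent, url) answers "may this agent fetch this URL?"
print(parser.can_fetch("*", "https://example.com/news"))
print(parser.can_fetch("*", "https://example.com/private/page"))
```

robots.txt only tells you what the site owner asks for; it doesn't replace reading the site's terms of use.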

100 Movies that You Must Watch

import requests

from bs4 import BeautifulSoup

URL = "https://web.archive.org/web/20200518073855/https://www.empireonline.com/movies/features/best-movies-2/"

response = requests.get(URL)

website_html = response.text

soup = BeautifulSoup(website_html, "html.parser")

all_movies = soup.find_all(name="h3", class_="title")

movie_titles = [movie.getText() for movie in all_movies]

movies = movie_titles[::-1]

with open("movie.txt", "w") as file:

for movie in movies:

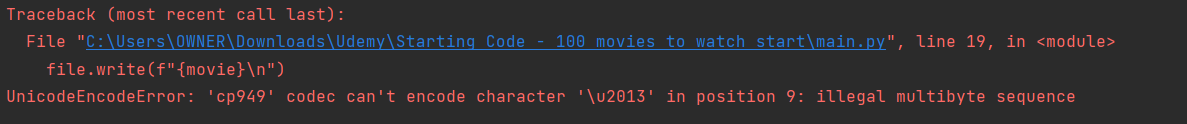

file.write(f"{movie}\n")이게 솔루션 코드인데,,,

아마도 사이트가 막혔나봄....?

사이트가 막히긴 뭐가 막혀....

UnicodeEncodeError 인거보고 위에서 했던 것 처럼 수정했더니 됨

with open("movie.txt", "w", encoding="utf-8") as file:
    for movie in movies:
        file.write(f"{movie}\n")

#output

movie.txt

1) The Godfather

2) The Empire Strikes Back

3) The Dark Knight

4) The Shawshank Redemption

5) Pulp Fiction

6) Goodfellas

7) Raiders Of The Lost Ark

8) Jaws

9) Star Wars

10) The Lord Of The Rings: The Fellowship Of The Ring

11) Back To The Future

12: The Godfather Part II

13) Blade Runner

14) Alien

15) Aliens

16) The Lord Of The Rings: The Return Of The King

17) Fight Club

18) Inception

19) Jurassic Park

20) Die Hard

21) 2001: A Space Odyssey

22) Apocalypse Now

23) The Lord Of The Rings: The Two Towers

24) The Matrix

25) Terminator 2: Judgment Day

26) Heat

27) The Good, The Bad And The Ugly

28) Casablanca

29) The Big Lebowski

30) Seven

31) Taxi Driver

32) The Usual Suspects

33) Schindler's List

34) Guardians Of The Galaxy

35) The Shining

36) The Departed

37) The Thing

38) Mad Max: Fury Road

39) Saving Private Ryan

40) 12 Angry Men

41) Eternal Sunshine Of The Spotless Mind

42) There Will Be Blood

43) One Flew Over The Cuckoo's Nest

44) Gladiator

45) Drive

46) Citizen Kane

47) Interstellar

48) The Silence Of The Lambs

49) Trainspotting

50) Lawrence Of Arabia

51) It's A Wonderful Life

52) Once Upon A Time In The West

53) Psycho

54) Vertigo

55) Pan's Labyrinth

56) Reservoir Dogs

57) Whiplash

58) Inglourious Basterds

59) E.T. – The Extra Terrestrial

60) American Beauty

61) Forrest Gump

62) La La Land

63) Donnie Darko

64) L.A. Confidential

65) Avengers Assemble

66) Return Of The Jedi

67) Memento

68) Ghostbusters

69) Singin' In The Rain

70) The Lion King

71) Hot Fuzz

72) Rear Window

73) Seven Samurai

74) Mulholland Dr.

75) Fargo

76) A Clockwork Orange

77) Toy Story

78) Oldboy

79) Captain America: Civil War

15) Spirited Away

81) The Social Network

82) Some Like It Hot

83) True Romance

84) Rocky

85) Léon

86) Indiana Jones And The Last Crusade

87) Predator

88) The Exorcist

89) Shaun Of The Dead

90) No Country For Old Men

91) The Prestige

92) The Terminator

93) The Princess Bride

94) Lost In Translation

95) Arrival

96) Good Will Hunting

97) Titanic

98) Amelie

99) Raging Bull

100) Stand By Me
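A few titles in the list contain non-ASCII characters (the "é" in Léon, the en dash in E.T.), which is what tripped up the default encoding. This sketch writes two of them to a file with an explicit UTF-8 encoding, reads them back, and then shows the same kind of UnicodeEncodeError using plain ASCII as an example of a codec that lacks "é". The file name `movie_demo.txt` is hypothetical.

```python
import os
import tempfile

# Two titles from the list above that contain non-ASCII characters
movies = ["85) Léon", "59) E.T. – The Extra Terrestrial"]

# Hypothetical output path in the system temp directory
path = os.path.join(tempfile.gettempdir(), "movie_demo.txt")

# An explicit encoding makes the write independent of the platform's default encoding
with open(path, "w", encoding="utf-8") as file:
    for movie in movies:
        file.write(f"{movie}\n")

# Read it back with the same encoding to confirm nothing was lost
with open(path, encoding="utf-8") as file:
    lines = file.read().splitlines()
print(lines == movies)

# A codec without "é" (plain ASCII here) raises a UnicodeEncodeError
try:
    "Léon".encode("ascii")
except UnicodeEncodeError as error:
    bad_char = error.object[error.start:error.end]
    print("ascii cannot encode:", bad_char)
```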