[Contents]

1) Image classification 1

2) Annotation data efficient learning

Image classification 1

- 우리는 오감 중 특히 시각에 의존하여 사물을 바라보고 이해하며 살아가고 있다

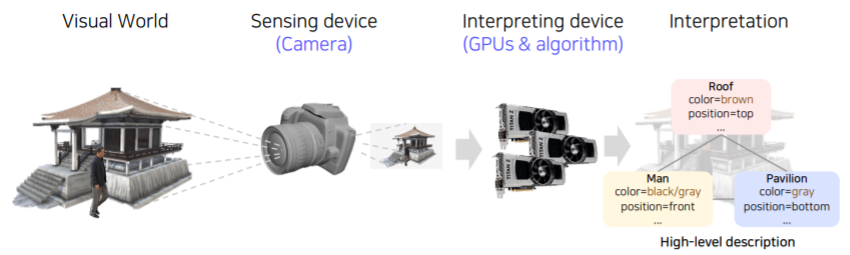

- 동일한 프로세스를 컴퓨터에 적용한 컴퓨터 비전이다

- 여기에서는 컴퓨터 비전 (CV)의 첫 시간으로 CV에 대해 짧게 소개하고, CV에서 가장 기본적인 task, image clasiification을 소개한다

- Image Classification은 사진이 주어졌을 때 특정 카테고리로 분류하는 task이다

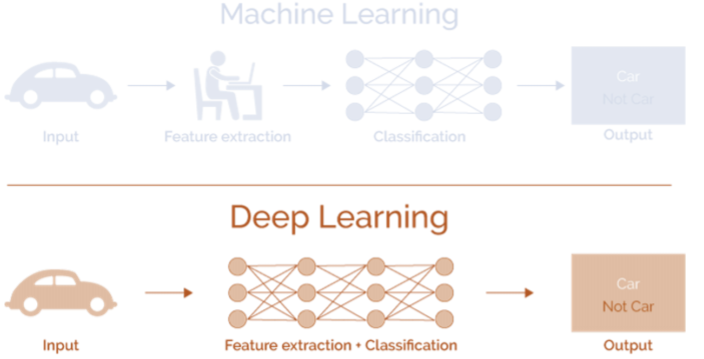

- 이번에는 먼저 기존의 머신러닝과 구분되는 딥러닝을 사용한 Image classification의 특징에 대해서 배운다

- 다음으로 대표적인 CNN 모델인 AlexNet을 배우고 이에 대한 실습을 진행한다

- 끝으로 가장 유명한 classification 모델 중 하나인 VGGNet에 대해 배운다

Further Reading

Course overview

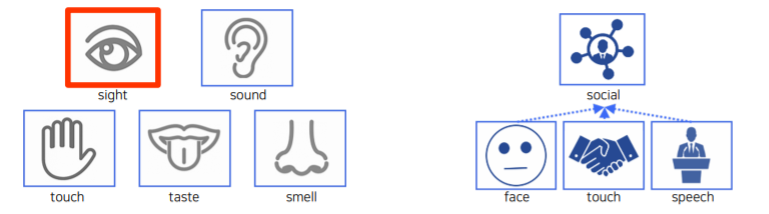

Why is visual perception important?

-

Artificial Intelligence (AI)?

- 사람의 지능을 computer system으로 구현 하는 것

- 지능 : 인지능력, 지각능력, 기억과 이해 및 사고 능력까지 포함

-

Perception to system (지각능력)

- 시스템에서 입력과 출력 데이터에 관련된 것

- As humans grow, we learn about the world by interacting with it

- We gather informative signals from multi-modal association

- Developing machine perception is still an open research area

-

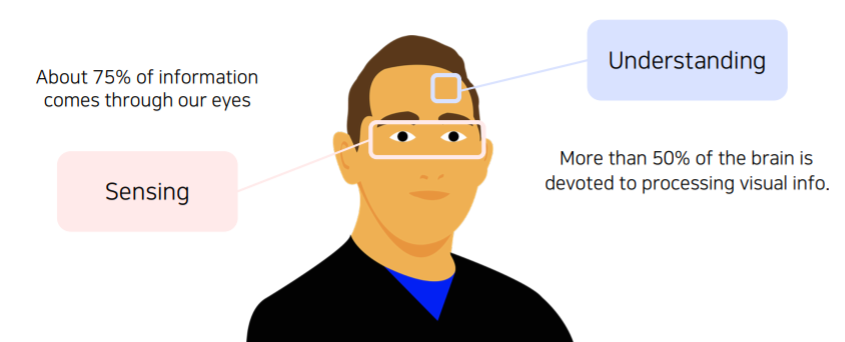

시각지각 능력이 가장 중요하다

- 왜냐하면 다른 오감에 비해서 압도적으로 시각에 많이 의지해서 살아가기 때문이다

- 왜냐하면 다른 오감에 비해서 압도적으로 시각에 많이 의지해서 살아가기 때문이다

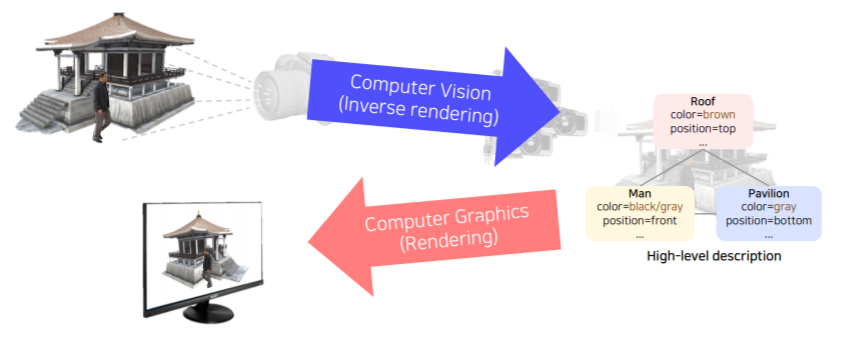

What is computer vision?

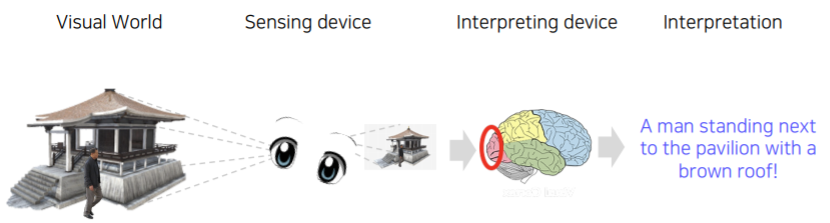

- 사람이 정면을 이해하는 과정

- 컴퓨터가 정면을 이해하는 과정

- rendering : 정보를 통해서 2d 이미지를 drawing 하는 테크닉

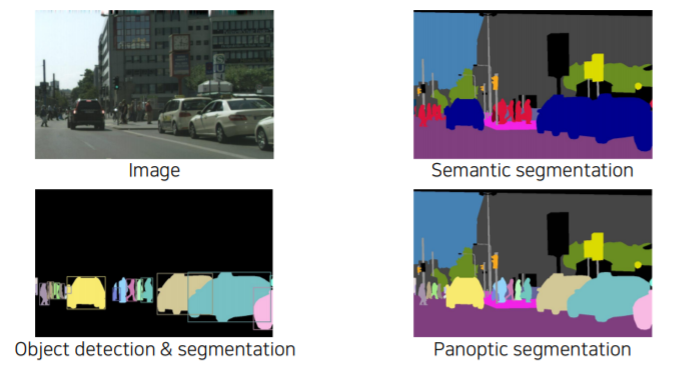

- Visual perception & intelligence

- Input : visual data (image or video)

- Class of visual perception

- Color perception

- Motion perception

- 3D perception

- Semantic-level perception

- Social perception (emotion perception)

- Visuomotor perception, etc.

- Also, computer vision includes understanding human visual perception capability!

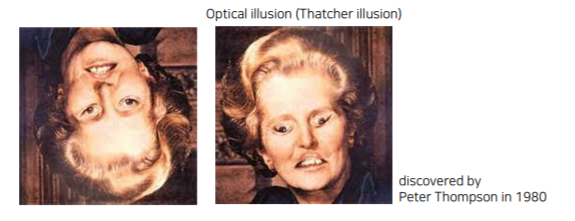

- Our visual perception is imperfect

- to develop machine visual perception,

- we need to understand the good and bad of our visual perception

- we need to come up with how to compensate for the imperfection

- to develop machine visual perception,

- How to implement?

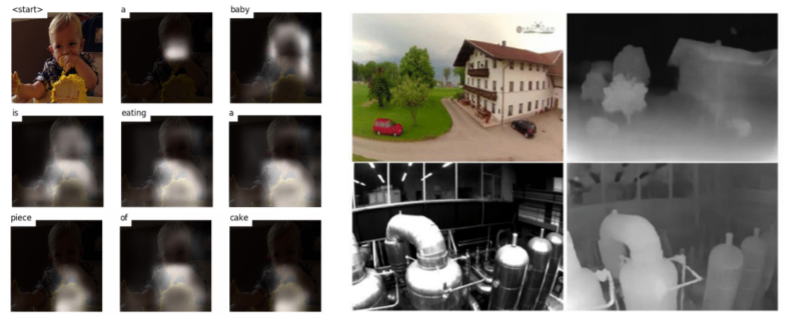

what you will learn in this course

- Fundamental image tasks

- deep learning based tasks

- deep learning based tasks

- Data augmentation and knowledge distillation

- Multi-modal learning (vision + {text, sound, 3D, etc.})

- Conditional generative model

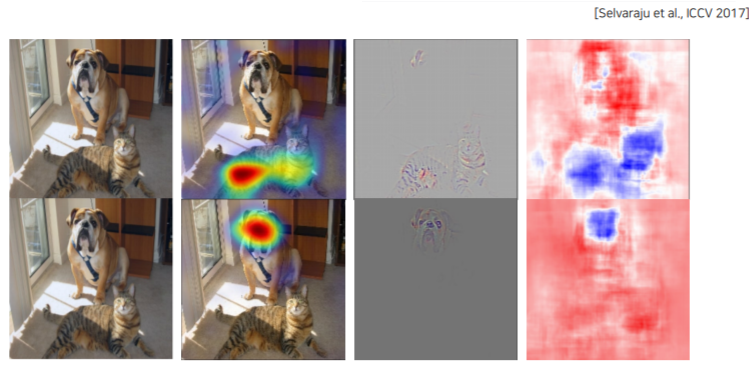

- Neural network analysis by visualization

- 딥러닝을 디버깅하고 이해하기 위한 visualization tool들도 배운다

- 딥러닝을 디버깅하고 이해하기 위한 visualization tool들도 배운다

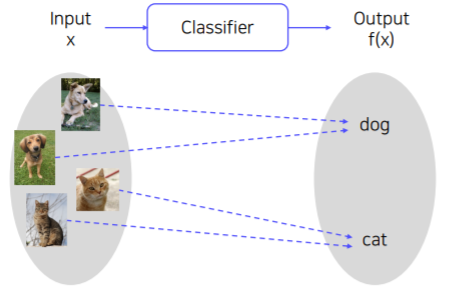

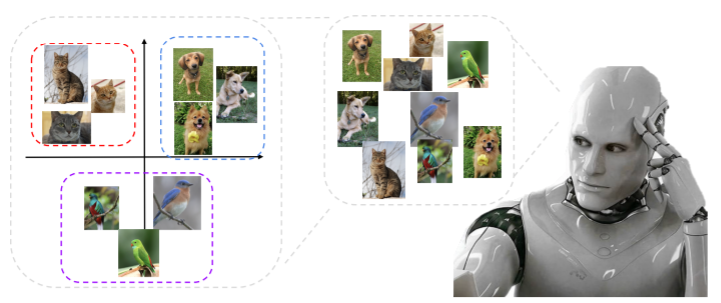

What is Classification

- Classifier: A mapping f(.) that maps an image to a category level

- 어떤 물체가 영상속에 들어있는지 분류

- 입력 : 영상

- 출력 : 영상이 해당하는 카테코리(클래스)

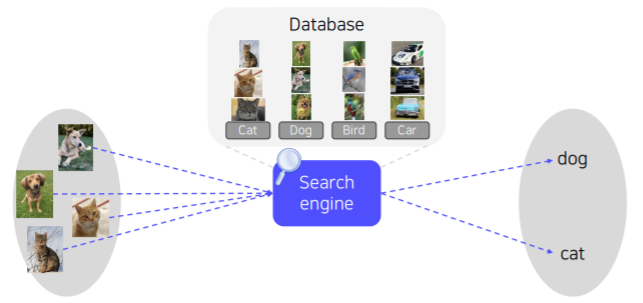

An ideal approach for image recognition

- What is we could memorize all the data in the world?

- All the classification problems could be solved by k Nearest Neighbors (k-NN)

- All the classification problems could be solved by k Nearest Neighbors (k-NN)

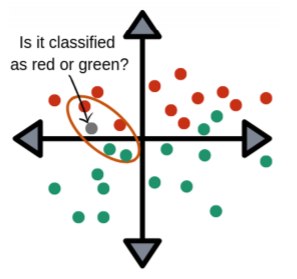

- k Nearest Neighbors (k-NN)

- classifies a query data point according to reference points closest to the query

- classifies a query data point according to reference points closest to the query

- All the classification problems could be solved by k-NN!

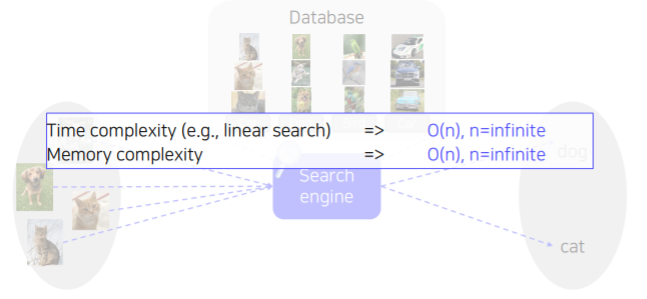

- Is it realizable?

- 정말 많은 데이터가 있다면

- 검색하는데 걸리는 시간이 데이터 수에 비례해서 증가하게 되고

- 메모리 용량도 데이터 수에 비례해서 증가한다

- 따라서 아무리 컴퓨터가 빨라져도 이세상에 모든 데이터를 담기에는 부족하다

- 정말 많은 데이터가 있다면

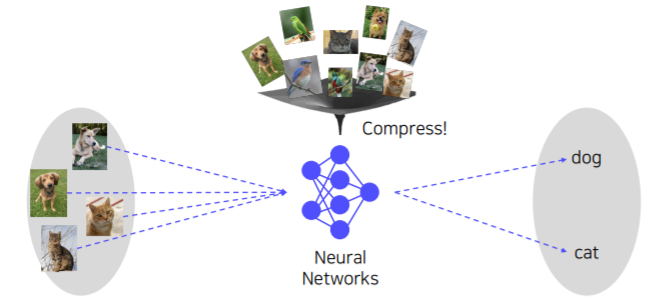

Convolutional Neural Networks (CNN)

- Compress all the data we have into the neural network

- 데이터가 너무 많으면 시스템 복잡도가 올라가서 실현할 수 없다

- 방대한 데이터를 제한된 복잡도의 시스템(neural networks)에 압축해서 녹여놓는거라고 볼수 있다

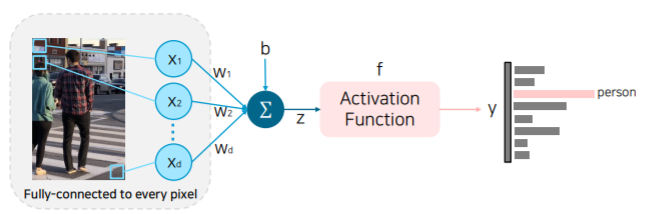

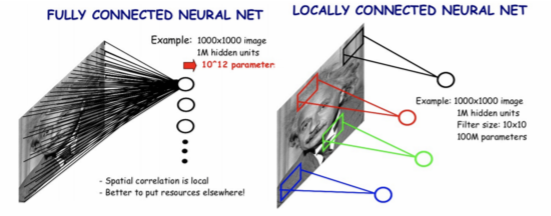

- let's look at a simple model(singal layer neural network aka. fully connected layer), perceptron, that takes every pixel of an image as input

- But, is this model suitable for solving the image classification problem?

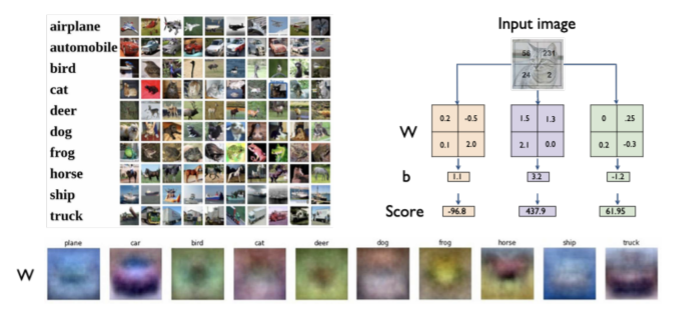

- Visualization of single fully connected layer networks

- 문제 1) layer가 한층이라 단순해서 평균 이미지들 같으거 이외에는 표현이 안된다

- 문제 1) layer가 한층이라 단순해서 평균 이미지들 같으거 이외에는 표현이 안된다

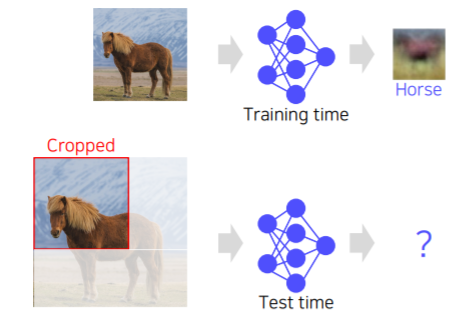

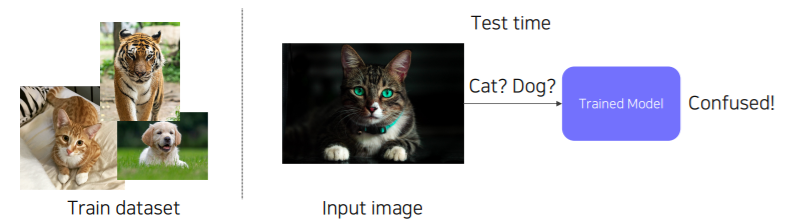

- A problem of single fully conneceted layer networks

- 문제 2) 적용시점(test time)때의 문제

- 학습시에는 영상이 가득찬 하나에 물체에 대해 학습해서 그 클래스에 대한 대표적인 패턴을 학습했다고 볼 수 있다

- 적용시점에 조금이라도 다른 패턴을 적용할 경우 학습동안에 본적이 없기 때문에 조금이라도 템플릿에 위치나 스케일에 맞지 않으면 굉장히 다른 결과나 해석을 내놓는다

- 위 문제점들을 해결하기 위해서 cnn 이 등장한다

- 하나의 특징을 뽑기위해서 모든 픽셀을 고려하는 fully connected layer 대신에 하나의 특징을 영상의 공간적인 특성을 고려해서 국부적인 영역들만 connection을 고려한 layer(locally connected layer) 사용

- 필요한 parameter 가 획기적으로 줄어든다

- 더 적은 파라미터로도 효과적인 특징을 추출할 수 있고 또한 overfitting도 방지한다

- Convolution neural networks are locally connected neural networks

- local feature learning

- parameter sharing

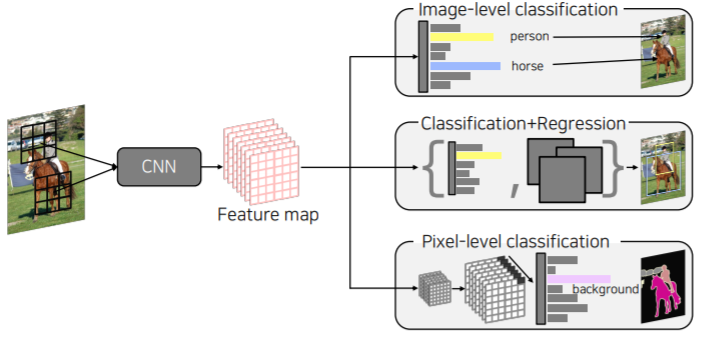

- CNN is used as a backbone of many CV tasks

- in this lecture, we will focus on image-level classification

CNN architectures for image classification 1

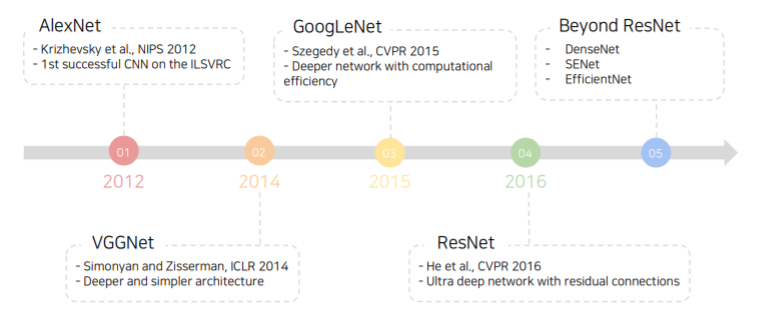

Brief History

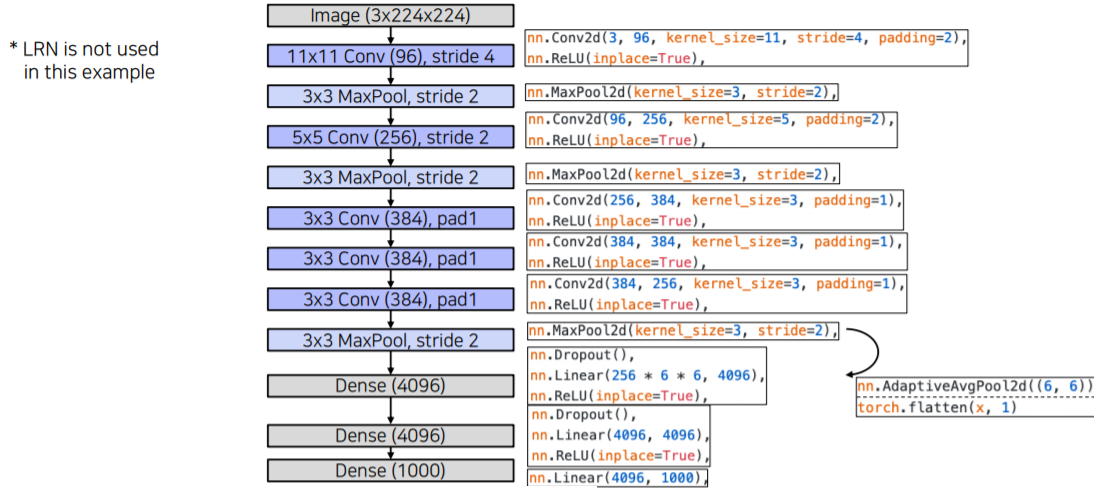

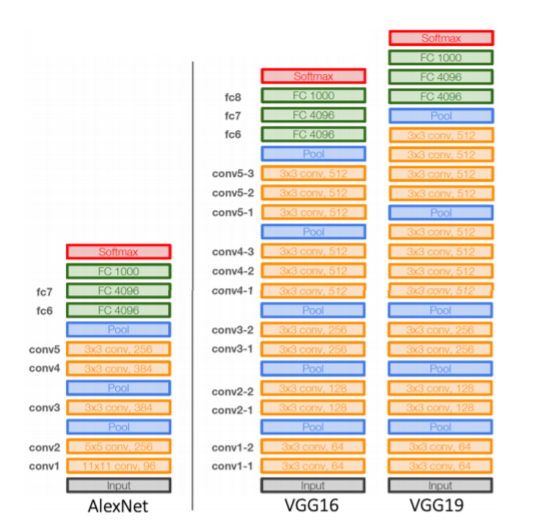

AlexNet

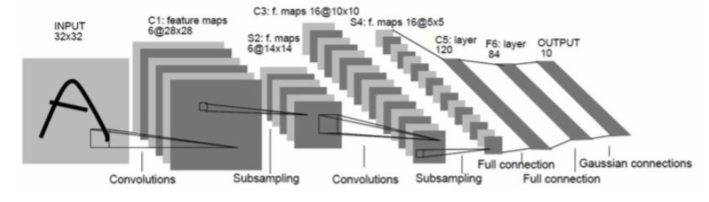

- LeNet-5

- A very simple CNN architecture introduced by Yann LeCun in 1998

- overal architecture: Conv - Pool - Conv - Pool - FC - FC

- Convolution: 5 x 5 filters with stride 1

- Pooling: 2 x 2 max pooling with stride 2

- A very simple CNN architecture introduced by Yann LeCun in 1998

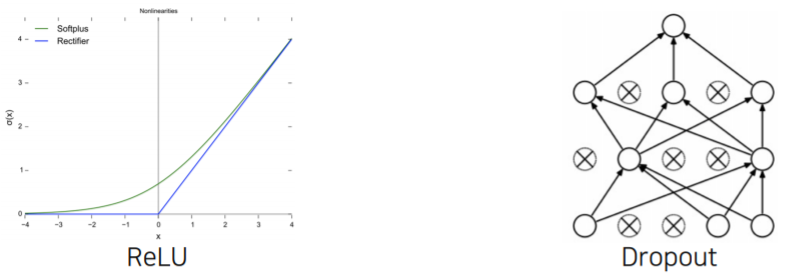

- Similar with LeNet-5, But

- Bigger (7 hidden layers, 605k neurons, 60 million parameters)

- Trained with ImageNet (large amount of data, 1.2 millions)

- Using better activation function (ReLU) and regularization technique (dropout)

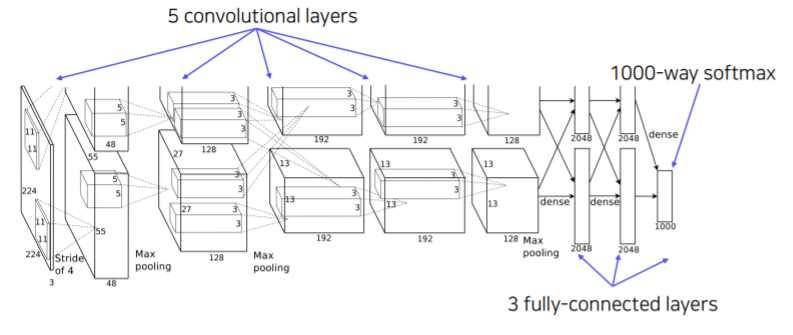

- Overal architecture

- Conv - Pool - LRN - Conv - Pool - LRN - Conv - Conv - Conv - Pool - FC - FC - FC

- network path 가 2개로 나뉘어져 있다

- 이때 당시에는 GPU 메모리가 모자라서 network를 절반씩 나누어서 각각 2개의 GPU에 올렸다

- 위 구조에서 살펴보면 중간에 몇번 activation map이 서로 cross 한다

- 이것이 GPU 2개간의 모든 부분에서 cross communication이 일어나면 느리기 때문에 일부에서만 교환하도록 설계한 것이다

- max pooing 된 2d activation map이 linear layer로 가기 위해선 3d 구조에서 2d 구조로 벡터화를 해야한다

- 벡터화 옵션:

- average pooling

- flatten

- 위에 그림에서 눈여겨 볼것은 fc layer 의 dimension 이 여기서는 4096이다

- gpu용량 부족 문제로 그 당시에 학습할 때는 절반씩 각각 gpu에 올려서 학습했기 때문에 two stream 그림에서는 2048로 적혀있었던 것이다

- Conv - Pool - LRN - Conv - Pool - LRN - Conv - Conv - Conv - Pool - FC - FC - FC

AlexNet(deprecated components)

- local response normalization(LRN)

- activation map 에서 명암을 normalization하는 역할을 한다

- Lateral inhibition : the capacity of an excited neuron to subdue its neighbors

- LRN normalizes around the local neighborhood of the excited neuron

- Excited neuron becomes even more sensitive as compared to its neighbors

- 지금은 LRN 보다는 Batch normalization을 활용한다

- 11 x 11 convolution filter

- The filter size is increased, as the input size of the image has increased

- input size of LeNet: 28x28

- input size of AlexNet: 227x227

- Larger size filters are used to cover a wider range of the input image

- 최신 네트워크 구조에서는 큰 filter size 를 사용하지 않는다

- The filter size is increased, as the input size of the image has increased

AlexNet

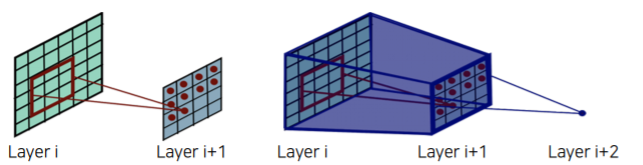

- __Receptive field in CNN

- The region in the input space that a particular CNN feature is looking at

- suppose K x K convolution filters with stride 1, and a pooling layer of size P x P,

- then a value of each unit in the pooling layer depends on an input patch of size : (P + K - 1) x (P + K - 1)

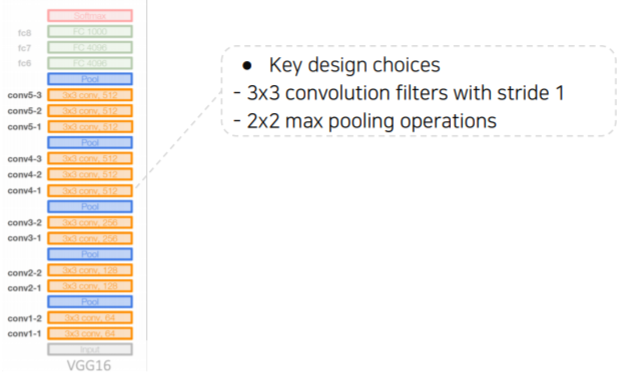

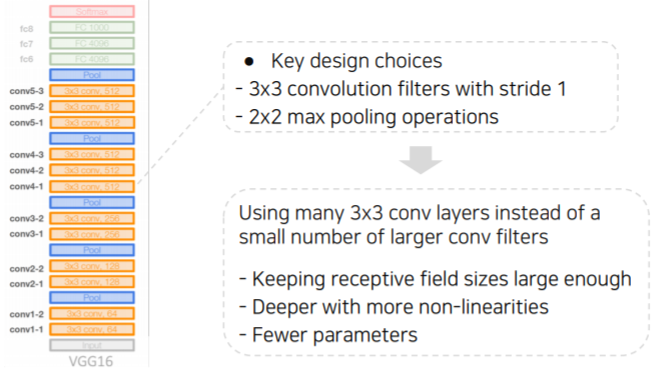

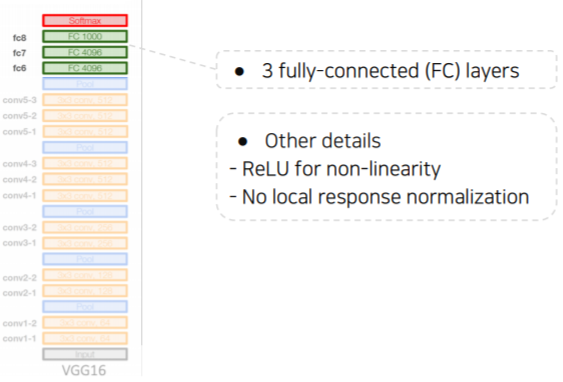

VGGNet

- Deeper architecture

- 16 and 19 layers

- simpler architecture

- no local response normalization

- only 3x3 conv filters blocks, 2x2 max pooling

- better performance

- significant performance improvement over AlexNet(second in ILSVRC14)

- better generalization

- final features generalizing well to other taks even without fine-tuning

- summarize

- deeper architecture

- simpler architecture

- better performance

- better generalization

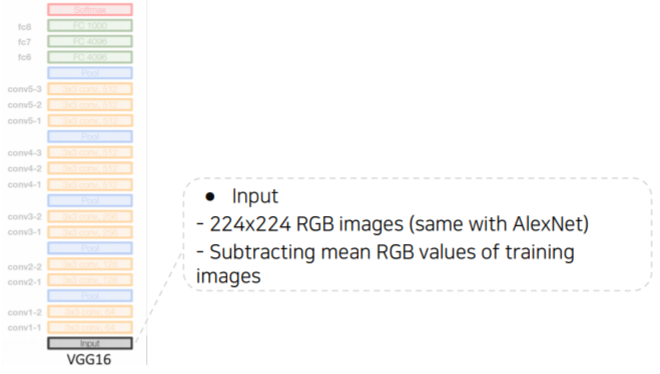

overall architecture

- 작은 convolution layer들도 stack을 많이 쌓으면 큰 receptive field size를 얻을 수 있다

- 큰 receptive field size를 input 단에서 얻었다는 의미는

- 이미지 영역에 많은 부분들을 고려해서 결론을 냈다는 의미와 동일하다

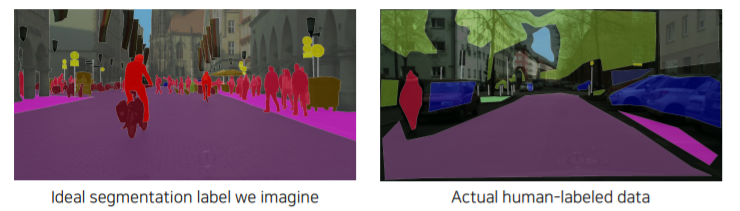

Annotation data(label data) efficient learning

- 컴퓨터 비전 문제를 푸는 딥러닝 모델은 supervised learning으로 학습하는 것이 유리하다는 사실은 알려져 있다

- 하지만, 딥러닝 모델을 학습할 수 있을 만큼 고품질의 데이터를 많이 확보하는 것은 보통 불가능하거나 그 비용이 매우 크다

- 여기에서는 Data Augmentation, Knowledge Distillation, Transfer learning, Learning without Forgetting, Semi-supervised learning 및 Self-training 등 주어진 데이터셋의 분포를 실제 데이터 분포와 최대한 유사하게 만들거나, 이미 학습된 정보를 이용해 새 데이터셋에 대해 보다 잘 학습하거나,

label이 없는 데이터셋까지 이용해 학습하는 등 주어진 데이터셋을 최대한 효율적으로 이용해 딥러닝 모델을 학습하는 방법을 소개한다

Further Reading

Data Augmentation

Learning representation of dataset

- learning representation from a dataset

- neural networks learn compact features(information) of a dataset

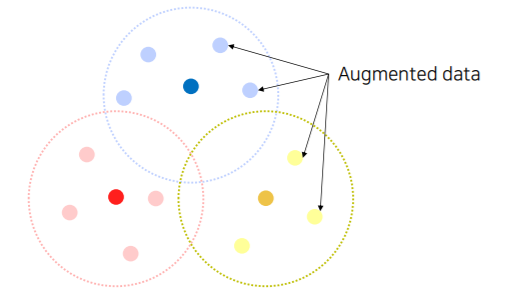

- Dataset is (almost) always biased

- Images taken by camera(training data) real data

- The training dataset is sparse samples of real data

- The training dataset contains only fractional part of real data

- The training dataset contains only fractional part of real data

- The training dataset and real data always have a gap

- Suppose a training dataset has only bright images

- during test time, if a dark image is fed as input, the trained model may be confused

- problem : datasets do not fully represent real data distribution

- Augmenting data to fill more space and to close the gap

- examples of augmentations to make a dataset denser

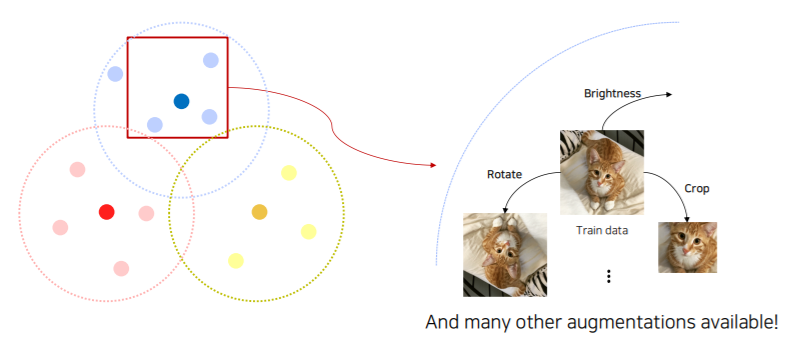

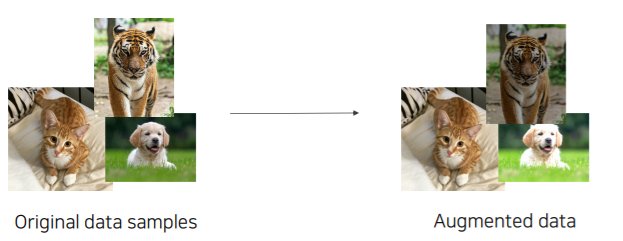

Data Augmentation

- image data augmentation

- applying various image transformations to the dataset

- crop, shear, brightness, perspective, rotate, etc.

- OpenCV and Numpy have various methods useful for data augmentation

- Goal: make training dataset's distribution similar with real data distribution

- applying various image transformations to the dataset

Various data augmentation methods

- brightness adjustment

- various brightness in dataset

- brightness adjustment (brightening) using numpy

- various brightness in dataset

def brightness_augmentation(img):

# numpy array img has RGB value(0~255) for each pixel

img[:, :,0] = img[:, :,0] + 100 # add 100 to R value

img[:, :,0] = img[:, :,1] + 100 # add 100 to G value

img[:, :,0] = img[:, :,2] + 100 # add 100 to B value

img[:, :,0][img[:, :,0] > 255] = 255 # clip R values over 255

img[:, :,1][img[:, :,1] > 255] = 255 # clip G values over 255

img[:, :,2][img[:, :,2] > 255] = 255 # clip B values over 255

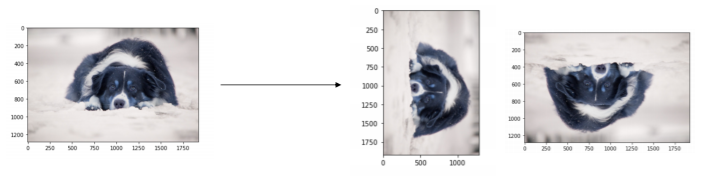

return img- Rotate, flip

- Diverse angles in dataset

- Rotating (flipping) image using OpenCV

- Diverse angles in dataset

img_rotated = cv2.rotate(image, cv2.ROTATE_90_CLOCKWISE)

img_flipped = cv2.rotate(image, cv2.ROTATE_180)

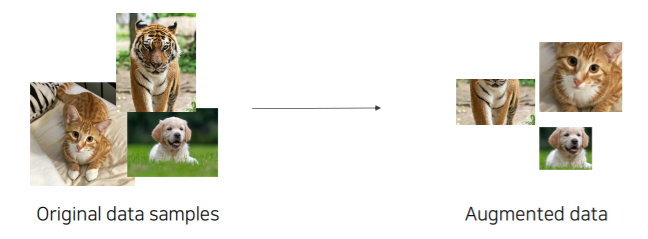

- crop

- learning with only part of images

- cropping image using numpy

- learning with only part of images

y_start = 500 # y pixel to start cropping

crop_y_size = 400 # cropped image's height

x_start = 300 # x pixel to start cropping

crop_x_size = 800 # cropped image's width

img_cropped = image[y_start : y_start + crop_y_size, x_start : x_start + crop_x_size, :]

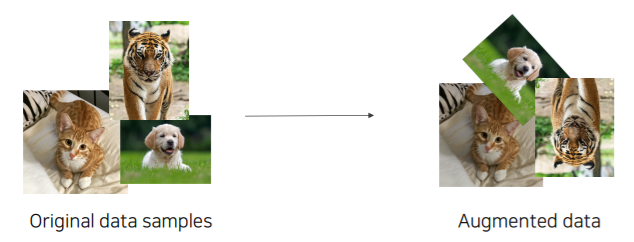

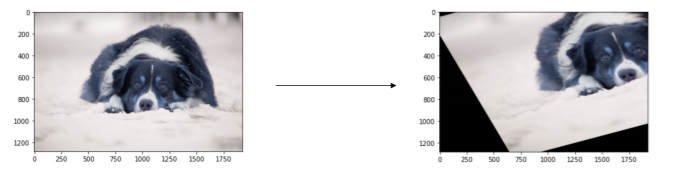

- Affine transformation

- preserve 'line', 'length ratio', and 'parallelism' in image

- for example, transforming a rectangle into a parallelogram

- see the shear transform example below

- see the shear transform example below

- Affine transformation (shear) using OpenCV

rows, cols, ch = image.shape

pts1 = np.float32([[50,50], [200,50], [50,200]])

pts2 = np.float32([[10,100], [200,50], [100,250]])

M = cv2.getAffineTransform(pts1, pts2)

shear_img = cv2.warpAffine(image, M, (cols, rows))

Model augmentation techniques

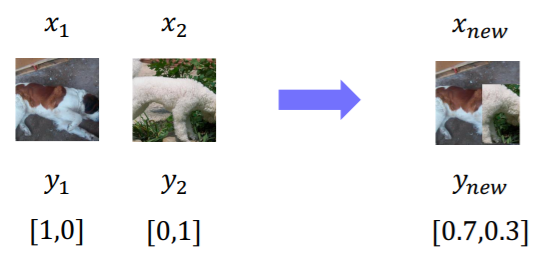

- CutMix

- 'Cut' and 'Mix training example to help model better localize objects

- 'Cut' and 'Mix training example to help model better localize objects

- Generating new training image

- mixing both images and labels

- 의미있는 수준의 성능 향상과 동시에 물체의 위치를 더 정교하게 catch 할수 있게끔 학습한다

- 의미있는 수준의 성능 향상과 동시에 물체의 위치를 더 정교하게 catch 할수 있게끔 학습한다

- mixing both images and labels

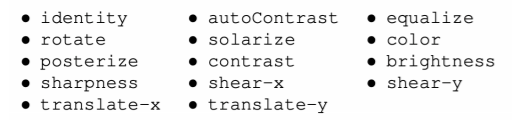

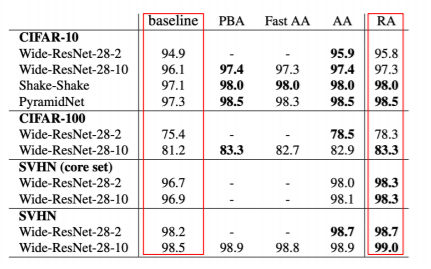

- RandAugment

- 여러가지 영상 처리 기능들을 조화해서 전혀 다른 데이터를 생성한다

- many augmentation methods exist. Hard to find best augmentations to apply

- automatically finding the best sequence of augmentations to apply

- Random sample, apply, and evaluate augmentations

- 랜덤하게 augmentation기법들을 sampling해서 수행하고 성능이 잘 나오는 것을 활용

- 랜덤하게 augmentation기법들을 sampling해서 수행하고 성능이 잘 나오는 것을 활용

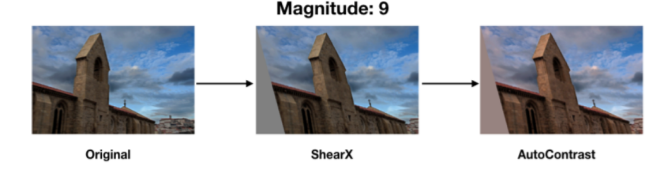

- example of augmented images in RandAug

- Augmentation policy has two parameters

- which augmentation to apply

- magnitude of augmentation to apply (how much to augment)

- parameters used in the above example

- which augmentations to apply : 'shearX' & 'AutoContrast'

- Magnitude of augmentations to apply : 9

- Augmentation policy has two parameters

- Randomly testing augmentations policies

- finding the best augmentations policy

- sample a policy : Policy = {N augmentations to apply} by random sampling

- train with a sampled policy, and evaluate the accuracy

- finding the best augmentations policy

- Augmentation helps model learning

- Higher test accuracy than training w/o augmentation

- Higher test accuracy than training w/o augmentation

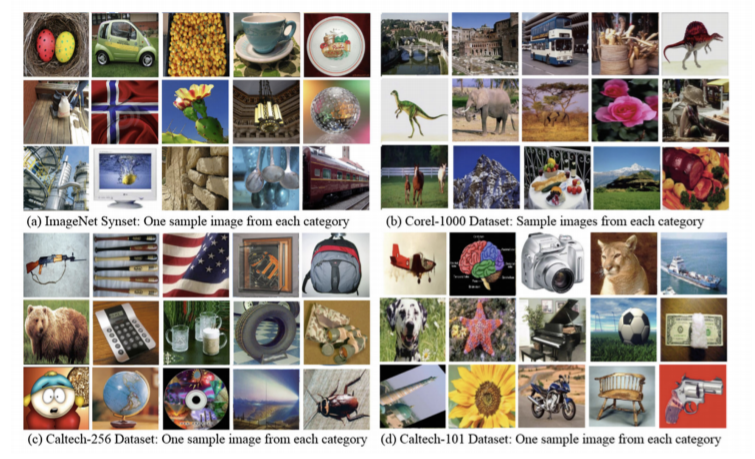

Leveraging pre-trained information

Transfer learning

- The high-quality dataset is expensive and hard to obtain

- supervised learning requires a very large-scale dataset for training

- annotating data is very expensive, and its quality is not ensured

- transfer learning : A practical training method with a small dataset

- Benefits when using transfer learning

- by transfer learning, we can easily adapt to a new task by leveraging pre-trained knowledge(feature)!

- Motivational observation : similar datasets share common information

- 한 데이터셋에서 배운 지식을 다른 데이터셋에서 활용하는 기술

- E.g., 4 distinct datasets with similar images

- knowledge learned from one dataset can be applied to other datasets

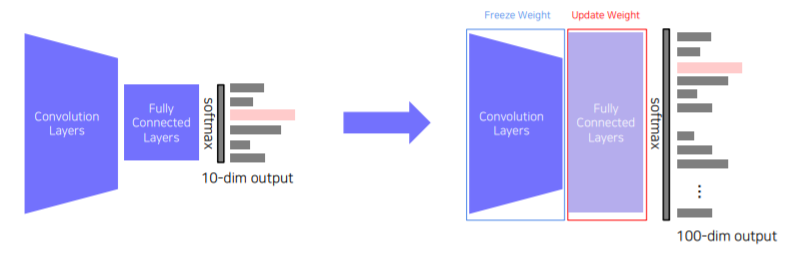

- Approach 1: Transfer knowledge from a pre-trained task to a new task

- Given a model pre-trained on a 10-class dataset,

- Chop off the final layer of the pre-trained model, add and only re-train a new FC layer

- Extracted features preserve all the knowledge from pre-training

- 새로운 task에 대응하도록 학습이 된게된다

- 이렇게 학습된 새로운 fc layer는 적은 데이터로 부터도 잘 작동되게 학습이 된다

- 몸통 전체를 학습시키지 않아도 되기때문에 학습해야할 파라미터수가 적어졌기 때문이다

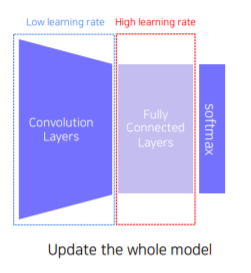

- Approach 2: Fine-tuning the whole model

- Given a model pre-trained on a dataset

- Replace the final layer of the pre-trained model to a new one, and re-train the whole model

- set learning rates differently

- 아래 그림에서 convolution layer 부분은 learning rate을 낮게 잡고 학습을 같이한다

- learning rate를 낮게 잡은 부분은 업데이트가 느리게 되는 반면 새로운 fc layer는 높은 learning rate를 통해서 새로운 target task에 빨리 적응하도록 학습이 되게한다

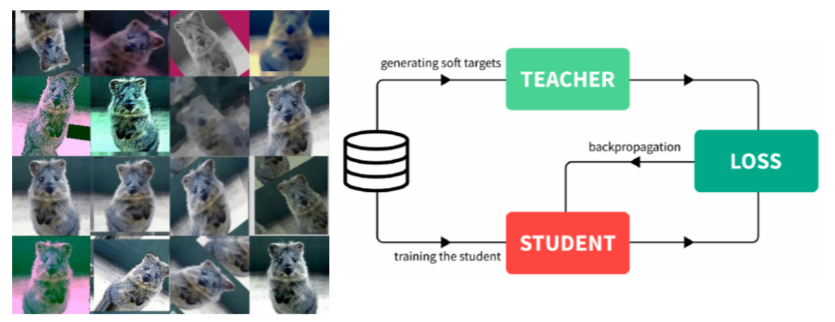

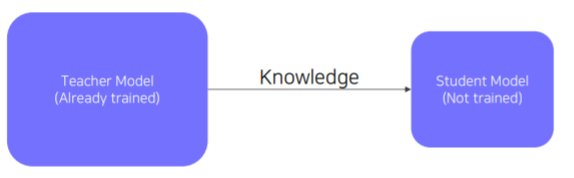

Knowledge distillation

- passing what model learned to 'another' smaller model(Teacher-student learning)

- 'Distillate' knowledge of a trained model into another smaller model

- used for model compression (Mimicking what a larger model knows)

- Also, used for pseudo-labeling (generating pseudo-labels for an unlabeled dataset)

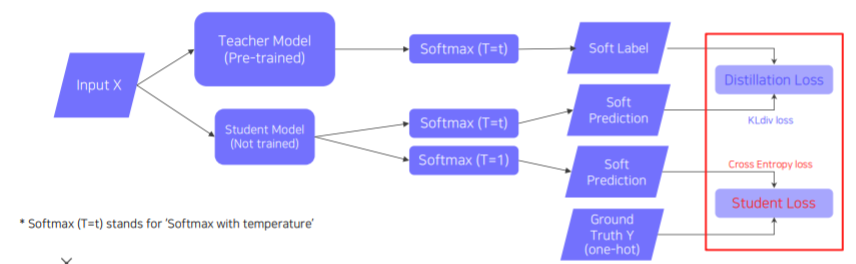

- Teacher-student network structure

- the student network learns what the teacher network knows

- the student network mimics outputs of the teacher network

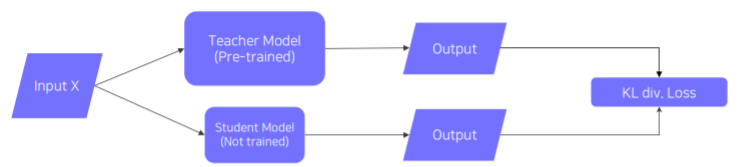

- 두 모델의 output의 차이를 KL div. Loss 를 통해 측정해서 backpropagation을 통해 student model 만 학습한다

- Unsupervised learning, since training can be done only with unlabeled data

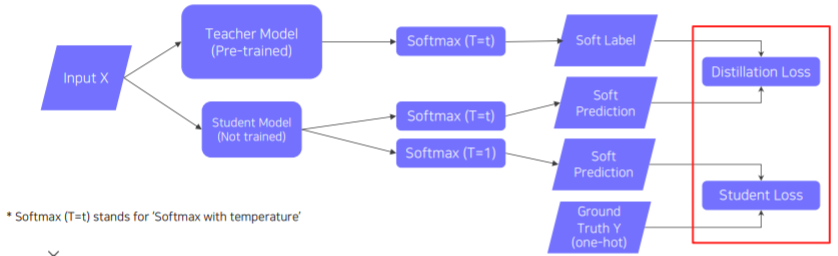

- knowledge distillation

- when labeled data is available, can leverage labeled data for training(student loss)

- Distillation loss to 'predict similar outputs with the teacher model'

- Semantic information is not considered in distillation

- teacher에서 나온 output의 각각 dimension이 pre-train 할때 사용되었던 어떤 이전 task class 들과 연관이 되어있다

- student loss로 학습하는 데이터는 pre-training dataset과 전혀 다른 task 일 수 있다(label 정보가 겹치지 않는)

- 그래서 중복되는 정보가 없더라도 soft label에서 발생하는 output dimension들 각각의 그 의미가 중요하다기 보다는 전체 output이 추상적인 지식의 형태를 표현하고 있어서 그 행동 자체를 따라하도록 만드는 것이 가장 중요하지 그 내부 각각 element의 semantic information이 중요한건 아니다 라는 의미

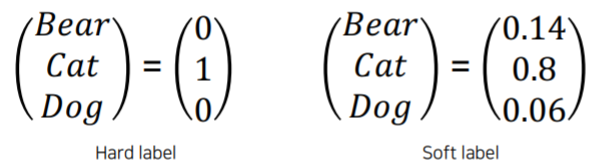

- Hard label vs Soft label

- Hard label(ground truth, one-hot vector)

- typically obtained from the dataset

- indicates whether a class is 'true answer' or not

- Soft label

- typical output of the model(=inference result)

- regard it as 'knowledge'. Useful to observe how the model thinks

- Hard label(ground truth, one-hot vector)

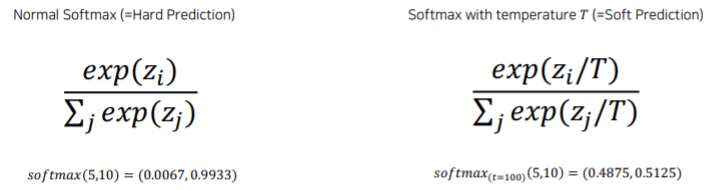

- softmax with temperature()

- Softmax with temperature: controls difference in output between small & large input values

- A large smoothens large input value differences

- 극단적으로 0과 1의 값만 있는것 보단 0과 1 사이의 중간 값도 가지면서 입력에 따라 민감하게 변하는 신호를 따라하게 만듬으로서 student가 teacher를 더 따라하게 도와주는 역할

- Useful to synchronize the student and teacher models' outputs

- intuition about distillation loss and student loss

- Distillation loss

- KLdiv(soft label, soft prediction)

- Loss = difference between the teacher and student network's inference

- Learn what teacher network knows by mimicking

- Student Loss

- CrossEntropy(hard label, soft prediction)

- Loss = difference between the student network's inference and tru label

- Learn the 'right answer'

- Distillation loss

- Loss functions in knowledge distillation

- Weighted sum of 'distillation loss' and 'student loss'

- Weighted sum of 'distillation loss' and 'student loss'

Leveraging unlabeled dataset for training

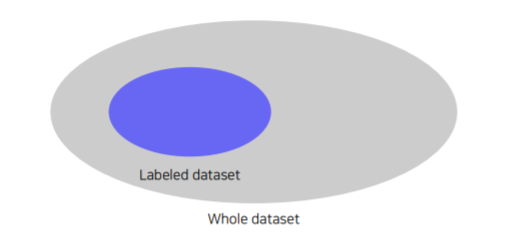

Semi-supervised learning

- There are lots of unlabeled data

- typically, only a small portion of data is labeled

- is there any way to learn from unlabeled data?

- Semi-supervised learning : Unsupervised (no label) + Fully supervised (fully labeled)

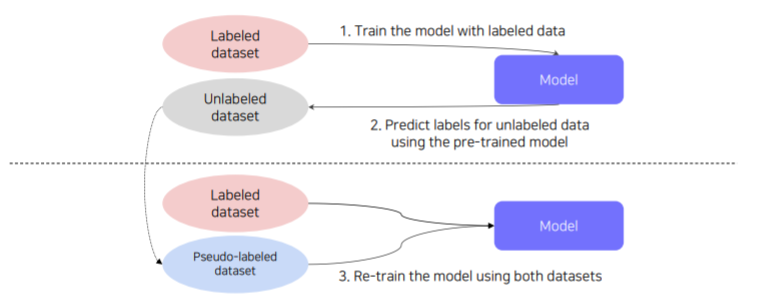

- Semi-supervised learning with pseudo labeling

- pseudo-labeling unlabeled data using a pre-trained model, then use for training

- pseudo-labeling unlabeled data using a pre-trained model, then use for training

Self-training

- Recap : Data efficient learning methods so far

- Data Augmentation

- Augment a dataset to make the dataset closer to real data distribution

- knowledge distillation

- train a student network to imitate a teacher network

- transfer the teacher network's knowledge to the student network

- semi-supervised learning (Pseudo label-based method)

- Pseudo-label an unlabeled dataset using a pre-trained model, then use for training

- Leveraging an unlabeled dataset for training

- Data Augmentation

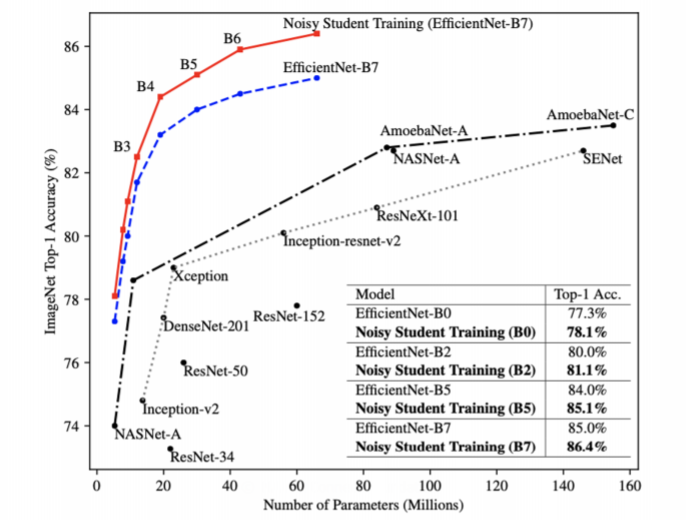

- self-training

- Augmentation + teacher-student networks + semi-supervised learning

- SOTA ImageNet Classification, 2019

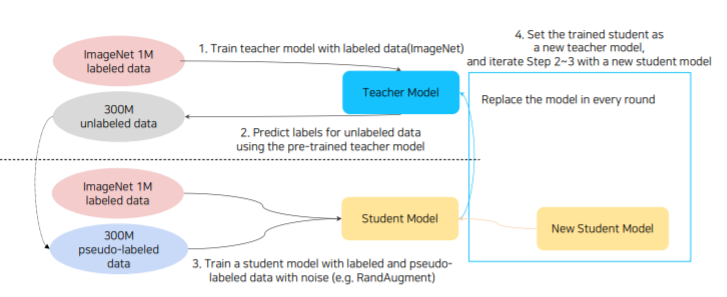

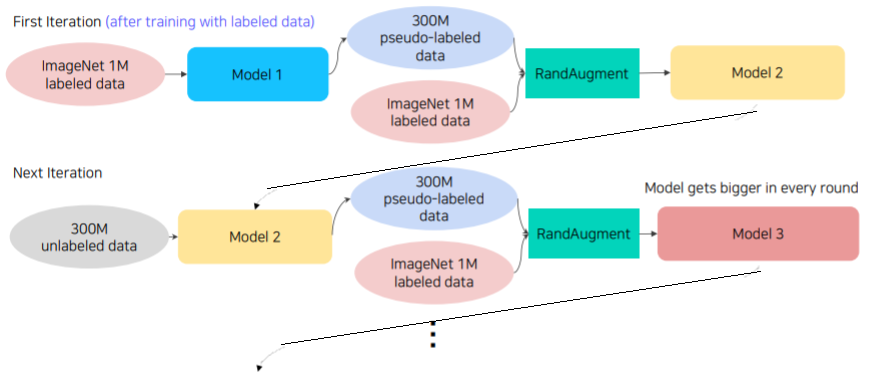

- self-training with noisy student

- iteratively training noisy student network using teacher network

- brief overview of self-training

- Train initial teacher model with labeled data

- pseudo-label unlabeled data using teacher model

- train student model with both labeled and unlabeled data with augmentation

- set the student model as a new teacher, and set new model(bigger) as a new student

- repeat 2~4 with new teacher/student models

Reference

- Data augmentation

- Yun et al.,CutMix:Regularization Strategy to Train Strong Classifiers with Localizable Features,ICCV2019

- Cubuk et al.,Randaugment:Practical automated data augmentation with a reduced search space,CVPRW 2020

- Leveraging pre-trained information

- Ahmed et al.,Fusion of local and global features for effective image extraction, Applied Intelligence 2017

- Oquab et al.,Learning and Transferring Mid- Level Image Representations using Convolutional Neural Networks, CVPR 2015

- Hinton et al.,Distilling the Knowledge in a Neural Network, NIPS deep learning workshop 2015

- Li & Hoiem, Learning without Forgetting, TPAMI 2018

- Leveraging unlabeled dataset for training

- Lee,Pseudo-label: The simple and Efficient Semi-Supervised Learning Method for Deep Neural Networks, ICML Workshop 2013

- Xie et al., Self-training with Noisy Student improves ImageNet classification, CVPR 2020