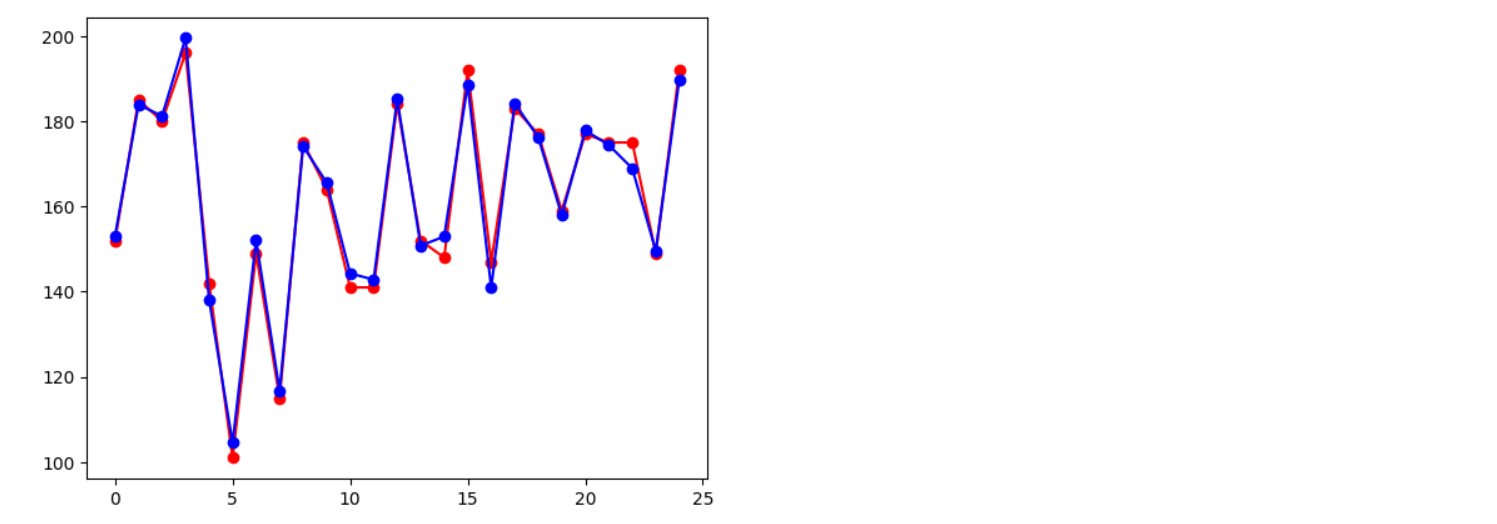

Score prediction

```python
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt

tf.__version__
# 2.15.0
```

```python
from google.colab import drive

drive.mount('/content/drive')
# Mounted at /content/drive
```

Setting Data

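```python
xy = np.loadtxt('/content/drive/MyDrive/Lecture/양재AI_NLP_basic_to_LLMs/1주차/1_DL_basic/1_TF_examples/exercises/data-01-test-score.csv', delimiter=',', dtype=np.float32)

# All columns except the last are input features; the last column is the target score
x_train = xy[:, 0:-1]
y_train = xy[:, [-1]]  # indexing with the list [-1] keeps y_train 2-D

print(x_train.shape, y_train.shape)
# (25, 3) (25, 1)
```

Make a dataset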
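Before batching, it helps to look closely at the slicing used to split `xy`. The NumPy sketch below uses a made-up 4×4 array (arbitrary values, not the test-score CSV) to show that `xy[:, [-1]]` keeps the label as a column vector of shape `(n, 1)`, whereas `xy[:, -1]` would return a flat `(n,)` vector. The `pred` array is a hypothetical all-zero prediction added only to show the broadcasting consequence.

```python
import numpy as np

# Hypothetical stand-in for the CSV: 4 rows = 3 feature columns + 1 label column
xy = np.arange(16, dtype=np.float32).reshape(4, 4)

x = xy[:, 0:-1]      # every column except the last -> shape (4, 3)
y_col = xy[:, [-1]]  # list index keeps the axis    -> shape (4, 1)
y_flat = xy[:, -1]   # scalar index drops the axis  -> shape (4,)
print(x.shape, y_col.shape, y_flat.shape)  # (4, 3) (4, 1) (4,)

# Why it matters: predictions from XW + b have shape (n, 1)
pred = np.zeros((4, 1), dtype=np.float32)
print((pred - y_col).shape)   # (4, 1): element-wise residuals
print((pred - y_flat).shape)  # (4, 4): silent broadcasting, wrong residuals
```

With a flat label vector, `hypothesis - labels` inside an MSE would broadcast to an `(n, n)` matrix and average the wrong quantity without raising an error, which is why the 2-D `(25, 1)` shape of `y_train` is worth preserving.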

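```python
# Batch size equals the number of samples, so each epoch is one full-batch step
dataset = tf.data.Dataset.from_tensor_slices((x_train, y_train)).batch(len(x_train))
```

```python
# Randomly initialized parameters: 3 input features -> 1 output
W = tf.Variable(tf.random.normal([3, 1]), name='weight')
b = tf.Variable(tf.random.normal([1]), name='bias')

print(W, b)
# <tf.Variable 'weight:0' shape=(3, 1) dtype=float32, numpy=
# array([[-1.8743812],
#        [-1.1022243],
#        [ 1.3199434]], dtype=float32)> <tf.Variable 'bias:0' shape=(1,) dtype=float32, numpy=array([-1.426634], dtype=float32)>
```

```python
def linear_regression(features):
    # Hypothesis H(X) = XW + b: (batch, 3) @ (3, 1) + (1,) -> (batch, 1)
    hypothesis = tf.matmul(features, W) + b
    return hypothesis
```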
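To make the shapes in `linear_regression` concrete, here is a NumPy sketch of the same hypothesis H(X) = XW + b, with the `@` operator standing in for `tf.matmul`. The inputs, weights, and bias are invented for illustration; the point is that the bias of shape `(1,)` is broadcast onto every row of the `(n, 1)` product.

```python
import numpy as np

# Hypothetical inputs: 2 samples x 3 features, and made-up parameters
X = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0]], dtype=np.float32)
W = np.array([[0.5], [-1.0], [2.0]], dtype=np.float32)  # shape (3, 1)
b = np.array([0.1], dtype=np.float32)                    # shape (1,)


def linear_regression_np(features):
    # (n, 3) @ (3, 1) -> (n, 1); adding b of shape (1,) broadcasts to every row
    return features @ W + b


H = linear_regression_np(X)
print(H.shape)  # (2, 1)
# Row 0: 0.5*1 - 1.0*2 + 2.0*3 + 0.1 = 4.6
# Row 1: 0.5*4 - 1.0*5 + 2.0*6 + 0.1 = 9.1
print(H)
```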

```python
# Predictions with the untrained (random) parameters
print(linear_regression(x_train))
# tf.Tensor(
# [[-127.43866 ]
#  [-149.98508 ]
#  [-149.75407 ]
#  [-157.39088 ]
#  [-118.60724 ]
#  [ -78.874275]
#  [-110.687904]
#  [ -72.05051 ]
#  [-132.77861 ]
#  [-110.503426]
#  [-111.55877 ]
#  [-106.60208 ]
#  [-160.34053 ]
#  [-141.32483 ]
#  [-110.140114]
#  [-147.12747 ]
#  [-140.53905 ]
#  [-129.69696 ]
#  [-154.26169 ]
#  [-137.47736 ]
#  [-131.12228 ]
#  [-135.53085 ]
#  [-126.9178  ]
#  [-141.64825 ]
#  [-158.47946 ]], shape=(25, 1), dtype=float32)
```

```python
def loss_fn(hypothesis, labels):
    # Mean squared error (MSE) between predictions and labels
    cost = tf.reduce_mean(tf.square(hypothesis - labels))
    return cost
```
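`loss_fn` computes the mean squared error, cost = (1/n) Σ (H(xᵢ) − yᵢ)², and `tf.GradientTape` differentiates it with respect to `W` and `b`. The NumPy sketch below first works one MSE by hand, then checks the analytic gradients ∂cost/∂W = (2/n) Xᵀ(XW + b − Y) and ∂cost/∂b = (2/n) Σ (XW + b − Y) against central finite differences. The random problem (`X`, `Y`, `W`, `b`) is hypothetical and unrelated to the score data.

```python
import numpy as np


def mse(hypothesis, labels):
    # Same computation as loss_fn: mean of the squared residuals
    return np.mean(np.square(hypothesis - labels))


# Worked example: residuals 1, -2, 3 -> (1 + 4 + 9) / 3 = 14 / 3
pred = np.array([[3.0], [0.0], [5.0]])
y = np.array([[2.0], [2.0], [2.0]])
print(mse(pred, y))

# Hypothetical random problem for checking the gradients of cost(W, b)
rng = np.random.default_rng(0)
X = rng.normal(size=(5, 3))
Y = rng.normal(size=(5, 1))
W = rng.normal(size=(3, 1))
b = rng.normal(size=(1,))
n = X.shape[0]


def cost(W, b):
    return mse(X @ W + b, Y)


# Analytic gradients for H = XW + b
residual = X @ W + b - Y               # (5, 1)
dW = (2.0 / n) * (X.T @ residual)      # (3, 1)
db = (2.0 / n) * residual.sum(axis=0)  # (1,)

# Central finite differences as an independent check
eps = 1e-6
num_dW = np.zeros_like(W)
for i in range(W.shape[0]):
    step = np.zeros_like(W)
    step[i, 0] = eps
    num_dW[i, 0] = (cost(W + step, b) - cost(W - step, b)) / (2 * eps)
num_db = (cost(W, b + eps) - cost(W, b - eps)) / (2 * eps)

print(np.allclose(dW, num_dW), np.allclose(db, num_db))  # True True
```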

```python
# Plain gradient descent with learning rate 0.00001 (TF1-style optimizer via tf.compat.v1);
# the TF2-native counterpart is tf.keras.optimizers.SGD(learning_rate=0.00001)
optimizer = tf.compat.v1.train.GradientDescentOptimizer(learning_rate=0.00001)
```

```python
epochs = 5000

for step in range(epochs):
    for features, labels in dataset:
        # Record the forward pass so the tape can compute gradients
        with tf.GradientTape() as tape:
            loss_value = loss_fn(linear_regression(features), labels)
        # Gradients of the loss with respect to W and b
        grads = tape.gradient(loss_value, [W, b])
        # Update step: W <- W - lr * dW, b <- b - lr * db
        optimizer.apply_gradients(grads_and_vars=zip(grads, [W, b]))

        if step % 100 == 0:
            print("Iter: {}, Loss: {:.4f}".format(step, loss_fn(linear_regression(features), labels)))
# Iter: 0, Loss: 32221.2480
# Iter: 100, Loss: 60.0295
# Iter: 200, Loss: 56.0295
# Iter: 300, Loss: 52.3404
# Iter: 400, Loss: 48.9370
# Iter: 500, Loss: 45.7966
# Iter: 600, Loss: 42.8979
# Iter: 700, Loss: 40.2216
# Iter: 800, Loss: 37.7502
# Iter: 900, Loss: 35.4670
# Iter: 1000, Loss: 33.3574
# Iter: 1100, Loss: 31.4075
# Iter: 1200, Loss: 29.6047
# Iter: 1300, Loss: 27.9373
# Iter: 1400, Loss: 26.3948
# Iter: 1500, Loss: 24.9673
# Iter: 1600, Loss: 23.6459
# Iter: 1700, Loss: 22.4222
# Iter: 1800, Loss: 21.2887
# Iter: 1900, Loss: 20.2383
# Iter: 2000, Loss: 19.2647
# Iter: 2100, Loss: 18.3620
# Iter: 2200, Loss: 17.5246
# Iter: 2300, Loss: 16.7476
# Iter: 2400, Loss: 16.0264
# Iter: 2500, Loss: 15.3567
# Iter: 2600, Loss: 14.7347
# Iter: 2700, Loss: 14.1566
# Iter: 2800, Loss: 13.6193
# Iter: 2900, Loss: 13.1197
# Iter: 3000, Loss: 12.6549
# Iter: 3100, Loss: 12.2224
# Iter: 3200, Loss: 11.8197
# Iter: 3300, Loss: 11.4448
# Iter: 3400, Loss: 11.0954
# Iter: 3500, Loss: 10.7698
# Iter: 3600, Loss: 10.4662
# Iter: 3700, Loss: 10.1831
# Iter: 3800, Loss: 9.9189
# Iter: 3900, Loss: 9.6723
# Iter: 4000, Loss: 9.4421
# Iter: 4100, Loss: 9.2270
# Iter: 4200, Loss: 9.0260
# Iter: 4300, Loss: 8.8382
# Iter: 4400, Loss: 8.6624
# Iter: 4500, Loss: 8.4981
# Iter: 4600, Loss: 8.3442
# Iter: 4700, Loss: 8.2002
# Iter: 4800, Loss: 8.0654
# Iter: 4900, Loss: 7.9390
```

```python
# Learned parameters after training
print(W, b)
# <tf.Variable 'weight:0' shape=(3, 1) dtype=float32, numpy=
# array([[0.07136216],
#        [0.57992035],
#        [1.3724346 ]], dtype=float32)> <tf.Variable 'bias:0' shape=(1,) dtype=float32, numpy=array([-1.4270566], dtype=float32)>
```
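The `GradientTape` loop above amounts to full-batch gradient descent on the MSE. As a cross-check, here is a NumPy sketch of the same update using the hand-derived gradients ∂cost/∂W = (2/n) Xᵀ(XW + b − Y) and ∂cost/∂b = (2/n) Σ (XW + b − Y), with the same learning rate and number of epochs. The data are synthetic (hypothetical "scores" drawn uniformly from 60 to 100, with made-up true weights and noise), not the CSV used above, so the printed losses will not match the log; the least-squares solution is computed only as a lower bound on the achievable loss.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical score-like data: 25 samples, 3 features in [60, 100]
X = rng.uniform(60, 100, size=(25, 3))
true_W = np.array([[0.3], [0.3], [0.4]])
Y = X @ true_W + rng.normal(0, 2, size=(25, 1))  # noisy target

W = rng.normal(size=(3, 1))
b = rng.normal(size=(1,))
lr = 0.00001  # same learning rate as the TensorFlow run
n = X.shape[0]


def cost(W, b):
    # MSE of the linear hypothesis XW + b
    return np.mean(np.square(X @ W + b - Y))


initial_loss = cost(W, b)
losses = []
for step in range(5000):
    residual = X @ W + b - Y
    dW = (2.0 / n) * (X.T @ residual)
    db = (2.0 / n) * residual.sum(axis=0)
    W -= lr * dW  # gradient descent update, as in apply_gradients
    b -= lr * db
    losses.append(cost(W, b))
    if step % 1000 == 0:
        print("Iter: {}, Loss: {:.4f}".format(step, losses[-1]))

# Reference: exact least-squares fit (features plus a bias column)
A = np.hstack([X, np.ones((n, 1))])
coef, *_ = np.linalg.lstsq(A, Y, rcond=None)
best_loss = cost(coef[:3], coef[3:].ravel())
print("GD loss: {:.4f}, least-squares loss: {:.4f}".format(losses[-1], best_loss))
```

On a 60 to 100 feature scale the curvature of the MSE is large (it grows with the squared feature values), which is why such a small learning rate keeps the updates stable; a much larger one can make gradient descent diverge.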