import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

tf.__version__

ZOO classification

Data list

- animal name: (deleted)
- hair: Boolean
- feathers: Boolean
- eggs: Boolean
- milk: Boolean
- airborne: Boolean
- aquatic: Boolean
- predator: Boolean
- toothed: Boolean
- backbone: Boolean
- breathes: Boolean
- venomous: Boolean
- fins: Boolean
- legs: Numeric (set of values: {0, 2, 4, 5, 6, 8})
- tail: Boolean
- domestic: Boolean
- catsize: Boolean
- type: Numeric (integer values in range [0, 6])
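To make the column layout concrete, the sketch below parses one comma-separated row into the 16 feature values and the class label. The row is invented for illustration and is not taken from `data-04-zoo.csv`; `parse_row`, `FEATURES`, and `ALLOWED_LEGS` are hypothetical names introduced here.

```python
# Column names in file order; the animal-name column is absent (deleted above).
FEATURES = [
    "hair", "feathers", "eggs", "milk", "airborne", "aquatic",
    "predator", "toothed", "backbone", "breathes", "venomous",
    "fins", "legs", "tail", "domestic", "catsize",
]
ALLOWED_LEGS = {0, 2, 4, 5, 6, 8}


def parse_row(line):
    """Split one CSV line into (feature dict, class label). Hypothetical helper."""
    values = [int(v) for v in line.strip().split(",")]
    if len(values) != len(FEATURES) + 1:
        raise ValueError("expected 16 features plus 1 label")
    features = dict(zip(FEATURES, values[:-1]))
    label = values[-1]
    if features["legs"] not in ALLOWED_LEGS:
        raise ValueError("unexpected leg count")
    if not 0 <= label <= 6:
        raise ValueError("label out of range")
    return features, label


# Illustrative row only (invented for this sketch).
example_line = "1,0,0,1,0,0,1,1,1,1,0,0,4,0,0,1,0"
features, label = parse_row(example_line)
print(features["legs"], label)
```

The last value is the class label, which is why the notebook slices the final column off as `y`.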

xy = np.loadtxt('./data-04-zoo.csv', delimiter=',', dtype=np.int32)

x_train = xy[0:-10, 0:-1]

y_train = xy[0:-10, [-1]]

x_train = tf.cast(x_train, tf.float32)

x_test = xy[-10:, 0:-1]

y_test = xy[-10:, [-1]]

x_test = tf.cast(x_test, tf.float32)
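The slices above hold out the last 10 rows as a test set and treat the last column as the label. Below is a minimal plain-Python sketch of the same indexing on a toy table. The `toy` data and the hold-out size of 2 are invented for illustration.

```python
# Toy table: 5 rows, 3 feature columns followed by 1 label column.
toy = [
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [1, 1, 0, 2],
    [0, 0, 1, 0],
    [1, 0, 0, 1],
]
holdout = 2  # the notebook uses 10

train_rows = toy[:-holdout]   # everything except the last `holdout` rows
test_rows = toy[-holdout:]    # only the last `holdout` rows

# Mirror xy[..., 0:-1] (features) and xy[..., [-1]] (label kept as a column).
x_train_toy = [row[:-1] for row in train_rows]
y_train_toy = [[row[-1]] for row in train_rows]
x_test_toy = [row[:-1] for row in test_rows]
y_test_toy = [[row[-1]] for row in test_rows]

print(len(x_train_toy), len(x_test_toy))  # 3 2
print(y_test_toy)                          # [[0], [1]]
```

Indexing the label with `[-1]` rather than `-1` keeps it as a column of shape (N, 1), which matters for the one-hot step that follows.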

nb_classes = 7

y_train = tf.one_hot(list(y_train), nb_classes)

print(y_train.shape)

y_train = tf.reshape(y_train, [-1, nb_classes])

print(y_train.shape)

y_test = tf.one_hot(list(y_test), nb_classes)

y_test = tf.reshape(y_test, [-1, nb_classes])

print(x_train.shape, y_train.shape)

print(x_test.shape, y_test.shape)

print(x_train.dtype, y_train.dtype)

print(x_test.dtype, y_test.dtype)
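Because the labels are stored as a column of shape (N, 1), one-hot encoding adds a class axis and produces shape (N, 1, 7); the reshape then drops the middle axis to give (N, 7). A plain-Python sketch of the two steps, with a hypothetical `one_hot` helper and invented labels:

```python
def one_hot(index, depth):
    """Return a list of length `depth` with 1.0 at `index` and 0.0 elsewhere."""
    return [1.0 if i == index else 0.0 for i in range(depth)]


nb_classes = 7
labels = [[0], [3], [6]]  # shape (3, 1), like y_train before encoding

# Encoding each inner [label] separately yields shape (3, 1, 7).
nested = [[one_hot(v, nb_classes) for v in row] for row in labels]

# Dropping the middle axis gives shape (3, 7), which is what the reshape does.
flat = [vec for row in nested for vec in row]

print(len(nested), len(nested[0]), len(nested[0][0]))  # 3 1 7
print(flat[1])  # [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
```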

dataset = tf.data.Dataset.from_tensor_slices((x_train, y_train)).batch(len(x_train))

print(dataset)

W = tf.Variable(tf.random.normal([16, nb_classes]), name='weight')

b = tf.Variable(tf.random.normal([nb_classes]), name='bias')

print(W.shape, b.shape)

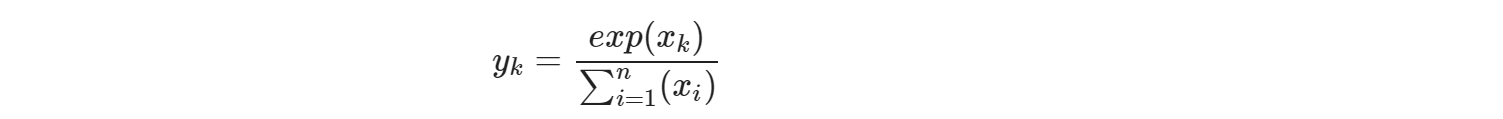

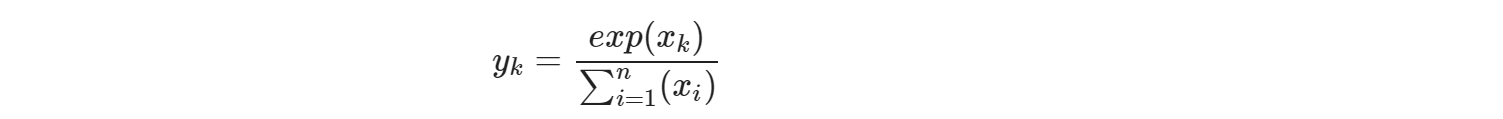

Setting up the hypothesis

- Build a hypothesis model that classifies each animal from the given data.

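def logistic_regression(features):
    # Despite the name, this is softmax (multinomial logistic) regression:
    # it returns one probability per class for every input row.
    return tf.nn.softmax(tf.matmul(features, W) + b)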

print(logistic_regression(x_train))

tf.Tensor(

[[1.54855186e-02 7.99202553e-06 9.48315382e-01 3.19353494e-05

6.01915781e-06 3.61315422e-02 2.17864908e-05]

[1.20813353e-02 4.79611208e-06 6.46479368e-01 4.67823047e-05

1.73373901e-05 3.41036886e-01 3.33462231e-04]

[2.72753477e-01 1.00762499e-02 6.39225602e-01 2.60519776e-02

3.53506766e-03 4.83063981e-02 5.12331790e-05]

[1.54855186e-02 7.99202553e-06 9.48315382e-01 3.19353494e-05

6.01915781e-06 3.61315422e-02 2.17864908e-05]

[1.25222569e-02 6.83396877e-07 9.52855229e-01 3.41892592e-05

2.99665521e-06 3.45740691e-02 1.05495747e-05]

[1.20813353e-02 4.79611208e-06 6.46479368e-01 4.67823047e-05

1.73373901e-05 3.41036886e-01 3.33462231e-04]

[1.01755001e-02 6.43019007e-07 7.74020493e-01 1.38418909e-05

6.50950024e-05 2.15282053e-01 4.42386430e-04]

[1.94308490e-01 8.31186119e-03 4.55227077e-01 9.24686715e-03

6.73220456e-02 2.63700157e-01 1.88350258e-03]

[2.72753477e-01 1.00762499e-02 6.39225602e-01 2.60519776e-02

3.53506766e-03 4.83063981e-02 5.12331790e-05]

[3.33081298e-02 9.89459386e-06 5.98992527e-01 5.69971016e-05

2.12986371e-04 3.61478835e-01 5.94058260e-03]

[1.25222569e-02 6.83396877e-07 9.52855229e-01 3.41892592e-05

2.99665521e-06 3.45740691e-02 1.05495747e-05]

[6.71478033e-01 3.50212707e-04 1.42793342e-01 4.93934005e-03

6.32465109e-02 1.16810188e-01 3.82330909e-04]

[2.72753477e-01 1.00762499e-02 6.39225602e-01 2.60519776e-02

3.53506766e-03 4.83063981e-02 5.12331790e-05]

[1.86856166e-01 6.63085505e-02 4.57052082e-01 1.81471869e-01

6.88572824e-02 3.85847203e-02 8.69275478e-04]

[2.62635620e-03 9.18472040e-07 9.96097803e-01 2.11757269e-05

1.03496604e-04 7.46545556e-04 4.03709622e-04]

[3.19297920e-04 5.45438583e-09 9.99344170e-01 3.88916959e-07

8.74191210e-06 1.50824810e-04 1.76662812e-04]

[7.97334671e-01 3.59139143e-04 1.69615075e-01 1.17718624e-02

2.80936132e-03 1.81011241e-02 8.79739855e-06]

[1.20813353e-02 4.79611208e-06 6.46479368e-01 4.67823047e-05

1.73373901e-05 3.41036886e-01 3.33462231e-04]

[1.06128462e-01 7.88718835e-03 8.46688867e-01 6.08664937e-03

2.23514438e-03 3.09655387e-02 8.11501741e-06]

[4.05125618e-02 8.34866706e-03 9.00641978e-01 1.45283889e-03

5.07052355e-05 4.89108488e-02 8.24468734e-05]

[6.71478033e-01 3.50212707e-04 1.42793342e-01 4.93934005e-03

6.32465109e-02 1.16810188e-01 3.82330909e-04]

[6.79859877e-01 9.20027494e-04 2.31572881e-01 5.17861079e-03

3.18241492e-03 7.86594078e-02 6.26775436e-04]

[1.20813353e-02 4.79611208e-06 6.46479368e-01 4.67823047e-05

1.73373901e-05 3.41036886e-01 3.33462231e-04]

[5.14066815e-01 3.38834454e-03 2.61786401e-01 6.46338100e-03

1.76501218e-02 1.96569234e-01 7.56465524e-05]

[1.88470667e-03 2.78773285e-07 8.73236656e-01 8.24304698e-06

5.96339814e-04 8.16552117e-02 4.26185504e-02]

[2.58668046e-02 5.68598239e-07 9.59779263e-01 5.83678702e-05

6.04288653e-05 1.41748274e-02 5.96901082e-05]

[3.90920714e-02 9.55409291e-07 9.54000115e-01 6.82620012e-05

5.91692042e-05 6.51830994e-03 2.61150679e-04]

[2.15519130e-01 8.87922128e-04 5.19710407e-02 3.80939501e-03

3.37017671e-04 7.26808131e-01 6.67334185e-04]

[1.20813353e-02 4.79611208e-06 6.46479368e-01 4.67823047e-05

1.73373901e-05 3.41036886e-01 3.33462231e-04]

[7.92960972e-02 1.33364199e-04 8.36497962e-01 3.80271988e-04

1.97763991e-04 8.34458172e-02 4.88196238e-05]

[1.28315315e-02 1.93553274e-06 7.52629638e-01 2.32369257e-05

4.99470765e-03 1.81824401e-01 4.76945676e-02]

[1.01755001e-02 6.43019007e-07 7.74020493e-01 1.38418909e-05

6.50950024e-05 2.15282053e-01 4.42386430e-04]

[4.83417958e-02 3.71535914e-03 2.52275229e-01 9.35947173e-04

1.62184748e-04 6.93950415e-01 6.19056518e-04]

[6.65758073e-01 1.23855119e-04 3.22469950e-01 3.57561721e-03

5.19683701e-04 7.53406622e-03 1.87339556e-05]

[2.02147573e-01 5.43226898e-02 3.33156317e-01 2.73840837e-02

1.57112591e-02 3.66034061e-01 1.24402368e-03]

[2.75477096e-02 8.65350614e-07 6.15565062e-01 6.24092208e-05

1.08450411e-04 3.53773415e-01 2.94208433e-03]

[2.94731539e-02 5.81620134e-06 4.63295698e-01 1.90071602e-04

2.60285251e-05 5.05010903e-01 1.99839775e-03]

[7.97334671e-01 3.59139143e-04 1.69615075e-01 1.17718624e-02

2.80936132e-03 1.81011241e-02 8.79739855e-06]

[2.72753477e-01 1.00762499e-02 6.39225602e-01 2.60519776e-02

3.53506766e-03 4.83063981e-02 5.12331790e-05]

[4.19552103e-02 9.57266707e-07 4.36885744e-01 1.68445185e-05

7.68695585e-03 1.88773707e-01 3.24680626e-01]

[2.97358502e-02 3.83350243e-06 3.31187725e-01 4.39158102e-05

1.88634207e-03 5.86676955e-01 5.04653789e-02]

[3.58573526e-01 1.58374867e-04 6.02542102e-01 1.27857570e-02

1.02698349e-03 2.48891190e-02 2.40688914e-05]

[1.16314860e-02 2.41197228e-07 9.70157981e-01 1.48516974e-05

7.55008368e-04 1.61209367e-02 1.31961156e-03]

[7.00571120e-01 2.29540118e-03 1.04802497e-01 1.46695357e-02

1.48024661e-02 1.62605703e-01 2.53247621e-04]

[1.25222569e-02 6.83396877e-07 9.52855229e-01 3.41892592e-05

2.99665521e-06 3.45740691e-02 1.05495747e-05]

[1.25222569e-02 6.83396877e-07 9.52855229e-01 3.41892592e-05

2.99665521e-06 3.45740691e-02 1.05495747e-05]

[3.19297920e-04 5.45438583e-09 9.99344170e-01 3.88916959e-07

8.74191210e-06 1.50824810e-04 1.76662812e-04]

[1.25222569e-02 6.83396877e-07 9.52855229e-01 3.41892592e-05

2.99665521e-06 3.45740691e-02 1.05495747e-05]

[5.69354696e-03 1.28335969e-07 9.86452103e-01 5.65483651e-06

3.01851230e-07 7.83607457e-03 1.22330412e-05]

[3.99429053e-02 1.08359700e-06 8.92844379e-01 1.81622978e-04

5.88230614e-06 6.69414625e-02 8.26637915e-05]

[1.25222569e-02 6.83396877e-07 9.52855229e-01 3.41892592e-05

2.99665521e-06 3.45740691e-02 1.05495747e-05]

[2.97358502e-02 3.83350243e-06 3.31187725e-01 4.39158102e-05

1.88634207e-03 5.86676955e-01 5.04653789e-02]

[2.09384449e-02 4.86705183e-08 9.65362132e-01 6.25513421e-05

3.01155069e-05 1.35777099e-02 2.89330892e-05]

[1.13732949e-05 1.90913292e-11 9.99964356e-01 1.25660837e-09

3.51544713e-07 1.47078399e-05 9.22029813e-06]

[3.99429053e-02 1.08359700e-06 8.92844379e-01 1.81622978e-04

5.88230614e-06 6.69414625e-02 8.26637915e-05]

[1.20813353e-02 4.79611208e-06 6.46479368e-01 4.67823047e-05

1.73373901e-05 3.41036886e-01 3.33462231e-04]

[1.59810439e-01 1.03290204e-03 6.42861843e-01 4.85276105e-03

4.46016900e-03 1.86838821e-01 1.43066776e-04]

[6.71478033e-01 3.50212707e-04 1.42793342e-01 4.93934005e-03

6.32465109e-02 1.16810188e-01 3.82330909e-04]

[7.09676668e-02 2.60438919e-05 9.24330115e-01 5.52732556e-04

7.31724867e-05 4.04531416e-03 4.94555070e-06]

[7.00571120e-01 2.29540118e-03 1.04802497e-01 1.46695357e-02

1.48024661e-02 1.62605703e-01 2.53247621e-04]

[1.06128462e-01 7.88718835e-03 8.46688867e-01 6.08664937e-03

2.23514438e-03 3.09655387e-02 8.11501741e-06]

[2.72753477e-01 1.00762499e-02 6.39225602e-01 2.60519776e-02

3.53506766e-03 4.83063981e-02 5.12331790e-05]

[6.13940716e-01 1.61003787e-03 1.20064147e-01 1.70960397e-01

5.34991920e-03 8.80004764e-02 7.43283454e-05]

[1.64730139e-02 3.23751635e-07 9.80579317e-01 2.10167746e-05

6.97423445e-07 2.84353853e-03 8.20547866e-05]

[1.25222569e-02 6.83396877e-07 9.52855229e-01 3.41892592e-05

2.99665521e-06 3.45740691e-02 1.05495747e-05]

[1.01755001e-02 6.43019007e-07 7.74020493e-01 1.38418909e-05

6.50950024e-05 2.15282053e-01 4.42386430e-04]

[4.05125618e-02 8.34866706e-03 9.00641978e-01 1.45283889e-03

5.07052355e-05 4.89108488e-02 8.24468734e-05]

[1.25222569e-02 6.83396877e-07 9.52855229e-01 3.41892592e-05

2.99665521e-06 3.45740691e-02 1.05495747e-05]

[8.98946729e-03 7.80940610e-08 9.72378016e-01 8.62212619e-06

9.58983492e-06 1.86023358e-02 1.19289043e-05]

[1.25222569e-02 6.83396877e-07 9.52855229e-01 3.41892592e-05

2.99665521e-06 3.45740691e-02 1.05495747e-05]

[1.01755001e-02 6.43019007e-07 7.74020493e-01 1.38418909e-05

6.50950024e-05 2.15282053e-01 4.42386430e-04]

[1.45738348e-01 1.29492328e-04 8.33664060e-01 3.12031433e-03

6.78274548e-04 1.66654754e-02 3.98224711e-06]

[1.30998174e-04 1.46713683e-11 9.97628629e-01 6.51580976e-08

2.59211674e-06 9.74685827e-04 1.26294035e-03]

[2.02147573e-01 5.43226898e-02 3.33156317e-01 2.73840837e-02

1.57112591e-02 3.66034061e-01 1.24402368e-03]

[1.33201897e-01 2.21856833e-01 4.52529311e-01 2.94250529e-03

4.41304255e-05 1.89218625e-01 2.06693570e-04]

[2.58761849e-02 2.22617440e-04 9.01413858e-01 1.14325994e-04

3.66698623e-06 7.22827241e-02 8.65447146e-05]

[3.62032622e-01 7.64944882e-04 5.76437712e-01 4.14967574e-02

2.21518986e-03 1.69740953e-02 7.86545424e-05]

[1.91647023e-01 3.12303938e-02 7.02005327e-01 5.23953661e-02

1.01369014e-02 6.00245409e-03 6.58254605e-03]

[6.65758073e-01 1.23855119e-04 3.22469950e-01 3.57561721e-03

5.19683701e-04 7.53406622e-03 1.87339556e-05]

[6.65758073e-01 1.23855119e-04 3.22469950e-01 3.57561721e-03

5.19683701e-04 7.53406622e-03 1.87339556e-05]

[4.66395229e-01 1.10008288e-03 1.38678700e-01 1.67827815e-01

6.27291901e-03 2.19705775e-01 1.95046814e-05]

[6.56531751e-02 8.33202675e-02 5.41292205e-02 8.32856521e-02

6.19431250e-02 6.19833112e-01 3.18354368e-02]

[2.02147573e-01 5.43226898e-02 3.33156317e-01 2.73840837e-02

1.57112591e-02 3.66034061e-01 1.24402368e-03]

[7.00571120e-01 2.29540118e-03 1.04802497e-01 1.46695357e-02

1.48024661e-02 1.62605703e-01 2.53247621e-04]

[7.52860233e-02 3.04151239e-04 1.43408597e-01 3.21387011e-03

9.56973017e-05 7.76273489e-01 1.41819566e-03]

[9.16413381e-04 7.08309358e-08 9.98447478e-01 2.87186549e-06

3.01011532e-05 3.35800258e-04 2.67253636e-04]

[1.54397100e-01 1.27575640e-02 8.10144484e-01 6.85243448e-03

2.10677553e-03 1.37074701e-02 3.41773994e-05]

[4.22761112e-01 1.15090143e-03 4.90197867e-01 1.93359342e-03

3.21572344e-03 8.05821270e-02 1.58658615e-04]

[1.88470667e-03 2.78773285e-07 8.73236656e-01 8.24304698e-06

5.96339814e-04 8.16552117e-02 4.26185504e-02]

[3.04983798e-02 4.87667194e-06 7.95794308e-01 9.76038718e-05

4.27261053e-04 1.70871779e-01 2.30576936e-03]

[2.65089776e-02 1.82559813e-06 7.56660938e-01 2.48180702e-04

1.81228016e-03 2.13773742e-01 9.94157279e-04]], shape=(91, 7), dtype=float32)
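Each row of the tensor printed above is a probability distribution over the 7 classes, so its entries sum to about 1. The sketch below reproduces the computation softmax(xW + b) for a single sample in plain Python so the arithmetic is visible. The toy sizes and weight values are invented, and `softmax` and `predict` are hypothetical helpers, not the TensorFlow functions.

```python
import math


def softmax(logits):
    """Numerically stable softmax for one row of logits."""
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(features, weights, bias):
    """Compute softmax(features @ weights + bias) for a single sample."""
    n_classes = len(bias)
    logits = [
        sum(f * weights[i][k] for i, f in enumerate(features)) + bias[k]
        for k in range(n_classes)
    ]
    return softmax(logits)


# Toy sizes (2 features, 3 classes); the notebook uses 16 features and 7 classes.
weights = [[0.5, -0.2, 0.1],
           [0.3, 0.8, -0.5]]
bias = [0.0, 0.1, -0.1]

probs = predict([1.0, 0.0], weights, bias)
print(probs)
print(sum(probs))
```

With random initial weights, as in the notebook, these probabilities carry no meaning yet; training is what moves the largest probability toward the correct class.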

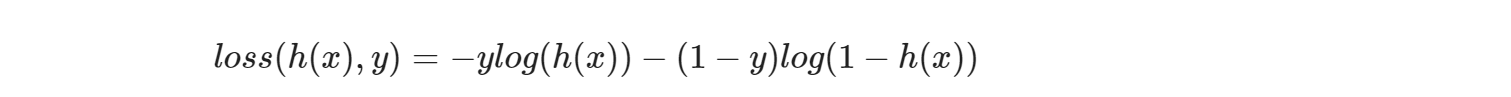

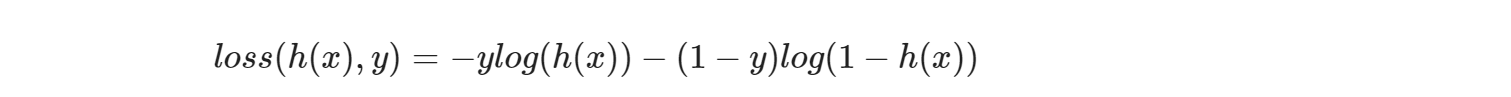

Loss Function

def loss_fn(hypothesis, labels):
    # Element-wise binary cross-entropy, averaged over every class and sample.
    cost = -tf.reduce_mean(labels * tf.math.log(hypothesis) + (1 - labels) * tf.math.log(1 - hypothesis))
    return cost
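The loss above applies the binary cross-entropy formula to every class probability and averages over all entries. The plain-Python sketch below computes that same quantity next to the categorical cross-entropy, which is the loss more commonly paired with softmax and is shown only for comparison (the notebook does not use it). The clipping constant `EPS` is an assumption added here to keep `log` away from 0; the probabilities and labels are invented.

```python
import math

EPS = 1e-7  # assumption: clip probabilities so log() never receives 0


def clip(p):
    return min(max(p, EPS), 1.0 - EPS)


def binary_ce_mean(probs, one_hot_labels):
    """Mean over all samples and classes of the element-wise binary cross-entropy."""
    total, count = 0.0, 0
    for p_row, y_row in zip(probs, one_hot_labels):
        for p, y in zip(p_row, y_row):
            p = clip(p)
            total += y * math.log(p) + (1 - y) * math.log(1 - p)
            count += 1
    return -total / count


def categorical_ce_mean(probs, one_hot_labels):
    """Mean over samples of -log(probability assigned to the true class)."""
    total = 0.0
    for p_row, y_row in zip(probs, one_hot_labels):
        total += sum(y * math.log(clip(p)) for p, y in zip(p_row, y_row))
    return -total / len(probs)


probs = [[0.7, 0.2, 0.1],
         [0.1, 0.8, 0.1]]
labels = [[1, 0, 0],
          [0, 1, 0]]

print(binary_ce_mean(probs, labels))
print(categorical_ce_mean(probs, labels))
```

The two values differ in scale because the binary form also penalizes probability placed on the wrong classes and divides by the number of classes, so loss numbers from the two forms are not directly comparable.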

optimizer = tf.compat.v1.train.GradientDescentOptimizer(learning_rate=0.01)

epochs = 5000

for step in range(epochs):
    for features, labels in dataset:
        # Record the forward pass so the tape can differentiate the loss.
        with tf.GradientTape() as tape:
            loss_value = loss_fn(logistic_regression(features), labels)
        grads = tape.gradient(loss_value, [W, b])
        optimizer.apply_gradients(grads_and_vars=zip(grads, [W, b]))
        if step % 100 == 0:
            print("Iter: {}, Loss: {:.4f}".format(step, loss_fn(logistic_regression(features), labels)))

Iter: 0, Loss: 1.0321

Iter: 100, Loss: 0.7508

Iter: 200, Loss: 0.6438

Iter: 300, Loss: 0.5896

Iter: 400, Loss: 0.5474

Iter: 500, Loss: 0.5094

Iter: 600, Loss: 0.4744

Iter: 700, Loss: 0.4420

Iter: 800, Loss: 0.4121

Iter: 900, Loss: 0.3847

Iter: 1000, Loss: 0.3597

Iter: 1100, Loss: 0.3371

Iter: 1200, Loss: 0.3167

Iter: 1300, Loss: 0.2984

Iter: 1400, Loss: 0.2821

Iter: 1500, Loss: 0.2677

Iter: 1600, Loss: 0.2549

Iter: 1700, Loss: 0.2436

Iter: 1800, Loss: 0.2338

Iter: 1900, Loss: 0.2251

Iter: 2000, Loss: 0.2174

Iter: 2100, Loss: 0.2107

Iter: 2200, Loss: 0.2047

Iter: 2300, Loss: 0.1994

Iter: 2400, Loss: 0.1945

Iter: 2500, Loss: 0.1901

Iter: 2600, Loss: 0.1861

Iter: 2700, Loss: 0.1823

Iter: 2800, Loss: 0.1789

Iter: 2900, Loss: 0.1756

Iter: 3000, Loss: 0.1725

Iter: 3100, Loss: 0.1697

Iter: 3200, Loss: 0.1669

Iter: 3300, Loss: 0.1643

Iter: 3400, Loss: 0.1619

Iter: 3500, Loss: 0.1595

Iter: 3600, Loss: 0.1572

Iter: 3700, Loss: 0.1551

Iter: 3800, Loss: 0.1530

Iter: 3900, Loss: 0.1510

Iter: 4000, Loss: 0.1491

Iter: 4100, Loss: 0.1473

Iter: 4200, Loss: 0.1455

Iter: 4300, Loss: 0.1438

Iter: 4400, Loss: 0.1422

Iter: 4500, Loss: 0.1406

Iter: 4600, Loss: 0.1390

Iter: 4700, Loss: 0.1375

Iter: 4800, Loss: 0.1360

Iter: 4900, Loss: 0.1346
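Each iteration of the loop above performs one full-batch update of the form W ← W − lr · ∂loss/∂W. The sketch below carries out that update by hand for a tiny softmax model. It rests on several assumptions that differ from the notebook: it uses categorical cross-entropy (whose gradient with respect to the logits is p − y) instead of the notebook's loss, zero initialization instead of random, and invented toy data. All helper names are hypothetical.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def forward(x, W, b):
    logits = [sum(x[i] * W[i][k] for i in range(len(x))) + b[k]
              for k in range(len(b))]
    return softmax(logits)


def mean_loss(X, Y, W, b):
    """Categorical cross-entropy averaged over samples (Y holds class indices)."""
    return -sum(math.log(forward(x, W, b)[y]) for x, y in zip(X, Y)) / len(X)


def train_step(X, Y, W, b, lr):
    """One full-batch gradient-descent update, applied in place."""
    n, d, c = len(X), len(W), len(b)
    grad_W = [[0.0] * c for _ in range(d)]
    grad_b = [0.0] * c
    for x, y in zip(X, Y):
        p = forward(x, W, b)
        for k in range(c):
            err = (p[k] - (1.0 if k == y else 0.0)) / n  # d(loss)/d(logit_k)
            grad_b[k] += err
            for i in range(d):
                grad_W[i][k] += err * x[i]
    for k in range(c):
        b[k] -= lr * grad_b[k]
        for i in range(d):
            W[i][k] -= lr * grad_W[i][k]


# Toy data (invented): 2 binary features, 3 classes given as indices.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
Y = [0, 1, 2]
W = [[0.0] * 3 for _ in range(2)]
b = [0.0] * 3

loss_before = mean_loss(X, Y, W, b)
for _ in range(200):
    train_step(X, Y, W, b, lr=0.5)
loss_after = mean_loss(X, Y, W, b)
print(loss_before, loss_after)
```

In the notebook, `tf.GradientTape` computes these gradients automatically, so no closed-form derivative has to be written out.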

def accuracy_fn(hypothesis, labels):
    # Predicted class = index of the largest probability in each row.
    hypothesis = tf.argmax(hypothesis, 1)
    predicted = tf.cast(hypothesis, dtype=tf.float32)
    print(predicted)
    # True class = index of the 1 in each one-hot label row.
    labels = tf.argmax(labels, 1)
    labels = tf.cast(labels, dtype=tf.float32)
    print(labels)
    accuracy = tf.reduce_mean(tf.cast(tf.equal(predicted, labels), dtype=tf.float32))
    return accuracy

test_acc = accuracy_fn(logistic_regression(x_test),y_test)

print("Testset Accuracy: {:.4f}".format(test_acc))
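The accuracy computation above takes the arg-max of each prediction row, compares it with the arg-max of the one-hot label, and averages the matches. A plain-Python sketch of the same idea, with hypothetical helpers and invented values:

```python
def argmax(values):
    """Index of the largest entry (the first one wins on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def accuracy(probs, one_hot_labels):
    """Fraction of rows where the most likely class matches the label."""
    hits = sum(argmax(p) == argmax(y) for p, y in zip(probs, one_hot_labels))
    return hits / len(probs)


probs = [[0.1, 0.7, 0.2],
         [0.6, 0.3, 0.1],
         [0.2, 0.2, 0.6],
         [0.5, 0.4, 0.1]]
labels = [[0, 1, 0],
          [1, 0, 0],
          [0, 0, 1],
          [0, 1, 0]]

print(accuracy(probs, labels))  # 0.75
```

With only 10 held-out rows, as in the notebook, each misclassified animal changes the reported accuracy by 0.1, so the figure is a coarse estimate.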