import tensorflow as tf

from tensorflow.keras import layers

from tensorflow.keras import callbacks

from sklearn.model_selection import train_test_split

import numpy as np

import matplotlib.pyplot as plt

tf.__version__

Load the data

- Load the dataset provided by TF and apply simple preprocessing

(train_data, train_labels), (test_data, test_labels) = \
    tf.keras.datasets.fashion_mnist.load_data()

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/train-labels-idx1-ubyte.gz

29515/29515 [==============================] - 0s 0us/step

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/train-images-idx3-ubyte.gz

26421880/26421880 [==============================] - 0s 0us/step

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/t10k-labels-idx1-ubyte.gz

5148/5148 [==============================] - 0s 0us/step

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/t10k-images-idx3-ubyte.gz

4422102/4422102 [==============================] - 0s 0us/step

print(train_data.shape, train_labels.shape)

train_data, valid_data, train_labels, valid_labels = \
    train_test_split(train_data, train_labels, test_size=0.1, shuffle=True)

print(train_data.shape, train_labels.shape)

print(valid_data.shape, valid_labels.shape)

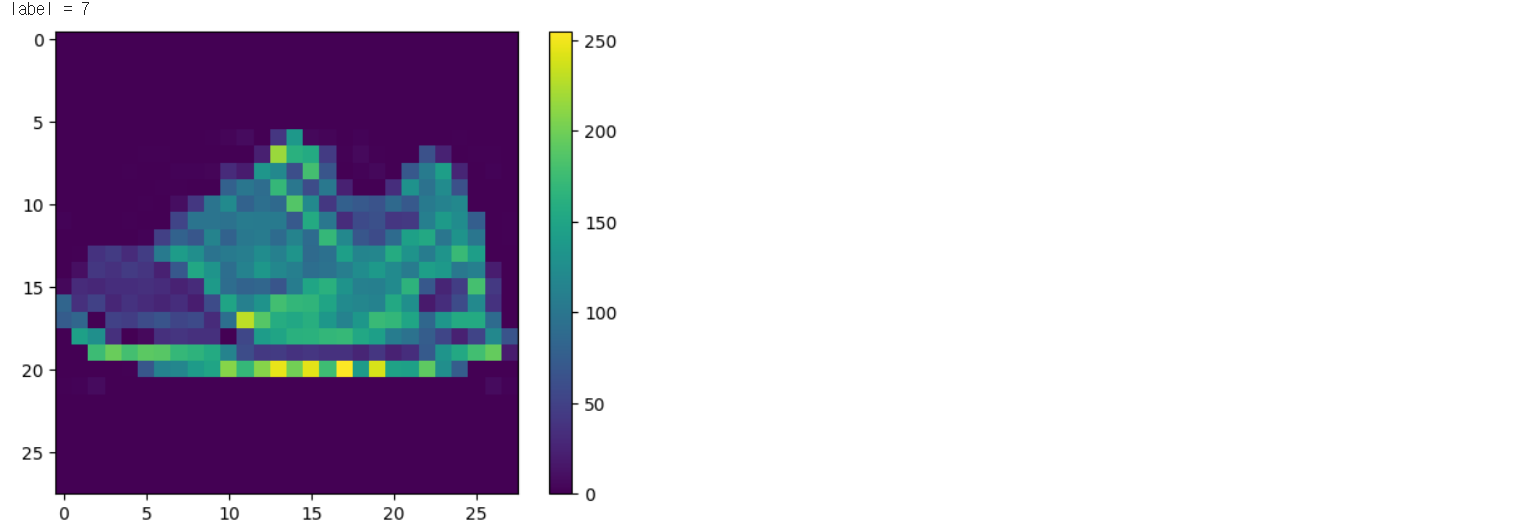

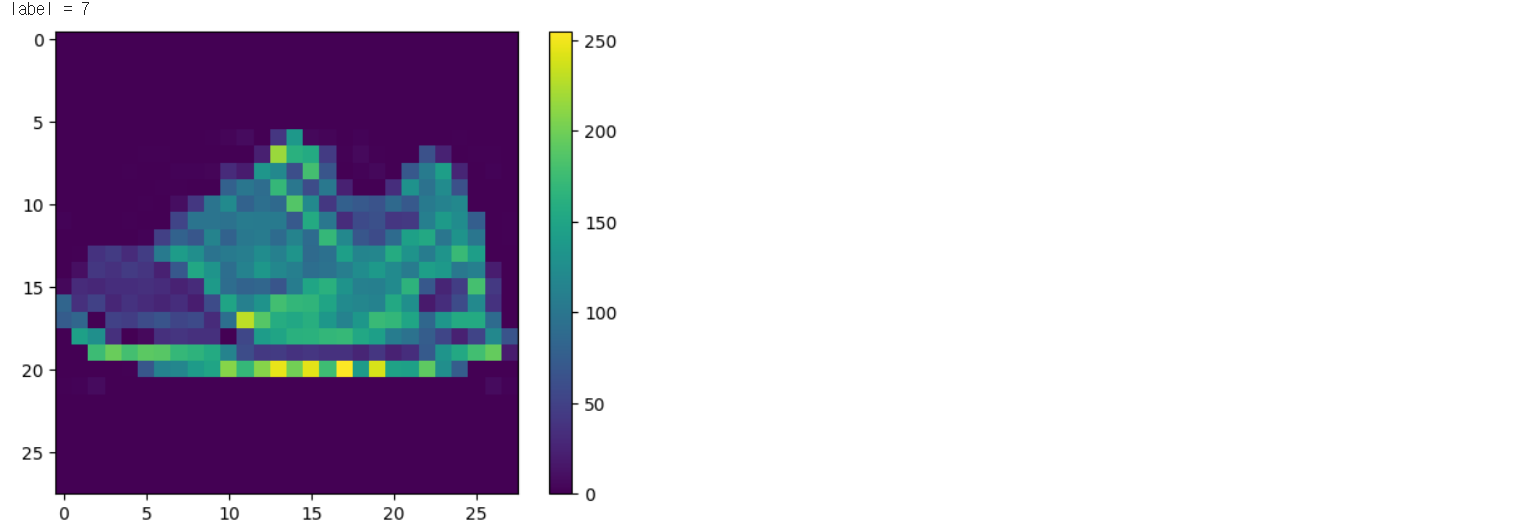

index = 5901

print("label = {}".format(valid_labels[index]))

plt.imshow(valid_data[index].reshape(28, 28))

plt.colorbar()

plt.show()

train_data = train_data / 255.

train_data = train_data.reshape(-1, 28 * 28)

train_data = train_data.astype(np.float32)

train_labels = train_labels.astype(np.int32)

test_data = test_data / 255.

test_data = test_data.reshape(-1, 28 * 28)

test_data = test_data.astype(np.float32)

test_labels = test_labels.astype(np.int32)

valid_data = valid_data / 255.

valid_data = valid_data.reshape(-1, 28 * 28)

valid_data = valid_data.astype(np.float32)

valid_labels = valid_labels.astype(np.int32)

print(train_data.shape, train_labels.shape)

print(test_data.shape, test_labels.shape)

print(valid_data.shape, valid_labels.shape)
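The three preprocessing blocks above repeat the same steps for each split. As a minimal sketch, they could be folded into one helper; the name `preprocess` is hypothetical and is not part of the original notebook. It is exercised here on random stand-in arrays, not on the real dataset.

```python
import numpy as np

def preprocess(images, labels):
    """Flatten 28x28 images, scale pixels to [0, 1], and cast dtypes.

    Hypothetical helper: mirrors the per-split steps shown above.
    """
    images = images.reshape(-1, 28 * 28).astype(np.float32) / 255.0
    labels = labels.astype(np.int32)
    return images, labels

# Stand-in data with the same layout as Fashion-MNIST (uint8 images, uint8 labels).
fake_images = np.random.randint(0, 256, size=(4, 28, 28), dtype=np.uint8)
fake_labels = np.array([0, 1, 2, 3], dtype=np.uint8)

x, y = preprocess(fake_images, fake_labels)
print(x.shape, x.dtype, y.dtype)
```

With such a helper, each split would need a single call, for example `train_data, train_labels = preprocess(train_data, train_labels)`.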

Build the tf.data.Dataset used for training

- Process the labels so they work well for training

- Build the datasets

def one_hot_label(image, label):
    label = tf.one_hot(label, depth=10)
    return image, label
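To see what the one-hot step produces, here is a small NumPy sketch of the same idea. `one_hot_numpy` is a hypothetical illustration, not the function the pipeline uses. It assumes integer class labels in the range 0 to 9.

```python
import numpy as np

def one_hot_numpy(labels, depth=10):
    """Return a (len(labels), depth) float32 array with a single 1 per row."""
    labels = np.asarray(labels)
    # Row i of the identity matrix is the one-hot vector for class i.
    return np.eye(depth, dtype=np.float32)[labels]

example = one_hot_numpy([0, 3, 9])
print(example.shape)
print(example[1])
```

One-hot labels are what `CategoricalCrossentropy` expects. Keeping the integer labels and switching to `SparseCategoricalCrossentropy` would be an alternative design.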

batch_size = 32

train_dataset = tf.data.Dataset.from_tensor_slices((train_data, train_labels))

train_dataset = train_dataset.shuffle(buffer_size=10000)

train_dataset = train_dataset.map(one_hot_label)

train_dataset = train_dataset.repeat().batch(batch_size=batch_size)

print(train_dataset)

test_dataset = tf.data.Dataset.from_tensor_slices((test_data, test_labels))

test_dataset = test_dataset.map(one_hot_label)

test_dataset = test_dataset.batch(batch_size=batch_size)

print(test_dataset)

valid_dataset = tf.data.Dataset.from_tensor_slices((valid_data, valid_labels))

valid_dataset = valid_dataset.map(one_hot_label)

valid_dataset = valid_dataset.batch(batch_size=batch_size)

print(valid_dataset)

Build the model

model = tf.keras.Sequential([
    # Block 1: Dense -> BatchNorm -> ReLU -> Dropout
    layers.Dense(64, kernel_initializer=tf.keras.initializers.HeUniform(),
                 kernel_regularizer=tf.keras.regularizers.L2(0.0001)),
    layers.BatchNormalization(),
    layers.Activation("relu"),
    layers.Dropout(0.5),
    # Block 2: same pattern with 32 units
    layers.Dense(32, kernel_initializer=tf.keras.initializers.HeUniform(),
                 kernel_regularizer=tf.keras.regularizers.L2(0.0001)),
    layers.BatchNormalization(),
    layers.Activation("relu"),
    layers.Dropout(0.5),
    # Block 3: 16 units, no dropout before the output layer
    layers.Dense(16, kernel_initializer=tf.keras.initializers.HeUniform(),
                 kernel_regularizer=tf.keras.regularizers.L2(0.0001)),
    layers.BatchNormalization(),
    layers.Activation("relu"),
    # Output layer returns raw logits (no softmax); the loss uses from_logits=True
    layers.Dense(10),
])

model.compile(optimizer=tf.keras.optimizers.Adam(1e-4),
              loss=tf.keras.losses.CategoricalCrossentropy(from_logits=True),
              metrics=['accuracy'])

predictions = model(train_data[0:1], training=False)

print("Predictions: ", predictions.numpy())
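Because the last `Dense(10)` layer has no activation and the loss is built with `from_logits=True`, the printed predictions are raw logits, not probabilities. The NumPy sketch below shows how logits map to class probabilities through a softmax. The `softmax` helper and the example values are illustrative assumptions, not output from this model.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)

# Made-up logits for a single sample with three classes.
example_logits = np.array([[2.0, 1.0, 0.1]])
probs = softmax(example_logits)
print(probs, probs.sum())
```

On the real model, applying `tf.nn.softmax` to `predictions` should give the equivalent result.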

model.summary()

Model: "sequential"
_________________________________________________________________
Layer (type)                                Output Shape   Param #
=================================================================
dense (Dense)                               (1, 64)        50240
batch_normalization (BatchNormalization)    (1, 64)        256
activation (Activation)                     (1, 64)        0
dropout (Dropout)                           (1, 64)        0
dense_1 (Dense)                             (1, 32)        2080
batch_normalization_1 (BatchNormalization)  (1, 32)        128
activation_1 (Activation)                   (1, 32)        0
dropout_1 (Dropout)                         (1, 32)        0
dense_2 (Dense)                             (1, 16)        528
batch_normalization_2 (BatchNormalization)  (1, 16)        64
activation_2 (Activation)                   (1, 16)        0
dense_3 (Dense)                             (1, 10)        170
=================================================================
Total params: 53466 (208.85 KB)
Trainable params: 53242 (207.98 KB)
Non-trainable params: 224 (896.00 Byte)
_________________________________________________________________
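The parameter counts in the summary can be checked by hand. A Dense layer has `n_in * n_out` weights plus `n_out` biases. A BatchNormalization layer has four values per feature: trainable gamma and beta, and the non-trainable moving mean and variance. The sketch below recomputes the totals; the helper names are hypothetical.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

def batchnorm_params(n_features):
    """Return (trainable, non_trainable) parameter counts for BatchNormalization."""
    trainable = 2 * n_features      # gamma and beta
    non_trainable = 2 * n_features  # moving mean and moving variance
    return trainable, non_trainable

layer_sizes = [28 * 28, 64, 32, 16, 10]  # input, three hidden layers, output
hidden_widths = layer_sizes[1:-1]        # BatchNormalization follows each hidden Dense

dense_total = sum(dense_params(a, b)
                  for a, b in zip(layer_sizes[:-1], layer_sizes[1:]))
bn_trainable = sum(batchnorm_params(w)[0] for w in hidden_widths)
bn_non_trainable = sum(batchnorm_params(w)[1] for w in hidden_widths)

total_params = dense_total + bn_trainable + bn_non_trainable
trainable_params = dense_total + bn_trainable
print(total_params, trainable_params, bn_non_trainable)  # 53466 53242 224
```

The three printed numbers match the "Total", "Trainable", and "Non-trainable" lines of the summary.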

Configure model training

check_point_cb = callbacks.ModelCheckpoint('fashion_mnist_model.h5',
                                           save_best_only=True,
                                           verbose=1)

early_stopping_cb = callbacks.EarlyStopping(patience=10,
                                            monitor='val_loss',
                                            restore_best_weights=True,
                                            verbose=1)
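The `patience=10` setting means training stops once `val_loss` has gone 10 consecutive epochs without improving on its best value. The pure-Python sketch below is a simplified model of that rule, not Keras's actual implementation. The function name and the sample loss values are assumptions made for illustration.

```python
def early_stopping_epoch(val_losses, patience=10, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    stop_epoch is None if the patience limit is never reached.
    Epochs are numbered from 1, as in the Keras progress log.
    """
    best = float("inf")
    best_epoch = None
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best, best_epoch, wait = loss, epoch, 0  # improvement resets the counter
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return None, best_epoch

# Made-up losses: the best value is at epoch 2, followed by three non-improving epochs.
stop, best_at = early_stopping_epoch([1.0, 0.9, 0.95, 0.96, 0.97], patience=3)
print(stop, best_at)  # 5 2
```

With `restore_best_weights=True`, the model would end up with the weights from `best_at`, not those from the epoch at which training stopped.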

max_epochs = 100

history = model.fit(train_dataset,
                    epochs=max_epochs,
                    steps_per_epoch=len(train_data) // batch_size,
                    validation_data=valid_dataset,
                    validation_steps=len(valid_data) // batch_size,
                    callbacks=[check_point_cb, early_stopping_cb]
                    )
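Because `train_dataset` calls `repeat()`, it never ends on its own, so `steps_per_epoch` is what defines an epoch. The arithmetic below reproduces the step counts, assuming the standard 60,000-image Fashion-MNIST training set and the 10% validation split used earlier. The variable names are illustrative.

```python
total_train_images = 60000  # size of the Fashion-MNIST training set
valid_fraction = 0.1        # test_size passed to train_test_split above
batch_size = 32

n_valid = round(total_train_images * valid_fraction)
n_train = total_train_images - n_valid

steps_per_epoch = n_train // batch_size
validation_steps = n_valid // batch_size

# Samples that do not fill a complete batch under integer division.
train_remainder = n_train - steps_per_epoch * batch_size
valid_remainder = n_valid - validation_steps * batch_size

print(steps_per_epoch, validation_steps)  # 1687 187
print(train_remainder, valid_remainder)   # 16 16
```

The value 1687 matches the step count in the training log. With integer division, the final partial batch of 16 validation samples is not evaluated in each epoch.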

Epoch 1/100

1686/1687 [============================>.] - ETA: 0s - loss: 1.9787 - accuracy: 0.3221

Epoch 1: val_loss improved from inf to 1.54169, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 18s 8ms/step - loss: 1.9786 - accuracy: 0.3222 - val_loss: 1.5417 - val_accuracy: 0.6832

Epoch 2/100

19/1687 [..............................] - ETA: 9s - loss: 1.6848 - accuracy: 0.5197

/usr/local/lib/python3.10/dist-packages/keras/src/engine/training.py:3103: UserWarning: You are saving your model as an HDF5 file via `model.save()`. This file format is considered legacy. We recommend using instead the native Keras format, e.g. `model.save('my_model.keras')`.

  saving_api.save_model(

1684/1687 [============================>.] - ETA: 0s - loss: 1.5489 - accuracy: 0.5151

Epoch 2: val_loss improved from 1.54169 to 1.13681, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 7ms/step - loss: 1.5485 - accuracy: 0.5153 - val_loss: 1.1368 - val_accuracy: 0.7482

Epoch 3/100

1684/1687 [============================>.] - ETA: 0s - loss: 1.2833 - accuracy: 0.5949

Epoch 3: val_loss improved from 1.13681 to 0.88925, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 1.2832 - accuracy: 0.5950 - val_loss: 0.8892 - val_accuracy: 0.7834

Epoch 4/100

1685/1687 [============================>.] - ETA: 0s - loss: 1.1196 - accuracy: 0.6378

Epoch 4: val_loss improved from 0.88925 to 0.73826, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 7ms/step - loss: 1.1195 - accuracy: 0.6378 - val_loss: 0.7383 - val_accuracy: 0.8015

Epoch 5/100

1682/1687 [============================>.] - ETA: 0s - loss: 1.0132 - accuracy: 0.6667

Epoch 5: val_loss improved from 0.73826 to 0.65151, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 1.0129 - accuracy: 0.6668 - val_loss: 0.6515 - val_accuracy: 0.8155

Epoch 6/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.9460 - accuracy: 0.6859

Epoch 6: val_loss improved from 0.65151 to 0.59941, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.9459 - accuracy: 0.6860 - val_loss: 0.5994 - val_accuracy: 0.8254

Epoch 7/100

1687/1687 [==============================] - ETA: 0s - loss: 0.8999 - accuracy: 0.6978

Epoch 7: val_loss improved from 0.59941 to 0.56490, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.8999 - accuracy: 0.6978 - val_loss: 0.5649 - val_accuracy: 0.8260

Epoch 8/100

1681/1687 [============================>.] - ETA: 0s - loss: 0.8703 - accuracy: 0.7097

Epoch 8: val_loss improved from 0.56490 to 0.54167, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.8704 - accuracy: 0.7098 - val_loss: 0.5417 - val_accuracy: 0.8282

Epoch 9/100

1687/1687 [==============================] - ETA: 0s - loss: 0.8425 - accuracy: 0.7179

Epoch 9: val_loss improved from 0.54167 to 0.52763, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.8425 - accuracy: 0.7179 - val_loss: 0.5276 - val_accuracy: 0.8336

Epoch 10/100

1681/1687 [============================>.] - ETA: 0s - loss: 0.8223 - accuracy: 0.7264

Epoch 10: val_loss improved from 0.52763 to 0.51580, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.8222 - accuracy: 0.7265 - val_loss: 0.5158 - val_accuracy: 0.8327

Epoch 11/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.8054 - accuracy: 0.7294

Epoch 11: val_loss improved from 0.51580 to 0.50027, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.8051 - accuracy: 0.7295 - val_loss: 0.5003 - val_accuracy: 0.8374

Epoch 12/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.7895 - accuracy: 0.7349

Epoch 12: val_loss improved from 0.50027 to 0.49493, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 7ms/step - loss: 0.7895 - accuracy: 0.7349 - val_loss: 0.4949 - val_accuracy: 0.8374

Epoch 13/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.7781 - accuracy: 0.7379

Epoch 13: val_loss improved from 0.49493 to 0.48376, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.7782 - accuracy: 0.7381 - val_loss: 0.4838 - val_accuracy: 0.8483

Epoch 14/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.7615 - accuracy: 0.7451

Epoch 14: val_loss improved from 0.48376 to 0.47872, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 7ms/step - loss: 0.7612 - accuracy: 0.7451 - val_loss: 0.4787 - val_accuracy: 0.8427

Epoch 15/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.7548 - accuracy: 0.7482

Epoch 15: val_loss improved from 0.47872 to 0.47074, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.7552 - accuracy: 0.7481 - val_loss: 0.4707 - val_accuracy: 0.8476

Epoch 16/100

1687/1687 [==============================] - ETA: 0s - loss: 0.7464 - accuracy: 0.7511

Epoch 16: val_loss improved from 0.47074 to 0.46636, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.7464 - accuracy: 0.7511 - val_loss: 0.4664 - val_accuracy: 0.8514

Epoch 17/100

1680/1687 [============================>.] - ETA: 0s - loss: 0.7330 - accuracy: 0.7567

Epoch 17: val_loss improved from 0.46636 to 0.46387, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.7330 - accuracy: 0.7567 - val_loss: 0.4639 - val_accuracy: 0.8499

Epoch 18/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.7344 - accuracy: 0.7539

Epoch 18: val_loss improved from 0.46387 to 0.45543, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.7343 - accuracy: 0.7540 - val_loss: 0.4554 - val_accuracy: 0.8486

Epoch 19/100

1679/1687 [============================>.] - ETA: 0s - loss: 0.7278 - accuracy: 0.7569

Epoch 19: val_loss improved from 0.45543 to 0.45203, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.7281 - accuracy: 0.7569 - val_loss: 0.4520 - val_accuracy: 0.8488

Epoch 20/100

1680/1687 [============================>.] - ETA: 0s - loss: 0.7171 - accuracy: 0.7615

Epoch 20: val_loss improved from 0.45203 to 0.44627, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.7175 - accuracy: 0.7613 - val_loss: 0.4463 - val_accuracy: 0.8504

Epoch 21/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.7107 - accuracy: 0.7653

Epoch 21: val_loss improved from 0.44627 to 0.44421, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.7108 - accuracy: 0.7653 - val_loss: 0.4442 - val_accuracy: 0.8511

Epoch 22/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.7080 - accuracy: 0.7634

Epoch 22: val_loss did not improve from 0.44421

1687/1687 [==============================] - 13s 8ms/step - loss: 0.7081 - accuracy: 0.7634 - val_loss: 0.4459 - val_accuracy: 0.8526

Epoch 23/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.7038 - accuracy: 0.7676

Epoch 23: val_loss improved from 0.44421 to 0.44307, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.7035 - accuracy: 0.7676 - val_loss: 0.4431 - val_accuracy: 0.8528

Epoch 24/100

1687/1687 [==============================] - ETA: 0s - loss: 0.7007 - accuracy: 0.7666

Epoch 24: val_loss improved from 0.44307 to 0.43942, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.7007 - accuracy: 0.7666 - val_loss: 0.4394 - val_accuracy: 0.8573

Epoch 25/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.6908 - accuracy: 0.7707

Epoch 25: val_loss improved from 0.43942 to 0.43583, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6906 - accuracy: 0.7708 - val_loss: 0.4358 - val_accuracy: 0.8571

Epoch 26/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.6857 - accuracy: 0.7716

Epoch 26: val_loss improved from 0.43583 to 0.43411, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6857 - accuracy: 0.7716 - val_loss: 0.4341 - val_accuracy: 0.8595

Epoch 27/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.6888 - accuracy: 0.7742

Epoch 27: val_loss improved from 0.43411 to 0.43279, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6885 - accuracy: 0.7743 - val_loss: 0.4328 - val_accuracy: 0.8554

Epoch 28/100

1681/1687 [============================>.] - ETA: 0s - loss: 0.6808 - accuracy: 0.7747

Epoch 28: val_loss improved from 0.43279 to 0.43242, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6811 - accuracy: 0.7746 - val_loss: 0.4324 - val_accuracy: 0.8553

Epoch 29/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.6812 - accuracy: 0.7752

Epoch 29: val_loss did not improve from 0.43242

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6815 - accuracy: 0.7751 - val_loss: 0.4331 - val_accuracy: 0.8556

Epoch 30/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.6644 - accuracy: 0.7808

Epoch 30: val_loss improved from 0.43242 to 0.43040, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6645 - accuracy: 0.7807 - val_loss: 0.4304 - val_accuracy: 0.8580

Epoch 31/100

1681/1687 [============================>.] - ETA: 0s - loss: 0.6676 - accuracy: 0.7771

Epoch 31: val_loss did not improve from 0.43040

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6678 - accuracy: 0.7771 - val_loss: 0.4308 - val_accuracy: 0.8598

Epoch 32/100

1687/1687 [==============================] - ETA: 0s - loss: 0.6671 - accuracy: 0.7812

Epoch 32: val_loss did not improve from 0.43040

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6671 - accuracy: 0.7812 - val_loss: 0.4310 - val_accuracy: 0.8573

Epoch 33/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.6650 - accuracy: 0.7790

Epoch 33: val_loss improved from 0.43040 to 0.42394, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6651 - accuracy: 0.7790 - val_loss: 0.4239 - val_accuracy: 0.8625

Epoch 34/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.6649 - accuracy: 0.7794

Epoch 34: val_loss did not improve from 0.42394

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6647 - accuracy: 0.7795 - val_loss: 0.4246 - val_accuracy: 0.8570

Epoch 35/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.6571 - accuracy: 0.7823

Epoch 35: val_loss improved from 0.42394 to 0.42265, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6570 - accuracy: 0.7824 - val_loss: 0.4226 - val_accuracy: 0.8593

Epoch 36/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.6544 - accuracy: 0.7816

Epoch 36: val_loss improved from 0.42265 to 0.41752, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6544 - accuracy: 0.7817 - val_loss: 0.4175 - val_accuracy: 0.8593

Epoch 37/100

1681/1687 [============================>.] - ETA: 0s - loss: 0.6616 - accuracy: 0.7829

Epoch 37: val_loss did not improve from 0.41752

1687/1687 [==============================] - 13s 7ms/step - loss: 0.6613 - accuracy: 0.7830 - val_loss: 0.4178 - val_accuracy: 0.8615

Epoch 38/100

1681/1687 [============================>.] - ETA: 0s - loss: 0.6490 - accuracy: 0.7881

Epoch 38: val_loss did not improve from 0.41752

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6487 - accuracy: 0.7882 - val_loss: 0.4201 - val_accuracy: 0.8611

Epoch 39/100

1687/1687 [==============================] - ETA: 0s - loss: 0.6416 - accuracy: 0.7876

Epoch 39: val_loss improved from 0.41752 to 0.41550, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 14s 8ms/step - loss: 0.6416 - accuracy: 0.7876 - val_loss: 0.4155 - val_accuracy: 0.8616

Epoch 40/100

1687/1687 [==============================] - ETA: 0s - loss: 0.6444 - accuracy: 0.7864

Epoch 40: val_loss did not improve from 0.41550

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6444 - accuracy: 0.7864 - val_loss: 0.4159 - val_accuracy: 0.8601

Epoch 41/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.6465 - accuracy: 0.7845

Epoch 41: val_loss improved from 0.41550 to 0.41065, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6463 - accuracy: 0.7846 - val_loss: 0.4107 - val_accuracy: 0.8665

Epoch 42/100

1687/1687 [==============================] - ETA: 0s - loss: 0.6415 - accuracy: 0.7893

Epoch 42: val_loss did not improve from 0.41065

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6415 - accuracy: 0.7893 - val_loss: 0.4139 - val_accuracy: 0.8606

Epoch 43/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.6405 - accuracy: 0.7869

Epoch 43: val_loss did not improve from 0.41065

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6405 - accuracy: 0.7869 - val_loss: 0.4120 - val_accuracy: 0.8618

Epoch 44/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.6380 - accuracy: 0.7886

Epoch 44: val_loss did not improve from 0.41065

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6378 - accuracy: 0.7888 - val_loss: 0.4163 - val_accuracy: 0.8633

Epoch 45/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.6377 - accuracy: 0.7878

Epoch 45: val_loss improved from 0.41065 to 0.40604, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6375 - accuracy: 0.7878 - val_loss: 0.4060 - val_accuracy: 0.8641

Epoch 46/100

1681/1687 [============================>.] - ETA: 0s - loss: 0.6363 - accuracy: 0.7908

Epoch 46: val_loss did not improve from 0.40604

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6362 - accuracy: 0.7908 - val_loss: 0.4166 - val_accuracy: 0.8603

Epoch 47/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.6338 - accuracy: 0.7893

Epoch 47: val_loss did not improve from 0.40604

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6339 - accuracy: 0.7893 - val_loss: 0.4092 - val_accuracy: 0.8640

Epoch 48/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.6337 - accuracy: 0.7905

Epoch 48: val_loss did not improve from 0.40604

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6338 - accuracy: 0.7904 - val_loss: 0.4096 - val_accuracy: 0.8640

Epoch 49/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.6307 - accuracy: 0.7917

Epoch 49: val_loss did not improve from 0.40604

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6308 - accuracy: 0.7917 - val_loss: 0.4065 - val_accuracy: 0.8646

Epoch 50/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.6253 - accuracy: 0.7925

Epoch 50: val_loss improved from 0.40604 to 0.40466, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6254 - accuracy: 0.7925 - val_loss: 0.4047 - val_accuracy: 0.8636

Epoch 51/100

1687/1687 [==============================] - ETA: 0s - loss: 0.6268 - accuracy: 0.7930

Epoch 51: val_loss improved from 0.40466 to 0.40251, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6268 - accuracy: 0.7930 - val_loss: 0.4025 - val_accuracy: 0.8655

Epoch 52/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.6265 - accuracy: 0.7952

Epoch 52: val_loss improved from 0.40251 to 0.40179, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6266 - accuracy: 0.7951 - val_loss: 0.4018 - val_accuracy: 0.8668

Epoch 53/100

1687/1687 [==============================] - ETA: 0s - loss: 0.6264 - accuracy: 0.7926

Epoch 53: val_loss did not improve from 0.40179

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6264 - accuracy: 0.7926 - val_loss: 0.4083 - val_accuracy: 0.8641

Epoch 54/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.6178 - accuracy: 0.7955

Epoch 54: val_loss did not improve from 0.40179

1687/1687 [==============================] - 14s 8ms/step - loss: 0.6177 - accuracy: 0.7956 - val_loss: 0.4056 - val_accuracy: 0.8655

Epoch 55/100

1687/1687 [==============================] - ETA: 0s - loss: 0.6191 - accuracy: 0.7956

Epoch 55: val_loss did not improve from 0.40179

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6191 - accuracy: 0.7956 - val_loss: 0.4059 - val_accuracy: 0.8626

Epoch 56/100

1680/1687 [============================>.] - ETA: 0s - loss: 0.6176 - accuracy: 0.7949

Epoch 56: val_loss improved from 0.40179 to 0.39865, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6179 - accuracy: 0.7948 - val_loss: 0.3986 - val_accuracy: 0.8688

Epoch 57/100

1687/1687 [==============================] - ETA: 0s - loss: 0.6134 - accuracy: 0.7966

Epoch 57: val_loss did not improve from 0.39865

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6134 - accuracy: 0.7966 - val_loss: 0.4041 - val_accuracy: 0.8656

Epoch 58/100

1679/1687 [============================>.] - ETA: 0s - loss: 0.6156 - accuracy: 0.7962

Epoch 58: val_loss did not improve from 0.39865

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6154 - accuracy: 0.7963 - val_loss: 0.4014 - val_accuracy: 0.8646

Epoch 59/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.6198 - accuracy: 0.7955

Epoch 59: val_loss did not improve from 0.39865

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6200 - accuracy: 0.7955 - val_loss: 0.3991 - val_accuracy: 0.8718

Epoch 60/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.6065 - accuracy: 0.7995

Epoch 60: val_loss did not improve from 0.39865

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6063 - accuracy: 0.7995 - val_loss: 0.4042 - val_accuracy: 0.8676

Epoch 61/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.6096 - accuracy: 0.7983

Epoch 61: val_loss improved from 0.39865 to 0.39738, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6095 - accuracy: 0.7982 - val_loss: 0.3974 - val_accuracy: 0.8707

Epoch 62/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.6109 - accuracy: 0.7982

Epoch 62: val_loss did not improve from 0.39738

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6110 - accuracy: 0.7982 - val_loss: 0.4011 - val_accuracy: 0.8685

Epoch 63/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.6168 - accuracy: 0.7941

Epoch 63: val_loss did not improve from 0.39738

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6168 - accuracy: 0.7941 - val_loss: 0.4047 - val_accuracy: 0.8666

Epoch 64/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.6083 - accuracy: 0.7989

Epoch 64: val_loss did not improve from 0.39738

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6085 - accuracy: 0.7989 - val_loss: 0.3979 - val_accuracy: 0.8698

Epoch 65/100

1681/1687 [============================>.] - ETA: 0s - loss: 0.6144 - accuracy: 0.7989

Epoch 65: val_loss did not improve from 0.39738

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6149 - accuracy: 0.7987 - val_loss: 0.3985 - val_accuracy: 0.8686

Epoch 66/100

1681/1687 [============================>.] - ETA: 0s - loss: 0.6077 - accuracy: 0.7980

Epoch 66: val_loss improved from 0.39738 to 0.39638, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6078 - accuracy: 0.7980 - val_loss: 0.3964 - val_accuracy: 0.8698

Epoch 67/100

1679/1687 [============================>.] - ETA: 0s - loss: 0.6067 - accuracy: 0.7998

Epoch 67: val_loss did not improve from 0.39638

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6068 - accuracy: 0.7997 - val_loss: 0.4008 - val_accuracy: 0.8660

Epoch 68/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.6047 - accuracy: 0.8016

Epoch 68: val_loss did not improve from 0.39638

1687/1687 [==============================] - 13s 8ms/step - loss: 0.6046 - accuracy: 0.8015 - val_loss: 0.3990 - val_accuracy: 0.8663

Epoch 69/100

1679/1687 [============================>.] - ETA: 0s - loss: 0.6097 - accuracy: 0.8002

Epoch 69: val_loss did not improve from 0.39638

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6102 - accuracy: 0.8000 - val_loss: 0.4010 - val_accuracy: 0.8653

Epoch 70/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.6059 - accuracy: 0.8001

Epoch 70: val_loss improved from 0.39638 to 0.39021, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6058 - accuracy: 0.8002 - val_loss: 0.3902 - val_accuracy: 0.8730

Epoch 71/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.5997 - accuracy: 0.8018

Epoch 71: val_loss did not improve from 0.39021

1687/1687 [==============================] - 13s 8ms/step - loss: 0.5997 - accuracy: 0.8018 - val_loss: 0.3953 - val_accuracy: 0.8656

Epoch 72/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.6036 - accuracy: 0.8013

Epoch 72: val_loss did not improve from 0.39021

1687/1687 [==============================] - 12s 7ms/step - loss: 0.6033 - accuracy: 0.8014 - val_loss: 0.4027 - val_accuracy: 0.8635

Epoch 73/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.5982 - accuracy: 0.8021

Epoch 73: val_loss did not improve from 0.39021

1687/1687 [==============================] - 13s 8ms/step - loss: 0.5988 - accuracy: 0.8019 - val_loss: 0.3925 - val_accuracy: 0.8681

Epoch 74/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.5987 - accuracy: 0.7999

Epoch 74: val_loss did not improve from 0.39021

1687/1687 [==============================] - 12s 7ms/step - loss: 0.5985 - accuracy: 0.8000 - val_loss: 0.3909 - val_accuracy: 0.8727

Epoch 75/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.5989 - accuracy: 0.8030

Epoch 75: val_loss did not improve from 0.39021

1687/1687 [==============================] - 13s 7ms/step - loss: 0.5989 - accuracy: 0.8029 - val_loss: 0.3950 - val_accuracy: 0.8676

Epoch 76/100

1687/1687 [==============================] - ETA: 0s - loss: 0.5968 - accuracy: 0.8019

Epoch 76: val_loss did not improve from 0.39021

1687/1687 [==============================] - 13s 8ms/step - loss: 0.5968 - accuracy: 0.8019 - val_loss: 0.3956 - val_accuracy: 0.8703

Epoch 77/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.5910 - accuracy: 0.8051

Epoch 77: val_loss did not improve from 0.39021

1687/1687 [==============================] - 12s 7ms/step - loss: 0.5913 - accuracy: 0.8050 - val_loss: 0.3925 - val_accuracy: 0.8727

Epoch 78/100

1680/1687 [============================>.] - ETA: 0s - loss: 0.5893 - accuracy: 0.8048

Epoch 78: val_loss did not improve from 0.39021

1687/1687 [==============================] - 14s 8ms/step - loss: 0.5890 - accuracy: 0.8049 - val_loss: 0.3943 - val_accuracy: 0.8700

Epoch 79/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.5933 - accuracy: 0.8041

Epoch 79: val_loss improved from 0.39021 to 0.39005, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.5929 - accuracy: 0.8043 - val_loss: 0.3900 - val_accuracy: 0.8728

Epoch 80/100

1687/1687 [==============================] - ETA: 0s - loss: 0.5934 - accuracy: 0.8035

Epoch 80: val_loss did not improve from 0.39005

1687/1687 [==============================] - 13s 7ms/step - loss: 0.5934 - accuracy: 0.8035 - val_loss: 0.3917 - val_accuracy: 0.8723

Epoch 81/100

1687/1687 [==============================] - ETA: 0s - loss: 0.5964 - accuracy: 0.8018

Epoch 81: val_loss did not improve from 0.39005

1687/1687 [==============================] - 13s 8ms/step - loss: 0.5964 - accuracy: 0.8018 - val_loss: 0.3980 - val_accuracy: 0.8688

Epoch 82/100

1679/1687 [============================>.] - ETA: 0s - loss: 0.5947 - accuracy: 0.8015

Epoch 82: val_loss did not improve from 0.39005

1687/1687 [==============================] - 12s 7ms/step - loss: 0.5951 - accuracy: 0.8013 - val_loss: 0.3945 - val_accuracy: 0.8735

Epoch 83/100

1687/1687 [==============================] - ETA: 0s - loss: 0.5892 - accuracy: 0.8050

Epoch 83: val_loss did not improve from 0.39005

1687/1687 [==============================] - 14s 8ms/step - loss: 0.5892 - accuracy: 0.8050 - val_loss: 0.3955 - val_accuracy: 0.8722

Epoch 84/100

1681/1687 [============================>.] - ETA: 0s - loss: 0.5897 - accuracy: 0.8036

Epoch 84: val_loss did not improve from 0.39005

1687/1687 [==============================] - 12s 7ms/step - loss: 0.5900 - accuracy: 0.8034 - val_loss: 0.3904 - val_accuracy: 0.8748

Epoch 85/100

1681/1687 [============================>.] - ETA: 0s - loss: 0.5892 - accuracy: 0.8065

Epoch 85: val_loss did not improve from 0.39005

1687/1687 [==============================] - 13s 8ms/step - loss: 0.5890 - accuracy: 0.8066 - val_loss: 0.3909 - val_accuracy: 0.8710

Epoch 86/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.5895 - accuracy: 0.8046

Epoch 86: val_loss did not improve from 0.39005

1687/1687 [==============================] - 12s 7ms/step - loss: 0.5895 - accuracy: 0.8046 - val_loss: 0.3923 - val_accuracy: 0.8732

Epoch 87/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.5907 - accuracy: 0.8049

Epoch 87: val_loss improved from 0.39005 to 0.38910, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 14s 8ms/step - loss: 0.5907 - accuracy: 0.8049 - val_loss: 0.3891 - val_accuracy: 0.8727

Epoch 88/100

1679/1687 [============================>.] - ETA: 0s - loss: 0.5870 - accuracy: 0.8033

Epoch 88: val_loss improved from 0.38910 to 0.38872, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.5869 - accuracy: 0.8033 - val_loss: 0.3887 - val_accuracy: 0.8740

Epoch 89/100

1680/1687 [============================>.] - ETA: 0s - loss: 0.5916 - accuracy: 0.8038

Epoch 89: val_loss did not improve from 0.38872

1687/1687 [==============================] - 12s 7ms/step - loss: 0.5916 - accuracy: 0.8038 - val_loss: 0.3929 - val_accuracy: 0.8707

Epoch 90/100

1683/1687 [============================>.] - ETA: 0s - loss: 0.5889 - accuracy: 0.8050

Epoch 90: val_loss did not improve from 0.38872

1687/1687 [==============================] - 13s 8ms/step - loss: 0.5891 - accuracy: 0.8049 - val_loss: 0.3921 - val_accuracy: 0.8708

Epoch 91/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.5874 - accuracy: 0.8043

Epoch 91: val_loss improved from 0.38872 to 0.38847, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 12s 7ms/step - loss: 0.5874 - accuracy: 0.8043 - val_loss: 0.3885 - val_accuracy: 0.8702

Epoch 92/100

1680/1687 [============================>.] - ETA: 0s - loss: 0.5843 - accuracy: 0.8048

Epoch 92: val_loss improved from 0.38847 to 0.38727, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.5844 - accuracy: 0.8046 - val_loss: 0.3873 - val_accuracy: 0.8755

Epoch 93/100

1679/1687 [============================>.] - ETA: 0s - loss: 0.5885 - accuracy: 0.8036

Epoch 93: val_loss did not improve from 0.38727

1687/1687 [==============================] - 12s 7ms/step - loss: 0.5886 - accuracy: 0.8035 - val_loss: 0.3893 - val_accuracy: 0.8708

Epoch 94/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.5821 - accuracy: 0.8073

Epoch 94: val_loss did not improve from 0.38727

1687/1687 [==============================] - 13s 8ms/step - loss: 0.5821 - accuracy: 0.8073 - val_loss: 0.3894 - val_accuracy: 0.8723

Epoch 95/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.5850 - accuracy: 0.8055

Epoch 95: val_loss improved from 0.38727 to 0.38255, saving model to fashion_mnist_model.h5

1687/1687 [==============================] - 13s 8ms/step - loss: 0.5850 - accuracy: 0.8054 - val_loss: 0.3825 - val_accuracy: 0.8745

Epoch 96/100

1684/1687 [============================>.] - ETA: 0s - loss: 0.5781 - accuracy: 0.8086

Epoch 96: val_loss did not improve from 0.38255

1687/1687 [==============================] - 12s 7ms/step - loss: 0.5780 - accuracy: 0.8086 - val_loss: 0.3882 - val_accuracy: 0.8732

Epoch 97/100

1686/1687 [============================>.] - ETA: 0s - loss: 0.5875 - accuracy: 0.8031

Epoch 97: val_loss did not improve from 0.38255

1687/1687 [==============================] - 15s 9ms/step - loss: 0.5875 - accuracy: 0.8031 - val_loss: 0.3889 - val_accuracy: 0.8712

Epoch 98/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.5813 - accuracy: 0.8077

Epoch 98: val_loss did not improve from 0.38255

1687/1687 [==============================] - 12s 7ms/step - loss: 0.5811 - accuracy: 0.8077 - val_loss: 0.3937 - val_accuracy: 0.8713

Epoch 99/100

1682/1687 [============================>.] - ETA: 0s - loss: 0.5830 - accuracy: 0.8060

Epoch 99: val_loss did not improve from 0.38255

1687/1687 [==============================] - 15s 9ms/step - loss: 0.5829 - accuracy: 0.8059 - val_loss: 0.3918 - val_accuracy: 0.8727

Epoch 100/100

1685/1687 [============================>.] - ETA: 0s - loss: 0.5868 - accuracy: 0.8051

Epoch 100: val_loss did not improve from 0.38255

1687/1687 [==============================] - 12s 7ms/step - loss: 0.5869 - accuracy: 0.8050 - val_loss: 0.3861 - val_accuracy: 0.8702
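The checkpoint messages in the log track the lowest `val_loss` seen so far. As a rough sketch of that bookkeeping, the snippet below picks the best epoch from the `val_loss` values logged for epochs 95 to 100. The dictionary `logged_val_loss` and the helper `best_epoch` are hypothetical names introduced only for illustration; they are not part of the notebook.

```python
# val_loss values copied by hand from the log above (epochs 95-100).
# `logged_val_loss` is a hypothetical name used only in this sketch.
logged_val_loss = {
    95: 0.3825,
    96: 0.3882,
    97: 0.3889,
    98: 0.3937,
    99: 0.3918,
    100: 0.3861,
}


def best_epoch(val_loss_by_epoch):
    """Return (epoch, val_loss) for the epoch with the smallest validation loss."""
    epoch = min(val_loss_by_epoch, key=val_loss_by_epoch.get)
    return epoch, val_loss_by_epoch[epoch]


epoch, loss = best_epoch(logged_val_loss)
print(f"best epoch = {epoch}, val_loss = {loss:.4f}")
# prints: best epoch = 95, val_loss = 0.3825
```

This agrees with the log: the last "val_loss improved" message appears at epoch 95, so the saved `fashion_mnist_model.h5` should hold the weights from that epoch rather than from epoch 100.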

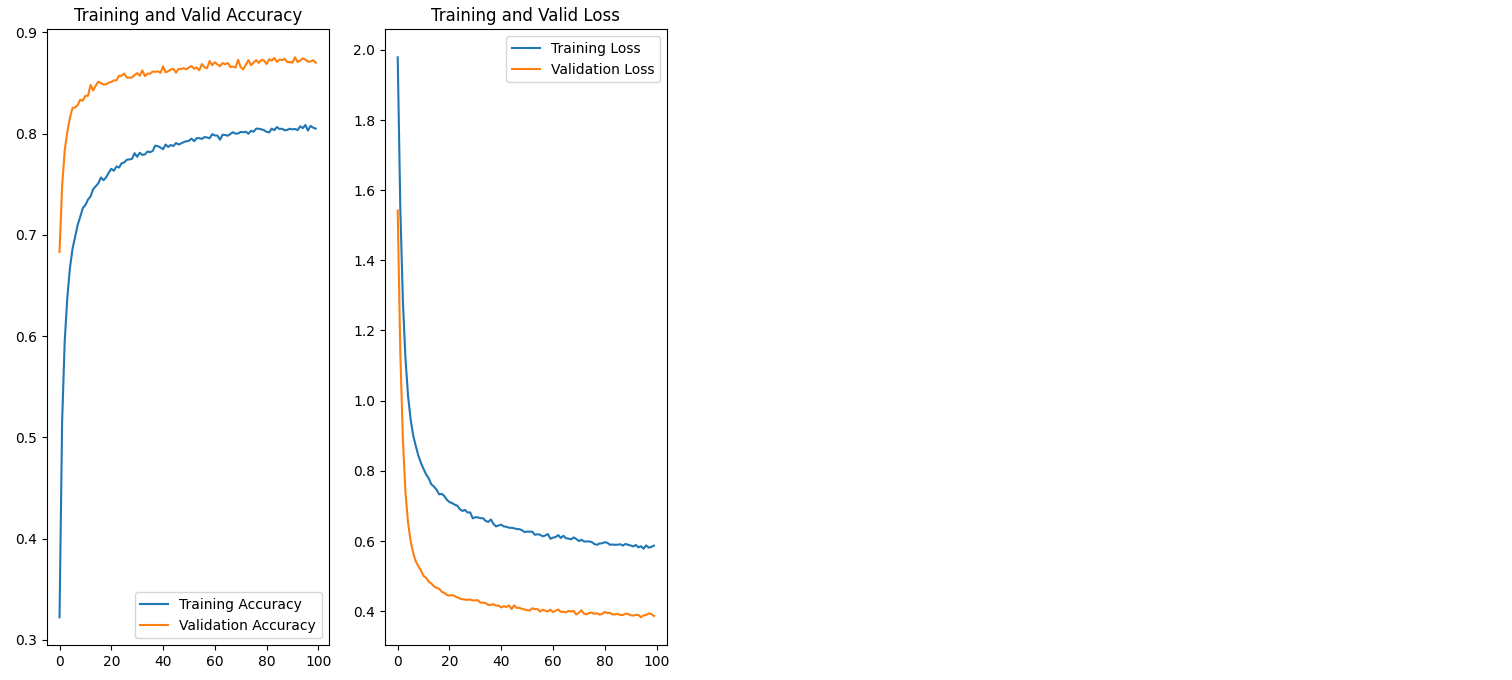

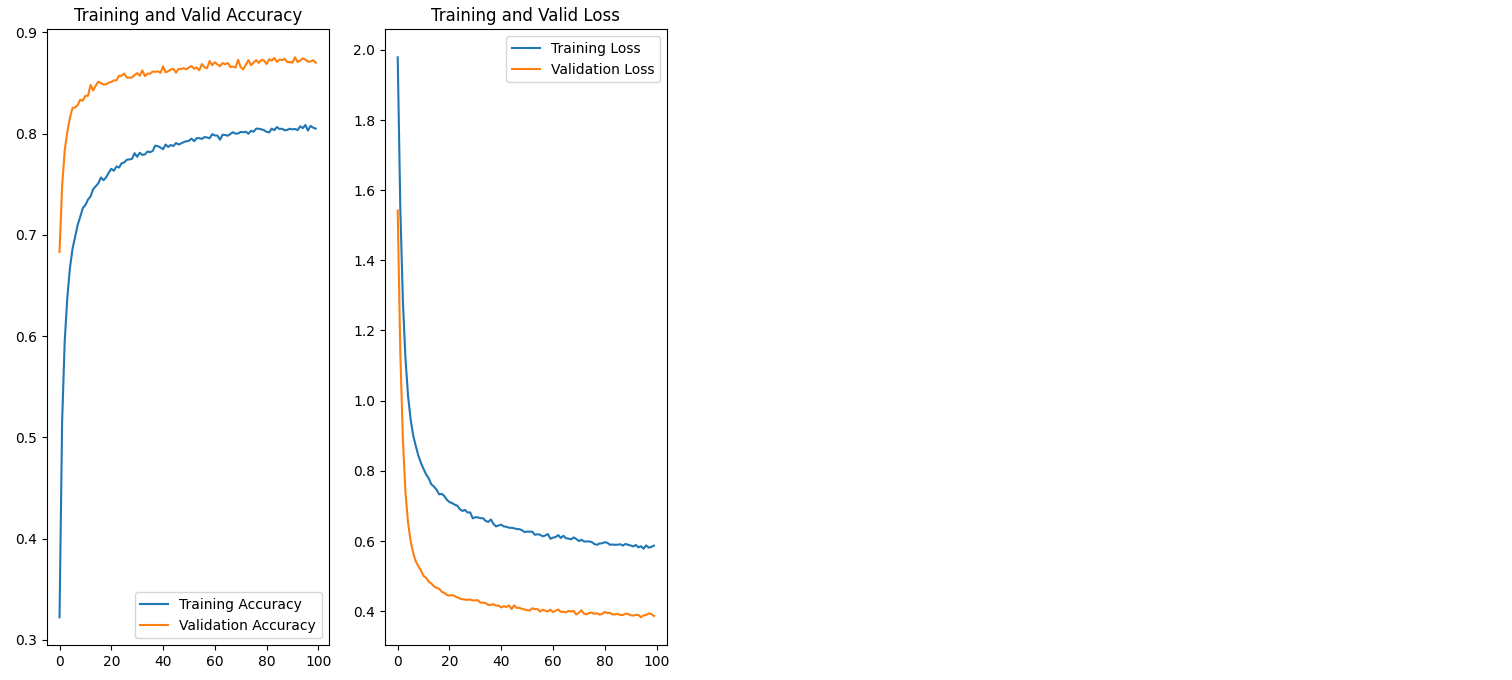

# Per-epoch metrics stored in the Keras History object

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

# Number epochs from 1 so the x-axis matches the "Epoch N/100" lines in the log

epochs_range = range(1, len(acc) + 1)

plt.figure(figsize=(8, 8))

# Left panel: accuracy curves

plt.subplot(1, 2, 1)

plt.plot(epochs_range, acc, label='Training Accuracy')

plt.plot(epochs_range, val_acc, label='Validation Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

# Right panel: loss curves

plt.subplot(1, 2, 2)

plt.plot(epochs_range, loss, label='Training Loss')

plt.plot(epochs_range, val_loss, label='Validation Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()
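The curves can also be read numerically. The sketch below computes the gap between training and validation metrics using the epoch 100 values from the log. `final_epoch` and `train_val_gap` are hypothetical names for illustration. The log does not say why validation accuracy is higher than training accuracy; regularization that is active only during training (dropout, for example) is one common cause, but that is an assumption here.

```python
# Metrics copied by hand from the final "Epoch 100/100" line of the log.
# `final_epoch` is a hypothetical name used only in this sketch.
final_epoch = {
    "accuracy": 0.8050,
    "val_accuracy": 0.8702,
    "loss": 0.5869,
    "val_loss": 0.3861,
}


def train_val_gap(metrics):
    """Return how far the validation metrics are ahead of the training metrics."""
    return {
        # Positive value: validation accuracy is higher than training accuracy.
        "accuracy_gap": round(metrics["val_accuracy"] - metrics["accuracy"], 4),
        # Positive value: training loss is higher than validation loss.
        "loss_gap": round(metrics["loss"] - metrics["val_loss"], 4),
    }


print(train_val_gap(final_epoch))
```

The same function could be applied to any epoch by indexing the lists in `history.history`, for example `{k: v[-1] for k, v in history.history.items()}` for the last epoch.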