import tensorflow as tf

from tensorflow.keras import layers

from sklearn.model_selection import train_test_split

import numpy as np

import matplotlib.pyplot as plt

tf.__version__

(train_data, train_labels), (test_data, test_labels) = \
    tf.keras.datasets.fashion_mnist.load_data()

train_data, valid_data, train_labels, valid_labels = \
    train_test_split(train_data, train_labels, test_size=0.1, shuffle=True)

train_data = train_data / 255.

train_data = train_data.reshape(-1, 784)

train_data = train_data.astype(np.float32)

train_labels = train_labels.astype(np.int32)

test_data = test_data / 255.

test_data = test_data.reshape(-1, 784)

test_data = test_data.astype(np.float32)

test_labels = test_labels.astype(np.int32)

valid_data = valid_data / 255.

valid_data = valid_data.reshape(-1, 784)

valid_data = valid_data.astype(np.float32)

valid_labels = valid_labels.astype(np.int32)

print(train_data.shape, train_labels.shape)

print(test_data.shape, test_labels.shape)

print(valid_data.shape, valid_labels.shape)

def one_hot_label(image, label):
    label = tf.one_hot(label, depth=10)
    return image, label
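To make the effect of `one_hot_label` concrete, here is a minimal NumPy sketch of the same encoding. It is not the TensorFlow code path used in this notebook: `one_hot_np` is a hypothetical helper introduced only for illustration, and it assumes integer labels in the range 0 to 9.

```python
import numpy as np

def one_hot_np(labels, depth=10):
    """Hypothetical NumPy stand-in for tf.one_hot, for illustration only."""
    labels = np.asarray(labels, dtype=np.int64)
    # Row i of the identity matrix is the one-hot vector for class i.
    return np.eye(depth, dtype=np.float32)[labels]

encoded = one_hot_np([0, 3, 9])
print(encoded.shape)  # one row per label, one column per class
print(encoded[1])     # the row for label 3
```

Each row has a single 1.0 at the index of its class, which is the label format `CategoricalCrossentropy` expects.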

batch_size = 32

max_epochs = 50

train_dataset = tf.data.Dataset.from_tensor_slices((train_data, train_labels))

train_dataset = train_dataset.map(one_hot_label)

train_dataset = train_dataset.repeat().batch(batch_size=batch_size)

print(train_dataset)

test_dataset = tf.data.Dataset.from_tensor_slices((test_data, test_labels))

test_dataset = test_dataset.map(one_hot_label)

test_dataset = test_dataset.batch(batch_size=batch_size)

print(test_dataset)

valid_dataset = tf.data.Dataset.from_tensor_slices((valid_data, valid_labels))

valid_dataset = valid_dataset.map(one_hot_label)

valid_dataset = valid_dataset.batch(batch_size=batch_size)

print(valid_dataset)

model = tf.keras.Sequential()

model.add(layers.Dense(units=256, activation='relu'))

model.add(layers.Dense(units=128))

model.add(layers.Activation("relu"))

model.add(layers.Dense(units=64, activation='relu'))

model.add(layers.Dense(units=32, activation='relu'))

model.add(layers.Dense(units=16, activation='relu'))

model.add(layers.Dense(units=10))

model.compile(optimizer=tf.keras.optimizers.Adam(1e-4),
              loss=tf.keras.losses.CategoricalCrossentropy(from_logits=True),
              metrics=['accuracy'])

predictions = model(train_data[0:1], training=False)

print("Predictions: ", predictions.numpy())

model.summary()

Model: "sequential"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 dense (Dense)               (1, 256)                  200960
 dense_1 (Dense)             (1, 128)                  32896
 activation (Activation)     (1, 128)                  0
 dense_2 (Dense)             (1, 64)                   8256
 dense_3 (Dense)             (1, 32)                   2080
 dense_4 (Dense)             (1, 16)                   528
 dense_5 (Dense)             (1, 10)                   170
=================================================================
Total params: 244890 (956.60 KB)
Trainable params: 244890 (956.60 KB)
Non-trainable params: 0 (0.00 Byte)
_________________________________________________________________
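The parameter counts in the summary can be checked by hand: a `Dense` layer with `n_in` inputs and `n_out` units holds `n_in * n_out` weights plus `n_out` biases. The sketch below redoes that arithmetic in plain Python. `layer_sizes` and `dense_param_count` are illustrative names, not part of the notebook, and the 784 comes from the flattened 28x28 input.

```python
# Layer widths taken from the model definition: 784 inputs, then the Dense units.
layer_sizes = [784, 256, 128, 64, 32, 16, 10]

def dense_param_count(n_in, n_out):
    # One weight per input/output pair plus one bias per output unit.
    return n_in * n_out + n_out

per_layer = [dense_param_count(a, b)
             for a, b in zip(layer_sizes[:-1], layer_sizes[1:])]
total = sum(per_layer)

print(per_layer)  # [200960, 32896, 8256, 2080, 528, 170]
print(total)      # 244890
```

The `Activation` layer contributes no parameters, which is why it shows 0 in the summary.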

history = model.fit(train_dataset, epochs=max_epochs,
                    steps_per_epoch=len(train_data) // batch_size,
                    validation_data=valid_dataset,
                    validation_steps=len(valid_data) // batch_size)

Epoch 1/50

1687/1687 [==============================] - 17s 8ms/step - loss: 0.7797 - accuracy: 0.7376 - val_loss: 0.5150 - val_accuracy: 0.8150

Epoch 2/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.4492 - accuracy: 0.8423 - val_loss: 0.4543 - val_accuracy: 0.8366

Epoch 3/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.3992 - accuracy: 0.8594 - val_loss: 0.4403 - val_accuracy: 0.8416

Epoch 4/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.3705 - accuracy: 0.8681 - val_loss: 0.4204 - val_accuracy: 0.8474

Epoch 5/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.3500 - accuracy: 0.8754 - val_loss: 0.4073 - val_accuracy: 0.8539

Epoch 6/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.3337 - accuracy: 0.8806 - val_loss: 0.3881 - val_accuracy: 0.8588

Epoch 7/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.3195 - accuracy: 0.8847 - val_loss: 0.3826 - val_accuracy: 0.8633

Epoch 8/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.3073 - accuracy: 0.8886 - val_loss: 0.3666 - val_accuracy: 0.8693

Epoch 9/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.2961 - accuracy: 0.8919 - val_loss: 0.3588 - val_accuracy: 0.8742

Epoch 10/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.2863 - accuracy: 0.8958 - val_loss: 0.3517 - val_accuracy: 0.8757

Epoch 11/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.2769 - accuracy: 0.8986 - val_loss: 0.3448 - val_accuracy: 0.8793

Epoch 12/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.2685 - accuracy: 0.9014 - val_loss: 0.3388 - val_accuracy: 0.8824

Epoch 13/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.2602 - accuracy: 0.9045 - val_loss: 0.3381 - val_accuracy: 0.8845

Epoch 14/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.2527 - accuracy: 0.9073 - val_loss: 0.3322 - val_accuracy: 0.8860

Epoch 15/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.2448 - accuracy: 0.9104 - val_loss: 0.3315 - val_accuracy: 0.8880

Epoch 16/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.2381 - accuracy: 0.9125 - val_loss: 0.3255 - val_accuracy: 0.8882

Epoch 17/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.2308 - accuracy: 0.9152 - val_loss: 0.3284 - val_accuracy: 0.8922

Epoch 18/50

1687/1687 [==============================] - 10s 6ms/step - loss: 0.2245 - accuracy: 0.9178 - val_loss: 0.3286 - val_accuracy: 0.8904

Epoch 19/50

1687/1687 [==============================] - 10s 6ms/step - loss: 0.2180 - accuracy: 0.9202 - val_loss: 0.3273 - val_accuracy: 0.8914

Epoch 20/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.2125 - accuracy: 0.9226 - val_loss: 0.3339 - val_accuracy: 0.8905

Epoch 21/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.2063 - accuracy: 0.9248 - val_loss: 0.3332 - val_accuracy: 0.8917

Epoch 22/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.2005 - accuracy: 0.9271 - val_loss: 0.3401 - val_accuracy: 0.8899

Epoch 23/50

1687/1687 [==============================] - 8s 4ms/step - loss: 0.1951 - accuracy: 0.9289 - val_loss: 0.3365 - val_accuracy: 0.8919

Epoch 24/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.1892 - accuracy: 0.9314 - val_loss: 0.3368 - val_accuracy: 0.8919

Epoch 25/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.1842 - accuracy: 0.9329 - val_loss: 0.3425 - val_accuracy: 0.8897

Epoch 26/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.1788 - accuracy: 0.9353 - val_loss: 0.3532 - val_accuracy: 0.8902

Epoch 27/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.1735 - accuracy: 0.9372 - val_loss: 0.3451 - val_accuracy: 0.8912

Epoch 28/50

1687/1687 [==============================] - 8s 4ms/step - loss: 0.1684 - accuracy: 0.9392 - val_loss: 0.3593 - val_accuracy: 0.8879

Epoch 29/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.1631 - accuracy: 0.9409 - val_loss: 0.3554 - val_accuracy: 0.8887

Epoch 30/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.1606 - accuracy: 0.9422 - val_loss: 0.3521 - val_accuracy: 0.8899

Epoch 31/50

1687/1687 [==============================] - 7s 4ms/step - loss: 0.1539 - accuracy: 0.9452 - val_loss: 0.3712 - val_accuracy: 0.8872

Epoch 32/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.1485 - accuracy: 0.9469 - val_loss: 0.3724 - val_accuracy: 0.8877

Epoch 33/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.1455 - accuracy: 0.9479 - val_loss: 0.3766 - val_accuracy: 0.8884

Epoch 34/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.1407 - accuracy: 0.9503 - val_loss: 0.3825 - val_accuracy: 0.8885

Epoch 35/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.1347 - accuracy: 0.9525 - val_loss: 0.3984 - val_accuracy: 0.8827

Epoch 36/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.1315 - accuracy: 0.9538 - val_loss: 0.3995 - val_accuracy: 0.8840

Epoch 37/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.1267 - accuracy: 0.9555 - val_loss: 0.4112 - val_accuracy: 0.8827

Epoch 38/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.1240 - accuracy: 0.9570 - val_loss: 0.4069 - val_accuracy: 0.8882

Epoch 39/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.1222 - accuracy: 0.9575 - val_loss: 0.4143 - val_accuracy: 0.8875

Epoch 40/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.1146 - accuracy: 0.9604 - val_loss: 0.4058 - val_accuracy: 0.8904

Epoch 41/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.1106 - accuracy: 0.9630 - val_loss: 0.4182 - val_accuracy: 0.8890

Epoch 42/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.1101 - accuracy: 0.9621 - val_loss: 0.4404 - val_accuracy: 0.8870

Epoch 43/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.1050 - accuracy: 0.9634 - val_loss: 0.4228 - val_accuracy: 0.8904

Epoch 44/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.1003 - accuracy: 0.9659 - val_loss: 0.4312 - val_accuracy: 0.8907

Epoch 45/50

1687/1687 [==============================] - 7s 4ms/step - loss: 0.0978 - accuracy: 0.9667 - val_loss: 0.4527 - val_accuracy: 0.8862

Epoch 46/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.0950 - accuracy: 0.9675 - val_loss: 0.4713 - val_accuracy: 0.8859

Epoch 47/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.0914 - accuracy: 0.9696 - val_loss: 0.4621 - val_accuracy: 0.8874

Epoch 48/50

1687/1687 [==============================] - 8s 5ms/step - loss: 0.0878 - accuracy: 0.9708 - val_loss: 0.4743 - val_accuracy: 0.8869

Epoch 49/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.0858 - accuracy: 0.9712 - val_loss: 0.4744 - val_accuracy: 0.8910

Epoch 50/50

1687/1687 [==============================] - 9s 5ms/step - loss: 0.0834 - accuracy: 0.9719 - val_loss: 0.4940 - val_accuracy: 0.8850
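In this log the training loss falls steadily to 0.0834, while `val_loss` reaches its minimum of 0.3255 at epoch 16 and rises to 0.4940 by epoch 50, which suggests the model starts to overfit after roughly the first third of training. A minimal sketch for locating that turning point is shown here. `best_epoch` is a hypothetical helper, and the sample list is copied from epochs 14 to 20 of the log rather than read from a live `history` object.

```python
def best_epoch(val_losses, first_epoch=1):
    """Return (epoch, loss) for the lowest validation loss (hypothetical helper)."""
    idx = min(range(len(val_losses)), key=val_losses.__getitem__)
    return first_epoch + idx, val_losses[idx]

# val_loss values copied from epochs 14 to 20 of the training log.
logged = [0.3322, 0.3315, 0.3255, 0.3284, 0.3286, 0.3273, 0.3339]

epoch, loss = best_epoch(logged, first_epoch=14)
print(epoch, loss)  # 16 0.3255
```

After a full run, the same helper could be called as `best_epoch(history.history['val_loss'])` to get the epoch number directly.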

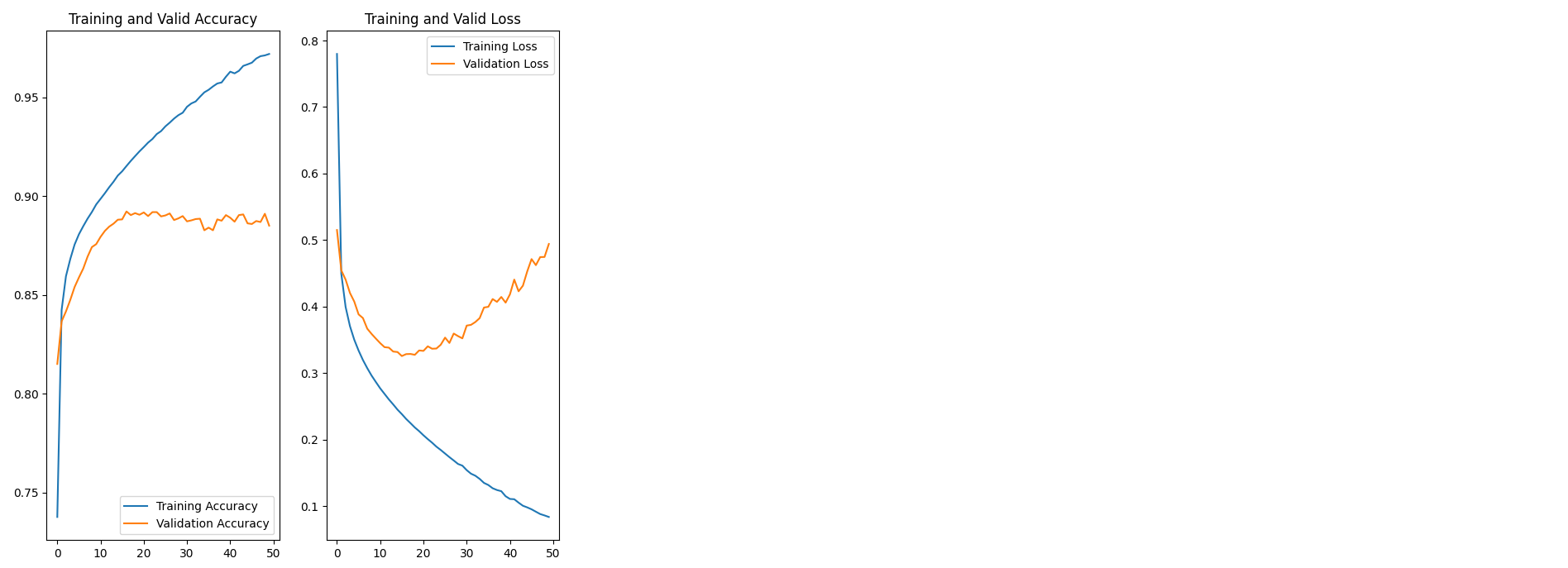

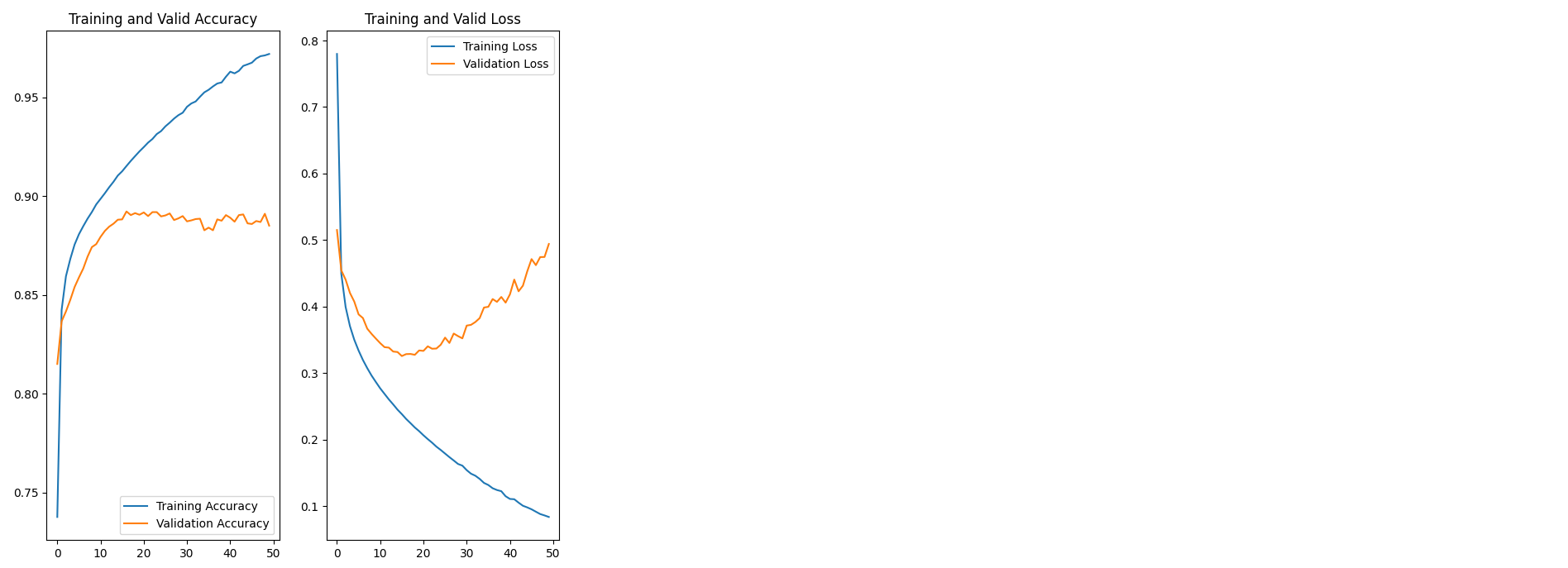

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs_range = range(max_epochs)

plt.figure(figsize=(8, 8))

plt.subplot(1, 2, 1)

plt.plot(epochs_range, acc, label='Training Accuracy')

plt.plot(epochs_range, val_acc, label='Validation Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Valid Accuracy')

plt.subplot(1, 2, 2)

plt.plot(epochs_range, loss, label='Training Loss')

plt.plot(epochs_range, val_loss, label='Validation Loss')

plt.legend(loc='upper right')

plt.title('Training and Valid Loss')

plt.show()

results = model.evaluate(test_dataset)