Server Configuration

| Server | master | slave01 | slave02 | slave03 |
|---|---|---|---|---|
| OS | CentOS 7 | CentOS 7 | CentOS 7 | CentOS 7 |
| Disk Size | 1000G | 1000G | 1000G | 1000G |
| Memory | 32G | 16G | 16G | 16G |
| Processors | 12 | 12 | 12 | 12 |

Prerequisites

- Installing Software

Spark Download

- Spark must be installed identically on every server, master and slaves alike.

- Download URL

  - Spark homepage: https://spark.apache.org/downloads.html

  - Spark archive mirror: https://archive.apache.org/dist/spark/spark-2.4.0/

- Version

  - Spark 2.4.0 was chosen for compatibility with the Hadoop 2.4.1 installation set up earlier.

- Download

  - Download server: master

  - Download location: ~

cd ~

wget https://archive.apache.org/dist/spark/spark-2.4.0/spark-2.4.0-bin-hadoop2.7.tgz

tar xvf spark-2.4.0-bin-hadoop2.7.tgz

sudo mv ~/spark-2.4.0-bin-hadoop2.7 ~/spark

Path Setup

- SPARK_HOME

  - This is the directory where Spark is installed. In this setup it is '~/spark'.

- PATH

  - Adding the bin and sbin directories under SPARK_HOME to PATH lets you run the Spark commands without changing into those directories.

- Apply

  - Nodes: master, slave01, slave02, slave03

  - File: ~/.bashrc

  - To apply the change, do one of the following:

    - reboot

    - source ~/.bashrc

Add these lines to ~/.bashrc:

export SPARK_HOME=~/spark

export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

Spark Configuration

- To set up the Spark cluster, edit spark-env.sh. In the $SPARK_HOME/conf directory, copy spark-env.sh.template and name the copy spark-env.sh.

- Node: master (substitute the hostname you registered for your own master)

- File location: ~/spark/conf

- spark-env.sh

$ cd $SPARK_HOME/conf

$ cp spark-env.sh.template spark-env.sh

$ vim spark-env.sh

Add the following to spark-env.sh:

export SPARK_MASTER_HOST=master

export SPARK_MASTER_PORT=7077

export SPARK_WORKER_CORES=2

export SPARK_WORKER_MEMORY=4g

export SPARK_WORKER_INSTANCES=1

export JAVA_HOME=${JAVA_HOME}

export HADOOP_HOME=${HADOOP_HOME}

export YARN_CONF_DIR=${YARN_CONF_DIR}

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-"/etc/hadoop"}

slaves

- 진행 노드 : master

- 파일 : ~/spark/conf/slaves

slaves에 등록된 host는 worker로 실행됩니다.

$ vim ~/spark/conf/slavesslave01

slave02

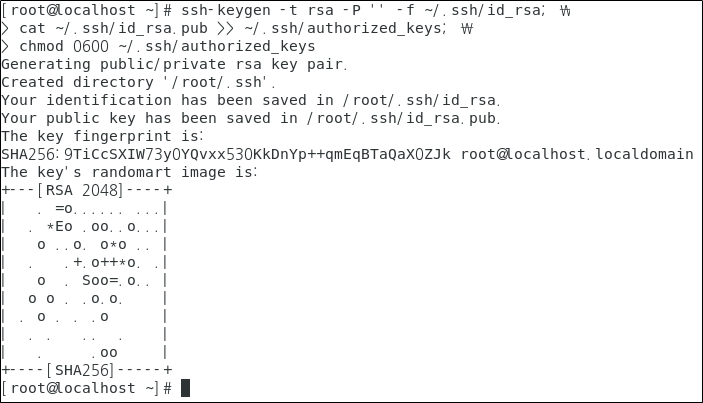

slave03암호가 없는 SSH 설정(Setup passphraseless ssh)

- Node: master

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys
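The chmod step matters because key-based login is typically refused when these files are too permissive. The following is a minimal sketch of a permission check, not part of the original procedure. `find_loose_permissions` is a hypothetical helper, and the rules it encodes (no group/other access to the private key, no group/other write access to ~/.ssh and authorized_keys) assume OpenSSH's usual behavior with StrictModes enabled on these servers.

```python
import os
import stat


def find_loose_permissions(ssh_dir):
    """Return (path, octal mode string) pairs for entries that are too permissive."""
    # Assumed rules: the mask lists the permission bits that must NOT be set.
    checks = [
        (ssh_dir, 0o022),                                    # no group/other write
        (os.path.join(ssh_dir, "authorized_keys"), 0o022),   # no group/other write
        (os.path.join(ssh_dir, "id_rsa"), 0o077),            # owner access only
    ]
    problems = []
    for path, forbidden_bits in checks:
        if not os.path.exists(path):
            continue  # nothing to check for this entry yet
        mode = stat.S_IMODE(os.stat(path).st_mode)
        if mode & forbidden_bits:
            problems.append((path, oct(mode)))
    return problems
```

Calling `find_loose_permissions(os.path.expanduser("~/.ssh"))` on master should return an empty list once the commands above have been run.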

Deployment

- Node: master

Before deploying, check the IP address of each target server. Then use scp to copy the files and directories to those servers.
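The IP check described above can be scripted. The following is a minimal sketch, assuming the slave hostnames used in this guide are registered in /etc/hosts or DNS on master. `resolve_hosts` and `unresolved` are hypothetical helper names, not part of Spark.

```python
import socket


def resolve_hosts(hosts, resolver=socket.gethostbyname):
    """Map each hostname to its IPv4 address, or None if it cannot be resolved."""
    resolved = {}
    for host in hosts:
        try:
            resolved[host] = resolver(host)
        except OSError:  # socket.gaierror is a subclass of OSError
            resolved[host] = None
    return resolved


def unresolved(hosts, resolver=socket.gethostbyname):
    """Return the hostnames that did not resolve, in the order given."""
    table = resolve_hosts(hosts, resolver)
    return [host for host in hosts if table[host] is None]
```

Running `unresolved(["slave01", "slave02", "slave03"])` on master before the scp commands should give an empty list. Any name it returns is one that scp would fail to reach by hostname.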

- scp

The commands below copy ~/.ssh from master to slave01, slave02, and slave03. Run all three on master.

# Copy to ~/ on slave01

scp -r ~/.ssh (account)@slave01:~/

# Copy to ~/ on slave02

scp -r ~/.ssh (account)@slave02:~/

# Copy to ~/ on slave03

scp -r ~/.ssh (account)@slave03:~/

- Spark

The commands below copy ~/spark from master to slave01, slave02, and slave03. Run all three on master.

# Copy to ~/ on slave01

scp -r ~/spark (account)@slave01:~/

# Copy to ~/ on slave02

scp -r ~/spark (account)@slave02:~/

# Copy to ~/ on slave03

scp -r ~/spark (account)@slave03:~/

Starting Spark

- Node: master

Spark only needs to be started from the master server.

- Run start-all on the master server

$ ~/spark/sbin/start-all.sh

$ ~/spark/sbin/start-history-server.sh

Stopping Spark

- Node: master

Spark only needs to be stopped from the master server.

$ ~/spark/sbin/stop-all.sh

$ ~/spark/sbin/stop-history-server.sh

$ rm -rf ~/spark/eventLog/*

Spark Web UI

Spark Context

- http://master:4040 (available only while a Spark application is running)

Spark Master

Spark Worker

Spark History Server
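Only the Spark Context address is given above. As a rough sketch, the other addresses can be derived from the hostnames used in this guide, assuming Spark's default ports: 8080 for the master UI, 8081 for each worker UI, and 18080 for the history server. These ports are not stated in this guide and will differ if they were overridden in the configuration. `ui_urls` and `is_listening` are hypothetical helper names.

```python
import socket

# Assumption: Spark's default web UI ports, with no overrides in
# spark-env.sh or spark-defaults.conf.
DEFAULT_UI_PORTS = {"context": 4040, "master": 8080, "worker": 8081, "history": 18080}


def ui_urls(master="master", workers=("slave01", "slave02", "slave03")):
    """Build the web UI addresses for the master, history server, and workers."""
    urls = {
        "Spark Context": f"http://{master}:{DEFAULT_UI_PORTS['context']}",
        "Spark Master": f"http://{master}:{DEFAULT_UI_PORTS['master']}",
        "Spark History Server": f"http://{master}:{DEFAULT_UI_PORTS['history']}",
    }
    for worker in workers:
        urls[f"Spark Worker ({worker})"] = f"http://{worker}:{DEFAULT_UI_PORTS['worker']}"
    return urls


def is_listening(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

After start-all.sh and start-history-server.sh have run, `is_listening("master", 8080)` is a quick way to see whether the master UI is up before opening it in a browser.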

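PySpark, Anaconda, and Jupyter Setup

- Installing PySpark, Anaconda, and Jupyter gives you a powerful environment for data analysis.

- Anyone who has done even a little machine learning or EDA in Python will readily agree.

- Python libraries also support fairly sophisticated visualization, so it is worth setting these up if you can.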

Anaconda Installation

- Install this identically on every server as well.

$ wget https://repo.anaconda.com/archive/Anaconda3-2021.05-Linux-x86_64.sh

$ bash Anaconda3-2021.05-Linux-x86_64.sh

Environment Setup

$ vim ~/.bashrc

export CONDA_HOME=~/anaconda3

export PATH=$PATH:$CONDA_HOME/bin:$CONDA_HOME/condabin

export PYSPARK_PYTHON=python3

export PYSPARK_DRIVER_PYTHON=jupyter

export PYSPARK_DRIVER_PYTHON_OPTS='notebook --allow-root'

$ source ~/.bashrc

- --allow-root: required when Jupyter is run as the Linux root account.

$ conda config --set auto_activate_base False

Jupyter Installation and Remote Access Setup

Jupyter Notebook Installation

$ pip install jupyter

$ jupyter notebook --generate-config

Environment Setup

$ vim /root/.jupyter/jupyter_notebook_config.py

c.NotebookApp.notebook_dir = '/data'

c.NotebookApp.open_browser = False

c.NotebookApp.password = '(hash generated in the Password Generation step)'

c.NotebookApp.port = 8888

c.NotebookApp.ip = '172.17.0.2'

c.NotebookApp.allow_origin = '*'

Password Generation

$ python (opens the Python prompt)

from notebook.auth import passwd

passwd()

Copy the string that is printed and paste it as the value of c.NotebookApp.password.
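Pasting the hash by hand is easy to get wrong, so this step can also be scripted. The sketch below is a hypothetical helper (`set_password_line` is not part of Jupyter) that rewrites the `c.NotebookApp.password` line in the text of jupyter_notebook_config.py. The hash itself is assumed to come from `passwd()` as shown above.

```python
import re

# Matches an existing assignment, including a commented-out default such as
# "# c.NotebookApp.password = ''", but not c.NotebookApp.password_required.
_PASSWORD_LINE = re.compile(
    r"^#?[ \t]*c\.NotebookApp\.password[ \t]*=.*$", re.MULTILINE
)


def set_password_line(config_text, hashed_password):
    """Return config_text with c.NotebookApp.password set to hashed_password."""
    new_line = f"c.NotebookApp.password = {hashed_password!r}"
    if _PASSWORD_LINE.search(config_text):
        # A function is used as the replacement so the hash is inserted literally.
        return _PASSWORD_LINE.sub(lambda _match: new_line, config_text, count=1)
    # No assignment found: append one, keeping the file newline-terminated.
    if config_text and not config_text.endswith("\n"):
        config_text += "\n"
    return config_text + new_line + "\n"
```

One way to use it is to read the config file into a string, pass it through `set_password_line` together with the value returned by `passwd()`, and write the result back to the same path.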

Running PySpark

$ ~/spark/bin/pyspark

Running this command starts Jupyter Notebook.
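Once the notebook is open, a small job is a quick way to confirm that the driver can actually run work. This is a minimal sketch, assuming the notebook was launched through bin/pyspark so that the usual `sc` (SparkContext) variable is already defined. `sum_of_squares` is a hypothetical helper name.

```python
def sum_of_squares(sc, n, num_slices=6):
    """Distribute the integers 0..n-1, square each one in a task, and add them up."""
    rdd = sc.parallelize(range(n), num_slices)
    return rdd.map(lambda x: x * x).sum()
```

In a notebook cell, call `sum_of_squares(sc, 1000)` and check that it returns without error. Whether the job runs on the cluster or in local mode depends on the master setting, which `sc.master` reports. Passing `--master spark://master:7077` to pyspark is one way to point it at the standalone master configured earlier.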