■ Axiomatic Definition of Probability

⭐ Sample Space : A collection of all sample points of a random experiment

⭐ Event : A subset of the sample space

■ Probability axioms

- P(A)≥0

- P(U)=1

- If AB=ϕ, P(A∪B)=P(A)+P(B)

⭐ Probability is assigned to an "Event"

■ Properties of probability

- P(ϕ)=0

- P(A∪B)=P(A)+P(B)−P(A∩B)

- P(Ac)=1−P(A)

- If $\{A_1, A_2, \cdots, A_n\}$ is a sequence of mutually exclusive events, $P\left(\bigcup_{i=1}^{n} A_i\right)=\sum_{i=1}^{n} P(A_i)$
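The properties above can be checked by counting on a small sample space. This is only a sketch under assumed conditions: the fair six-sided die and the events `A` and `B` are made up for illustration and are not part of the lecture.

```python
from fractions import Fraction

# Assumed toy experiment: one roll of a fair six-sided die.
U = frozenset(range(1, 7))

def prob(event):
    """P(event) when all outcomes in U are equally likely."""
    return Fraction(len(event & U), len(U))

A = frozenset({2, 4, 6})   # hypothetical event: the roll is even
B = frozenset({4, 5, 6})   # hypothetical event: the roll is at least 4

p_empty = prob(frozenset())      # P(empty set)
p_union = prob(A | B)            # P(A ∪ B)
p_complement = prob(U - A)       # P(A^c)

print(p_empty == 0)
print(p_union == prob(A) + prob(B) - prob(A & B))
print(p_complement == 1 - prob(A))
```

Using `Fraction` keeps every probability exact, so the equalities can be compared with `==` instead of a floating-point tolerance.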

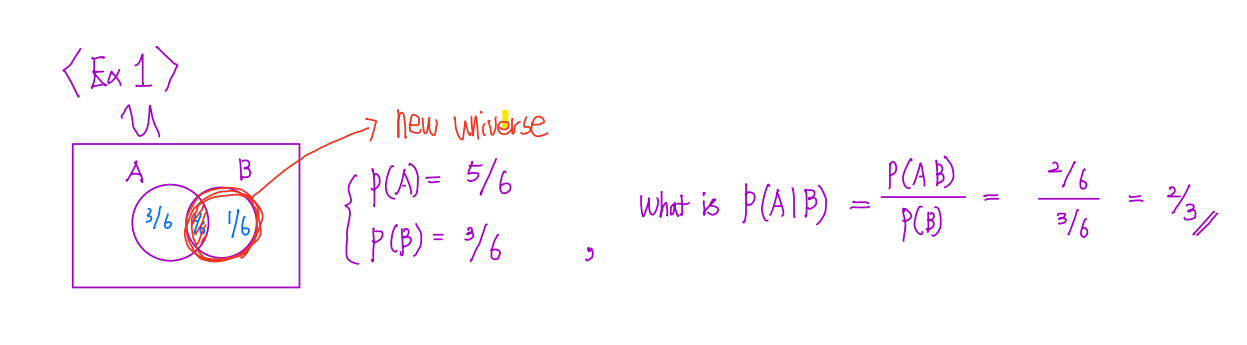

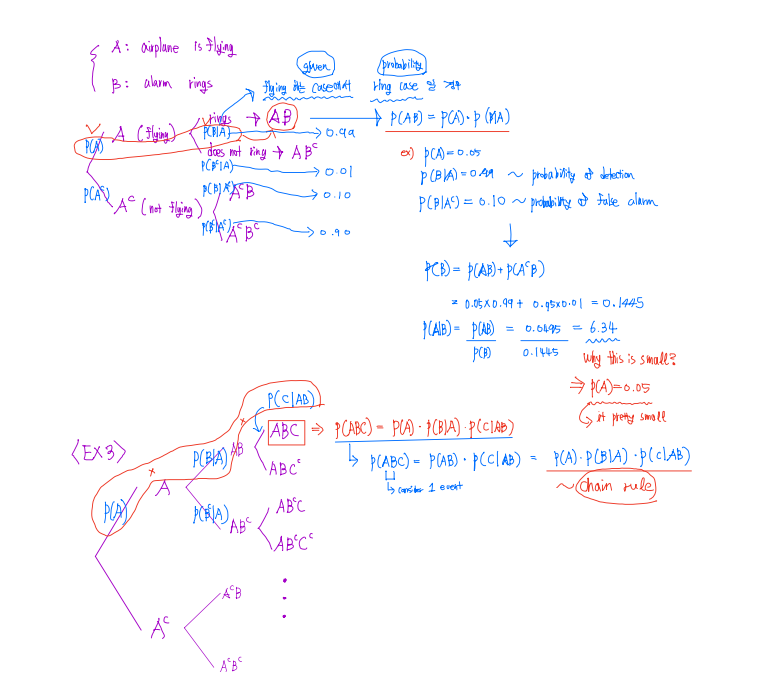

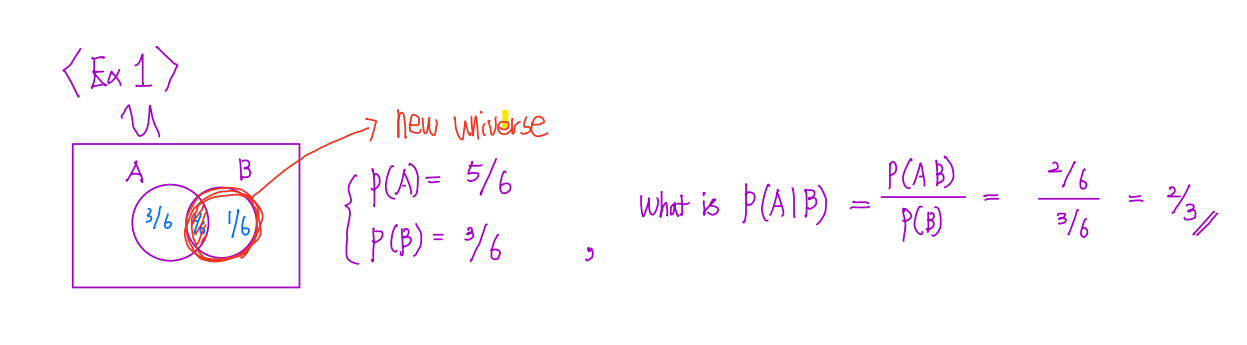

■ Conditional Probability

• Definition : The conditional probability of event A given B is defined as

$P(A \mid B)=\dfrac{P(AB)}{P(B)}=\dfrac{P(B \mid A)\times P(A)}{P(B)}$

$P(AB)=P(A \mid B)\times P(B)$ → This product form is more convenient than the equation above.

( ∵ P(B) can be 0, in which case the fraction is undefined )
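As a rough illustration of the definition, the sketch below computes P(A∣B) by counting outcomes. The two-dice experiment and the events `A` and `B` are assumptions chosen for the example, not taken from the lecture.

```python
from fractions import Fraction
from itertools import product

# Assumed toy experiment: two rolls of a fair die (36 equally likely outcomes).
U = set(product(range(1, 7), repeat=2))

def prob(event):
    return Fraction(len(event), len(U))

def cond_prob(a, b):
    """P(A|B) = P(AB) / P(B); undefined when P(B) = 0."""
    if prob(b) == 0:
        raise ValueError("P(B) = 0, so P(A|B) is undefined")
    return prob(a & b) / prob(b)

A = {w for w in U if w[0] + w[1] == 8}   # hypothetical event: the sum is 8
B = {w for w in U if w[0] >= 4}          # hypothetical event: first roll >= 4

p_a_given_b = cond_prob(A, B)
print(p_a_given_b)

# The product form P(AB) = P(A|B) * P(B) needs no division.
print(prob(A & B) == p_a_given_b * prob(B))
```

The explicit `ValueError` mirrors the remark that the ratio form breaks down when P(B) = 0.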

✏️ Exercise 1

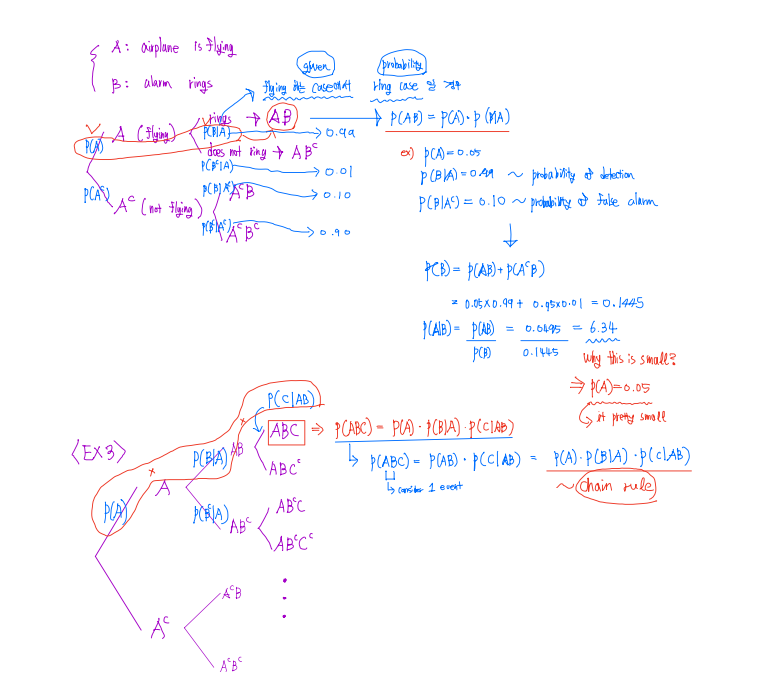

✏️ Exercise 2 & 3

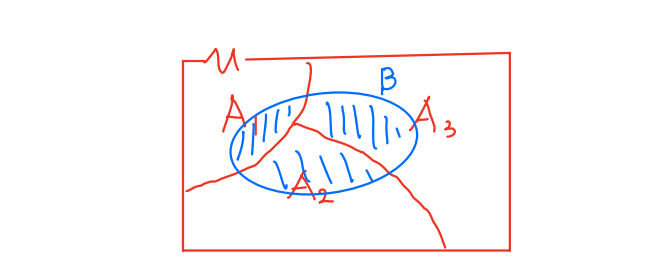

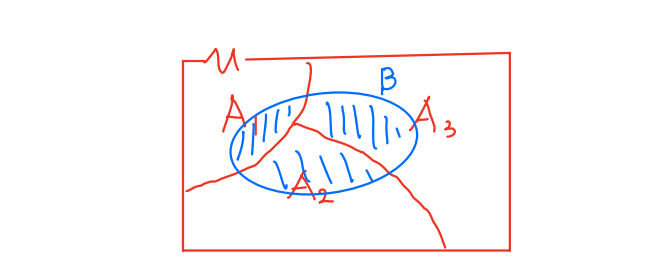

■ ⭐ Total Probability Theorem ⭐

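$\{A_1, A_2, A_3\}$ ~ partition of U

$P(B)=P(A_1B)+P(A_2B)+P(A_3B)$

$=P(A_1)\times P(B \mid A_1)+P(A_2)\times P(B \mid A_2)+P(A_3)\times P(B \mid A_3)$

$\therefore P(B)=\sum_{i=1}^{3} P(B \mid A_i)\times P(A_i)$

In general, $P(B)=\sum_{i=1}^{n} P(B \mid A_i)\times P(A_i)$ ~ Total Probability Theorem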
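The theorem translates almost directly into code. The function name `total_probability` and the numbers below are hypothetical, chosen only to show the sum over a partition.

```python
def total_probability(priors, likelihoods):
    """P(B) = sum_i P(B|A_i) * P(A_i) for a partition {A_1, ..., A_n}.

    priors[i]      -- P(A_i)
    likelihoods[i] -- P(B|A_i)
    """
    if len(priors) != len(likelihoods):
        raise ValueError("need one likelihood per partition element")
    if abs(sum(priors) - 1.0) > 1e-9:
        raise ValueError("the priors of a partition must sum to 1")
    return sum(l * p for l, p in zip(likelihoods, priors))

# Hypothetical three-way partition (values are made up for illustration).
priors = [0.5, 0.3, 0.2]
likelihoods = [0.1, 0.2, 0.5]

p_b = total_probability(priors, likelihoods)
print(p_b)
```

The check that the priors sum to 1 is a cheap guard that the A_i really cover U; it does not verify that they are mutually exclusive.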

■ Bayes' Rule

- This rule focuses on calculating the following quantity.

P(Ai∣B)=?

$P(A_i)$ ~ Prior probability ~ initial belief (the probability that $A_i$ occurs by itself, before B is taken into account)

$P(A_i \mid B)=\dfrac{P(B \mid A_i)\times P(A_i)}{P(B)}$ $\left(P(B)=\sum_{j=1}^{n} P(B \mid A_j)\times P(A_j)\right)$

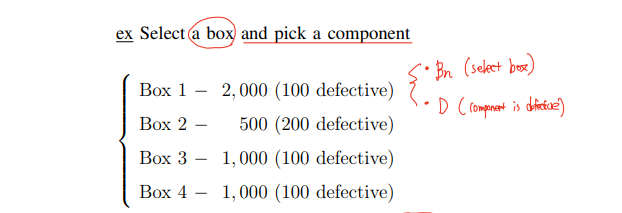

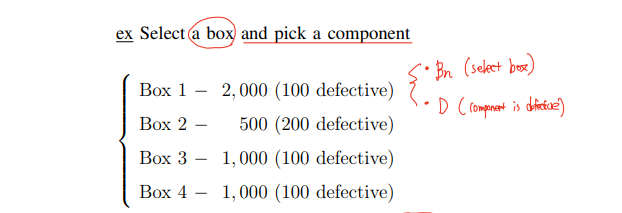

Example

(a) What is the probability that the picked one is defective?

Solution)

$P(D)=\sum_{i=1}^{4} P(D \mid B_i)\times P(B_i)$

=1/4×(0.05+0.4+0.1+0.1)

∴ 0.1625

(b) If the picked one is defective, what is the probability that it came from Box2?

Solution)

$P(B_2 \mid D)=\dfrac{P(D \mid B_2)\times P(B_2)}{P(D)}$

$=\dfrac{(0.4)\times(0.25)}{0.1625}$

∴ 0.6154
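The two answers can be reproduced with a few lines of Python. The defect rates and the 1/4 prior for each box are the values used in the solution above; the variable names are my own.

```python
from fractions import Fraction

# P(D | B_i) for boxes 1..4 and P(B_i) = 1/4, as used in the solution above.
p_d_given_box = [Fraction("0.05"), Fraction("0.4"), Fraction("0.1"), Fraction("0.1")]
p_box = [Fraction(1, 4)] * 4

# (a) Total probability theorem: P(D) = sum_i P(D|B_i) * P(B_i)
p_d = sum(l * p for l, p in zip(p_d_given_box, p_box))

# (b) Bayes' rule for every box: P(B_i|D) = P(D|B_i) * P(B_i) / P(D)
posterior = [l * p / p_d for l, p in zip(p_d_given_box, p_box)]

print(float(p_d))                       # 0.1625
print(round(float(posterior[1]), 4))    # 0.6154  (Box2)
```

Computing the whole posterior list at once also gives a sanity check: the four values must sum to 1.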

■ Independence

- Two events A and B are said to be independent iff

→ Whether or not event B occurs, the probability of A does not change

P(A∣B)=P(A)

⇔P(B∣A)=P(B)

⇔P(AB)=P(A)×P(B)

🚨 Question

If P(A)≠0 and P(B)≠0, can these two events be both independent and mutually exclusive?

Answer : NO!!

Independence requires P(AB)=P(A)×P(B)

In this case, mutual exclusivity gives P(AB)=0, while P(A)×P(B)≠0

This does not make sense...! Because according to independence,

it should be P(AB)=P(A)×P(B)
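A concrete case makes the contradiction visible. The die and the two events below are assumptions picked for illustration: both events have nonzero probability and share no outcomes.

```python
from fractions import Fraction

# Assumed toy experiment: one roll of a fair six-sided die.
U = frozenset(range(1, 7))

def prob(event):
    return Fraction(len(event), len(U))

A = frozenset({1, 2})   # hypothetical event with P(A) = 1/3
B = frozenset({3, 4})   # hypothetical event with P(B) = 1/3, disjoint from A

p_joint = prob(A & B)            # P(AB)
p_product = prob(A) * prob(B)    # P(A) * P(B)

mutually_exclusive = len(A & B) == 0
independent = p_joint == p_product

print(mutually_exclusive, independent)
```

Knowing that A occurred tells us B certainly did not, which is about as dependent as two events can be.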

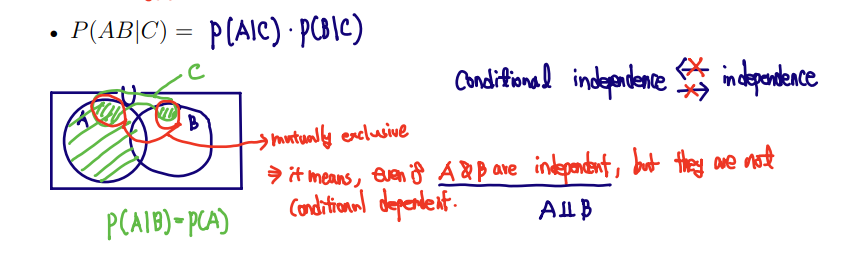

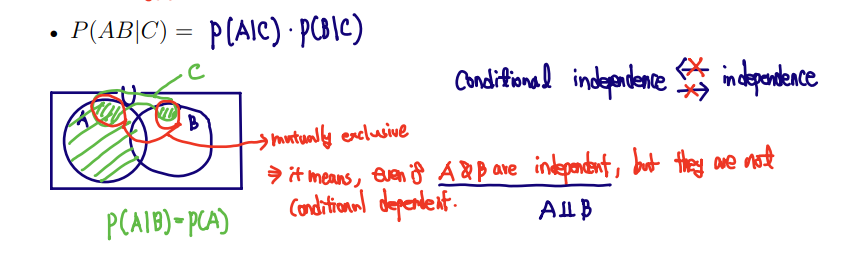

■ Conditional Independence

✏️ Example

- Consider two unfair coins A and B

- Choose a coin and toss it twice

- P(head∣A)=0.9 and P(head∣B)=0.1

- H1= { First toss is head } and H2= { Second toss is head }

(a) Once we know it is coin A, are H1 and H2 independent ?

📋Solution

P(H1H2∣A)=P(H1∣A)×P(H2∣A)

⇔0.9×0.9=0.9×0.9

∴ H1 and H2 are conditionally independent given A

(b) If we don't know which coin it is, are H1 and H2 independent ?

📋Solution

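Check whether P(H1H2)=P(H1)×P(H2) (each coin is chosen with probability 1/2)

$P(H_1)=P(H_1 \mid A)\times P(A)+P(H_1 \mid B)\times P(B)$

$=\frac{1}{2}\times(0.9+0.1)$

$=\frac{1}{2}$

By the same process, $P(H_2)=\frac{1}{2}$

However, $P(H_1H_2)=P(H_1H_2 \mid A)\times P(A)+P(H_1H_2 \mid B)\times P(B)$

$=(0.9\times 0.9\times 0.5)+(0.1\times 0.1\times 0.5)=0.41\neq P(H_1)\times P(H_2)=0.25$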

∴H1 and H2 are dependent
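The arithmetic in (b) can be double-checked as follows. The head probabilities come from the example; the 1/2 chance of picking each coin matches the 0.5 used in the solution, and the dictionary names are my own.

```python
from fractions import Fraction

p_head = {"A": Fraction(9, 10), "B": Fraction(1, 10)}   # P(head | coin)
p_coin = {"A": Fraction(1, 2), "B": Fraction(1, 2)}     # P(coin), as in the solution

# Total probability over the choice of coin.
p_h1 = sum(p_head[c] * p_coin[c] for c in p_coin)
p_h2 = p_h1  # the second toss has the same marginal distribution

# Given the coin, the tosses are independent, so P(H1H2 | coin) = P(head | coin)^2.
p_h1h2 = sum(p_head[c] ** 2 * p_coin[c] for c in p_coin)

print(float(p_h1h2), float(p_h1 * p_h2))
print(p_h1h2 == p_h1 * p_h2)
```

Intuitively, a head on the first toss is evidence that coin A was picked, which raises the chance of a head on the second toss.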

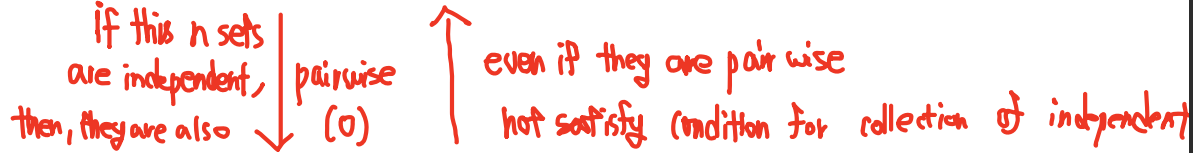

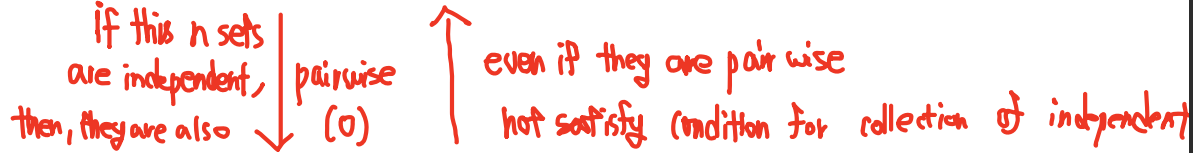

■ Independence of Collection of Events

- Events A1,A2,⋯,An are said to be independent iff

for any set of distinct indices $I\subset\{1,2,\cdots,n\}$

$P\left(\bigcap_{i\in I} A_i\right)=\prod_{i\in I} P(A_i)$

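Is the product rule for one index set alone (e.g. all n events) a necessary and sufficient condition? => NO, it is only a necessary condition.

<cf> Every subset of the events must have its joint probability equal to the product of the individual probabilities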

■ Pairwise Independence

- Events A1,A2,⋯,An are said to be pairwise independent iff

$P(A_iA_j)=P(A_i)\times P(A_j),\ \forall i\neq j$ (Every pair must satisfy this)

✏️ Example

- A={First toss is head}→P(A)=1/2

- B={Second toss is head}→P(B)=1/2

- C={First and second toss give the same result}→P(C)=1/2

What we want to do...

1. Determine whether the 3 events are independent.

2. Determine whether the 3 events are pairwise independent.

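- $P(AB)=P(BC)=P(CA)=\frac{1}{4}$, which equals the product $\frac{1}{2}\times\frac{1}{2}$ for every pair

- $P(ABC)=\frac{1}{4}\neq P(A)\times P(B)\times P(C)=\frac{1}{8}$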

∴ Pairwise independence does not imply independence!

But! Independence does imply pairwise independence!
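The example can be verified by listing the four outcomes of two fair tosses. This is a small sketch; the set-based encoding of events is my own choice.

```python
from fractions import Fraction
from itertools import product

# Two tosses of a fair coin: HH, HT, TH, TT, all equally likely.
U = set(product("HT", repeat=2))

def prob(event):
    return Fraction(len(event), len(U))

A = {w for w in U if w[0] == "H"}      # first toss is head
B = {w for w in U if w[1] == "H"}      # second toss is head
C = {w for w in U if w[0] == w[1]}     # both tosses give the same result

# Pairwise independence: every pair must satisfy the product rule.
pairwise = all(prob(x & y) == prob(x) * prob(y)
               for x, y in [(A, B), (B, C), (C, A)])

# Full independence additionally needs the rule for the triple.
triple = prob(A & B & C) == prob(A) * prob(B) * prob(C)

print(pairwise, triple)
```

Any two of the events determine the third, which is why the triple product rule fails even though each pair passes.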

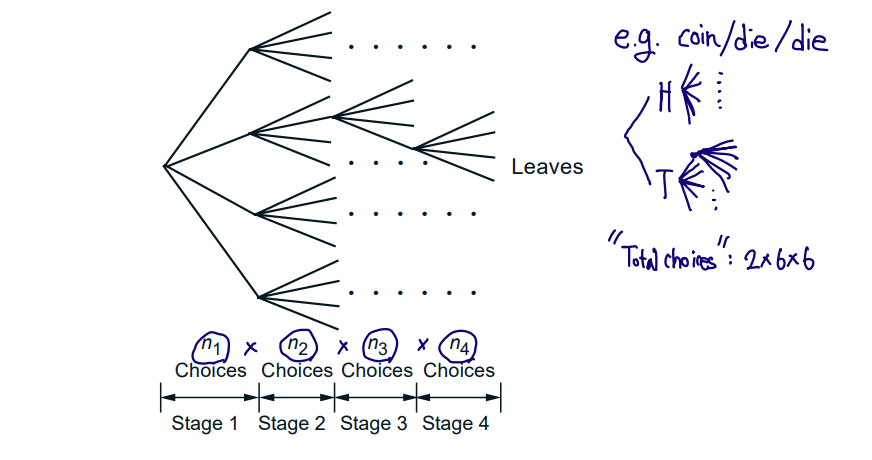

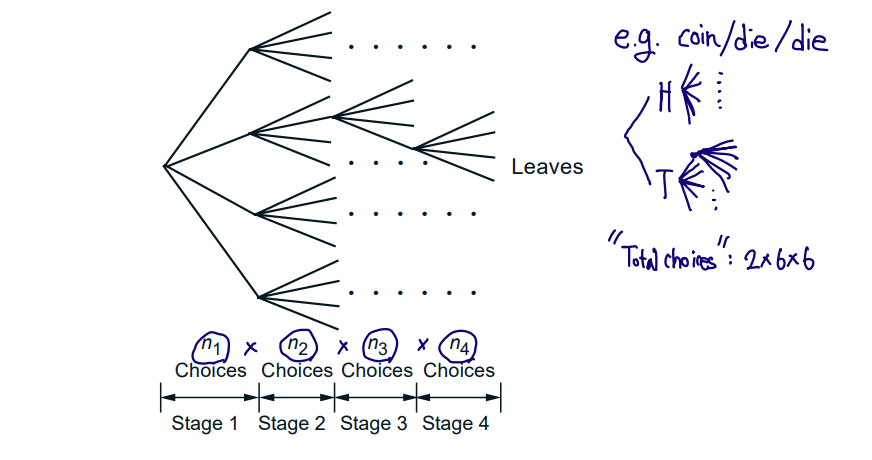

■ Counting Principle

- Experiment consisting of r stages

- There are $n_i$ choices at stage i

- Number of choices = $n_1\times n_2\times\cdots\times n_r$

✏️ Example

1) Number of license plates (e.g. HGU0387)

📋Solution

- Number of letters: 26

- Number of digits: 10

∴ 26×26×26×10×10×10×10 = 175,760,000

2) Number of subsets of an n-element set

📋Solution

[ EX ]

{1,2,3,4}→{1},{1,2,3},⋯

Binary Decision: 2×2×2×2=$2^4$

We can see the pattern when we use 'Binary Decision'

∴ n-element → $2^n$
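Both counting examples can be confirmed in code. The helper `all_subsets` is a hypothetical name; it builds each subset from one include/exclude decision per element, which is the 'Binary Decision' idea.

```python
from itertools import product

# 1) License plates: 3 letter stages followed by 4 digit stages.
plates = 26 ** 3 * 10 ** 4
print(plates)

# 2) Subsets: one binary decision (keep or drop) per element.
def all_subsets(items):
    items = list(items)
    subsets = []
    for decisions in product([False, True], repeat=len(items)):
        subsets.append({x for x, keep in zip(items, decisions) if keep})
    return subsets

subsets = all_subsets([1, 2, 3, 4])
print(len(subsets))
```

Enumerating is only practical for small n, since the number of subsets doubles with every extra element.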

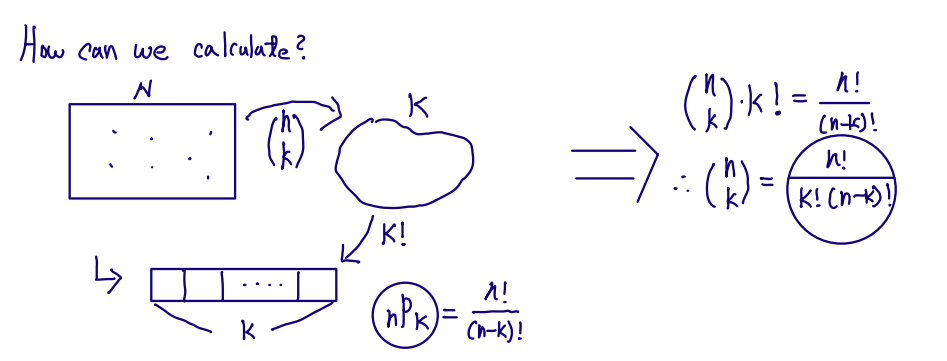

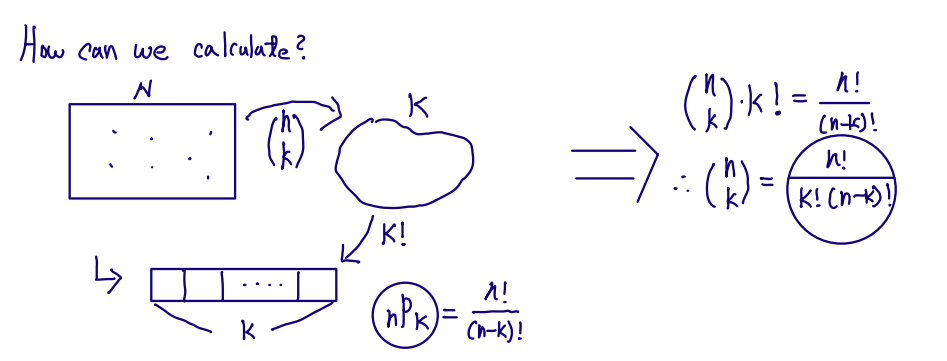

■ Permutations

- k-permutations: Number of ways of picking k out of n objects and arranging them in a sequence

(1) ${}_nC_k\times k!=\dfrac{n\times(n-1)\times\cdots\times(n-k+1)}{k!}\times k!$

(2) Choose k and arrange in {1,2,3,⋯,n} → $\dfrac{n!}{(n-k)!}={}_nP_k$
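The two routes (1) and (2) should give the same count. Here is a quick check with assumed values n = 5 and k = 3, using `math.comb` and `math.perm` (available from Python 3.8).

```python
import math
from itertools import permutations

n, k = 5, 3   # hypothetical values for illustration

# (1) choose k objects, then order them: nCk * k!
by_choose_then_order = math.comb(n, k) * math.factorial(k)

# (2) direct formula: n! / (n - k)!
by_formula = math.factorial(n) // math.factorial(n - k)

# Brute force: list every ordered arrangement of k items out of {1, ..., n}.
by_enumeration = sum(1 for _ in permutations(range(1, n + 1), k))

print(by_choose_then_order, by_formula, by_enumeration, math.perm(n, k))
```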

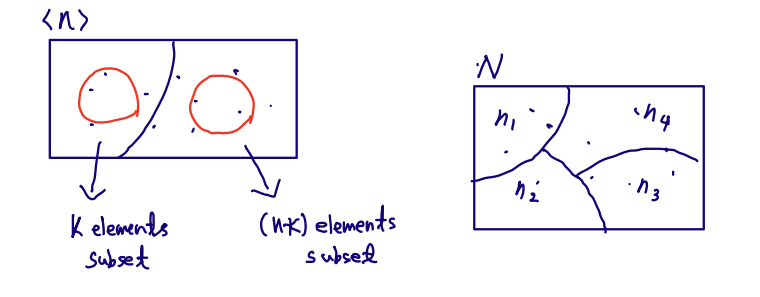

■ Combinations

- Number of k-element subsets of a given n-element set, with no ordering of the selected elements

- $\binom{n}{k}={}_nC_k$ : "n choose k"

<Reference>

"Binomial coefficients"

Ex) $\sum_{k=0}^{n}\binom{n}{k}=\binom{n}{0}+\binom{n}{1}+\cdots+\binom{n}{n}=2^n$ $(={}_nC_0+\cdots+{}_nC_n)$

This means that for each element we make a true-or-false choice: include it or not.

Therefore, each element has 2 choices, and the total number of cases is $2^n$
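The binomial-coefficient identity is easy to test numerically. The range of n below is an arbitrary choice for the sketch.

```python
import math
from itertools import combinations

def sum_of_binomials(n):
    """nC0 + nC1 + ... + nCn."""
    return sum(math.comb(n, k) for k in range(n + 1))

# Compare against 2^n for a few small n (range chosen arbitrarily).
checks = [sum_of_binomials(n) == 2 ** n for n in range(0, 11)]
print(all(checks))

# Cross-check math.comb against explicit enumeration of k-element subsets.
n, k = 6, 2
count_by_listing = sum(1 for _ in combinations(range(n), k))
print(count_by_listing == math.comb(n, k))
```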

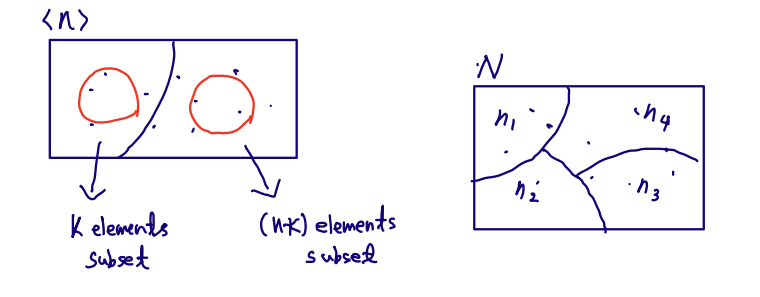

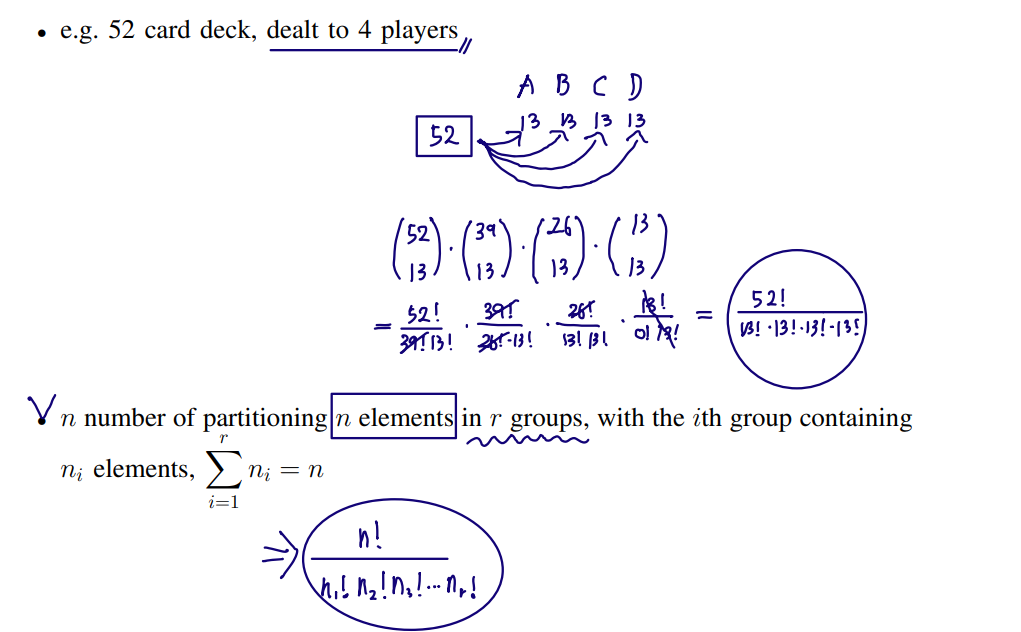

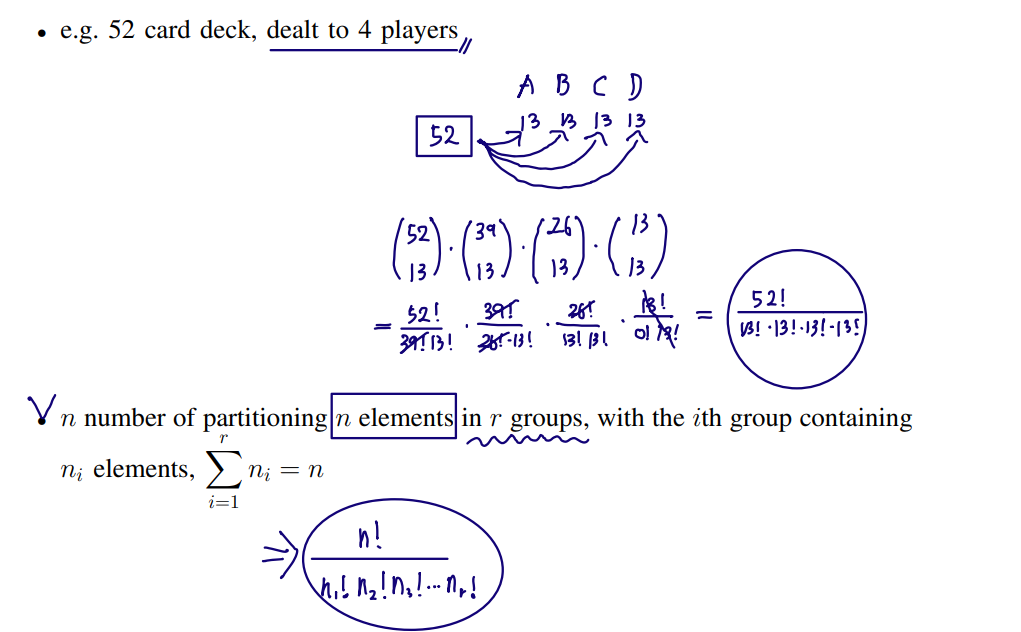

■ Partitions

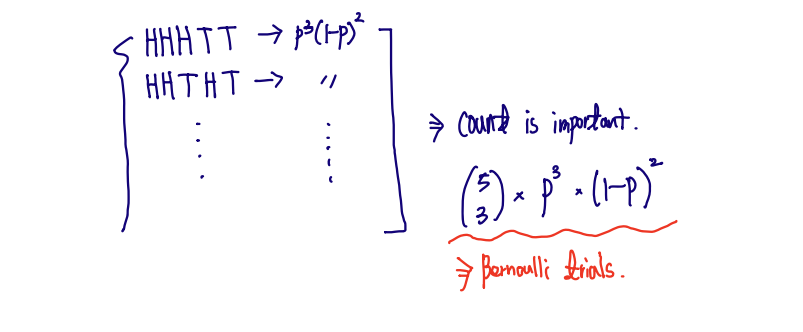

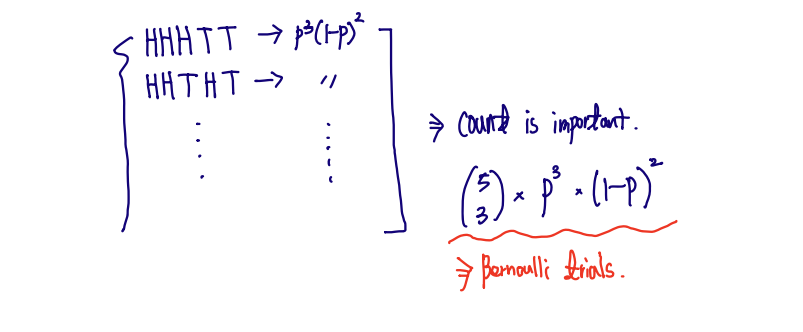

■ Bernoulli Trials

Let A be an event in a random experiment with P(A)=p and P(Ac)=1−p

Repeating this experiment n times, the probability that A occurs k times in any order is calculated by

$P_n(k)=\binom{n}{k}\times p^k\times(1-p)^{n-k}$ ~ "Binomial Probability"

e.g. We toss 5 coins independently

- P(H)=p, P(T)=1−p

- $P(HHHTT)=p\times p\times p\times(1-p)^2$

- P( 3 heads and 2 tails in any order ) = ???
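The last question can be explored by applying the binomial formula and comparing it with a brute-force sum over all sequences. The value p = 0.5 is an assumption for illustration; the notes leave p general.

```python
import math
from itertools import product

def binomial_pmf(n, k, p):
    """P_n(k) = nCk * p^k * (1 - p)^(n - k)."""
    return math.comb(n, k) * p ** k * (1 - p) ** (n - k)

def by_enumeration(n, k, p):
    """Add up the probability of every H/T sequence with exactly k heads."""
    total = 0.0
    for seq in product("HT", repeat=n):
        if seq.count("H") == k:
            total += p ** k * (1 - p) ** (n - k)
    return total

p = 0.5   # assumed fair coin, for illustration only
print(binomial_pmf(5, 3, p))
print(by_enumeration(5, 3, p))
print(math.comb(5, 3))   # number of orderings with 3 heads and 2 tails
```

Every ordering with 3 heads has the same probability as HHHTT, so the answer is that single-sequence probability multiplied by the number of orderings.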

■ Generalization

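Let $\{A_1, A_2, \cdots, A_r\}$ be a partition of U. Let $P(A_i)=p_i$, $\sum_{i=1}^{r} p_i=1$, and $\sum_{i=1}^{r} k_i=n$.

Then,

$P_n(k_1,k_2,\cdots,k_r)=\dfrac{n!}{k_1!\times k_2!\times\cdots\times k_r!}\times p_1^{k_1}\times p_2^{k_2}\times\cdots\times p_r^{k_r}$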
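A minimal sketch of the generalized formula follows. The function name `multinomial_pmf` and the sample numbers are hypothetical, and with r = 2 it should reduce to the binomial probability.

```python
import math

def multinomial_pmf(counts, probs):
    """P_n(k_1, ..., k_r) = n! / (k_1! ... k_r!) * p_1^k_1 * ... * p_r^k_r."""
    if len(counts) != len(probs):
        raise ValueError("need one probability per count")
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities of a partition must sum to 1")
    n = sum(counts)
    coeff = math.factorial(n)
    for k in counts:
        coeff //= math.factorial(k)   # stays an integer at every step
    result = float(coeff)
    for k, p in zip(counts, probs):
        result *= p ** k
    return result

# Hypothetical case: n = 4 trials, three outcomes with made-up probabilities.
p_example = multinomial_pmf([2, 1, 1], [0.5, 0.3, 0.2])
print(p_example)

# With r = 2 this is the binomial case: 3 heads and 2 tails of a fair coin.
p_binomial_case = multinomial_pmf([3, 2], [0.5, 0.5])
print(p_binomial_case)
```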

This post is a summary of my class notes from Professor 이준용's Random Variables (확률변수론) course at HGU, 2023-2 semester.