DATA SET

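import tensorflow as tf
from tensorflow import keras
from sklearn.model_selection import train_test_split

# Fix the random seeds and force deterministic ops so runs are reproducible
tf.keras.utils.set_random_seed(42)
tf.config.experimental.enable_op_determinism()

# Load Fashion MNIST: 28x28 grayscale images with integer labels 0-9
(train_input, train_target), (test_input, test_target) = \
    keras.datasets.fashion_mnist.load_data()

# Add a channel axis (depth 1) and scale pixel values from 0-255 to 0-1
train_scaled = train_input.reshape(-1, 28, 28, 1) / 255.0

# Hold out 20% of the training data as a validation set
train_scaled, val_scaled, train_target, val_target = train_test_split(
    train_scaled, train_target, test_size=0.2, random_state=42)

📌 Convolutional layers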

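📍 Layer 1

- 32 filters are applied to each sample
- kernel_size=3 : each filter is 3x3x1 (the filter depth matches the input depth of 1)
- padding='same' : 28x28x32 feature map (same height and width as the input)
- MaxPooling2D(2) : 14x14x32 feature map (height and width are halved)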
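The shape arithmetic in the list above can be checked by hand. What follows is a minimal sketch in plain Python, not Keras code. The helper names `conv_same_size` and `pooled_size` are made up for illustration, and it assumes the Keras defaults of stride 1 for the convolution and non-overlapping 2x2 pooling.

```python
import math

def conv_same_size(size, stride=1):
    """Output height/width of a convolution with 'same' padding (hypothetical helper)."""
    return math.ceil(size / stride)

def pooled_size(size, pool=2):
    """Output height/width of non-overlapping pooling without padding (hypothetical helper)."""
    return size // pool

height = width = 28
filters = 32

# Conv2D(32, kernel_size=3, padding='same'): height and width stay the same,
# and the depth becomes the number of filters
conv_shape = (conv_same_size(height), conv_same_size(width), filters)

# MaxPooling2D(2): height and width are halved, depth is unchanged
pooled_shape = (pooled_size(conv_shape[0]), pooled_size(conv_shape[1]), filters)

print(conv_shape)
print(pooled_shape)
```

The depth of the output depends only on the number of filters, while the height and width depend only on the padding, stride, and pooling size.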

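model = keras.Sequential()
# 32 filters of size 3x3; 'same' padding keeps the 28x28 height and width
model.add(keras.layers.Conv2D(32, kernel_size=3, activation='relu',
                              padding='same', input_shape=(28,28,1)))
# 2x2 max pooling halves the height and width: 28x28x32 -> 14x14x32
model.add(keras.layers.MaxPooling2D(2))

-> The second convolutional layer is applied to this 14x14x32 feature map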

📍 Layer 2

- kernel_size=(3,3) : 64 filters of size 3x3x32 (the number of channels increases from 32 to 64)
- padding='same' : 14x14x64 feature map
- MaxPooling2D(2) : 7x7x64 feature map
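To see why 64 filters of size 3x3x32 turn a 14x14x32 feature map into 14x14x64, the layer can be written out with NumPy loops. This is only an illustrative sketch: the input and the weights are random stand-ins, not values from the trained model, and Keras computes the same result far more efficiently.

```python
import numpy as np

rng = np.random.default_rng(0)
feature_map = rng.normal(size=(14, 14, 32))  # stand-in for the first pooling output
kernels = rng.normal(size=(3, 3, 32, 64))    # 64 filters, each 3x3x32 (random, illustrative)
bias = np.zeros(64)

# 'same' padding for a 3x3 kernel: one row/column of zeros on every side
padded = np.pad(feature_map, ((1, 1), (1, 1), (0, 0)))

output = np.empty((14, 14, 64))
for i in range(14):
    for j in range(14):
        patch = padded[i:i + 3, j:j + 3, :]  # shape (3, 3, 32)
        # Each filter multiplies the whole 3x3x32 patch and sums it to one number,
        # so 64 filters give 64 output channels at this position
        output[i, j, :] = np.tensordot(patch, kernels, axes=3) + bias

output = np.maximum(output, 0)  # relu activation

# 2x2 max pooling: group rows and columns in pairs and keep the largest value
pooled = output.reshape(7, 2, 7, 2, 64).max(axis=(1, 3))

print(output.shape)
print(pooled.shape)
```

Each filter spans the full depth of its input, which is why the filter depth (32) is fixed by the previous layer and only the filter count (64) is a free choice.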

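# 64 filters of size 3x3; each filter spans all 32 input channels
model.add(keras.layers.Conv2D(64, kernel_size=(3,3), activation='relu',
                              padding='same'))
# 2x2 max pooling: 14x14x64 -> 7x7x64
model.add(keras.layers.MaxPooling2D(2))

-> Dense layers are needed to turn the feature map into 10 class probabilities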

- Flatten() : 3D -> 1D, giving 7 x 7 x 64 = 3136 input values
- Hidden layer : fully connects the 3136 inputs to 100 neurons (relu activation)
- Output layer : 100 neurons -> softmax -> 10 probabilities
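The two numbers in the list above, 3136 flattened inputs and 10 probabilities, can be illustrated in plain Python. This is a sketch only: the `softmax` helper is written by hand here and the `scores` values are made up, not outputs of the model.

```python
import math

# Flatten: the 7x7x64 feature map becomes one long vector
flattened_size = 7 * 7 * 64

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1 (hand-written helper)."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

# Made-up raw outputs of the 10 output neurons
scores = [0.1, -1.2, 0.3, 0.0, -0.5, 0.2, -0.3, 0.4, 6.0, -0.8]
probs = softmax(scores)

print(flattened_size)
print(round(sum(probs), 6))
print(probs.index(max(probs)))
```

Softmax keeps the ordering of the scores, so the class with the largest raw output is also the class with the highest probability.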

model.add(keras.layers.Flatten())

model.add(keras.layers.Dense(100, activation='relu'))

model.add(keras.layers.Dropout(0.4))

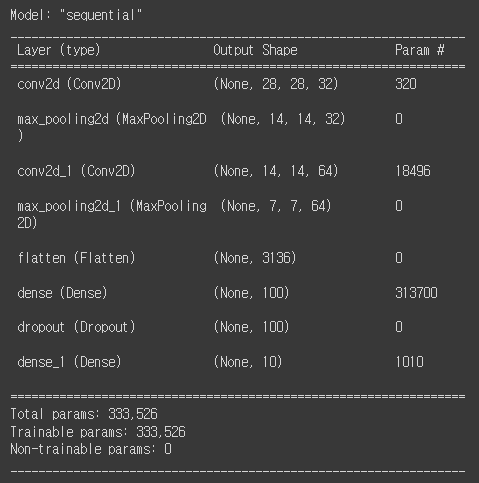

model.add(keras.layers.Dense(10, activation='softmax'))📍 모델 요약

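- param = the number of weights in each layer
- The convolutional layers have far fewer weights than the dense (fully connected) layers

-> Convolutional layers capture image features effectively with only a small number of weights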
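The gap between the two kinds of layer can be worked out from the layer sizes used in this model. The sketch below uses two hand-written helpers, `conv2d_params` and `dense_params` (names made up for illustration), and counts one bias per filter or unit in addition to the weights.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    """Weights of all filters plus one bias per filter."""
    return kernel_h * kernel_w * in_channels * filters + filters

def dense_params(inputs, units):
    """One weight per input-unit pair plus one bias per unit."""
    return inputs * units + units

conv1 = conv2d_params(3, 3, 1, 32)       # first Conv2D layer
conv2 = conv2d_params(3, 3, 32, 64)      # second Conv2D layer
dense1 = dense_params(7 * 7 * 64, 100)   # hidden Dense layer after Flatten
dense2 = dense_params(100, 10)           # output Dense layer

conv_total = conv1 + conv2
dense_total = dense1 + dense2

print(conv1, conv2, dense1, dense2)
print(conv_total, dense_total, conv_total + dense_total)
```

Pooling, Flatten, and Dropout layers have no weights, so these four layers account for every parameter in the model. The hidden dense layer alone holds most of them.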

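📍 Compiling & training the model

- Callbacks : ModelCheckpoint saves the best model and EarlyStopping halts training once the validation loss stops improving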

model.compile(optimizer='adam', loss='sparse_categorical_crossentropy',

metrics='accuracy')

checkpoint_cb = keras.callbacks.ModelCheckpoint('best-cnn-model.h5',

save_best_only=True)

early_stopping_cb = keras.callbacks.EarlyStopping(patience=2,

restore_best_weights=True)

history = model.fit(train_scaled, train_target, epochs=20,

validation_data=(val_scaled, val_target),

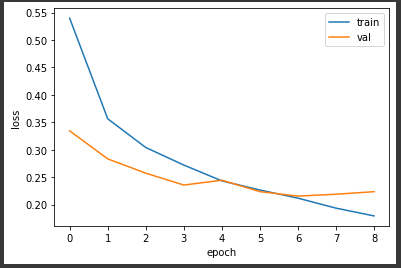

callbacks=[checkpoint_cb, early_stopping_cb])-> 7번째 epoch에서 조기종료
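The patience rule behind early stopping can be sketched in a few lines of plain Python. This is a simplified illustration, not the Keras implementation: the function `early_stopping_epoch` is hypothetical, and the validation losses are made-up numbers chosen so that training stops at epoch 7, not values from the actual run.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return (stop_epoch, best_epoch), both counted from 1."""
    best_loss = float('inf')
    best_epoch = 0
    wait = 0  # epochs in a row without improvement
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    # Never ran out of patience: trained for every epoch
    return len(val_losses), best_epoch

# Made-up validation losses: improving until epoch 5, then getting worse
val_losses = [0.40, 0.32, 0.28, 0.25, 0.22, 0.23, 0.24, 0.26]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=2)

print(stop_epoch, best_epoch)
```

With patience=2, stopping at epoch 7 means the best validation loss was reached two epochs earlier. Those are the weights that restore_best_weights=True puts back into the model.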

📍 Evaluation & prediction

- evaluate() : returns [loss, accuracy]

model.evaluate(val_scaled, val_target)
[0.2154655158519745, 0.922166645526886]

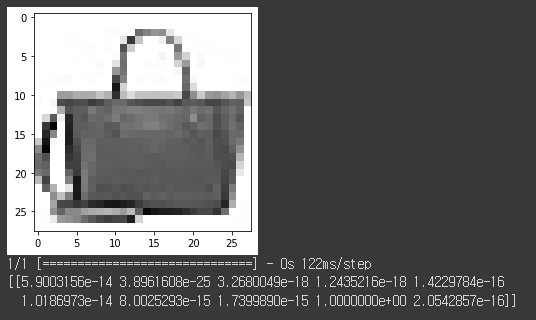

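- Predict on a single image (a bag) of shape 1x28x28x1

import matplotlib.pyplot as plt

plt.imshow(val_scaled[0].reshape(28, 28), cmap='gray_r')
plt.show()

# Slice with 0:1 (not [0]) so the batch dimension is kept: shape (1, 28, 28, 1)
preds = model.predict(val_scaled[0:1])
print(preds)

-> Prints an array of 10 probabilities
: only the 9th element (index 8) is close to 1 and the rest are close to 0 = Bag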
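Mapping the position of the largest probability back to a label makes the prediction easier to read. The sketch below uses the standard Fashion MNIST label order. The `preds` row is a made-up stand-in for the model output, not the values actually printed.

```python
# Fashion MNIST labels in index order 0-9
class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']

# Made-up prediction for one image: one row of 10 probabilities
preds = [[1e-12, 2e-15, 3e-14, 1e-13, 5e-14, 2e-11, 4e-13, 1e-14, 0.99999, 3e-13]]

# Index of the largest probability in the first (and only) row
predicted_index = max(range(len(preds[0])), key=lambda i: preds[0][i])

print(predicted_index)
print(class_names[predicted_index])
```

When `preds` is the NumPy array returned by model.predict(), `np.argmax(preds)` gives the same index for a single-image batch.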

- Test set score

# Apply the same reshape and scaling used for the training data
test_scaled = test_input.reshape(-1, 28, 28, 1) / 255.0
model.evaluate(test_scaled, test_target)
[0.23708684742450714, 0.9139000177383423]