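📌 IMDB Movie Review Data Analysis

- Sentiment analysis

- Positive/negative text dataset: 25,000 training reviews and 25,000 test reviews

- Binary classification

📍 Loading the Data

- Use only the 500 most frequent words

- Text -> integers (each word is encoded as an integer index)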

from tensorflow.keras.datasets import imdb

(train_input, train_target), (test_input, test_target) = imdb.load_data(

num_words=500)

print(train_input.shape, test_input.shape)

print(train_input[0])

print(train_target[:20])

(25000,) (25000,)

[1, 14, 22, 16, 43, 2, 2, 2, 2, 65, 458, ...

-> '1': marks the start of a sample // '2': a word that is not among the 500 most frequent words

[1 0 0 1 0 0 1 0 1 0 1 0 0 0 0 0 1 1 0 1] // 0 = negative, 1 = positive
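The integer encoding shown above can be sketched in a few lines. This is a toy re-implementation of the convention used by `imdb.load_data` (start marker 1, out-of-vocabulary marker 2, frequency rank shifted by 3), not Keras's own code. The function name `encode_review` and the ranks in `toy_rank` are assumptions made up for illustration.

```python
def encode_review(words, word_rank, num_words=500,
                  start_char=1, oov_char=2, index_from=3):
    """Toy version of the integer-encoding convention (not Keras's own code)."""
    encoded = [start_char]                 # every sample starts with 1
    for word in words:
        rank = word_rank.get(word)         # rank 1 = most frequent word
        index = None if rank is None else rank + index_from
        if index is None or index >= num_words:
            encoded.append(oov_char)       # outside the vocabulary -> 2
        else:
            encoded.append(index)
    return encoded


# Hypothetical frequency ranks, chosen only for illustration.
toy_rank = {"this": 11, "film": 19, "was": 13, "just": 40, "brilliant": 527}
encoded = encode_review(["this", "film", "was", "just", "brilliant"], toy_rank)
print(encoded)  # [1, 14, 22, 16, 43, 2]
```

With `num_words=500`, any word whose shifted index is 500 or more collapses to 2, which is why the first sample contains several consecutive 2s.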

📍 Data Set

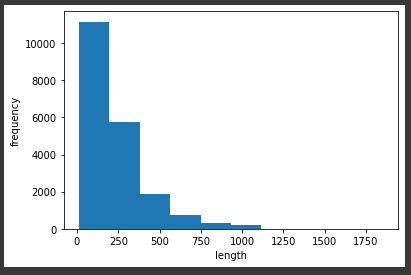

- Sample lengths (word counts) vary widely

from sklearn.model_selection import train_test_split

import numpy as np

import matplotlib.pyplot as plt

train_input, val_input, train_target, val_target = train_test_split(

train_input, train_target, test_size=0.2, random_state=42

)

lengths = np.array([len(x) for x in train_input])

print(np.mean(lengths), np.median(lengths))

plt.hist(lengths)

plt.xlabel('length')

plt.ylabel('frequency')

plt.show()

237.9088125 179.0 // mean > median, so the length distribution is skewed toward a few very long reviews

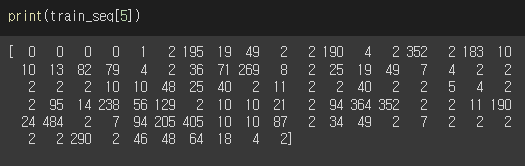
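A small sketch of why the mean ends up above the median: a handful of very long reviews pull the mean up while the median barely moves. The lengths in `toy_lengths` are made-up values, not taken from the dataset.

```python
from statistics import mean, median

# Hypothetical review lengths: mostly short, with one very long outlier.
toy_lengths = [50, 120, 150, 179, 200, 260, 1800]

avg = mean(toy_lengths)
mid = median(toy_lengths)
print(avg, mid)

# Dropping the outlier moves the mean a lot but the median only slightly.
avg_without = mean(toy_lengths[:-1])
mid_without = median(toy_lengths[:-1])
print(avg_without, mid_without)
```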

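📍 Sequence Padding

- Fix the sequence length: longer reviews are truncated and shorter ones are padded with 0
(by default, pad_sequences truncates and pads at the front of the sequence)

- Tokens = number of words = timesteps = 100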

from tensorflow.keras.preprocessing.sequence import pad_sequences

train_seq = pad_sequences(train_input, maxlen=100)

val_seq = pad_sequences(val_input, maxlen=100)

print(train_seq.shape)

(20000, 100) // samples, tokens
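To see what "truncate or pad at the front" means, here is a minimal pure-Python sketch that mimics the default behaviour of `pad_sequences` (`padding='pre'`, `truncating='pre'`). The helper name `pad_pre` is hypothetical and it handles a single sequence only.

```python
def pad_pre(seq, maxlen, value=0):
    """Keep the last `maxlen` tokens and left-pad with `value` if too short."""
    kept = list(seq)[-maxlen:]                       # drop tokens from the front
    return [value] * (maxlen - len(kept)) + kept     # pad at the front


short = pad_pre([5, 6, 7], maxlen=5)
long = pad_pre([1, 2, 3, 4, 5, 6, 7], maxlen=5)
print(short)  # [0, 0, 5, 6, 7]
print(long)   # [3, 4, 5, 6, 7]
```

Keeping the end of the review is a reasonable default for a recurrent model, since the last timesteps have the most direct influence on the final hidden state.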

📌 Recurrent Neural Network Model

📍 Building the Model

from tensorflow import keras

model = keras.Sequential()

model.add(keras.layers.SimpleRNN(8, input_shape=(100, 500)))

model.add(keras.layers.Dense(1, activation='sigmoid'))

- One-hot encoding: each token becomes a 500-element vector in which exactly one element is 1 and the rest are 0

- train_seq of shape 20000x100 -> 20000x100x500
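The one-hot step described above can be sketched without any library. The helper names `one_hot` and `one_hot_sequence` and the short `toy_seq` are assumptions for illustration. In the notes themselves this is done by `keras.utils.to_categorical`.

```python
def one_hot(index, num_classes=500):
    """Return a vector of length `num_classes` with a single 1.0 at `index`."""
    vec = [0.0] * num_classes
    vec[index] = 1.0
    return vec


def one_hot_sequence(seq, num_classes=500):
    """Turn a sequence of token indices into a list of one-hot vectors."""
    return [one_hot(i, num_classes) for i in seq]


toy_seq = [0, 0, 1, 10, 499]           # hypothetical padded sequence
encoded_seq = one_hot_sequence(toy_seq)
print(len(encoded_seq), len(encoded_seq[0]))  # 5 500
print(encoded_seq[3][:12])             # the single 1.0 sits at position 10
print(sum(encoded_seq[3]))             # 1.0
```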

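# fix the one-hot width at 500 so both arrays match the model's input shape

train_oh = keras.utils.to_categorical(train_seq, num_classes=500)

val_oh = keras.utils.to_categorical(val_seq, num_classes=500)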

print(train_oh.shape)

print(train_oh[0][0][:12])

print(np.sum(train_oh[0][0]))

(20000, 100, 500)

[0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]

1.0 // exactly one element is 1

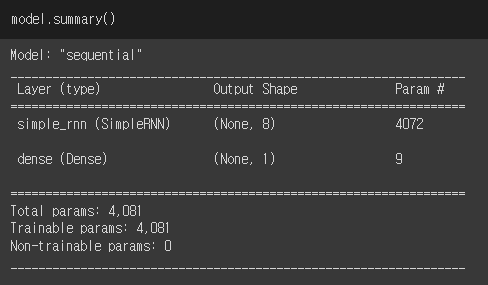

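- RNN parameters

500 one-hot inputs x 8 neurons (fully connected)

+ 8 recurrent hidden-state values h x 8 neurons (fully connected)

+ 8 biases

= 4000 + 64 + 8 = 4072

- Dense parameters

8 neurons x 1 weight each

+ 1 bias

= 9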
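The arithmetic above can be double-checked with two small helper functions. The names `simple_rnn_params` and `dense_params` are hypothetical. They only encode the counting rule described here (input weights + recurrent weights + biases).

```python
def simple_rnn_params(input_dim, units):
    """Input weights + recurrent weights + biases of a simple RNN layer."""
    return input_dim * units + units * units + units


def dense_params(input_dim, units):
    """Weights + biases of a fully connected layer."""
    return input_dim * units + units


rnn = simple_rnn_params(500, 8)
dense = dense_params(8, 1)
print(rnn, dense, rnn + dense)  # 4072 9 4081
```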

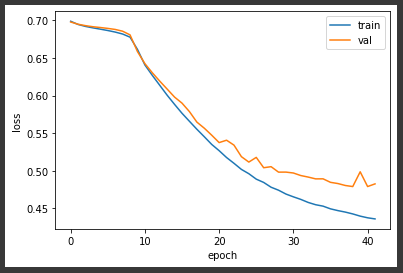

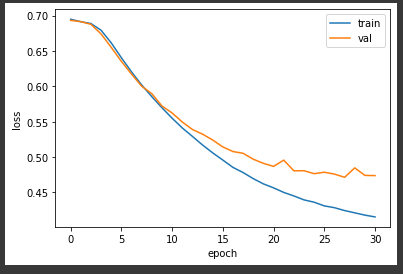
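To make the recurrence behind these parameter counts concrete, here is a rough pure-Python sketch of a simple RNN forward pass: at each timestep the new hidden state is `tanh(x · W_x + h · W_h + b)`. The sizes are deliberately tiny, the weights are random, and the function name `simple_rnn_forward` is an assumption. It illustrates the idea and is not how Keras implements the layer.

```python
import math
import random


def simple_rnn_forward(inputs, w_x, w_h, b):
    """Run a simple RNN over `inputs` and return the final hidden state."""
    units = len(b)
    h = [0.0] * units                      # initial hidden state
    for x in inputs:                       # one step per timestep
        h = [
            math.tanh(
                sum(x[i] * w_x[i][j] for i in range(len(x)))      # input part
                + sum(h[k] * w_h[k][j] for k in range(units))     # recurrent part
                + b[j]                                            # bias
            )
            for j in range(units)
        ]
    return h


random.seed(0)
input_dim, units, timesteps = 3, 2, 4      # toy sizes, not 500 / 8 / 100
w_x = [[random.uniform(-1, 1) for _ in range(units)] for _ in range(input_dim)]
w_h = [[random.uniform(-1, 1) for _ in range(units)] for _ in range(units)]
b = [0.0] * units
inputs = [[random.uniform(-1, 1) for _ in range(input_dim)]
          for _ in range(timesteps)]

final_h = simple_rnn_forward(inputs, w_x, w_h, b)
print(final_h)
```

Only the final hidden state is returned, which is what the `Dense(1, activation='sigmoid')` layer receives in the model above.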

📍 Training the Model

- RMSprop optimizer: learning_rate=1e-4

- Binary classification loss: binary_crossentropy

- Callbacks: ModelCheckpoint, EarlyStopping
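The early-stopping rule used in training can be sketched as a plain loop: stop once the validation loss has failed to improve for `patience` consecutive epochs, and remember which epoch was best. This is a simplified illustration (no `min_delta`, hypothetical function name `early_stopping`, made-up loss values), not the Keras callback itself.

```python
def early_stopping(val_losses, patience=3):
    """Return (epoch at which training stops, epoch with the best val loss)."""
    best_loss = float("inf")
    best_epoch = -1
    wait = 0                                   # epochs since last improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch       # stop; best weights are from best_epoch
    return len(val_losses) - 1, best_epoch     # ran through all epochs


toy_val_losses = [0.70, 0.60, 0.55, 0.56, 0.57, 0.58, 0.50]
stopped_at, best_at = early_stopping(toy_val_losses, patience=3)
print(stopped_at, best_at)  # 5 2
```

In this toy run the later, lower loss at epoch 6 is never reached, which is the trade-off of a small `patience` value.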

rmsprop = keras.optimizers.RMSprop(learning_rate=1e-4)

model.compile(optimizer=rmsprop, loss='binary_crossentropy', metrics=['accuracy'])

checkpoint_cb = keras.callbacks.ModelCheckpoint('best-simplernn-model.h5', save_best_only=True)

early_stopping_cb = keras.callbacks.EarlyStopping(patience=3, restore_best_weights=True)

history = model.fit(train_oh, train_target, epochs=100, batch_size=64,

validation_data=(val_oh, val_target),

callbacks=[checkpoint_cb, early_stopping_cb])

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.xlabel('epoch')

plt.ylabel('loss')

plt.legend(['train', 'val'])

plt.show()

-> About 80% accuracy

-> One-hot encoding wastes more and more memory as the data grows
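A back-of-the-envelope estimate shows how much memory the one-hot tensor costs compared with the integer sequences. The 4 bytes per element is an assumption (for example int32 or float32 storage). The shapes come from the notes above.

```python
samples, timesteps, vocab = 20000, 100, 500
bytes_per_element = 4   # assumption: 32-bit integers / floats

seq_bytes = samples * timesteps * bytes_per_element             # integer sequences
onehot_bytes = samples * timesteps * vocab * bytes_per_element  # one-hot tensor

print(seq_bytes, onehot_bytes)      # 8000000 4000000000
print(onehot_bytes // seq_bytes)    # 500
```

Under this assumption the one-hot version needs roughly 4 GB versus 8 MB, and the ratio grows linearly with the vocabulary size.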

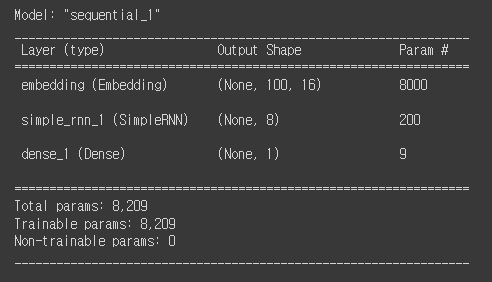

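📍 Using Word Embeddings

- Embed each word as a real-valued vector

- Words can be compared by computing the distance between their vectors

- Input: 100 tokens, each an integer index into the 500-word vocabulary -> output: a 16-dimensional vector per token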
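Conceptually, an embedding layer is a lookup table with one row per vocabulary entry. The sketch below uses a randomly initialised table and a hypothetical helper `embed`. In a real model the table values are learned during training, so the distance printed here carries no meaning yet.

```python
import math
import random

random.seed(42)
vocab_size, embed_dim = 500, 16

# One 16-dimensional row per vocabulary index (random here, learned in practice).
embedding_table = [[random.uniform(-0.05, 0.05) for _ in range(embed_dim)]
                   for _ in range(vocab_size)]


def embed(seq, table):
    """Replace each token index with its row of the embedding table."""
    return [table[token] for token in seq]


toy_seq = [0] * 97 + [1, 14, 22]            # hypothetical padded review, 100 tokens
embedded = embed(toy_seq, embedding_table)
print(len(embedded), len(embedded[0]))      # 100 16
print(vocab_size * embed_dim)               # 8000

# Words can be compared by the distance between their vectors.
print(math.dist(embedding_table[14], embedding_table[22]))
```

Compared with one-hot encoding, each token now costs 16 numbers instead of 500, and the input stays a compact array of integers.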

model2 = keras.Sequential()

model2.add(keras.layers.Embedding(500, 16, input_length=100))

model2.add(keras.layers.SimpleRNN(8))

model2.add(keras.layers.Dense(1, activation='sigmoid'))

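model2.summary()

- Embedding parameters

500 vocabulary entries x 16-dimensional real-valued vector each

= 8000

- SimpleRNN parameters

16 embedding outputs x 8 neurons

+ 8 hidden-state values x 8 neurons

+ 8 biases

= 128 + 64 + 8 = 200

-> Similar results to the one-hot model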