1. Logistic Loss Function

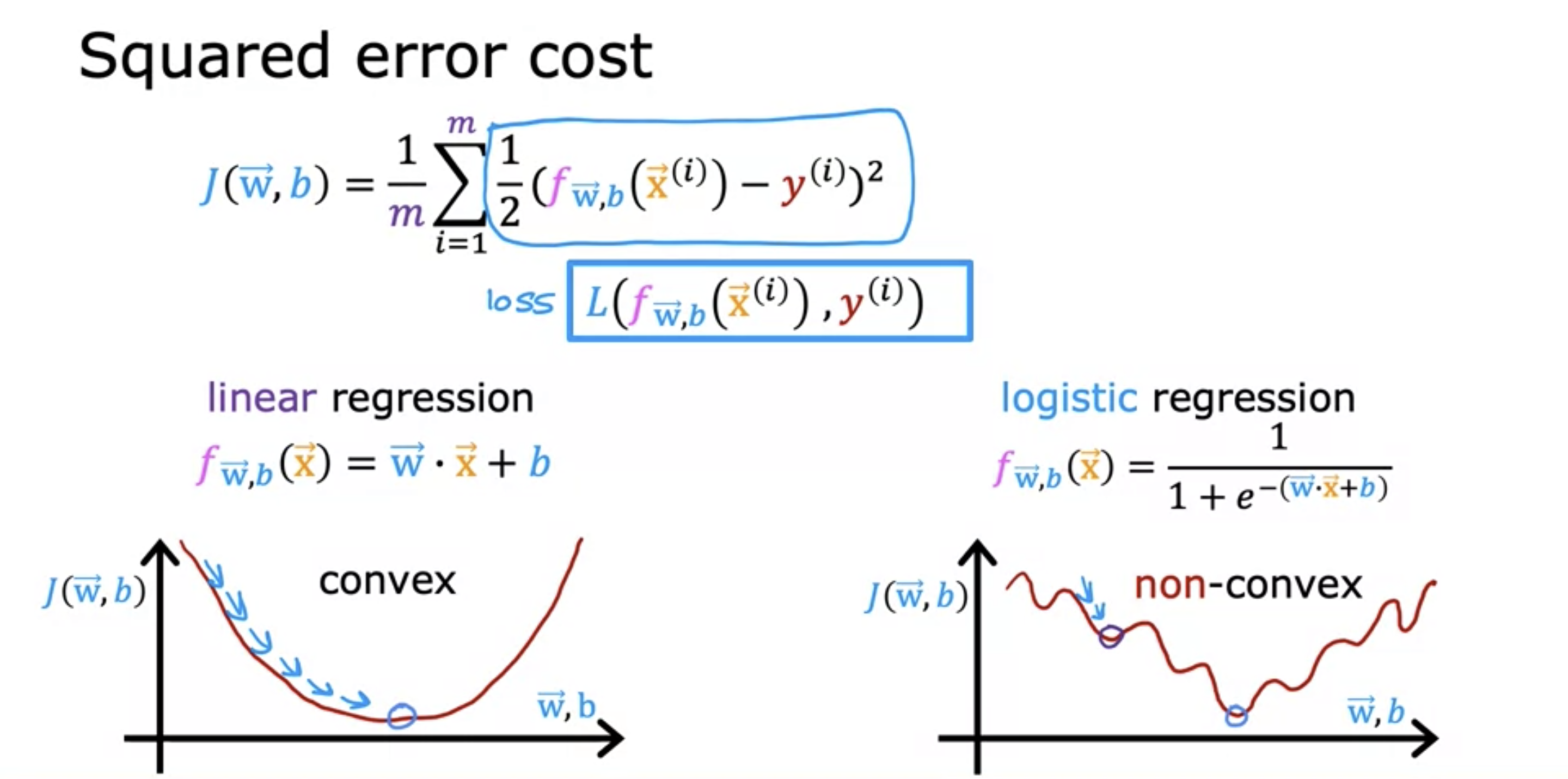

- Plugging the sigmoid model into the squared-error cost gives a non-convex cost surface with many local minima, so gradient descent can get stuck before it reaches the global minimum.
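The non-convexity can be checked numerically. The sketch below uses plain Python with no libraries. It evaluates both costs along a single weight $w$ for a hypothetical one-example dataset ($x = 1$, $y = 1$, bias fixed at 0) and checks the sign of the discrete second differences. A negative second difference means the curve bends downward there, so the function is not convex. The toy data, the grid, and the helper names are illustrative assumptions. This only demonstrates non-convexity along one dimension; it does not by itself exhibit multiple local minima.

```python
import math

def sigmoid(z):
    """Logistic function 1 / (1 + e^-z)."""
    return 1.0 / (1.0 + math.exp(-z))

def squared_cost(w, xs, ys):
    """Squared-error cost (1/2m) * sum (f - y)^2 with f = sigmoid(w * x) and b fixed at 0."""
    m = len(xs)
    return sum((sigmoid(w * x) - y) ** 2 for x, y in zip(xs, ys)) / (2 * m)

def logistic_cost(w, xs, ys):
    """Average logistic loss with f = sigmoid(w * x) and b fixed at 0."""
    m = len(xs)
    total = 0.0
    for x, y in zip(xs, ys):
        f = sigmoid(w * x)
        total += -y * math.log(f) - (1 - y) * math.log(1 - f)
    return total / m

def second_differences(values):
    """Discrete curvature: v[i-1] - 2*v[i] + v[i+1]; all >= 0 for a convex curve."""
    return [values[i - 1] - 2 * values[i] + values[i + 1] for i in range(1, len(values) - 1)]

# Hypothetical toy data and a grid of weights from -10 to 10 in steps of 0.5.
xs, ys = [1.0], [1]
ws = [-10 + 0.5 * i for i in range(41)]

sq_d2 = second_differences([squared_cost(w, xs, ys) for w in ws])
log_d2 = second_differences([logistic_cost(w, xs, ys) for w in ws])

print("squared-error cost bends downward somewhere:", min(sq_d2) < 0)    # expected: True
print("logistic cost never bends downward:", min(log_d2) >= 0)           # expected: True
```

The squared-error curve here is S-shaped: concave for $w$ below about $-0.69$ and convex above it. The logistic-loss curve, $\log(1 + e^{-w})$, is convex everywhere.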

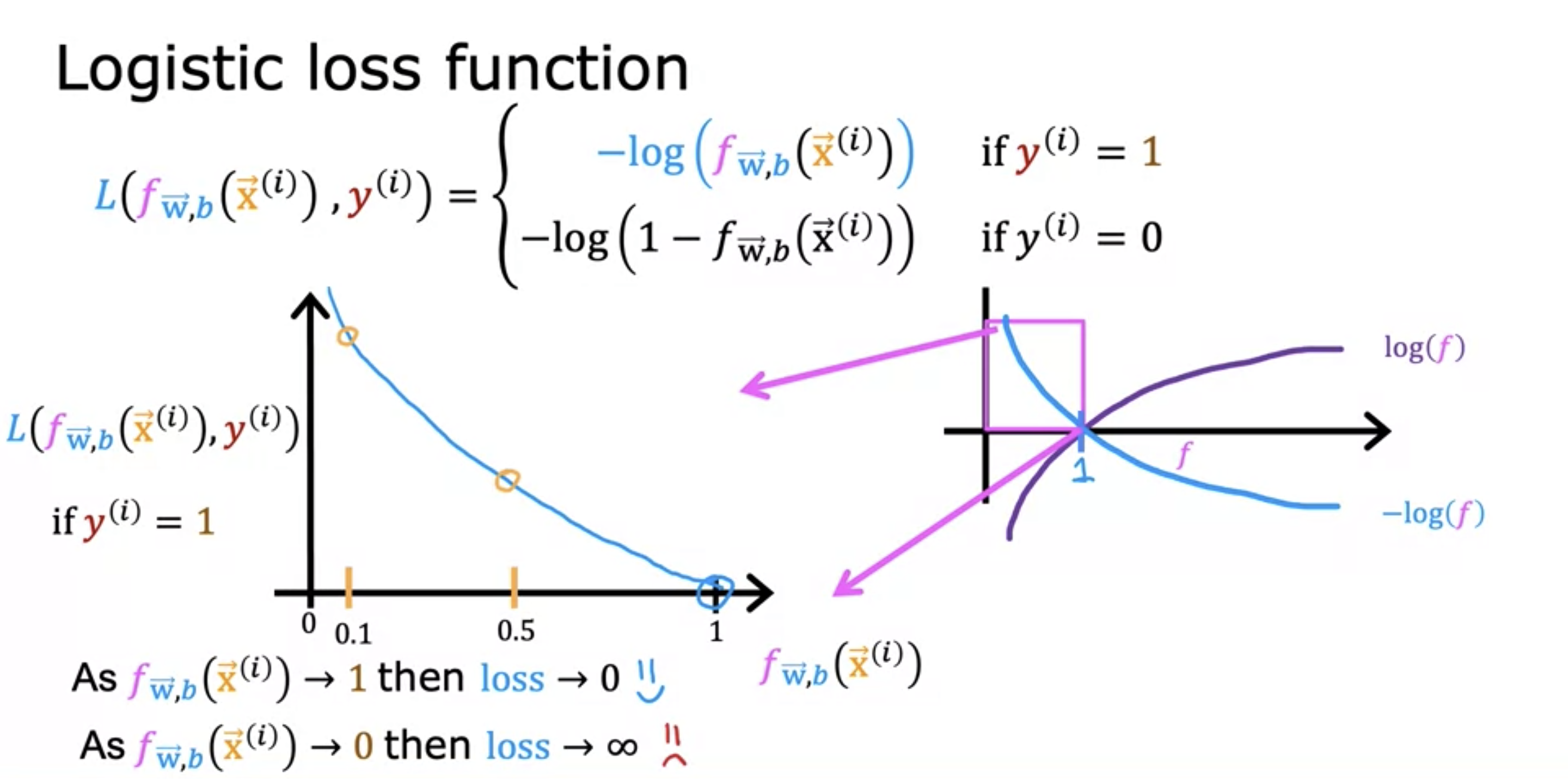

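- For y = 1, the loss is the upper branch, $-\log(f_{\vec{w},b}(\vec{x}))$. As the prediction $f_{\vec{w},b}(\vec{x})$ approaches 1, the loss falls toward 0.

- As the prediction approaches 0, the loss grows toward infinity, so a confident wrong prediction is penalized heavily.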

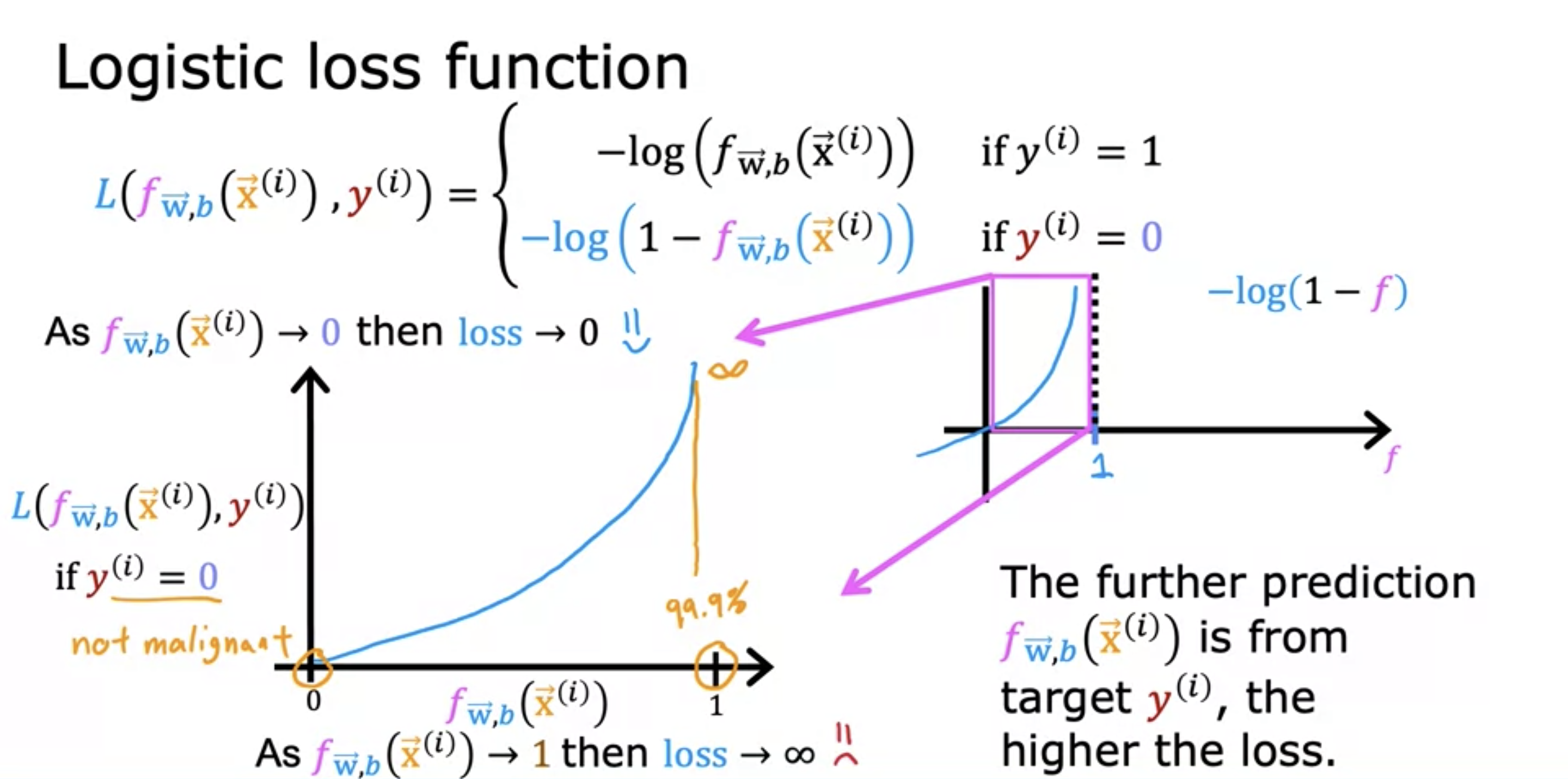

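- For y = 0, the loss is $-\log(1 - f_{\vec{w},b}(\vec{x}))$, the mirror image: it falls toward 0 as the prediction approaches 0 and grows toward infinity as the prediction approaches 1.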

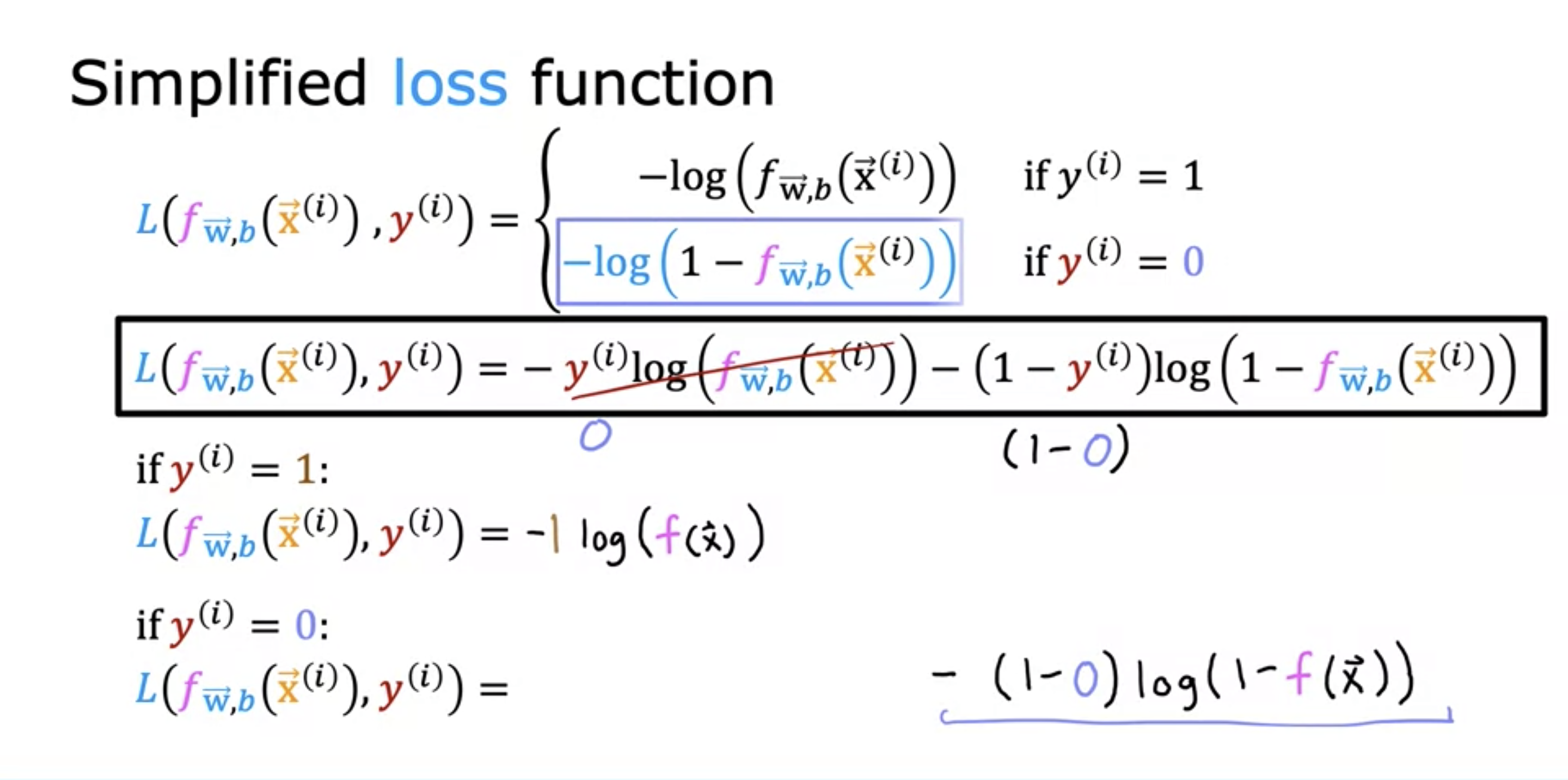

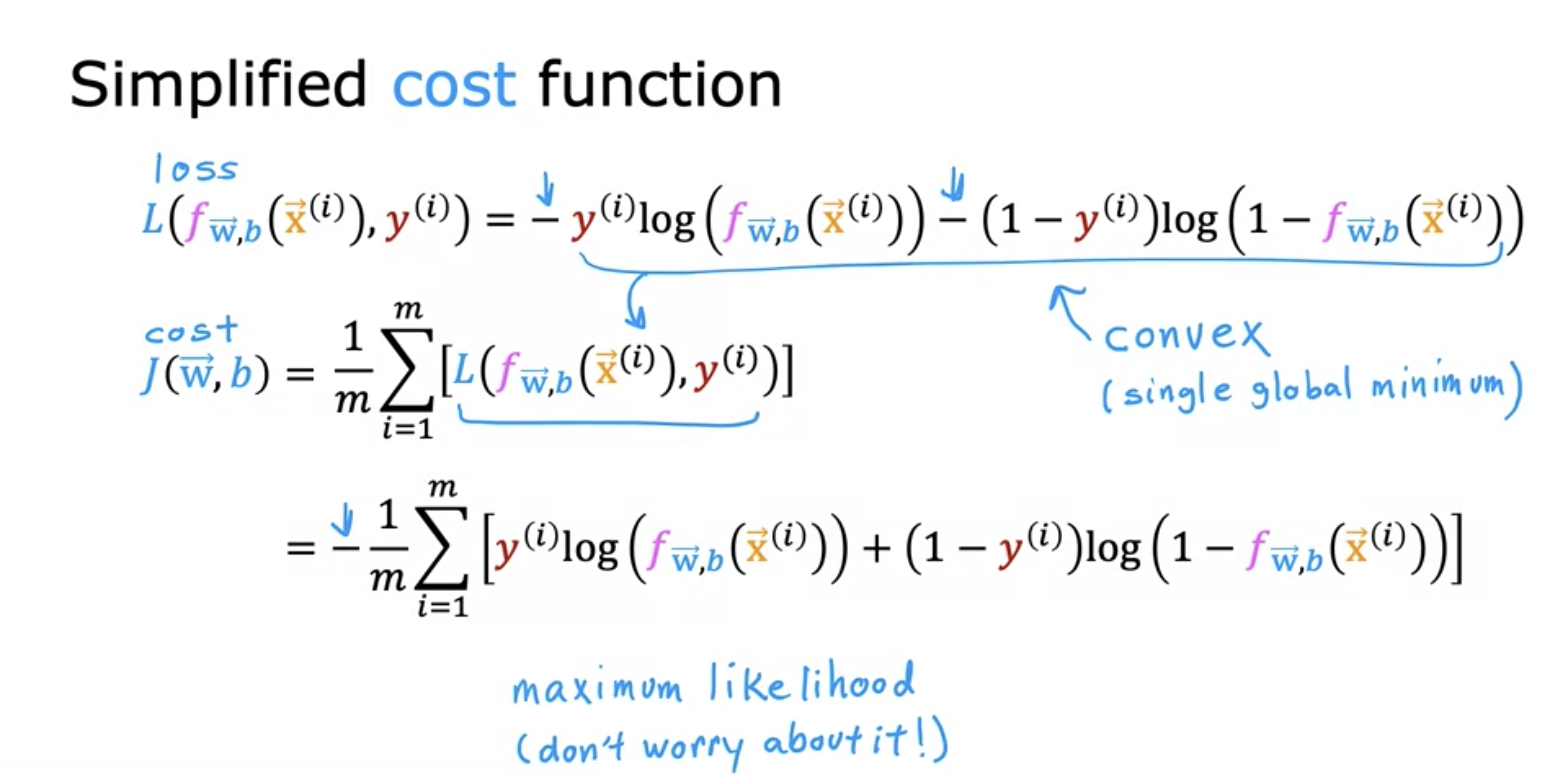
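A minimal sketch of the two-branch loss follows. It assumes the prediction `f` is already a probability strictly between 0 and 1. The function name `logistic_loss` is hypothetical.

```python
import math

def logistic_loss(f, y):
    """Loss for one example: prediction f in (0, 1), label y in {0, 1}."""
    if y == 1:
        return -math.log(f)      # near 0 when f -> 1, grows without bound as f -> 0
    if y == 0:
        return -math.log(1 - f)  # near 0 when f -> 0, grows without bound as f -> 1
    raise ValueError("y must be 0 or 1")

# Compare a confident, an unsure, and an opposite prediction under both labels.
for f in (0.99, 0.5, 0.01):
    print(f, round(logistic_loss(f, 1), 3), round(logistic_loss(f, 0), 3))
```

A confident correct prediction (f = 0.99 with y = 1, or f = 0.01 with y = 0) costs about 0.01. A confident wrong prediction costs about 4.6, and the loss keeps growing as f moves further toward the wrong extreme.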

2. Simplified Cost Function

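- Because y is always either 1 or 0, the two cases combine into one expression, $L = -y\log(f_{\vec{w},b}(\vec{x})) - (1 - y)\log(1 - f_{\vec{w},b}(\vec{x}))$. When y = 1 the second term vanishes, and when y = 0 the first term vanishes.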
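Substituting each label into the combined loss makes the cancellation explicit. Here $f$ is shorthand for $f_{\vec{w},b}(\vec{x})$:

$$
\begin{aligned}
y = 1:&\quad -1\cdot\log(f) - (1 - 1)\log(1 - f) = -\log(f) \\
y = 0:&\quad -0\cdot\log(f) - (1 - 0)\log(1 - f) = -\log(1 - f)
\end{aligned}
$$

Both cases recover the corresponding branch of the two-case loss.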

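- The cost is the average of these losses over all $m$ training examples: $J(\vec{w},b) = \frac{1}{m}\sum_{i=1}^{m} L\big(f_{\vec{w},b}(\vec{x}^{(i)}), y^{(i)}\big)$.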
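The pieces can be combined into a minimal plain-Python sketch of the cost. It assumes the sigmoid model $f_{\vec{w},b}(\vec{x}) = 1/(1 + e^{-(\vec{w}\cdot\vec{x} + b)})$. The function name `compute_cost` and the toy dataset are hypothetical.

```python
import math

def sigmoid(z):
    """Logistic function 1 / (1 + e^-z)."""
    return 1.0 / (1.0 + math.exp(-z))

def compute_cost(X, y, w, b):
    """Average logistic loss J(w, b) over m examples.

    X: list of feature lists, y: list of 0/1 labels, w: list of weights, b: bias.
    """
    m = len(X)
    total = 0.0
    for x_i, y_i in zip(X, y):
        z = sum(w_j * x_ij for w_j, x_ij in zip(w, x_i)) + b  # w . x + b
        f = sigmoid(z)
        # Simplified loss: only one of the two terms is nonzero for a 0/1 label.
        total += -y_i * math.log(f) - (1 - y_i) * math.log(1 - f)
    return total / m

# Hypothetical toy data: two features, three examples per class.
X_train = [[0.5, 1.5], [1.0, 1.0], [1.5, 0.5], [3.0, 0.5], [2.0, 2.0], [1.0, 2.5]]
y_train = [0, 0, 0, 1, 1, 1]

print(compute_cost(X_train, y_train, [0.0, 0.0], 0.0))   # every f = 0.5, so J = log(2), about 0.693
print(compute_cost(X_train, y_train, [1.0, 1.0], -3.0))
```

With all parameters at zero every prediction is 0.5, so each loss is $\log 2$ and so is their average. The second parameter set puts every y = 0 example on the negative side of the boundary and every y = 1 example on the positive side, so it gives a lower cost.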