1. Logistic Regression

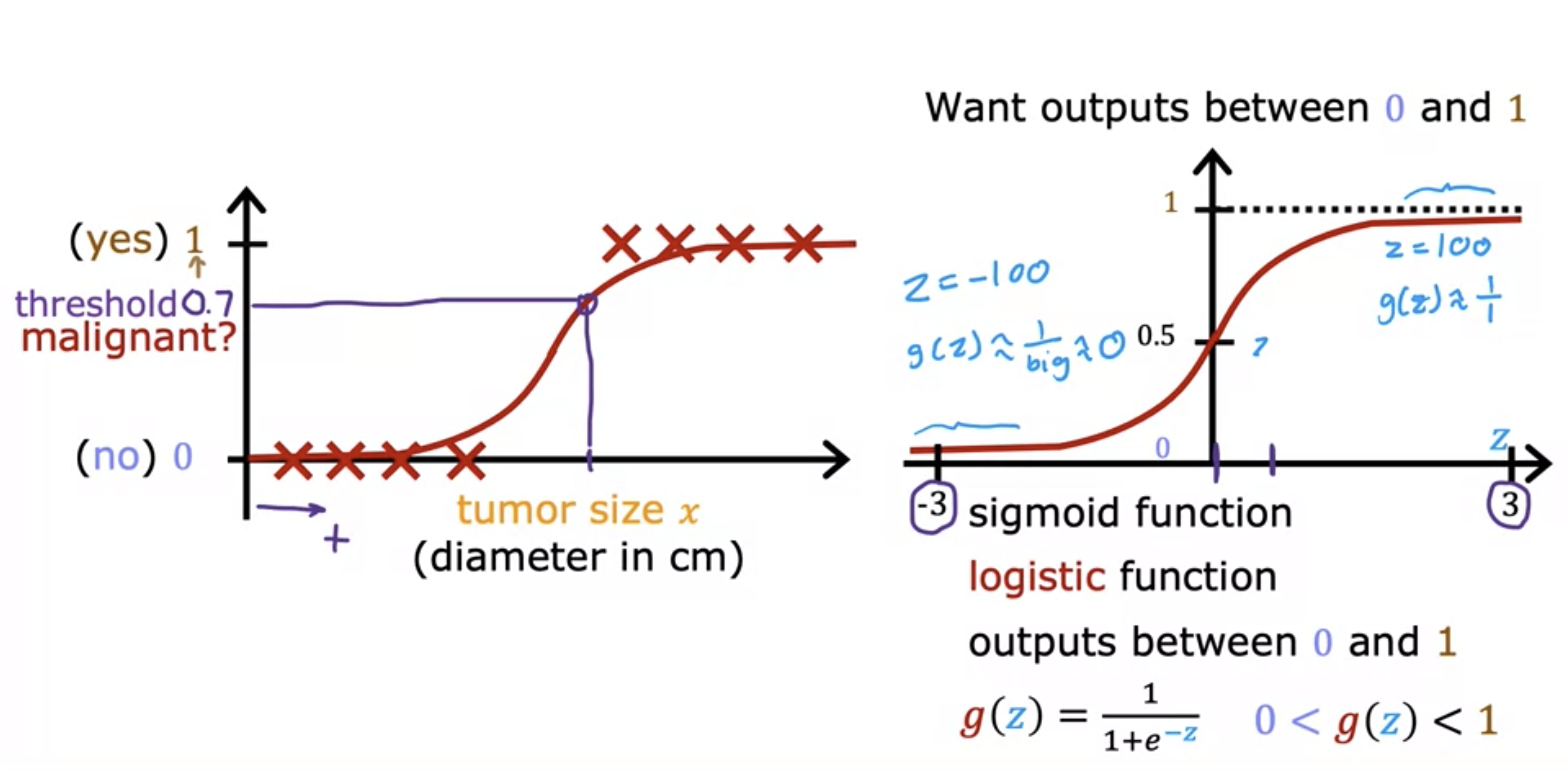

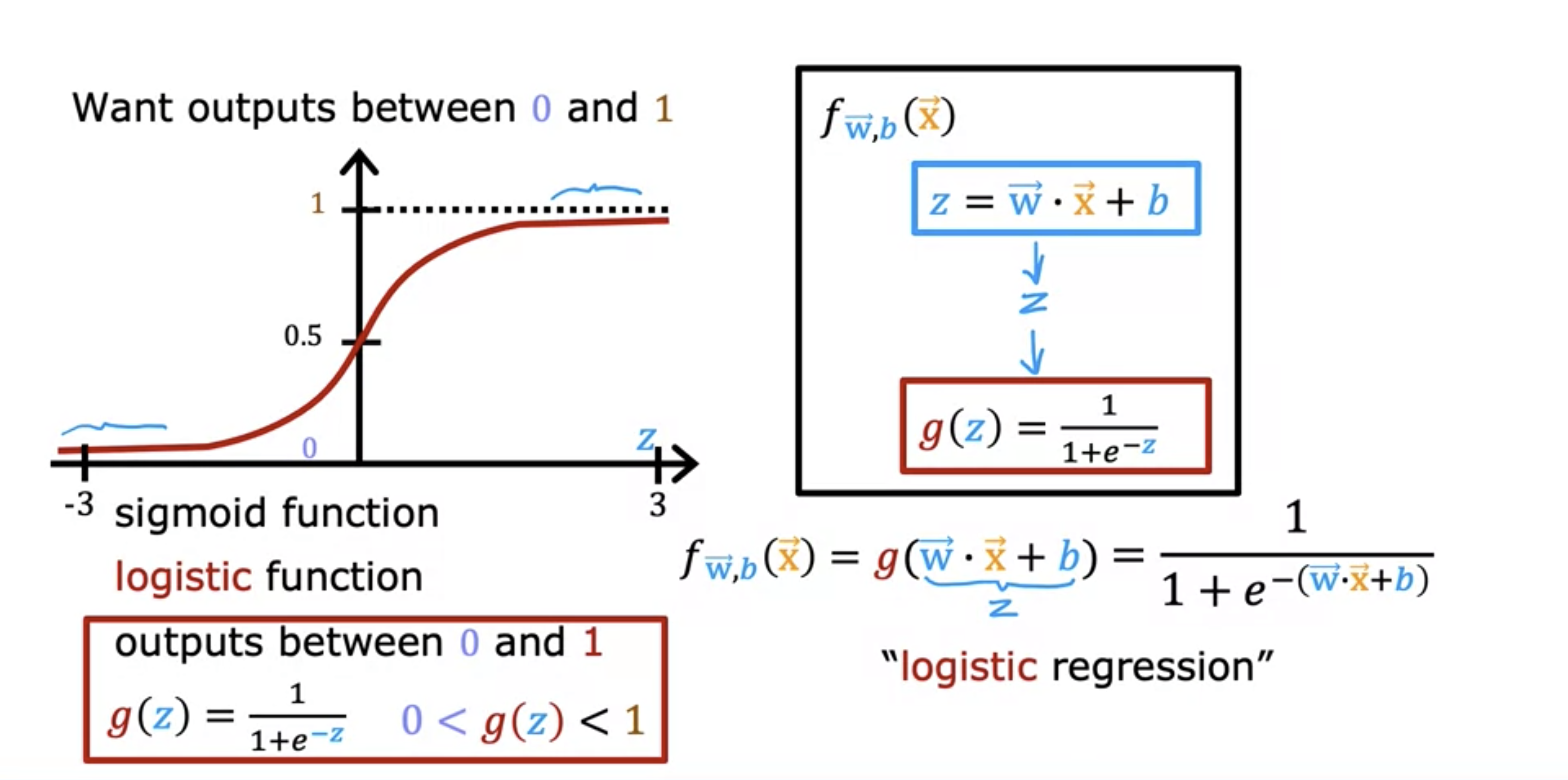

- The sigmoid function, g(z) = 1 / (1 + e^(-z)), maps any real number into the range (0, 1), which makes it a good fit for binary classification.
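Below is a minimal Python sketch of the sigmoid function described above. The name `sigmoid` is an illustrative choice. The sign-based branch is one common way to avoid overflow in `math.exp`; it is not taken from the original notes.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic (sigmoid) function g(z) = 1 / (1 + e^(-z)).

    Mathematically the output lies in (0, 1); in floating point, very large
    |z| rounds to exactly 0.0 or 1.0.
    """
    # Branch on the sign of z so math.exp is only called with a
    # non-positive argument, which avoids OverflowError for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


for z in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(f"g({z:+.0f}) = {sigmoid(z):.5f}")
```

Large negative inputs land near 0 and large positive inputs land near 1. g(0) is exactly 0.5.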

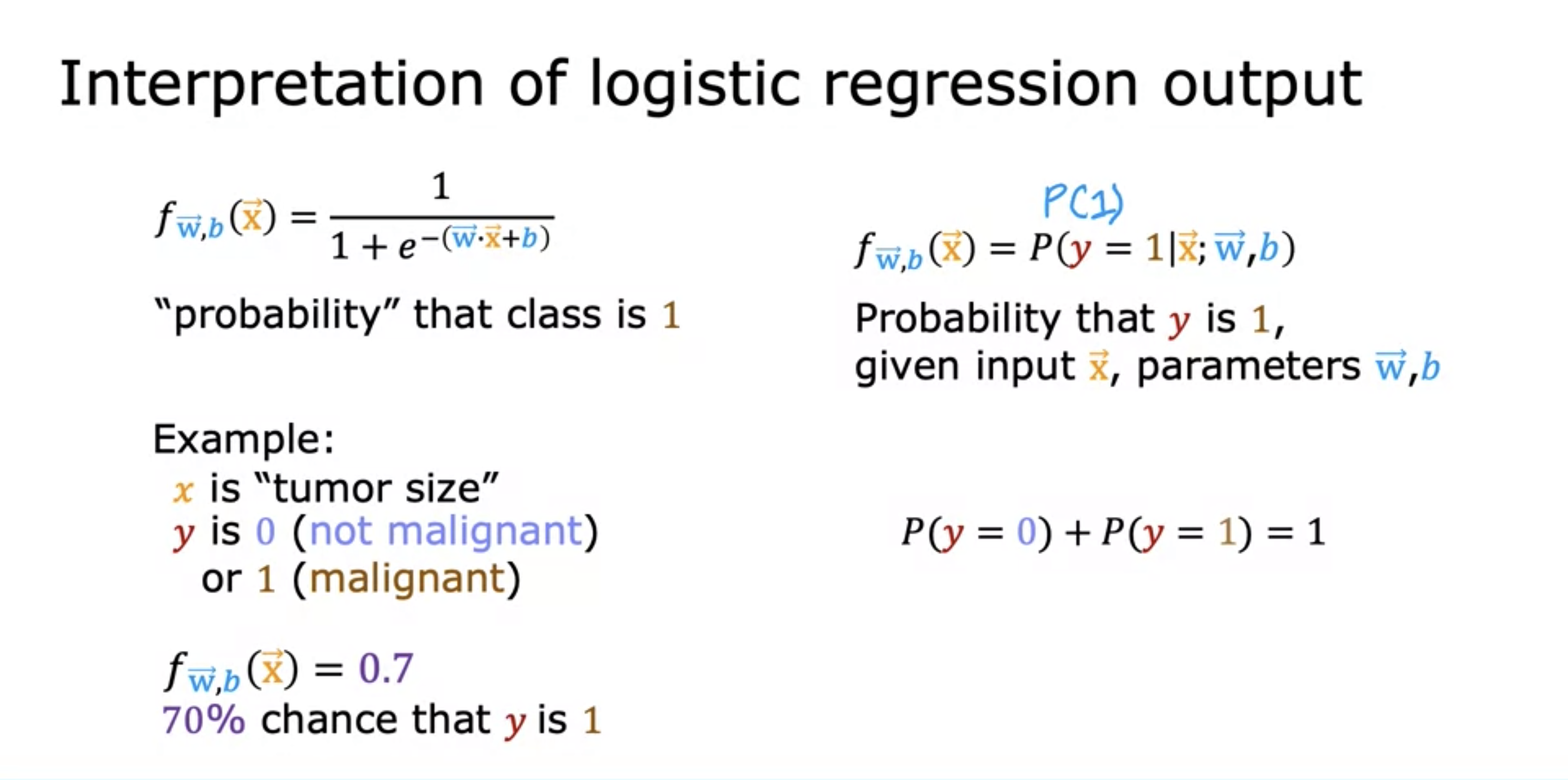

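- The output of logistic regression, f(x) = sigmoid(w · x + b), is the estimated probability that the class is 1 (positive) given the input x, i.e. P(y = 1 | x; w, b). The probability of class 0 is therefore 1 - f(x).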
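As a rough illustration of reading the model output as a probability, the sketch below computes f(x) for a two-feature input. The function name `predict_proba` and the parameter values are hypothetical and chosen only for illustration.

```python
import math


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w: list[float], b: float, x: list[float]) -> float:
    """Estimated P(y = 1 | x) for the model f(x) = sigmoid(w . x + b)."""
    z = sum(wj * xj for wj, xj in zip(w, x)) + b
    return sigmoid(z)


# Hypothetical parameters and input, for illustration only.
w, b = [0.8, -0.4], -0.5
x = [2.0, 1.0]
p = predict_proba(w, b, x)
print(f"P(y=1|x) = {p:.3f}, P(y=0|x) = {1 - p:.3f}")
```

The two printed probabilities always sum to 1, because the model only outputs P(y = 1 | x).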

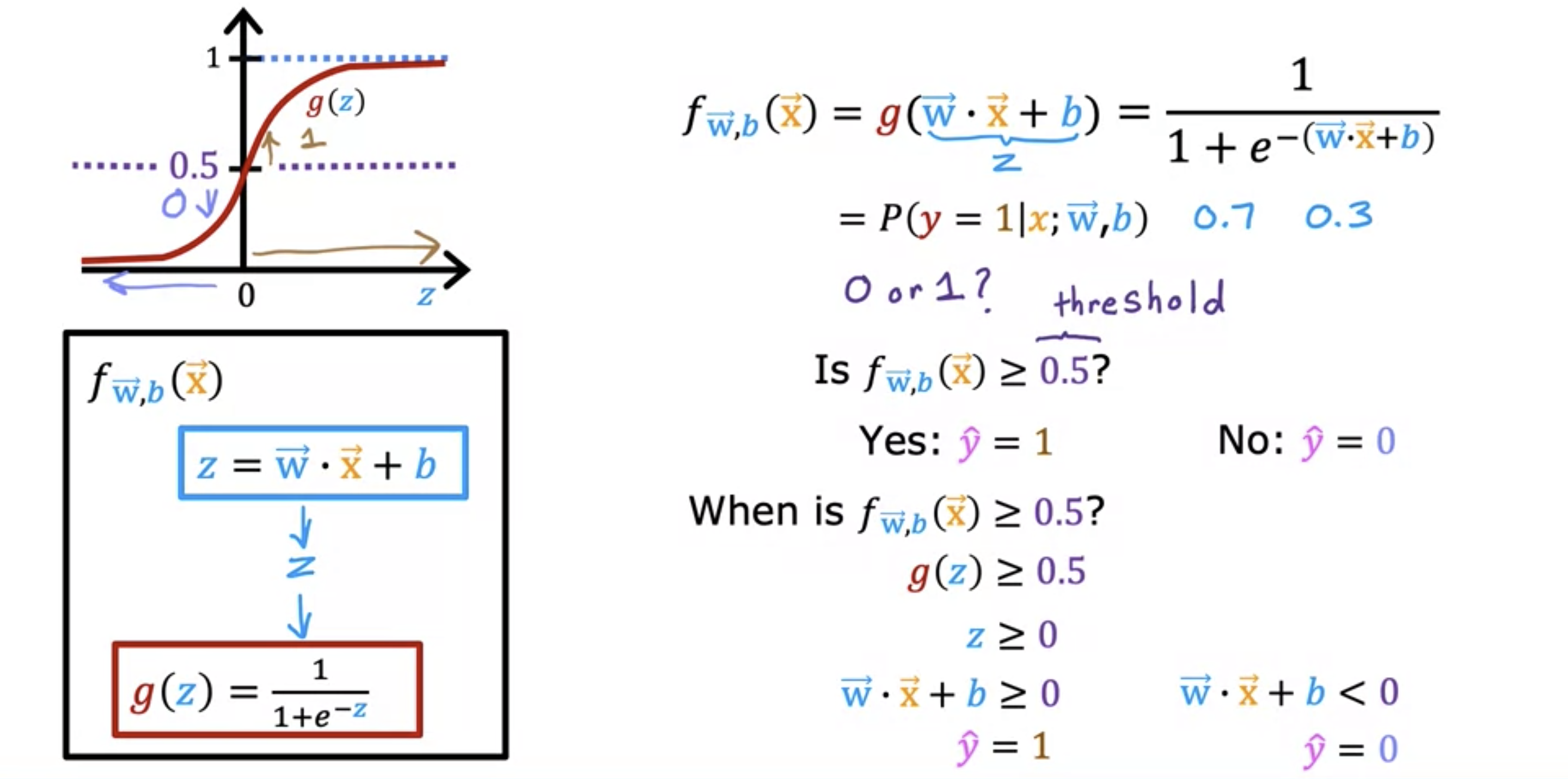

2. Decision Boundary

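- If the predicted probability is at or above the threshold, the prediction is positive (1); below it, the prediction is negative (0).

- The threshold does not need to be 0.5; it can be chosen to suit the application.

- For applications such as brain tumor detection, a lower threshold may be safer, because a false positive (an unnecessary follow-up) is less harmful than a false negative (a missed tumor).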

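- With a threshold of 0.5, the prediction is positive when w · x + b ≥ 0, because the sigmoid output is ≥ 0.5 exactly when its input is ≥ 0. The decision boundary is the set of points where w · x + b = 0; plotting that set (a line, for two features) shows the boundary.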
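The sketch below shows thresholding and the decision boundary described above. It assumes hypothetical parameters w = (1, 1) and b = -3, so the 0.5-threshold boundary is the line x1 + x2 = 3. The `predict` helper and its `threshold` parameter are illustrative names, not from the original notes.

```python
import math


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w: list[float], b: float, x: list[float], threshold: float = 0.5) -> int:
    """Return 1 (positive) if the estimated P(y = 1 | x) is at least `threshold`, else 0."""
    z = sum(wj * xj for wj, xj in zip(w, x)) + b
    return 1 if sigmoid(z) >= threshold else 0


# Hypothetical parameters: with w = (1, 1) and b = -3, the 0.5-threshold
# decision boundary is the line x1 + x2 - 3 = 0, i.e. x1 + x2 = 3.
w, b = [1.0, 1.0], -3.0

for x in ([1.0, 1.0], [1.0, 1.5], [2.0, 2.0]):
    z = sum(wj * xj for wj, xj in zip(w, x)) + b
    print(f"x={x}  z={z:+.1f}  "
          f"threshold 0.5 -> {predict(w, b, x)}  "
          f"threshold 0.3 -> {predict(w, b, x, threshold=0.3)}")
```

The point (1, 1.5) lies on the negative side of x1 + x2 = 3, yet it is flagged positive with a threshold of 0.3. In general, sigmoid(z) ≥ t exactly when z ≥ ln(t / (1 - t)). Lowering t therefore moves the boundary to w · x + b = ln(t / (1 - t)), which is about -0.85 for t = 0.3.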

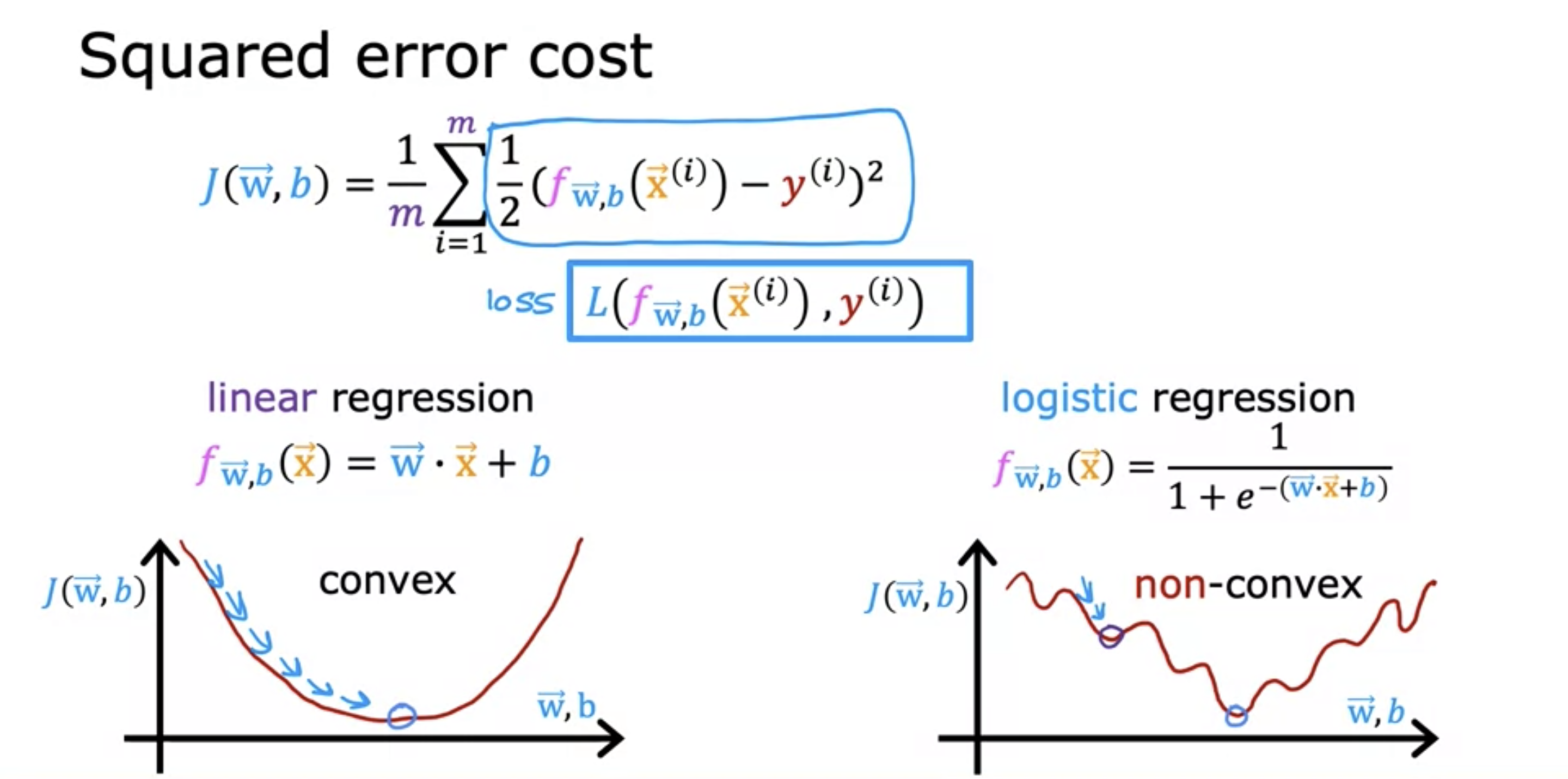

3. Cost Function for Logistic Regression

- Using the squared-error cost with logistic regression gives a non-convex cost function with many local minima. Gradient descent can therefore get stuck instead of reaching the global minimum.
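A small numerical check can show the non-convexity mentioned above. The sketch assumes a hypothetical one-example dataset (x = 1, y = 1) with b fixed at 0, so each cost depends only on the weight w. On this data the squared-error cost curves downward (negative second differences) for w below about -0.69, while the log loss does not. Non-convexity is what allows local minima to exist. This tiny example does not by itself exhibit several minima.

```python
import math


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


# Hypothetical one-example dataset: x = 1, y = 1, with b fixed at 0,
# so both costs are functions of the single weight w.
def squared_cost(w: float) -> float:
    return (sigmoid(w) - 1.0) ** 2


def log_cost(w: float) -> float:
    return -math.log(sigmoid(w))


def second_differences(f, lo: float = -6.0, hi: float = 6.0, n: int = 121) -> list[float]:
    """Central second differences f(w-h) - 2f(w) + f(w+h) on a grid.

    For a convex f these are all >= 0.
    """
    h = (hi - lo) / (n - 1)
    ws = [lo + i * h for i in range(1, n - 1)]
    return [f(w - h) - 2 * f(w) + f(w + h) for w in ws]


sq = second_differences(squared_cost)
lg = second_differences(log_cost)
print("squared error convex on grid:", all(d >= 0 for d in sq))
print("log loss convex on grid:", all(d >= 0 for d in lg))
```

A grid check like this can only show non-convexity; it cannot prove convexity. For the log loss, convexity follows from its second derivative, sigmoid(w)(1 - sigmoid(w)), which is always positive.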

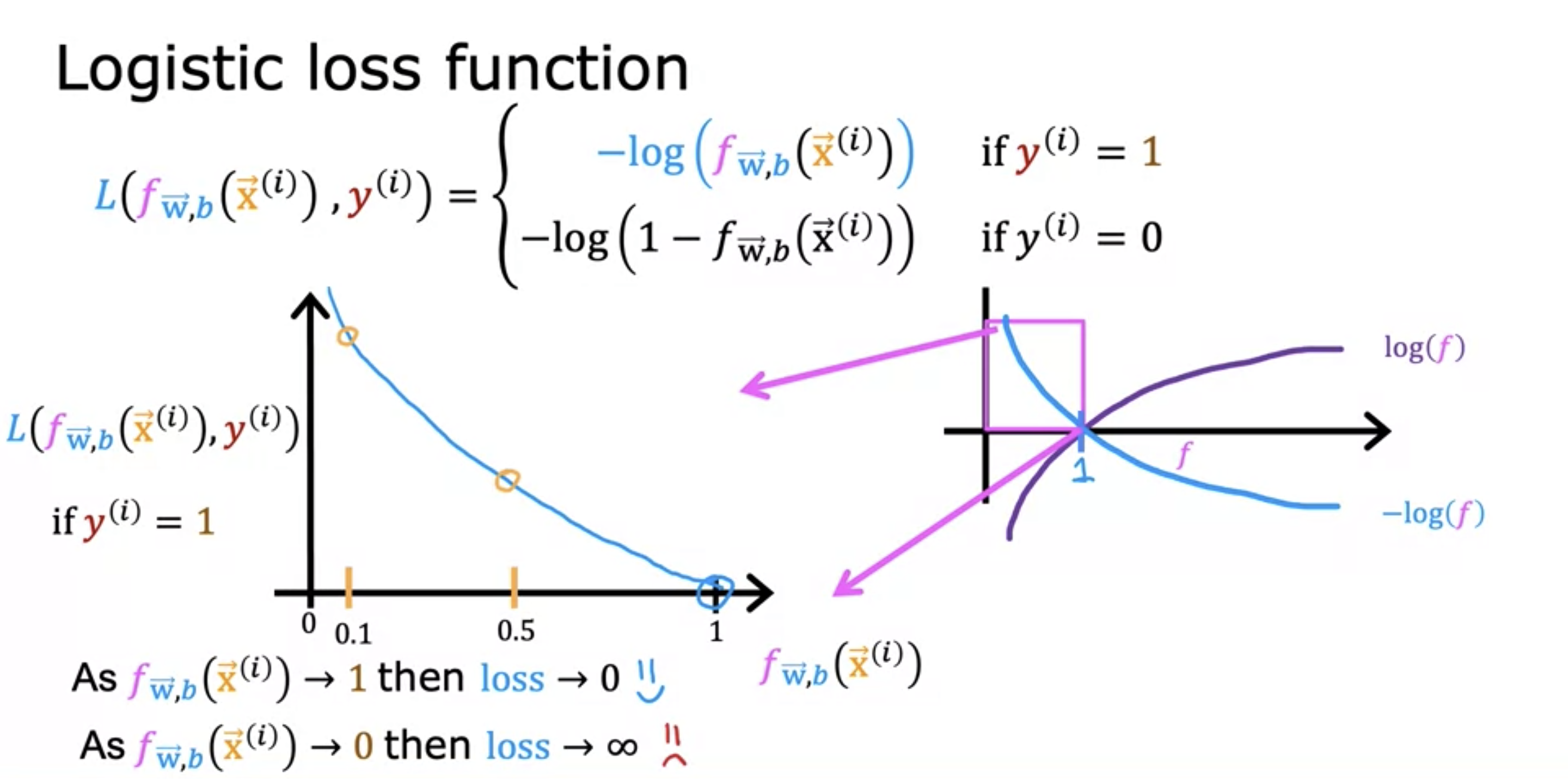

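- For y = 1, the loss is the upper log branch, -log(f(x)). It approaches 0 as the prediction f(x) approaches 1, so a correct, confident prediction is barely penalized.

- As f(x) approaches 0, the loss goes to infinity, so a confident wrong prediction is penalized heavily.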

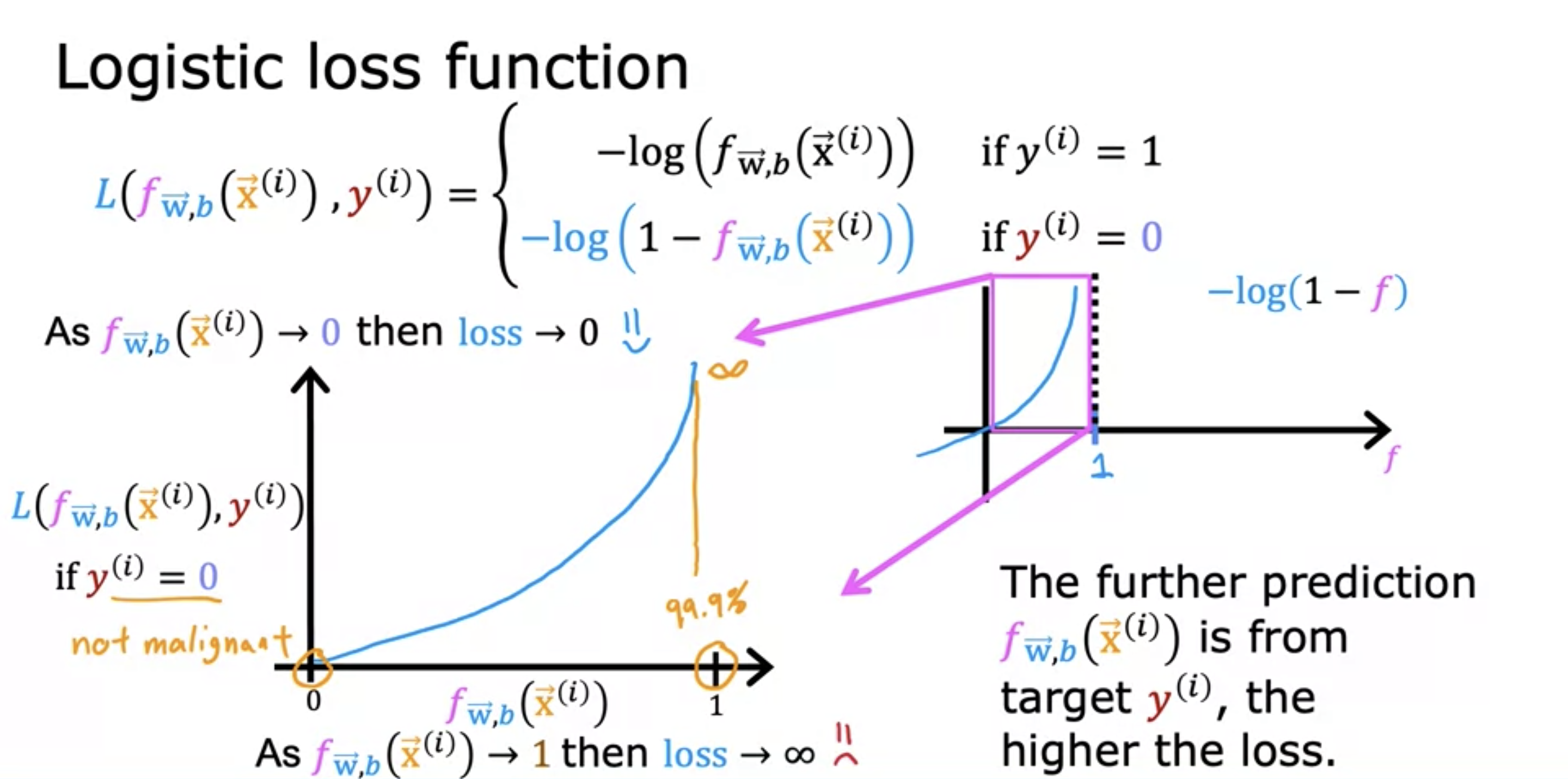

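- For y = 0, the loss is -log(1 - f(x)), which behaves the opposite way: it is 0 when f(x) = 0 and goes to infinity as f(x) approaches 1.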
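The following is a minimal sketch of the two log branches described above, plus the cost as the average loss over a training set. The names `logistic_loss` and `cost` and the four-point dataset are hypothetical. The code assumes predictions stay strictly inside (0, 1) wherever a log of 0 would otherwise be taken.

```python
import math


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def logistic_loss(f: float, y: int) -> float:
    """Loss for one example given the predicted probability f = P(y = 1 | x).

    Assumes 0 < f < 1: math.log raises ValueError for f = 0 when y = 1,
    or f = 1 when y = 0.
    """
    if y == 1:
        return -math.log(f)        # upper branch: 0 at f = 1, grows as f -> 0
    return -math.log(1.0 - f)      # y = 0 branch: 0 at f = 0, grows as f -> 1


def cost(w: list[float], b: float, X: list[list[float]], Y: list[int]) -> float:
    """Average logistic loss over the training set, J(w, b)."""
    total = 0.0
    for x, y in zip(X, Y):
        f = sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
        total += logistic_loss(f, y)
    return total / len(X)


for f in (0.99, 0.5, 0.01):
    print(f"f={f:.2f}  loss(y=1)={logistic_loss(f, 1):.3f}  loss(y=0)={logistic_loss(f, 0):.3f}")

# Hypothetical 1-feature dataset: small x -> class 0, large x -> class 1.
X = [[1.0], [2.0], [3.0], [4.0]]
Y = [0, 0, 1, 1]
print("J(good params) =", round(cost([2.0], -5.0, X, Y), 3))
print("J(flipped params) =", round(cost([-2.0], 5.0, X, Y), 3))
```

Because y is always 0 or 1, the two branches can also be written as one expression, -y log(f) - (1 - y) log(1 - f). Parameters that separate the classes correctly give a much lower cost than flipped ones.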
