🖥️ Overview

- Multithreading can simplify code and increase efficiency

- Kernels are generally multithreaded

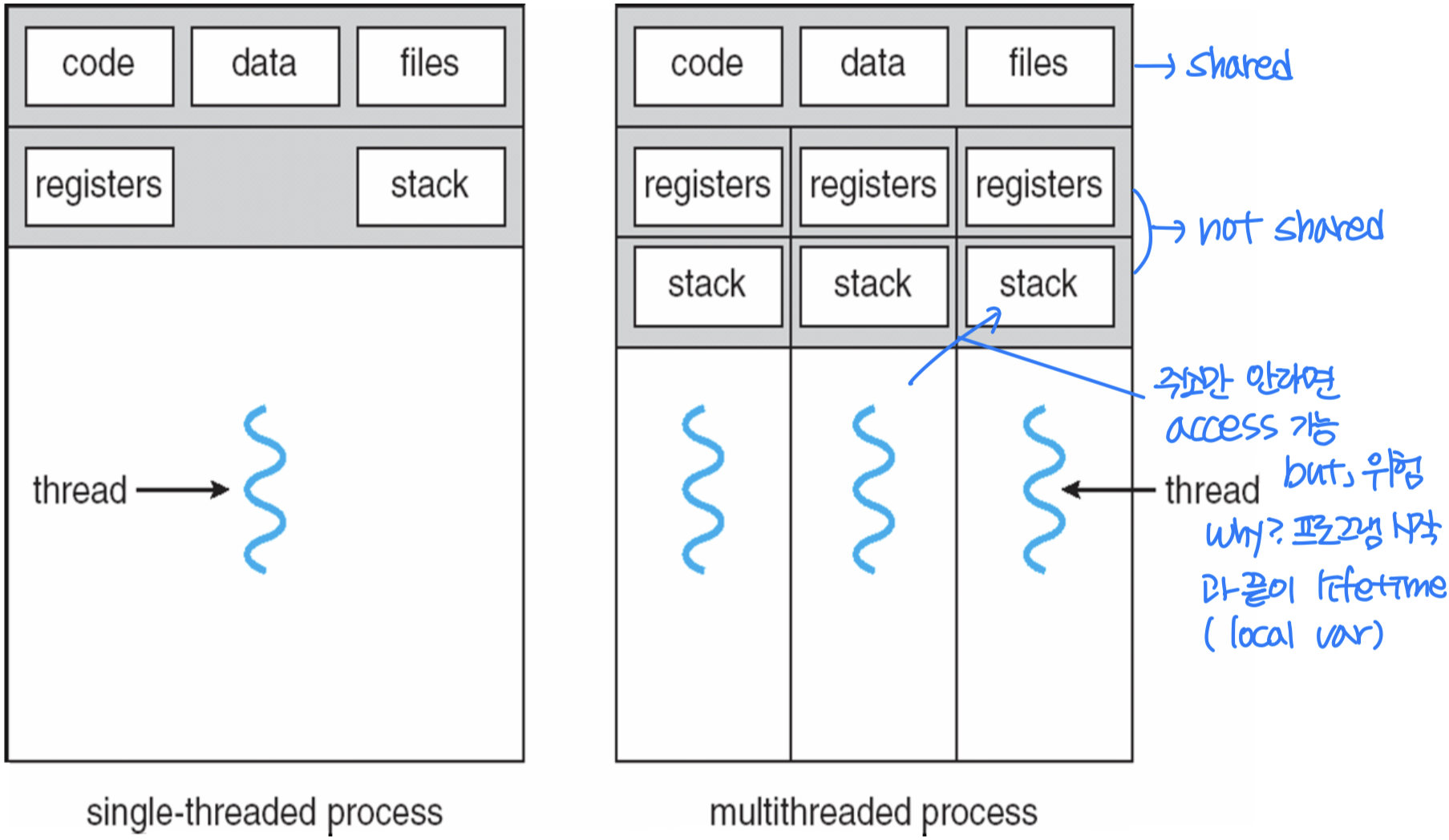

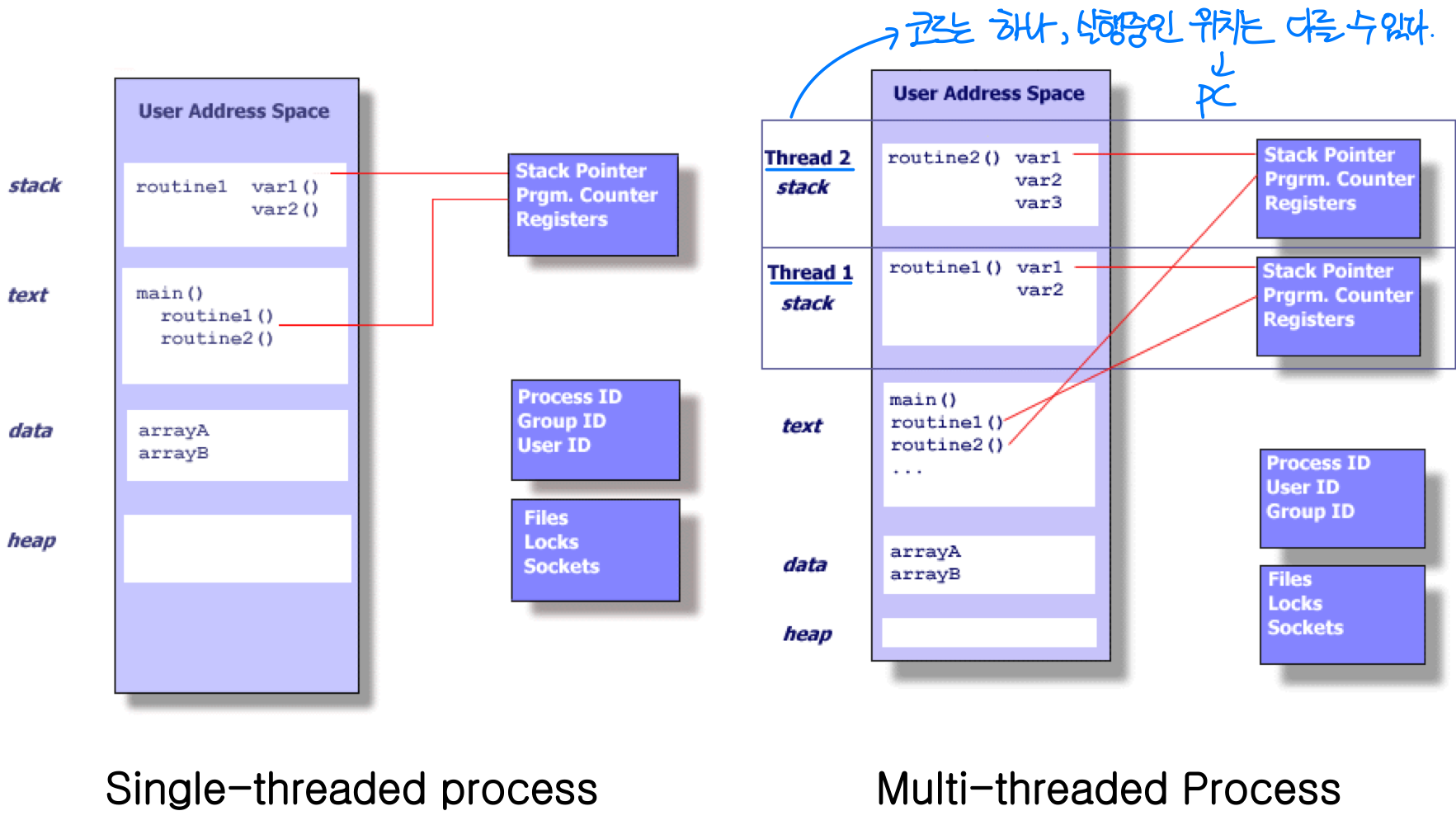

- Process: a program in execution; processes do not share resources with each other

- Each process occupies the resources required for its execution

- Thread: a way for a program to split itself into two or more simultaneously running tasks

- A smaller unit of execution than a process

- Threads in a process share the process's resources

- A thread consists of

- Thread ID, program counter, register set, and stack (local variables, function calls); these are not shared with other threads

Process vs. Thread

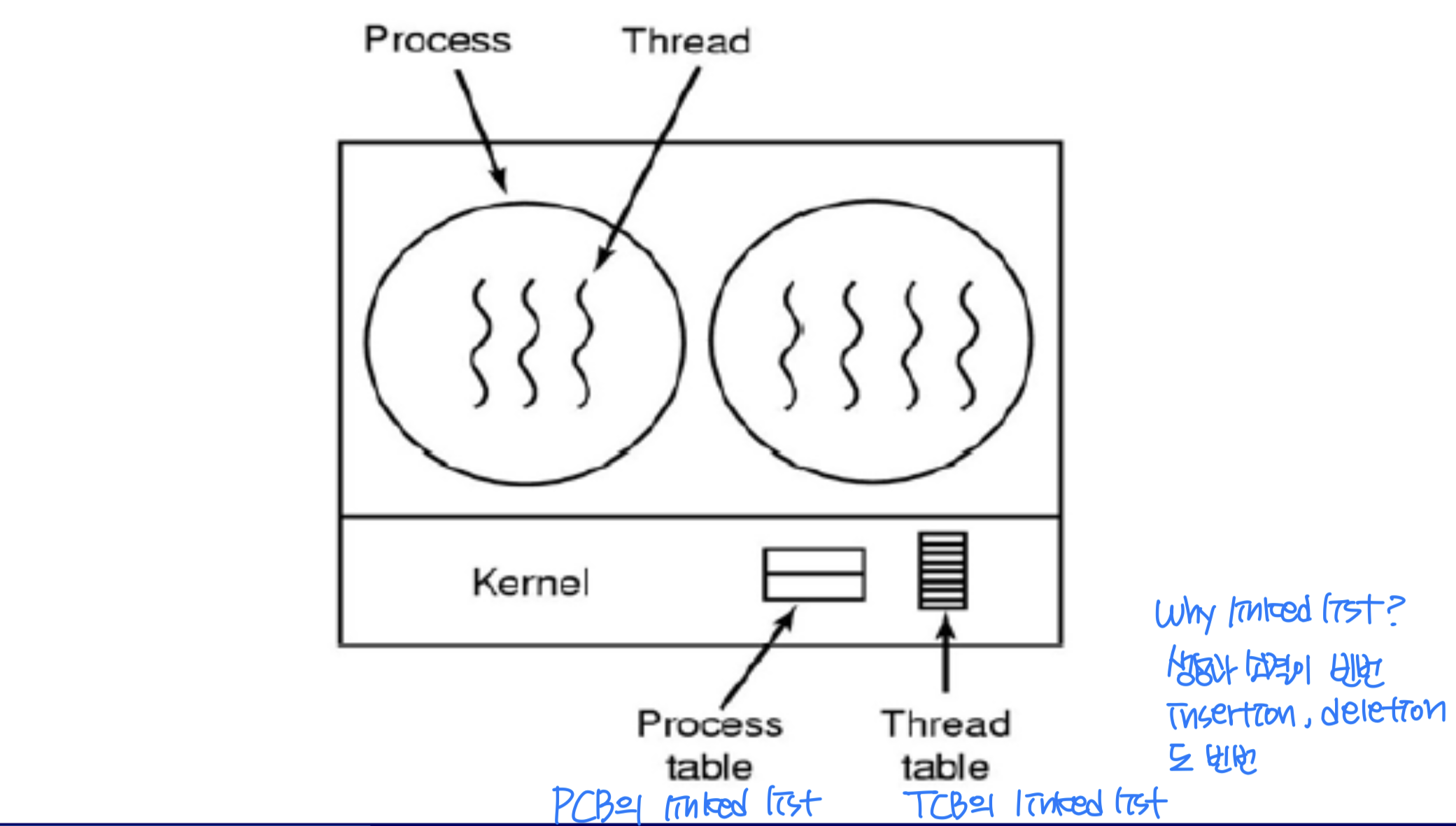

Thread Control Block (TCB)

- Thread Control Block (TCB): a data structure in the operating system kernel that contains the thread-specific information needed to manage the thread

- Examples of information in TCB

- Thread id

- State of the thread (running, ready, waiting, start, done)

- Stack pointer (because each thread has its own stack and does not share it)

- Program counter

- Thread's register values

- Pointer to the process control block (PCB), because the thread belongs to that process
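To make the field list concrete, here is a hypothetical TCB written as a C struct. The names `struct tcb`, `struct pcb`, `enum thread_state`, and `tcb_init()` are assumptions for illustration only; real kernels keep this information in much larger structures with different layouts.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical, minimal PCB: just enough for a TCB to point at. */
struct pcb {
    int pid;
};

/* The thread states listed above. */
enum thread_state { T_START, T_READY, T_RUNNING, T_WAITING, T_DONE };

/* Hypothetical TCB layout (not any real kernel's). */
struct tcb {
    int tid;                    /* thread id */
    enum thread_state state;    /* running, ready, waiting, start, done */
    uintptr_t stack_pointer;    /* per-thread: stacks are not shared */
    uintptr_t program_counter;  /* where the thread resumes */
    uintptr_t registers[16];    /* saved register set (size is arbitrary here) */
    struct pcb *process;        /* owning process: shared resources live there */
};

/* Initialize a TCB for a new thread of process p. */
static void tcb_init(struct tcb *t, int tid, struct pcb *p,
                     uintptr_t stack_top, uintptr_t entry) {
    memset(t, 0, sizeof *t);
    t->tid = tid;
    t->state = T_START;
    t->stack_pointer = stack_top;
    t->program_counter = entry;
    t->process = p;
}
```

Two threads of the same process get different ids and stack pointers but point at the same PCB, which mirrors the shared vs. not-shared split above.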

Why Thread?

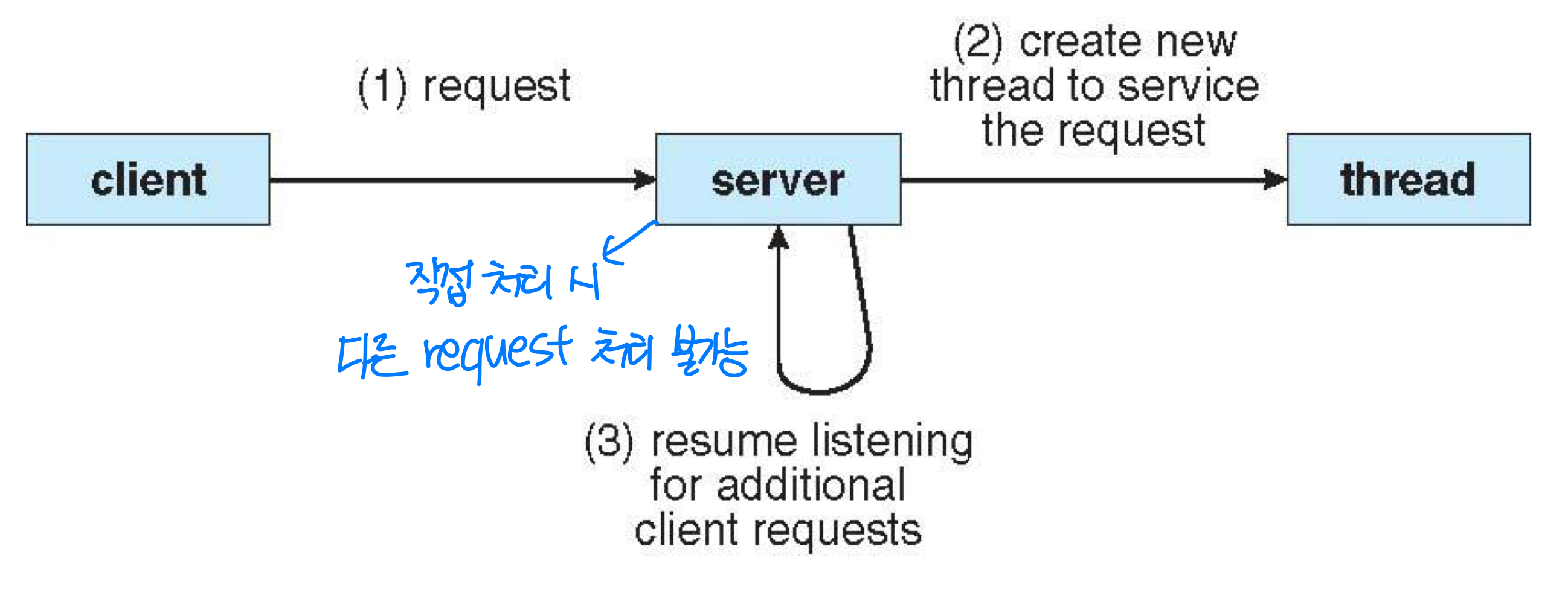

- Process creation is expensive in time and resource

Ex) Web server accepting thousands of requests

- Compared with a single-threaded process

- Scalability: utilization of multiprocessor architectures

- Responsiveness

Ex) A GUI thread keeps the interface responsive while other threads do long-running work

- Compared with multiple processes

- Resource sharing

- Threads share data simply through global variables; if a lot of sharing is needed, use threads

- Economy

- Creating a process is about 30 times slower than creating a thread (a figure commonly cited for Solaris)
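A minimal sketch of the resource-sharing point, assuming a POSIX system with Pthreads (compile with `-pthread`): a thread's write to a global variable is seen by the whole process, while a child created with `fork()` only changes its own copy. The function names are hypothetical.

```c
#include <assert.h>
#include <pthread.h>
#include <sys/wait.h>
#include <unistd.h>

static int shared_counter = 0;   /* global: one copy shared by all threads */

static void *thread_body(void *arg) {
    (void)arg;
    shared_counter += 10;        /* updates the copy the whole process sees */
    return NULL;
}

/* Value the caller sees after another THREAD updated the global. */
static int update_in_thread(void) {
    pthread_t t;
    shared_counter = 0;
    if (pthread_create(&t, NULL, thread_body, NULL) != 0)
        return -1;
    pthread_join(t, NULL);       /* join also makes the thread's write visible */
    return shared_counter;
}

/* Value the caller sees after a child PROCESS updated the global. */
static int update_in_child_process(void) {
    shared_counter = 0;
    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {              /* child works on its own copy of memory */
        shared_counter += 10;
        _exit(0);
    }
    waitpid(pid, NULL, 0);
    return shared_counter;       /* parent's copy is unchanged */
}
```

With threads, no extra mechanism is needed to share the counter; with processes, the same effect would require IPC such as shared memory or pipes.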

Multicore Programming

- Multicore or multiprocessor systems put pressure on programmers; challenges include:

- Dividing activities (splitting the work into separate tasks)

- Balance (the tasks should do roughly equal amounts of work)

- Data splitting

- Data dependency

- Testing and debugging: debugging is difficult and complex → How? Write logs to a file (print to a file)

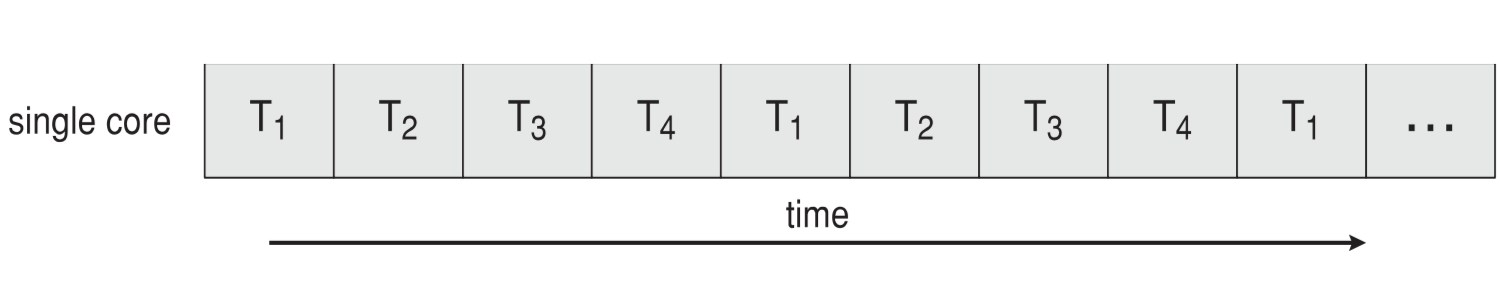

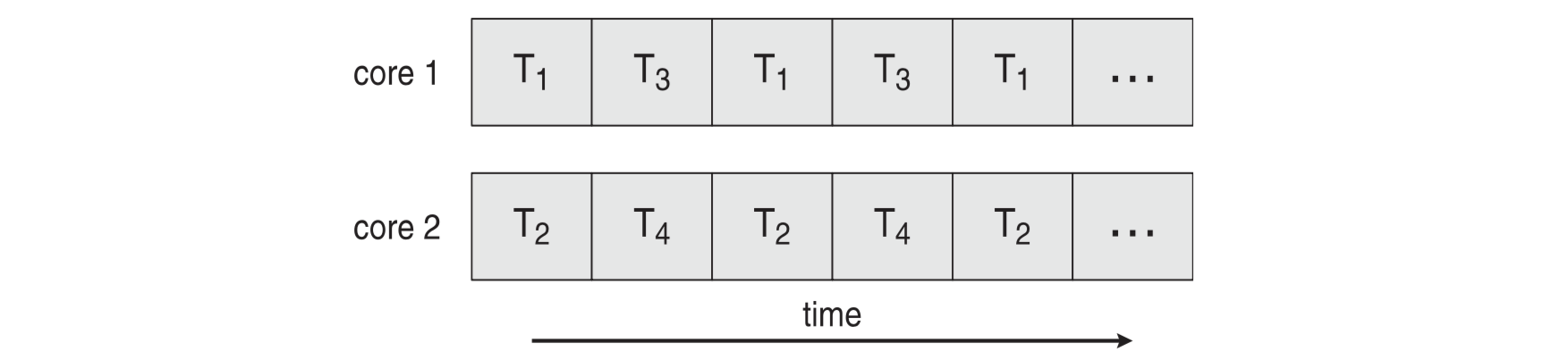

Concurrency vs. Parallelism

- Concurrency supports more than one task making progress

- On a single processor/core, the scheduler provides concurrency (time sharing, multitasking)

Ex) Concurrent execution on a single-core system:

- Parallelism implies a system can perform more than one task simultaneously

Ex) Parallelism on a multi-core system:

Multicore Programming (Cont.)

- Types of parallelism

- Data parallelism – distributes subsets of the same data across multiple cores, same operation on each thread

→ Ex) 4 TAs grading 100 students (25 students each)

- Task parallelism – distributing threads across cores, each thread performing unique operation

→ Parallel processing is fastest when the tasks do not depend on each other

- As the number of threads grows, so does architectural support for threading

- CPUs have cores as well as hardware threads

- Consider the Oracle SPARC T4, with 8 cores and 8 hardware threads per core (64 hardware threads in total)
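The two types of parallelism can be sketched with Pthreads (compile with `-pthread`). `parallel_sum()` follows the TA analogy: 4 threads run the same summing code on 25 scores each (data parallelism). `task_parallel_stats()` runs two different operations, a sum and a maximum, in two threads (task parallelism). All names and sizes are illustrative assumptions.

```c
#include <assert.h>
#include <pthread.h>

#define N_STUDENTS 100
#define N_GRADERS 4          /* 4 "TAs"; assumes N_STUDENTS divides evenly */

struct chunk {
    const int *scores;
    int begin, end;          /* half-open range [begin, end) */
    long sum;                /* written by the thread that owns this chunk */
};

/* Data parallelism: the SAME operation on a different slice of the data. */
static void *sum_chunk(void *arg) {
    struct chunk *c = arg;
    c->sum = 0;
    for (int i = c->begin; i < c->end; i++)
        c->sum += c->scores[i];
    return NULL;
}

static long parallel_sum(const int scores[N_STUDENTS]) {
    pthread_t tid[N_GRADERS];
    struct chunk ch[N_GRADERS];
    int per = N_STUDENTS / N_GRADERS;
    long total = 0;
    for (int t = 0; t < N_GRADERS; t++) {
        ch[t] = (struct chunk){ scores, t * per, (t + 1) * per, 0 };
        pthread_create(&tid[t], NULL, sum_chunk, &ch[t]);
    }
    for (int t = 0; t < N_GRADERS; t++) {
        pthread_join(tid[t], NULL);
        total += ch[t].sum;  /* combine the partial results */
    }
    return total;
}

/* Task parallelism: DIFFERENT operations, here on the same data. */
struct stats { const int *scores; int n; long sum; int max; };

static void *task_sum(void *arg) {
    struct stats *s = arg;
    s->sum = 0;
    for (int i = 0; i < s->n; i++)
        s->sum += s->scores[i];
    return NULL;
}

static void *task_max(void *arg) {
    struct stats *s = arg;
    s->max = s->scores[0];
    for (int i = 1; i < s->n; i++)
        if (s->scores[i] > s->max)
            s->max = s->scores[i];
    return NULL;
}

static void task_parallel_stats(const int *scores, int n, long *sum, int *max) {
    struct stats s = { scores, n, 0, 0 };
    pthread_t a, b;
    pthread_create(&a, NULL, task_sum, &s);  /* each thread writes its own field */
    pthread_create(&b, NULL, task_max, &s);
    pthread_join(a, NULL);
    pthread_join(b, NULL);
    *sum = s.sum;
    *max = s.max;
}
```

Because the 4 chunks are independent, the data-parallel version needs no locking; only the final combination step runs serially.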

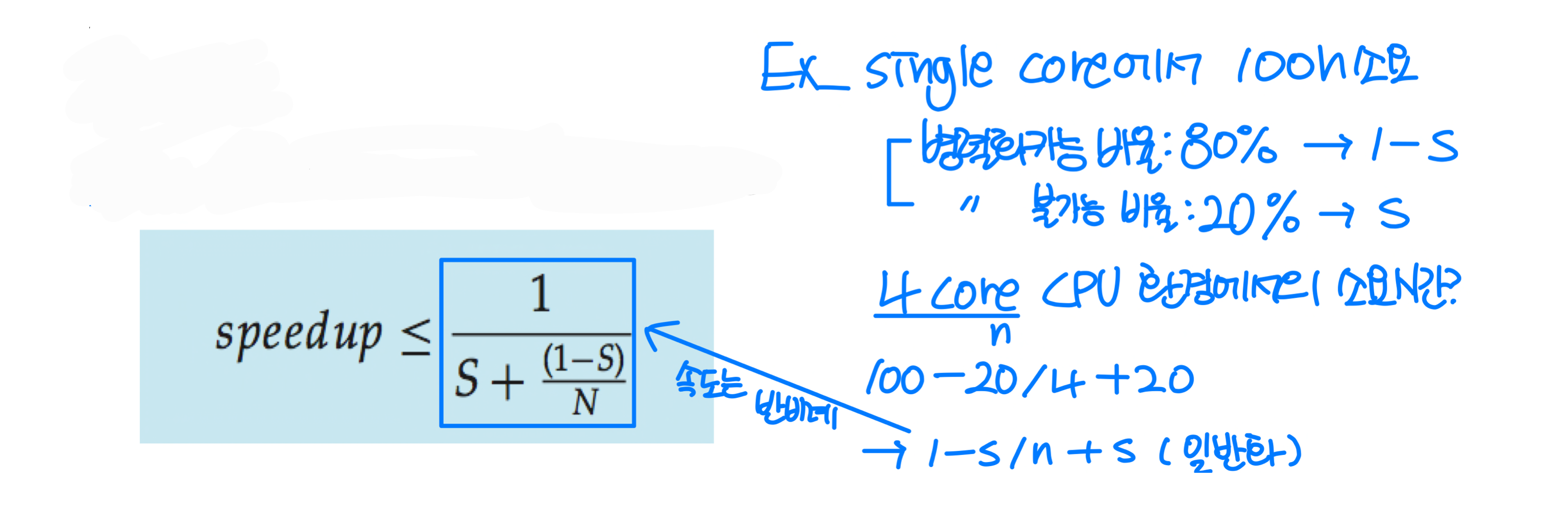

Amdahl’s Law

- S is the serial portion (fraction) of the application, and N is the number of processing cores

Ex) Application is 75% parallel / 25% serial, moving from 1 to 2 cores results in speedup of 1.6 times

- As N approaches infinity, speedup approaches 1 / S

- The serial portion of an application has a disproportionate effect on the performance gained by adding more cores
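A small helper that evaluates Amdahl's law, speedup ≤ 1 / (S + (1 - S) / N), can be used to check the numbers above: with S = 0.25 and N = 2 it gives 1 / 0.625 = 1.6, and for very large N it approaches 1 / S = 4. The function name is hypothetical.

```c
#include <assert.h>

/* Amdahl's law: upper bound on speedup for serial fraction s (0..1)
   on n cores, speedup <= 1 / (s + (1 - s) / n). */
static double amdahl_speedup(double s, int n) {
    assert(s >= 0.0 && s <= 1.0 && n >= 1);
    return 1.0 / (s + (1.0 - s) / n);
}
```

Evaluating it for growing n shows the curve flattening toward 1 / S: the parallel term (1 - S) / N shrinks, but the serial term S never does.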

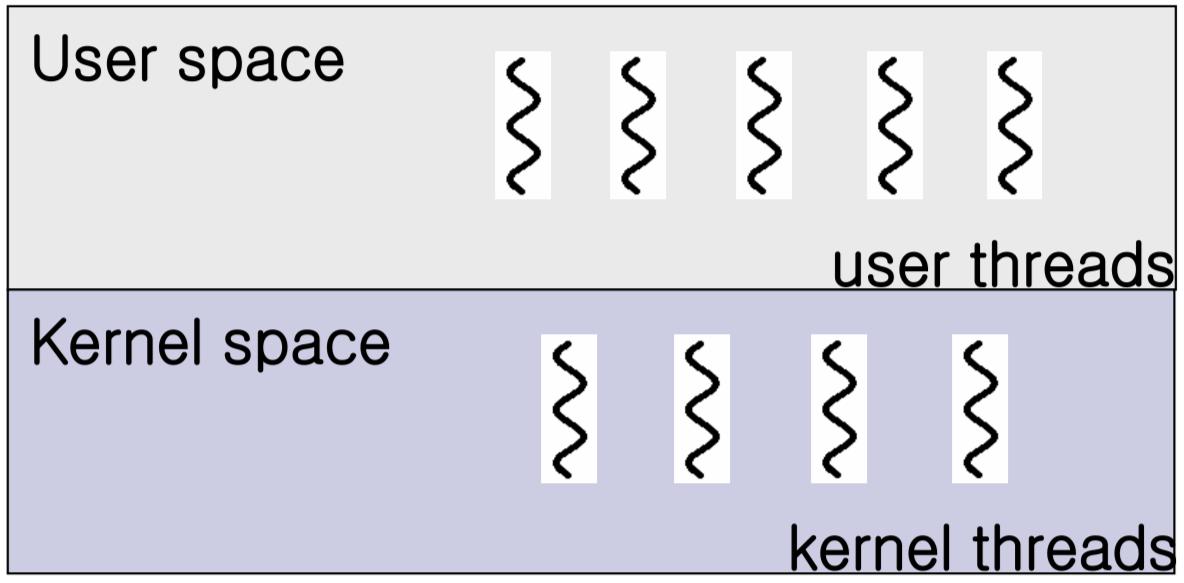

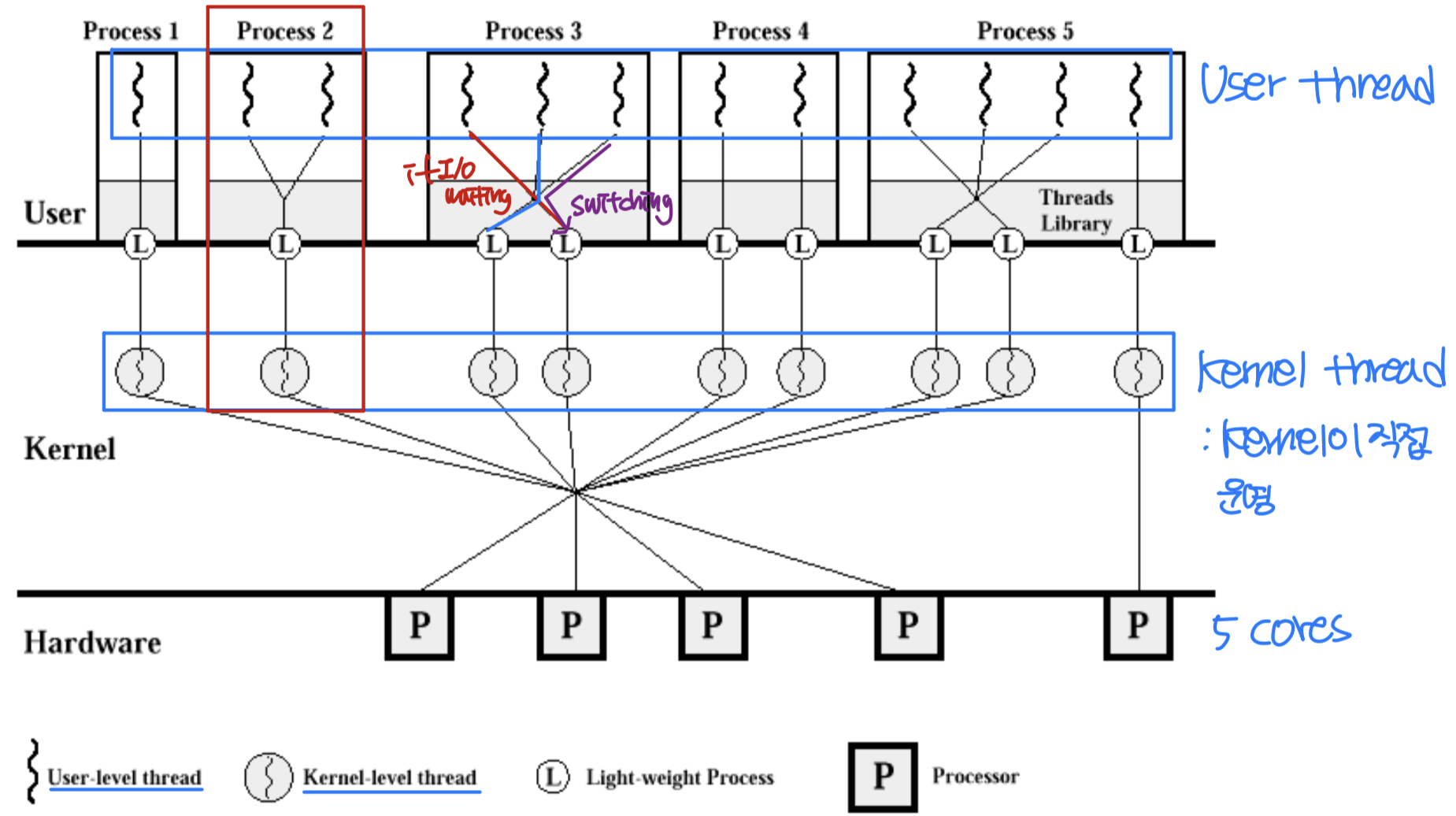

🖥️ Multithreading models

- User thread: a thread supported by a thread library at user level

- Created by library function calls (not system calls)

- The kernel is not involved in managing user threads

- Switching between user threads is faster than switching between kernel threads (user threads are also lighter)

- Kernel thread: a thread supported by the kernel

- Created and managed by the kernel

- Scheduled by the kernel

- Cheaper than a process (cost: process > kernel thread > user thread)

- More expensive than a user thread

- Major issue: the correspondence between user threads and kernel threads

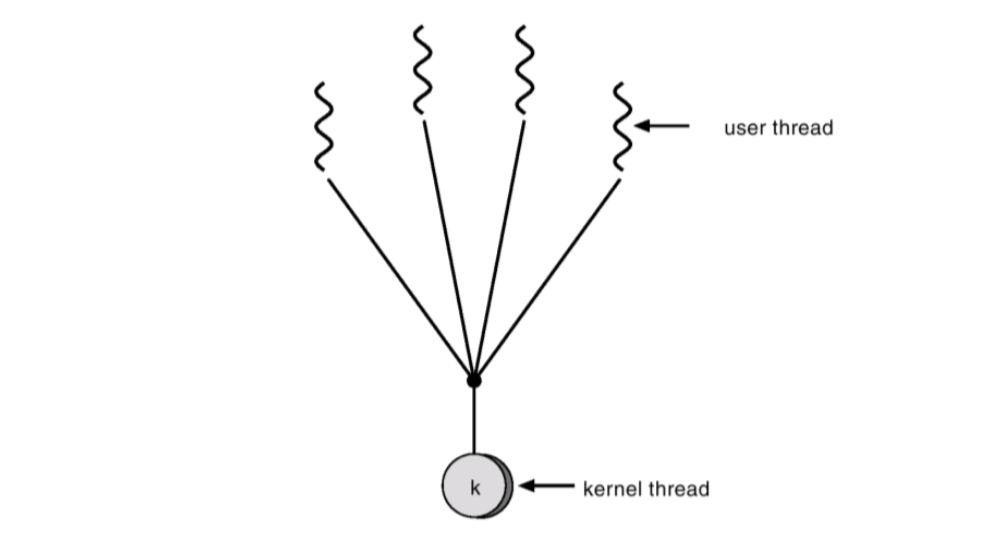

Many-to-one model

- Many user threads are mapped to single kernel thread

- Threads are managed by user-level thread library

- Ex) Green threads, GNU Portable Threads
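To illustrate the many-to-one idea, here is a toy sketch using the POSIX `ucontext` functions (`getcontext`, `makecontext`, `swapcontext`; marked obsolescent in POSIX but still provided by glibc on Linux). Two "user threads" take turns on the single kernel thread that calls `run_user_threads()`: each switch is an ordinary library call, so the kernel never sees more than one thread. This is an illustration under those assumptions, not how Green threads or GNU Portable Threads are implemented; it also hints at the model's weakness, since a blocking system call in either function would stall both.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <string.h>
#include <ucontext.h>

#define STACK_SIZE (64 * 1024)

static ucontext_t main_ctx, ctx_a, ctx_b;
static char stack_a[STACK_SIZE], stack_b[STACK_SIZE];  /* each user thread has its own stack */
static char trace[16];                                  /* order in which the user threads ran */
static int trace_len = 0;

/* User thread A: do one step, then yield to B (a pure user-space switch). */
static void thread_a(void) {
    for (int i = 0; i < 3; i++) {
        trace[trace_len++] = 'A';
        swapcontext(&ctx_a, &ctx_b);
    }
}   /* returning resumes ctx_a.uc_link, i.e. main_ctx */

/* User thread B: do one step, then yield back to A. */
static void thread_b(void) {
    for (int i = 0; i < 3; i++) {
        trace[trace_len++] = 'B';
        swapcontext(&ctx_b, &ctx_a);
    }
}

/* Prepare a context that runs fn on its own stack and returns to main_ctx. */
static void make_user_thread(ucontext_t *ctx, char *stack, size_t size, void (*fn)(void)) {
    getcontext(ctx);
    ctx->uc_stack.ss_sp = stack;
    ctx->uc_stack.ss_size = size;
    ctx->uc_link = &main_ctx;
    makecontext(ctx, fn, 0);
}

/* Run both user threads on the calling kernel thread; returns the trace. */
static const char *run_user_threads(void) {
    memset(trace, 0, sizeof trace);
    trace_len = 0;
    make_user_thread(&ctx_a, stack_a, sizeof stack_a, thread_a);
    make_user_thread(&ctx_b, stack_b, sizeof stack_b, thread_b);
    swapcontext(&main_ctx, &ctx_a);   /* start A; control comes back when A returns */
    return trace;
}
```

The resulting trace is "ABABAB". B is left suspended after its last switch; a real user-level library would keep a run queue and clean up finished threads.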

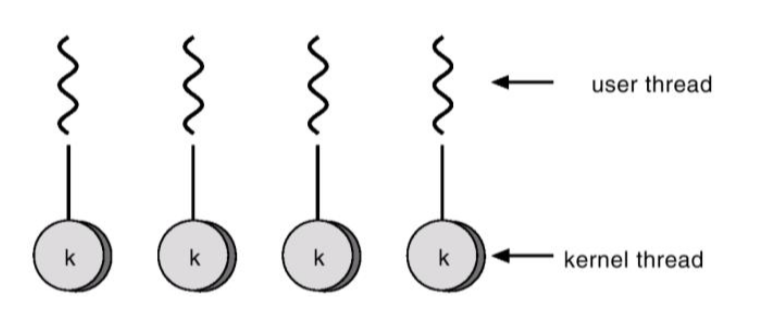

One-to-one model

- Each user thread is mapped to a kernel thread

- Provides more concurrency

- Problem: overhead (every user thread requires creating a kernel thread)

- Threads are managed by kernel

- Ex) Linux, Windows, Solaris
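On Linux, the one-to-one mapping can be observed directly: every Pthread gets its own kernel thread id. The sketch below is Linux-specific (it uses `syscall(SYS_gettid)`) and the helper names are hypothetical; a barrier keeps all threads alive at the same time so their ids cannot be reused. Compile with `-pthread`.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <pthread.h>
#include <sys/syscall.h>
#include <sys/types.h>
#include <unistd.h>

#define N_THREADS 3

static pid_t tids[N_THREADS];
static pthread_barrier_t all_alive;

/* Linux-specific: id of the kernel thread running the caller. */
static pid_t kernel_tid(void) {
    return (pid_t)syscall(SYS_gettid);
}

static void *record_tid(void *arg) {
    int idx = *(int *)arg;
    tids[idx] = kernel_tid();
    pthread_barrier_wait(&all_alive);   /* stay alive until all ids are recorded */
    return NULL;
}

/* Returns 1 if each pthread ran on its own kernel thread, distinct from the caller's. */
static int each_pthread_has_own_kernel_thread(void) {
    pthread_t th[N_THREADS];
    int idx[N_THREADS];
    pid_t self = kernel_tid();

    pthread_barrier_init(&all_alive, NULL, N_THREADS);
    for (int i = 0; i < N_THREADS; i++) {
        idx[i] = i;
        pthread_create(&th[i], NULL, record_tid, &idx[i]);
    }
    for (int i = 0; i < N_THREADS; i++)
        pthread_join(th[i], NULL);
    pthread_barrier_destroy(&all_alive);

    for (int i = 0; i < N_THREADS; i++) {
        if (tids[i] == self)
            return 0;
        for (int j = i + 1; j < N_THREADS; j++)
            if (tids[i] == tids[j])
                return 0;
    }
    return 1;
}
```

In the main thread, the kernel thread id equals the process id returned by `getpid()`; the other threads get new ids from the kernel.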

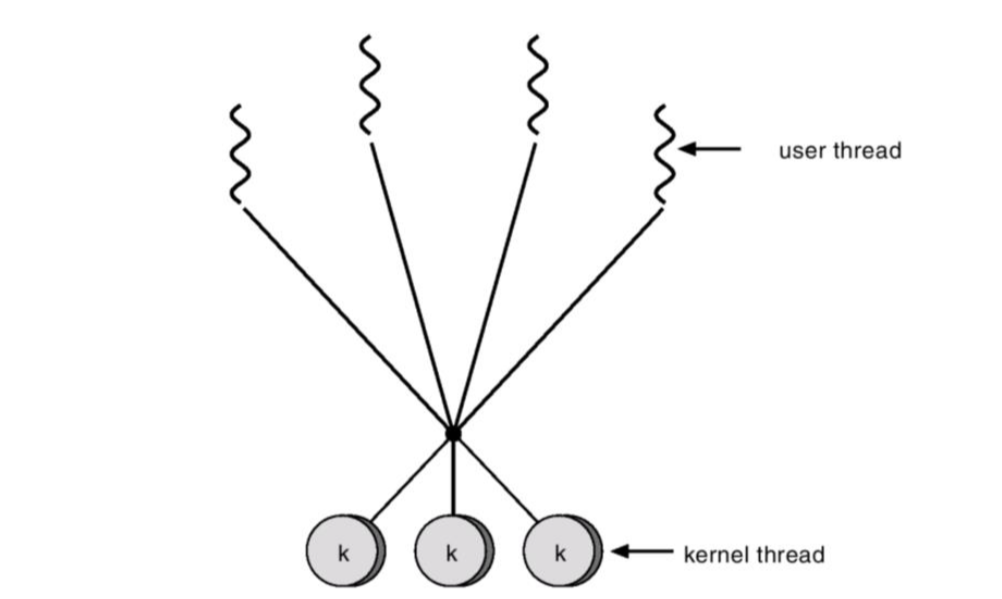

Many-to-Many model

- Multiplexes many user-level threads onto a smaller or equal number of kernel threads

- A compromise between the N:1 and 1:1 models

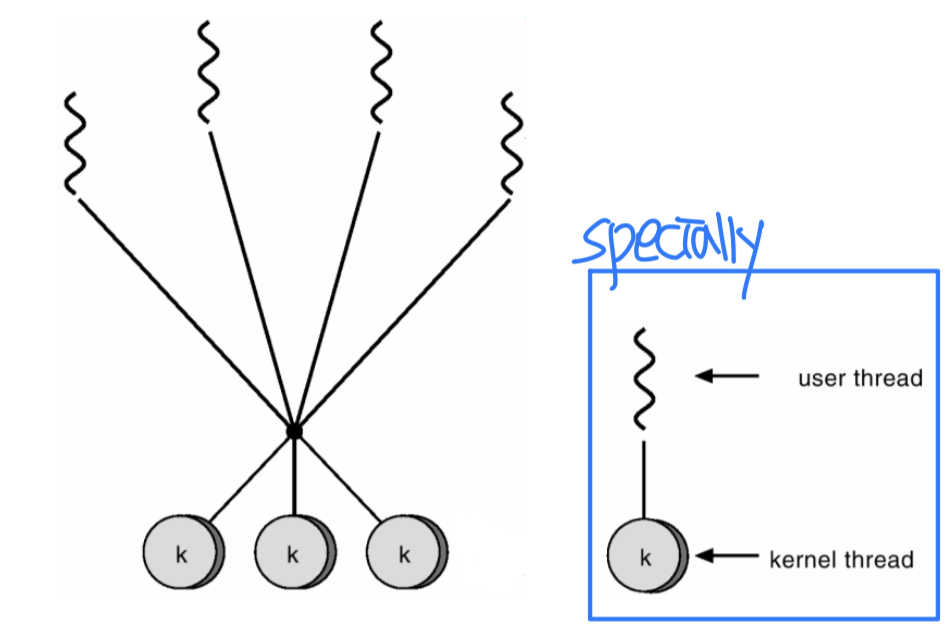

Two-level model

- A variation of the N:M model

- Basically an N:M model

- In addition, a user thread can be bound to a kernel thread

- Ex) IRIX, HP-UX, Tru64, Solaris (version 8 and earlier)

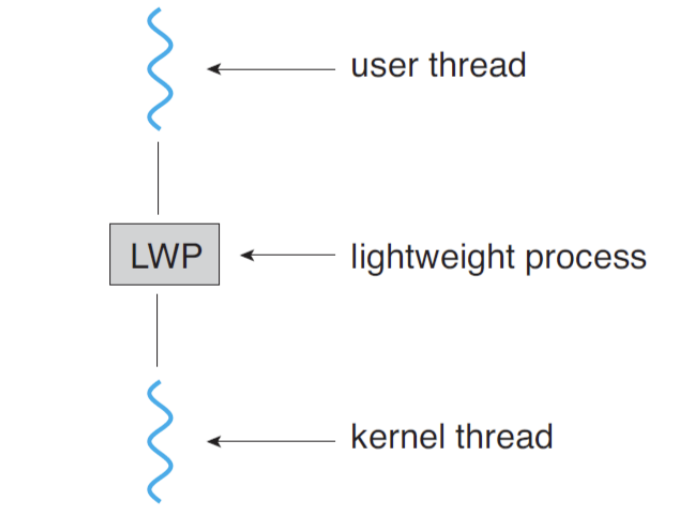

Scheduler Activation and LWP

- Communication between the kernel and the thread library in the many-to-many and two-level models

- Scheduler activation is one scheme for communication between the user thread library and the kernel

- In the many-to-many and two-level models, user threads are connected to kernel threads through LWPs

- Lightweight process (LWP) (think of it as a job description or work order)

- A data structure connecting a user thread to a kernel thread

- Basically, an LWP corresponds to a kernel thread, but there are some exceptions

- To the user-level thread library, an LWP appears to be a virtual processor

- Connection between user and kernel threads through LWPs

- The kernel provides a set of virtual processors (LWPs)

- The user-level thread library schedules user threads onto the virtual processors

- If a kernel thread blocks or unblocks, the kernel notifies the thread library (upcall)

- The upcall handler then reschedules user threads accordingly

- If a kernel thread is blocked, assign the LWP to another thread

- If a kernel thread is unblocked, assign an LWP to it

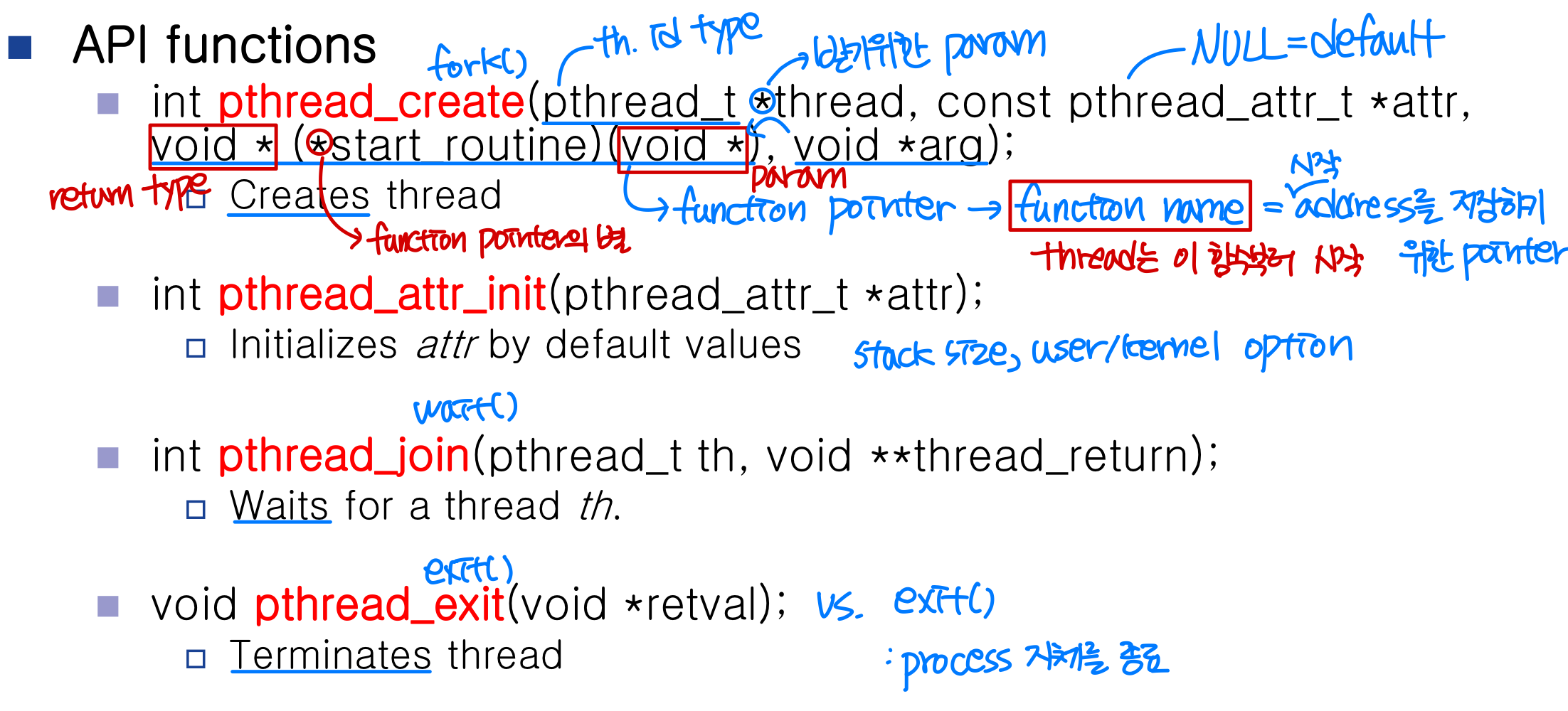

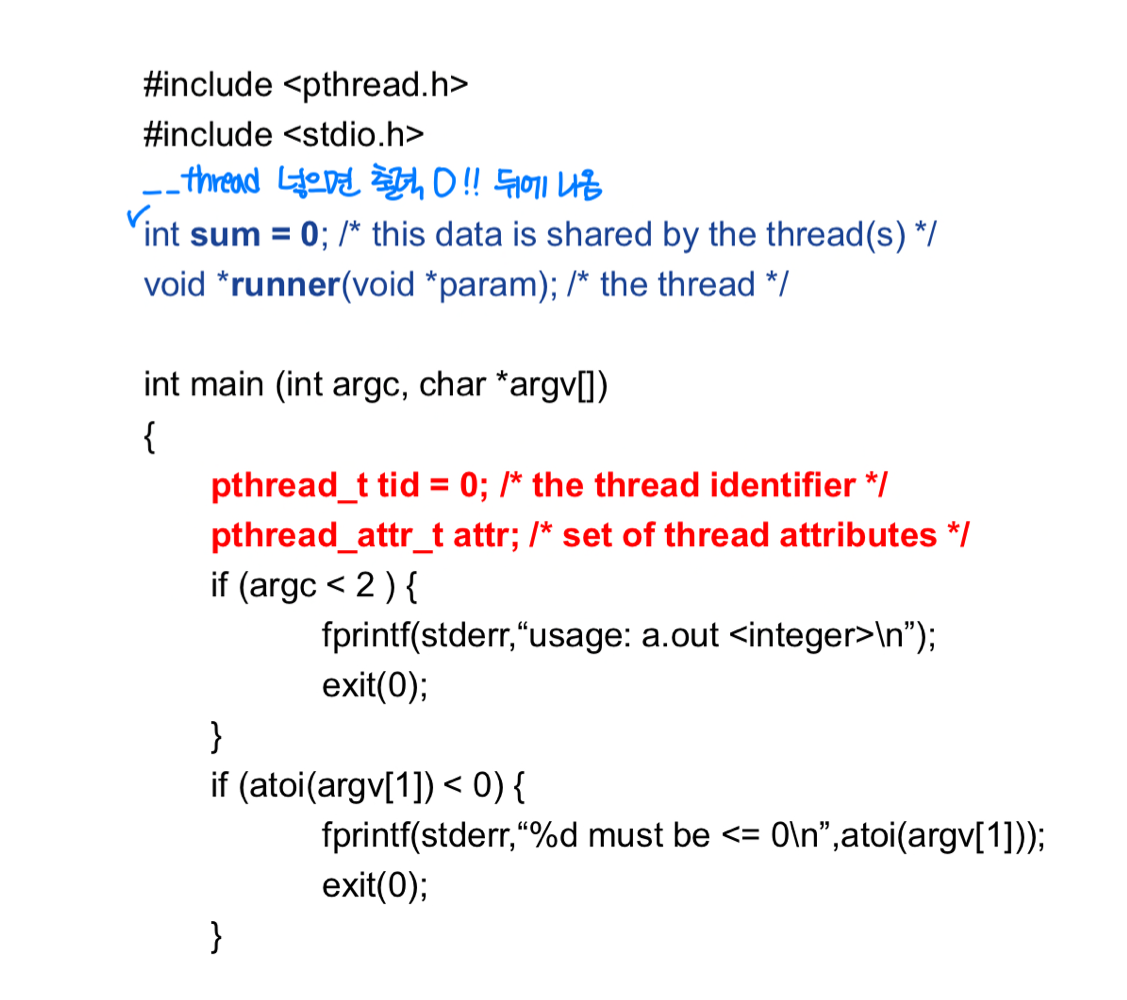

🖥️ Thread libraries

- Thread library: a set of APIs to create and manage threads

- User-level library

- Kernel-level library (threads are scheduled by the kernel's scheduler itself)

- Examples

- POSIX Pthreads

- old) LinuxThreads (this is the library's name)

- new) NPTL (Native POSIX Thread Library)

- GNU Portable Threads

- Open source Pthreads for Win32

- Win32 threads

- Java threads

- POSIX Pthreads

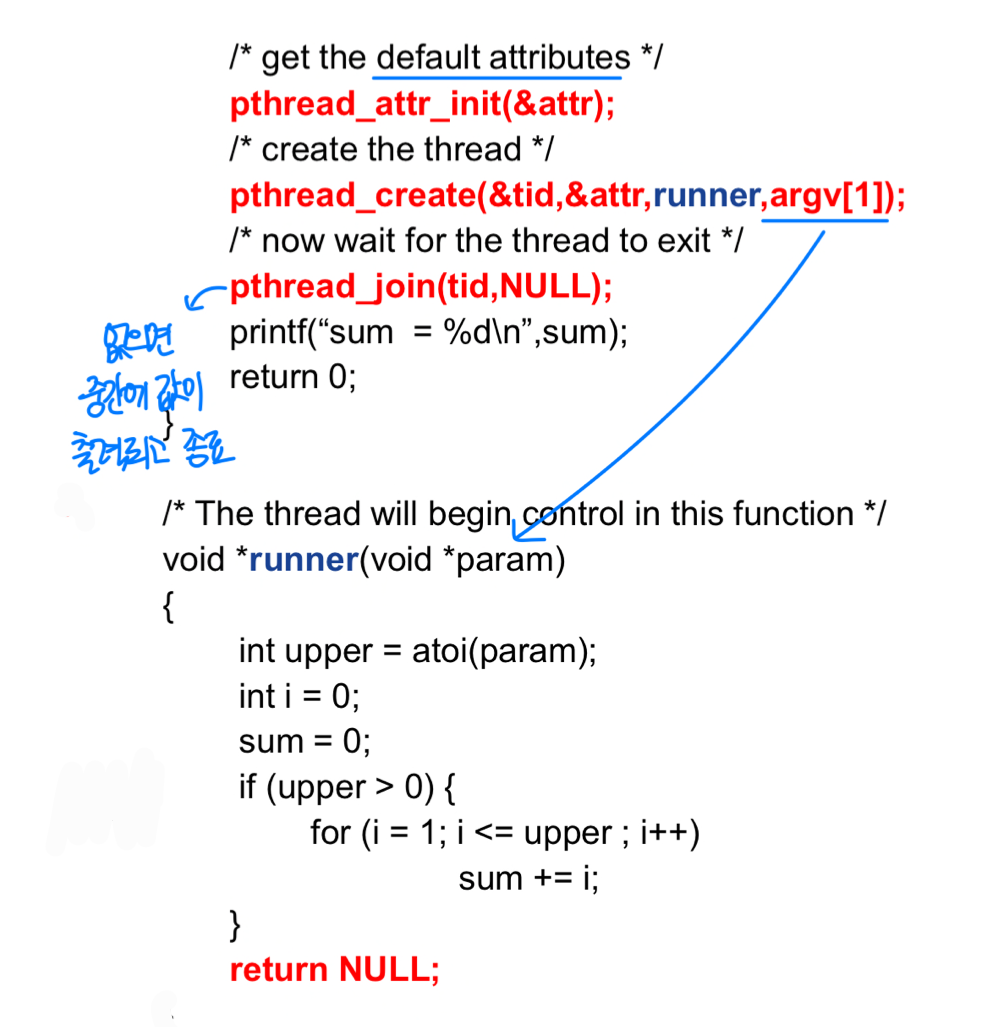

POSIX Pthreads

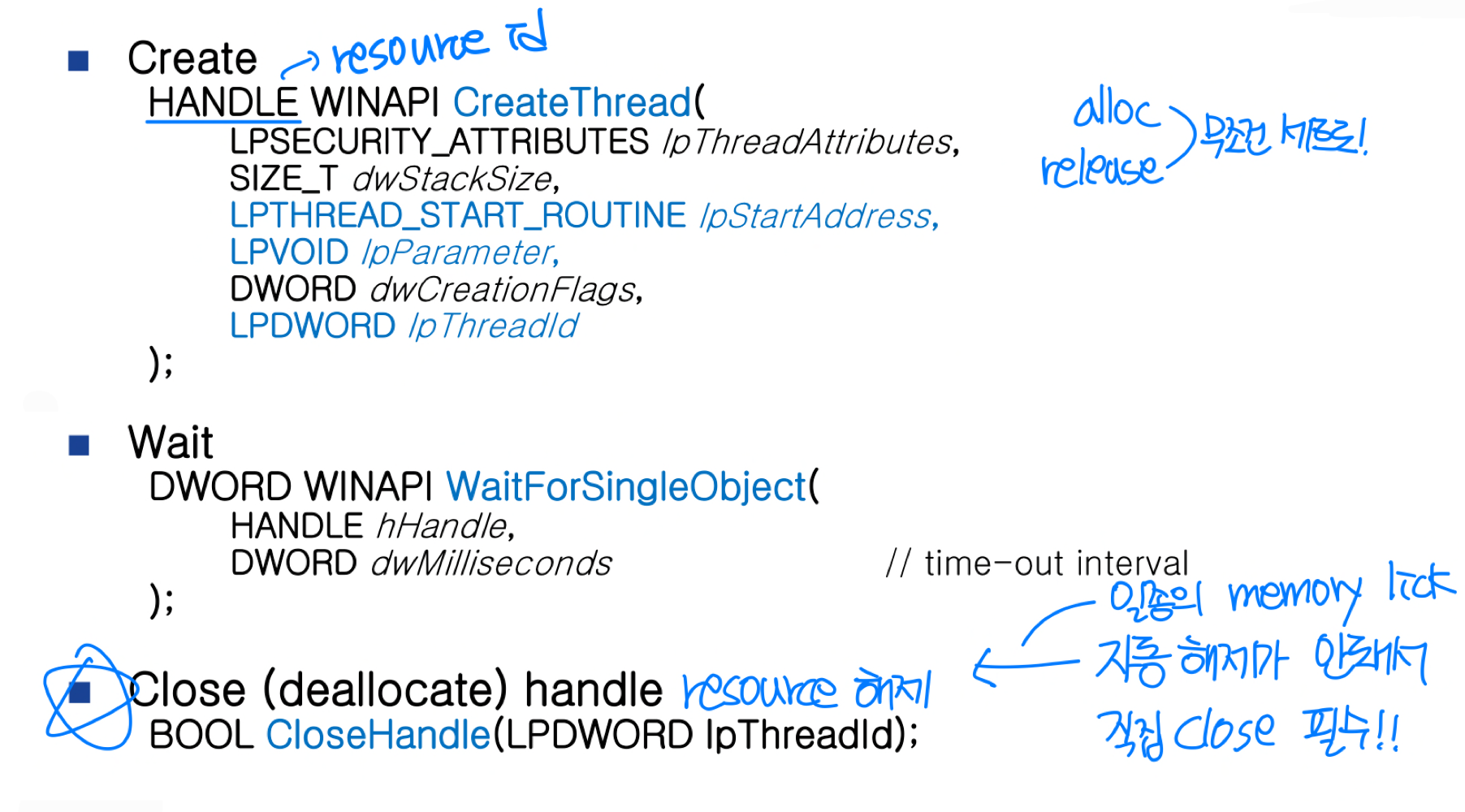

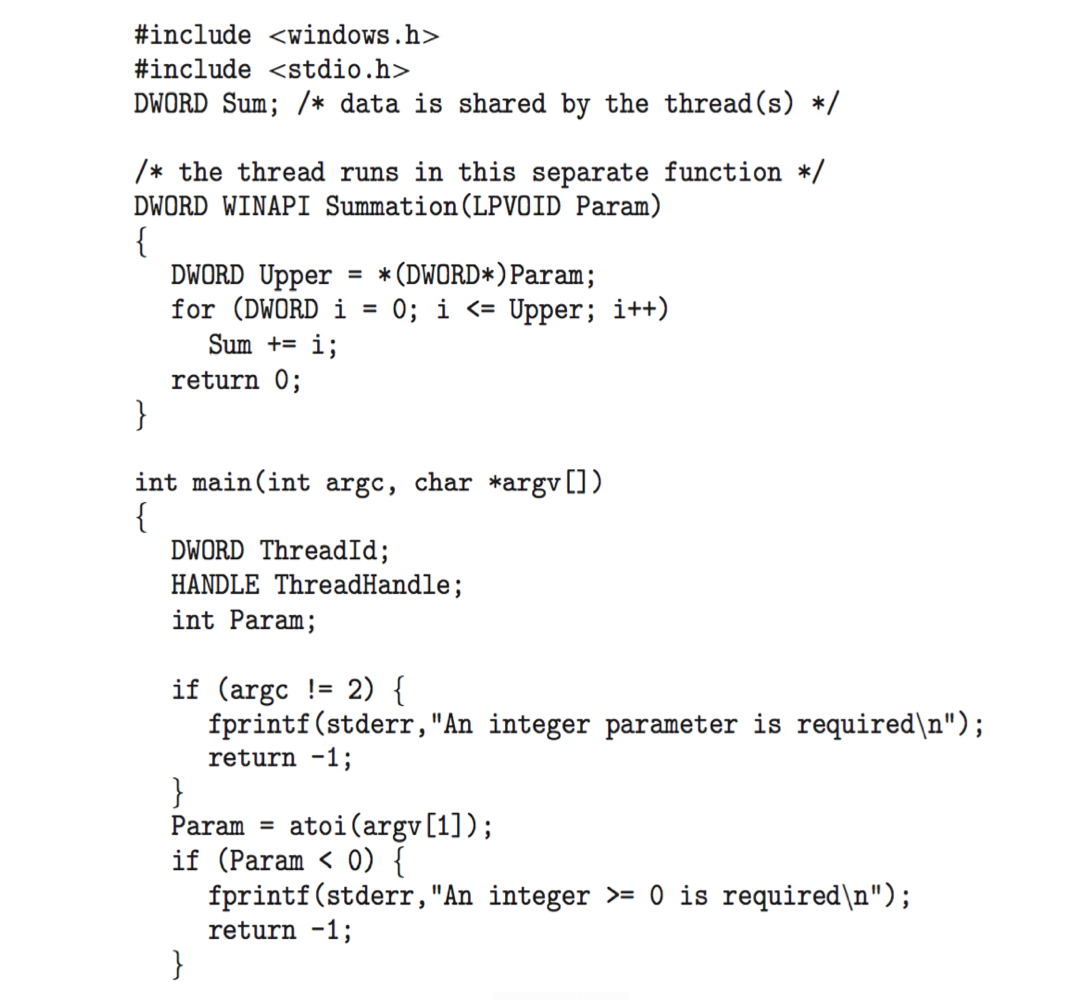

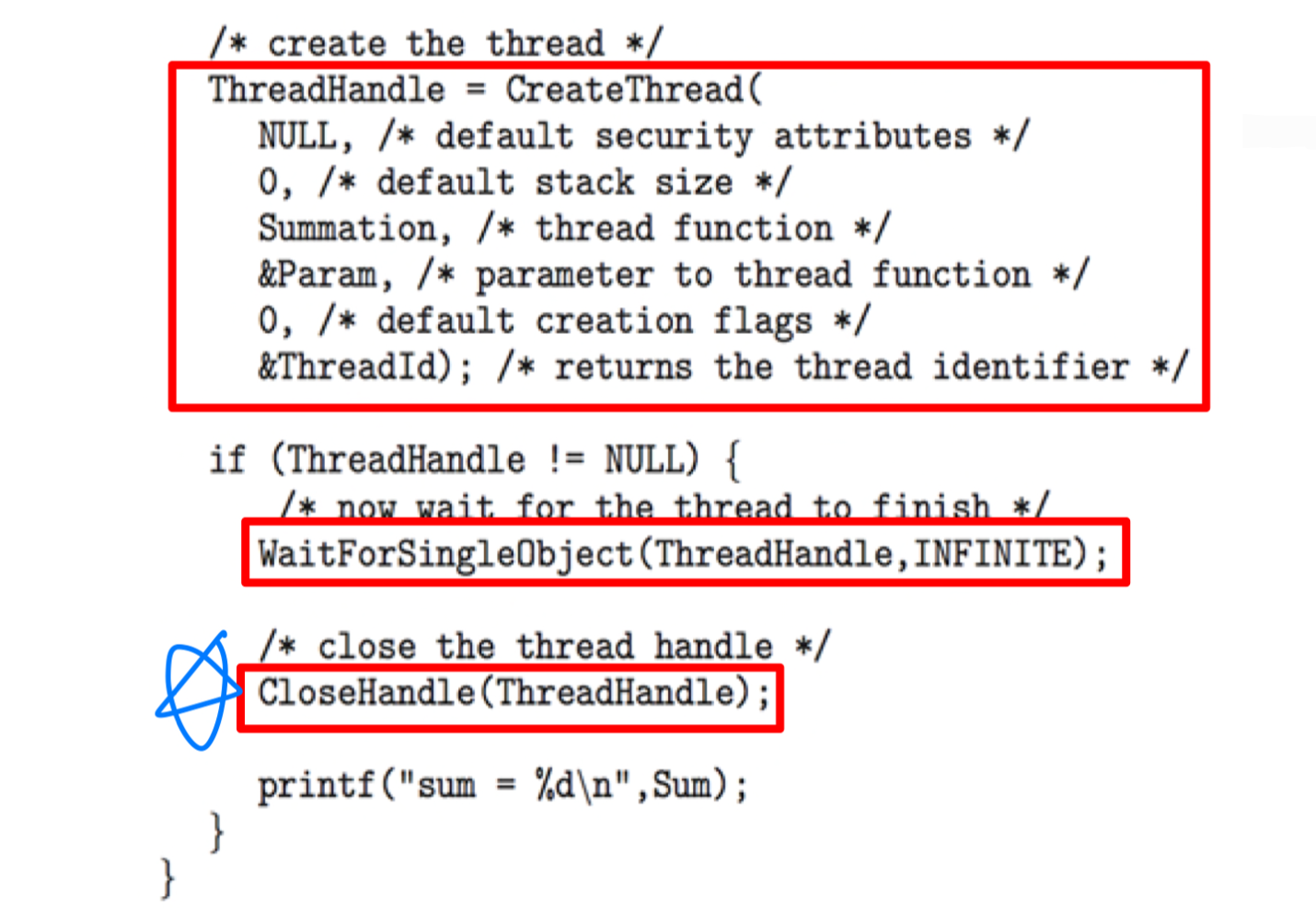
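The slides' Pthreads code survives only as images, so as a stand-in here is the common textbook pattern: create a thread with `pthread_create()`, pass it an argument, and wait for it with `pthread_join()`. This version returns the result through `pthread_join()` instead of a global variable; `runner()` and `sum_in_thread()` are illustrative names, and the pointer/integer casts assume the sum fits in an `intptr_t`. Compile with `-pthread`.

```c
#include <assert.h>
#include <pthread.h>
#include <stdint.h>

/* Thread function: sums 1..upper and returns the result via pthread_join. */
static void *runner(void *param) {
    long upper = (long)(intptr_t)param;
    long sum = 0;
    for (long i = 1; i <= upper; i++)
        sum += i;
    return (void *)(intptr_t)sum;
}

/* Creates one worker thread, waits for it, and returns its result (-1 on error). */
static long sum_in_thread(long upper) {
    pthread_t tid;
    pthread_attr_t attr;
    void *result;

    pthread_attr_init(&attr);                 /* default thread attributes */
    int rc = pthread_create(&tid, &attr, runner, (void *)(intptr_t)upper);
    pthread_attr_destroy(&attr);
    if (rc != 0)
        return -1;
    pthread_join(tid, &result);               /* block until the thread exits */
    return (long)(intptr_t)result;
}
```

For example, `sum_in_thread(10)` creates a thread that computes 1 + 2 + ... + 10 and returns 55 to the caller.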

Windows Threads

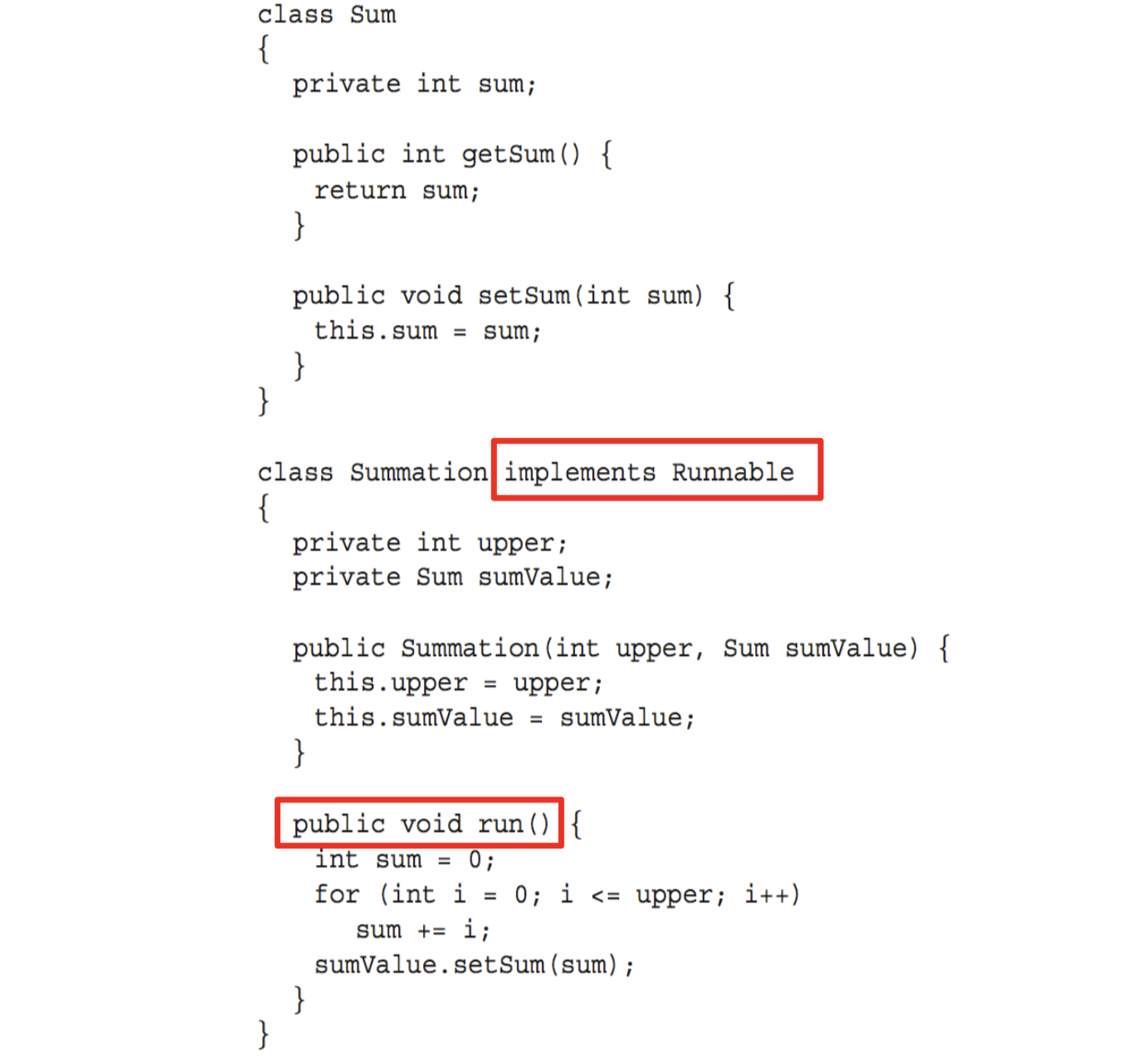

Java Threads

- Java threads are managed by the JVM

- Typically implemented using the thread model provided by the underlying OS

- Java threads may be created by

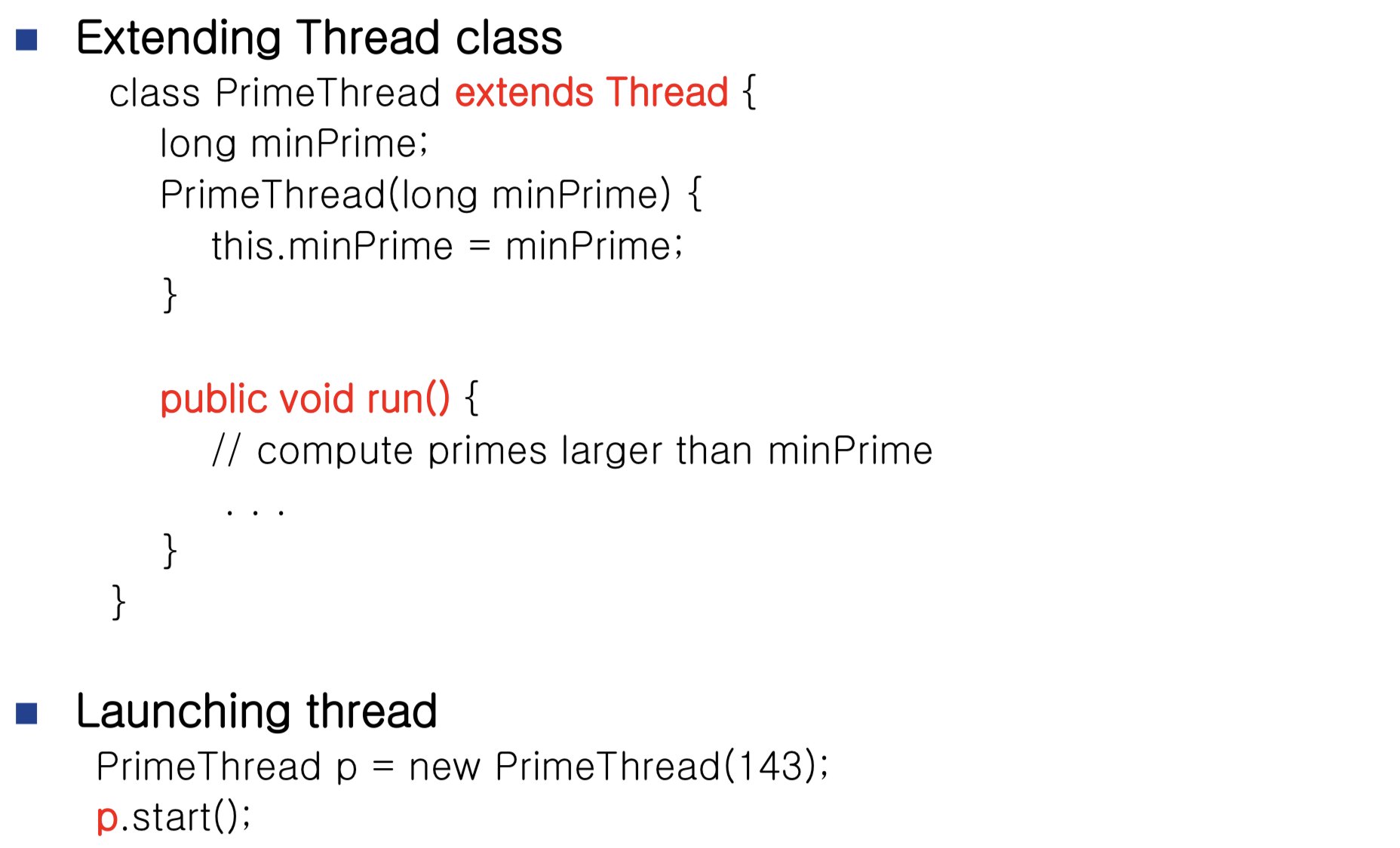

- Extending Thread class

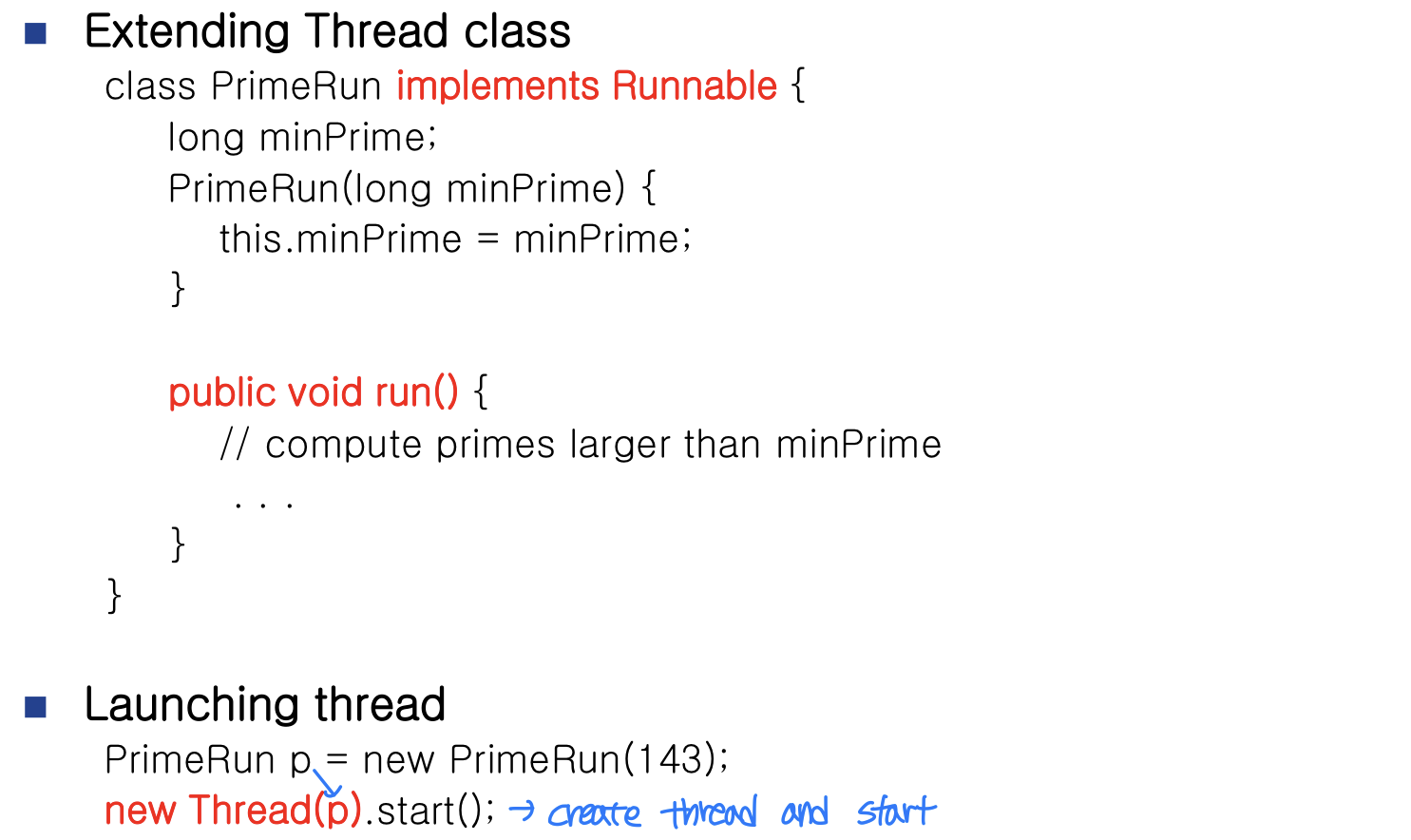

- Implementing the Runnable interface → a class can implement several interfaces (but can extend only one class)

Using Thread Class

Using Runnable Interface

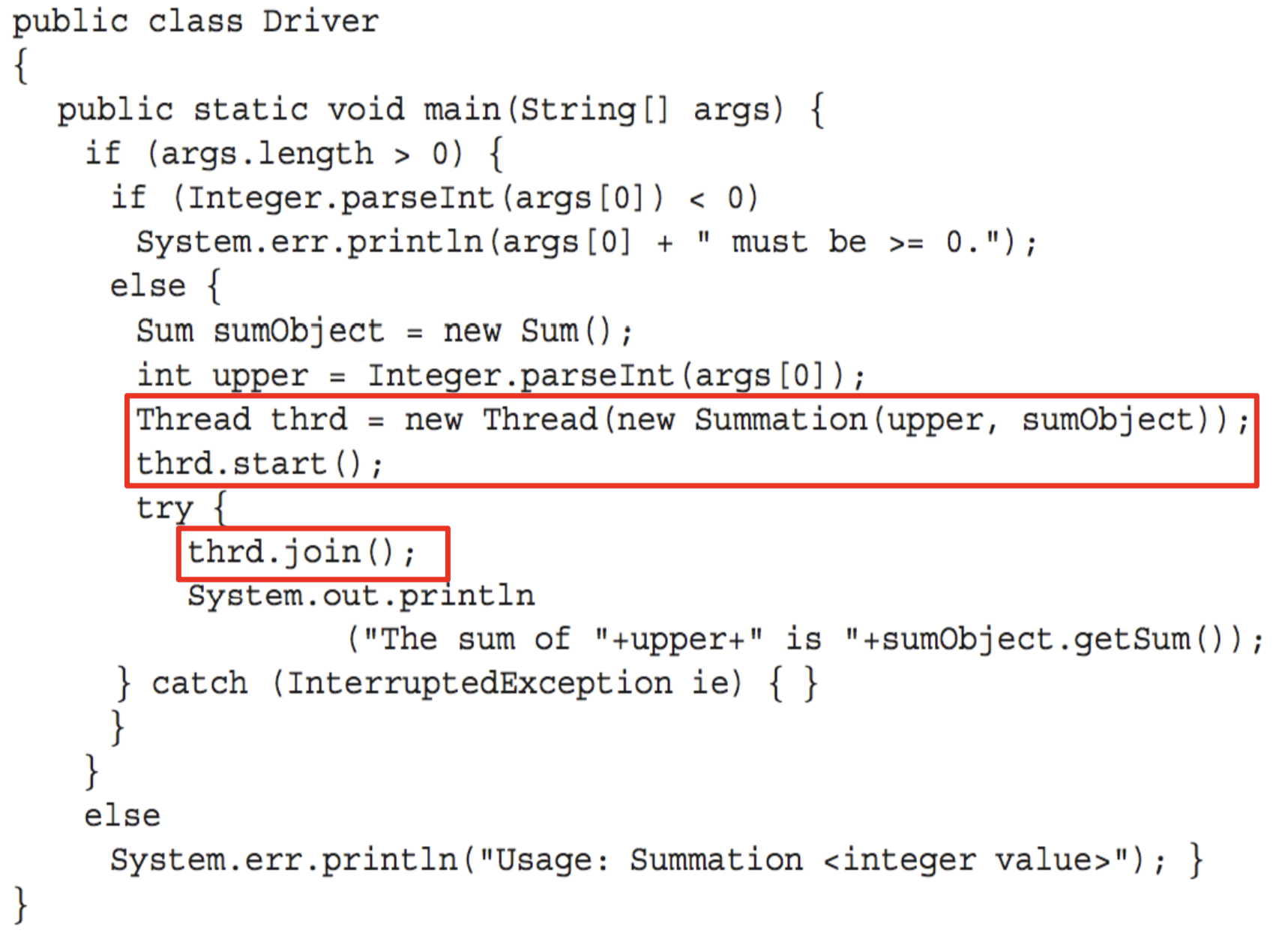

Example

Implicit Threading

- Creation and management of threads done by compilers and run-time libraries rather than programmers

- Three methods

- Thread Pools

- OpenMP

- Grand Central Dispatch

- Other methods include Intel Threading Building Blocks (TBB) and the java.util.concurrent package

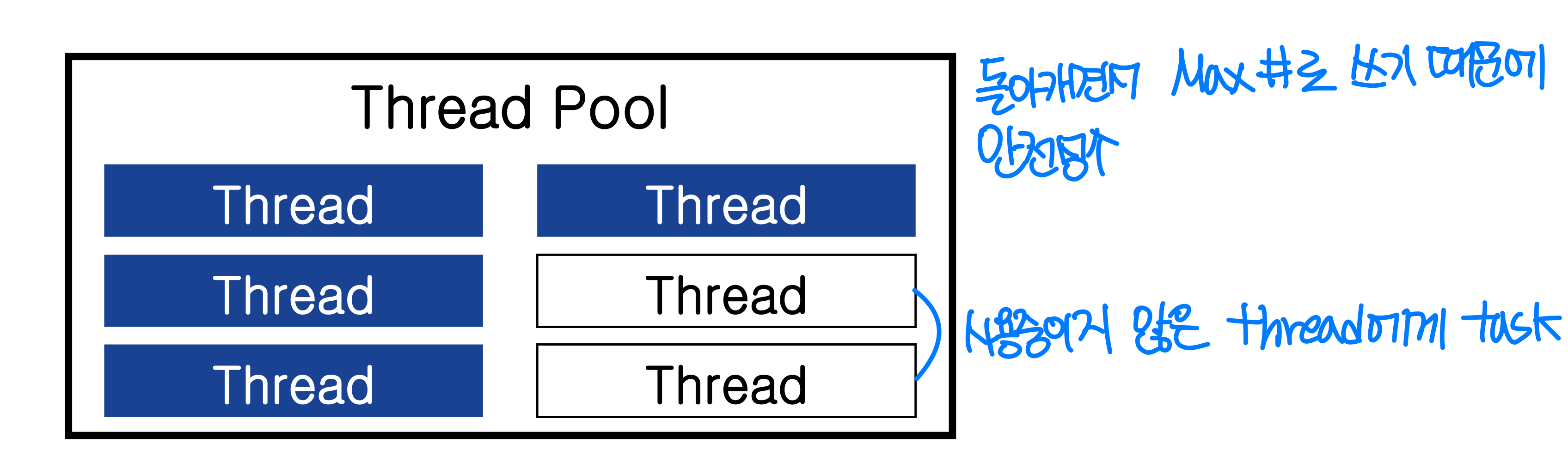

Thread Pools

- Create a number of threads in a pool where they await work

- Advantages

- Usually slightly faster to service a request with an existing thread than to create a new thread

- Allows the number of threads in the application(s) to be bound to the size of the pool

- Separating the task to be performed from the mechanics of creating the task allows different strategies for running it

Ex) Tasks could be scheduled to run periodically
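A minimal fixed-size thread pool, sketched with Pthreads under stated assumptions: a mutex-protected FIFO queue, workers that sleep on a condition variable until work arrives, and a shutdown that drains the queue before joining. `struct pool`, `pool_init()`, `pool_submit()`, `pool_destroy()`, and the sample task `add_to_total()` are hypothetical names, not a library API; production pools (e.g., the executors in java.util.concurrent) add bounded queues, result handles, and error handling. Compile with `-pthread`.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

typedef void (*task_fn)(void *);

struct task { task_fn fn; void *arg; struct task *next; };

struct pool {
    pthread_mutex_t lock;
    pthread_cond_t has_work;    /* signaled when a task is queued or on shutdown */
    struct task *head, *tail;   /* FIFO queue of pending tasks */
    int shutting_down;
    int n_workers;
    pthread_t *workers;
};

/* Each worker waits in the pool, takes the next task, and runs it. */
static void *worker_loop(void *arg) {
    struct pool *p = arg;
    for (;;) {
        pthread_mutex_lock(&p->lock);
        while (p->head == NULL && !p->shutting_down)
            pthread_cond_wait(&p->has_work, &p->lock);
        if (p->head == NULL) {              /* shutting down, queue drained */
            pthread_mutex_unlock(&p->lock);
            return NULL;
        }
        struct task *t = p->head;
        p->head = t->next;
        if (p->head == NULL)
            p->tail = NULL;
        pthread_mutex_unlock(&p->lock);
        t->fn(t->arg);                      /* run the task without holding the lock */
        free(t);
    }
}

static int pool_init(struct pool *p, int n) {
    pthread_mutex_init(&p->lock, NULL);
    pthread_cond_init(&p->has_work, NULL);
    p->head = p->tail = NULL;
    p->shutting_down = 0;
    p->n_workers = 0;
    p->workers = malloc(sizeof(pthread_t) * (size_t)n);
    if (p->workers == NULL)
        return -1;
    for (int i = 0; i < n; i++) {
        if (pthread_create(&p->workers[i], NULL, worker_loop, p) != 0)
            return -1;                      /* pool_destroy() still joins started workers */
        p->n_workers++;
    }
    return 0;
}

static int pool_submit(struct pool *p, task_fn fn, void *arg) {
    struct task *t = malloc(sizeof *t);
    if (t == NULL)
        return -1;
    t->fn = fn;
    t->arg = arg;
    t->next = NULL;
    pthread_mutex_lock(&p->lock);
    if (p->tail) p->tail->next = t; else p->head = t;
    p->tail = t;
    pthread_cond_signal(&p->has_work);      /* wake one idle worker */
    pthread_mutex_unlock(&p->lock);
    return 0;
}

/* Let queued tasks finish, then stop and join all workers. */
static void pool_destroy(struct pool *p) {
    pthread_mutex_lock(&p->lock);
    p->shutting_down = 1;
    pthread_cond_broadcast(&p->has_work);
    pthread_mutex_unlock(&p->lock);
    for (int i = 0; i < p->n_workers; i++)
        pthread_join(p->workers[i], NULL);
    free(p->workers);
    pthread_mutex_destroy(&p->lock);
    pthread_cond_destroy(&p->has_work);
}

/* Sample task: adds *arg to a shared total (protected by its own mutex). */
static long total = 0;
static pthread_mutex_t total_lock = PTHREAD_MUTEX_INITIALIZER;
static void add_to_total(void *arg) {
    pthread_mutex_lock(&total_lock);
    total += *(const int *)arg;
    pthread_mutex_unlock(&total_lock);
}
```

Submitting 100 tasks to a 4-worker pool keeps the thread count at 4 no matter how many requests arrive, which is the "bound to the size of the pool" advantage above.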

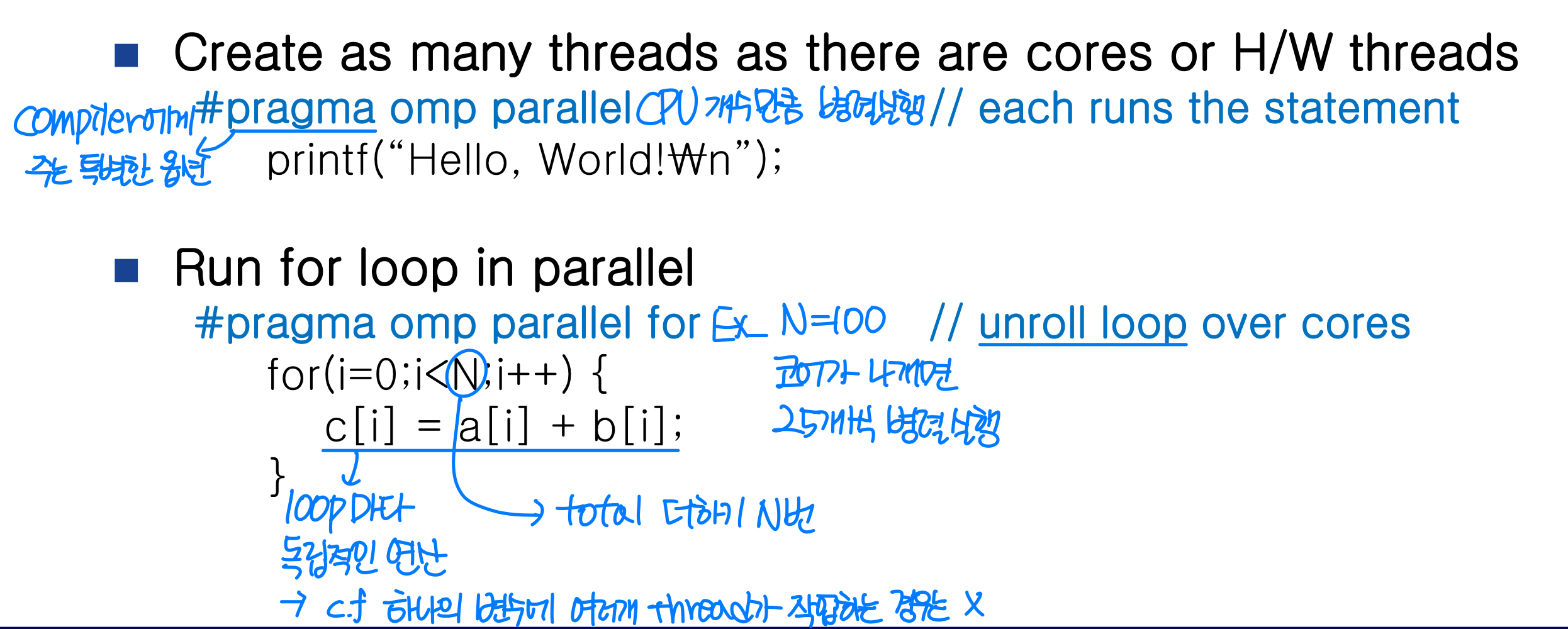

OpenMP

Compile with: gcc -fopenmp main.c

- Provides support for parallel programming in shared-memory environments

- Set of compiler directives and an API for C, C++, FORTRAN

- Identifies parallel regions – blocks of code that can run in parallel

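➡️ Loop parallelization: split a loop into smaller groups of iterations and run them simultaneously on different cores to improve execution performance (not to be confused with loop unrolling, which replicates the loop body within a single thread)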
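A sketch of loop parallelization with OpenMP, assuming GCC and the `-fopenmp` compile command above: `#pragma omp parallel for` divides the loop's iterations among a team of threads, and `reduction(+:sum)` gives each thread a private partial sum that is combined at the end. If the file is compiled without `-fopenmp`, the pragma is ignored and the same loop simply runs serially. The function names are illustrative.

```c
#include <assert.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Sum a[0..n) with the iterations divided among OpenMP threads. */
static long parallel_array_sum(const int *a, int n) {
    long sum = 0;
    #pragma omp parallel for reduction(+:sum)
    for (int i = 0; i < n; i++)
        sum += a[i];          /* each thread adds into its private copy of sum */
    return sum;               /* private copies have been combined here */
}

/* Threads OpenMP would use for a parallel region (1 without OpenMP). */
static int team_size(void) {
#ifdef _OPENMP
    return omp_get_max_threads();
#else
    return 1;
#endif
}
```

Without `reduction`, all threads would update the same `sum` concurrently, which is a data race.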

🖥️ Threading issues

fork() and exec()

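- fork() on a multithreaded process

- Duplicates all threads in the process?

- Duplicates only the calling thread?

→ Some UNIX systems support two versions of fork

- fork(): duplicates all threads

- fork1(): duplicates only the calling thread

Ex) A process with 3 threads: the duplicate-all fork() copies all 3 threads

- exec() on a multithreaded process

- Replaces the entire process, including all of its threads

→ If exec() is called immediately after fork(), duplicating only the calling thread is enough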
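On Linux (and POSIX systems in general), plain `fork()` behaves like the version that duplicates only the calling thread. The sketch below makes this visible; it is Linux-specific because it reads the `Threads:` line of `/proc/self/status`, and it assumes the caller has no other threads. The parent has 3 threads (itself plus 2 idle ones), and the child reports its own count as its exit status. Helper names are hypothetical; compile with `-pthread`.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <fcntl.h>
#include <pthread.h>
#include <stdlib.h>
#include <string.h>
#include <sys/wait.h>
#include <unistd.h>

/* Linux-specific: parse "Threads:" from /proc/self/status. Uses open/read
   instead of stdio so the forked child does not touch stdio locks. */
static int count_threads(void) {
    char buf[8192];
    int fd = open("/proc/self/status", O_RDONLY);
    if (fd < 0)
        return -1;
    ssize_t len = read(fd, buf, sizeof buf - 1);
    close(fd);
    if (len <= 0)
        return -1;
    buf[len] = '\0';
    char *p = strstr(buf, "Threads:");
    return p ? atoi(p + strlen("Threads:")) : -1;
}

static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t cond = PTHREAD_COND_INITIALIZER;
static int release_threads = 0;

/* Extra threads that simply wait until they are released. */
static void *idle_thread(void *arg) {
    (void)arg;
    pthread_mutex_lock(&lock);
    while (!release_threads)
        pthread_cond_wait(&cond, &lock);
    pthread_mutex_unlock(&lock);
    return NULL;
}

/* Reports how many threads the parent and the forked child have. */
static void fork_thread_counts(int *in_parent, int *in_child) {
    pthread_t t[2];
    int status = 0;

    release_threads = 0;
    for (int i = 0; i < 2; i++)
        pthread_create(&t[i], NULL, idle_thread, NULL);

    *in_parent = count_threads();      /* caller + 2 idle threads */
    pid_t pid = fork();
    if (pid == 0)
        _exit(count_threads());        /* child: only the thread that called fork() */
    if (pid > 0 && waitpid(pid, &status, 0) == pid && WIFEXITED(status))
        *in_child = WEXITSTATUS(status);
    else
        *in_child = -1;

    pthread_mutex_lock(&lock);         /* release and join the idle threads */
    release_threads = 1;
    pthread_cond_broadcast(&cond);
    pthread_mutex_unlock(&lock);
    for (int i = 0; i < 2; i++)
        pthread_join(t[i], NULL);
}
```

This is also why a child of a multithreaded process is usually expected to call exec() soon after fork(): the other threads, and any locks they held, do not come along.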

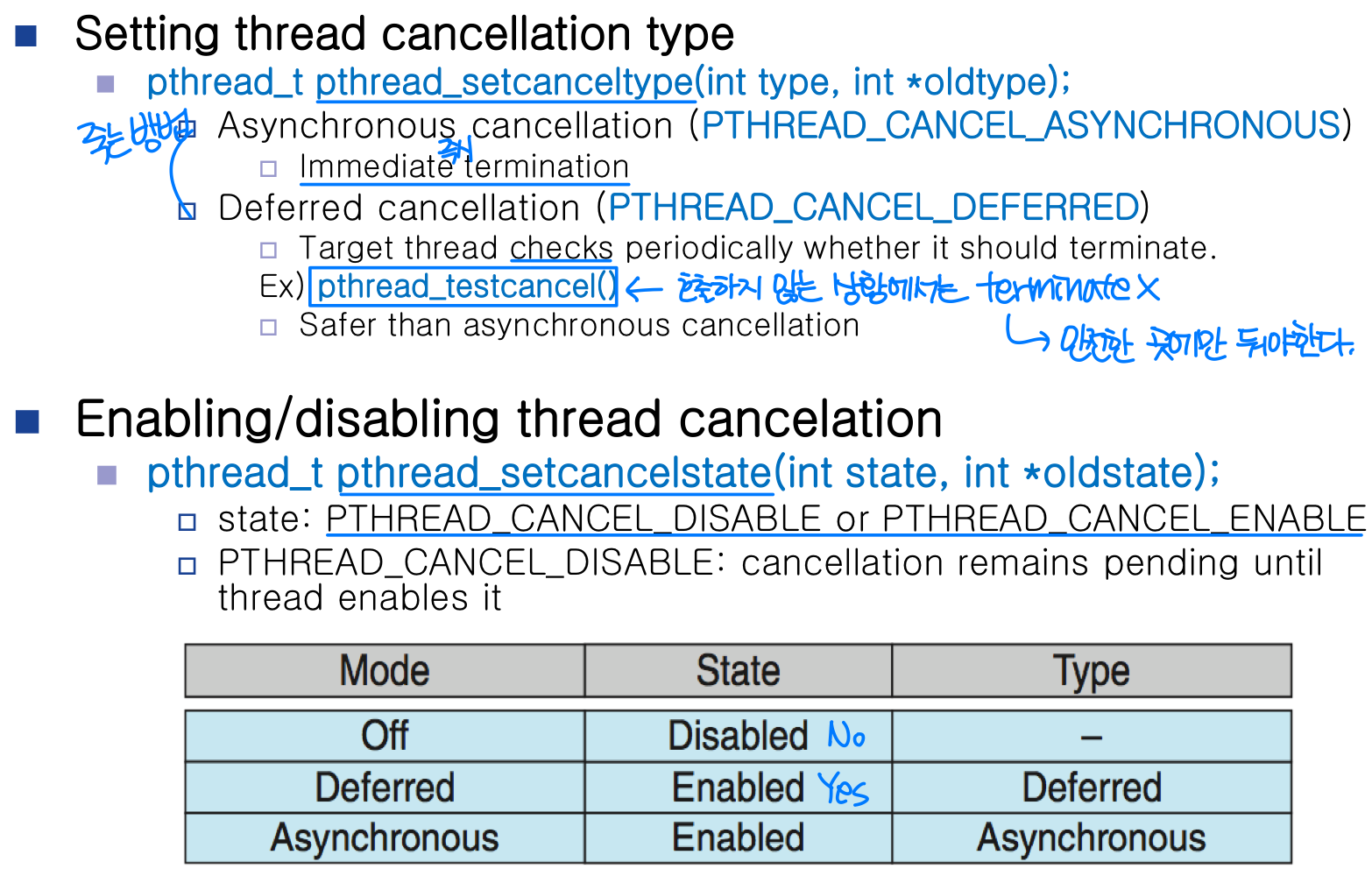

Cancellation

- Thread cancellation

- Terminating a thread before it has completed: pthread_cancel(tid);

→ Not a command that kills the thread immediately (by default, cancellation is deferred until the target thread reaches a cancellation point)

- Problem with thread cancellation

- A thread shares resources with other threads

cf. A process has its own resources

→ A thread can be cancelled while it is updating data shared with other threads

→ Requires more care than terminating a process

➡️ Disabled: a cancellation request stays pending until cancellation is enabled
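A sketch of deferred cancellation with a disabled section, assuming Pthreads with unnamed POSIX semaphores (available on Linux; compile with `-pthread`). The worker disables cancellation while it updates `updates_done`, so a `pthread_cancel()` request that arrives meanwhile stays pending; after re-enabling, the request takes effect at the next cancellation point (`pthread_testcancel()`). The names are hypothetical.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>

static sem_t cancel_disabled;   /* posted once the worker has disabled cancellation */
static int updates_done;        /* read by the caller only after pthread_join */

static void *update_worker(void *arg) {
    int old_state;
    (void)arg;
    /* Critical update: any cancellation request arriving now stays pending. */
    pthread_setcancelstate(PTHREAD_CANCEL_DISABLE, &old_state);
    sem_post(&cancel_disabled);
    for (int i = 0; i < 1000; i++)
        updates_done++;
    pthread_setcancelstate(PTHREAD_CANCEL_ENABLE, &old_state);

    /* Deferred cancellation acts only at a cancellation point. */
    for (;;)
        pthread_testcancel();
    return NULL;                /* not reached */
}

/* Cancels the worker during its update; returns the number of completed
   updates, or -1 if the thread was not reported as canceled. */
static int cancel_during_update(void) {
    pthread_t tid;
    void *ret;
    updates_done = 0;
    sem_init(&cancel_disabled, 0, 0);
    pthread_create(&tid, NULL, update_worker, NULL);
    sem_wait(&cancel_disabled);     /* ensure cancellation is already disabled */
    pthread_cancel(tid);            /* only a request: nothing is killed here */
    pthread_join(tid, &ret);
    sem_destroy(&cancel_disabled);
    return ret == PTHREAD_CANCELED ? updates_done : -1;
}
```

Because the update is never interrupted halfway, the caller sees all 1000 updates and a join result of `PTHREAD_CANCELED`.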

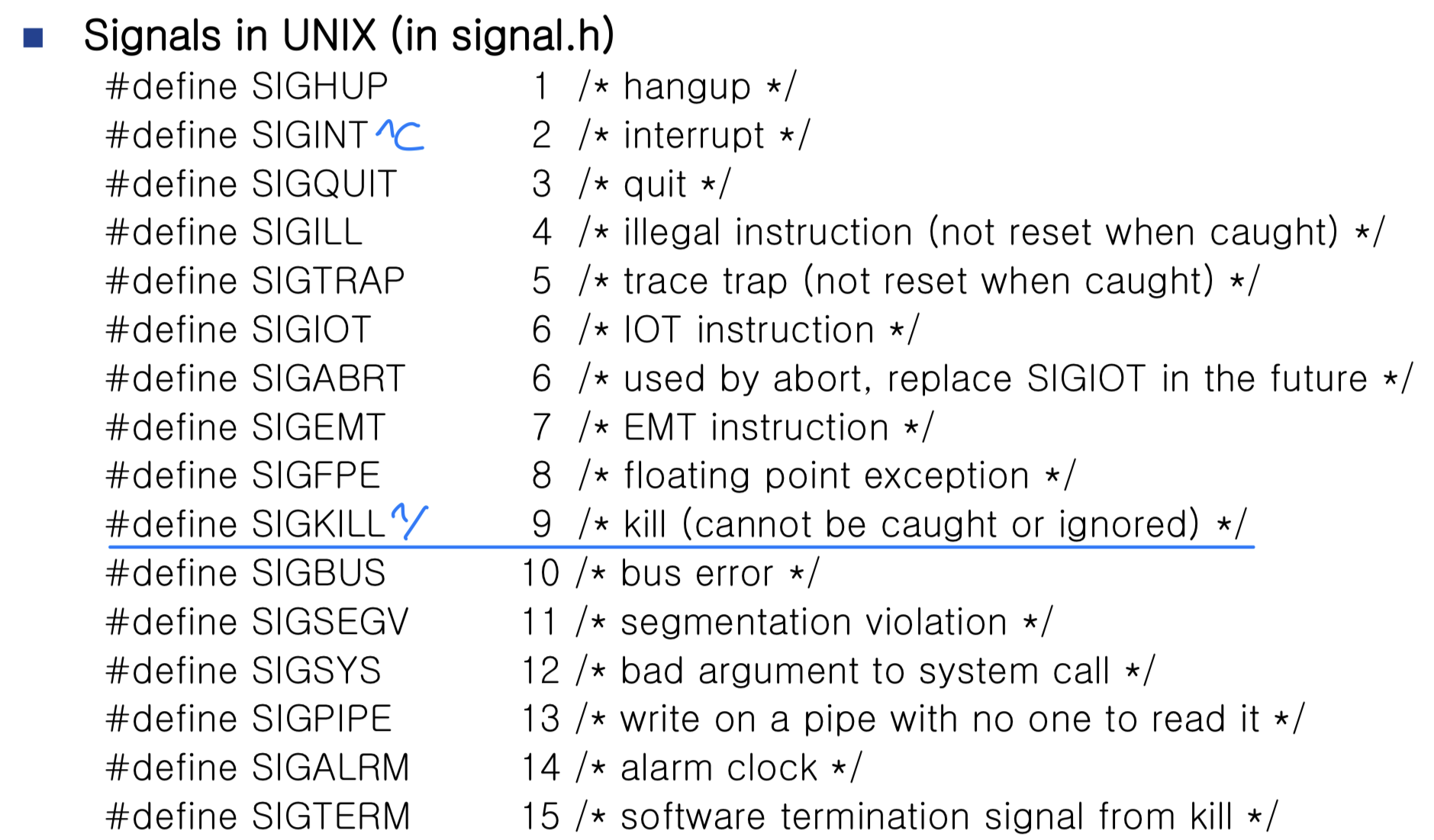

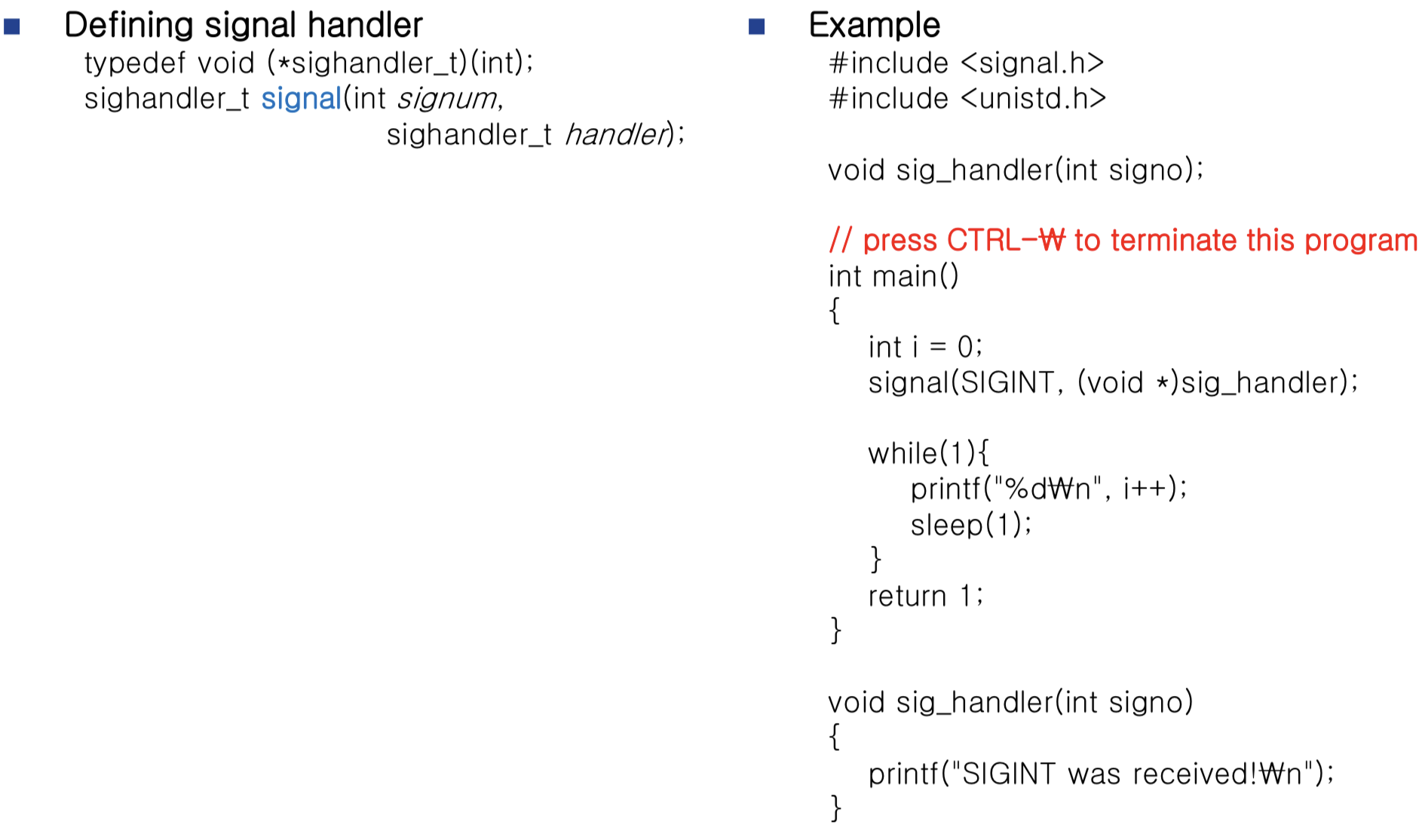

Signal handling

- Signal: a mechanism provided by UNIX to notify a process that a particular event has occurred

→ The event is handled in user mode (cf. interrupts, which are handled in kernel mode)

- A signal can be generated by various sources

- The signal is delivered to a process.

- The process handles it

- Default signal handler (kernel)

- User-defined signal handler

- Types of signal

- Synchronous: signal from the same process

Ex) illegal memory access, division by 0

- Asynchronous: signal from external sources

Ex) <Ctrl>-C → the signal handler runs → the process terminates

- The operating system uses signals to report exceptional situations to an executing program

- A signal is a limited form of IPC used in Unix and other POSIX-compliant operating systems

- Essentially it is an asynchronous notification sent to a process to notify it of an event that occurred

- Question: to which thread should the signal be delivered?

- Possible options

- To the thread to which the signal applies

- To every thread in the process

- To certain threads in the process

- Assign a specific thread to receive all signals

➔ The choice depends on the type of signal

- Another scheme: specify the thread to which the signal is delivered

Ex) pthread_kill(tid, signal) in POSIX

→ Despite its name, pthread_kill() only sends a signal; whether anything is killed depends on the signal (a signal whose action is termination ends the whole process). To terminate a single thread, use cancellation
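A sketch of delivering a signal to one specific thread with `pthread_kill()`, assuming a POSIX system with Pthreads (compile with `-pthread`). The handler is installed process-wide with `sigaction()`, but the signal is delivered only to the target thread; a thread-local flag (GCC/Clang `__thread`) records which thread actually ran the handler. The names are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <sched.h>
#include <signal.h>
#include <stdint.h>
#include <string.h>

/* Per-thread flag, set by the handler in whichever thread runs it. */
static __thread volatile sig_atomic_t got_signal = 0;

static void on_usr1(int signo) {
    (void)signo;
    got_signal = 1;
}

static void *wait_for_signal(void *arg) {
    (void)arg;
    while (!got_signal)
        sched_yield();                  /* wait until OUR flag is set */
    return (void *)(intptr_t)got_signal;
}

/* Sends SIGUSR1 to one thread; returns 1 if only that thread handled it. */
static int signal_one_thread(void) {
    struct sigaction sa;
    pthread_t tid;
    void *ret;

    memset(&sa, 0, sizeof sa);
    sa.sa_handler = on_usr1;            /* signal dispositions are process-wide */
    sigemptyset(&sa.sa_mask);
    sigaction(SIGUSR1, &sa, NULL);

    got_signal = 0;
    pthread_create(&tid, NULL, wait_for_signal, NULL);
    pthread_kill(tid, SIGUSR1);         /* ...but delivery targets this thread */
    pthread_join(tid, &ret);

    return (intptr_t)ret == 1 && got_signal == 0;   /* caller's flag untouched */
}
```

Had the handler been left at the default action for SIGUSR1 (termination), the whole process would have ended, not just the target thread.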

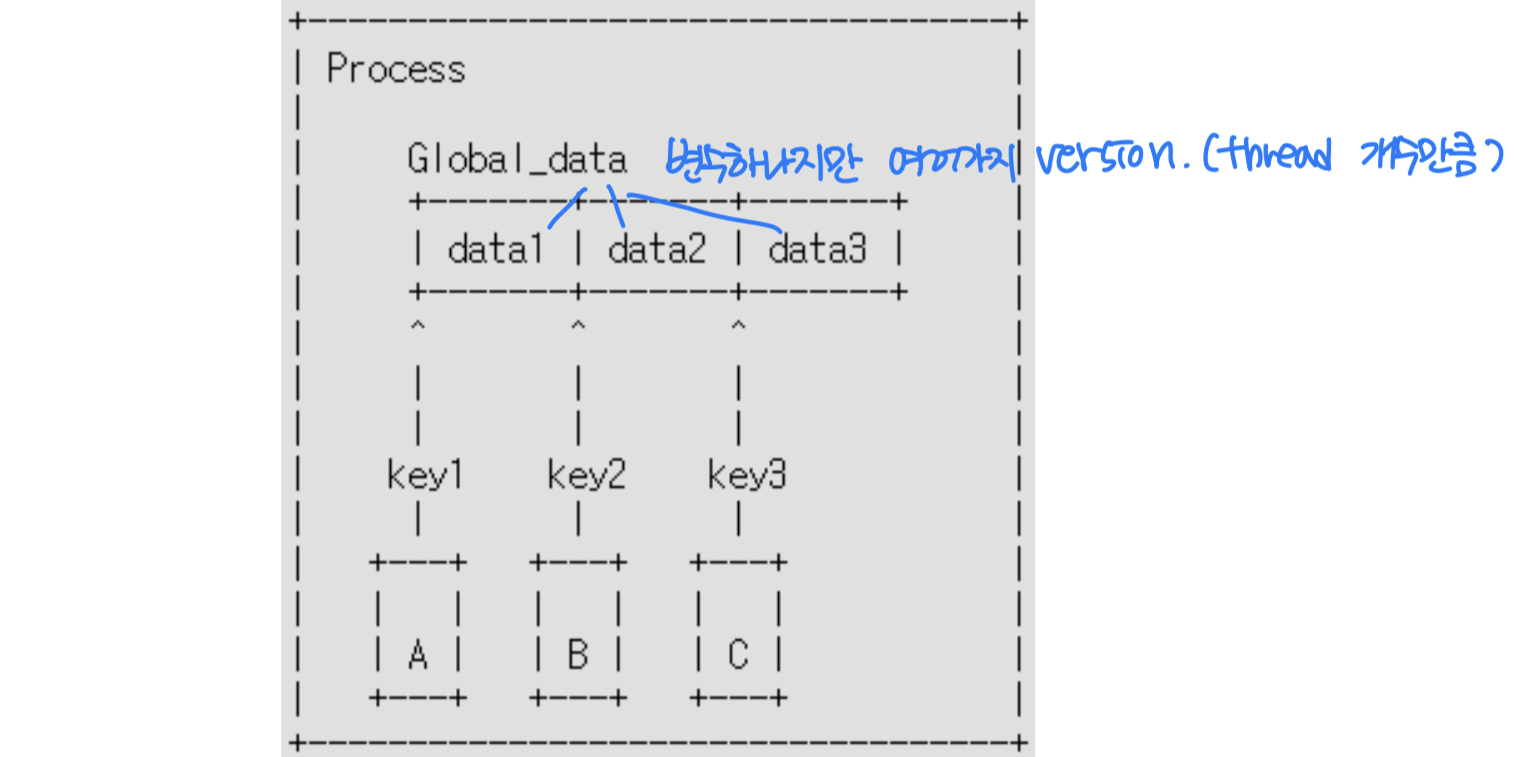

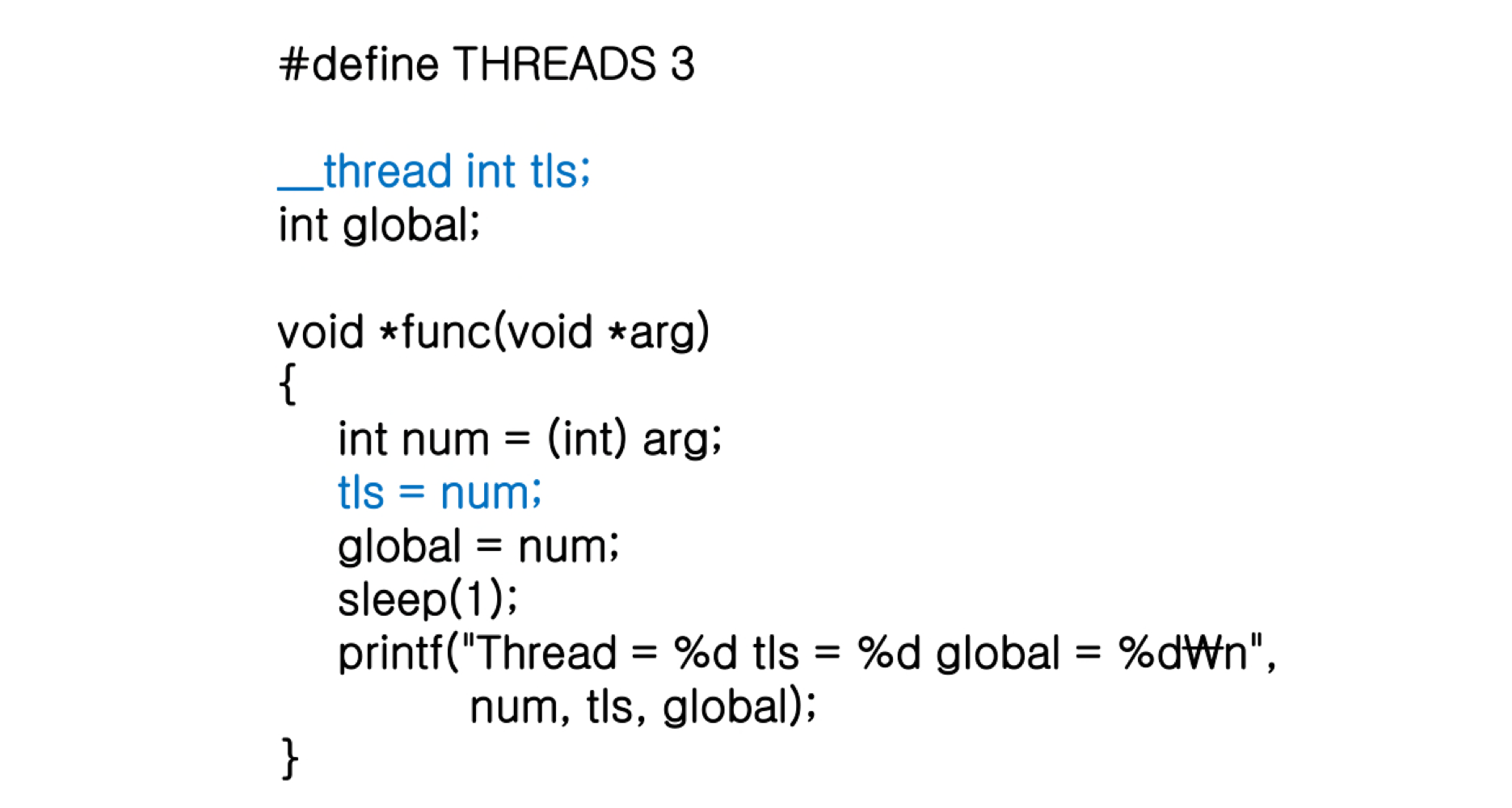

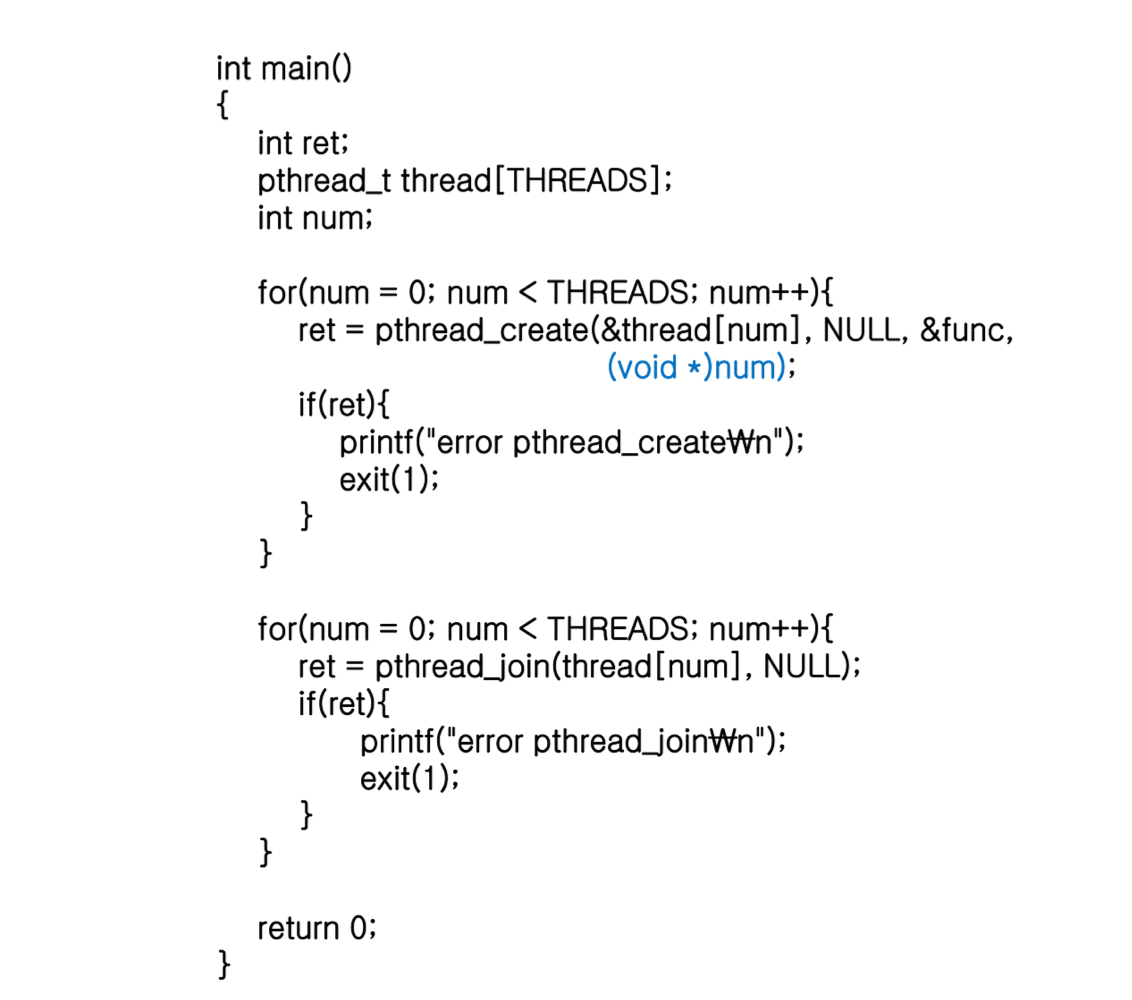

Thread-local storage

- In a process, all threads share global variables

- Sometimes, thread-local storage(TLS) is required

- Many OSs support thread-specific data

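Ex) A key is assigned, and each thread associates its own value with that key (in Pthreads: pthread_key_create(), pthread_setspecific(), pthread_getspecific())

- thread_demo2.c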

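→ The child thread accesses main's local array avg (a local variable) through its address

Why does this work? Because main waits for the child with pthread_join, so avg still exists while the child uses it

- Thread-local storage (TLS) allows each thread to have its own copy of data

Ex) __thread int tls; // with Pthreads (GCC/Clang extension)

- Each thread has its own 'int tls' variable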

- Different from local variables

- Local variables visible only during single function invocation

A local variable is created when the function that declares it is called and destroyed when that function returns

- TLS visible across function invocations

Even after a function call ends, the variable persists in the thread

- Similar to static data

Static data is global, but only within the file in which it is declared

- TLS is unique to each thread

- Useful when you do not have control over the thread creation process (e.g., when using a thread pool)

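With TLS, each thread keeps its own copy of the variable while it processes tasks, so the variable is not shared between threads and needs no synchronization. This lets the worker threads of a thread pool keep per-thread values safely, even though the program did not create those threads itself.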
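A minimal TLS sketch using the GCC/Clang `__thread` keyword mentioned above (C11 spells it `_Thread_local`): `tls_calls` keeps its value across calls to `record_call()`, unlike a local variable, yet each thread has its own copy, unlike a global. The shared counter is shown for contrast and needs a mutex. Names are hypothetical; compile with `-pthread`.

```c
#include <assert.h>
#include <pthread.h>
#include <stdint.h>

static int shared_calls = 0;          /* one copy for the whole process */
static pthread_mutex_t shared_lock = PTHREAD_MUTEX_INITIALIZER;
static __thread int tls_calls = 0;    /* one copy per thread */

/* tls_calls survives between calls (like static data) but is private
   to the calling thread, so it needs no lock. */
static void record_call(void) {
    tls_calls++;
    pthread_mutex_lock(&shared_lock);
    shared_calls++;
    pthread_mutex_unlock(&shared_lock);
}

static void *tls_worker(void *arg) {
    int times = (int)(intptr_t)arg;
    for (int i = 0; i < times; i++)
        record_call();
    return (void *)(intptr_t)tls_calls;   /* this thread's own count */
}

/* Runs two threads (3 and 5 calls) and reports the per-thread and shared counts. */
static void run_tls_demo(int *first, int *second, int *shared_total) {
    pthread_t a, b;
    void *ra, *rb;
    shared_calls = 0;
    pthread_create(&a, NULL, tls_worker, (void *)(intptr_t)3);
    pthread_create(&b, NULL, tls_worker, (void *)(intptr_t)5);
    pthread_join(a, &ra);
    pthread_join(b, &rb);
    *first = (int)(intptr_t)ra;
    *second = (int)(intptr_t)rb;
    *shared_total = shared_calls;
}
```

The two threads report 3 and 5, the shared counter reaches 8, and the calling thread's own `tls_calls` stays 0 because it never called `record_call()`.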

Scheduler activation (already covered)

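This post was written after taking Professor Kim In-jung's (김인중) Operating Systems course (2023, first semester) at the HGU School of Computer Science and Electrical Engineering. All attached images are the professor's original lecture slides with my handwritten notes added.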