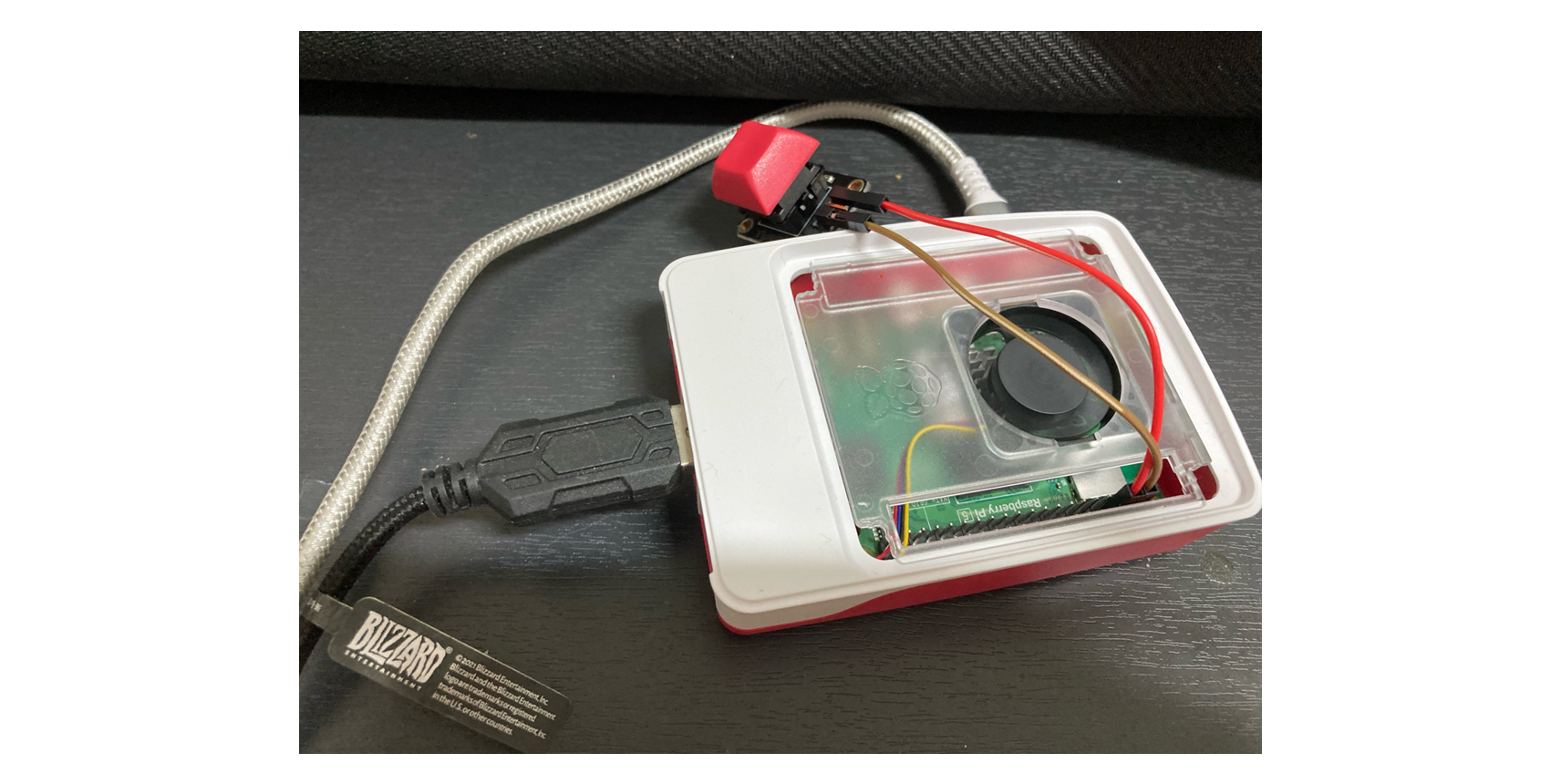

Raspberry Pi 5 with llamafile

Voice query-and-answer with llamafile now works on the Raspberry Pi 5.

Next, I will analyze the code below in more detail to understand how it works,

and then keep upgrading the language, sound, and other parts.

- llamafile

wget -O TinyLlama-1.1B-Chat-v1.0.Q5_K_M.llamafile "https://huggingface.co/jartine/TinyLlama-1.1B-Chat-v1.0-GGUF/resolve/main/TinyLlama-1.1B-Chat-v1.0.Q5_K_M.llamafile?download=true"

result

- Query

Transcription: I am little bit sad.

https://drive.google.com/file/d/1Bjzp709fKFSBplegLYPFHxS_iy7cb3tn/view?usp=drive_link

- Answer

LLM result: ChatCompletionMessage(content="AI assistant: I understand your emotions. It's normal to feel sad sometimes. However, it's essential to remember that sadness is a natural part of life. It's okay to feel sad, and it's also okay to seek help when you need it. Remember that you can always reach out to your AI assistant for support and guidance.", role='assistant', function_call=None, tool_calls=None)

https://drive.google.com/file/d/1BrSXFatmoFMo1TGeQM4jX2mlh1gUaKhV/view?usp=drive_link
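
The reply above starts with the literal label "AI assistant:", which the text-to-speech step would read aloud along with the answer. A minimal sketch of one way to drop such a label before synthesis is shown here. The helper name `strip_speaker_label` and the label list are hypothetical and not part of the original script.

```python
import re

# Hypothetical labels a small chat model may echo at the start of a reply.
SPEAKER_LABELS = ("AI assistant", "Assistant")


def strip_speaker_label(text, labels=SPEAKER_LABELS):
    """Remove one leading 'Label:' prefix (case-insensitive), if present."""
    alternatives = "|".join(re.escape(label) for label in labels)
    pattern = r"^\s*(?:" + alternatives + r")\s*:\s*"
    return re.sub(pattern, "", text, count=1, flags=re.IGNORECASE)


reply = "AI assistant: I understand your emotions."
print(strip_speaker_label(reply))  # I understand your emotions.
```

Text without a matching label is returned unchanged, so the helper could be applied to every reply before it is passed to the speech step.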

code

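import math
import time
import wave
from io import BytesIO

import pyaudio
import RPi.GPIO as GPIO
import whisper
from gtts import gTTS
from openai import OpenAI
from pygame import mixer

# Push button on physical pin 8 (BOARD numbering). The internal pull-up
# keeps the input HIGH, so a press reads LOW.
BUTTON = 8
GPIO.setmode(GPIO.BOARD)
GPIO.setup(BUTTON, GPIO.IN, pull_up_down=GPIO.PUD_UP)

# Whisper "base" model for speech-to-text.
SPEECH_RECOG_MODEL = whisper.load_model("base")

# The OpenAI client points at the local llamafile server, so no real key is needed.
OPENAI_CLIENT = OpenAI(
    base_url="http://127.0.0.1:8080/v1",
    api_key="sk-no-key-required",
)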

'''
0 {'index': 0,
   'structVersion': 2,
   'name': 'USB PnP Audio Device: Audio (hw:2,0)',
   'hostApi': 0,
   'maxInputChannels': 1,
   'maxOutputChannels': 2,
   'defaultLowInputLatency': 0.007979166666666667,
   'defaultLowOutputLatency': 0.007979166666666667,
   'defaultHighInputLatency': 0.032,
   'defaultHighOutputLatency': 0.032,
   'defaultSampleRate': 48000.0}
'''

CHUNK = 4096
FORMAT = pyaudio.paInt16
CHANNELS = 1
# In digital audio, 44,100 Hz (alternately represented as 44.1 kHz) is a common sampling frequency.
RATE = 44100
RECORD_SECS = 5

def record_wav(query_voice_file):
    # Create the PyAudio object.
    audio = pyaudio.PyAudio()
    # Open an input stream on the default input device.
    stream = audio.open(format=FORMAT,
                        rate=RATE,
                        channels=CHANNELS,
                        input=True,
                        frames_per_buffer=CHUNK)
    print("start to record voice")
    frames = []
    # Read the stream chunk by chunk and append each chunk to frames.
    num_chunks = int((RATE / CHUNK) * RECORD_SECS)
    print(num_chunks)
    for _ in range(num_chunks):
        data = stream.read(CHUNK)
        frames.append(data)
    print("finished recording")
    # Stop the stream, close it, and terminate the PyAudio instance.
    stream.stop_stream()
    stream.close()
    audio.terminate()
    # Save the audio frames as a .wav file.
    wavefile = wave.open(query_voice_file, 'wb')
    wavefile.setnchannels(CHANNELS)
    wavefile.setsampwidth(audio.get_sample_size(FORMAT))
    wavefile.setframerate(RATE)
    wavefile.writeframes(b''.join(frames))
    wavefile.close()
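
Because the number of chunks read in `record_wav` is truncated to an integer, the recording comes out slightly shorter than `RECORD_SECS`. The arithmetic can be sketched as follows, using the same values as the constants in the script. The function name `recording_plan` is hypothetical.

```python
def recording_plan(rate, chunk, record_secs):
    """Return (chunks read, frames captured, actual seconds recorded)."""
    num_chunks = int((rate / chunk) * record_secs)  # same expression as the loop bound
    frames = num_chunks * chunk
    return num_chunks, frames, frames / rate


num_chunks, frames, seconds = recording_plan(rate=44100, chunk=4096, record_secs=5)
print(num_chunks, frames, round(seconds, 2))  # 53 217088 4.92
```

So with these settings the script reads 53 chunks and captures about 4.92 seconds of audio rather than a full 5 seconds.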

def text_to_speech(answer_text, answer_sound_file):
    # Convert the text to MP3 bytes held in memory.
    # Recent gTTS releases select the British accent with tld="co.uk";
    # the older "en-uk" language code is deprecated.
    sound_byte = BytesIO()
    tts = gTTS(text=answer_text, lang="en", tld="co.uk")
    tts.write_to_fp(sound_byte)
    # Also save the speech to a file.
    tts.save(answer_sound_file)
    # Get the sound length from the saved file.
    mixer.init()
    sound_info = mixer.Sound(answer_sound_file)
    sleep_time = math.ceil(sound_info.get_length())
    sound_byte.seek(0)
    # Play the in-memory audio and block for the length of the speech.
    mixer.music.load(sound_byte, "mp3")
    mixer.music.play()
    time.sleep(sleep_time)

def main():
    # Record voice.
    query_voice_file = 'query.wav'
    record_wav(query_voice_file=query_voice_file)
    # Convert voice to text with OpenAI Whisper.
    result = SPEECH_RECOG_MODEL.transcribe(query_voice_file, fp16=False)
    print("Transcription: {0}".format(result["text"]))
    request = result["text"]
    # Query llamafile (LLaMA_CPP).
    completion = OPENAI_CLIENT.chat.completions.create(
        model="LLaMA_CPP",
        messages=[
            {"role": "system", "content": "You are an AI assistant. Your priority is helping users with their requests."},
            {"role": "user", "content": request}
        ]
    )
    print("LLM result: {0}".format(completion.choices[0].message))
    # Convert text to speech. gTTS is used instead of espeak.
    answer_sound_file = "answer.mp3"
    answer_text = completion.choices[0].message.content
    text_to_speech(answer_text, answer_sound_file)
    # os.system('espeak "{0}" 2>/dev/null'.format(completion.choices[0].message.content))

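if __name__ == "__main__":
    print("Ready...")
    while True:
        # The pull-up keeps the pin HIGH, so LOW means the button is pressed.
        if GPIO.input(BUTTON) == GPIO.LOW:
            print("Button pressed")
            main()
        # Short pause so the polling loop does not saturate a CPU core.
        time.sleep(0.01)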
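
The polling loop above calls `main()` whenever the pin reads LOW, so a button that is still held when the answer finishes would start another recording right away. One possible refinement is to trigger only on the HIGH-to-LOW transition. The sketch below separates that logic from the hardware so it can be tried without a Raspberry Pi. `falling_edges`, `HIGH`, and `LOW` are hypothetical stand-ins and not part of RPi.GPIO.

```python
HIGH, LOW = 1, 0  # stand-ins for GPIO.HIGH and GPIO.LOW


def falling_edges(samples):
    """Yield the index of each HIGH -> LOW transition in a sequence of pin reads."""
    previous = HIGH  # with a pull-up, the idle level is HIGH
    for index, level in enumerate(samples):
        if previous == HIGH and level == LOW:
            yield index
        previous = level


# Simulated pin reads: idle, a long press, release, then a short press.
reads = [HIGH, HIGH, LOW, LOW, LOW, HIGH, LOW, HIGH]
print(list(falling_edges(reads)))  # [2, 6]
```

On the device, each sample would come from `GPIO.input(BUTTON)`, and `main()` would run once per detected edge, however long the button is held.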