1. Binomial Distribution

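The Binomial distribution is the distribution of the number of successes in a fixed number of trials. It is written as follows.

- $X \sim \text{Bin}(n, p)$, where $n$: positive integer, $p$: probability ($0 \le p \le 1$)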

In the lecture, the professor interprets the Binomial distribution in three ways. Let's go through them one by one.

1) Story

- $X$: number of successes in $n$ independent trials.

- $p$: probability of success

→ Every trial results in success or failure, but not both.

2) Sum of Indicator Random Variables

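- $X = X_1 + X_2 + \dots + X_n$, where $X_1, \dots, X_n$ are i.i.d. $\text{Bern}(p)$ (i.i.d. = independent, identically distributed)

- identically distributed = have the same distribution.

- $X_j = 1$ if the $j$th trial is a success, $X_j = 0$ otherwise

→ Indicator variable indicates whether the $j$th trial was a success or not.

→ exactly the same as 1) (counting the number of successes).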
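The indicator view can be tried out with a short simulation. This is only a sketch: the values `n = 10`, `p = 0.3`, the seed, and the helper name `simulate_binomial` are illustrative assumptions, not something from the lecture.

```python
import random

def simulate_binomial(n, p, rng):
    """Draw one Bin(n, p) value as a sum of n independent Bernoulli(p) indicators."""
    # Each indicator is 1 (success) with probability p, and 0 (failure) otherwise.
    indicators = [1 if rng.random() < p else 0 for _ in range(n)]
    return sum(indicators)

rng = random.Random(0)   # fixed seed (an arbitrary choice) so runs are reproducible
n, p = 10, 0.3           # hypothetical parameters
draws = [simulate_binomial(n, p, rng) for _ in range(20000)]

# Overall fraction of successful trials; it should land close to p.
success_rate = sum(draws) / (n * len(draws))
print(success_rate)
```

Every draw is a count between 0 and `n`, which matches the story in 1): counting successes and summing indicators are the same thing.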

Random Variable VS Distribution

- distribution: specifies the probabilities of all the values that X can take.

- multiple r.v.s can have the same distribution.

3) PMF (Probability Mass Function)

- def) probability that $X$ takes any particular value

$P(X = k) = \binom{n}{k} p^k (1-p)^{n-k}$, $k \in \{0, 1, \dots, n\}$ (PMF for binomial dist.)
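As a small sketch, the Binomial PMF can be evaluated directly, assuming the standard formula $P(X = k) = \binom{n}{k} p^k (1-p)^{n-k}$. The function name `binom_pmf` and the example values are illustrative choices.

```python
from math import comb

def binom_pmf(k, n, p):
    """P(X = k) for X ~ Bin(n, p); zero for k outside 0..n."""
    if k < 0 or k > n:
        return 0.0
    # comb(n, k) counts the ways to choose which k of the n trials succeed.
    return comb(n, k) * p**k * (1 - p)**(n - k)

# Example: exactly 2 successes in 4 fair-coin trials.
prob = binom_pmf(2, 4, 0.5)   # 6 * 0.5**2 * 0.5**2 = 0.375
print(prob)
```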

4) Conditions for the Binomial Distribution

- $n$ identical trials.

- each trial results in success or failure.

- the probability of success must be the same in all trials.

- the trials must be independent.

2. PMF (Probability Mass Function)

- S: sample space → different possible outcomes

- random variable $X$: assigns a number to each pebble (each outcome in $S$).

- ex) $X = 7$ is an event.

- event = subset of the sample space.

- Can interpret $X$ as a function.

- A function that maps the sample space → an integer (in this case, 7)

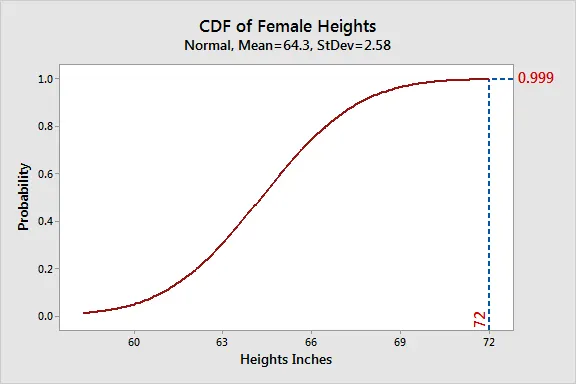

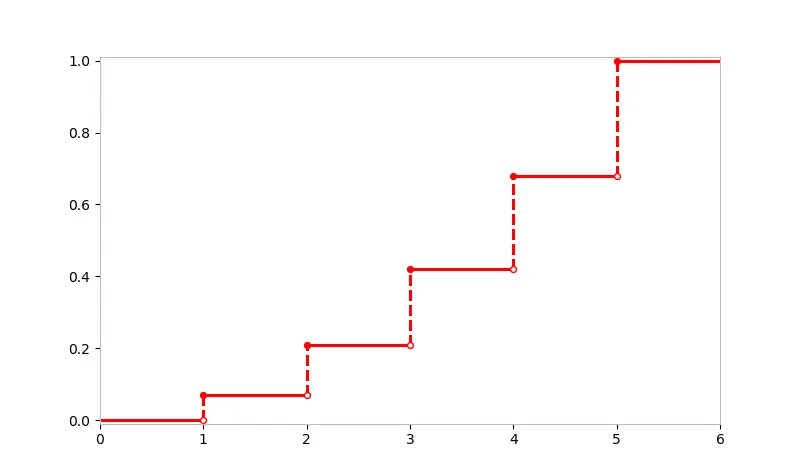

1) CDF (Cumulative Distribution Function)

- $X \le x$ is an event.

- $F(x) = P(X \le x)$: CDF of $X$.

- one way to describe a distribution.

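Continuous r.v.s' CDF: a continuous, nondecreasing curve rising from 0 to 1.

Discrete r.v.s' CDF: a step function that jumps at each possible value.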

→ Good to have a visual idea of a CDF.
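To get a feel for the step shape of a discrete CDF, here is a minimal sketch using $F(x) = P(X \le x)$. The fair six-sided die is a hypothetical example, not one from the lecture.

```python
from fractions import Fraction

# Hypothetical example: a fair die, values 1..6, each with probability 1/6.
pmf = {value: Fraction(1, 6) for value in range(1, 7)}

def cdf(x, pmf):
    """F(x) = P(X <= x): add the probabilities of all values not exceeding x."""
    return sum((prob for value, prob in pmf.items() if value <= x), Fraction(0))

# F stays flat between possible values and jumps at each one.
steps = [cdf(x, pmf) for x in (0.5, 1, 1.5, 3, 6, 10)]
print(steps)   # values: 0, 1/6, 1/6, 1/2, 1, 1
```

Note that `cdf` is defined for every real `x`, not only for the values the r.v. can take.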

2) PMF - Discrete r.v.s

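- Discrete r.v.s: the possible values can be listed.

- $a_1, a_2, \dots, a_n$ or $a_1, a_2, \dots$: the list could be finite or infinite

- PMF = $P(X = a_j)$ for all $j$.

- The probability must be defined for every possible value.

- Often written as $p_j = P(X = a_j)$.

- blueprint for $X$.

- Conditions: $p_j \ge 0$ and $\sum_j p_j = 1$ (for discrete r.v.s)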

Binomial Distribution PMF (revisit)

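- $X \sim \text{Bin}(n, p)$, $P(X = k) = \binom{n}{k} p^k q^{n-k}$, where $q = 1 - p$ and $k \in \{0, 1, \dots, n\}$

- Check the conditions above: every term is nonnegative.

- sum: $\sum_{k=0}^{n} \binom{n}{k} p^k q^{n-k} = (p + q)^n = 1$, by the Binomial Theorem.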
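The two PMF conditions (nonnegative, sums to 1) can also be checked numerically for a Binomial. This sketch uses exact fractions to avoid rounding; the parameters `n = 8`, `p = 1/3` are arbitrary illustrative values.

```python
from fractions import Fraction
from math import comb

n, p = 8, Fraction(1, 3)   # hypothetical parameters
q = 1 - p

# PMF values P(X = k) = C(n, k) p^k q^(n-k) for k = 0..n, as exact fractions.
pmf = [comb(n, k) * p**k * q**(n - k) for k in range(n + 1)]

all_nonnegative = all(value >= 0 for value in pmf)
total = sum(pmf)   # equals (p + q)**n = 1 by the Binomial Theorem
print(all_nonnegative, total)
```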

Arithmetic between two distributions

- $X \sim \text{Bin}(n, p)$, $Y \sim \text{Bin}(m, p)$, independent. Then, $X + Y \sim \text{Bin}(n + m, p)$

- If the random variables are defined on the same sample space, you can add, subtract, multiply, divide, etc. them.

- $X$ and $Y$ must be independent, and the probability of success $p$ must be the same, to use this property!

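Interpretation

1) Story

- adding $X$ and $Y$ is the same as adding the numbers of successes from the two sets of trials: $n + m$ independent trials in total, each with success probability $p$.

2) Sum of Indicator Random Variables

→ $X = X_1 + \dots + X_n$ and $Y = Y_1 + \dots + Y_m$, so $X + Y$ is a sum of $n + m$ i.i.d. $\text{Bern}(p)$ = $\text{Bin}(n + m, p)$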

3) PMF

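- $P(X + Y = k) = \sum_{j=0}^{k} P(X + Y = k \mid X = j)\, P(X = j)$ (Law of total probability)

$= \sum_{j=0}^{k} P(Y = k - j \mid X = j) \binom{n}{j} p^j q^{n-j}$, where $q = 1 - p$ ( → use PMF directly)

→ $X, Y$ are independent, so $P(Y = k - j \mid X = j) = P(Y = k - j)$ (X has no impact on Y.)

$= \sum_{j=0}^{k} \binom{m}{k-j} p^{k-j} q^{m-k+j} \binom{n}{j} p^j q^{n-j}$

$= p^k q^{m+n-k} \sum_{j=0}^{k} \binom{m}{k-j} \binom{n}{j} = \binom{m+n}{k} p^k q^{m+n-k}$ (Vandermonde identity)

→ so the PMF proves that $X + Y \sim \text{Bin}(n + m, p)$ is TRUE.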
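The PMF argument can be sanity-checked by computing the sum over $j$ directly and comparing it with the $\text{Bin}(n+m, p)$ PMF. This is a sketch with exact fractions; `n = 3`, `m = 4`, `p = 2/5` and the function names are illustrative assumptions.

```python
from fractions import Fraction
from math import comb

def binom_pmf(k, n, p):
    """P(X = k) for X ~ Bin(n, p), as an exact fraction; zero outside 0..n."""
    if k < 0 or k > n:
        return Fraction(0)
    return comb(n, k) * p**k * (1 - p)**(n - k)

n, m, p = 3, 4, Fraction(2, 5)   # hypothetical parameters

def sum_pmf(k):
    """P(X + Y = k) by conditioning on X = j and using independence of X and Y."""
    return sum(binom_pmf(j, n, p) * binom_pmf(k - j, m, p) for j in range(k + 1))

# Compare against the Bin(n + m, p) PMF for every possible k.
matches = all(sum_pmf(k) == binom_pmf(k, n + m, p) for k in range(n + m + 1))
print(matches)
```

The check only works because both r.v.s share the same `p`; with different success probabilities the sum is no longer Binomial.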

3. Common Mistakes - Thinking that it is a Binomial when it’s not.

- Ex1) 5-card hand from a 52-card deck. → Find the distribution of the number of aces in the hand. → PMF or CDF

- Let $X$ = number of aces in the hand.

- PMF

- Find $P(X = k)$. This is 0 except if $k \in \{0, 1, 2, 3, 4\}$.

- Distribution is NOT Binomial.

- trials are not independent.

- if one ace comes out, prob. of success changes in the next trial.

- $P(X = k) = \dfrac{\binom{4}{k}\binom{48}{5-k}}{\binom{52}{5}}$ for $k \in \{0, 1, 2, 3, 4\}$

- same as elk problem (from h.w.)

- some elk are tagged, some are not. → when collecting a sample, what is the prob. that the collected sample has exactly k tagged elk?
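A quick sketch of the aces example, assuming the counting formula $P(X = k) = \binom{4}{k}\binom{48}{5-k} / \binom{52}{5}$ (choose $k$ of the 4 aces and $5 - k$ of the 48 non-aces). The name `aces_pmf` is an illustrative choice.

```python
from fractions import Fraction
from math import comb

def aces_pmf(k):
    """P(exactly k aces in a 5-card hand from a standard 52-card deck)."""
    if k < 0 or k > 4:
        return Fraction(0)   # a hand cannot contain more than 4 aces
    return Fraction(comb(4, k) * comb(48, 5 - k), comb(52, 5))

pmf = {k: aces_pmf(k) for k in range(5)}
total = sum(pmf.values())   # a valid PMF must sum to 1

# If this were (wrongly) treated as Bin(5, 4/52), five "successes" would have
# positive probability, but five aces are impossible.
wrong_binomial_five = Fraction(4, 52) ** 5
print(total, aces_pmf(5), wrong_binomial_five > 0)
```

The mismatch at $k = 5$ is one concrete way to see that the Binomial model does not fit here.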

- Ex) Have b black and w white marbles; pick a simple random sample (= all subsets of that size are equally likely) of size n.

→ Find the distribution of the number of white marbles in the sample (pretty much the same as Ex1).

- $P(X = k) = \dfrac{\binom{w}{k}\binom{b}{n-k}}{\binom{w+b}{n}}$, $0 \le k \le w$, $0 \le n - k \le b$

- This distribution is called the Hypergeometric distribution.

- sampling without replacement. (trials are not independent, so not Binomial)

- If you sample with replacement, it will be Binomial!

Validation of the Hypergeometric Dist. PMF

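$P(X = k) = \dfrac{\binom{w}{k}\binom{b}{n-k}}{\binom{w+b}{n}}$, $k \in \{0, 1, \dots, w\}$ (with $\binom{b}{n-k} = 0$ when $n - k < 0$ or $n - k > b$)

- Sum = 1?

- $\sum_{k=0}^{w} \dfrac{\binom{w}{k}\binom{b}{n-k}}{\binom{w+b}{n}} = \dfrac{1}{\binom{w+b}{n}} \sum_{k=0}^{w} \binom{w}{k}\binom{b}{n-k} = \dfrac{\binom{w+b}{n}}{\binom{w+b}{n}} = 1$ (via Vandermonde)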
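The sum-to-1 check can be confirmed numerically for a few parameter choices. This is a sketch: the function name `hypergeom_pmf` and the `(w, b, n)` triples are illustrative assumptions, and exact fractions are used so the comparison with 1 is exact.

```python
from fractions import Fraction
from math import comb

def hypergeom_pmf(k, w, b, n):
    """P(k white marbles in a size-n sample drawn without replacement
    from w white and b black marbles)."""
    if k < 0 or k > w or n - k < 0 or n - k > b:
        return Fraction(0)   # impossible counts get probability zero
    return Fraction(comb(w, k) * comb(b, n - k), comb(w + b, n))

# Hypothetical (w, b, n) choices; each total should be exactly 1 (Vandermonde).
cases = [(6, 4, 5), (3, 7, 4), (5, 5, 10)]
totals = [sum(hypergeom_pmf(k, w, b, n) for k in range(w + 1)) for w, b, n in cases]
print(totals)
```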