Summary

[배경]

- Prompt engineering은 추가 훈련 없이 LLM을 효율적으로 사용할 수 있게 해줌.

- 하지만 효율적으로 Prompt 디자인하는 것은 challenge함

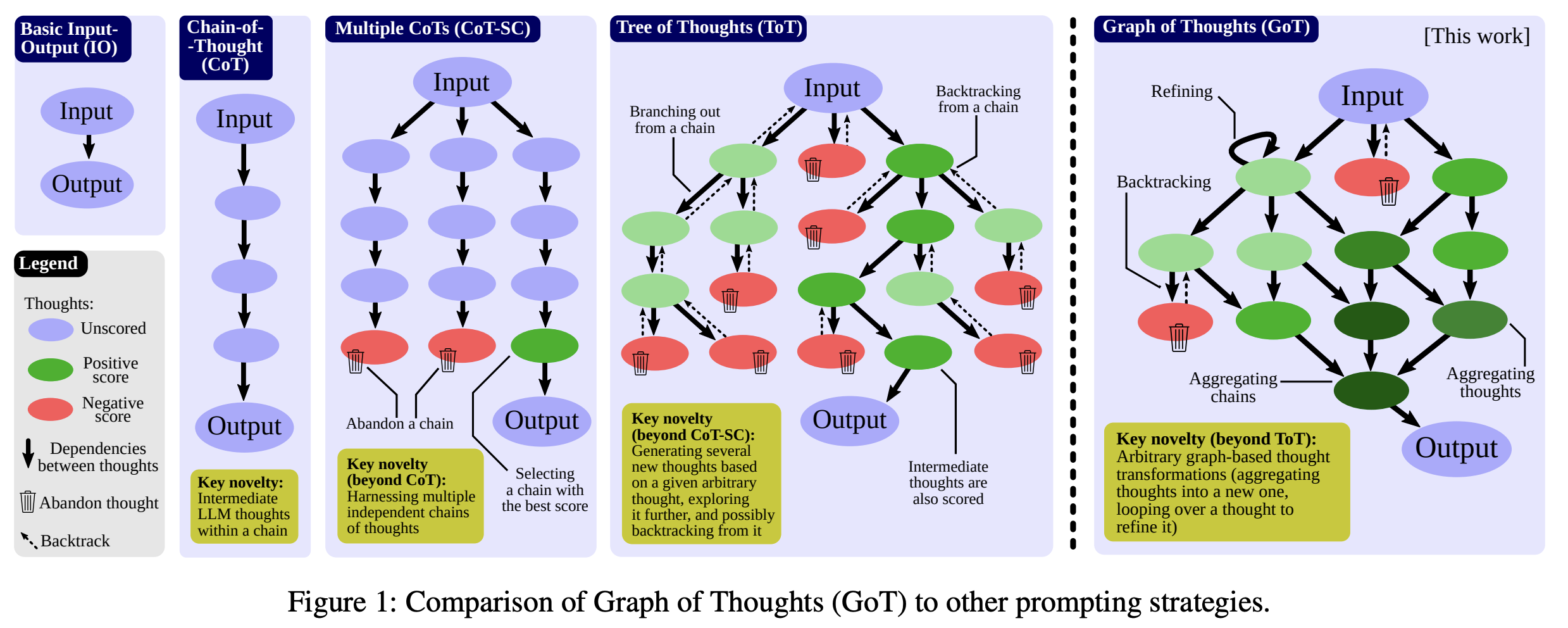

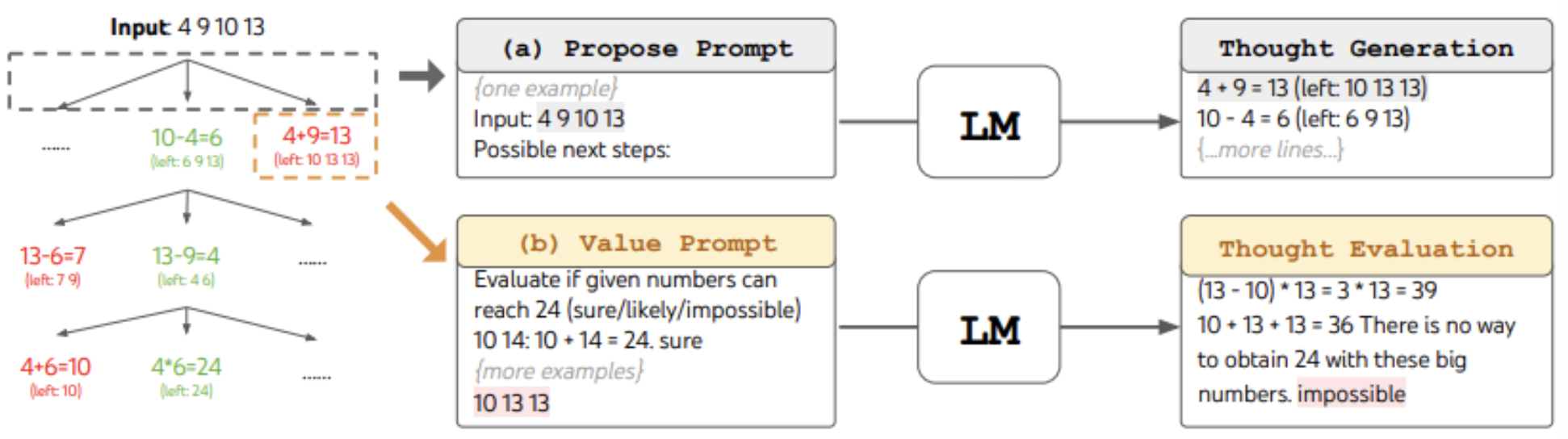

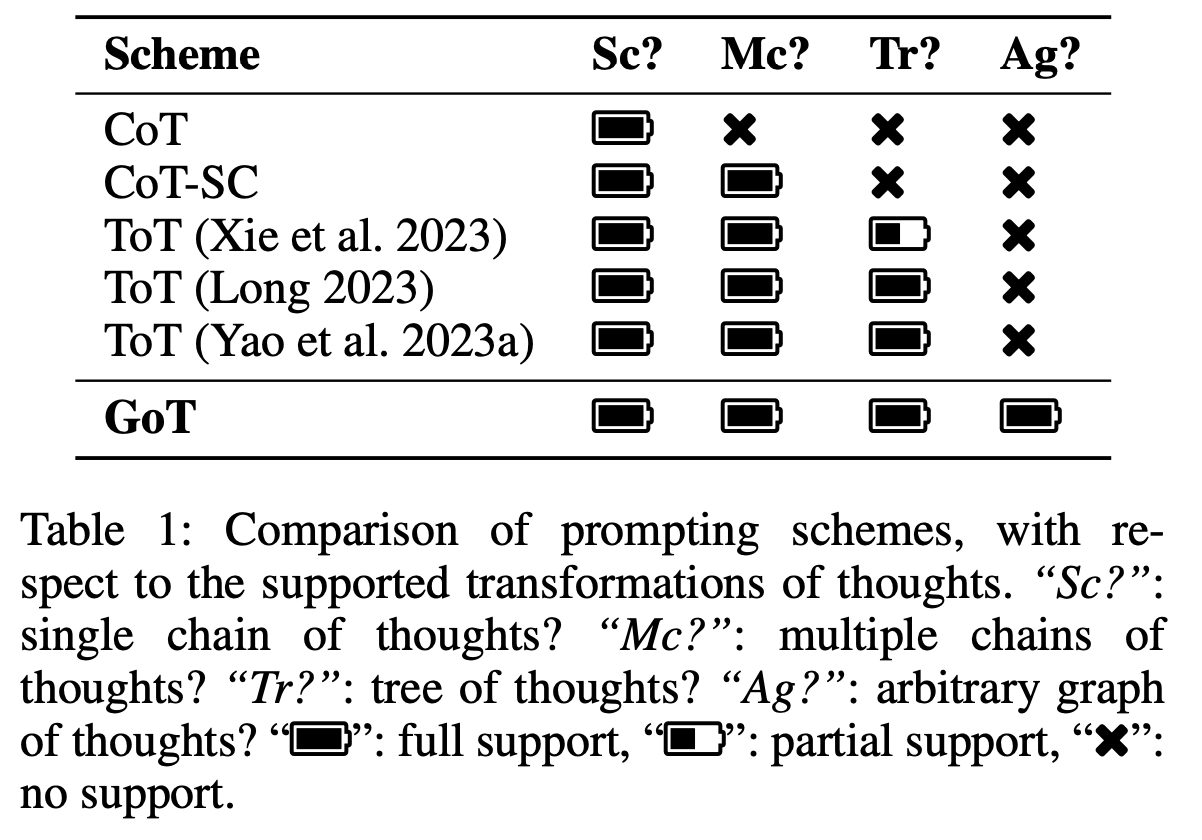

[기존연구한계]

- Chain-of-Thoughts: 단 하나의 추론 경로만 가짐. 정확한 추론 경로 보장 X

- Self-consistency of Chanin of Thoughts (CoT-SC): 제일 잘 나온 애들 중에 voting. backtracking 같은 local exploration할 수 없음. (중간에 이게 잘못됐다고 해도, 다시 고려하는 과정이 X)

- Tree of Thoughts: 추론 경로 단조롭고 유연하지 않음. 만들어지는 Thoughts 대부분 버려짐.

[Graph of Thoughts]

- 그래프를 활용하여 개념 간의 관계와 맥락을 표현함으로써 프롬프트를 설계하여 Human reasoning 기반 더 복잡한 사고체계 반영.

- Process

- 주어진 태스크 subtask로 분리한 후 핵심 개념들 식별하고, 관계 정의하여 arbitrary graph로 표현.

- 분리된 subtask 그래프 상에서 질의응답 필요한 경로 탐색 후, 해당 정보 프롬프트에 반영.

- Aggregation, Generation 등의 reasoning 거쳐 최종 결과 통합.

[결과 & Contribution]

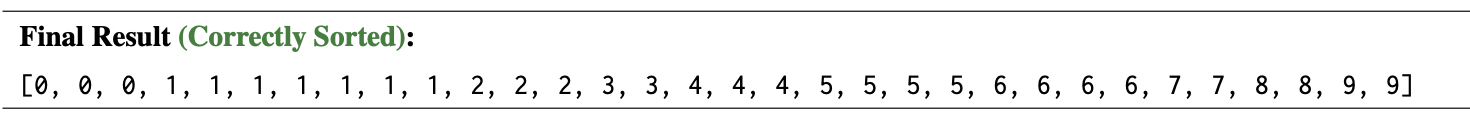

- sorting task: Tree of Thoughts 에 비해 62% 정확도 향상 & 31% cost 감소

- volume of thought (LLM이 output 만들 때 기여한 정보량) : good

- API만들어서 다른 prompt engineering 기법에도 쉽게 적용 가능

Research Background & Goal

Preliminaries: Prompting Approaches

Input-Output (IO): 중간과정 없이 바로 input 입력 후 출력 y directly.

- input x ➡︎ output y

Chain-of-Thought (CoT): 수학문제 풀 때 효과 good

- x ➡︎ a_1 ➡︎ a_2 ... ➡︎ y

- IO 대비 월등한 효과 선보임. But, 단 하나의 추론 경로만 가짐. 정확한 추론 경로 보장 X

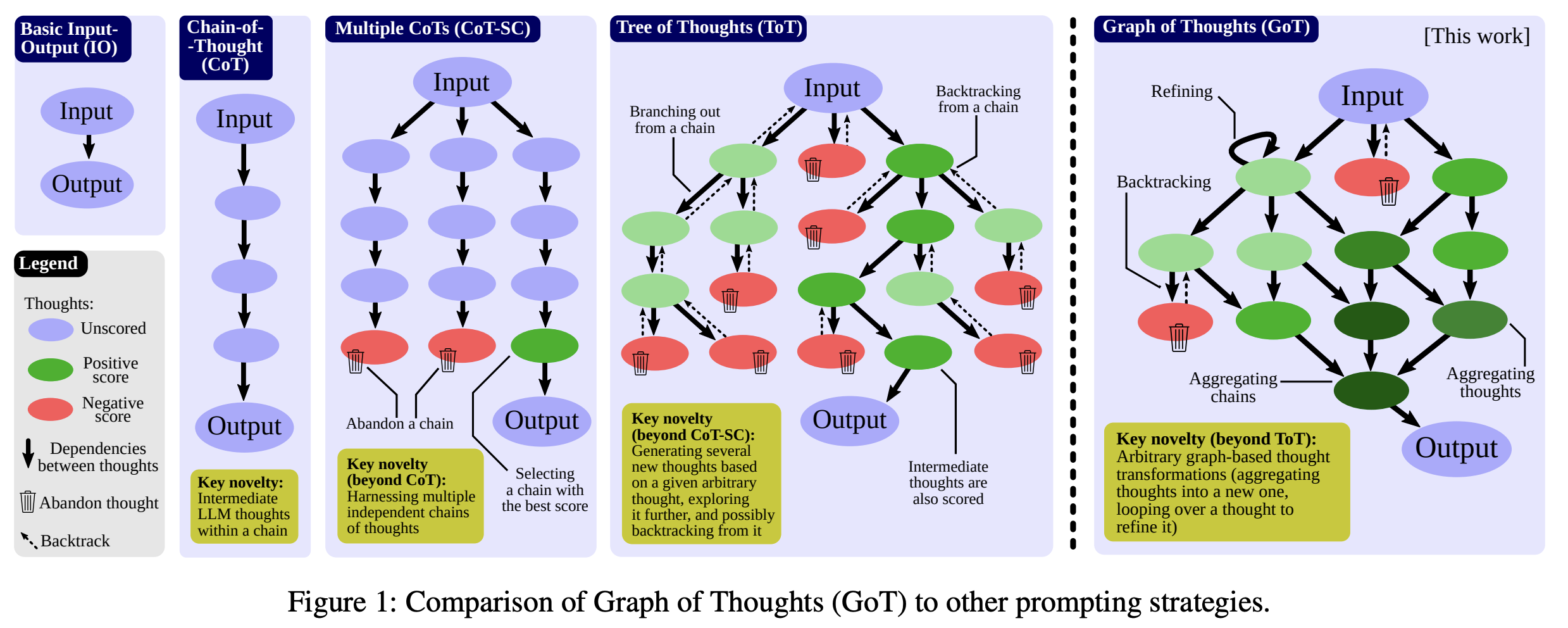

Multiple CoTs; Self-Consistency with CoT (CoT-SC): 여러 방식으로 결과 도출한 후, 최적의 결과를 정답으로 추론.

- CoT가 독립적으로 k개 있음.

- scoring metric 기반으로 최적의 output 뽑음.

- CoT 개선한 방식임. But, backtracking 같은 local exploration할 수 없음. (중간에 이게 잘못됐다고 해도, 다시 고려하는 과정이 X)

Self-Consistency Improves Chain of Thought Reasoning in Language Models (ICLR ’23)

수학문제 풀 때, 다양한 방법으로 푼 다음, 가장 적절한 결과를 정답으로 도출

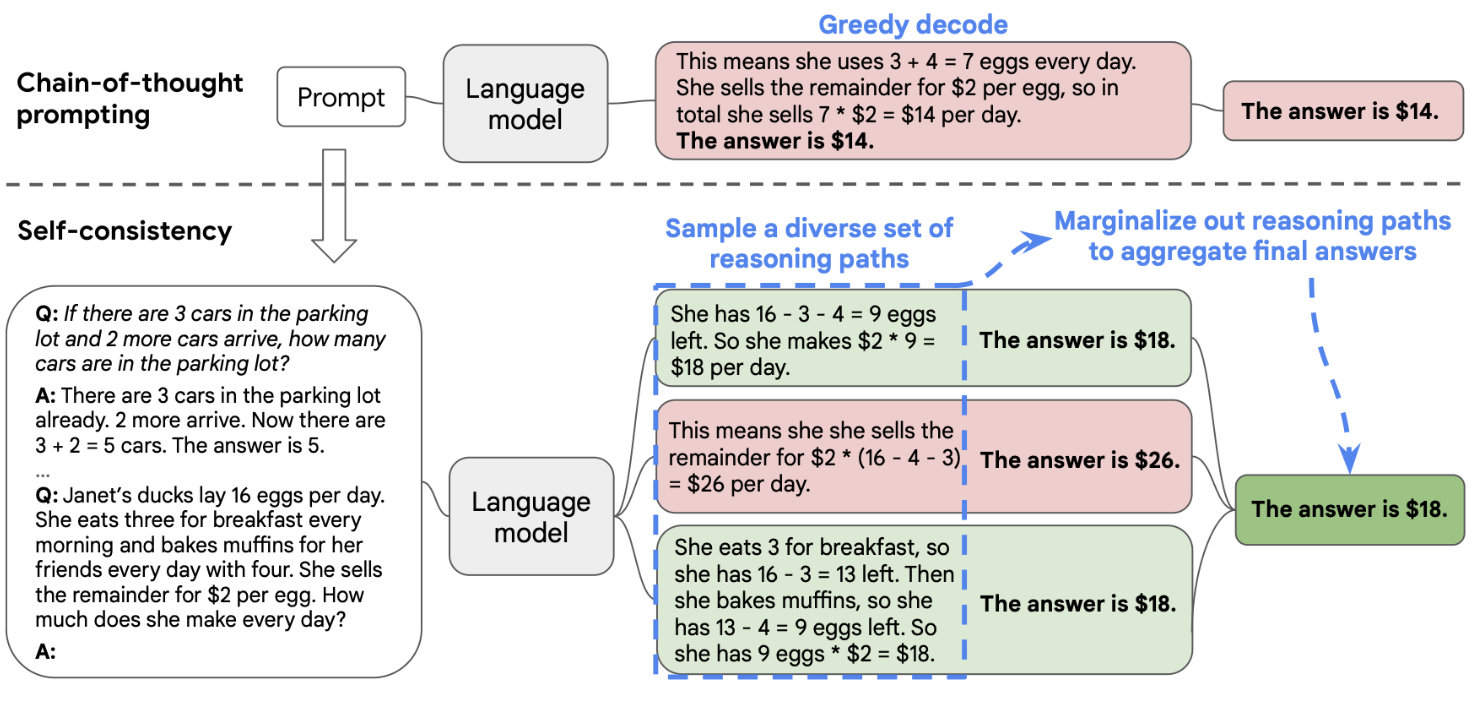

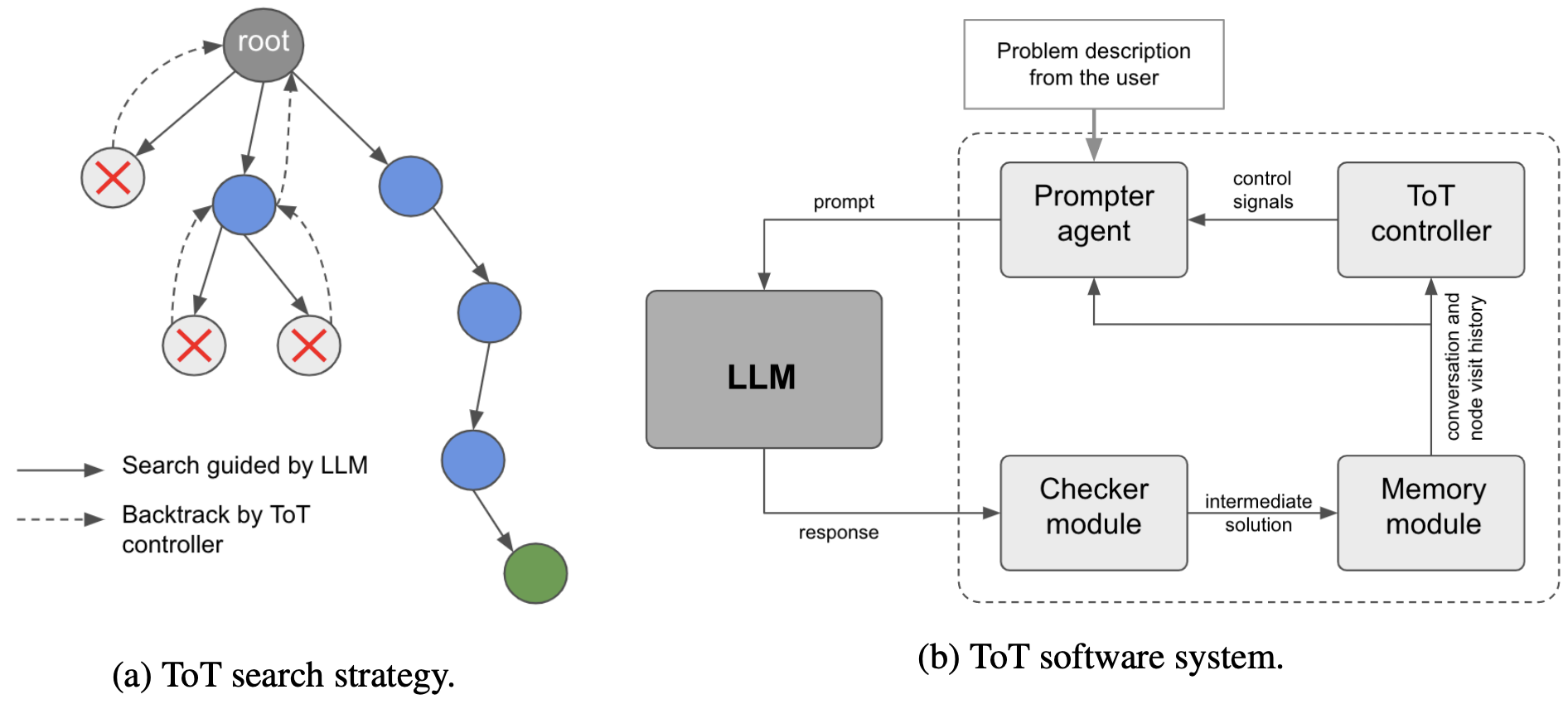

Tree of Thought (ToT): 다양한 추론 경로 탐색 후, scoring metric 기반으로 최적의 결과 찾음.

- CoT-SC에서 reasoning 과정을 graph 형태로 표현함. single node는 solution, thoughts들 나타냄.

- 방법

- thought generator: 기존 node 기반으로 새로운 k개 노드 생성

- state evaluator : 새로 생성된 노드의 점수 계산 (LLM or human eval 가능)

- search algorithm (BFS, DFS) 등으로 최적 경로 찾기.

- 추론 경로 단조롭고 유연하지 않음. 만들어지는 Thoughts 대부분 버려짐.

두 개 논문이 거의 동시에 나왔음. 개념은 완전 똑같은 듯. 근데 뒤에 논문이 NeurIPS에 나오기도 했고, 인용수도 훨씬 많음

(1) Large Language Model Guided Tree-of-Thought (arXiv, '23) 2023.5.15 submitted

(2) Tree of Thoughts: Deliberate Problem Solving with Large

Language Models (NeurIPS ’23) 2023. 5.17 submitted

Research Goal

-

Goal:

LLM의 Reasoning 과정을 arbitrary graph로 표현하여, 더 복잡하고, 더 사람의 문제해결 방식과 유사한 과정을 표현해 내겠다! -

Why?:

인간의 문제해결과정은 non-linear하며, 최종 결론을 내리기까지 기존의 사고들이 결합하기도 (Aggregation), 기존 애들을 바탕으로 새로운 아이디어를 만들어내기도 함(Generation). 그런데 CoT, ToT 애들로는 이런 특징이 표현이 안된다.

-

How?

thought transformation 방식 (generation, aggregation, refine 등)을 적용해서 reasoning process를 graph로 표현한다. -

Contribution

- (1) Graph of Thoughts (GoT) 제안: 구조화된 reasoning을 통해 LLM의 capability 향상

- (2) Modular architecture 설계해서 손쉽게 GoT 쓸 수 있게 함. [CODE]

- 다른 techinques에도 적용할 수 있고, indivisual thought들 controllable하게 할 수 있음.

- (3) GoT 적용한 다양한 사례 제시 ( sorting, keyword counting for summaries, set operations, document merging)

- (4) SOTA 와 비교 분석

- 다른 prompt 기술과 비교했을 때, 성능은 좋아지고, cost는 더 감소함.

- (5) Volum of a thought; 새로운 metric 제시.

- e.g.) the volume of v == v까지 도달할 수 있는 LLM thought의 수는 몇개? (ToT가 버려지는 thought 많아서 만든 듯)

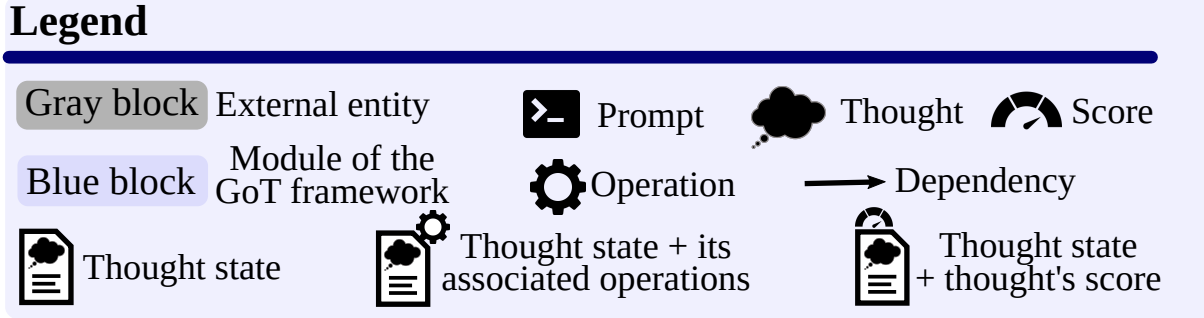

The GoT Framework

Reasoning Process

- Heterogeneous graph G= (V,E,c)

- V: a solution (task가 뭐냐에 따라서 solution의 형태는 달라질 수 있음.)

- E(t_1, t_2): t1을 기반으로 t2가 생성되었음을 뜻함.

- c: node 별로 class가 다를 수 있음. (e.g. writing paragraph task에서, plans, actual paragraph가 각각 node가 될 수 있음)

Transformations of Thoughts

Aggregation Transformations

하나의 thought로 합치기

Refining Transformations

self-loop

Generation Transformations

여러개의 subtask로 분할 or 새로운 thought들로

Scoring & Ranking Thoughts

- 이것도 task별로 어떻게 계산할지 다름.

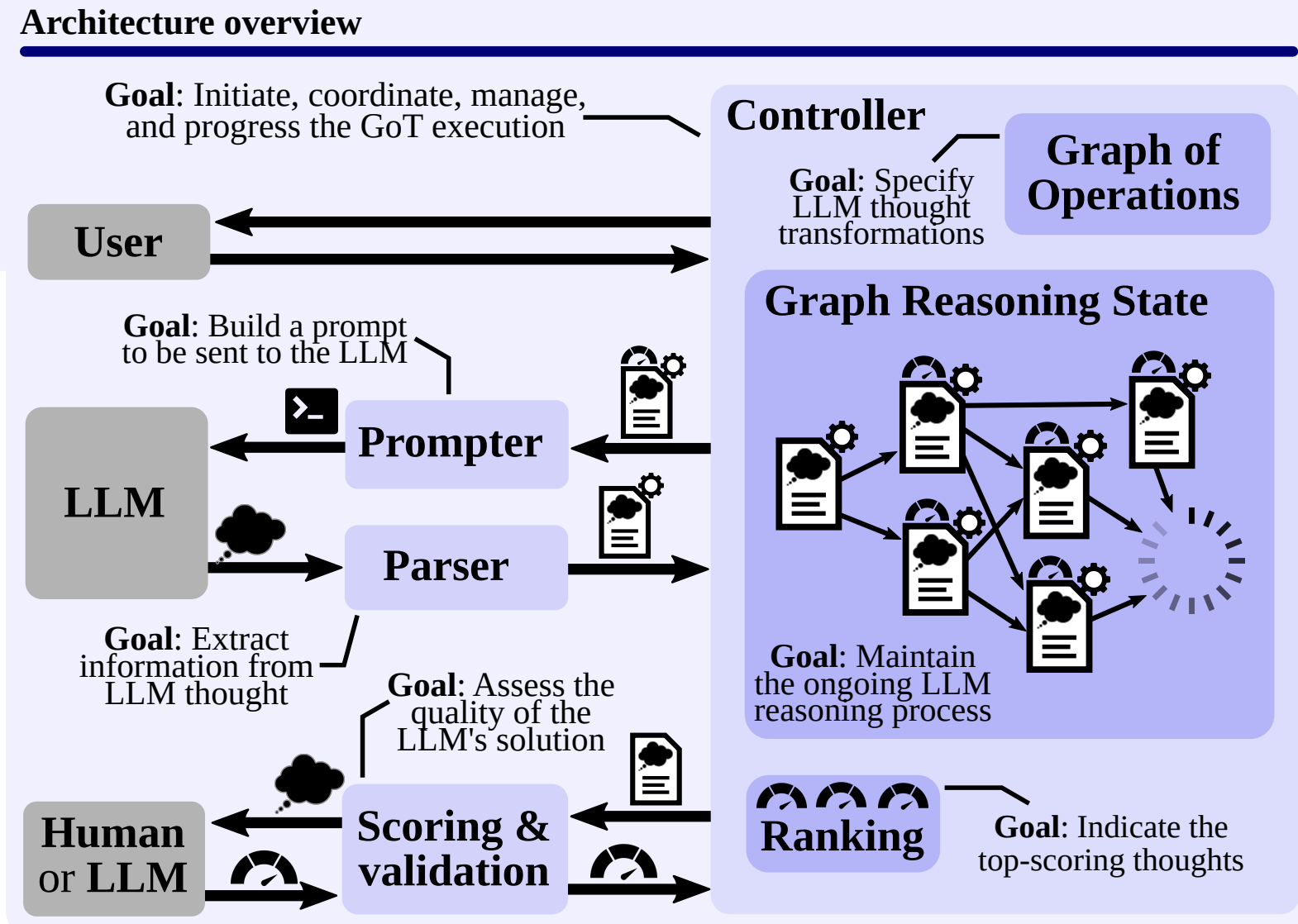

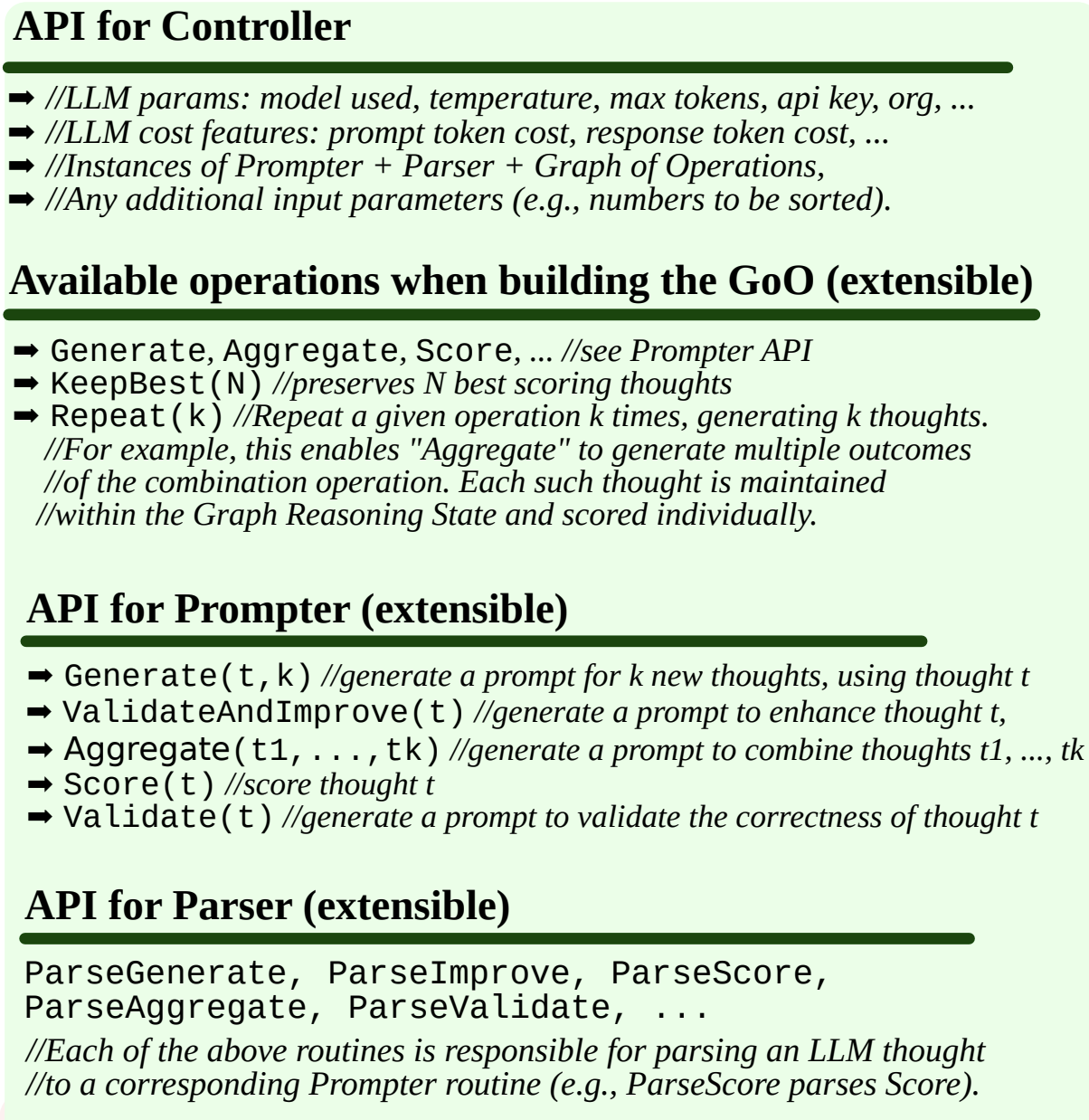

System Architecture & Extensibility

구성 요소

Prompter

: LLM에게 줄 메세지 준비

Parser

: LLM thought에서 정보 추출

Scoring & Validation

: LLM thought를 verify

Controller

: 전체 reasoning process를 총괄하고, 어떻게 진행할 지 결정

- Controller를 이루는 두 개 요소

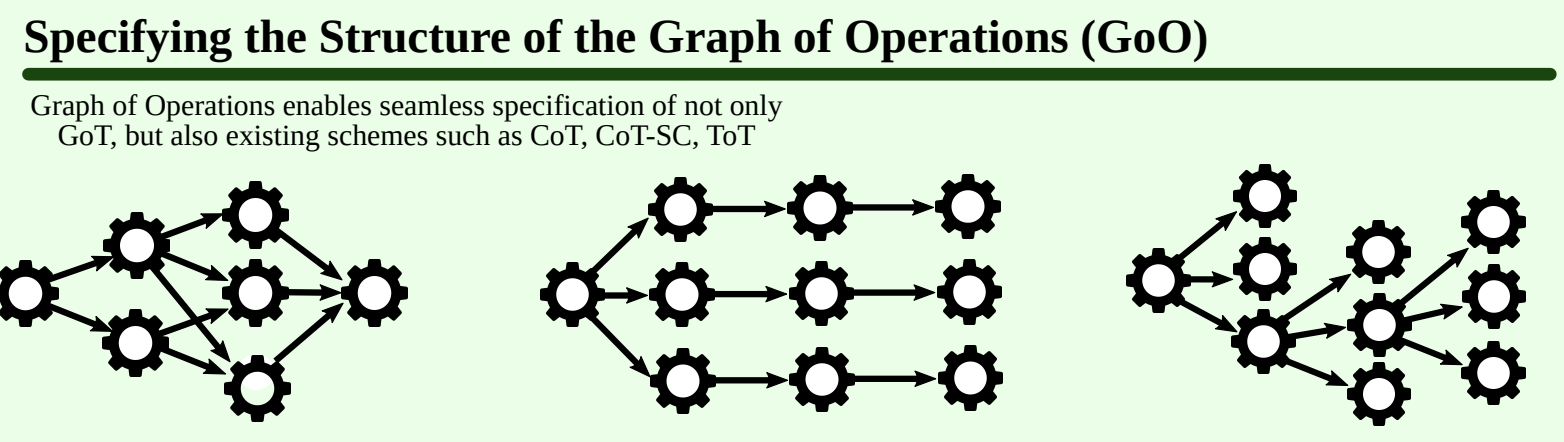

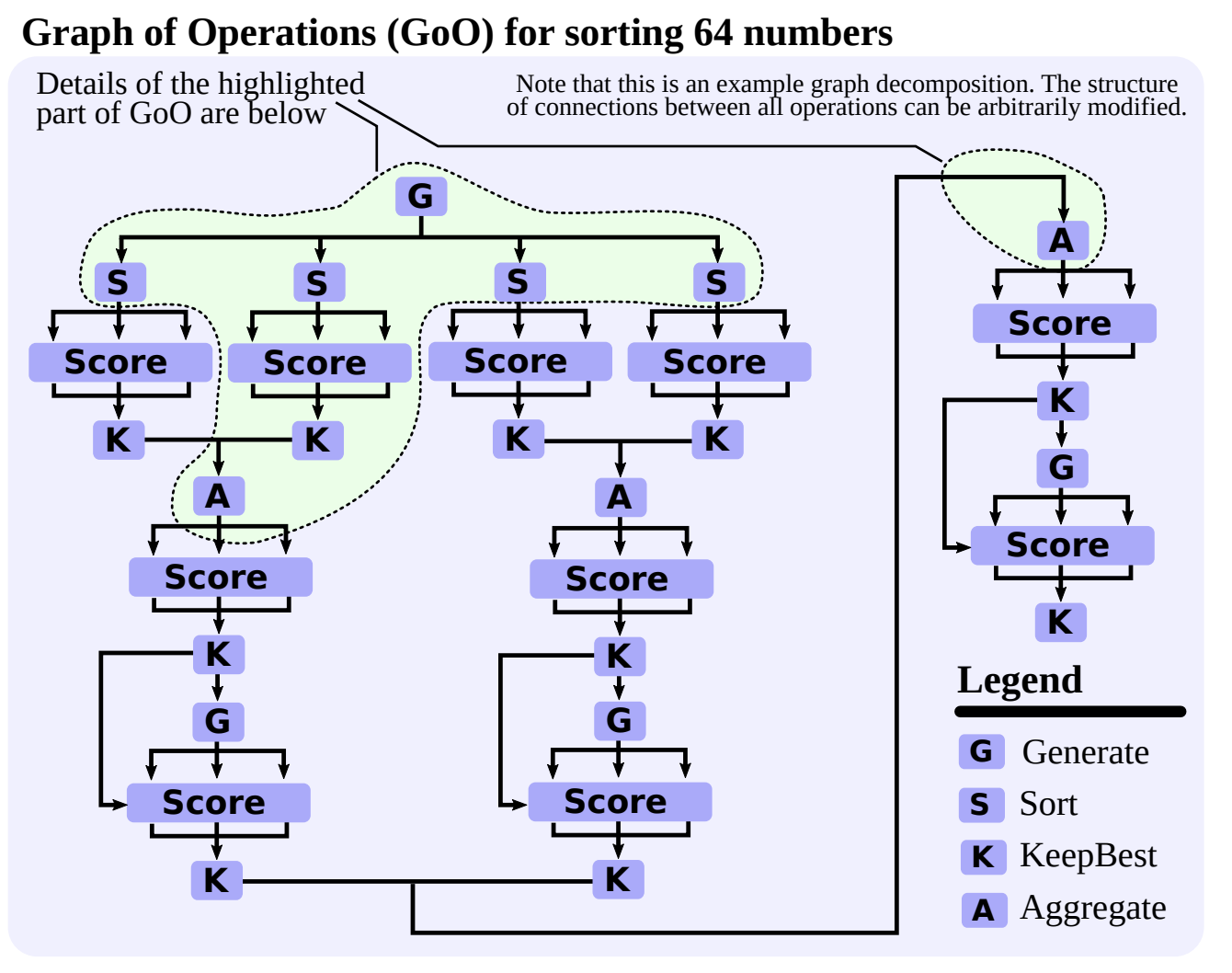

- Graph of Perations (GoO): 태스크별로 고정된 graph decomposition (subtask로 분해) static structure.

task가 뭐냐에 따라 사전에 정의되어 있어야함 (trasformation의 순서나, dependencies). 유동적으로 알아서 해주는 줄 알았는데, 그게 아니었음. 이게 GoT의 한계인듯. 분해되지 않는 task엔 적용하기 힘들듯..??

- Graph Reasoning state (GRS): dynamic structure. LLM reasoning process의 상태 (history of its thoughts, and their states)

- Graph of Perations (GoO): 태스크별로 고정된 graph decomposition (subtask로 분해) static structure.

APIs

docomentation

Examples of prompts using APIs

예시 코드: Generate

class Generate(Operation):

"""

Operation to generate thoughts.

"""

operation_type: OperationType = OperationType.generate

def __init__(

self, num_branches_prompt: int = 1, num_branches_response: int = 1

) -> None:

"""

Initializes a new Generate operation.

:param num_branches_prompt: Number of responses that each prompt should generate (passed to prompter). Defaults to 1.

:type num_branches_prompt: int

:param num_branches_response: Number of responses the LM should generate for each prompt. Defaults to 1.

:type num_branches_response: int

"""

super().__init__()

self.num_branches_prompt: int = num_branches_prompt

self.num_branches_response: int = num_branches_response

self.thoughts: List[Thought] = []

def get_thoughts(self) -> List[Thought]:

"""

Returns the thoughts associated with the operation.

:return: List of generated thoughts.

:rtype: List[Thought]

"""

return self.thoughts

def _execute(

self, lm: AbstractLanguageModel, prompter: Prompter, parser: Parser, **kwargs

) -> None:

"""

Executes the Generate operation by generating thoughts from the predecessors.

The thoughts are generated by prompting the LM with the predecessors' thought states.

If there are no predecessors, the kwargs are used as a base state.

:param lm: The language model to be used.

:type lm: AbstractLanguageModel

:param prompter: The prompter for crafting prompts.

:type prompter: Prompter

:param parser: The parser for parsing responses.

:type parser: Parser

:param kwargs: Additional parameters for execution.

"""

previous_thoughts: List[Thought] = self.get_previous_thoughts()

if len(previous_thoughts) == 0 and len(self.predecessors) > 0:

return

if len(previous_thoughts) == 0:

# no predecessors, use kwargs as base state

previous_thoughts = [Thought(state=kwargs)]

# 이전 thought 있을 경우!! task가 generate 이라 generate prompt 가져옴

for thought in previous_thoughts:

base_state = thought.state

prompt = prompter.generate_prompt(self.num_branches_prompt, **base_state)

self.logger.debug("Prompt for LM: %s", prompt)

responses = lm.get_response_texts(

lm.query(prompt, num_responses=self.num_branches_response)

)

self.logger.debug("Responses from LM: %s", responses)

for new_state in parser.parse_generate_answer(base_state, responses):

new_state = {**base_state, **new_state}

self.thoughts.append(Thought(new_state))

self.logger.debug(

"New thought %d created with state %s",

self.thoughts[-1].id,

self.thoughts[-1].state,

)

if (

len(self.thoughts)

> self.num_branches_prompt

* self.num_branches_response

* len(previous_thoughts)

and self.num_branches_prompt > 0

):

self.logger.warning(

"Generate operation %d created more thoughts than expected",

self.id,

)

self.logger.info(

"Generate operation %d created %d new thoughts", self.id, len(self.thoughts)

)

Class Improve

for thought in previous_thoughts:

improve_prompt = prompter.improve_prompt(**thought.state)

self.logger.debug("Prompt for LM: %s", improve_prompt)

responses = lm.get_response_texts(lm.query(improve_prompt, num_responses=1))

self.logger.debug("Responses from LM: %s", responses)

state_update = parser.parse_improve_answer(thought.state, responses)

self.thoughts.append(Thought({**thought.state, **state_update}))generate prompt

=> 다 사전 정의되어 있어야하는 것이 코드에서도 확인되는 구만!

if current is None or current == "":

return self.got_split_prompt.format(input=input)

# if current is just a sublist of the original input, return the split prompt

if kwargs["phase"] == 1:

return self.sort_prompt.format(input=current)

if (

"unsorted_sublist" in kwargs

and kwargs["unsorted_sublist"] != ""

and len(kwargs["unsorted_sublist"]) < len(original) - 5

):

original = kwargs["unsorted_sublist"]

return self.tot_improve_prompt.format(

input=original,

incorrectly_sorted=current,

length=len(utils.string_to_list(original)),

)

Example Use Cases

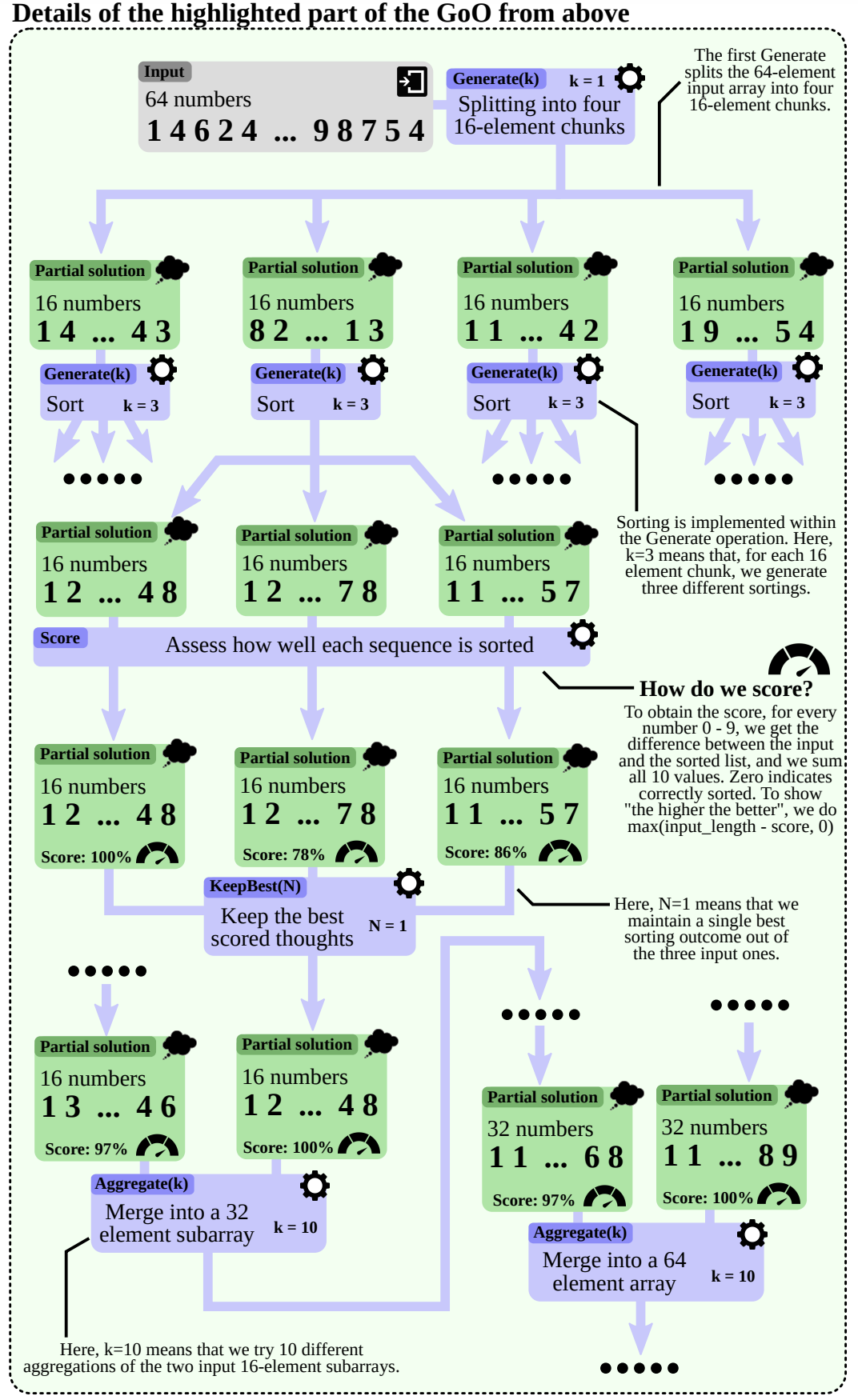

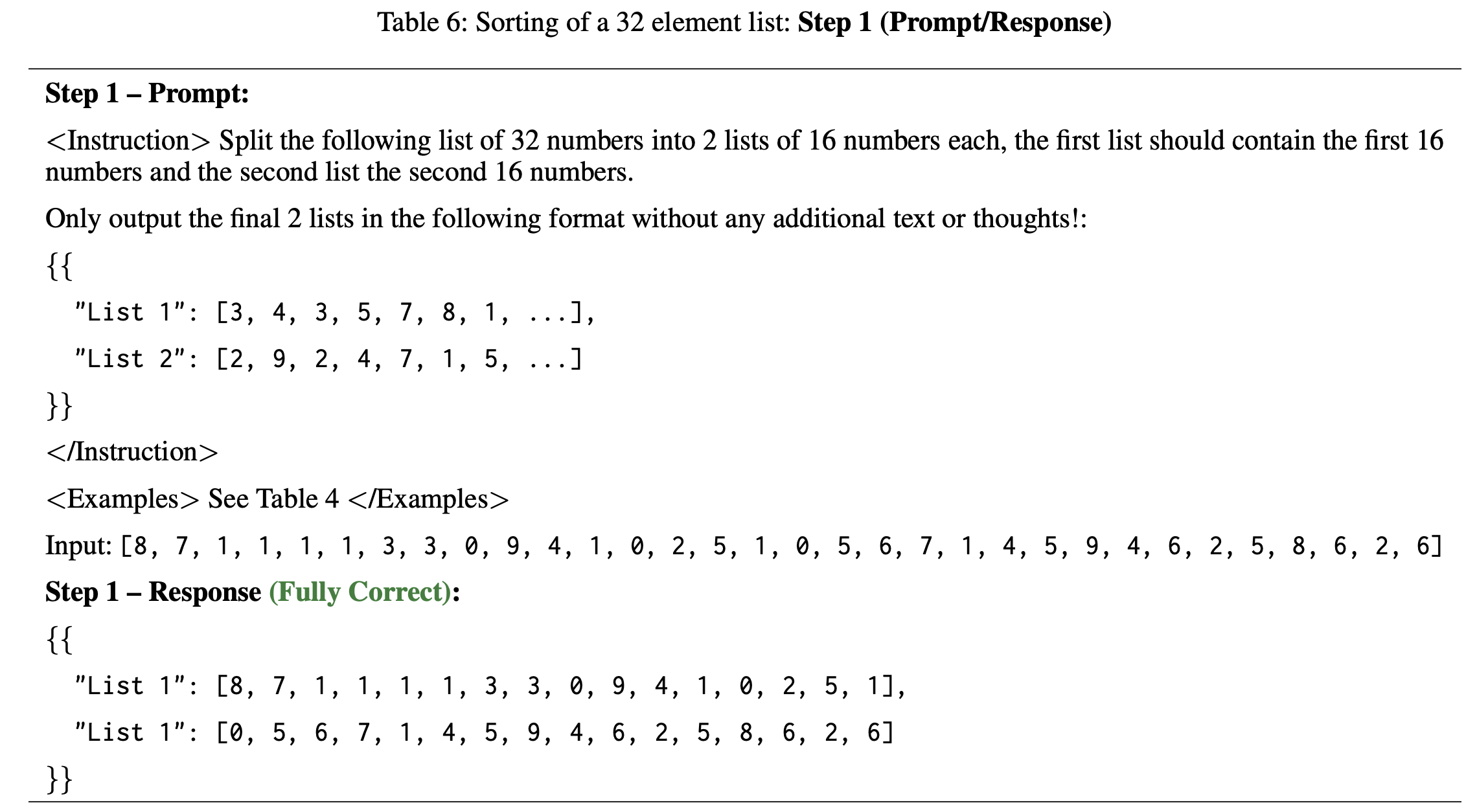

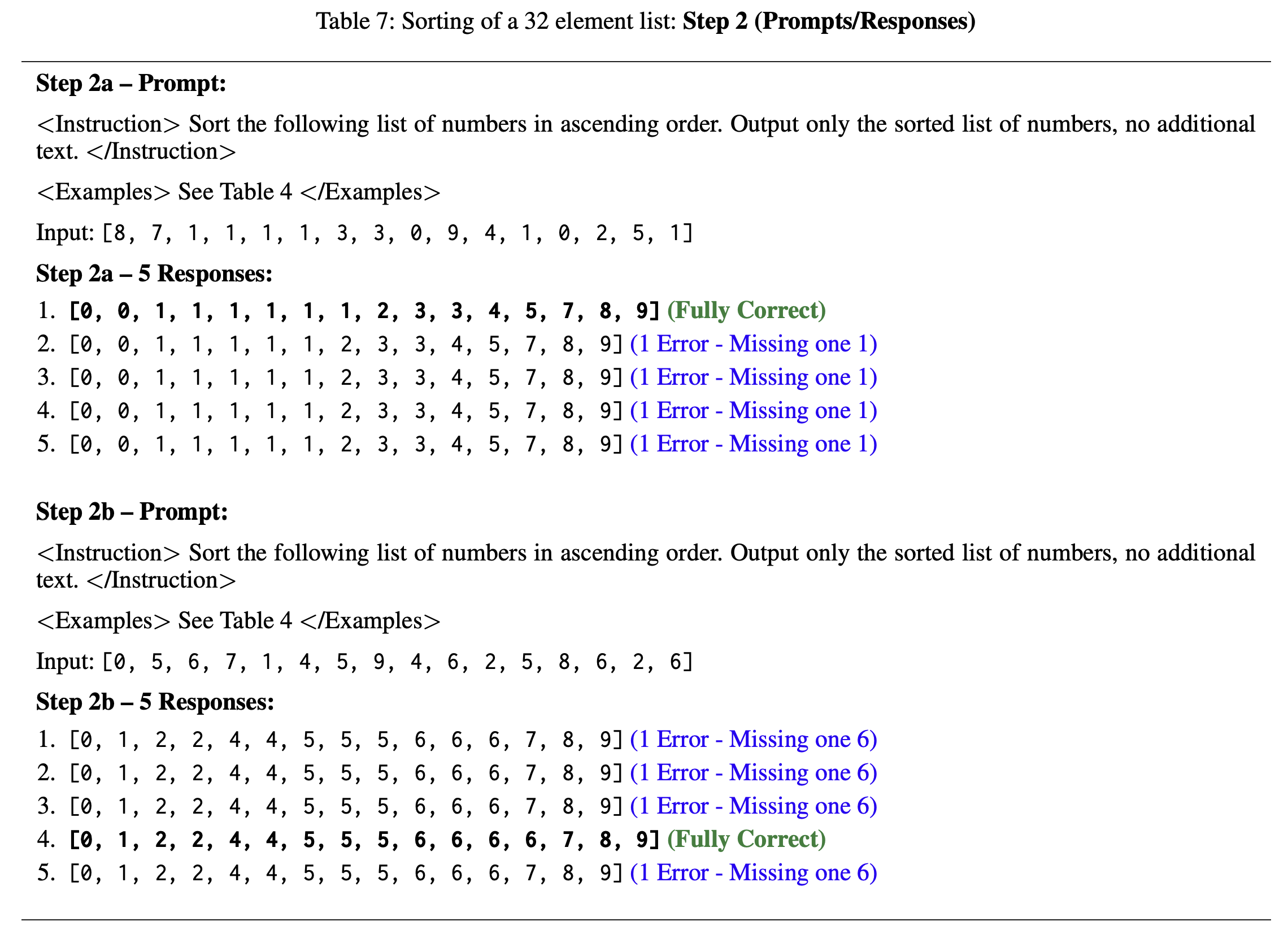

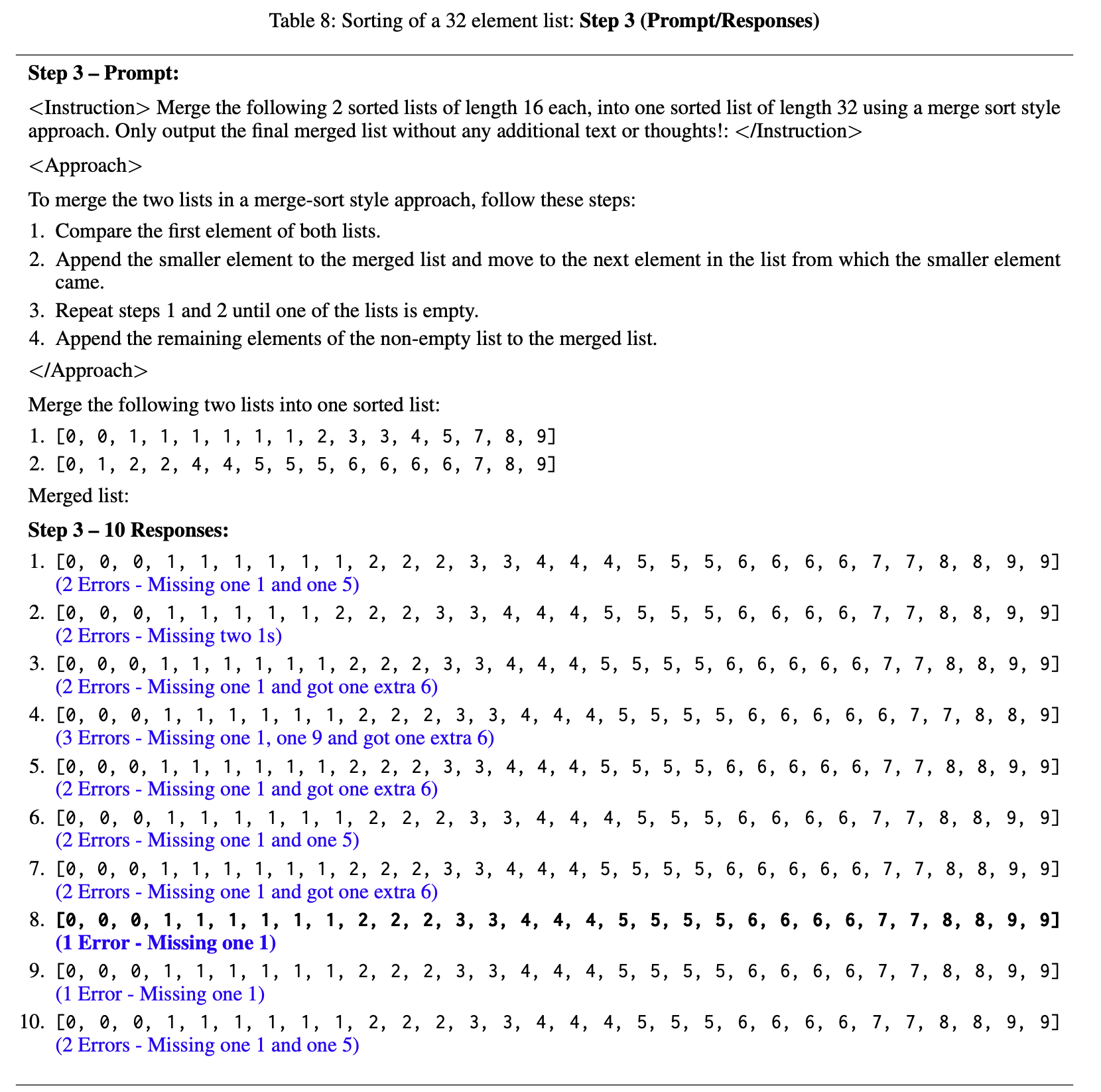

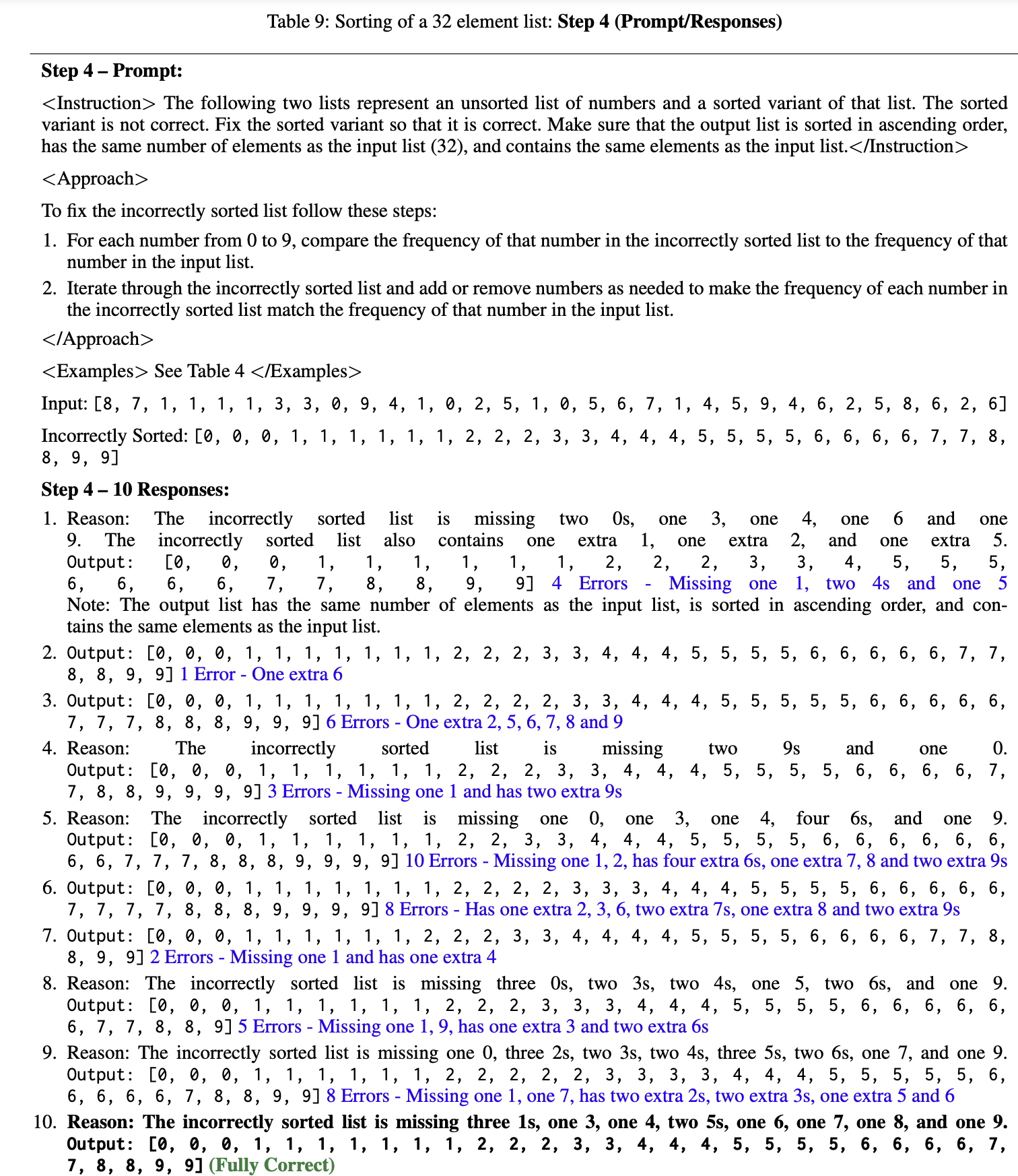

Sorting

Set Operations

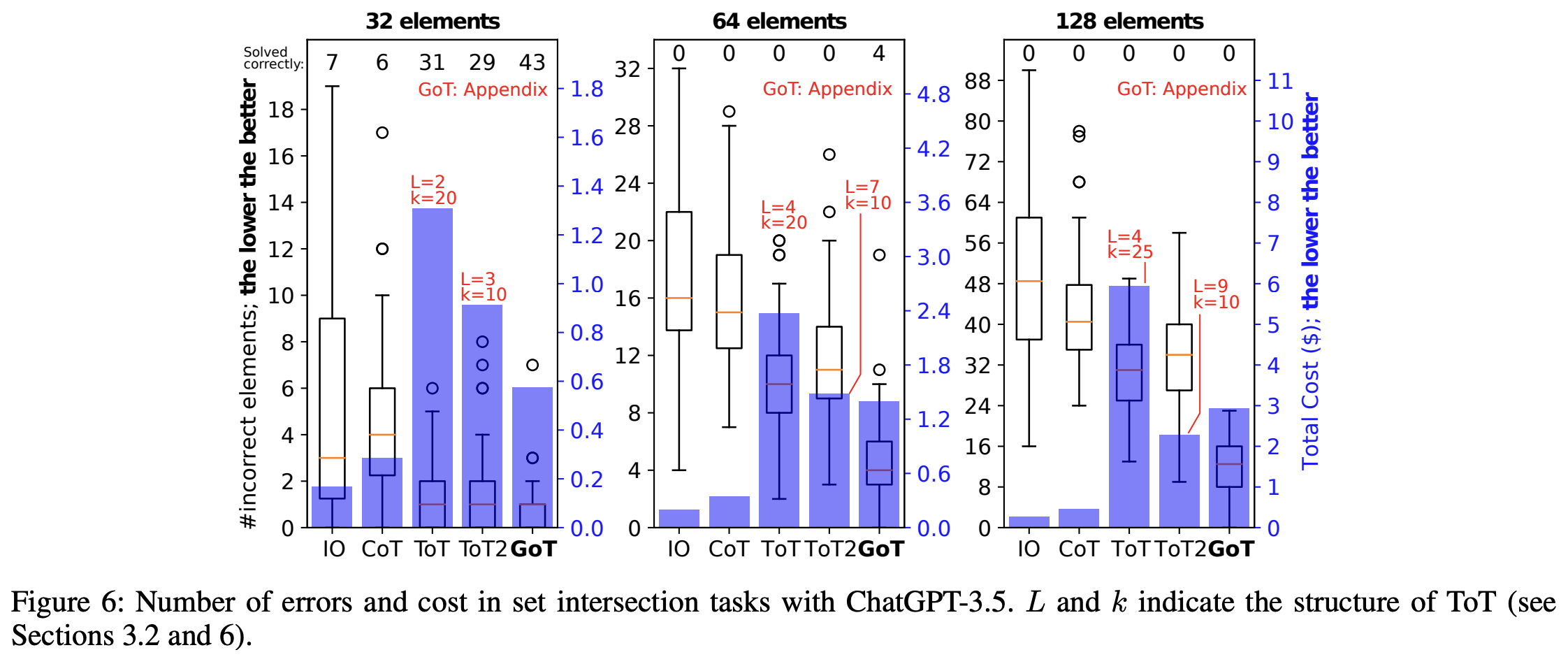

- Sorting이랑 똑같은데, input이 두개임. 하나 sorting한 후에 새로 set intersection. 디테일한 건 논문 참고

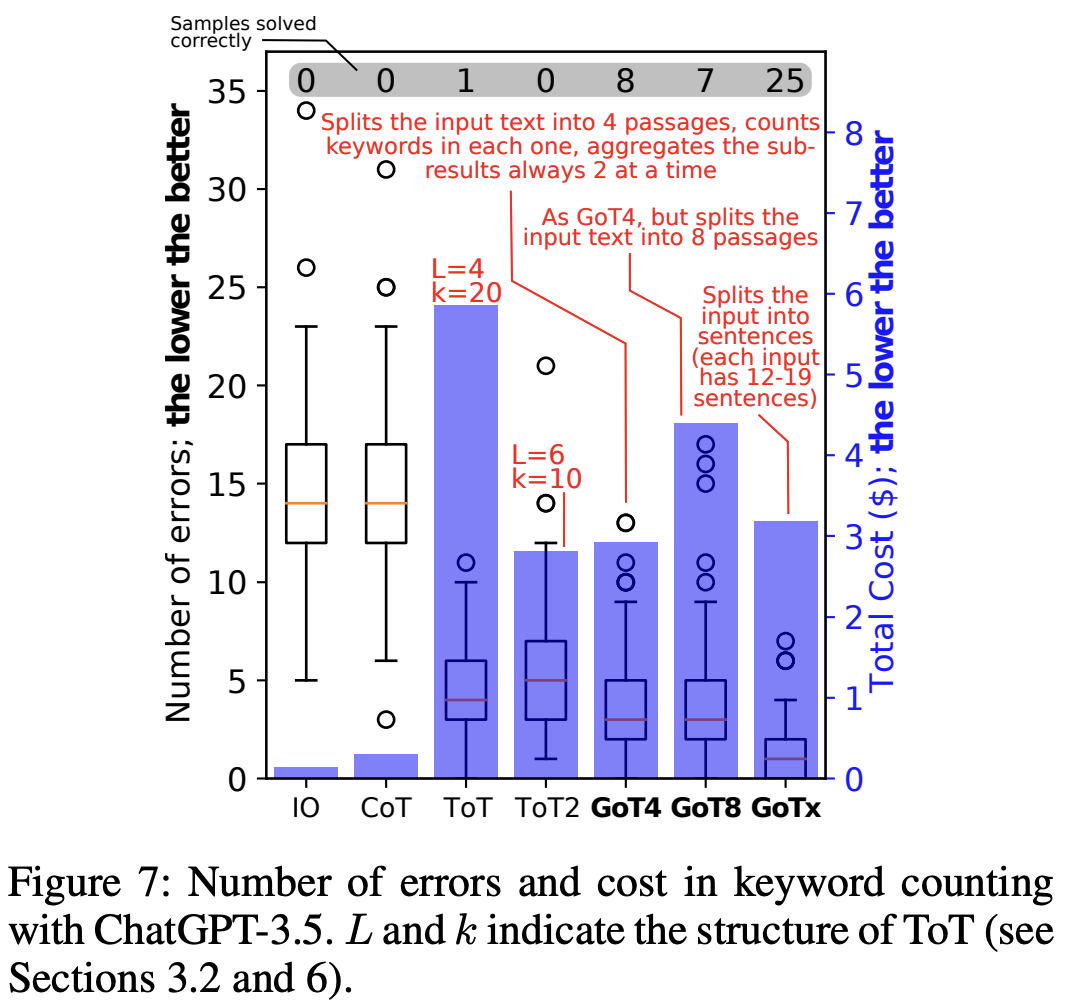

Keywrod Counting

- text안에 특정 keyword 갯수 세기 (e.g. 나라 이름 몇개?)

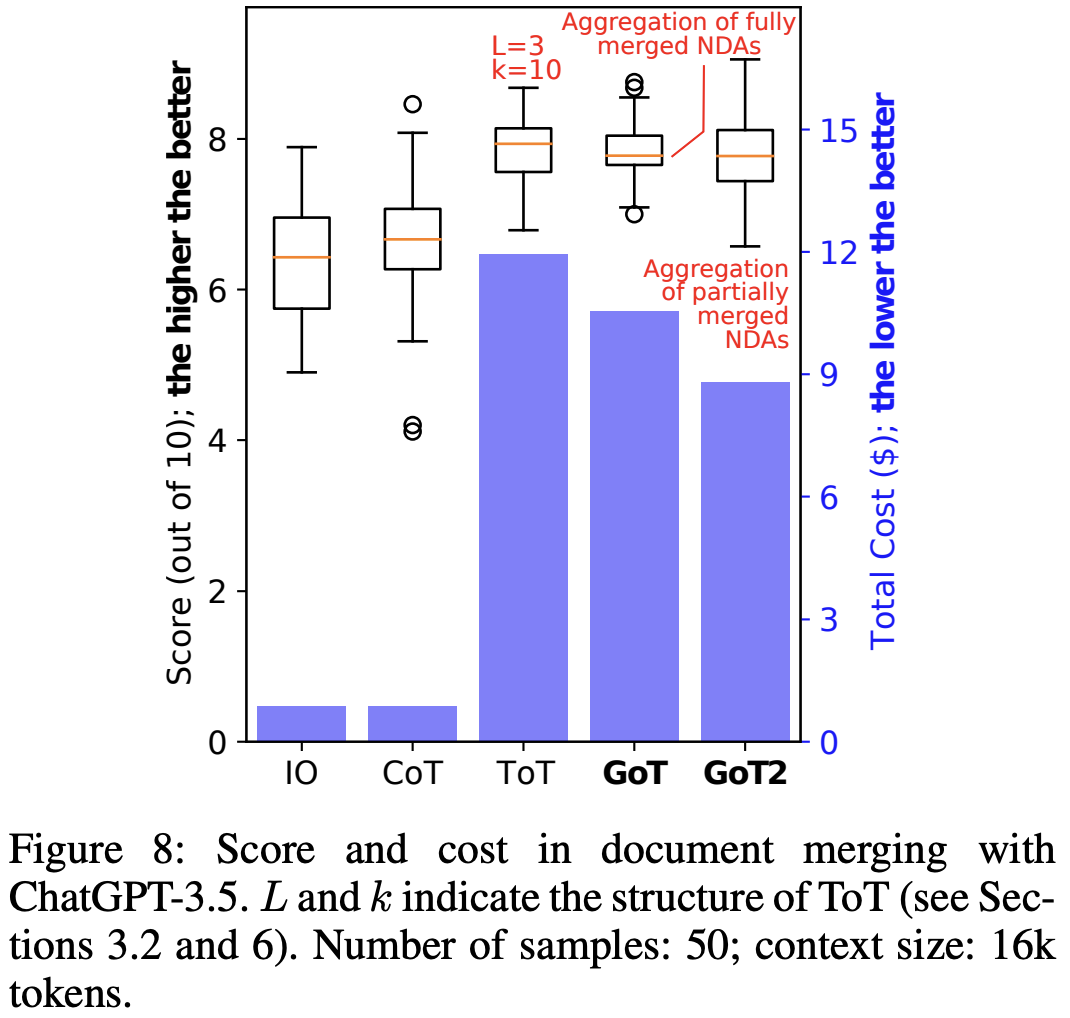

Document Merging

- 여러 개 문장들을 합쳐서 하나의 문서로 만들기

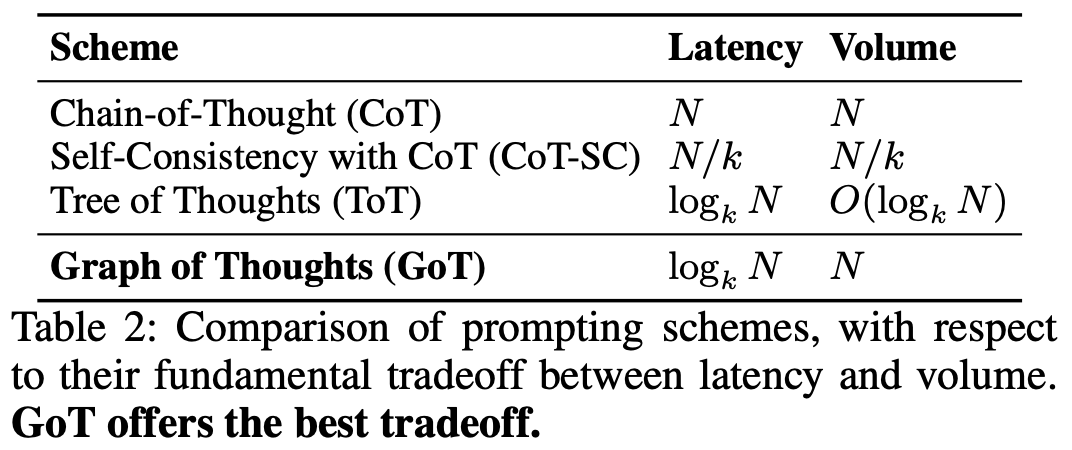

The Latency-Volume Tradeoff

- Notation

- latency: 최종 output에 도달할 때까지 graph의 hop수

- volume: e.g) 최종 thought N이 만들어지는데 영향을 미친 이전 thought 갯수.

- O(1) : single thougth 출력해낼 때의 cost

- k: 새로운 thoughts갯수, branching factor (각 노드에서 뻗어나가는 가지 갯수)

- log_k N : k-진 트리 깊이

- 결과

- ToT는 만들어지는 hop, thought들은 많은데, 결정에 영향을 미치는 애는 적다.

- CoT-SC 최종 결과에 영향을 미치는 애들 냅두고 나머지는 삭제되어서 N/K

- GoT에서는 트리의 리프에서 반대 방향으로 연결된 구조를 가지고 있기 때문에, 이 구조는 모든 중간 생각들에서 최종 생각까지 도달할 수 있는 경로가 존재. (backtracking)

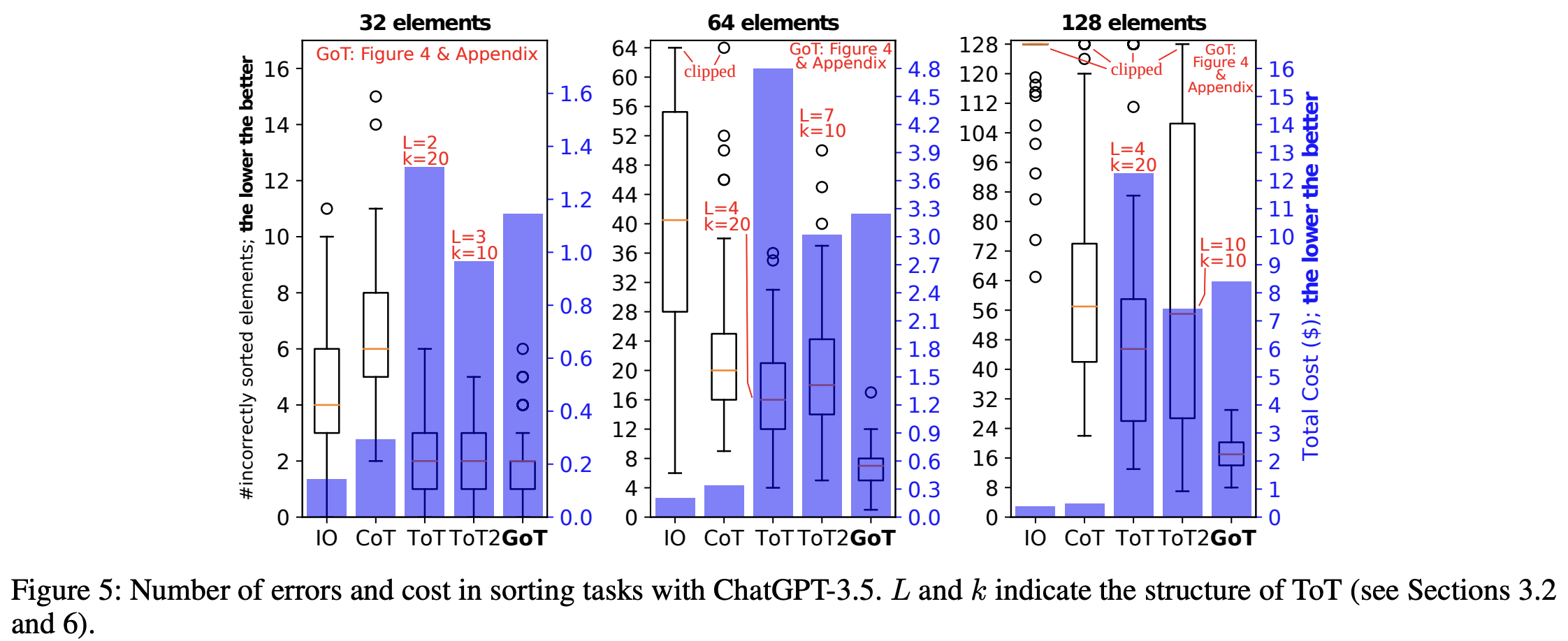

Evaluation

Evaluation Methodology

- GPT3.5 사용

- ToT2: lower k (각 노드에서 뻗어나가는 가지 갯수) but higher Layer (트리 깊이)

- k가 많을 수록 한번에 여러가지 옵션 고려하는 거라 계산량 증가될 수 있음.

- 반대로 k가 적으면 선택지가 줄어서 계산은 덜 복잡하지만, 단계가 더 필요할 수 있음 (trade off 관계)

Analysis of GoT's Advantages

GoT vs ToT

ToT보다 성능과 cost더 좋음

GoT vs IO & CoT

- median error CoT보다 65%, IO보다 83% 더 낮음

multiple generation이 효과적인 성능 영향

Increasing Complexity of Tackled Problems

Task가 복잡해져도 잘한다

P=128일 때 (task 수가 128개)

Task별 결과

-

Sorting

-

Set intersection

-

Keyword Counting

-

Document Merging

Discussion on Task Decompostion

- Task Decomposition의 장점 (GoT4 Vs GoT8 Vs GoTx)

- 비용 효율성을 높이고 LLM이 더 쉽게 작업을 해결할 수 있도록 도움.

- few-shot 예시의 크기를 줄이는 것이 프롬프트 오버헤드를 줄이는 데 효과적

- 하위 작업의 결과를 결합하는 것이 전체 작업을 한 번에 해결하는 것보다 더 쉬움

GoT라는 새로운 개념 알수있어서 좋았습니다!! 재밌는 내용이네요 !! 🤩