github : github.com/hayannn/AIFFEL_MAIN_QUEST/NewsCategoryMultipleClassification

18-1. Introduction

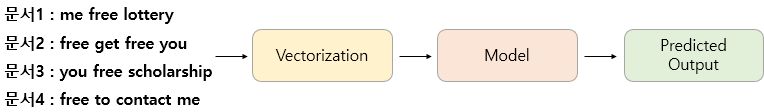

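Intro. Text classification with machine learning

- Text classification

- Assigns a given text to one of a set of pre-defined classes

- e.g. automatic spam filtering, sentiment classification of user reviews, news category classification

- How the algorithm works

- Vectorize the given sentence (document) ➡️ feed it to the model ➡️ return the predicted category

- With deep learning: word embeddings for vectorization, and RNN, CNN, or BERT as the model

- This chapter uses classical ML rather than DL

- Practice creating features by vectorizing text without deep learning

- The task is multiclass classification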

What we will cover

- Reuters news data

- Inspecting the data & its distribution

- Restoring the data (original text)

- Vectorization

- Naive Bayes classifier (scikit-learn)

- F1-Score, Confusion Matrix

- Using machine learning models

- Complement Naive Bayes Classifier(CNB)

- Logistic Regression

- Linear Support Vector Machine

- Decision Tree

- Random Forest

- Gradient boosting trees (GradientBoostingClassifier)

- Voting

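Learning objectives

- Understand the Reuters news data and check its distribution

- Understand and use the F1-Score and the Confusion Matrix

- Use machine learning models & compare their performance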

Preparation

- Cloud environment

$ mkdir -p ~/aiffel/reuters_classifiaction

18-2. Reuters news data (1): Inspecting the data

Reuters news data

- 46 classes

- The task is to predict which category a news article belongs to

- Provided as a TensorFlow dataset

Download

from tensorflow.keras.datasets import reuters

import matplotlib.pyplot as plt

import seaborn as sns

import numpy as np

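import pandas as pd

Loading the train and test data

The data is stored split into train and test sets

num_words: limits how many of the most frequent words are used

- Here it means only words numbered 1 to 10,000 are used

- Words mapped to integers above 10,000 are not dropped from the articles; they are all mapped to one specific index

- This keeps OOV (out-of-vocabulary) words from causing problems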

(x_train, y_train), (x_test, y_test) = reuters.load_data(num_words=10000, test_split=0.2)

Checking the data size

print('Number of training samples: {}'.format(len(x_train)))

print('Number of test samples: {}'.format(len(x_test)))

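Printing the data

- Train: 8,982 samples, Test: 2,246 samples (8:2)

- The output is a sequence of numbers

- The news data is downloaded with each word already converted to its corresponding number

- In other words, the text has already been converted to numbers!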

print(x_train[0])

print(x_test[0])

[1, 2, 2, 8, 43, 10, 447, 5, 25, 207, 270, 5, 3095, 111, 16, 369, 186, 90, 67, 7, 89, 5, 19, 102, 6, 19, 124, 15, 90, 67, 84, 22, 482, 26, 7, 48, 4, 49, 8, 864, 39, 209, 154, 6, 151, 6, 83, 11, 15, 22, 155, 11, 15, 7, 48, 9, 4579, 1005, 504, 6, 258, 6, 272, 11, 15, 22, 134, 44, 11, 15, 16, 8, 197, 1245, 90, 67, 52, 29, 209, 30, 32, 132, 6, 109, 15, 17, 12]

[1, 4, 1378, 2025, 9, 697, 4622, 111, 8, 25, 109, 29, 3650, 11, 150, 244, 364, 33, 30, 30, 1398, 333, 6, 2, 159, 9, 1084, 363, 13, 2, 71, 9, 2, 71, 117, 4, 225, 78, 206, 10, 9, 1214, 8, 4, 270, 5, 2, 7, 748, 48, 9, 2, 7, 207, 1451, 966, 1864, 793, 97, 133, 336, 7, 4, 493, 98, 273, 104, 284, 25, 39, 338, 22, 905, 220, 3465, 644, 59, 20, 6, 119, 61, 11, 15, 58, 579, 26, 10, 67, 7, 4, 738, 98, 43, 88, 333, 722, 12, 20, 6, 19, 746, 35, 15, 10, 9, 1214, 855, 129, 783, 21, 4, 2280, 244, 364, 51, 16, 299, 452, 16, 515, 4, 99, 29, 5, 4, 364, 281, 48, 10, 9, 1214, 23, 644, 47, 20, 324, 27, 56, 2, 2, 5, 192, 510, 17, 12]

Printing the labels

- Labels are integers

print(y_train[0])

print(y_test[0])

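Checking the number of classes

- Labels start at 0 ➡️ the number of classes is the maximum label + 1

num_classes = max(y_train) + 1

print('Number of classes: {}'.format(num_classes))

46 classes is not a small number, so reaching high accuracy does not look easy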

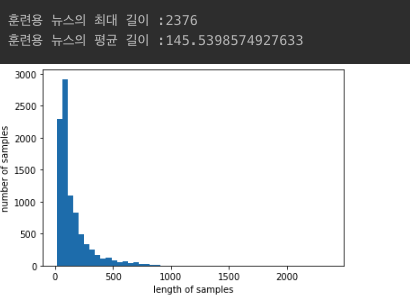

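Checking the data distribution

- Distribution of article lengths

- The gap between the mean and the maximum length is large

- A few articles appear to be between 500 and 1,000 tokens long

print('Maximum length of training articles: {}'.format(max(len(l) for l in x_train)))

print('Average length of training articles: {}'.format(sum(map(len, x_train))/len(x_train)))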

plt.hist([len(s) for s in x_train], bins=50)

plt.xlabel('length of samples')

plt.ylabel('number of samples')

plt.show()

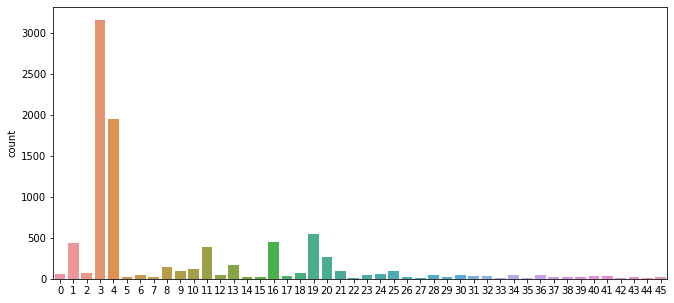

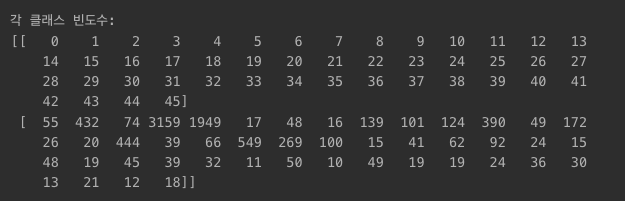

Checking the class distribution

- It strongly affects model performance

- Classes 3 and 4 make up most of the data, followed by 19, 16, 1, 11, ...

fig, axe = plt.subplots(ncols=1)

fig.set_size_inches(11,5)

sns.countplot(x=y_train)

plt.show()

- Checking again with exact counts

- Class 3: 3,159

- Class 4: 1,949

- Class 19: 549

- Class 16: 444

unique_elements, counts_elements = np.unique(y_train, return_counts=True)

print("Frequency of each class:")

print(np.asarray((unique_elements, counts_elements)))
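To make the imbalance concrete, the counts quoted above can be turned into shares of the training set. This is only a small sketch using the four counts and the 8,982 training samples reported in these notes; the variable names are illustrative.

```python
# Class counts quoted in the notes above (training set of 8,982 articles).
counts = {3: 3159, 4: 1949, 19: 549, 16: 444}
total_train = 8982

shares = {label: n / total_train for label, n in counts.items()}
for label, share in shares.items():
    print(f"class {label}: {share:.1%}")

# Training-set accuracy of a trivial model that always predicts the most frequent class.
majority_baseline = max(shares.values())
print(f"majority-class baseline: {majority_baseline:.1%}")
```

Classes 3 and 4 together cover more than half of the training data, which is why accuracy alone can look better than the model really is.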

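18-3. Reuters news data (2): Restoring the text

Restoring the original news text

- This dataset ships already converted to integer sequences

- In the general case, however, converting text to numbers comes first, so we restore the original text before vectorizing

word_index

- A dictionary with words as keys and integers as values

- Stored in word_index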

word_index = reuters.get_word_index(path="reuters_word_index.json")

Checking the mapping

- the

word_index['the']

- it

word_index['it']

Characteristics of the Reuters news data

- Indices 0, 1, and 2 are reserved for the special tokens <pad>, <sos>, and <unk>

- So the index actually used in the sequences is the word_index value + 3

index_to_word = { index+3 : word for word, index in word_index.items() }

print(index_to_word[4])

print(index_to_word[16])
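The +3 offset and the three reserved indices are easier to see on a tiny vocabulary. The sketch below uses a hypothetical `toy_word_index` in the same word-to-rank format, not the real Reuters vocabulary.

```python
# Hypothetical toy vocabulary in the same format as word_index:
# word -> frequency rank, starting at 1.
toy_word_index = {"the": 1, "of": 2, "said": 5}

# Shift every rank by 3 because 0, 1, 2 are reserved for special tokens.
toy_index_to_word = {rank + 3: word for word, rank in toy_word_index.items()}
for index, token in enumerate(("<pad>", "<sos>", "<unk>")):
    toy_index_to_word[index] = token

encoded = [1, 4, 8, 2, 5]
decoded_text = " ".join(toy_index_to_word[i] for i in encoded)
print(decoded_text)  # <sos> the said <unk> of
```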

Adding the special tokens to index_to_word

- The mapping is complete only after the special tokens for indices 0, 1, and 2 are added

for index, token in enumerate(("<pad>", "<sos>", "<unk>")):

    index_to_word[index] = token

Restoring the first training article ➡️ original text

- Because some preprocessing was already applied, the text is not fully natural, but it still reads as text with context

print(' '.join([index_to_word[index] for index in x_train[0]]))

<sos> <unk> <unk> said as a result of its december acquisition of space co it expects earnings per share in 1987 of 1 15 to 1 30 dlrs per share up from 70 cts in 1986 the company said pretax net should rise to nine to 10 mln dlrs from six mln dlrs in 1986 and rental operation revenues to 19 to 22 mln dlrs from 12 5 mln dlrs it said cash flow per share this year should be 2 50 to three dlrs reuter 3

OOV problem and the UNK token

- OOV (Out-Of-Vocabulary) or UNK (Unknown)

- Refers to words that were never learned (words outside the vocabulary)

- Unknown words ➡️ converted to the <unk> token

(x_train, y_train), (x_test, y_test) = reuters.load_data(num_words=10000, test_split=0.2)

- num_words=10000

- Words beyond the 10,000 limit were replaced by the index of the <unk> token, i.e. 2, in the output

Q. 정수 시퀀스 [4, 587, 23, 133, 6, 30, 515]를 index_word를 이용해 텍스트 시퀀스로 변환

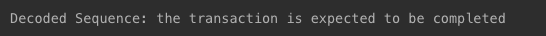

sequence = [4, 587, 23, 133, 6, 30, 515] decoded_sequence = [index_to_word.get(index, '?') for index in sequence] print("Decoded Sequence:", " ".join(decoded_sequence))

Q. [4, 12000, 23, 133, 6, 30, 515] -> 실제 출력 정수 시퀀스 추측

A. [4, 2, 23, 133, 6, 30, 515]

- 12000의 경우에는, 상위 10,000개의 단어만 유지되니 범위를 넘어선 것이기 때문에 OOV가 되어 2로 출력됨
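The answer above can be reproduced with a few lines of plain Python. This is a minimal sketch that mimics the effect of `num_words`; `apply_num_words` is a hypothetical helper written for illustration, not the loader's actual implementation.

```python
def apply_num_words(sequence, num_words=10000, oov_index=2):
    """Replace every index outside the kept vocabulary with the <unk> index."""
    return [i if i < num_words else oov_index for i in sequence]

result = apply_num_words([4, 12000, 23, 133, 6, 30, 515])
print(result)  # [4, 2, 23, 133, 6, 30, 515]
```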

Converting all training and test articles to text

- train

decoded = []

for i in range(len(x_train)):

    t = ' '.join([index_to_word[index] for index in x_train[i]])

    decoded.append(t)

x_train = decoded

print(len(x_train))

- test

decoded = []

for i in range(len(x_test)):

    t = ' '.join([index_to_word[index] for index in x_test[i]])

    decoded.append(t)

x_test = decoded

print(len(x_test))

- Check

x_train[:5]

['<sos> <unk> <unk> said as a result of its december acquisition of space co it expects earnings per share in 1987 of 1 15 to 1 30 dlrs per share up from 70 cts in 1986 the company said pretax net should rise to nine to 10 mln dlrs from six mln dlrs in 1986 and rental operation revenues to 19 to 22 mln dlrs from 12 5 mln dlrs it said cash flow per share this year should be 2 50 to three dlrs reuter 3',

'<sos> generale de banque sa lt <unk> br and lt heller overseas corp of chicago have each taken 50 pct stakes in <unk> company sa <unk> factors generale de banque said in a statement it gave no financial details of the transaction sa <unk> <unk> turnover in 1986 was 17 5 billion belgian francs reuter 3',

'<sos> shr 3 28 dlrs vs 22 cts shr diluted 2 99 dlrs vs 22 cts net 46 0 mln vs 3 328 000 avg shrs 14 0 mln vs 15 2 mln year shr 5 41 dlrs vs 1 56 dlrs shr diluted 4 94 dlrs vs 1 50 dlrs net 78 2 mln vs 25 9 mln avg shrs 14 5 mln vs 15 1 mln note earnings per share reflect the two for one split effective january 6 1987 per share amounts are calculated after preferred stock dividends loss continuing operations for the qtr 1986 includes gains of sale of investments in <unk> corp of 14 mln dlrs and associated companies of 4 189 000 less writedowns of investments in national <unk> inc of 11 8 mln and <unk> corp of 15 6 mln reuter 3',

"<sos> the farmers home administration the u s agriculture department's farm lending arm could lose about seven billion dlrs in outstanding principal on its severely <unk> borrowers or about one fourth of its farm loan portfolio the general accounting office gao said in remarks prepared for delivery to the senate agriculture committee brian crowley senior associate director of gao also said that a preliminary analysis of proposed changes in <unk> financial eligibility standards indicated as many as one half of <unk> borrowers who received new loans from the agency in 1986 would be <unk> under the proposed system the agency has proposed evaluating <unk> credit using a variety of financial ratios instead of relying solely on <unk> ability senate agriculture committee chairman patrick leahy d vt <unk> the proposed eligibility changes telling <unk> administrator <unk> clark at a hearing that they would mark a dramatic shift in the agency's purpose away from being farmers' lender of last resort toward becoming a big city bank but clark defended the new regulations saying the agency had a responsibility to <unk> its 70 billion dlr loan portfolio in a <unk> yet <unk> manner crowley of gao <unk> <unk> arm said the proposed credit <unk> system attempted to ensure that <unk> would make loans only to borrowers who had a reasonable change of repaying their debt reuter 3",

'<sos> seton co said its board has received a proposal from chairman and chief executive officer philip d <unk> to acquire seton for 15 75 dlrs per share in cash seton said the acquisition bid is subject to <unk> arranging the necessary financing it said he intends to ask other members of senior management to participate the company said <unk> owns 30 pct of seton stock and other management members another 7 5 pct seton said it has formed an independent board committee to consider the offer and has deferred the annual meeting it had scheduled for march 31 reuter 3']

x_test[:5]

['<sos> the great atlantic and pacific tea co said its three year 345 mln dlr capital program will be be substantially increased to <unk> growth and expansion plans for <unk> inc and <unk> inc over the next two years a and p said the acquisition of <unk> in august 1986 and <unk> in december helped us achieve better than expected results in the fourth quarter ended february 28 its net income from continuing operations jumped 52 6 pct to 20 7 mln dlrs or 55 cts a share in the latest quarter as sales increased 48 3 pct to 1 58 billion dlrs a and p gave no details on the expanded capital program but it did say it completed the first year of the program during 1986 a and p is 52 4 pct owned by lt <unk> <unk> of west germany reuter 3',

"<sos> philippine sugar production in the 1987 88 crop year ending august has been set at 1 6 mln tonnes up from a provisional 1 3 mln tonnes this year sugar regulatory administration <unk> chairman <unk> yulo said yulo told reuters a survey during the current milling season which ends next month showed the 1986 87 estimate would almost certainly be met he said at least 1 2 mln tonnes of the 1987 88 crop would be earmarked for domestic consumption yulo said about 130 000 tonnes would be set aside for the u s sugar quota 150 000 tonnes for strategic reserves and 50 000 tonnes would be sold on the world market he said if the government approved a long standing <unk> recommendation to manufacture ethanol the project would take up another 150 000 tonnes slightly raising the target the government for its own reasons has been delaying approval of the project but we expect it to come through by july yulo said ethanol could make up five pct of gasoline cutting the oil import bill by about 300 mln pesos yulo said three major philippine <unk> were ready to start manufacturing ethanol if the project was approved the ethanol project would result in employment for about 100 000 people sharply reducing those thrown out of work by depressed world sugar prices and a <unk> domestic industry production quotas set for the first time in 1987 88 had been submitted to president corazon aquino i think the president would rather wait <unk> the new congress <unk> after the may elections he said but there is really no need for such quotas we are right now producing just slightly over our own consumption level the producers have never enjoyed such high prices yulo said adding sugar was currently selling locally for 320 pesos per <unk> up from 190 pesos last august yulo said prices were driven up because of speculation following the <unk> bid to control production we are no longer concerned so much with the world market he said adding producers in the <unk> region had learned from their <unk> 
and diversified into corn and <unk> farming and <unk> production he said diversification into products other than ethanol was also possible within the sugar industry the <unk> long ago <unk> their <unk> yulo said they have 300 sugar mills compared with our 41 but they <unk> many of them and diversified production we want to call this a <unk> <unk> instead of the sugar industry he said sugarcane could be fed to pigs and livestock used for <unk> <unk> or used in room <unk> when you cut sugarcane you don't even have to produce sugar he said yulo said the philippines was lobbying for a renewal of the international sugar agreement which expired in 1984 as a major sugar producer we are urging them to write a new agreement which would revive world prices yulo said if there is no agreement world prices will always be depressed particularly because the european community is <unk> its producers and dumping sugar on the markets he said current world prices holding steady at about 7 60 cents per pound were <unk> for the philippines where production costs ranged from 12 to 14 cents a pound if the price holds steady for a while at 7 60 cents i expect the level to rise to about 11 cents a pound by the end of this year he said yulo said economists forecast a bullish sugar market by 1990 with world consumption <unk> production he said sugar markets were holding up despite <unk> from artificial sweeteners and high fructose corn syrup but we are not happy with the reagan administration he said since <unk> we have been regular suppliers of sugar to the u s in 1982 when they restored the quota system they cut <unk> in half without any justification manila was <unk> watching washington's moves to cut domestic support prices to 12 cents a pound from 18 cents the u s agriculture department last december slashed its 12 month 1987 sugar import quota from the philippines to 143 780 short tons from 231 660 short tons in 1986 yulo said despite next year's increased production target some 
philippine mills were expected to shut down at least four of the 41 mills were not working during the 1986 87 season he said we expect two or three more to follow suit during the next season reuter 3",

"<sos> the agriculture department's widening of louisiana gulf differentials will affect county posted prices for number two yellow corn in ten states a usda official said all counties in iowa will be affected as will counties which use the gulf to price corn in illinois indiana tennessee kentucky missouri mississippi arkansas alabama and louisiana said <unk> <unk> deputy director of commodity operations division for the usda usda last night notified the grain industry that effective immediately all gulf differentials used to price interior corn would be widened on a sliding scale basis of four to eight cts depending on what the differential is usda's action was taken to lower excessively high posted county prices for corn caused by high gulf prices we've been following this louisiana gulf situation for a month and we don't think it's going to get back in line in any nearby time <unk> said <unk> said usda will probably narrow back the gulf differentials when and if gulf prices <unk> if we're off the mark now because we're too high wouldn't we be as much off the mark if we're too low he said while forecasting more adjustments if gulf prices fall <unk> said no other changes in usda's price system are being planned right now we don't tinker we don't make changes <unk> and we don't make changes often he said reuter 3",

'<sos> <unk> <unk> oil and gas partnership said it completed the sale of interests in two major oil and gas fields to lt energy assets international corp for 21 mln dlrs the company said it sold about one half of its 50 pct interest in the oak hill and north <unk> fields its two largest producing properties it said it used about 20 mln dlrs of the proceeds to <unk> principal on its senior secured notes semi annual principal payments on the remaining 40 mln dlrs of notes have been satisfied until december 1988 as a result it said the company said the note agreements were amended to reflect an easing of some financial covenants and an increase of interest to 13 5 pct from 13 0 pct until december 1990 it said the <unk> exercise price for 1 125 000 warrants was also reduced to 50 cts from 1 50 dlrs the company said energy assets agreed to share the costs of increasing production at the oak hill field reuter 3',

'<sos> strong south <unk> winds were keeping many vessels trapped in the ice off the finnish and swedish coasts in one of the worst icy periods in the baltic for many years the finnish board of navigation said in finland and sweden up to 50 vessels were reported to be stuck in the ice and even the largest of the <unk> <unk> were having difficulties in breaking through to the <unk> ships <unk> officials said however icy conditions in the southern baltic at the soviet oil ports of <unk> and <unk> had eased they said weather officials in neighbouring sweden said the icy conditions in the baltic were the worst for 30 years with ships fighting a losing battle to keep moving in the coastal stretches of the gulf of <unk> which <unk> finland and sweden the ice is up to one <unk> thick with <unk> and <unk> packing it into almost <unk> walls three metres high swedish <unk> officials said weather forecasts say winds may ease during the weekend but a further drop in temperature could bring shipping to a standstill the officials said reuter 3']

18-4. Vectorization

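Importing the libraries for vectorization

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.feature_extraction.text import TfidfTransformer

DTM and TF-IDF matrices

- Text classification will be done with classical ML

- So vectorization also uses methods that do not rely on neural networks

- The number of DTM columns is not simply the sum of the document lengths

- Repeated words share a single column, so it can be much smaller

- One factor that hurts DTM-based performance: stopwords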

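DTM

- Created with scikit-learn's CountVectorizer()

- Build the DTM and check its shape

- Even though num_words=10,000 was used, there are only 9,670 columns, because CountVectorizer drops additional tokens on its own (by default it ignores single-character tokens, for example)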

dtmvector = CountVectorizer()

x_train_dtm = dtmvector.fit_transform(x_train)

print(x_train_dtm.shape)
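Why the column count equals the number of unique tokens, rather than the total number of tokens, can be checked by building a tiny DTM by hand. The sketch below uses two made-up documents and a plain whitespace split, which is an assumption for illustration and simpler than CountVectorizer's own tokenizer.

```python
from collections import Counter

# Two hypothetical documents.
toy_docs = ["oil prices rise as oil supply falls", "wheat prices fall"]

tokenized = [doc.split() for doc in toy_docs]
vocabulary = sorted({tok for doc in tokenized for tok in doc})

# One row per document, one column per unique token.
dtm = []
for doc in tokenized:
    counts = Counter(doc)
    dtm.append([counts[word] for word in vocabulary])

total_tokens = sum(len(doc) for doc in tokenized)
print(vocabulary)
print(dtm)
print("total tokens:", total_tokens, "columns:", len(vocabulary))
```

Here 10 tokens collapse into 8 columns because "oil" and "prices" each occur more than once.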

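TF-IDF matrix

- Mitigates the performance loss that stopwords cause in a DTM

- A word that is frequent across all documents ➡️ is treated as less important and given a lower weight

- Created with scikit-learn's TfidfTransformer()

- Without extra preprocessing, it has the same shape as the DTM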
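The weighting idea can be sketched without any library. The example below applies the smoothed IDF and L2 row normalization that scikit-learn documents as TfidfTransformer's defaults (`smooth_idf=True`, `norm='l2'`) to a hypothetical 2x3 DTM; the data and variable names are made up for illustration.

```python
import math

# Toy DTM: rows are documents, columns are the terms below (hypothetical data).
terms = ["oil", "price", "rise"]
toy_dtm = [
    [2, 1, 0],
    [0, 1, 1],
]

n_docs = len(toy_dtm)
# Document frequency: in how many documents each term appears.
df = [sum(1 for row in toy_dtm if row[j] > 0) for j in range(len(terms))]
# Smoothed IDF: ln((1 + n) / (1 + df)) + 1
idf = [math.log((1 + n_docs) / (1 + d)) + 1 for d in df]

tfidf = []
for row in toy_dtm:
    weighted = [tf * w for tf, w in zip(row, idf)]
    norm = math.sqrt(sum(v * v for v in weighted))
    tfidf.append([v / norm for v in weighted])  # L2-normalise each row

for term, weight in zip(terms, idf):
    print(f"{term}: idf={weight:.3f}")
print(tfidf)
```

"price" appears in every document, so it gets the lowest IDF, and the result keeps the same shape as the DTM.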

tfidf_transformer = TfidfTransformer()

tfidfv = tfidf_transformer.fit_transform(x_train_dtm)

print(tfidfv.shape)

18-5. Naive Bayes classifier

Importing the ML libraries

from sklearn.naive_bayes import MultinomialNB # multinomial naive Bayes model

from sklearn.linear_model import LogisticRegression, SGDClassifier

from sklearn.naive_bayes import ComplementNB

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import RandomForestClassifier

from sklearn.ensemble import GradientBoostingClassifier

from sklearn.ensemble import VotingClassifier

from sklearn.svm import LinearSVC

from sklearn.metrics import accuracy_score # computes accuracy

print('=3')

Naive Bayes classifier (Multinomial Naive Bayes Classifier)

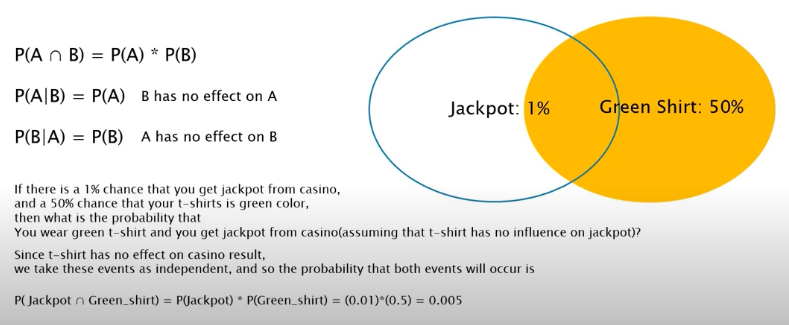

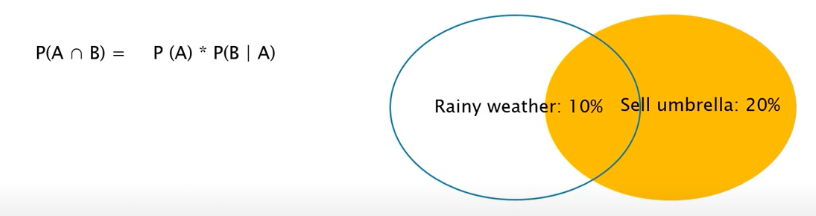

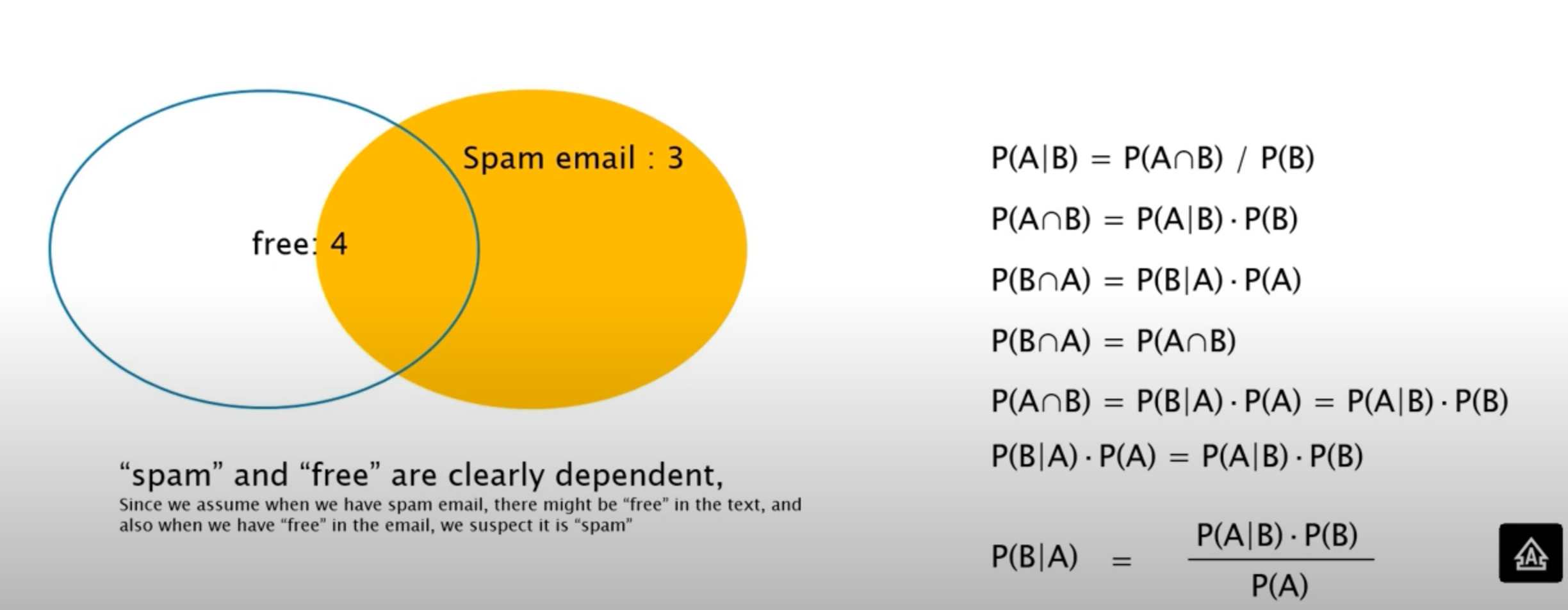

- When two events are independent

- When they are not independent

- Derivation of Bayes' theorem

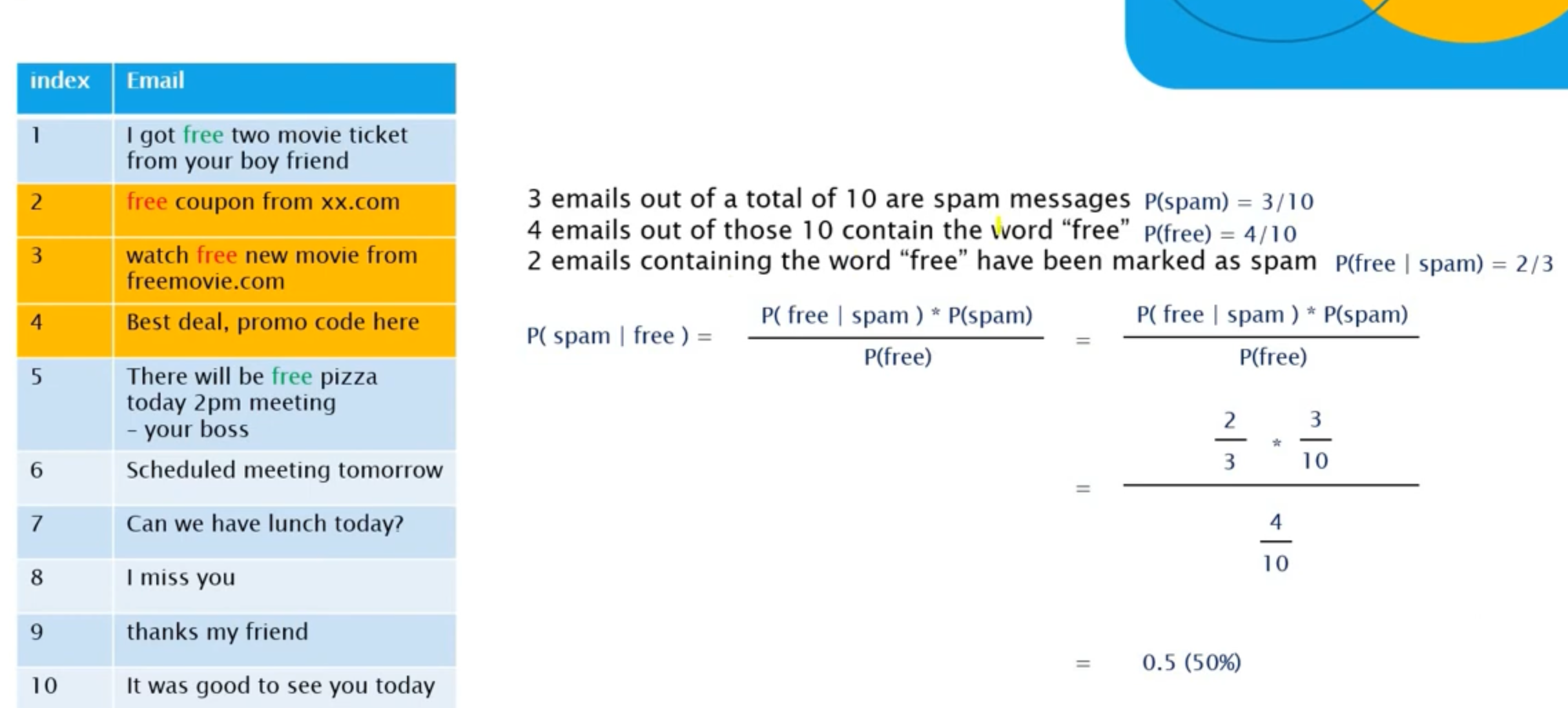

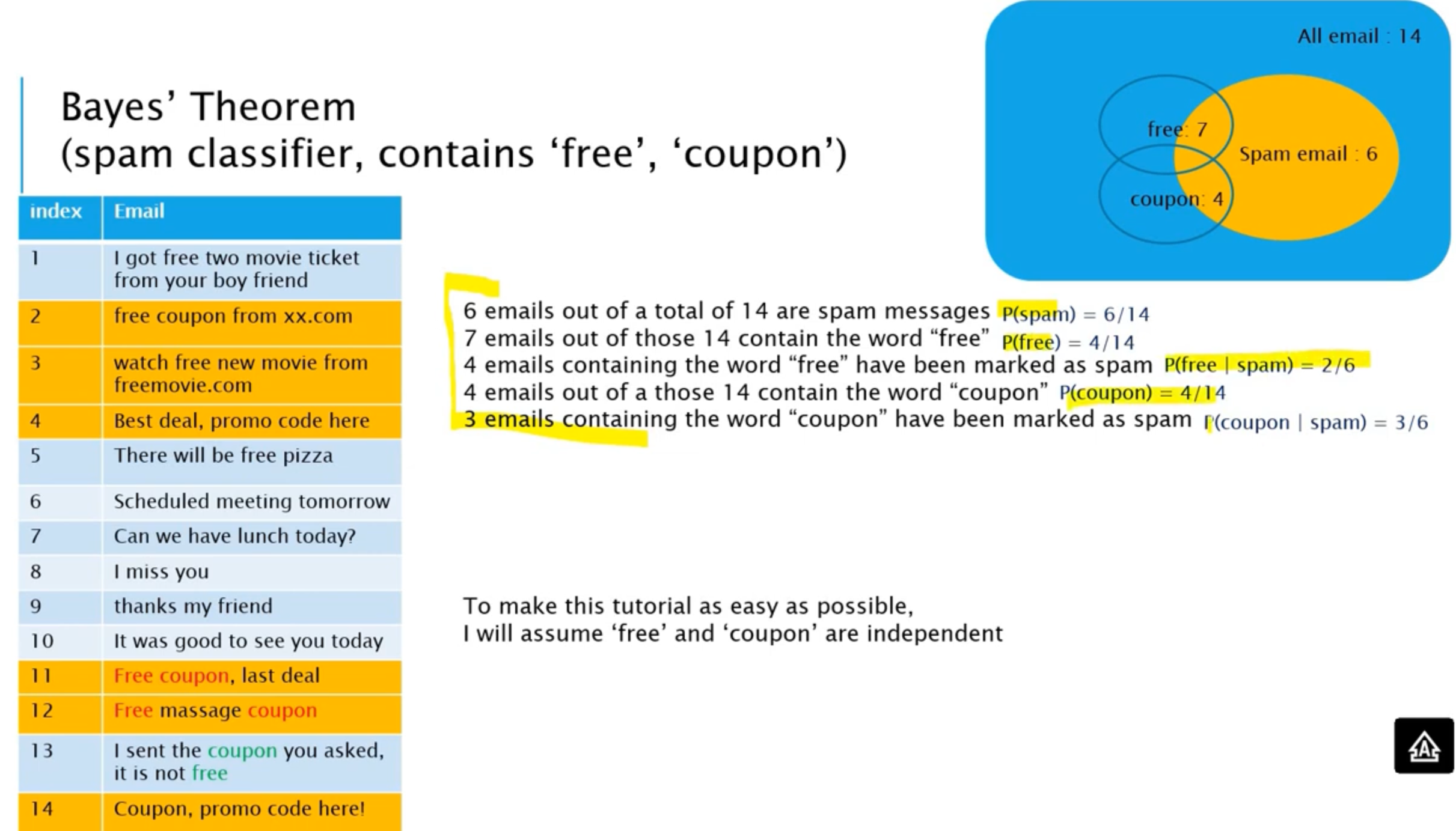

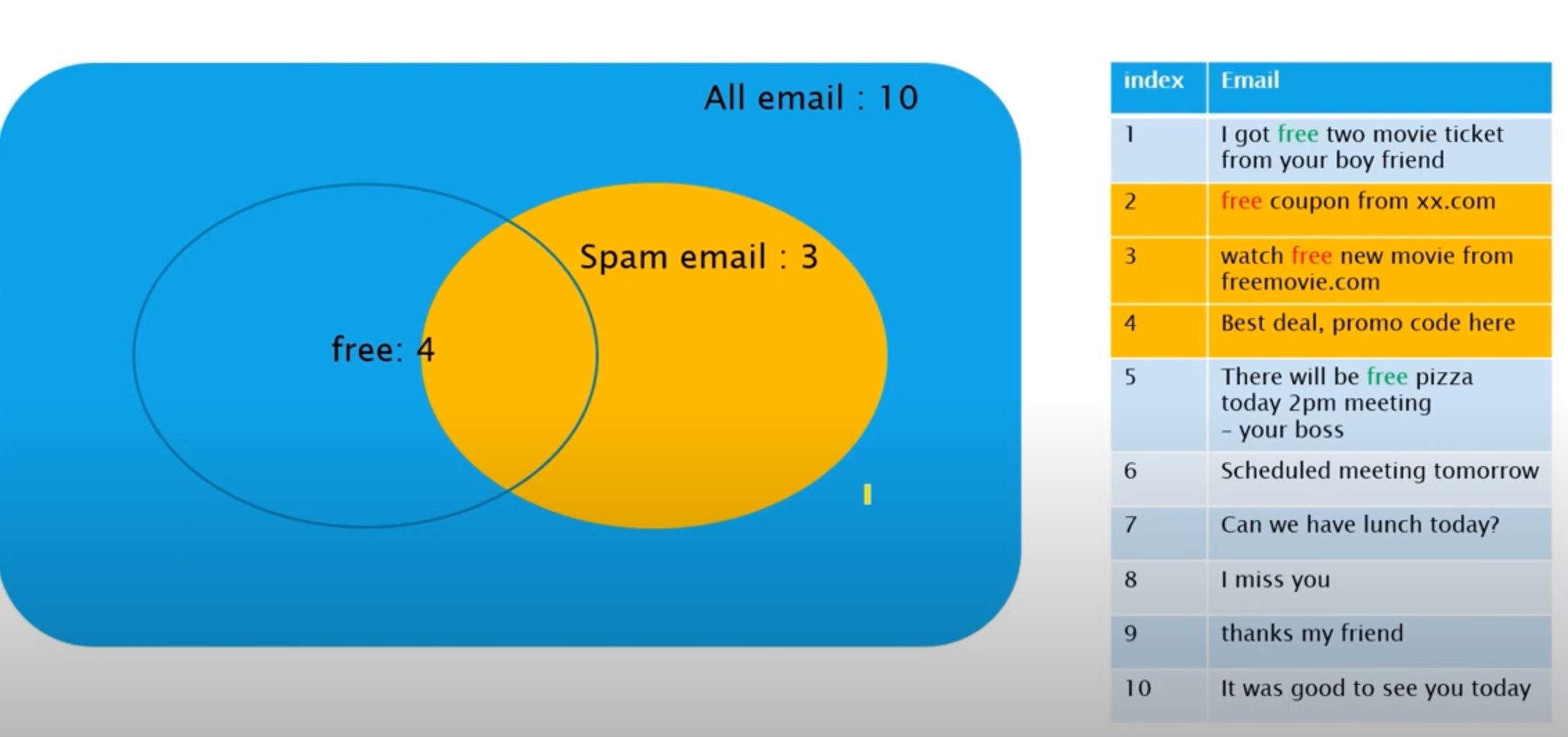

Spam mail classification example

- Bayes' Theorem

- Suppose 3 of 10 emails are spam, and 4 of the 10 emails contain the word free

- Probability that an email is spam

- Probability that an email contains the word free

- Probability that a spam email contains the word free

With these three quantities, we can predict whether an email that contains the word free is spam
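The three quantities combine through Bayes' theorem as P(spam | free) = P(free | spam) · P(spam) / P(free). The notes give P(spam) = 3/10 and, reading the example as 4 of the 10 emails containing "free", P(free) = 4/10. The conditional P(free | spam) is not stated, so the value below (2 of the 3 spam emails) is purely an assumption for the sake of a worked example.

```python
from fractions import Fraction

p_spam = Fraction(3, 10)             # 3 of 10 emails are spam (from the notes)
p_free = Fraction(4, 10)             # 4 of 10 emails contain "free" (from the notes)
p_free_given_spam = Fraction(2, 3)   # ASSUMPTION: 2 of the 3 spam emails contain "free"

# Bayes' theorem: P(spam | free) = P(free | spam) * P(spam) / P(free)
p_spam_given_free = p_free_given_spam * p_spam / p_free
print(p_spam_given_free)  # 1/2
```

Under that assumption, an email containing "free" would be spam with probability 1/2, compared with the prior of 3/10.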

Implementing the naive Bayes classifier

- Use scikit-learn's MultinomialNB()

- Train the model

model = MultinomialNB()

model.fit(tfidfv, y_train)

- Preprocess the test data

- Convert to DTM ➡️ convert to TF-IDF matrix

- Predict on the test data

- Compare predictions with the true labels

x_test_dtm = dtmvector.transform(x_test)

tfidfv_test = tfidf_transformer.transform(x_test_dtm)

predicted = model.predict(tfidfv_test)

print("Accuracy:", accuracy_score(y_test, predicted))

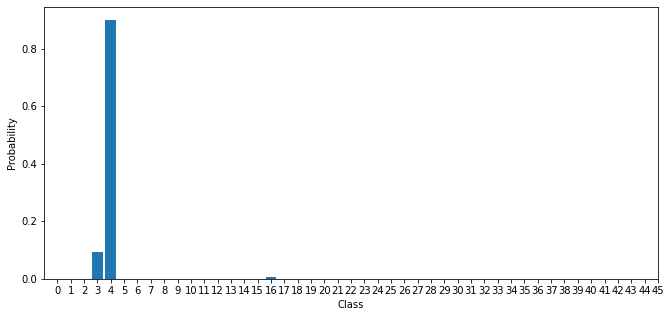

Testing the prediction on a single sample

- Original text of the 4th sample (index 3)

x_test[3]

'<sos> <unk> <unk> oil and gas partnership said it completed the sale of interests in two major oil and gas fields to lt energy assets international corp for 21 mln dlrs the company said it sold about one half of its 50 pct interest in the oak hill and north <unk> fields its two largest producing properties it said it used about 20 mln dlrs of the proceeds to <unk> principal on its senior secured notes semi annual principal payments on the remaining 40 mln dlrs of notes have been satisfied until december 1988 as a result it said the company said the note agreements were amended to reflect an easing of some financial covenants and an increase of interest to 13 5 pct from 13 0 pct until december 1990 it said the <unk> exercise price for 1 125 000 warrants was also reduced to 50 cts from 1 50 dlrs the company said energy assets agreed to share the costs of increasing production at the oak hill field reuter 3'

- Check the sample's label

y_test[3]

Visualizing the class probabilities

probability_3 = model.predict_proba(tfidfv_test[3])[0]

plt.rcParams["figure.figsize"] = (11,5)

plt.bar(model.classes_, probability_3)

plt.xlim(-1, 21)

plt.xticks(model.classes_)

plt.xlabel("Class")

plt.ylabel("Probability")

plt.show()

model.predict(tfidfv_test[3])

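Analysis: the model assigns a probability of about 90% to class 4

- The remaining 10% or so goes to class 3

- In other words, the model is about 90% confident in class 4, so this sample was predicted well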

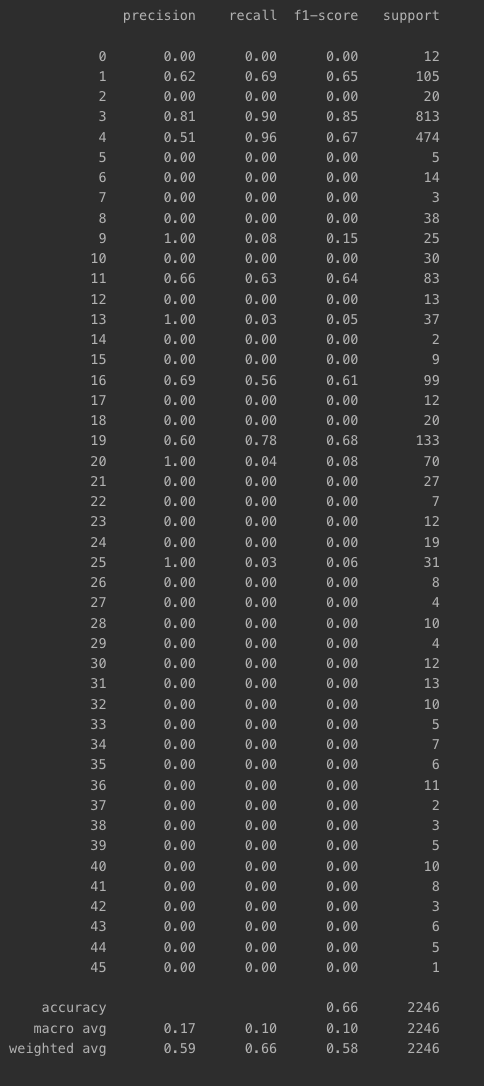

18-6. F1-Score, Confusion Matrix

F1-Score

Importing the libraries

from sklearn.metrics import classification_report

from sklearn.metrics import confusion_matrix

Precision, Recall & F1 Score

Accuracy cannot properly account for label imbalance, so the F1-Score is often used as well

classification_report()

- Part of scikit-learn's metrics package

- Computes precision, recall, and F1-Score

- Computes each metric with every class in turn treated as the positive class, then averages them to evaluate the whole model

print(classification_report(y_test, model.predict(tfidfv_test), zero_division=0))

- macro: simple (unweighted) average

- weighted: weighted average

- accuracy: accuracy
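The difference between accuracy, macro F1, and weighted F1 shows up clearly on imbalanced labels. The following is a from-scratch sketch on made-up labels (a model that always predicts the majority class), not output from the Reuters model; `per_class_f1` is a hypothetical helper, and a zero denominator is treated as 0, as with `zero_division=0`.

```python
def per_class_f1(y_true, y_pred, label):
    """Precision, recall and F1 when `label` is treated as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Hypothetical imbalanced labels: class 3 dominates, class 7 is rare.
y_true = [3, 3, 3, 3, 3, 3, 3, 3, 7, 7]
y_pred = [3] * 10   # a model that always predicts the majority class

labels = sorted(set(y_true))
f1_scores = {c: per_class_f1(y_true, y_pred, c)[2] for c in labels}
support = {c: y_true.count(c) for c in labels}

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
macro_f1 = sum(f1_scores.values()) / len(labels)
weighted_f1 = sum(f1_scores[c] * support[c] for c in labels) / len(y_true)

print(f"accuracy={accuracy:.3f} macro F1={macro_f1:.3f} weighted F1={weighted_f1:.3f}")
```

Accuracy stays at 0.8 even though class 7 is never predicted, while the macro average drops sharply because every class counts equally.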

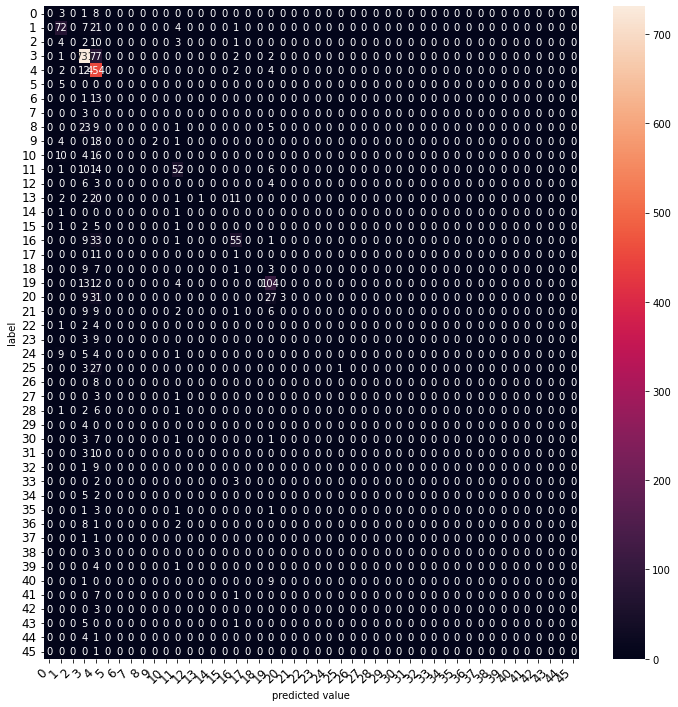

Confusion Matrix

- Further reading: https://mjdeeplearning.tistory.com/31

TP: predicted True, actual value True

TN: predicted False, actual value False

FP: predicted True, actual value False

FN: predicted False, actual value True

def graph_confusion_matrix(model, x_test, y_test):#, classes_name):

    df_cm = pd.DataFrame(confusion_matrix(y_test, model.predict(x_test)))#, index=classes_name, columns=classes_name)

    fig = plt.figure(figsize=(12,12))

    heatmap = sns.heatmap(df_cm, annot=True, fmt="d")

    heatmap.yaxis.set_ticklabels(heatmap.yaxis.get_ticklabels(), rotation=0, ha='right', fontsize=12)

    heatmap.xaxis.set_ticklabels(heatmap.xaxis.get_ticklabels(), rotation=45, ha='right', fontsize=12)

    plt.ylabel('label')

    plt.xlabel('predicted value')

graph_confusion_matrix(model, tfidfv_test, y_test)

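18-7. Trying various machine learning models (1)

Complement Naive Bayes Classifier(CNB)

- Drawbacks of the standard naive Bayes classifier

- It assumes that the independent variables (features) are conditionally independent

- When the samples are skewed toward certain classes, the decision-boundary weights become skewed as well, so the model favors those classes and cannot predict properly

- Complement naive Bayes classifier

- Assigns weights that take the data imbalance into account

- Often performs better than the standard naive Bayes classifier on imbalanced data

- The Reuters news data is also imbalanced, concentrated in classes 3 and 4, so CNB should be a better fit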

cb = ComplementNB()

cb.fit(tfidfv, y_train)

predicted = cb.predict(tfidfv_test)

print("Accuracy:", accuracy_score(y_test, predicted))

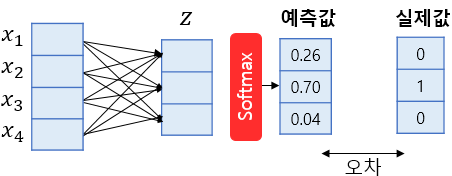

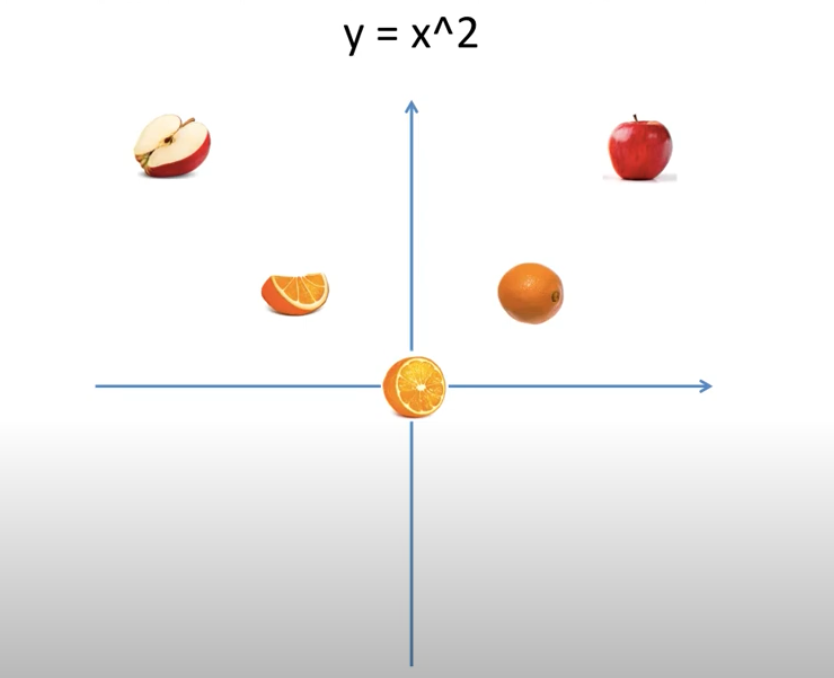

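Logistic Regression

- A linear classification algorithm

- Can be used for multiclass classification by using the softmax function

- This is called softmax regression

- Despite the name "regression", it actually performs classification

- Softmax?

- With N classes, it normalizes an N-dimensional vector so that it represents "the probability that each class is the correct one"

- e.g. to predict 1 of 3 classes, softmax regression outputs a 3-dimensional vector, and each dimension is the probability of one class

- The "weights" and "bias" are learned by reducing the error between the predicted and actual values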
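The normalization step described above can be written in a few lines. This is a minimal sketch of the softmax function on hypothetical raw scores for 3 classes; it illustrates the idea only and is not how LogisticRegression is implemented internally.

```python
import math

def softmax(scores):
    """Turn a list of raw class scores into probabilities that sum to 1."""
    shifted = [s - max(scores) for s in scores]   # subtract the max for numerical stability
    exps = [math.exp(s) for s in shifted]
    total = sum(exps)
    return [e / total for e in exps]

scores = [2.0, 1.0, 0.1]   # hypothetical raw scores for 3 classes
probs = softmax(scores)
predicted_class = probs.index(max(probs))
print(probs, predicted_class)
```

The output is a 3-dimensional probability vector, and the predicted class is simply the position of the largest probability.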

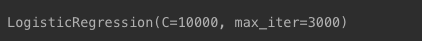

Implementing softmax regression

- Use scikit-learn's LogisticRegression()

lr = LogisticRegression(C=10000, penalty='l2', max_iter=3000)

lr.fit(tfidfv, y_train)

- Check the metric

predicted = lr.predict(tfidfv_test)

print("Accuracy:", accuracy_score(y_test, predicted))

The accuracy comes out at about 81%

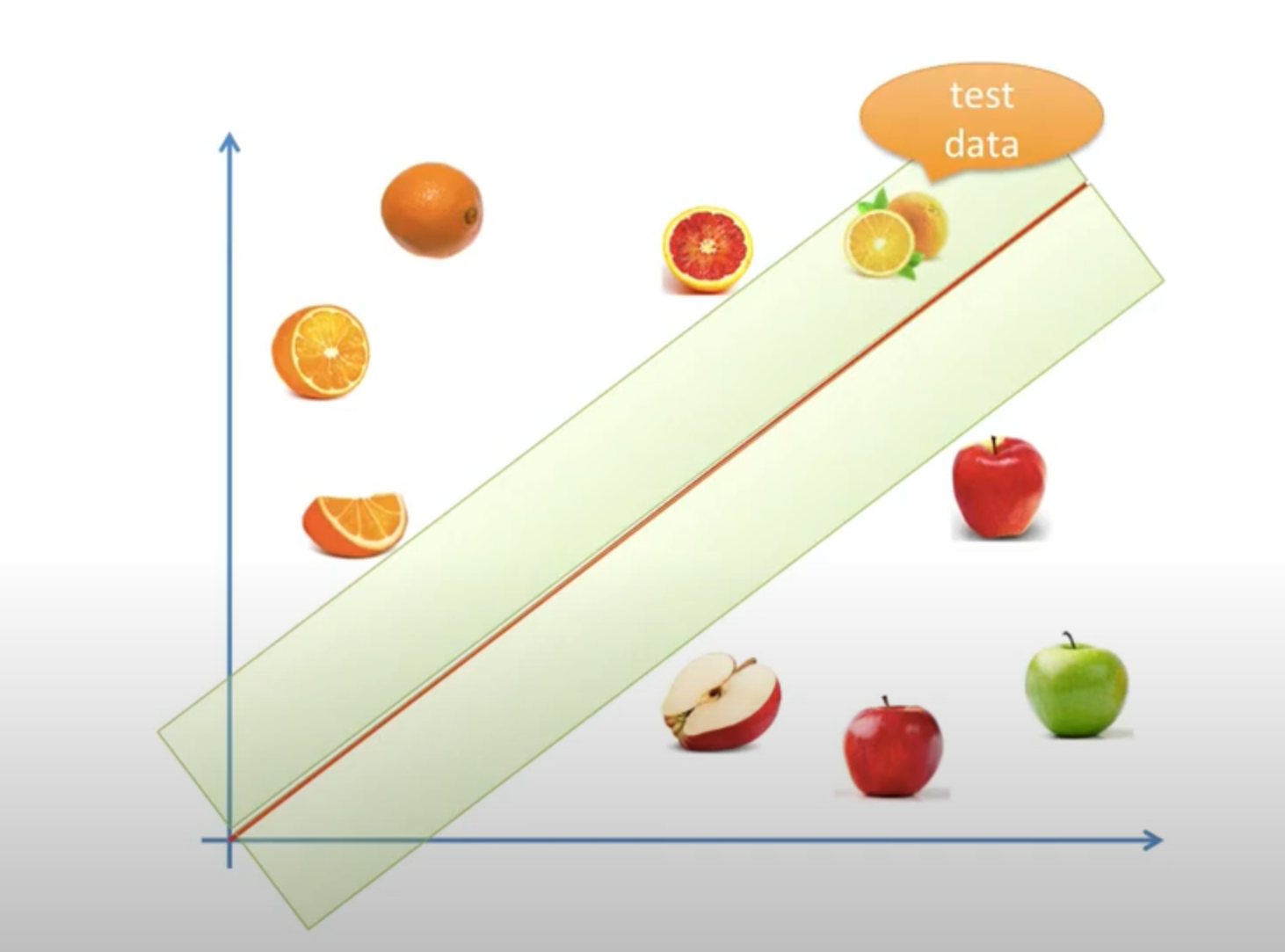

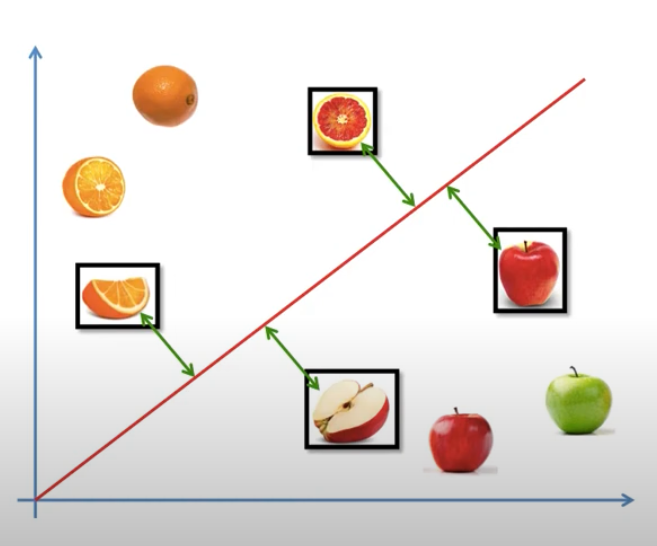

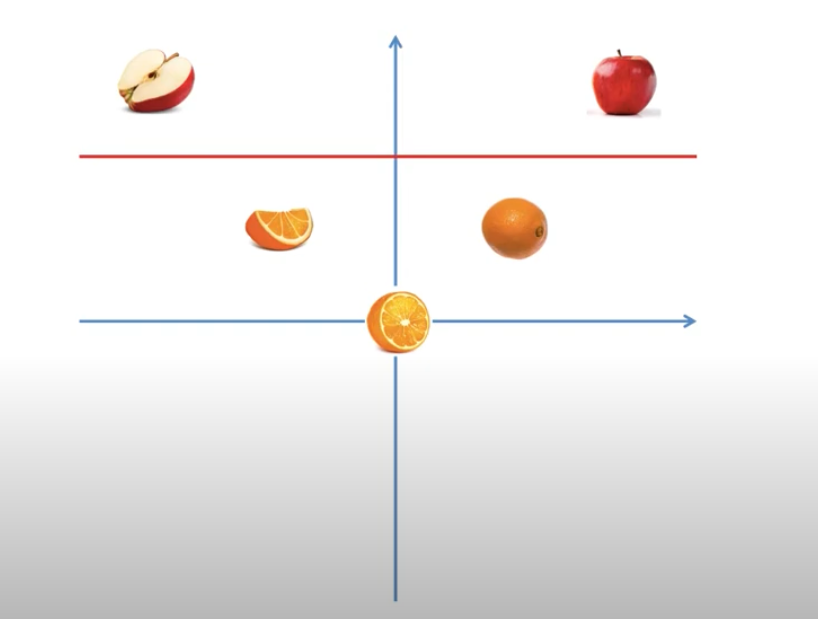

Linear Support Vector Machine

- A linear classification algorithm

- SVM

- Points lying very close to the decision boundary can be misclassified

- A margin is used so that new data is separated well

support vector

- The data points closest to the decision boundary

- The margin is formed from these points

- Only the support vectors are used when classifying

- Data that is not linearly separable can be moved to a higher dimension, e.g. 1-D data into 2-D

- It then becomes linearly separable

- However, this increases the amount of computation, so the kernel trick is used

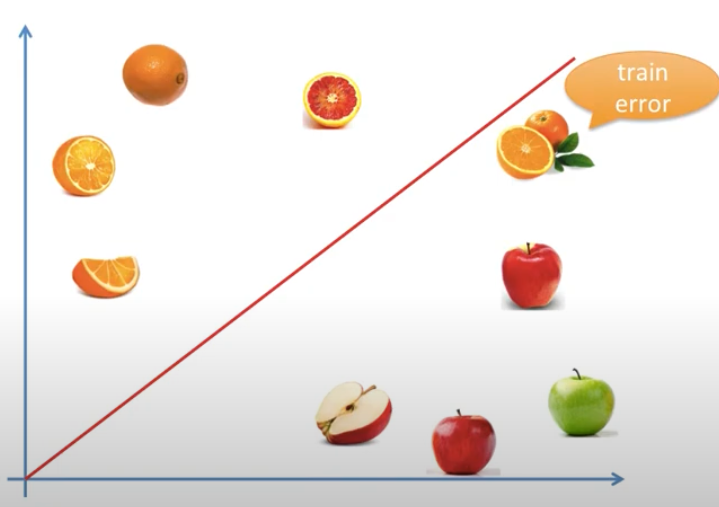

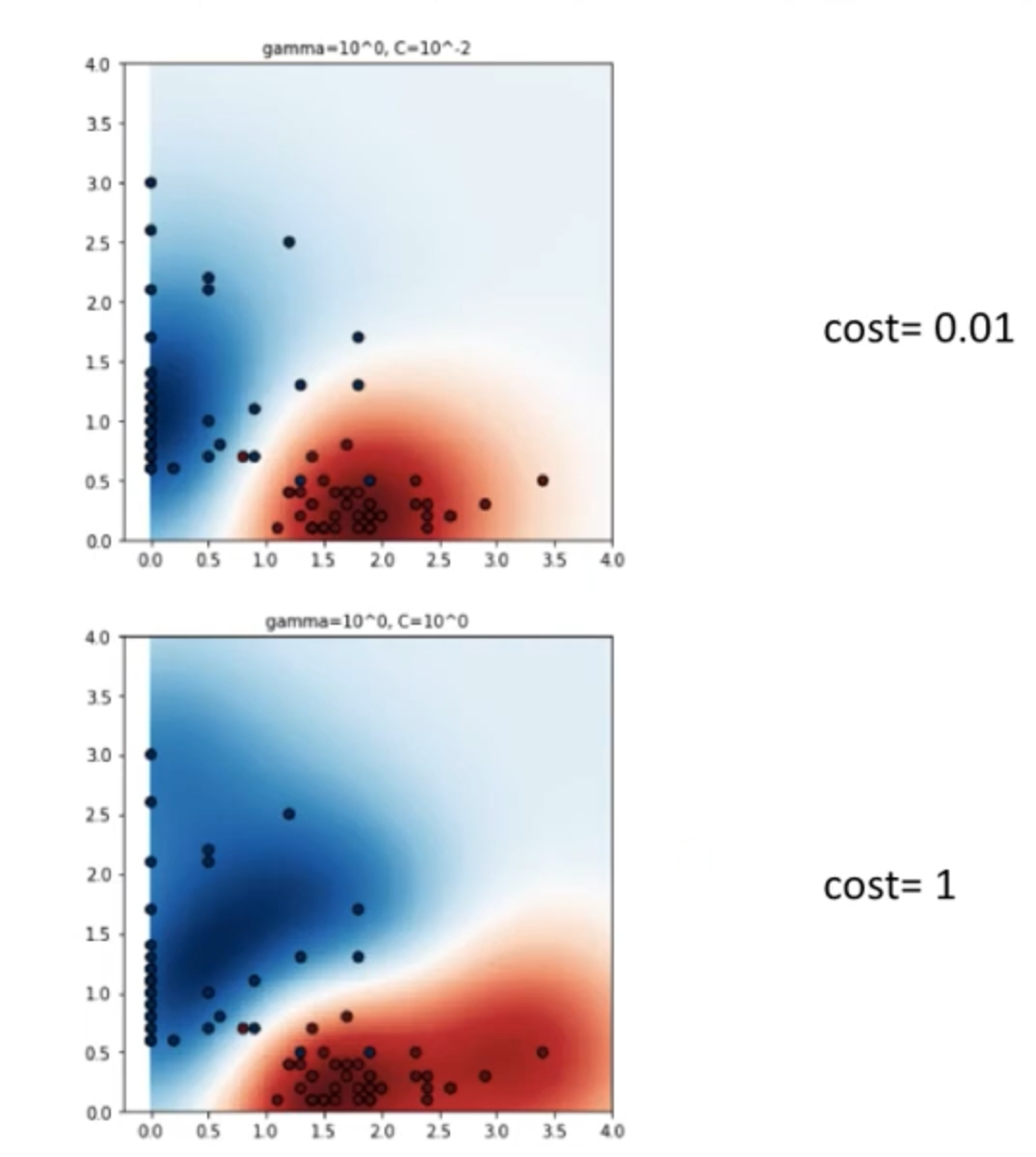

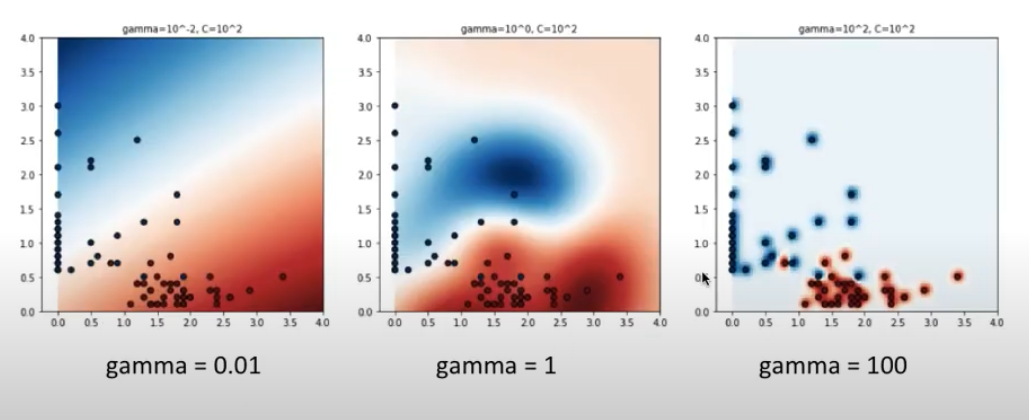

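SVM Parameter

- Cost

- Controls the margin width

- If cost is too small, the margin grows and training error can increase

- If cost is too large, the margin shrinks and training error decreases, but new data is more likely to be misclassified

- Gamma

- Controls how far the influence of a single training point reaches

- The smaller it is, the larger the area of influence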
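The effect of gamma is easiest to see in the RBF kernel, exp(-gamma · ||x − z||²). Note that gamma belongs to kernel SVMs (for example the RBF kernel), not to a purely linear SVM. The sketch below evaluates the kernel between a hypothetical support vector and a point two units away; `rbf_kernel` is a hand-written helper for illustration.

```python
import math

def rbf_kernel(x, z, gamma):
    """RBF kernel value exp(-gamma * ||x - z||^2) for two points given as lists."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * squared_distance)

support_vector = [0.0, 0.0]
far_point = [2.0, 0.0]

influence = {g: rbf_kernel(support_vector, far_point, g) for g in (0.01, 1.0, 10.0)}
for gamma, value in influence.items():
    print(gamma, value)
```

With a small gamma the distant point is still strongly influenced by the support vector; with a large gamma the influence is essentially zero.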

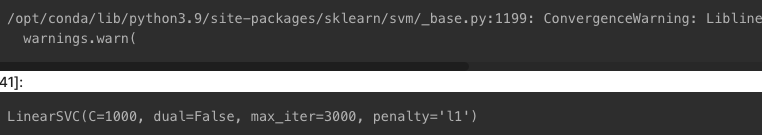

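Using the support vector machine

- Use scikit-learn's LinearSVC

- It is a binary classification algorithm, but it handles multiclass classification with the one-vs-rest scheme

- One binary classifier is created per class

- The class whose classifier gives the highest score is selected

lsvc = LinearSVC(C=1000, penalty='l1', max_iter=3000, dual=False)

lsvc.fit(tfidfv, y_train)

predicted = lsvc.predict(tfidfv_test)

accuracy = accuracy_score(y_test, predicted)

print(f"Accuracy: {accuracy}")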
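The one-vs-rest decision rule described above can be sketched as follows: each class has its own linear scorer, and the class with the highest score wins. The weights, biases, and the `ovr_predict` helper are hypothetical values chosen for illustration, not parameters learned by LinearSVC.

```python
def ovr_predict(x, class_weights, class_biases):
    """Score x with one linear binary model per class and return the best class."""
    scores = {}
    for label, w in class_weights.items():
        scores[label] = sum(wi * xi for wi, xi in zip(w, x)) + class_biases[label]
    best = max(scores, key=scores.get)
    return best, scores

# Hypothetical weights for 3 classes over 2 features.
class_weights = {3: [1.0, -0.5], 4: [-0.2, 0.8], 19: [0.1, 0.1]}
class_biases = {3: 0.0, 4: 0.1, 19: -0.3}

label, scores = ovr_predict([0.2, 0.9], class_weights, class_biases)
print(label, scores)
```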

18-8. Trying various machine learning models (2)

Decision Tree

- Widely used for both classification and regression problems

- Use scikit-learn's DecisionTreeClassifier()

- max_depth: depth of the decision tree

- Does not perform well on high-dimensional, sparse data

- DTM and TF-IDF matrices are mostly zeros, so this sparsity is expected to hurt performance

tree = DecisionTreeClassifier(max_depth=10, random_state=0)

tree.fit(tfidfv, y_train)

predicted = tree.predict(tfidfv_test)

print("Accuracy:", accuracy_score(y_test, predicted))

Accuracy: about 62%

Random Forest

- Ensemble

- Combines several ML models

- Random forest and gradient boosting trees are ensemble models built from decision trees

- In a random forest, the questions (splits) are chosen with randomness

- A single decision tree, by contrast, always selects the best question at each node

- When a decision tree overfits, a random forest can be one solution (the ensemble addresses it)

forest = RandomForestClassifier(n_estimators=5, random_state=0)

forest.fit(tfidfv, y_train)

predicted = forest.predict(tfidfv_test)

print("Accuracy:", accuracy_score(y_test, predicted))

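Gradient boosting trees (GradientBoostingClassifier)

- An ensemble model that combines several decision trees

- Each tree corrects the errors of the previous trees

- May ignore some features

- It is a good idea to try random forest first and then try this

- Tree depth between 1 and 5: memory-efficient and fast at prediction

- Accuracy is good and prediction is fast, but training is slow, and it is hard to use on sparse, high-dimensional data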
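The idea that each tree corrects the errors of the previous ones can be sketched with depth-1 trees (stumps) on a tiny regression problem. This is a simplified illustration using squared-error regression on made-up 1-D data, not the classification loss that GradientBoostingClassifier uses; `fit_stump` and `mse` are hypothetical helpers.

```python
def fit_stump(xs, residuals):
    """Find the threshold on a 1-D feature that best splits the residuals."""
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        error = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean

def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)

# Hypothetical 1-D regression data.
xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 2.9, 3.1, 5.0, 5.2]

learning_rate = 0.5
preds = [sum(ys) / len(ys)] * len(xs)   # start from the mean prediction
history = [mse(ys, preds)]

for _ in range(5):
    residuals = [y - p for y, p in zip(ys, preds)]   # errors of the current ensemble
    stump = fit_stump(xs, residuals)                  # the next tree fits those errors
    preds = [p + learning_rate * stump(x) for x, p in zip(xs, preds)]
    history.append(mse(ys, preds))

print([round(h, 4) for h in history])
```

The training error shrinks round by round because every new stump is fitted to what the current ensemble still gets wrong.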

grbt = GradientBoostingClassifier(random_state=0, verbose=3)

grbt.fit(tfidfv, y_train)

Iter Train Loss Remaining Time

1 1.4608 15.94m

2 95544.1548 16.02m

3 105411.1055 15.87m

4 26490374809120059619893320924222374741943986946048.0000 15.72m

5 3332464259228453694671945105465820387521328203545526380221295913764842145866429631276902168311601749602693928777633481065758720.0000 15.57m

6 3332464259228453694671945105465820387521328203545526380221295913764842145866429631276902168311601749602693928777633481065758720.0000 15.42m

7 3332464259228453694671945105465820387521328203545526380221295913764842145866429631276902168311601749602693928777633481065758720.0000 15.26m

8 3332464259228453694671945105465820387521328203545526380221295913764842145866429631276902168311601749602693928777633481065758720.0000 15.10m

9 3332464259228453694671945105465820387521328203545526380221295913764842145866429631276902168311601749602693928777633481065758720.0000 14.93m

10 3332464259228453694671945105465820387521328203545526380221295913764842145866429631276902168311601749602693928777633481065758720.0000 14.76m

11 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 14.60m

12 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 14.44m

13 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 14.27m

14 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 14.11m

15 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 13.95m

16 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 13.78m

17 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 13.62m

18 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 13.47m

19 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 13.30m

20 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 13.14m

21 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 12.98m

22 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 12.81m

23 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 12.65m

24 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 12.48m

25 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 12.31m

26 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 12.14m

27 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 11.98m

28 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 11.82m

29 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 11.65m

30 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 11.49m

31 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 11.32m

32 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 11.16m

33 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 10.99m

34 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 10.83m

35 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 10.66m

36 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 10.50m

37 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 10.34m

38 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 10.17m

39 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 10.01m

40 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 9.84m

41 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 9.67m

42 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 9.51m

43 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 9.35m

44 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 9.18m

45 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 9.02m

46 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 8.86m

47 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 8.70m

48 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 8.54m

49 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 8.38m

50 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 8.22m

51 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 8.06m

52 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 7.90m

53 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 7.73m

54 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 7.57m

55 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 7.41m

56 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 7.25m

57 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 7.08m

58 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 6.92m

59 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 6.76m

60 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 6.59m

61 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 6.43m

62 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 6.27m

63 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 6.11m

64 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 5.94m

65 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 5.78m

66 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 5.61m

67 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 5.45m

68 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 5.28m

69 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 5.12m

70 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 4.96m

71 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 4.79m

72 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 4.63m

73 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 4.46m

74 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 4.30m

75 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 4.13m

76 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 3.97m

77 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 3.80m

78 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 3.64m

79 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 3.47m

80 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 3.31m

81 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 3.14m

82 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 2.98m

83 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 2.81m

84 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 2.65m

85 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 2.48m

86 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 2.32m

87 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 2.15m

88 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 1.99m

89 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 1.82m

90 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 1.66m

91 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 1.49m

92 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 1.32m

93 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 1.16m

94 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 59.61s

95 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 49.67s

96 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 39.73s

97 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 29.80s

98 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 19.87s

99 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 9.93s

100 14834291270935598097793813192422686817633454293445808551806972586635235618984506254543094943361504759490214512223227966451089408.0000 0.00s

predicted = grbt.predict(tfidfv_test)

print("Accuracy:", accuracy_score(y_test, predicted))

Accuracy: 76%

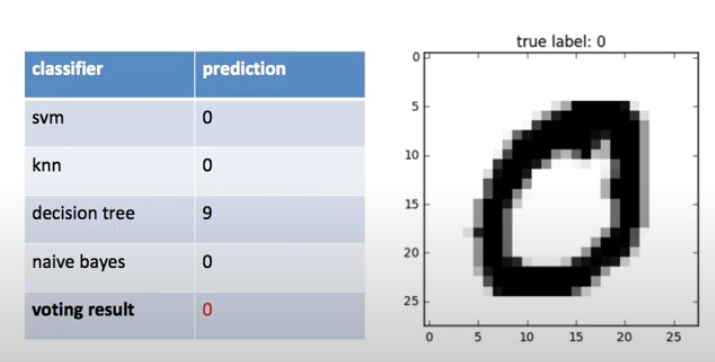

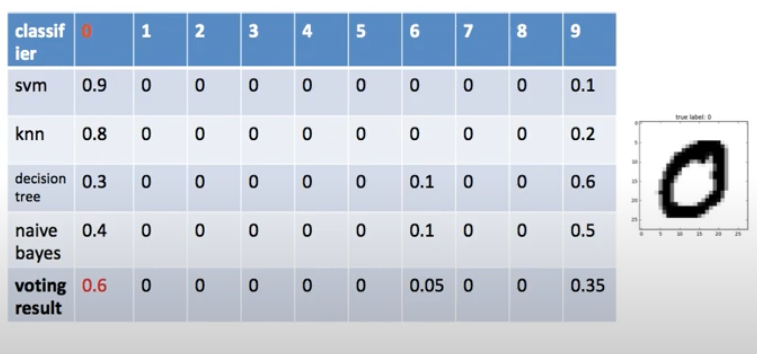

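Voting

- hard voting

- Each classifier casts exactly one vote (think of one ballot per model)

- The final prediction is the class that receives the most votes

- soft voting

- Uses each classifier's predicted class probabilities (confidence values) instead of a single vote

- The probabilities for each class are combined across all classifiers ➡️ the class with the highest combined probability becomes the final prediction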
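The difference between the two schemes can be sketched with plain NumPy. This is a minimal illustration, not the scikit-learn implementation: the probability values and the names `probas`, `hard_winner`, and `soft_winner` are made up for the example, and the classifiers are assumed to be equally weighted.

```python
import numpy as np

# Hypothetical class probabilities for ONE sample from three classifiers
# (rows = classifiers, columns = classes 0, 1, 2). Values are illustrative only.
probas = np.array([
    [0.45, 0.55, 0.00],  # classifier A narrowly prefers class 1
    [0.40, 0.60, 0.00],  # classifier B prefers class 1
    [0.90, 0.10, 0.00],  # classifier C strongly prefers class 0
])

# Hard voting: each classifier casts one vote for its top class,
# and the class with the most votes wins.
votes = probas.argmax(axis=1)
hard_winner = np.bincount(votes, minlength=probas.shape[1]).argmax()

# Soft voting: average the probabilities per class across classifiers,
# then pick the class with the highest average.
mean_proba = probas.mean(axis=0)
soft_winner = mean_proba.argmax()

print("hard voting picks class", hard_winner)
print("soft voting picks class", soft_winner)
```

In this toy case the two schemes disagree: two of the three models vote for class 1, but the third is confident enough in class 0 to win the averaged probabilities. Soft voting therefore needs every estimator to expose class probabilities.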

Soft voting over logistic regression, CNB, and gradient boosting trees

voting_classifier = VotingClassifier(estimators=[

('lr', LogisticRegression(C=10000, penalty='l2')),

('cb', ComplementNB()),

('grbt', GradientBoostingClassifier(random_state=0))

], voting='soft', n_jobs=-1)

voting_classifier.fit(tfidfv, y_train)

predicted = voting_classifier.predict(tfidfv_test)  # predict on the test data

print("Accuracy:", accuracy_score(y_test, predicted))  # compare predictions with the true labels

Accuracy: 81%