from sklearn.model_selection import train_test_split

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.3, random_state=1)
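As a quick check of what `test_size=0.3` and `random_state=1` do, here is a minimal sketch. It uses a hypothetical synthetic dataset from `make_classification` in place of the original `x`, `y`, which this section does not define. About 30% of the rows go to the test set, and the same `random_state` reproduces the same split.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split

# Hypothetical stand-in for the original x, y: 100 samples, 4 features
x, y = make_classification(n_samples=100, n_features=4, random_state=0)

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.3, random_state=1)
print(x_train.shape, x_test.shape)  # (70, 4) (30, 4)

# Calling again with the same random_state gives an identical split
x_train2, x_test2, _, _ = train_test_split(x, y, test_size=0.3, random_state=1)
print(np.array_equal(x_test, x_test2))  # True
```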

______________________________________________

from sklearn.preprocessing import MinMaxScaler

scaler = MinMaxScaler()

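# Learn each feature's min/max from the training data only
scaler.fit(x_train)

x_train_s = scaler.transform(x_train)

# Apply the training min/max to the test data (no refitting on test)
x_test_s = scaler.transform(x_test)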
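`MinMaxScaler` maps each value to (x - min) / (max - min), where min and max come from the data passed to `fit`. Because only `x_train` is used for fitting, scaled test values can fall outside [0, 1]. Here is a small sketch with made-up numbers for a single feature:

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Made-up toy data with one feature column
x_train = np.array([[1.0], [3.0], [5.0]])
x_test = np.array([[0.0], [4.0], [7.0]])

scaler = MinMaxScaler()
scaler.fit(x_train)  # learns min=1 and max=5 from the training data only

x_test_s = scaler.transform(x_test)

# The same formula by hand: (x - train_min) / (train_max - train_min)
manual = (x_test - x_train.min(axis=0)) / (x_train.max(axis=0) - x_train.min(axis=0))
print(x_test_s.ravel())  # values: -0.25, 0.75, 1.5 (outside [0, 1] at both ends)
print(np.allclose(x_test_s, manual))  # True
```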

_____________________________________________________________________

1) Decision Tree

from sklearn.tree import DecisionTreeClassifier

from sklearn.model_selection import cross_val_score

# Trees split on per-feature thresholds, so scaling is unnecessary: use the unscaled x_train
model = DecisionTreeClassifier(max_depth=5, random_state=1)

cv_score = cross_val_score(model, x_train, y_train, cv=10)

print(cv_score)

print('Mean:', cv_score.mean())

print('Std:', cv_score.std())

<Output>

[0.66666667 0.75925926 0.74074074 0.64814815 0.7037037 0.74074074
 0.75925926 0.81132075 0.79245283 0.67924528]

Mean: 0.7301537386443047

Std: 0.05141448587329709

result = {}

result['DecisionTree'] = cv_score.mean()
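For a classifier, an integer `cv=10` makes `cross_val_score` use stratified 10-fold splitting (`StratifiedKFold` without shuffling) and, by default, the estimator's `score` method, which for classifiers is accuracy. The sketch below reproduces that loop by hand on a hypothetical synthetic dataset and checks it against `cross_val_score`.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Hypothetical stand-in for x_train, y_train
x_train, y_train = make_classification(n_samples=200, n_features=6, random_state=0)

model = DecisionTreeClassifier(max_depth=5, random_state=1)

manual_scores = []
for train_idx, val_idx in StratifiedKFold(n_splits=10).split(x_train, y_train):
    fold_model = clone(model)  # fresh, unfitted copy for every fold
    fold_model.fit(x_train[train_idx], y_train[train_idx])
    # Accuracy on the held-out fold
    manual_scores.append(fold_model.score(x_train[val_idx], y_train[val_idx]))

manual_scores = np.array(manual_scores)
cv_score = cross_val_score(model, x_train, y_train, cv=10)

print(np.allclose(manual_scores, cv_score))  # True
print('Mean:', manual_scores.mean(), 'Std:', manual_scores.std())
```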

_____________________________________________________________________

2) KNN

from sklearn.neighbors import KNeighborsClassifier

from sklearn.model_selection import cross_val_score

model = KNeighborsClassifier(n_neighbors=5)

# KNN is distance-based, so it is evaluated on the scaled features
cv_score = cross_val_score(model, x_train_s, y_train, cv=10)

print(cv_score)

print('Mean:', cv_score.mean())

print('Std:', cv_score.std())

result['KNN'] = cv_score.mean()
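The KNN cell above has one caveat. The scaler was fit on all of `x_train` before cross-validation, so each validation fold has already influenced the min/max used to scale it. This is a mild form of data leakage. A common alternative is to wrap scaling and the model in a `Pipeline` and pass the raw `x_train`. The scaler is then refit inside each training fold. Here is a sketch on hypothetical synthetic data:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import MinMaxScaler

# Hypothetical stand-in for the unscaled x_train, y_train
x_train, y_train = make_classification(n_samples=200, n_features=6, random_state=0)

# In each fold the scaler is fit on the training part only, then applied to the validation part
pipe = make_pipeline(MinMaxScaler(), KNeighborsClassifier(n_neighbors=5))
cv_score = cross_val_score(pipe, x_train, y_train, cv=10)

print(cv_score)
print('Mean:', cv_score.mean())
print('Std:', cv_score.std())
```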

_____________________________________________________________________

3) Logistic Regression

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import cross_val_score

model = LogisticRegression()

cv_score = cross_val_score(model, x_train, y_train, cv=10)

print(cv_score)

print('Mean:', cv_score.mean())

print('Std:', cv_score.std())

result['Logistic'] = cv_score.mean()
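The three cells above repeat the same pattern, so they can be collapsed into a loop. This sketch uses hypothetical synthetic data and gives each model the same input as the notes do: unscaled for the tree and logistic regression, scaled for KNN. It also keeps each model's standard deviation, which helps when two means are close. `max_iter=1000` is an assumption added to avoid a possible convergence warning on unscaled data. The notes use the default.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.preprocessing import MinMaxScaler
from sklearn.tree import DecisionTreeClassifier

# Hypothetical stand-in for the original x, y
x, y = make_classification(n_samples=300, n_features=6, random_state=0)
x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.3, random_state=1)

scaler = MinMaxScaler().fit(x_train)
x_train_s = scaler.transform(x_train)

# name -> (model, training features it is evaluated on)
models = {
    'DecisionTree': (DecisionTreeClassifier(max_depth=5, random_state=1), x_train),
    'KNN': (KNeighborsClassifier(n_neighbors=5), x_train_s),
    # max_iter=1000 is an assumption, not from the original notes
    'Logistic': (LogisticRegression(max_iter=1000), x_train),
}

result, result_std = {}, {}
for name, (model, features) in models.items():
    cv_score = cross_val_score(model, features, y_train, cv=10)
    result[name] = cv_score.mean()
    result_std[name] = cv_score.std()
    print(f'{name}: mean={result[name]:.4f}, std={result_std[name]:.4f}')
```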

_____________________________________________________________________

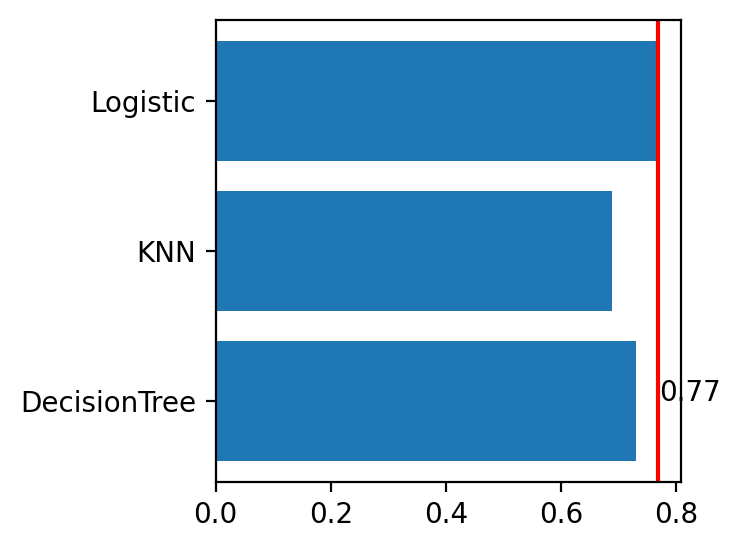

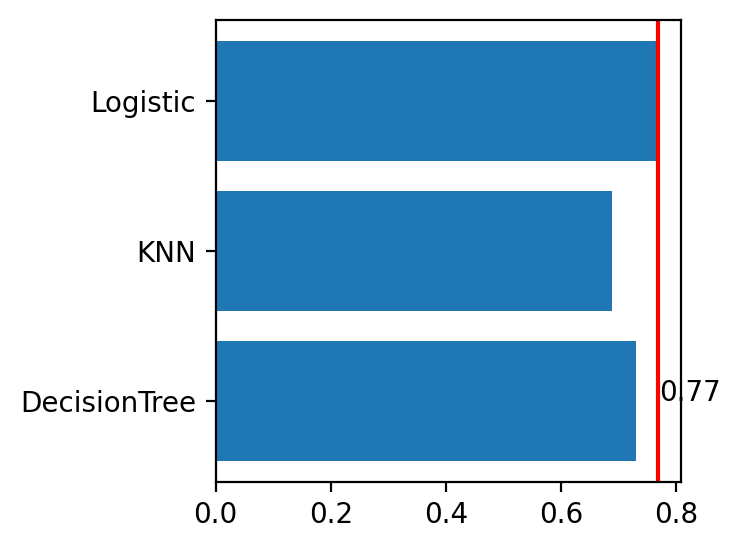

4) Performance comparison visualization

print(result)

<Output>

{'DecisionTree': 0.7301537386443047, 'KNN': 0.6889587700908455, 'Logistic': 0.7690426275331936}

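import matplotlib.pyplot as plt

# One horizontal bar per model; the red line marks the Logistic Regression mean
plt.barh(list(result), list(result.values()))

plt.axvline(result['Logistic'], color='r')

plt.show()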
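A variant of this plot can also show the fold-to-fold spread as error bars (mean ± one standard deviation) and place the reference line at whichever model scores best. The means are copied from the printed `result` above. `result_std` is a hypothetical dict: only the DecisionTree value matches the notes, and the others are placeholders. In a notebook, add `plt.show()` at the end.

```python
import matplotlib
matplotlib.use('Agg')  # non-interactive backend so the sketch runs without a display
import matplotlib.pyplot as plt

# Means copied from the printed result above
result = {'DecisionTree': 0.7301537386443047, 'KNN': 0.6889587700908455, 'Logistic': 0.7690426275331936}
# Hypothetical standard deviations (KNN and Logistic values are placeholders)
result_std = {'DecisionTree': 0.0514, 'KNN': 0.05, 'Logistic': 0.05}

names = list(result)
means = [result[n] for n in names]
stds = [result_std[n] for n in names]

fig, ax = plt.subplots()
bars = ax.barh(names, means, xerr=stds, capsize=4)  # error bars show mean ± std
best = max(result, key=result.get)  # model with the highest mean CV accuracy
ax.axvline(result[best], color='r', linestyle='--', label=f'best: {best}')
ax.set_xlabel('Mean 10-fold CV accuracy')
ax.legend()
```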

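- Logistic Regression has the highest mean cross-validation accuracy of the three models (about 0.769), so it is the most effective choice here.

- The next step is to repeat the performance evaluation and visualization steps with LogisticRegression.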
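One way to carry out that next step is to refit the chosen `LogisticRegression` on the whole training set and score it once on the held-out test set. In this sketch, synthetic data stands in for the original `x`, `y`. `max_iter=1000` is an assumption, and the choice of metrics (accuracy, confusion matrix, classification report) is illustrative.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix
from sklearn.model_selection import train_test_split

# Hypothetical stand-in for the original x, y
x, y = make_classification(n_samples=300, n_features=6, random_state=0)
x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.3, random_state=1)

model = LogisticRegression(max_iter=1000)  # max_iter is an assumption
model.fit(x_train, y_train)  # fit on the full training set
y_pred = model.predict(x_test)  # evaluate once on the untouched test set

acc = accuracy_score(y_test, y_pred)
print('Test accuracy:', acc)
print(confusion_matrix(y_test, y_pred))
print(classification_report(y_test, y_pred))
```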