paper review

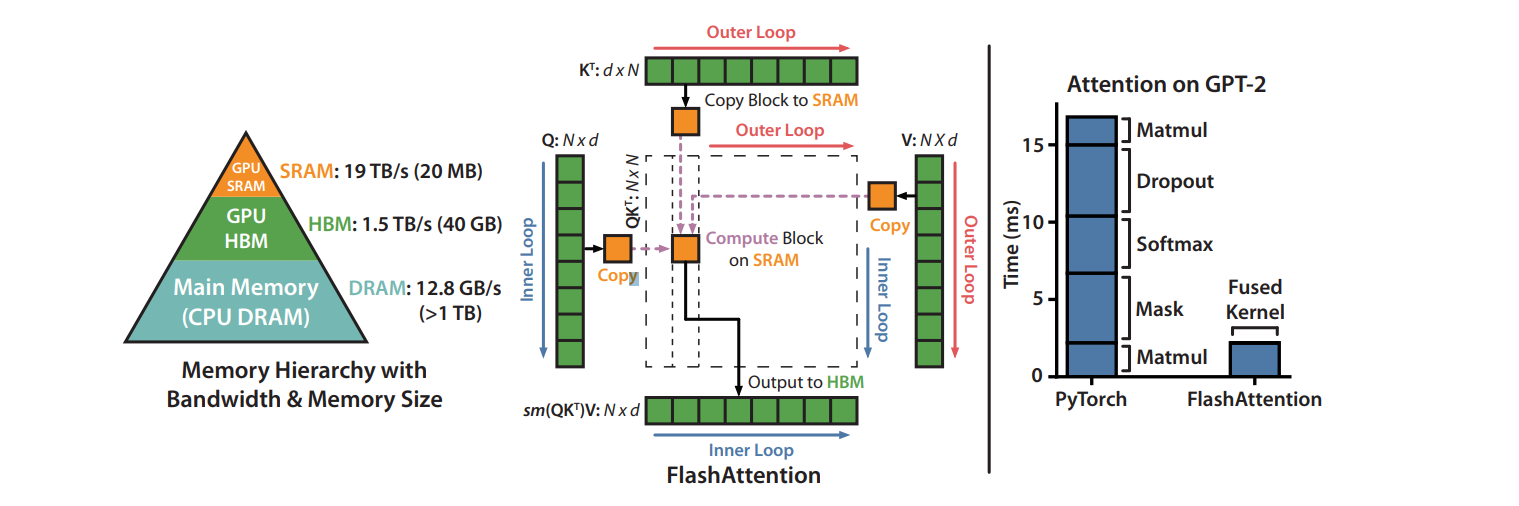

1. [Paper Review] FlashAttention: Fast and Memory-Efficient Exact Attention with IO-Awareness

Make attention algorithms IO-aware, accounting for reads and writes between levels of GPU memory. FlashAttention is an IO-aware exact attention algorithm that uses tiling…

October 4, 2023
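The tiling idea behind FlashAttention can be illustrated with a minimal sketch of an "online" softmax. It assumes a single query vector and plain Python lists. Keys and values are visited one block at a time, and only a running max, a running normalizer, and an unnormalized output accumulator are carried between blocks, so the full row of attention scores is never stored. This sketch does not model the GPU memory hierarchy (HBM vs. on-chip SRAM) or the backward pass. The function names and the `block_size` parameter are hypothetical, chosen for illustration.

```python
import math

def naive_attention(q, K, V):
    """Reference: softmax(q . K^T / sqrt(d)) V for a single query vector q."""
    d = len(q)
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in K]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, V)) / total for j in range(len(V[0]))]

def tiled_attention(q, K, V, block_size=2):
    """Same result as naive_attention, computed block by block (online softmax).

    Hypothetical illustration: block_size is the number of key/value rows
    processed per step.
    """
    d = len(q)
    m = -math.inf            # running max of the scores seen so far
    l = 0.0                  # running sum of exp(score - m)
    acc = [0.0] * len(V[0])  # running sum of exp(score - m) * v
    for start in range(0, len(K), block_size):
        K_blk = K[start:start + block_size]
        V_blk = V[start:start + block_size]
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in K_blk]
        m_new = max(m, max(scores))
        # Rescale the old statistics to the new max (exp(-inf) == 0.0 on the first block).
        scale = math.exp(m - m_new)
        l *= scale
        acc = [a * scale for a in acc]
        for s, v in zip(scores, V_blk):
            w = math.exp(s - m_new)
            l += w
            acc = [a + w * vj for a, vj in zip(acc, v)]
        m = m_new
    return [a / l for a in acc]

q = [1.0, 0.0]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(naive_attention(q, K, V))
print(tiled_attention(q, K, V, block_size=2))
```

Both calls should print the same values up to floating-point rounding. The rescaling by `exp(m - m_new)` is what lets earlier blocks be combined with later ones without revisiting them.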

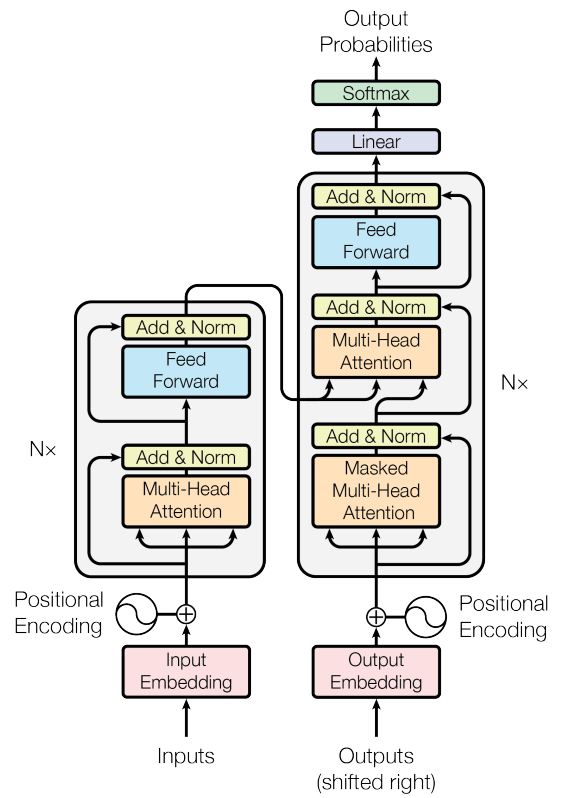

2. [Paper Review] Attention Is All You Need

We propose a new simple network architecture, the Transformer, based solely on attention mechanisms, dispensing with recurrence and convolutions. Experiments…

October 12, 2023
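The core operation that replaces recurrence in the Transformer is scaled dot-product attention, Attention(Q, K, V) = softmax(QK^T / sqrt(d_k)) V. Below is a minimal single-head sketch in pure Python, assuming row-major lists of lists, with no masking, no learned projections, and no multi-head split. The function names are hypothetical. Each output row is a weighted mix of all rows of V, computed in one step rather than sequentially.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, one query row at a time.

    Q: n_q x d_k, K: n_k x d_k, V: n_k x d_v (lists of lists).
    """
    d_k = len(K[0])
    out = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out

Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [1.0, 0.0]]  # identical keys -> equal weights of 0.5
V = [[2.0, 0.0], [4.0, 2.0]]
print(scaled_dot_product_attention(Q, K, V))  # → [[3.0, 1.0]]
```

Dividing by sqrt(d_k) keeps the dot products from growing with the key dimension, which would otherwise push the softmax toward very peaked weights.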