Project Description & Goal

Nature of the Project

"Social XR for Lifelong Learning"

The project follows a human-centred design thinking process (Empathise - Interpret - Ideate - Verify). This time, however, we had to focus on analysing existing solutions and on how XR (Extended Reality) could be leveraged to overcome their current limitations.

Our Project

Our project was about building a new ubiquitous language-learning platform, which leverages VR to overcome the current limitations. We made this decision based on the Empathise, Interpret & Ideate processes.

"People want to learn anytime and anywhere, inside or outside of the classroom throughout their lives."

Project Journey

Empathise

We wanted the whole design process to be wholeheartedly rooted in user needs, just like last time.

Immerse

We tried to think about things that we would like to learn in the future (e.g. language, musical instruments, other skills, etc.) and consider what is available as of now.

As I have been playing the piano since I was 5 years old and recently started practising the guitar as well, I initially thought musical instruments could be an interesting idea. However, I came to realise that this idea is neither inclusive nor ubiquitous: not inclusive in the sense that it only targets a very small pool of users, and not ubiquitous in the sense that some instruments can't be carried around.

Observe

In our daily life, we tried to observe if potential users were trying to learn something on their own via different platforms (e.g. learning from YouTube videos).

My father, who is working in Korea right now, came to HK on a business trip a few weeks ago. I observed that he had started learning Chinese using Duolingo on his phone. This is when it hit me that language learning would be a very good idea, as it is both inclusive and ubiquitous.

Contact

As always, this was the most prominent part of the Empathise process. Hei Tung carried out interviews, both face-to-face and online, to hear potential users' thoughts and stories.

Literature Survey

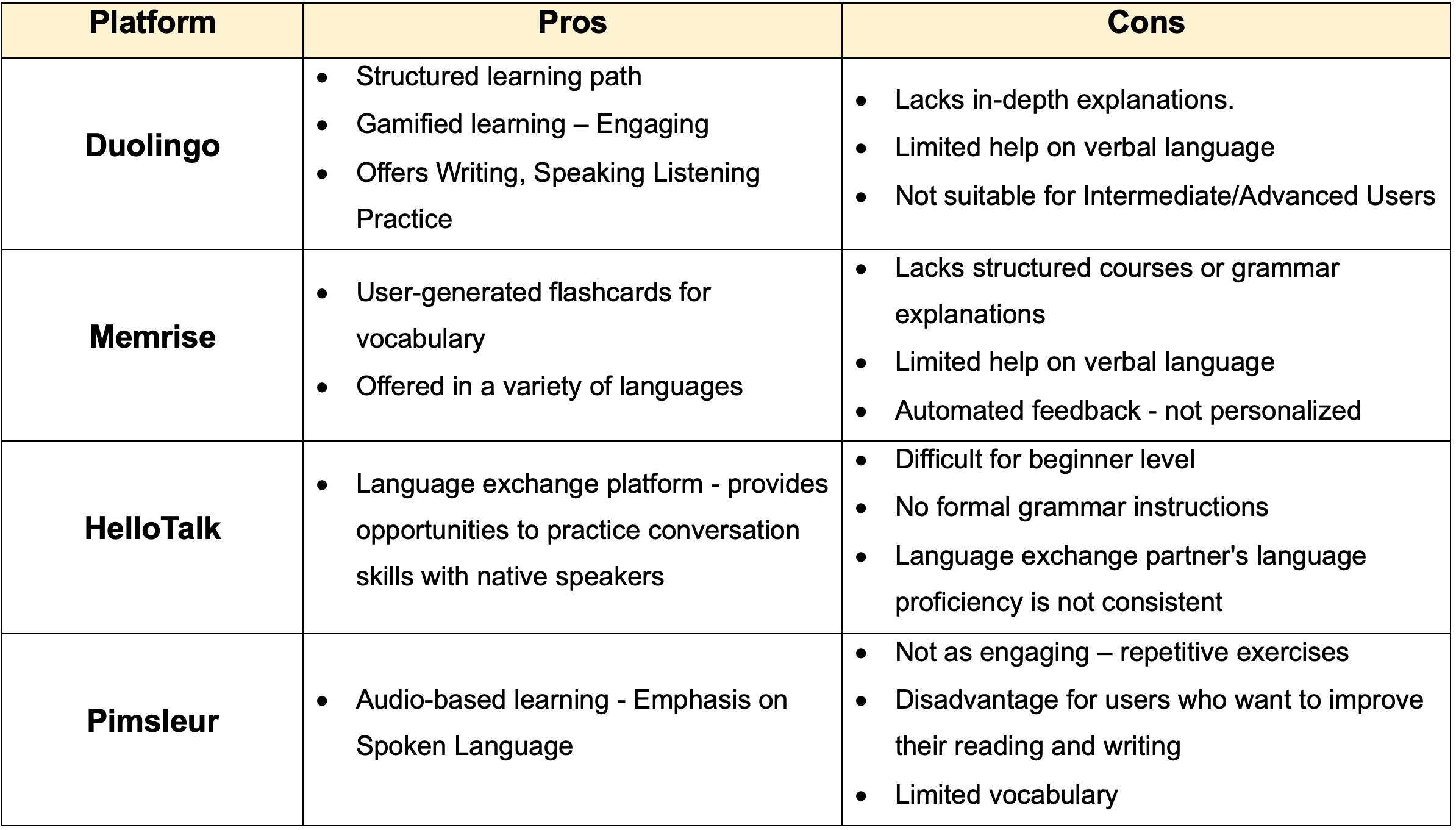

This section is unique compared to our previous two projects. The main theme of the project is to leverage technology to overcome current limitations, so identifying those limitations was crucial.

Currently, language apps fall into four categories:

- Course-based: Duolingo, Babbel

- Vocabulary: Memrise, Anki

- Language exchange: HelloTalk

- Audio-based: Pimsleur

I carried out the literature survey both online and offline. Online, I researched what these apps are like and how people feel about them. Offline, I interviewed people who currently use these apps. As mentioned previously, my father has been using Duolingo for some time, so he provided some interesting insights. Moreover, my sister, who often uses Anki for revision, also gave insights on apps with user-generated content.

Interpret

Based on the above, we carried out some of the Interpret process.

Organise

We realised that language learning is the most inclusive option, in the sense that anyone can engage with it as long as they are willing to.

Generate POV

Usually, we would generate different personas & storyboards to explore different branches of ideas and narrow them down to the final idea. However, based on needfinding and the literature survey, we had already realised that a novel language-learning platform would be the most helpful for many.

I came up with a few starting ideas (with a focus on language learning) for our group to explore with personas and storyboards.

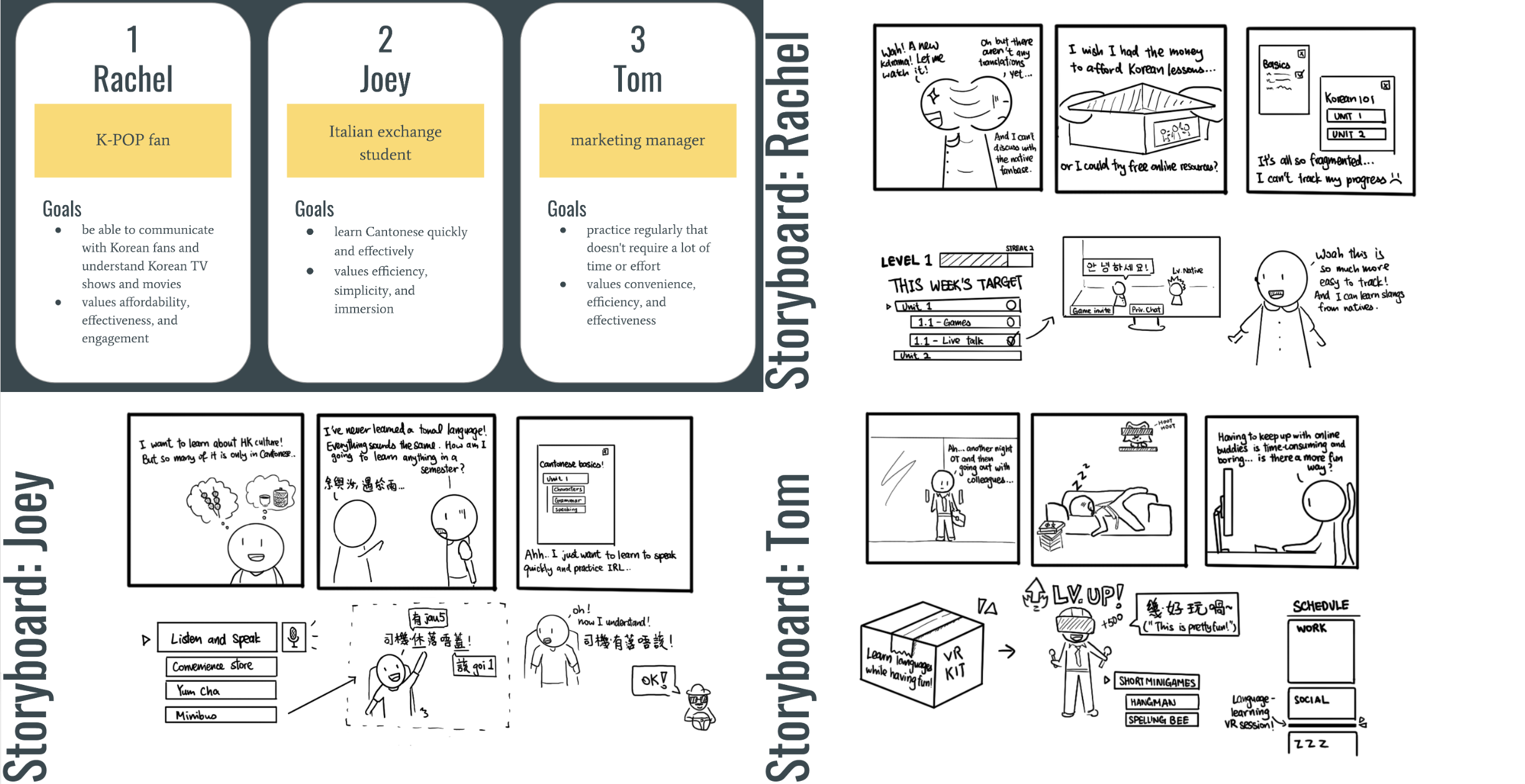

Cherry and Hei Tung generated personas and storyboards based on the above. We used these storyboards to explore various solutions and conducted speed-dating to narrow down the features.

Ideate

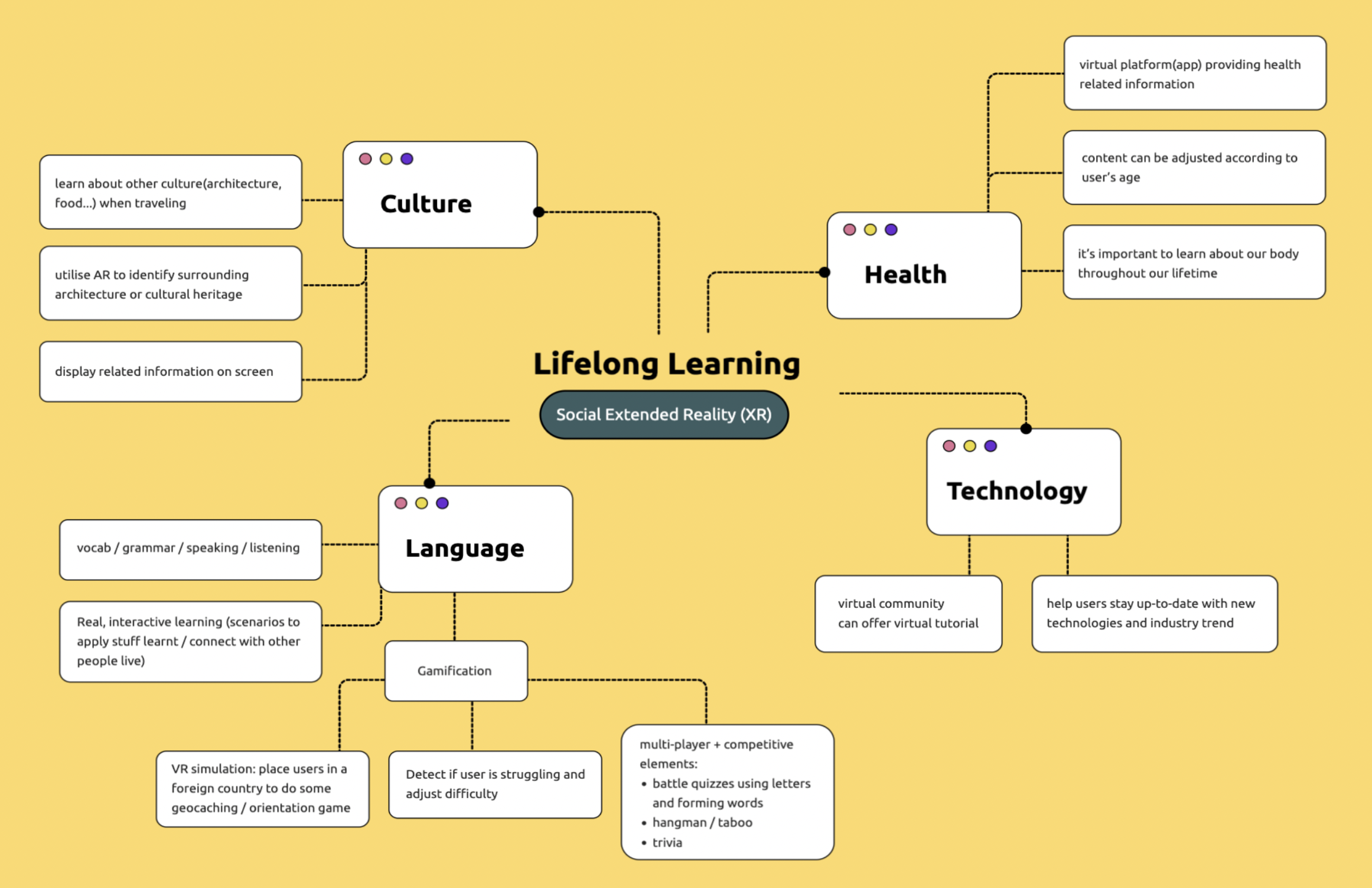

Below is a mind map by Cherry, which shows some of our ideas (including, but not limited to, language learning).

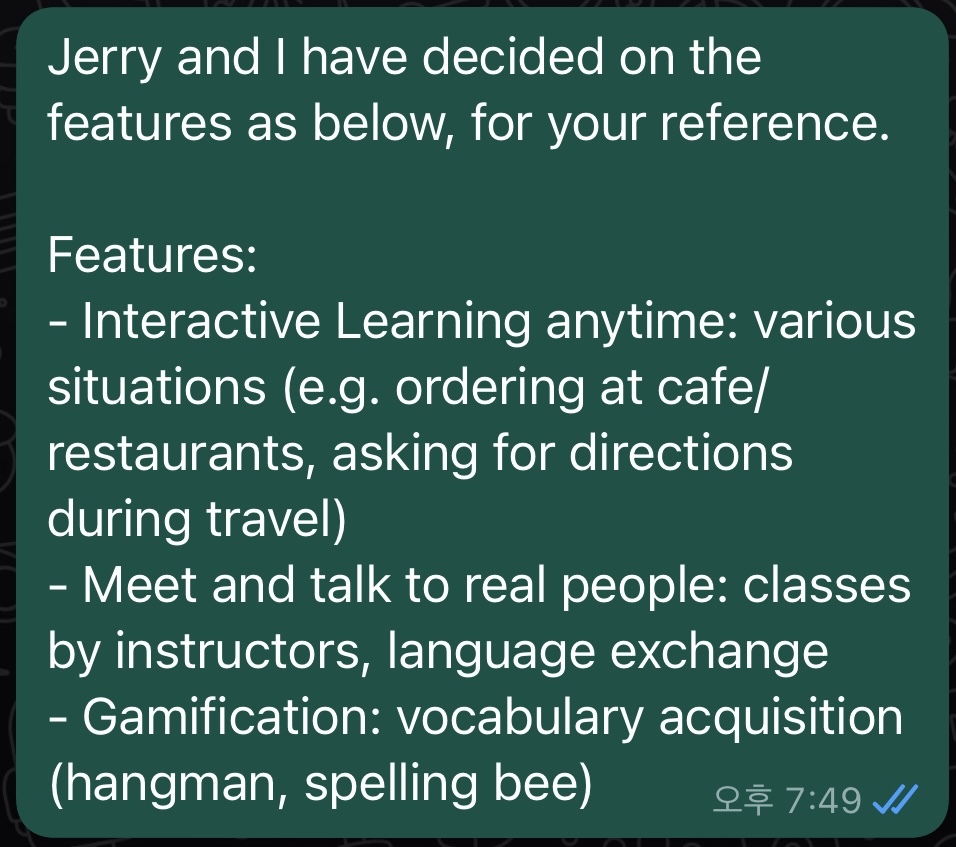

Jerry and I were responsible for implementation. First, we consolidated our main features based on feedback from speed-dating.

Feature 1: Interactive Learning

I was mainly responsible for consolidating the first feature: interactive learning.

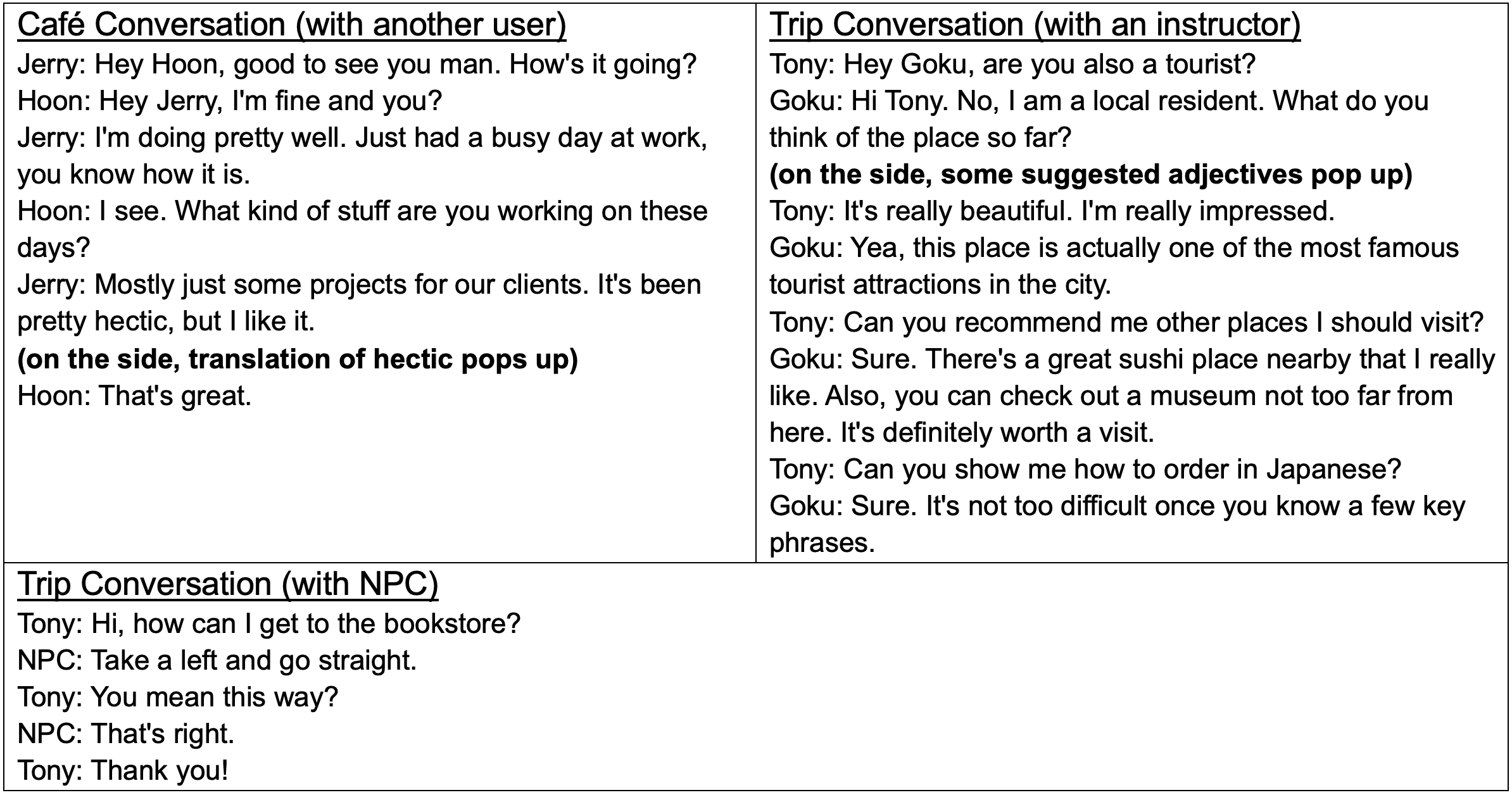

- We provide a wide range of scenarios in which users can apply what they learned. For instance, we offer scenarios like ordering food at a cafe or asking for directions on a trip. This enables users to engage in interactive learning by fully utilising their visual and auditory senses.

- We are leveraging VR to implement this feature. The implementation was done on Mozilla Hubs (users can actually use their own VR devices on this platform).

- This addresses the problem of static learning.

- We want language learning to be ubiquitous even with this feature on its own, so we have made it possible for users to interact with other users, selected instructors, and non-player characters (NPCs).

- If users appear to be having difficulties, we provide suggested phrases or words to use, as well as translations.

  - Detection: based on the elapsed time of silence, plus eye movement for users with VR devices

  - Suggestions: each scenario has a fixed pool of phrases/words that users can use, and suggestions can be made based on speech recognition

  - Translations: translations can be provided using speech recognition and a translation engine
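The detection and suggestion idea above can be sketched roughly in code. This is only an illustration and not what we built on the platform: the names (`Scenario`, `is_struggling`, `suggest`, `hints`), the 5-second silence threshold, and the sample Spanish phrases are all assumptions made up for this example. Eye-movement detection is left out, and the transcript is assumed to come from some speech recogniser.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher

# Assumed value for illustration; no specific threshold was decided in the project.
SILENCE_THRESHOLD_S = 5.0


@dataclass
class Scenario:
    """A scenario with its fixed pool of phrases (phrase -> translation)."""
    name: str
    phrases: dict


def is_struggling(silence_seconds, threshold=SILENCE_THRESHOLD_S):
    """Treat a long enough silence as a sign that the user is stuck."""
    return silence_seconds >= threshold


def suggest(scenario, transcript, limit=2):
    """Rank the scenario's phrase pool by similarity to what was heard."""
    def score(phrase):
        return SequenceMatcher(None, transcript.lower(), phrase.lower()).ratio()

    ranked = sorted(scenario.phrases, key=score, reverse=True)
    # Return each suggested phrase together with its translation.
    return [(phrase, scenario.phrases[phrase]) for phrase in ranked[:limit]]


def hints(scenario, silence_seconds, transcript):
    """Only offer suggestions once the user seems to be having difficulties."""
    if not is_struggling(silence_seconds):
        return []
    return suggest(scenario, transcript)


# Hypothetical cafe scenario for a learner of Spanish.
cafe = Scenario(
    name="Ordering at a cafe",
    phrases={
        "Un café, por favor": "A coffee, please",
        "La cuenta, por favor": "The bill, please",
        "¿Tienen leche de avena?": "Do you have oat milk?",
    },
)

for phrase, translation in hints(cafe, silence_seconds=6.0, transcript="un cafe"):
    print(f"{phrase} ({translation})")
```

String similarity is used here only because it is simple. A real version would probably need something smarter, such as matching on the intent of what the user is trying to say.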

Jerry and I implemented the first feature on Mozilla Hubs. This required us to construct different scenarios and possible use cases first, then explore Mozilla Hubs to implement them.

I have constructed the scenarios below and created some of the avatars for them.

This feature is best illustrated by a video that Jason has created.

Feature 2 & 3: Classes & Gamification

Jerry was mainly responsible for consolidating these features.

These features were difficult to implement on Mozilla Hubs, so I used Figma to create a simple, low-fidelity prototype to illustrate the idea, as in the slides.

Verify

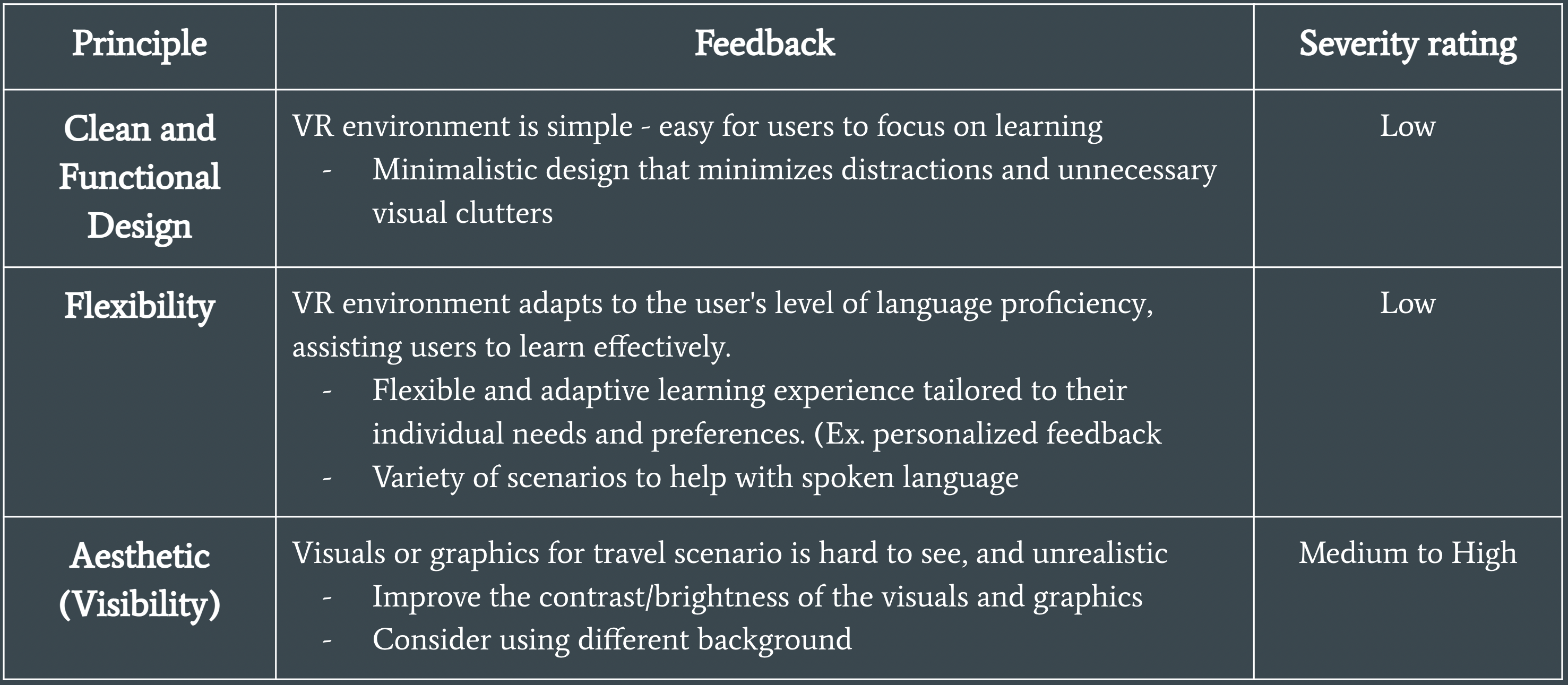

We wanted to make sure that our interpretation of user needs and scenarios was correct, so Hyunji carried out speed-dating and received feedback from HKUST students.

Final Results

With everyone's hard work and efforts, we have put all the pieces together in harmony.

Personal Reflection

Sadly, my final project journey has come to an end.

Although this project may not be the finest implementation-wise, I believe our idea was really solid and interesting, as we based everything on the design process and literature survey. And again, it was interesting to try new things, such as exploring the VR experience on Mozilla Hubs and prototyping some features using Figma.

I am truly grateful for all the effort that my groupmates put in, especially for this project. It was the time of the semester when students get burnt out from tons of assignments and exams, but my groupmates really gave the best they could.

Thank you!

Thank you so much for being on the journey with me!

Please feel free to reach out if you have any comments or suggestions :)
