In this post, I will talk about memory in computer architecture.

There is a trade-off between large memory and fast memory. Large memory tends to be slow, while fast memory tends to be small.

So, we build a memory hierarchy. Suppose there are two memories: a near memory and a distant memory. We can access both of them, but accessing the near memory takes less time than accessing the distant memory.

This is the idea behind the memory hierarchy.

Locality

Locality is a principle that makes having a memory hierarchy a good idea. There are two types of locality.

Temporal locality

-> If an item is referenced, it will tend to be referenced again soon.

Spatial locality

-> If an item is referenced, items at nearby addresses will tend to be referenced soon.
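To make the two kinds of locality concrete, here is a small sketch that assumes a hypothetical block size of 4 words (`BLOCK_SIZE_WORDS` and `blocks_touched` are illustrative names, not from any real system). It counts how many distinct blocks a sequence of word addresses touches. Sequential access, which has spatial locality, touches few blocks. Reusing one address, which is temporal locality, touches only one.

```python
BLOCK_SIZE_WORDS = 4  # hypothetical block size, for illustration only

def blocks_touched(word_addresses):
    """Return the set of block numbers that a sequence of word addresses falls into."""
    return {addr // BLOCK_SIZE_WORDS for addr in word_addresses}

# Spatial locality: 16 consecutive words fall into only 4 blocks.
sequential = list(range(16))
# No spatial locality: a stride of 4 words puts every access in a new block.
strided = list(range(0, 64, 4))
# Temporal locality: the same word referenced 16 times lives in one block.
repeated = [7] * 16

print(len(blocks_touched(sequential)))  # 4
print(len(blocks_touched(strided)))     # 16
print(len(blocks_touched(repeated)))    # 1
```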

This is the memory hierarchy of a modern computer system. The memory is divided into several levels. If we keep frequently used data in the small, fast levels, the average access time goes down.

To use a memory hierarchy, we follow a few rules.

- All data are stored on disk.

- Copy recently accessed items from disk to smaller DRAM memory.

- Copy more recently accessed items from DRAM to smaller SRAM memory.
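These rules can be sketched as a lookup that checks the fastest level first and copies the item upward as it returns. This is a minimal model, assuming each level is a Python dict. It ignores capacity limits and eviction, and the names `disk`, `dram`, `sram` and `read` are hypothetical.

```python
# Hypothetical model: each level is a dict from address to value.
# Capacity limits and eviction are ignored to keep the sketch short.
disk = {addr: f"data{addr}" for addr in range(100)}  # all data lives on disk
dram = {}  # smaller and faster than disk
sram = {}  # smallest and fastest

def read(addr):
    """Return (value, level) where level names the place the item was found."""
    if addr in sram:
        return sram[addr], "SRAM"
    if addr in dram:
        sram[addr] = dram[addr]  # copy the recently accessed item up to SRAM
        return dram[addr], "DRAM"
    value = disk[addr]
    dram[addr] = value  # copy from disk to DRAM ...
    sram[addr] = value  # ... and on to SRAM
    return value, "disk"

print(read(42))  # ('data42', 'disk')
print(read(42))  # ('data42', 'SRAM')
```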

In addition, there are three terms that we must know.

block

-> A block is the minimum unit of data that is copied between levels. A cache holds many blocks.

hit

-> If the requested data is in the upper level, we call it a hit. In other words, the data we want to use is already in the cache.

miss

-> If the requested data is not in the upper level, we call it a miss. In other words, the data we want to use is not in the cache, so it has to be brought in from the lower level.
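A sketch that ties the three terms together. It assumes byte addresses, a hypothetical 16-byte block, and an upper level large enough to keep every block it has loaded, so no replacement happens yet. The block that contains a byte address is `address // block_size`. An access is a hit if that block is already in the upper level. `BLOCK_SIZE_BYTES` and `access_all` are illustrative names.

```python
BLOCK_SIZE_BYTES = 16  # hypothetical block size

def access_all(byte_addresses):
    """Classify each access as 'hit' or 'miss' against an unbounded upper level."""
    upper_level = set()  # block numbers currently held in the upper level
    results = []
    for addr in byte_addresses:
        block = addr // BLOCK_SIZE_BYTES  # the block that contains this byte
        if block in upper_level:
            results.append("hit")
        else:
            results.append("miss")
            upper_level.add(block)  # bring the whole block up from the lower level
    return results

print(access_all([0, 4, 8, 16, 0]))
# ['miss', 'hit', 'hit', 'miss', 'hit']
```

Addresses 4 and 8 hit because the miss on address 0 already brought in the whole 16-byte block. This is spatial locality paying off.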

However, there are two issues.

- How do we know if a data item is in the cache?

- If it is, how do we find it?

To solve these problems, we can use a Direct Mapped Cache.

Direct Mapped Cache

In a Direct Mapped Cache, each memory block maps to exactly one location in the cache.

That location is the block address modulo the number of blocks in the cache.

- Location in the Cache = (Block Address) modulo (Number of Cache Blocks in the Cache)

For example, if the cache has 5 blocks and the block address is 9, then the location in the cache is 9 modulo 5, which is 4.
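The mapping formula can be written as a small function (`cache_location` is a hypothetical name) and checked against the numbers in the example. Real caches almost always have a power-of-two number of blocks. In that case the modulo reduces to keeping the low-order bits of the block address. The second line below checks this with a hypothetical 8-block cache.

```python
def cache_location(block_address, num_blocks):
    """Direct mapped placement: (block address) modulo (number of cache blocks)."""
    return block_address % num_blocks

print(cache_location(9, 5))  # 4
# With a power-of-two cache (8 blocks), modulo is the same as keeping the low 3 bits.
print(cache_location(0b11010, 8), 0b11010 & 0b111)  # 2 2
```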

To use a Direct Mapped Cache, we must know about the valid bit and the tag.

The valid bit indicates whether a cache entry contains valid data. The tag contains the address information needed to identify whether the entry corresponds to the requested word.

In other words, the valid bit tells us whether the data is valid, and the tag tells us which memory address the data actually came from, because many addresses map to the same cache location.

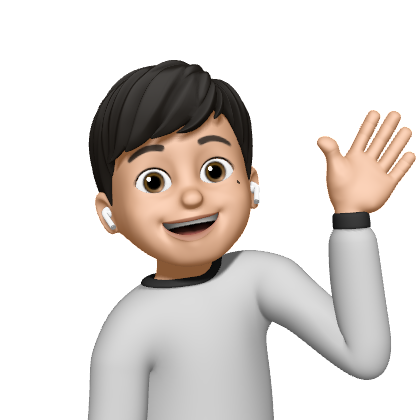

For example, in this picture, each address is 5 bits long. The last three bits form the index, which selects one of the 8 cache blocks, and the first two bits form the tag. 11010 and 10010 map to the same cache block (index 010). In this case, we tell the two addresses apart by comparing their tags (11 and 10). The V column is the valid bit. When a block holds data, its valid bit becomes 1 (valid).

The valid bit tells us whether the data in an entry is valid. When the processor starts, the cache holds no valid data, so every valid bit is 0. In a multiprocessor, another CPU can also modify the data in memory, which leaves our cached copy stale. In that case we mark the entry as not valid by clearing its valid bit.
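The lookup described above can be sketched for the same 8-block cache with 5-bit addresses (3 index bits, 2 tag bits). Each entry stores a valid bit and a tag. An access hits only if the valid bit is 1 and the tags match. The access sequence is a hypothetical one, chosen to show 11010 and 10010 evicting each other because they share index 010. Data values are left out, and only the valid bit and tag are modeled.

```python
INDEX_BITS = 3
NUM_BLOCKS = 1 << INDEX_BITS  # 8 blocks

# Each entry: [valid bit, tag]; every entry starts invalid.
cache = [[0, None] for _ in range(NUM_BLOCKS)]

def access(addr):
    """Look up a 5-bit address, update the cache, and return 'hit' or 'miss'."""
    index = addr & (NUM_BLOCKS - 1)  # last three bits
    tag = addr >> INDEX_BITS         # first two bits
    valid, stored_tag = cache[index]
    if valid == 1 and stored_tag == tag:
        return "hit"
    cache[index] = [1, tag]          # fill the block and mark it valid
    return "miss"

for a in [0b11010, 0b11010, 0b10010, 0b11010]:
    print(format(a, "05b"), access(a))
# 11010 miss
# 11010 hit
# 10010 miss  (same index 010, different tag: replaces 11010)
# 11010 miss
```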

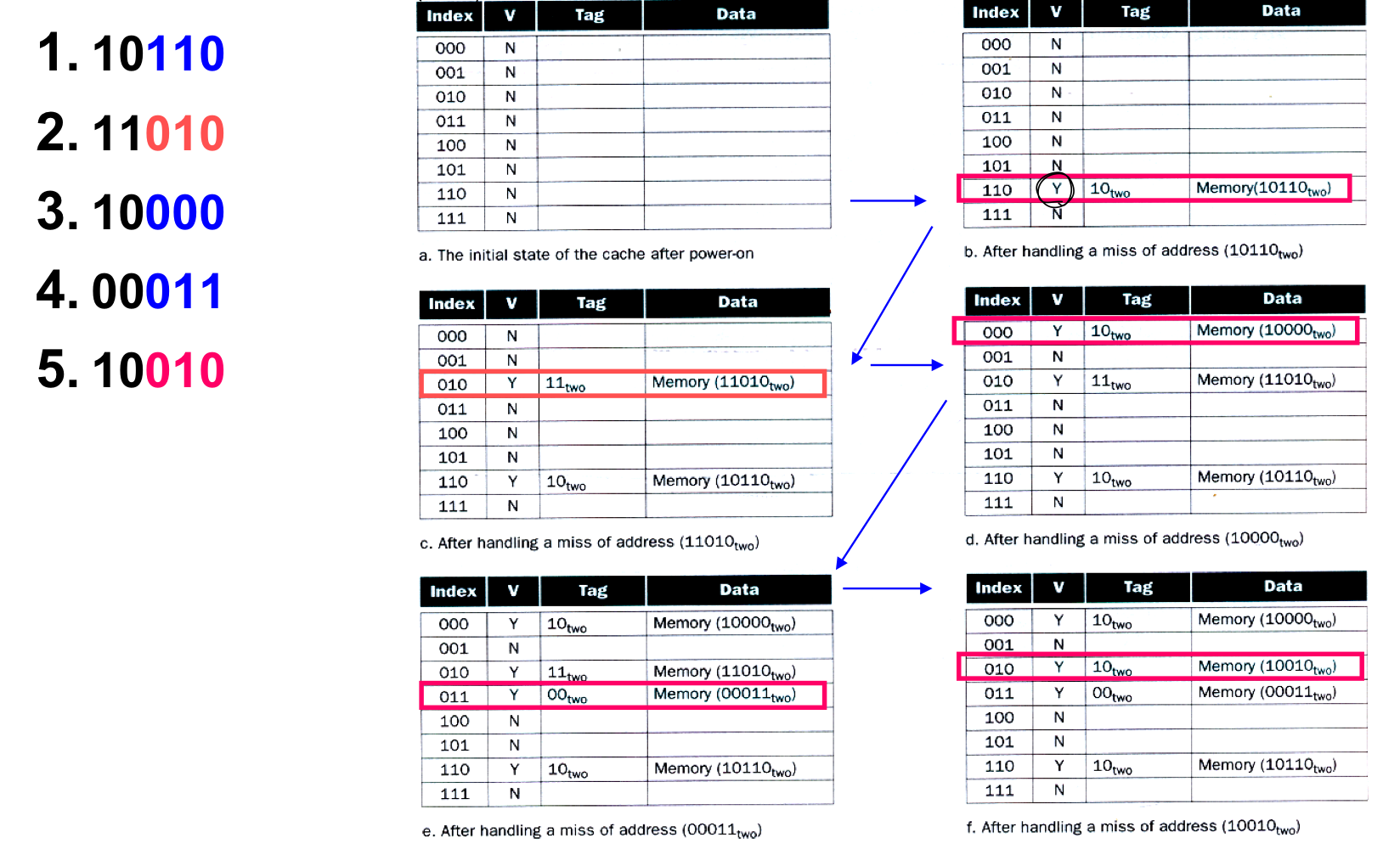

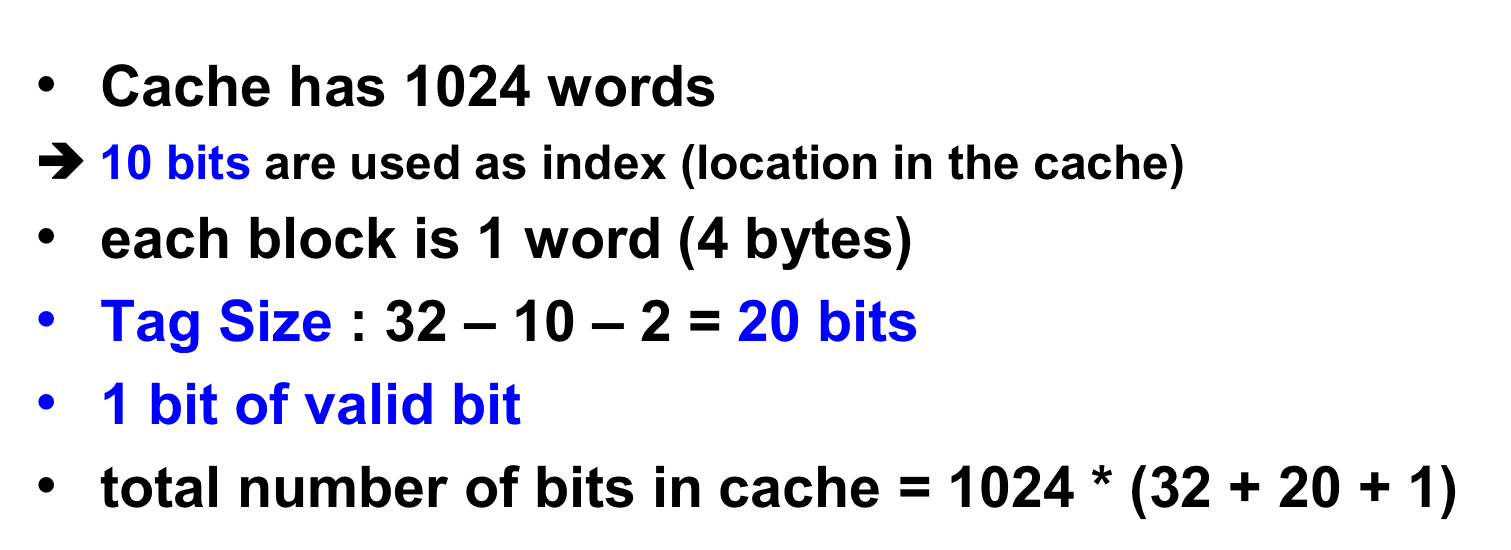

In this MIPS example, the cache holds 1024 words with one word per block, and addresses are 32-bit byte addresses. Since 1024 = 2^10, we need 10 bits for the index that selects the cache location. Because each block holds only one word, there is no block offset to select a word within the block. Only the lowest 2 bits are needed, as a byte offset within the 4-byte word. The remaining 32 - 10 - 2 = 20 bits are used for the tag.
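This sketch splits a 32-bit byte address into the fields of the 1024-block, one-word-per-block cache: 2 byte-offset bits, 10 index bits and 20 tag bits. The function name `split_address` and the example address are hypothetical.

```python
BYTE_OFFSET_BITS = 2  # 4 bytes per word
INDEX_BITS = 10       # 1024 blocks = 2**10
TAG_BITS = 32 - INDEX_BITS - BYTE_OFFSET_BITS  # 20

def split_address(addr):
    """Split a 32-bit byte address into (tag, index, byte_offset)."""
    byte_offset = addr & ((1 << BYTE_OFFSET_BITS) - 1)
    index = (addr >> BYTE_OFFSET_BITS) & ((1 << INDEX_BITS) - 1)
    tag = addr >> (BYTE_OFFSET_BITS + INDEX_BITS)
    return tag, index, byte_offset

print(TAG_BITS)  # 20
tag, index, off = split_address(0x12345678)
print(hex(tag), index, off)  # 0x12345 414 0
```

The three fields together cover all 32 bits, so the original address can always be rebuilt as `(tag << 12) | (index << 2) | byte_offset`.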

This is the summary.

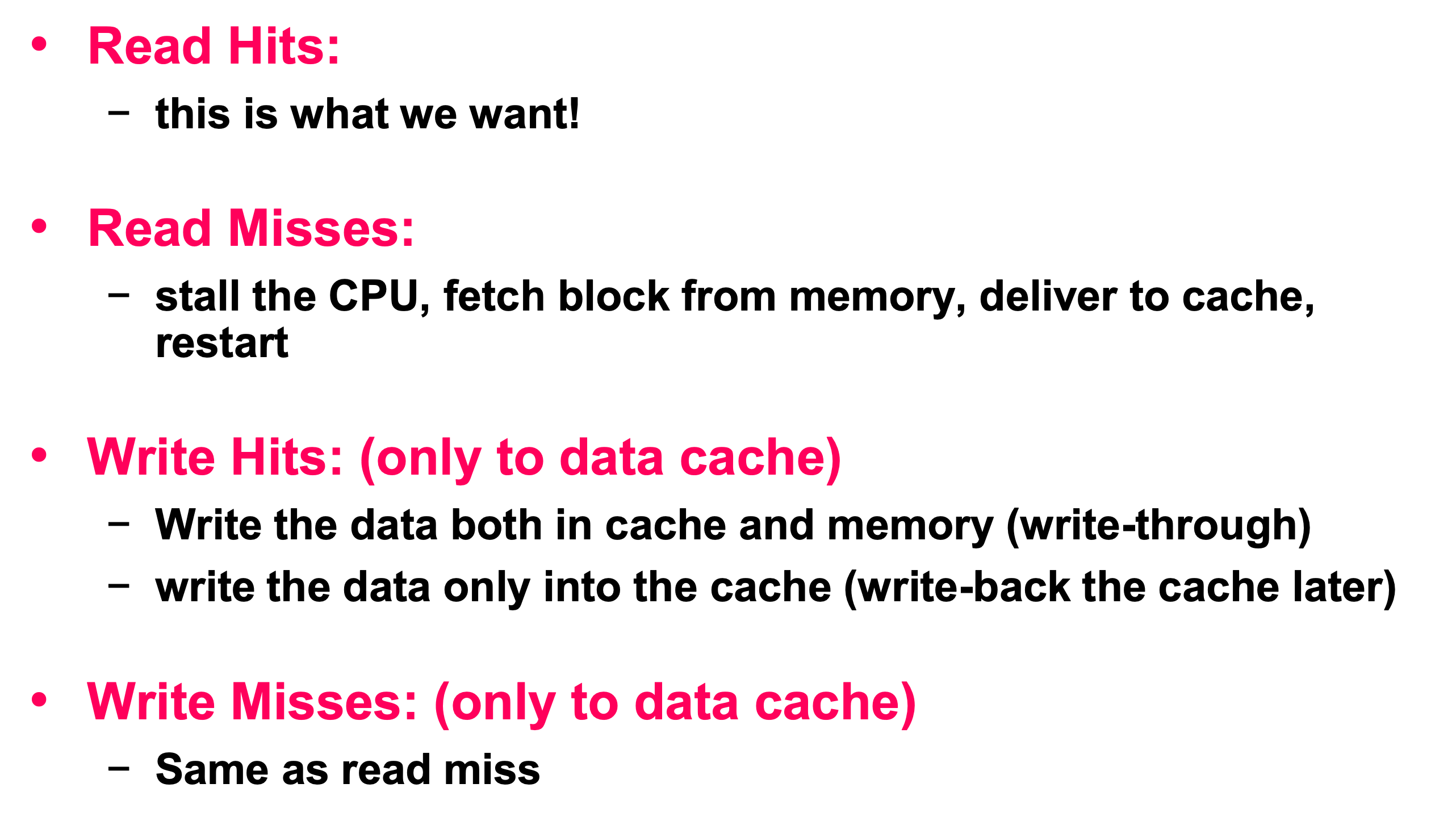

Hits and Misses

Hits

The data that we want is in the cache.

Misses

The data that we want is not in the cache. We have to stall the CPU, fetch the block from memory, deliver it to the cache, and restart the access.

In the previous post, I talked about data memory and instruction memory. In a pipelined processor, we need two separate memories, because an instruction fetch and a data access can happen in the same clock cycle.

In the same way, with caches we use a separate data cache and instruction cache.

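The PC is incremented automatically when an instruction is fetched. So, when an instruction cache miss occurs, the PC already points to the next instruction, and we have to recover the original value. The steps are:

- Step 1: Send the original PC value (current PC - 4) to memory.
- Step 2: Tell main memory to perform a read, and stall the CPU until the read completes.
- Step 3: Write the cache entry. Put the data from memory in the data field, write the upper bits of the address into the tag field, and set the valid bit.
- Step 4: Restart the instruction. It is fetched again, and this time it hits in the cache.

However, the data cache doesn't use the PC value. So, on a data cache miss we only do steps 2, 3, and 4, using the data address instead of the PC.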
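A toy sketch of the instruction-miss steps, assuming a hypothetical 4-block direct mapped instruction cache and a `memory` dict that maps byte addresses to instruction names. `fetch_stage` and the other names are illustrative, and the stall is not timed. Real hardware does this with control logic, not function calls. The point of the sketch is the order of the steps and the `next_pc - 4` recovery.

```python
# Hypothetical toy model: main memory maps byte addresses to instructions.
memory = {0: "add", 4: "lw", 8: "beq"}
NUM_BLOCKS = 4
icache = [{"valid": 0, "tag": None, "data": None} for _ in range(NUM_BLOCKS)]

def fetch_stage(pc):
    """Fetch the instruction at pc. Return (instruction, next_pc, missed)."""
    next_pc = pc + 4                      # the PC is incremented automatically
    word = pc // 4
    index, tag = word % NUM_BLOCKS, word // NUM_BLOCKS
    entry = icache[index]
    if entry["valid"] and entry["tag"] == tag:
        return entry["data"], next_pc, False
    original_pc = next_pc - 4             # step 1: recover the PC of the missed instruction
    data = memory[original_pc]            # step 2: read main memory (the CPU stalls here)
    entry.update(valid=1, tag=tag, data=data)  # step 3: write data, tag and valid bit
    inst, next_pc, _ = fetch_stage(original_pc)  # step 4: restart; this fetch hits
    return inst, next_pc, True

print(fetch_stage(0))  # ('add', 4, True)
print(fetch_stage(0))  # ('add', 4, False)
```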

Write Through

The information is written both to the block in the cache and to the block in main memory.

That is, whenever we modify a value in the cache, we also write the new value to main memory, so main memory always stays consistent with the cache.
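A minimal write-through sketch, assuming dicts for the cache and main memory and one word per entry. It also assumes that a write miss simply places the word in the cache. That is one possible policy, chosen here only to keep the example short. `WriteThroughCache` is a hypothetical name.

```python
class WriteThroughCache:
    """Toy write-through cache: every write updates both the cache and memory."""

    def __init__(self, memory):
        self.memory = memory    # dict standing in for main memory
        self.lines = {}         # address -> value currently cached
        self.memory_writes = 0  # how many writes reached main memory

    def read(self, addr):
        if addr not in self.lines:  # read miss: fetch from memory, no memory write
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]

    def write(self, addr, value):
        self.lines[addr] = value   # update the cache ...
        self.memory[addr] = value  # ... and main memory on every write
        self.memory_writes += 1

mem = {0: 10, 1: 20}
c = WriteThroughCache(mem)
c.write(0, 99)
c.write(0, 100)
print(mem[0], c.memory_writes)  # 100 2
```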

Write Back

The information is written only to the block in the cache. The modified cache block is written to main memory only when it is replaced.

To implement this scheme, each block needs a dirty bit that records whether the block has been modified. When the block is replaced, if its dirty bit is 1, we have to write it back to main memory. If it is 0, we don't have to update main memory.
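A toy write-back sketch, assuming a hypothetical direct mapped cache with 4 one-word blocks and word addresses. Each entry carries a valid bit, a dirty bit, a tag and data. Writes only touch the cache and set the dirty bit. Main memory is updated only when a dirty block is replaced. `WriteBackCache` and `_lookup` are illustrative names.

```python
class WriteBackCache:
    """Toy direct mapped write-back cache with one word per block and a dirty bit."""

    def __init__(self, memory, num_blocks=4):
        self.memory = memory
        self.num_blocks = num_blocks
        self.entries = [dict(valid=0, dirty=0, tag=None, data=None) for _ in range(num_blocks)]
        self.memory_writes = 0

    def _lookup(self, addr):
        index, tag = addr % self.num_blocks, addr // self.num_blocks
        e = self.entries[index]
        if not (e["valid"] and e["tag"] == tag):
            # Miss: if the block being replaced is dirty, write it back first.
            if e["valid"] and e["dirty"]:
                old_addr = e["tag"] * self.num_blocks + index
                self.memory[old_addr] = e["data"]
                self.memory_writes += 1
            e.update(valid=1, dirty=0, tag=tag, data=self.memory[addr])
        return e

    def read(self, addr):
        return self._lookup(addr)["data"]

    def write(self, addr, value):
        e = self._lookup(addr)
        e["data"] = value
        e["dirty"] = 1  # main memory is now stale for this block

mem = {a: 0 for a in range(16)}
c = WriteBackCache(mem)
c.write(1, 5)
c.write(1, 6)                   # both writes stay in the cache
print(mem[1], c.memory_writes)  # 0 0
c.read(5)                       # 5 maps to the same block as 1, so block 1 is replaced
print(mem[1], c.memory_writes)  # 6 1
```

Two writes to address 1 cost only one memory write, and that write happens at replacement time.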

With Write Through, a read miss never causes a write to memory, because the block being replaced is never dirty. However, every write goes to memory, so Write Through is combined with a write buffer. The CPU doesn't have to wait for each lower-level memory write to finish.
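A sketch of the write-buffer idea, assuming a hypothetical fixed-capacity FIFO. Writes are queued and the CPU continues right away. Entries reach memory when `drain_one` is called, which stands in for "memory is free". The CPU stalls only when the buffer is full. `WriteBuffer` and `drain_one` are illustrative names. A real design would also have to check the buffer on reads.

```python
from collections import deque

class WriteBuffer:
    """Toy FIFO write buffer placed between a write-through cache and memory."""

    def __init__(self, memory, capacity=4):
        self.memory = memory
        self.capacity = capacity
        self.pending = deque()  # (address, value) pairs waiting to reach memory
        self.stalls = 0         # how many times the CPU had to wait for a free slot

    def write(self, addr, value):
        if len(self.pending) == self.capacity:
            self.stalls += 1   # buffer full: the CPU must wait ...
            self.drain_one()   # ... until one write reaches memory
        self.pending.append((addr, value))  # the CPU continues immediately

    def drain_one(self):
        """Complete the oldest pending write; called whenever memory is free."""
        if self.pending:
            addr, value = self.pending.popleft()
            self.memory[addr] = value

mem = {}
wb = WriteBuffer(mem, capacity=2)
for a in range(3):
    wb.write(a, a * 10)
print(wb.stalls, mem)  # 1 {0: 0}
```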