LLM: multi-agent

https://x2bee.tistory.com/412 블로그 요약

현재 LLM을 활용하는 방법은 주로 Single Agent Architecture

Agent: LLM을 하나의 지성체로 보고 스스로의 기억을 기반으로 계획하고, 행동하고, 도구(Tools)를 사용하며 능동적으로 문제를 해결하는 하나의 존재 단위

single agent 프로세스

1. 사용자 질의를 분석하여 카테고리와 질문 추출

2. FAQ 데이터가 저장된 vector store에서 사용자 질문과 비슷한 QA pair 서치

3. 해당 지식을 기반으로 LLM이 응답

간단한 답변 작성은 가능 but

참고해야할 데이터의 수가 많고 hallucination(인공지능이 직접 새로운-잘못된- 데이터 생성)를 최소화해야 하는 경우

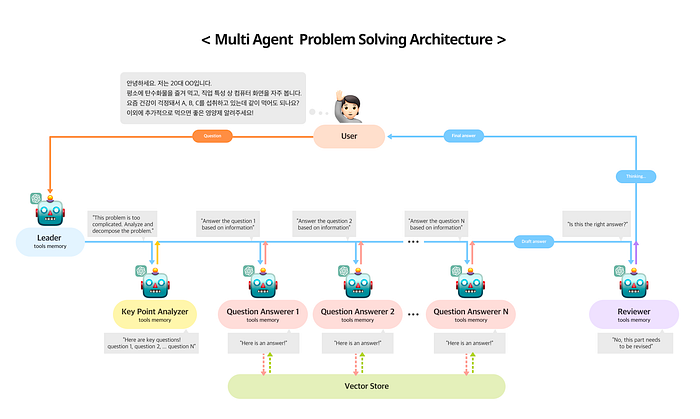

: 여러 agent에게 역할을 부여하고 agent간의 협동을 통해 문제를 해결하는 multi agent architecture를 도입할 필요가 있다

leader/key point analyzer/question answerer/reviewer 등으로 역할이 분담된 것을 볼 수 있다

multi agent 방식의 프레임워크는 크게

- MS의 AutoGen

- Crew AI

가 있다.

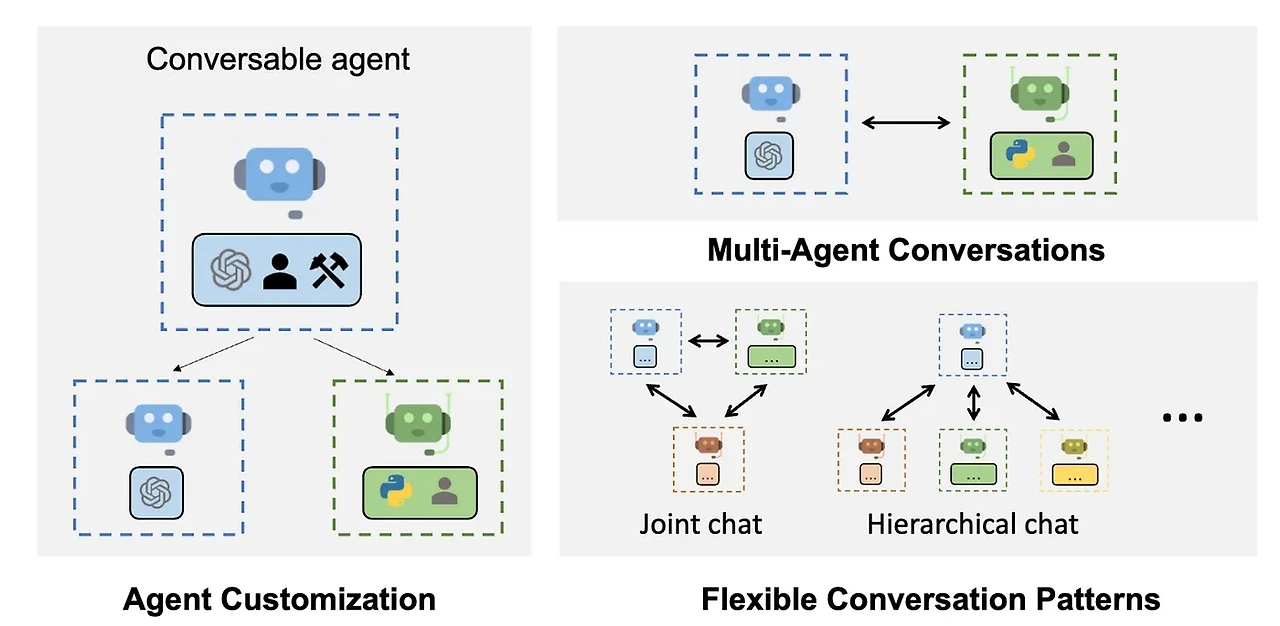

AutoGen

- 복잡한 작업을 위한 여러 agent를 만들고 역할 분담/상호작용 관리/그룹 채팅 시나리오 구현

- 대화형 시스템에서 유용

- agent를 사용자의 특정 요구에 맞게 커스터마이징 가능

- 인간의 입력과 피드백을 통합 가능

- Agent Studio라는 저코드 인터페이스 제공

실제 사용 예시

pip install pyautogen

pip install vllm

python -m vllm.entrypoints.openai.api_server --model /MLP-KTLim/llama-3-Korean-Bllossom-8B --dtype auto --api-key token-abc123import os

from autogen import AssistantAgent, UserProxyAgent

from autogen.coding import DockerCommandLineCodeExecutor

config_list = [{"model": "MLP-KTLim/llama-3-Korean-Bllossom-8B", "base_url" : "http://localhost:your_port/v1","api_key": "your_api_key"}]

# create an AssistantAgent instance named "assistant" with the LLM configuration.

assistant = AssistantAgent(name="assistant", llm_config={"config_list": config_list})

# create a UserProxyAgent instance named "user_proxy" with code execution on docker.

code_executor = DockerCommandLineCodeExecutor()

user_proxy = UserProxyAgent(name="user_proxy", code_execution_config={"executor": code_executor})

user_proxy.initiate_chat(

assistant,

message="""What date is today? Which big tech stock has the largest year-to-date gain this year? How much is the gain?""",

)Crew AI

실제 사용 예시

pip install 'crewai[tools]'

pip install vllm

python -m vllm.entrypoints.openai.api_server --model /MLP-KTLim/llama-3-Korean-Bllossom-8B --dtype auto --api-key token-abc123import os

from crewai import Agent, Task, Crew, Process

from crewai_tools import SerperDevTool

# os.environ["OPENAI_API_KEY"] = "YOUR_API_KEY"

#os.environ["SERPER_API_KEY"] = "Your Key" # serper.dev API key

# os.environ["SERPER_API_KEY"] = 'your SERPER_API_KEY'

# You can choose to use a local model through Ollama for example. See https://docs.crewai.com/how-to/LLM-Connections/ for more information.

os.environ["OPENAI_API_BASE"] = 'http://localhost:your_port/v1'

os.environ["OPENAI_MODEL_NAME"] ='/MLP-KTLim/llama-3-Korean-Bllossom-8B' # Adjust based on available model

os.environ["OPENAI_API_KEY"] ='sk-111111111111111111111111111111111111111111111111'

# You can pass an optional llm attribute specifying what model you wanna use.

# It can be a local model through Ollama / LM Studio or a remote

# model like OpenAI, Mistral, Antrophic or others (https://docs.crewai.com/how-to/LLM-Connections/)

#

# import os

# os.environ['OPENAI_MODEL_NAME'] = 'gpt-3.5-turbo'

#

# OR

#

# from langchain_openai import ChatOpenAI

search_tool = SerperDevTool()

# Define your agents with roles and goals

researcher = Agent(

role='Senior Research Analyst',

goal='Uncover cutting-edge developments in AI and data science',

backstory="""You work at a leading tech think tank.

Your expertise lies in identifying emerging trends.

You have a knack for dissecting complex data and presenting actionable insights.""",

verbose=True,

allow_delegation=False,

# You can pass an optional llm attribute specifying what model you wanna use.

# llm=ChatOpenAI(model_name="gpt-3.5", temperature=0.7),

tools=[search_tool]

)

writer = Agent(

role='Tech Content Strategist',

goal='Craft compelling content on tech advancements',

backstory="""You are a renowned Content Strategist, known for your insightful and engaging articles.

You transform complex concepts into compelling narratives.""",

verbose=True,

allow_delegation=True

)

# Create tasks for your agents

task1 = Task(

description="""Conduct a comprehensive analysis of the latest advancements in AI in 2024.

Identify key trends, breakthrough technologies, and potential industry impacts.""",

expected_output="Full analysis report in bullet points",

agent=researcher

)

task2 = Task(

description="""Using the insights provided, develop an engaging blog

post that highlights the most significant AI advancements.

Your post should be informative yet accessible, catering to a tech-savvy audience.

Make it sound cool, avoid complex words so it doesn't sound like AI.""",

expected_output="Full blog post of at least 4 paragraphs",

agent=writer

)

# Instantiate your crew with a sequential process

crew = Crew(

agents=[researcher, writer],

tasks=[task1, task2],

verbose=2, # You can set it to 1 or 2 to different logging levels

process = Process.sequential

)

# Get your crew to work!

result = crew.kickoff()

print("######################")

print(result)