Adversarial nets

- Generator : generates samples by passing random noise through a multilayer perceptron

- Discriminator : learns to determine whether a sample is from the model distribution or the data distribution

Training

Value function

D and G play two-player minmax game with value function :

- Discriminator tries to make , and

- classify real data to 1, fake one to 0

- Generator tries to make

- "deceive" the discriminator to classify fake data to 1

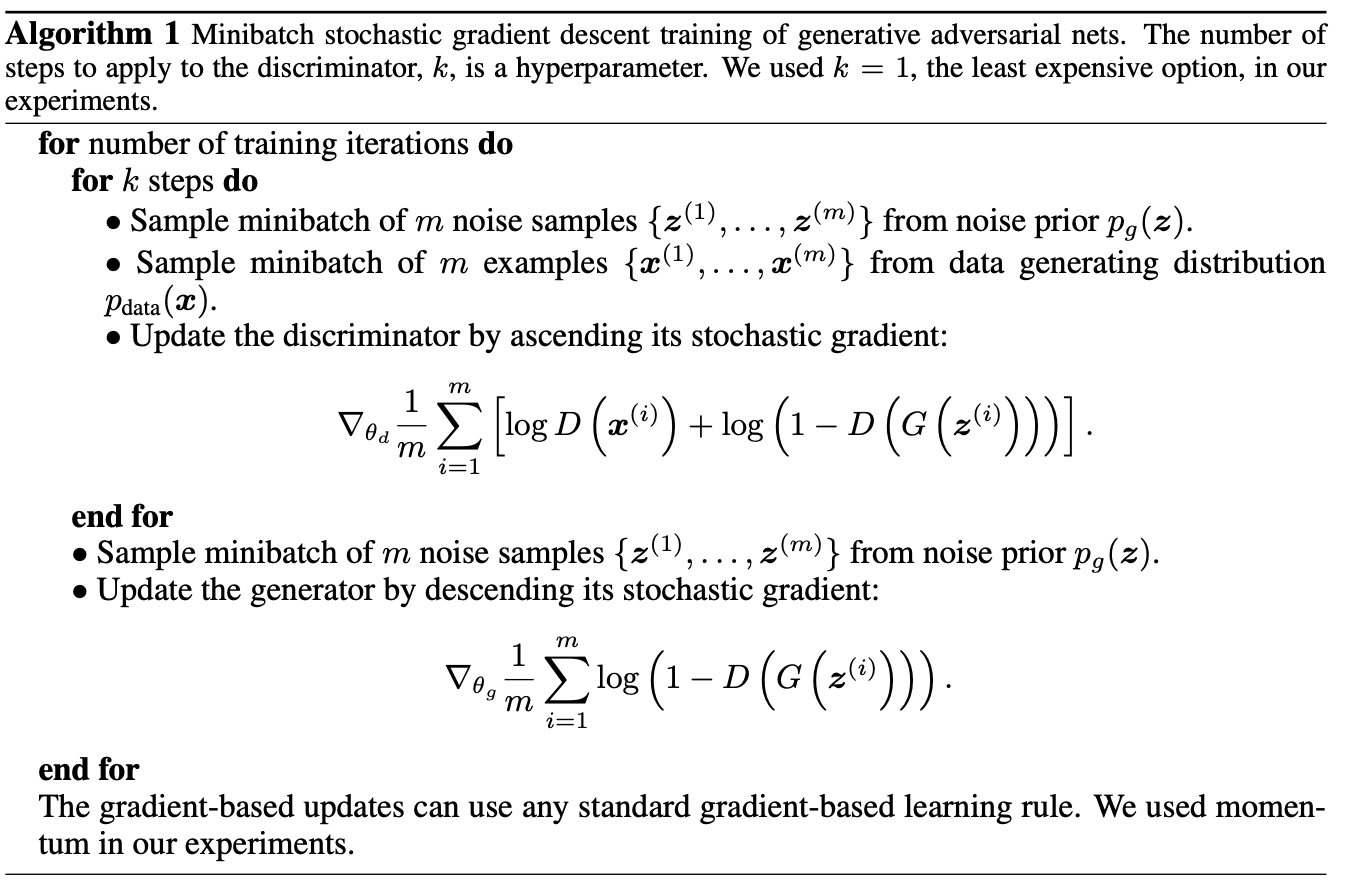

Algorithm of Training GAN

- optimizing the to completion on finite datasets is prohibitive

- computationally expensive

- lead to overfitting

- k steps of optimizing , while only one step of optimizing

Experiments

- trained based on MNIST, the Toronto Face Database (TFD), and CIFAR-10

- generator : mixture of rectifier linear activations

- discriminator

- maxout activations

- dropout applied when training

- input noise z to only the bottom layer

Advantages and Disadvantages

Advantages

- Generator can be updated without data examples, and only with discriminator's gradient flow

- can represent sharp, even degenerate distributions

Disadvantages

- no explicit representation of

- D should be well synced with the generator, or else can lead to mode collapse