COCONUT (Chain of Continuous Thought): a training method that is more efficient and more accurate than CoT

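TL;DR

Training methods so far, CoT included, have the LLM use natural-language tokens to predict the next step

That is not strictly necessary. Giving the LLM the freedom to feed in the output of the last hidden layer as the next-step token, instead of a natural-language token, makes it more efficient and improves performance

Quick results:

ProntoQA:

CoT: 98.8% Acc., 92.5 tokens

COCONUT: 99.8% Acc., 9.0 tokens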

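Introduction

When an LLM reasons with natural-language tokens:

- It cannot reflect the fact that different reasoning steps need different amounts of computation

- Most tokens in a reasoning chain only keep the text fluent and contribute little to the reasoning itself

For prior work, see Related Work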

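- Related Work

  - Chain of Thought (and its variants):

    - An umbrella term for methods that reason before producing the result. It covers training, prompting, and reinforcement learning. Effective, but its autoregressive nature is a weakness on complex tasks

    - To overcome this weakness, adding tree search or training on search dynamics has been proposed (both still use language tokens)

  - Latent reasoning in LLMs:

    - In prior work, latent reasoning refers to the intermediate-step tokens (hidden representations)

    - Analyses of a Transformer's intermediate-step tokens found that, even when the model generates a CoT, those tokens follow a reasoning process different from the generated CoT

    - (unfaithfulness of CoT reasoning) → latent reasoning is not being put to good use

    - Work on making better use of latent reasoning

      - pause (Think before you speak)

        - Before generating reasoning tokens, the model thinks while pause tokens are generated, and only the output after the last pause token is used

        - (requires pretraining and finetuning)

      - implicit-CoT (From Explicit CoT to Implicit CoT)

        - Reasoning tokens are reduced stage by stage during training → also adopted by COCONUT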

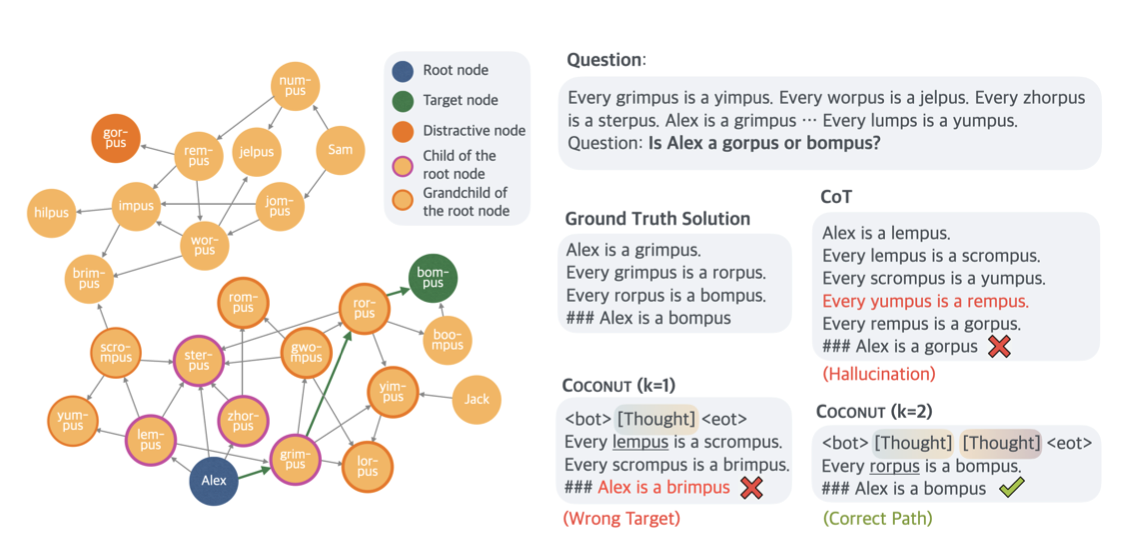

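COCONUT lets the LLM convert to natural-language tokens only when it wants to

- It can switch between language mode and latent mode

- Switching uses the special tokens `<bot>` and `<eot>`

- In latent mode, the output of the hidden layer (latent) is used as the next token

- In language mode, it behaves as an ordinary LLM

- A multi-stage training scheme is applied

- Analysis shows that latent-mode tokens encode several possible next states in superposition

- This is impossible with CoT

- This gives the reasoning process a BFS-like structure

- The more long-horizon planning a task needs, the more COCONUT beats CoT in both efficiency and accuracy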

COCONUT: Chain of Continuous Thought

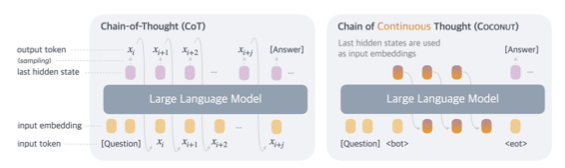

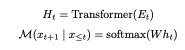

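LLM in a nutshell

In a standard LLM:

- The input sequence is split into tokens, and each token goes through the embedding function e. E_t: the token embedding sequence = [e(x_1), …, e(x_t)]

- The sequence then goes through the Transformer-based model and becomes the hidden state sequence H_t. h_t: the hidden state of the last token

- Finally, applying softmax to the output of the language model head W gives the probability distribution over the next natural-language token.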
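The three steps above can be written compactly as follows. This is only a restatement of the definitions in this section; the symbol M for the model's next-token distribution is a name introduced here for convenience.

$$
E_t = [e(x_1), \dots, e(x_t)], \qquad H_t = \mathrm{Transformer}(E_t), \qquad M(x_{t+1} \mid x_{\le t}) = \mathrm{softmax}(W h_t)
$$

Here h_t is the last entry of H_t.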

In the COCONUT architecture:

- The special tokens `<bot>` and `<eot>` mark latent mode.

- In latent mode, h_{k-1} is used in place of e(x_k).

  - When the i-th token is processed, `<bot>` goes through the embedding (e(x_i)) and yields the hidden output h_i.

  - When the (i+1)-th token is processed, W is not applied to h_i (no W h_i),

  - softmax is not applied (no Softmax(W h_i)),

  - the embedding is not applied (no e(Softmax(W h_i))),

  - and h_i is used directly as part of the next Transformer input sequence

  - If `<bot>` is at position i of the input sequence (x_i = `<bot>`) and `<eot>` is at position j, then E_k (i < k < j) is:

    - E_k = [e(x_1), …, e(x_i), h_i, h_{i+1}, …, h_{k-1}]

  - h_t has passed through the final normalization layer, so its magnitude is not too large

  - The next-language-token distribution Softmax(W h_t) does not need to be computed, but it can be used for analysis

  - The last hidden state represents the current reasoning state and is called a continuous thought.
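The latent-mode rule above (feed h_{k-1} back in place of e(x_k)) can be sketched as a small loop. This is a minimal illustration under loud assumptions: `toy_transformer` is a made-up stand-in for a real Transformer, the vectors are arbitrary numbers, and `run_latent_mode` is a hypothetical helper name, not code from the paper.

```python
import math

def toy_transformer(inputs):
    """Toy stand-in for a Transformer (an assumption, not a real model).

    Returns a "hidden state" for the last position by mixing the mean of all
    input vectors with the last one; tanh keeps the magnitude bounded, loosely
    mimicking the effect of a final normalization layer.
    """
    dim = len(inputs[0])
    mean = [sum(v[d] for v in inputs) / len(inputs) for d in range(dim)]
    last = inputs[-1]
    return [math.tanh(0.5 * mean[d] + 0.5 * last[d]) for d in range(dim)]

def run_latent_mode(question_embeddings, bot_embedding, num_thoughts):
    """Build E_k = [e(x_1), ..., e(x_i), h_i, ..., h_{k-1}] one step at a time."""
    inputs = list(question_embeddings) + [bot_embedding]  # x_i = <bot>
    thoughts = []
    for _ in range(num_thoughts):
        h = toy_transformer(inputs)  # last hidden state = continuous thought
        thoughts.append(h)
        inputs.append(h)  # fed back as-is: no W, no softmax, no embedding lookup
    return inputs, thoughts

# Two question-token embeddings and a <bot> embedding (arbitrary toy values).
question = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]
bot = [1.0, 0.0, -1.0]
inputs, thoughts = run_latent_mode(question, bot, num_thoughts=3)
```

After the loop, `inputs` holds the two question embeddings, the `<bot>` embedding, and the three continuous thoughts in order, which is exactly the shape of E_k described above.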

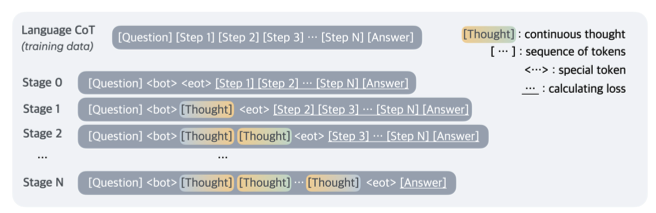

Training

Using CoT data as leverage, the model is trained to take a question as input and produce the answer after going through reasoning steps.

Training procedure

- In stage 0, the model is trained on the CoT data as is.

- At each subsequent stage, one more CoT reasoning step, counted from the front, is replaced with c continuous thought tokens (so stage k uses k × c continuous thoughts)

- The optimizer state is reset at the start of every stage

- Negative log-likelihood loss is used, and the loss is computed only on the remaining natural-language reasoning, not on the question or the latent thoughts

- Latent thoughts are trained not to restore or compress the removed natural-language tokens, but to predict the future reasoning steps well

  - It is important to note that the objective does not encourage the continuous thought to compress the removed language thought, but rather to facilitate the prediction of future reasoning.
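The staged replacement and the loss masking described above can be sketched as a data-construction helper. This is a rough sketch, not the authors' pipeline: `build_stage_example` and the `<latent>` placeholder are hypothetical names, and masking the `<bot>`/`<eot>` markers and keeping the loss on the answer tokens are assumptions made here.

```python
def build_stage_example(question, steps, answer, stage, c):
    """Return (tokens, loss_mask) for one training example at a given stage.

    question, answer: lists of tokens.
    steps: list of reasoning steps, each a list of tokens.
    stage: number of leading reasoning steps replaced by continuous thoughts.
    c: number of continuous thoughts per replaced step.
    """
    # "<latent>" marks a slot that is filled with a hidden state at run time.
    latent = ["<latent>"] * (stage * c)
    # Steps that are still written out in natural language.
    remaining = [tok for step in steps[stage:] for tok in step]
    prefix = question + ["<bot>"] + latent + ["<eot>"]
    tokens = prefix + remaining + answer
    # Loss is off (0) for the question and latent part, on (1) for the rest.
    loss_mask = [0] * len(prefix) + [1] * (len(remaining) + len(answer))
    return tokens, loss_mask

steps = [["s1a", "s1b"], ["s2"], ["s3"]]
tokens, loss_mask = build_stage_example(["Q"], steps, ["A"], stage=2, c=2)
```

With `stage=2` and `c=2`, the first two reasoning steps disappear and four `<latent>` slots take their place; only `s3` and `A` contribute to the loss.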

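Training procedure: details

- Continuous thoughts are differentiable, so gradients can be computed through them by back-propagation → easy to train

- In a stage with n latent thoughts inserted, n + 1 forward passes are needed

- The value of each latent token is unknown until it has been computed (there is no ground truth), so the usual teacher forcing cannot be used

- Latent tokens are produced sequentially → parallelization is an open problem

Inference

The `<bot>` token comes right after the question, and the `<eot>` token appears after a fixed number of continuous thoughts

For the number of continuous thought tokens, letting a separate model decide when to stop and using a fixed number both worked well, so the simpler method (a fixed number) was adopted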

Experiments

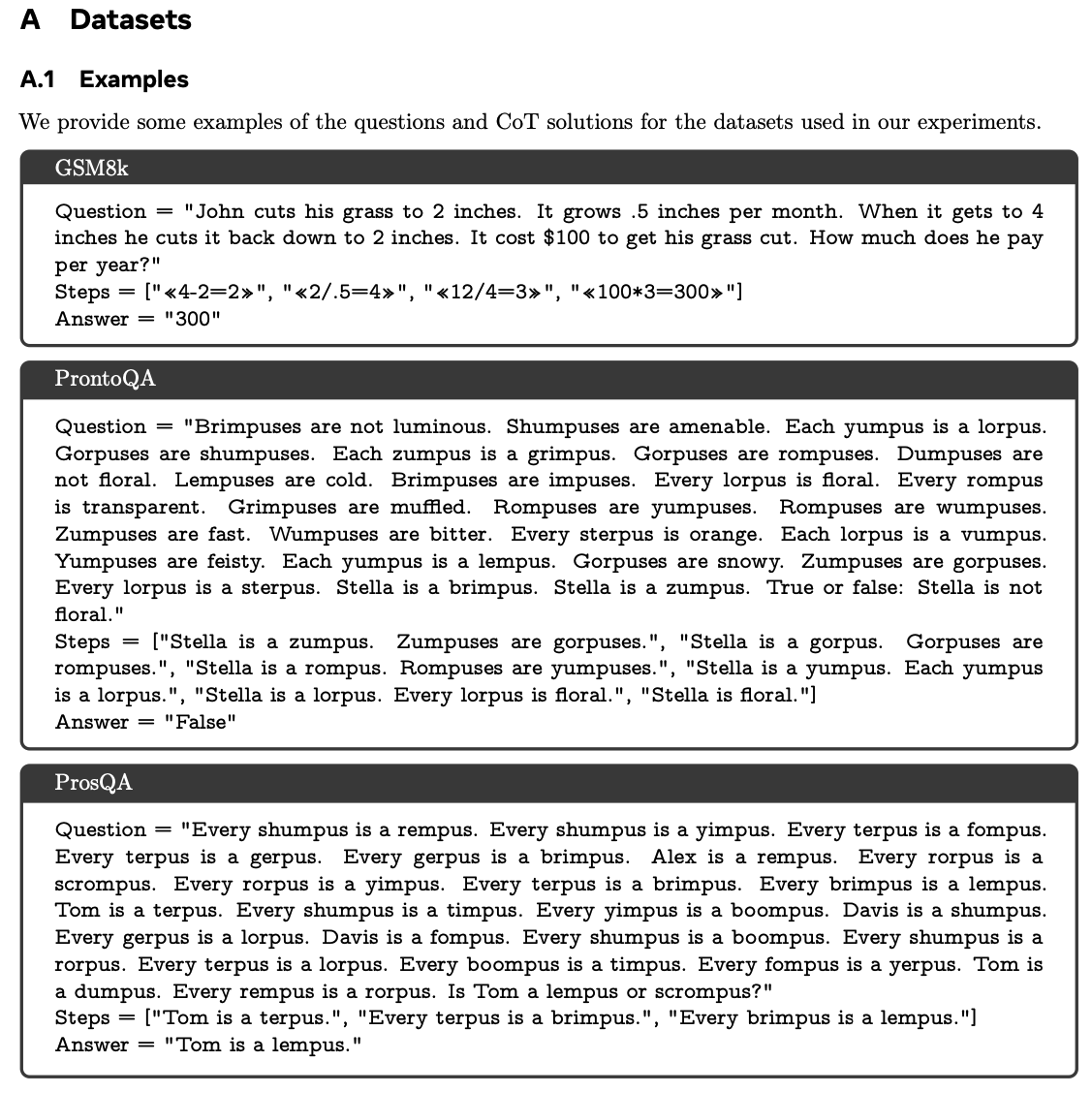

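Three datasets are used / accuracy is computed by comparison with the ground truth / the number of additional tokens needed to reach the answer is reported

Datasets

- GSM8k: math reasoning

- ProntoQA: logical reasoning

  - A context with a tree structure is generated at random and given in natural language

- ProsQA: logical reasoning

  - ProntoQA is too easy to be a suitable test of reasoning ability

  - A context with a DAG structure is generated at random and given in natural language

  - Each problem is structured as a binary question: "Is [Entity] a [Concept A] or [Concept B]?"

  - The graph is constructed such that a path exists from [Entity] to [Concept A] but not to [Concept B].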

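Experimental Setup

base: pre-trained GPT-2

Math reasoning: c = 2. Up to stage 3, one language reasoning step is removed per stage; stage 4 keeps the number of continuous thought tokens unchanged and removes all remaining language reasoning tokens. 3 epochs / stage → robust to the long tail of long reasoning chains

Logical reasoning: c = 1. In both datasets the maximum number of language reasoning steps is 6, so training uses 6 stages. 5 epochs / stage

Once there are no further stages, training continues in the final stage until epoch 50.

At inference, the number of continuous thought tokens matches the number used in the final training stage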
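The logical-reasoning schedule above can be illustrated with a small epoch-to-stage mapping. This is a sketch under assumptions: epochs are counted from 0, stage 0 is assumed to last as long as every other stage, and both function names are made up for this note.

```python
def stage_for_epoch(epoch, epochs_per_stage, max_stage):
    """Training stage used at a given 0-indexed epoch (capped at max_stage)."""
    return min(epoch // epochs_per_stage, max_stage)

def num_continuous_thoughts(stage, c):
    """Stage k replaces k reasoning steps, each with c continuous thoughts."""
    return stage * c

# Logical reasoning setting from the notes: c = 1, 6 stages, 5 epochs per
# stage, then stay in the final stage until epoch 50.
schedule = [stage_for_epoch(e, epochs_per_stage=5, max_stage=6) for e in range(50)]
inference_thoughts = num_continuous_thoughts(schedule[-1], c=1)
```

Under these assumptions the model reaches stage 6 at epoch 30 and stays there, and inference uses the same number of continuous thoughts as that final stage.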

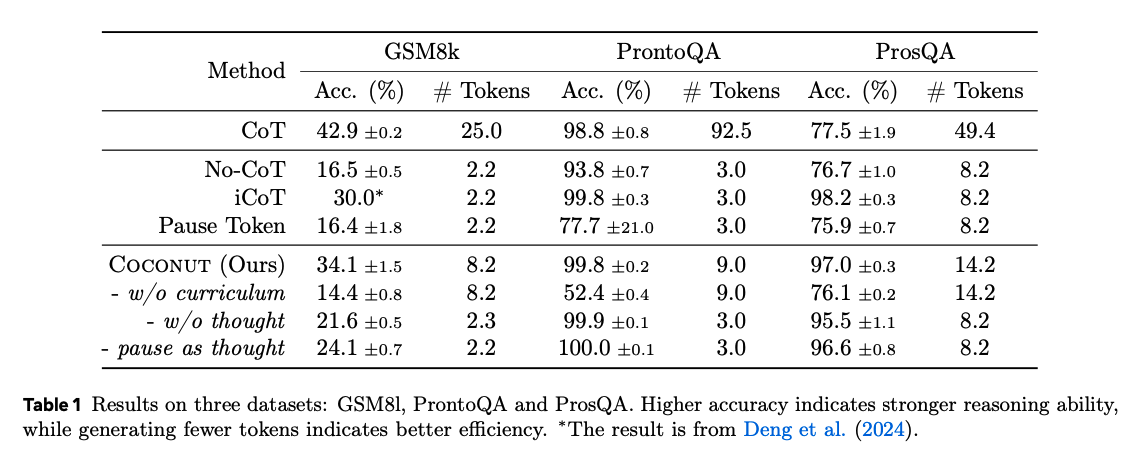

Results & Discussion

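Chaining continuous thoughts enhances reasoning

On GSM8k, COCONUT outperformed iCoT and far outperformed pause as thought. This suggests that COCONUT generalizes better (even though pause tokens are easier to parallelize).

Performance rose steadily as c went 0 → 1 → 2, which can be read as COCONUT benefiting from the same chaining effect as CoT

- Chaining tokens one after another raises performance as the amount of computation grows

On the logical reasoning datasets, COCONUT and iCoT both performed well, which shows that computation is not the bottleneck on these datasets

Latent reasoning outperforms language reasoning in planning-intensive tasks

Complex reasoning requires judging each step from a longer-term perspective, and the complex DAGs of ProsQA demand planning ability. CoT brings almost no improvement, whereas COCONUT and iCoT show substantial gains

The LLM still needs guidance to learn latent reasoning

If the model is trained by removing all language reasoning tokens at once (no-curriculum) rather than reducing them stage by stage, it performs about the same as no-CoT (the continuous thoughts contribute nothing)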

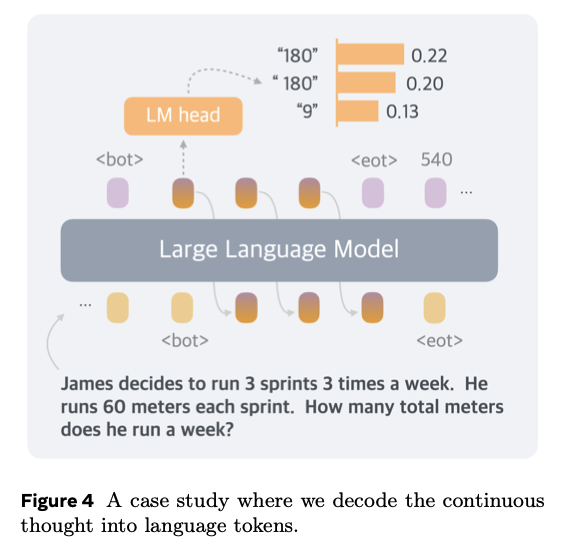

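**Continuous thoughts are efficient representations of reasoning**

Passing the first latent token through the LM head to decode it into language tokens gives a distribution with high probability on 180 and 9. These are the intermediate results of the two solution paths (3×3×60 = 9×60 = 540, or 3×3×60 = 3×180 = 540). It also confirms that several reasoning directions are superposed in one thought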

Understanding the Latent Reasoning in Coconut

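This section analyzes the latent reasoning process. To do so, it uses a COCONUT variant that can move more freely between language mode and latent mode, which differs from standard COCONUT as follows.

Standard COCONUT

- The final training stage uses the fixed maximum number of continuous thought tokens

- At inference, the model thinks for that fixed maximum number of continuous thought tokens and then switches to language mode

COCONUT for latent reasoning analysis

- At every training stage, a fraction 0.3 of the training data is replaced with data from other stages → the model does not forget earlier stages

- At inference, the model thinks for k tokens and then switches to language mode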
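The stage mixing used by the analysis variant can be sketched as a per-example stage sampler. This is an illustration only: the notes give the 0.3 fraction but not how the other stage is picked, so the uniform choice below is an assumption, and `sample_stage` is a hypothetical name.

```python
import random

def sample_stage(current_stage, max_stage, rng, p_mix=0.3):
    """Pick the stage used to build one training example.

    With probability p_mix the example is built as if it belonged to a
    different stage (chosen uniformly here, which is an assumption);
    otherwise the current stage is used.
    """
    others = [s for s in range(max_stage + 1) if s != current_stage]
    if others and rng.random() < p_mix:
        return rng.choice(others)
    return current_stage

rng = random.Random(0)  # fixed seed so the sketch is reproducible
draws = [sample_stage(3, 6, rng) for _ in range(10_000)]
mixed_fraction = sum(s != 3 for s in draws) / len(draws)
```

Because examples from stage 0 (full language reasoning) keep appearing at every stage, a model trained this way can still be switched to language mode after any number k of latent thoughts.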

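With the analysis variant, fully latent mode, fully language mode, and the settings in between can be compared (on accuracy and other measures).

These results are used to show that the latent reasoning process resembles tree search, and to analyze why latent reasoning helps the LLM's decisions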

Experimental Setup

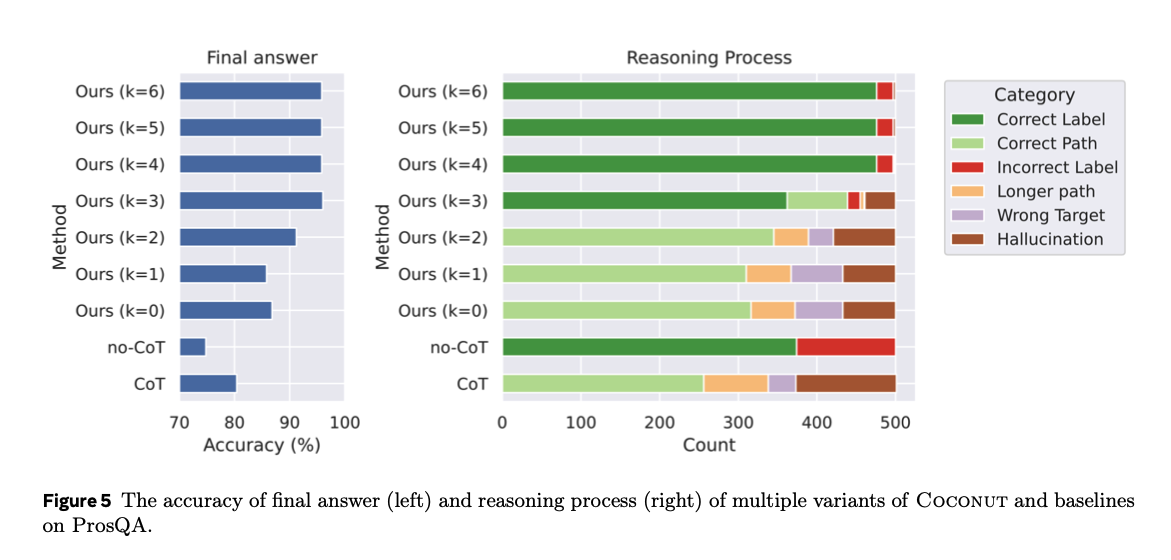

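The COCONUT variant (k in {0, 1, 2, 3, 4, 5, 6}) is evaluated on ProsQA.

Metrics:

- Accuracy: whether the final answer is correct

- Reasoning process:

  - ProsQA is built from DAGs, so the language reasoning that the model outputs is also a path in the graph

  - Language reasoning falls into one of the following six mutually exclusive categories

    - Correct Path: the answer is correct and the path is a shortest path

    - Longer Path: the answer is correct, but a shorter path exists

    - Hallucination: the path uses an edge that does not exist in the graph, or is disconnected

    - Wrong Target: the path is valid, but the answer is wrong

    - Correct Label: the answer is correct with no reasoning tokens (no-CoT or large k)

    - Incorrect Label: the answer is wrong with no reasoning tokens (no-CoT or large k)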
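The six categories above can be made concrete with a small classifier over a toy graph. This is a sketch of one plausible reading of the definitions, not the paper's evaluation code: the graph, the node names, and the function names are invented for illustration, and when a reasoning path is present the category is decided from the path alone.

```python
from collections import deque

def shortest_path_length(graph, start, goal):
    """Number of edges on a shortest path from start to goal, or None."""
    queue, seen = deque([(start, 0)]), {start}
    while queue:
        node, dist = queue.popleft()
        if node == goal:
            return dist
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None

def classify(graph, root, target, path_edges, answer):
    """path_edges: list of (parent, child) statements, or None if the model
    produced no language reasoning (no-CoT or large k)."""
    if not path_edges:
        return "Correct Label" if answer == target else "Incorrect Label"
    node = root
    for parent, child in path_edges:
        # A statement must continue from the current node along a real edge.
        if parent != node or child not in graph.get(parent, ()):
            return "Hallucination"
        node = child
    if node != target:
        return "Wrong Target"
    if len(path_edges) == shortest_path_length(graph, root, target):
        return "Correct Path"
    return "Longer Path"

# Toy DAG (hypothetical): two routes from "Alex" to "target", one distractor.
graph = {"Alex": ["a", "b"], "a": ["c", "other"], "b": ["target"], "c": ["target"]}
label = classify(graph, "Alex", "target", [("Alex", "b"), ("b", "target")], "target")
```

Here `label` is "Correct Path" because the two-edge route through `b` is a shortest path; the three-edge route through `a` and `c` would be classified as "Longer Path".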

Interpolating between Latent and Language Reasoning

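- As k grows, accuracy and the share of correct reasoning (Correct Path, Correct Label) go up, while Hallucination and Wrong Target go down

- Comparing k=0 with CoT: both run fully in language mode, yet k=0 COCONUT has higher accuracy, a higher Correct Path rate, and a lower Hallucination rate (Wrong Target looks higher, though)

- This comes from mixing in training data from other stages: later-stage data omits the early part of the reasoning, which improves the model's planning ability. In CoT training, by contrast, the model is always trained to predict the immediately following token, which makes it shortsighted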

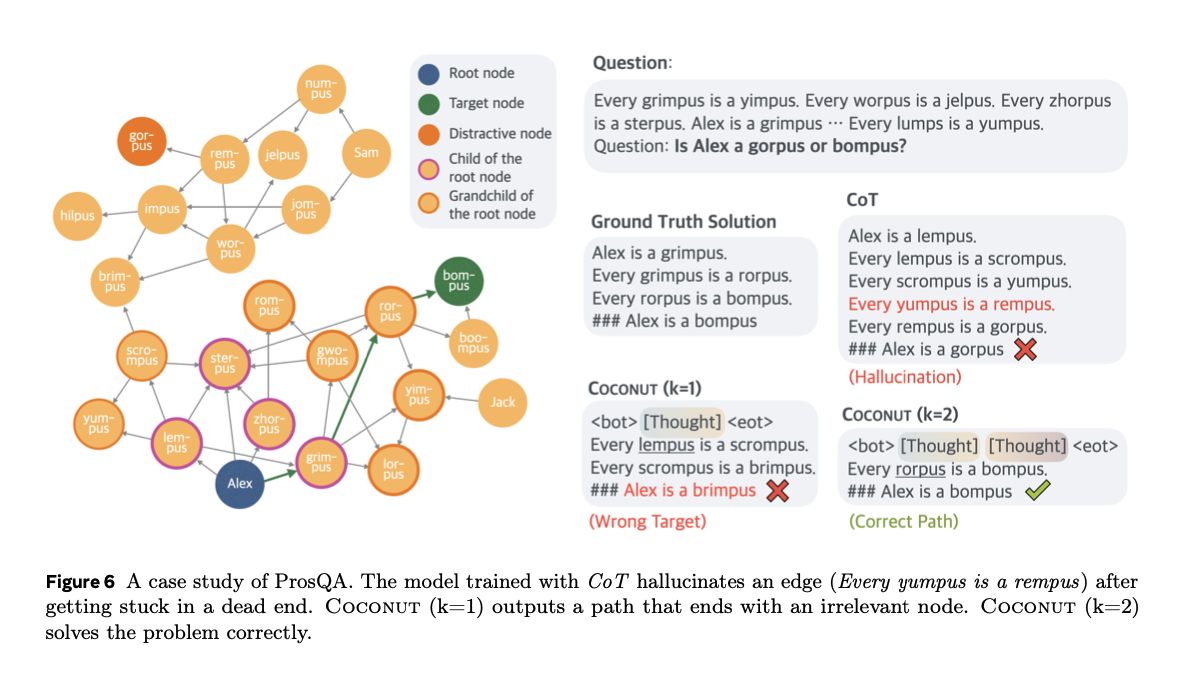

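- In this example, CoT falls into Hallucination and k=1 COCONUT into Wrong Target, while k=2 COCONUT produces the Correct Path

- This shows that the model has trouble deciding which edge to take at the early latent thought tokens

- Unlike language mode, which must commit to one language token at every step, latent mode can defer the decision until the end of the reasoning. As latent reasoning proceeds, wrong options are filtered out progressively and accuracy rises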

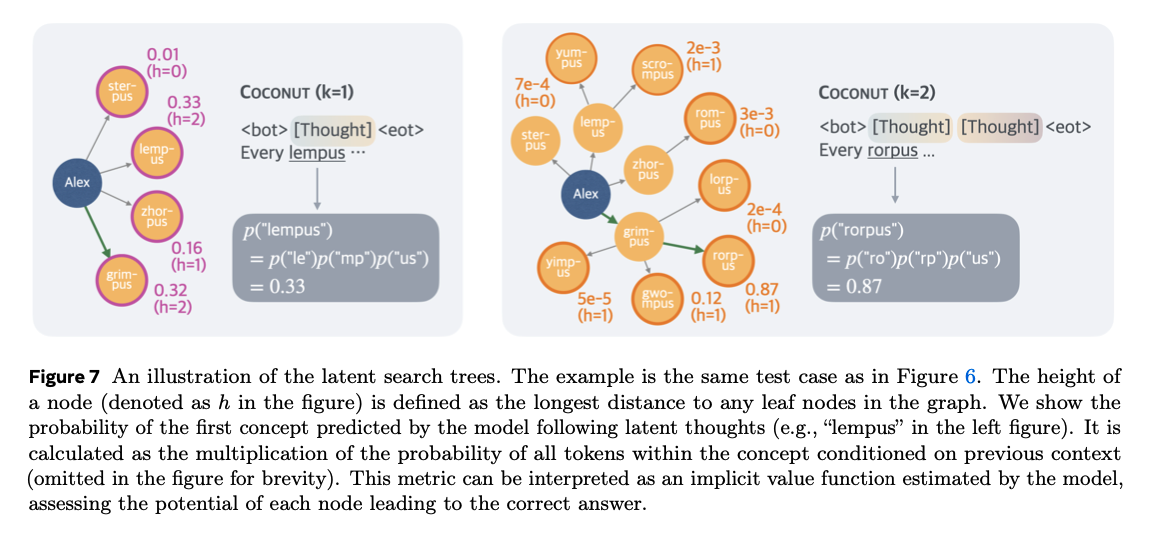

Interpreting the Latent Search Tree

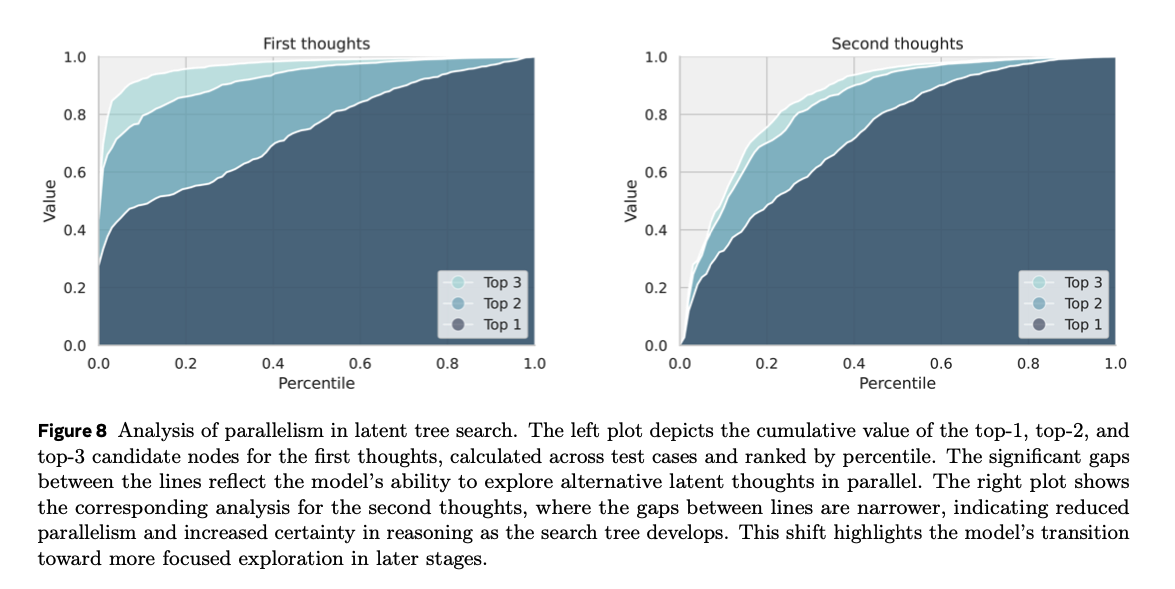

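- Because a latent thought token encodes several candidates for the next step in superposition, it can be interpreted as a search tree.

- Unlike BFS, which treats every frontier node equally, the model is able to prioritize more valuable nodes.

  - frontier node: an unvisited node directly connected to a node visited in the current traversal

  - In Figure 6, the first step chooses among Alex's child nodes, and the frontier nodes of the second step are the grandchild nodes.

- In this task, switching the model to language mode after k tokens makes it output a run of templated sentences of the form "Every [Concept A] is a [Concept B]". Computing how likely Concept A is to appear shows how much weight the model puts on that node

  - Taking the left side of Figure 7 as an example: after "Every" has been output in language mode, "Every" goes through the embedding layer, the Transformer, the LM head, and softmax, which gives a probability distribution over language tokens. At this point the value of "lempus" can be evaluated as the product of the probabilities of its tokens ("le", "mp", "us")

    - A proper probability would have to be p("le") · p("mp" | "le") · p("us" | "le", "mp"), which seems impossible to compute without running a forward pass for each

    - The value probably does not satisfy the definition of a probability

  - Likewise, the right side of Figure 7 evaluates the values of the grandchild nodes. On the left, the model has ruled out "sterpus" but is still undecided among the remaining nodes. On the right, after one more latent thought token, it concentrates on rorphus (value = 0.87).

- The more latent reasoning steps the model takes, the more the top-1 value dominates top-2 and top-3, which shows that the model gradually focuses on the node with the highest value.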
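The node value discussed above, a product of token probabilities, can be sketched as follows. The numbers are invented for illustration and are not from the paper; `concept_value` and `rank_frontier` are hypothetical names. One hedged remark on the conditionals: in a causal language model, appending a candidate's tokens after "Every" and running a single forward pass yields the distribution at every position, so p("le"), p("mp" | "le"), and p("us" | "le", "mp") can be read off together, at the cost of one pass per candidate concept.

```python
import math

def concept_value(token_probs):
    """Chain-rule product p(t1) * p(t2 | t1) * ... computed in log space."""
    return math.exp(sum(math.log(p) for p in token_probs))

def rank_frontier(candidates):
    """candidates: {concept: [conditional probability of each of its tokens]}.

    Returns (concept, value) pairs sorted from most to least valued.
    """
    values = {name: concept_value(probs) for name, probs in candidates.items()}
    return sorted(values.items(), key=lambda kv: kv[1], reverse=True)

# Made-up conditional probabilities for two frontier nodes after "Every".
candidates = {
    "lempus": [0.40, 0.90, 0.95],   # tokens "le", "mp", "us"
    "sterpus": [0.02, 0.80, 0.90],  # token split not specified, probs only
}
ranked = rank_frontier(candidates)
```

The values of the listed candidates need not sum to 1, since probability mass also goes to tokens that start no candidate at all; they are best read as relative weights over the frontier.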

Why is a Latent Space Better for Planning?

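The fact that latent reasoning behaves like a search tree suggests a hypothesis for why it improves the model's planning ability.

In the Figure 6 example, what separates "sterpus", which received a low value (Figure 7, left), from the other three nodes is its height.

"sterpus" is a leaf node, so it is immediately clear that it cannot reach the target. The other nodes are higher and still have children left to visit, which makes them harder to evaluate.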

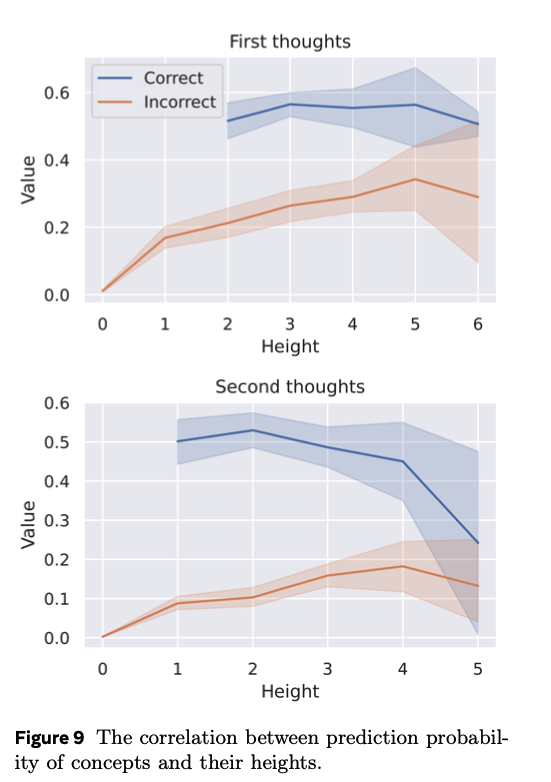

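💡 Hypothesis: nodes with lower heights are easier to evaluate accurately

Figure 9 shows a pattern consistent with this hypothesis. The larger the gap between the values of incorrect and correct nodes, the better the model is evaluating them, and the gap between the two curves is larger for nodes with lower height.

In conclusion, the longer the commitment to a path is deferred, the further the search extends toward terminal states, and the better the model gets at telling correct nodes from incorrect ones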

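Conclusion

COCONUT, which reasons in a continuous latent space, improves the reasoning performance of LLMs. Its latent thoughts also show a structure similar to a search tree.

- CoT collapses the probability distribution into a single language token at every step → discrete

TODO

- Efficiency improvements

- Using COCONUT in pretraining as well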