In the previous post, we covered chapters 1 and 2: service meshes and first steps with Istio. In this post, we look at Envoy Proxy, the core component of Istio's data plane, and then at Istio gateways.

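Chapter 3. Istio’s data plane: Envoy Proxy

1) What is Envoy Proxy?

Envoy is a high-performance L7 proxy originally developed at Lyft. It is widely used in microservice and service-oriented architectures (SOA) to relay traffic between services, and it is designed to make that traffic observable.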

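Key characteristics

- Relays traffic between services regardless of the language or framework they are written in

- High-performance, non-blocking architecture

- Built-in distributed tracing, logging, and monitoring

- Serves as the data plane of service meshes such as Istio

Core building blocks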

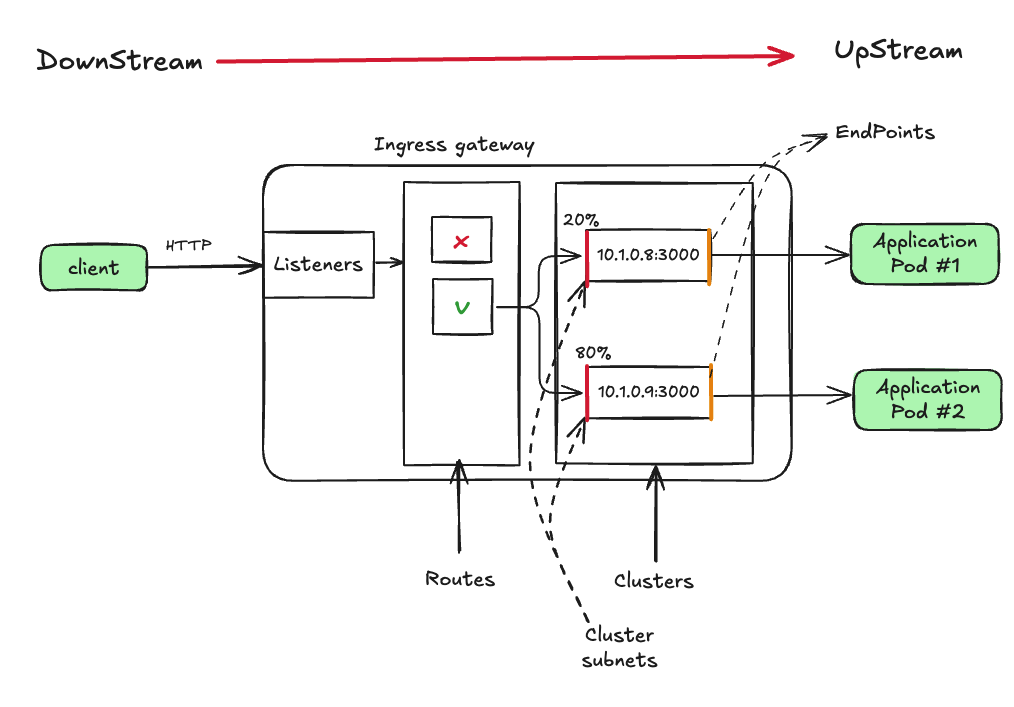

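[Listeners]

- Expose a port on which Envoy accepts traffic from outside (downstream clients)

- Example: a listener on port 80 receives traffic and processes it according to its rules

[Routes]

- Routing rules that decide where traffic received by a listener should go

- Example: when the URL path is /catalog, the traffic is forwarded to the catalog cluster

[Clusters]

- A set of upstream endpoints (service instances) to which Envoy forwards traffic

- Example: v1 and v2 of the catalog service can be managed as separate clusters

Envoy traffic flow: downstream → listener → route → cluster → upstream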
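The flow above maps directly onto Envoy's configuration. Below is a minimal sketch of a static configuration (v3 API) that follows the /catalog example. A listener accepts requests, a route sends paths starting with /catalog to a cluster, and the cluster lists the upstream endpoint. The listener port 10000, the names main_listener, local_route, catalog_host, and catalog, and the upstream address catalog:8080 are hypothetical values chosen for illustration.

```yaml
static_resources:
  listeners:
  - name: main_listener                  # Listener (hypothetical name): accepts downstream connections
    address:
      socket_address: { address: 0.0.0.0, port_value: 10000 }   # hypothetical port
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          http_filters:
          - name: envoy.filters.http.router
            typed_config:
              "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router
          route_config:                  # Routes: decide where each request goes
            name: local_route
            virtual_hosts:
            - name: catalog_host
              domains: ["*"]
              routes:
              - match: { prefix: "/catalog" }
                route: { cluster: catalog }
  clusters:
  - name: catalog                        # Cluster (hypothetical): the upstream endpoints
    connect_timeout: 1s
    type: STRICT_DNS
    load_assignment:
      cluster_name: catalog
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: catalog, port_value: 8080 }   # hypothetical upstream
```

Requests whose path does not start with /catalog match no route, so Envoy answers them itself with a 404.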

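Key features

[Service Discovery]

- Discovers service endpoints automatically, without a runtime-specific client library

- Can consume higher-level discovery APIs provided by a control plane such as Istio

- Assumes eventual consistency and combines active and passive health checking to form a best-effort view of which endpoints are healthy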
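Active health checking is configured per cluster with health_checks. The sketch below assumes a hypothetical cluster named catalog whose upstream exposes a hypothetical /health endpoint. Envoy probes each endpoint every 10 seconds and marks it unhealthy after three consecutive failures. Passive health checking corresponds to outlier detection, which is listed under Network Resilience.

```yaml
clusters:
- name: catalog                   # hypothetical cluster
  connect_timeout: 1s
  type: STRICT_DNS
  load_assignment:
    cluster_name: catalog
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            socket_address: { address: catalog, port_value: 8080 }   # hypothetical upstream
  health_checks:
  - timeout: 1s                   # each probe must answer within 1 second
    interval: 10s                 # probe every endpoint every 10 seconds
    unhealthy_threshold: 3        # 3 consecutive failures -> endpoint marked unhealthy
    healthy_threshold: 2          # 2 consecutive successes -> healthy again
    http_health_check:
      path: /health               # hypothetical health endpoint
```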

[Load Balancing]

- Zone-aware (locality) load balancing, which prefers endpoints in the local zone

- Supported algorithms:
  - Random
  - Weighted round robin
  - Weighted least request
  - Consistent hashing (ring hash, Maglev)
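The algorithm is selected per cluster with the lb_policy field. Here is a minimal sketch that assumes a hypothetical cluster named catalog:

```yaml
clusters:
- name: catalog                   # hypothetical cluster
  connect_timeout: 1s
  type: STRICT_DNS
  # ROUND_ROBIN is the default; RANDOM, RING_HASH and MAGLEV are other options
  lb_policy: LEAST_REQUEST
  load_assignment:
    cluster_name: catalog
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            socket_address: { address: catalog, port_value: 8080 }   # hypothetical upstream
```

With RING_HASH or MAGLEV, the route also needs a hash_policy (for example, hashing on a request header) so that Envoy knows which request attribute to hash.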

[Traffic and Request Routing]

- Rich routing rules for HTTP/1.1 and HTTP/2

- Virtual hosts, context-path routing, header-based routing, and priority routing

- Retries, timeouts, and fault injection can be applied as part of routing
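The following sketch shows header-based routing inside a route configuration. Routes are evaluated in order and the first match wins. Requests that carry a hypothetical x-canary header go to catalog_v2, and everything else falls through to catalog_v1. The header name and cluster names are assumptions for illustration.

```yaml
virtual_hosts:
- name: catalog                   # hypothetical virtual host
  domains: ["*"]
  routes:
  - match:
      prefix: "/"
      headers:
      - name: x-canary            # hypothetical header
        present_match: true       # matches when the header exists, whatever its value
    route: { cluster: catalog_v2 }
  - match: { prefix: "/" }        # fallback for all other requests
    route: { cluster: catalog_v1 }
```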

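[Traffic Shifting and Shadowing]

- Percentage-based traffic splitting (for canary releases)

- Shadowing: live traffic is copied and the copy is sent to an upstream cluster, so a new version can be tested without affecting customers (responses to the copies are discarded)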
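This sketch shows both features on a single route, using hypothetical clusters catalog_v1, catalog_v2, and catalog_v3. weighted_clusters splits traffic 90/10 for a canary, and request_mirror_policies sends a copy of every request to catalog_v3. The weights add up to 100, which is the default total weight in Envoy v1.19, the version used in the exercises.

```yaml
routes:
- match: { prefix: "/" }
  route:
    weighted_clusters:
      clusters:
      - name: catalog_v1          # hypothetical cluster: 90% of requests
        weight: 90
      - name: catalog_v2          # hypothetical cluster: 10% canary traffic
        weight: 10
    request_mirror_policies:
    - cluster: catalog_v3         # hypothetical cluster: receives a copy of every request
```

Envoy does not wait for the shadow cluster's response; it discards it. It also appends -shadow to the Host/Authority header of the copy, so the upstream can tell shadowed requests apart.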

[Network Resilience]

- Request timeouts, retries, connection limits, and fail-fast behavior can be configured

- Outlier detection and circuit breaking keep failures from cascading
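Both mechanisms are configured per cluster. The sketch below applies hypothetical limits to a hypothetical catalog cluster. circuit_breakers caps connections and requests, and outlier_detection ejects hosts that keep returning 5xx responses.

```yaml
clusters:
- name: catalog                   # hypothetical cluster
  connect_timeout: 1s
  type: STRICT_DNS
  circuit_breakers:
    thresholds:
    - max_connections: 100        # cap on upstream connections (hypothetical value)
      max_pending_requests: 10    # requests allowed to wait for a connection
      max_requests: 100           # cap on concurrent requests to the cluster
  outlier_detection:
    consecutive_5xx: 5            # eject a host after 5 consecutive 5xx responses
    interval: 10s                 # how often the ejection analysis runs
    base_ejection_time: 30s       # ejection time grows with repeated ejections
    max_ejection_percent: 50      # never eject more than half of the hosts
  load_assignment:
    cluster_name: catalog
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            socket_address: { address: catalog, port_value: 8080 }   # hypothetical upstream
```

When a circuit-breaker threshold is exceeded, Envoy fails the request immediately with a 503 instead of queuing it. This is the fail-fast behavior mentioned above.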

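[HTTP/2 and gRPC Support]

- Proxies between HTTP/1.1 and HTTP/2 in either direction

- First-class gRPC support (gRPC runs over HTTP/2 and uses Protocol Buffers)

[Metrics-based Observability]

- Rich metrics (counters, gauges, histograms) for downstream, server, and upstream traffic

- Exports metrics to sinks such as StatsD, Datadog, and Hystrix

[Distributed Tracing]

- Automatically reports trace spans to OpenTracing-compatible systems

- Makes traffic flow, latency, and call graphs visible

- Applications still need to propagate certain headers (such as x-b3-*) so that the spans of a single request can be stitched together

[Automatic TLS Termination and Origination]

- Terminates incoming TLS and originates TLS to upstream services

- Removes the need for applications to manage TLS configuration (mTLS can be handled automatically)

[Rate Limiting]

- Integrated rate limiting at both the network (L4) and HTTP levels

- Useful for protecting resources such as databases and caches

[Extensibility]

- Envoy can be extended with protocol-processing filters written in C++

- Lua and WebAssembly (Wasm) allow less invasive extensions

Advantages of Envoy

- Powerful extensibility through WebAssembly

- An open and active community

- A modular codebase designed for maintainability and extensibility

- HTTP/2 support on both the upstream and downstream sides

- Deep, protocol-level metrics

- Written in C++, so there are no garbage-collection pauses

- Dynamic configuration removes the need for hot restarts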

Configuring Envoy

Envoy is driven by a configuration file in JSON or YAML format.

There are two broad ways to configure it: static configuration and dynamic configuration.

Static Configuration

A static configuration is the file Envoy reads at startup. It usually contains the basic settings, such as listeners, routes, clusters, and timeouts.

Key characteristics

- The configuration is loaded once at startup. Changing it later requires a restart (or a hot restart).

- Suitable for simple environments or ones where the configuration rarely changes

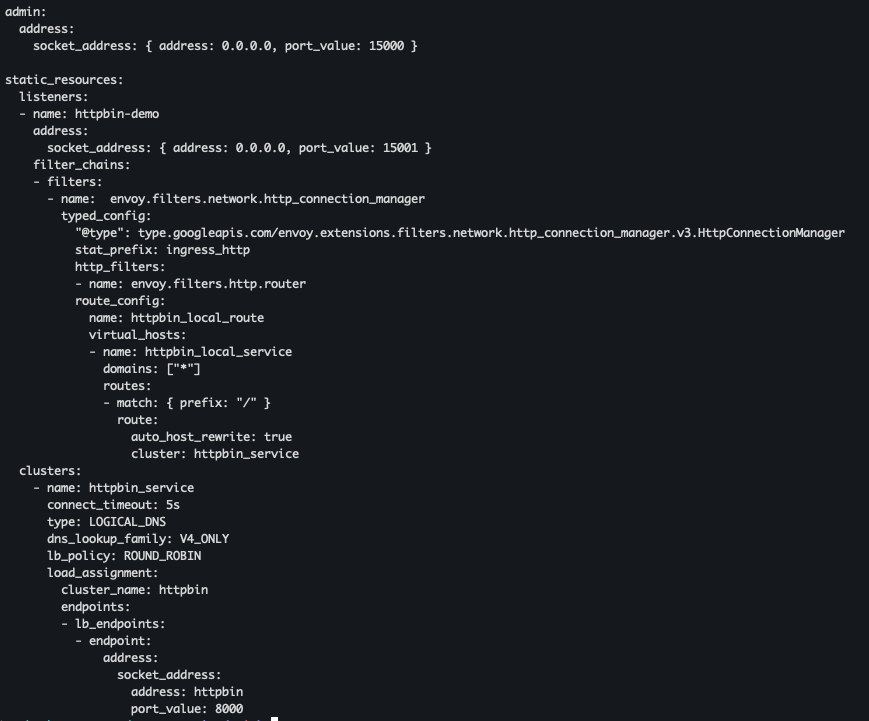

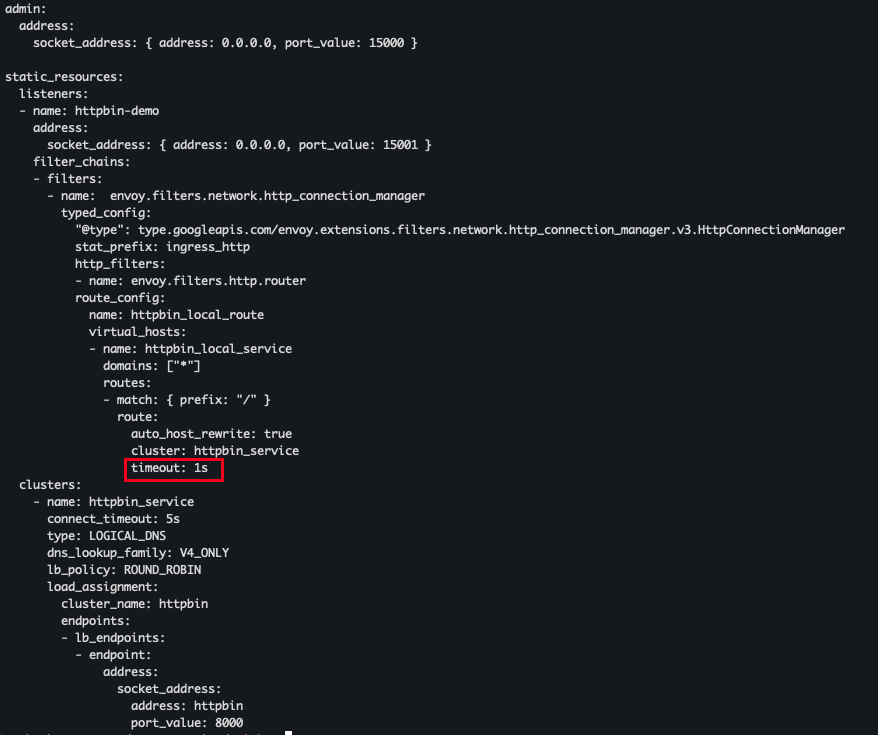

Example configuration

static_resources:
  listeners:
  - name: httpbin-demo
    address:
      socket_address: { address: 0.0.0.0, port_value: 15001 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          http_filters:
          - name: envoy.filters.http.router
          route_config:
            name: httpbin_local_route
            virtual_hosts:
            - name: httpbin_local_service
              domains: ["*"]
              routes:
              - match: { prefix: "/" }
                route:
                  auto_host_rewrite: true
                  cluster: httpbin_service
  clusters:
  - name: httpbin_service
    connect_timeout: 5s
    type: LOGICAL_DNS
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: httpbin
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: httpbin
                port_value: 8000

Dynamic Configuration

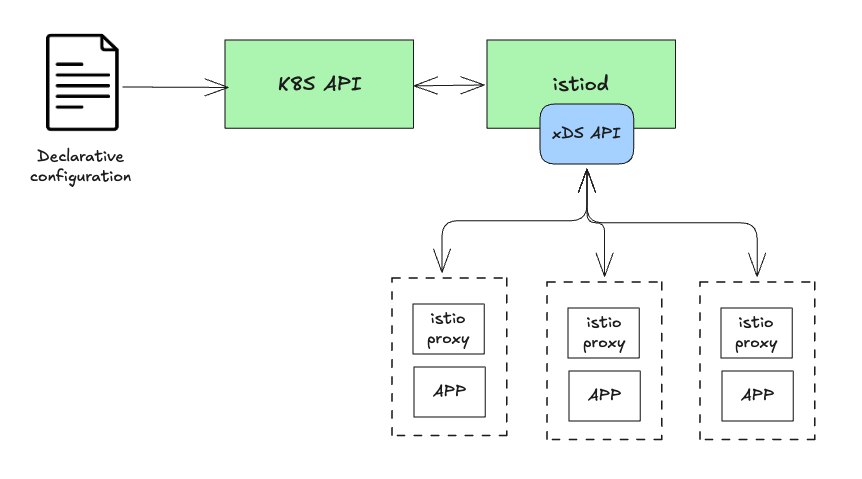

With dynamic configuration, Envoy can update its configuration at runtime without restarting.

Envoy fetches this configuration dynamically through the xDS (discovery service) APIs.

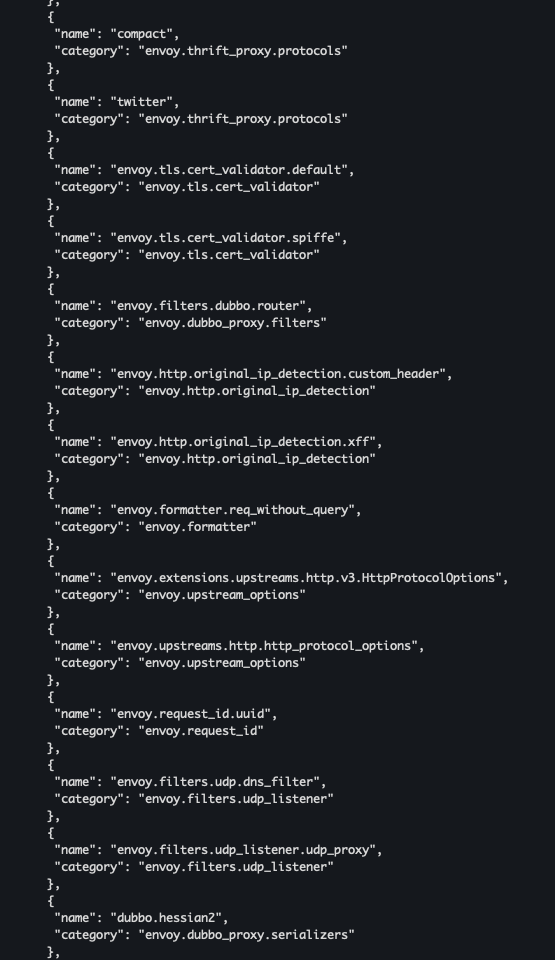

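Envoy's main xDS APIs

- Listener Discovery Service (LDS): dynamically configures which listeners Envoy exposes

- Route Discovery Service (RDS): dynamically configures routing rules

- Cluster Discovery Service (CDS): dynamically manages cluster definitions

- Endpoint Discovery Service (EDS): dynamically manages the endpoints of each cluster

- Secret Discovery Service (SDS): dynamically distributes secrets such as certificates

- Aggregated Discovery Service (ADS): delivers all of the above over a single stream, so updates can be sequenced and race conditions between them are avoided

Example configuration

- This configuration does not define any listeners explicitly. Envoy fetches them at runtime through the xDS API (LDS).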

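# Envoy also needs a node id and cluster, set via the bootstrap node field or the --service-node/--service-cluster flags
dynamic_resources:
  lds_config:
    resource_api_version: V3
    api_config_source:
      api_type: GRPC
      transport_api_version: V3
      grpc_services:
      - envoy_grpc:
          cluster_name: xds_cluster
static_resources:
  clusters:
  - name: xds_cluster
    connect_timeout: 0.25s
    type: STATIC
    lb_policy: ROUND_ROBIN
    http2_protocol_options: {}   # xDS over gRPC needs HTTP/2
    load_assignment:
      cluster_name: xds_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 127.0.0.3, port_value: 5678 }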

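Istio uses ADS so that its entire configuration is delivered consistently. The bootstrap excerpt below comes from an older Istio release: it still points at istio-pilot rather than istiod and uses v2-style fields. The ADS structure is the same, however: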

bootstrap:
  dynamicResources:
    ldsConfig:
      ads: {}
    cdsConfig:
      ads: {}
    adsConfig:
      apiType: GRPC
      grpcServices:
      - envoyGrpc:
          clusterName: xds-grpc
      refreshDelay: 1.000s
  staticResources:
    clusters:
    - name: xds-grpc
      type: STRICT_DNS
      connectTimeout: 10.000s
      hosts:
      - socketAddress:
          address: istio-pilot.istio-system
          portValue: 15010
      circuitBreakers:
        thresholds:
        - maxConnections: 100000
          maxPendingRequests: 100000
          maxRequests: 100000
      http2ProtocolOptions: {}

Because configuration changes take effect in real time and race conditions between updates are avoided, this approach suits large cloud-native environments.
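For comparison, here is a sketch of a bootstrap for the current (v3) API in which listeners and clusters arrive over a single ADS stream. The node id and cluster, the cluster name xds_cluster, and the management-server address 127.0.0.1:18000 are hypothetical. The bootstrap Istio actually generates is considerably more involved.

```yaml
node:
  id: envoy-demo-1                # hypothetical; the management server uses it to pick a config
  cluster: envoy-demo             # hypothetical
dynamic_resources:
  ads_config:
    api_type: GRPC
    transport_api_version: V3
    grpc_services:
    - envoy_grpc:
        cluster_name: xds_cluster
  lds_config:
    resource_api_version: V3
    ads: {}                       # listeners arrive over the ADS stream
  cds_config:
    resource_api_version: V3
    ads: {}                       # clusters arrive over the same stream
static_resources:
  clusters:
  - name: xds_cluster             # the management server itself must be defined statically
    connect_timeout: 1s
    type: STATIC
    http2_protocol_options: {}    # gRPC requires HTTP/2
    load_assignment:
      cluster_name: xds_cluster
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address: { address: 127.0.0.1, port_value: 18000 }   # hypothetical address
```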

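Comparison

| Approach | Pros | Cons | Best suited for |
|---|---|---|---|
| Static | Simple to set up and manage | Changes require a restart | Small environments that rarely change |
| Dynamic | Changes applied at runtime; highly flexible | More complex to set up and operate | Large microservice environments with frequent changes |

Choosing the approach that fits your environment and requirements lets you use Envoy effectively.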

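Why is Envoy a good fit for Istio?

(1) Envoy's role and what it needs

- Core role: Envoy is the core proxy of Istio's data plane and performs the key functions of the service mesh.

- Need for supporting components: Envoy cannot do everything on its own. It needs supporting infrastructure and components to reach its full potential.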

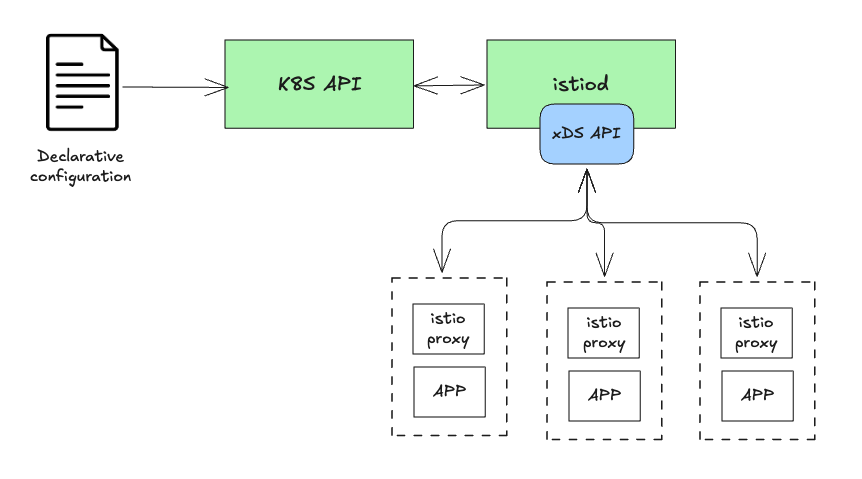

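(2) Integration with Istio's control plane (istiod)

- Dynamic configuration (xDS API): istiod implements the xDS APIs that Envoy consumes and uses them to manage Envoy's listeners, endpoints, clusters, and other settings dynamically.

- Service discovery: Envoy doesn't have to manage a service registry itself. Istio integrates with the Kubernetes service registry and abstracts it for Envoy.

(3) Telemetry and distributed tracing

- Metrics and telemetry: Envoy emits a large number of metrics, but a separate telemetry-collection system is needed to process them. Istio integrates with monitoring systems such as Prometheus.

- Distributed tracing: Istio connects Envoy's tracing data to OpenTracing engines such as Jaeger and Zipkin.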

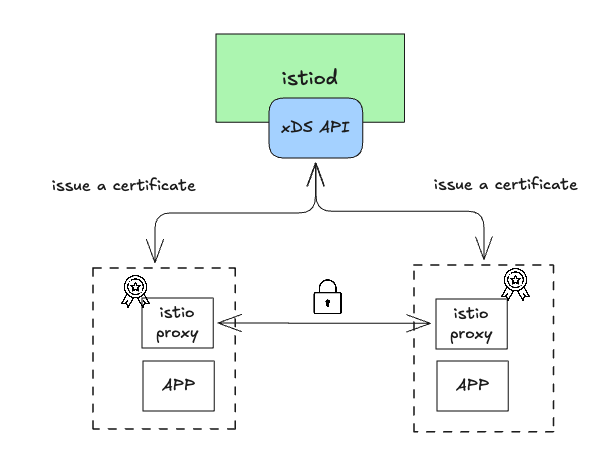

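(4) TLS and security management

- Envoy can handle TLS connections between services, but managing certificates (issuing, signing, rotating) requires supporting infrastructure.

- Istio provides that infrastructure through istiod.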

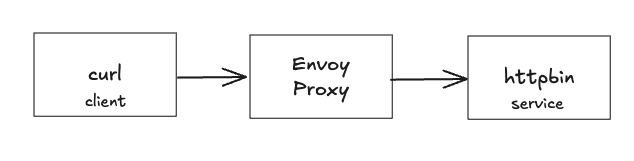

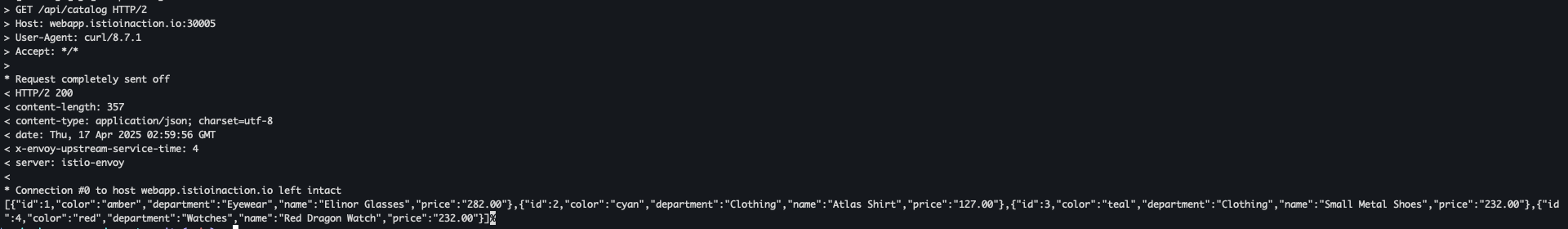

2) Hands-on with Envoy

Basic exercise 1

- Pull the Docker images

docker pull envoyproxy/envoy:v1.19.0
docker pull curlimages/curl
docker pull mccutchen/go-httpbin
# citizenstig/httpbin does not support ARM CPUs, so mccutchen/go-httpbin is used instead
# docker pull citizenstig/httpbin

- Check the images

docker images

- Run the httpbin service

docker run -d -e PORT=8000 --name httpbin mccutchen/go-httpbin
docker ps

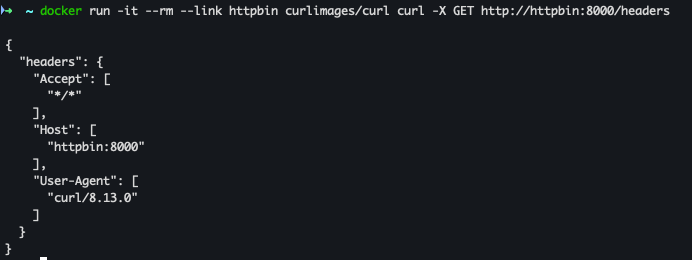

- Call httpbin from a curl container

docker run -it --rm --link httpbin curlimages/curl curl -X GET http://httpbin:8000/headers

- Run Envoy Proxy

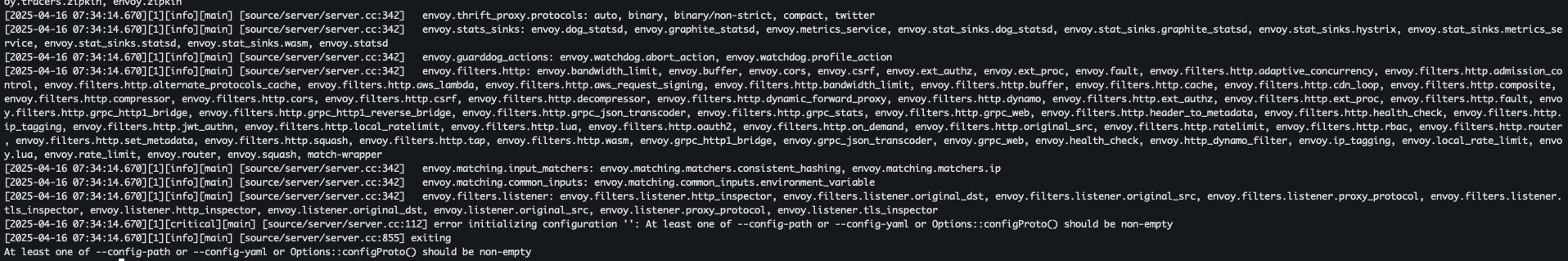

docker run -it --rm envoyproxy/envoy:v1.19.0 envoy

- Envoy exits with an error because no valid configuration was provided

- Inspect the Envoy configuration file

cat ch3/simple.yaml

- Start Envoy again, this time with the configuration

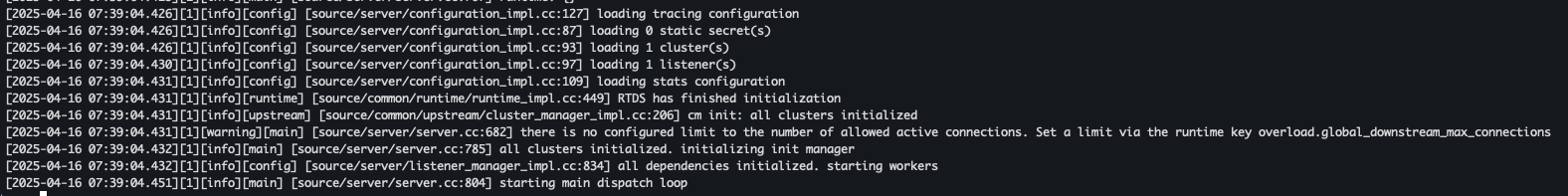

docker run --name proxy --link httpbin envoyproxy/envoy:v1.19.0 --config-yaml "$(cat ch3/simple.yaml)"

- Check the logs

docker logs proxy

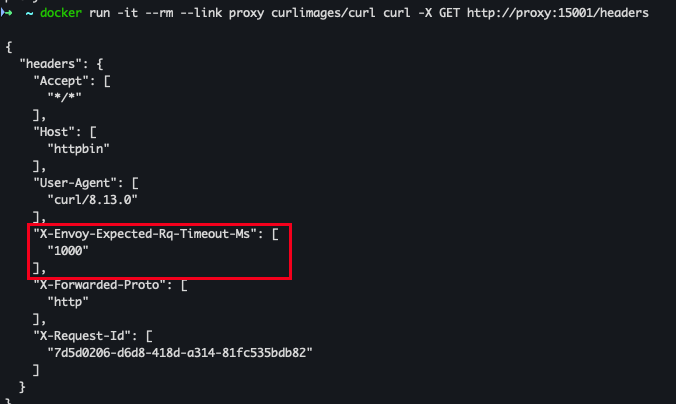

- Call the proxy with curl

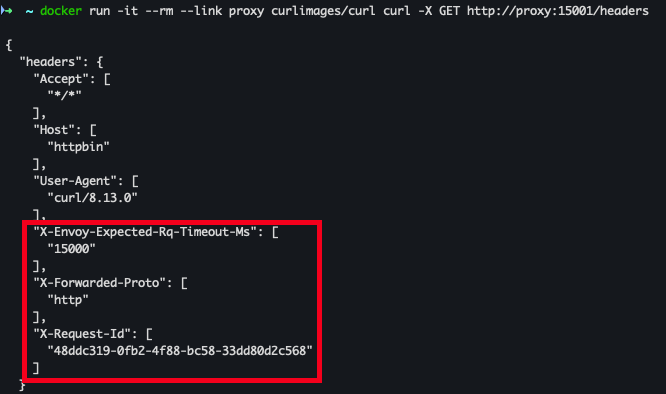

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15001/headers

- The request is forwarded to the httpbin service.

- Envoy adds the X-Envoy-Expected-Rq-Timeout-Ms, X-Forwarded-Proto, and X-Request-Id headers.

- Stop Envoy

docker rm -f proxy

Basic exercise 2

- Update the routing rule (timeout of 1s)

cat ch3/simple_change_timeout.yaml
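The file itself is not reproduced here. As a sketch, the change most likely amounts to a timeout on the route action of the httpbin route from the static example above. Envoy's default route timeout is 15 seconds.

```yaml
routes:
- match: { prefix: "/" }
  route:
    auto_host_rewrite: true
    cluster: httpbin_service
    timeout: 1s          # the whole upstream response must arrive within 1 second
```

If the upstream does not finish responding within the timeout, Envoy returns 504 Gateway Timeout with the body "upstream request timeout".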

- Run Envoy Proxy

docker run --name proxy --link httpbin envoyproxy/envoy:v1.19.0 --config-yaml "$(cat ch3/simple_change_timeout.yaml)"

- Verify the timeout setting (the X-Envoy-Expected-Rq-Timeout-Ms header should now be 1000)

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15001/headers

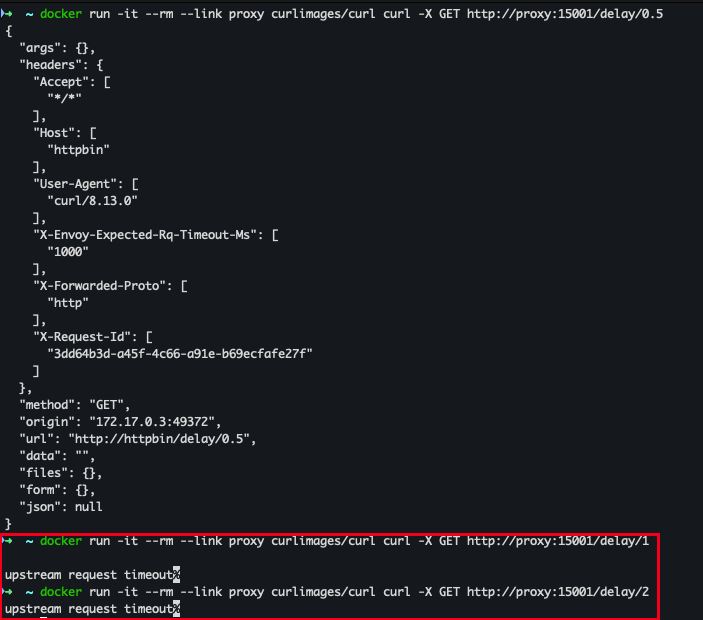

- Timeout test: requests that take longer than 1s time out

# Raise the http logger to debug level through the admin API (URL quoted so shells such as zsh don't treat ? as a glob)
docker run -it --rm --link proxy curlimages/curl curl -X POST "http://proxy:15000/logging?http=debug"
docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15001/delay/0.5
docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15001/delay/1
docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15001/delay/2

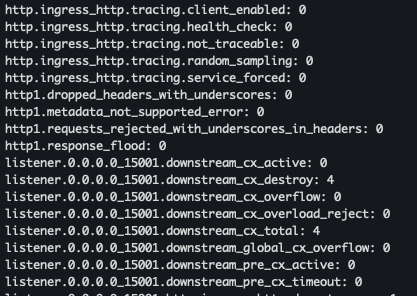

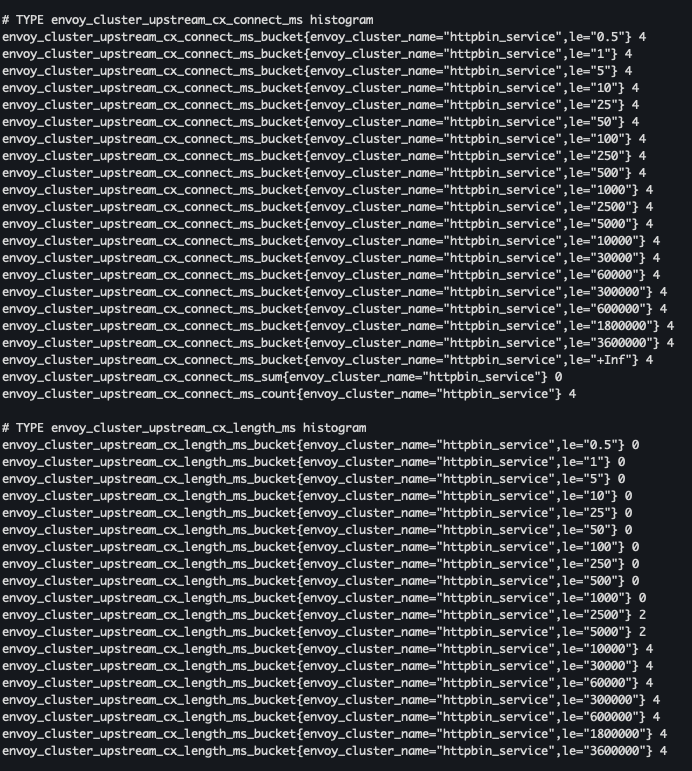

Envoy’s Admin API

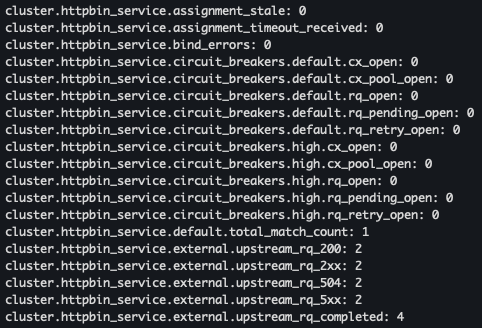

- Check Envoy's stats: the response contains statistics and metrics for listeners, clusters, and the server

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15000/stats
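The Admin API only exists if the bootstrap configuration defines an admin listener. Here is a sketch of such a block, assuming port 15000 as used in these exercises. The ch3 configuration files presumably contain something similar.

```yaml
admin:
  address:
    socket_address: { address: 0.0.0.0, port_value: 15000 }   # admin API port used in the exercises
```

Binding the admin interface to 0.0.0.0 is convenient in a local lab. However, it also exposes endpoints such as /logging and /quitquitquit, so in real deployments it is usually bound to 127.0.0.1.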

- Check the retry statistics

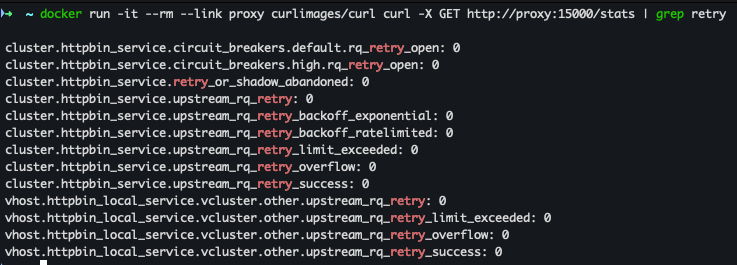

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15000/stats | grep retry

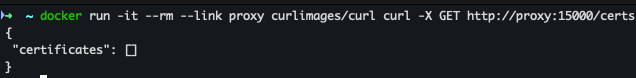

- Check the certificates

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15000/certs

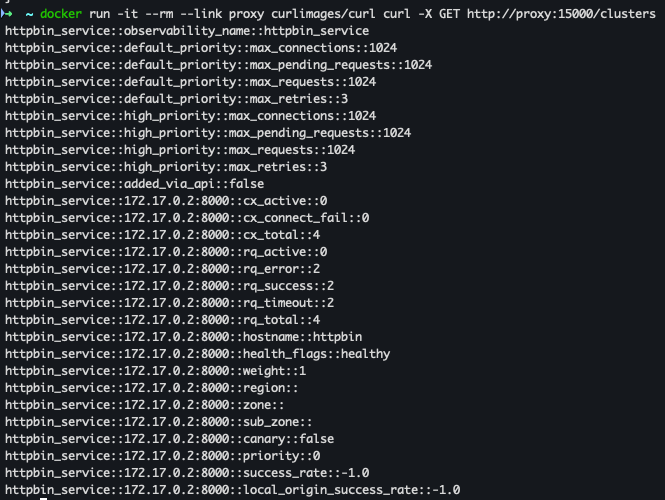

- List the clusters configured in Envoy

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15000/clusters

- Dump Envoy's configuration

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15000/config_dump

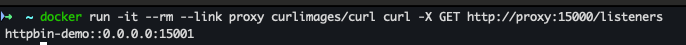

- List the listeners

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15000/listeners

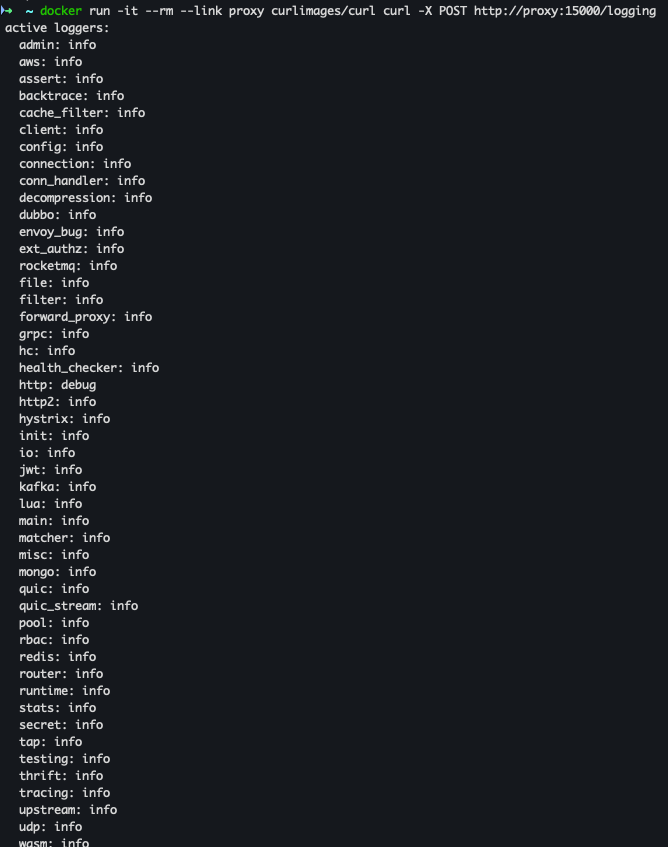

- Check the logging levels (a POST to /logging without parameters lists the active loggers and their levels)

docker run -it --rm --link proxy curlimages/curl curl -X POST http://proxy:15000/logging

- Envoy stats in Prometheus exposition format

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15000/stats/prometheus

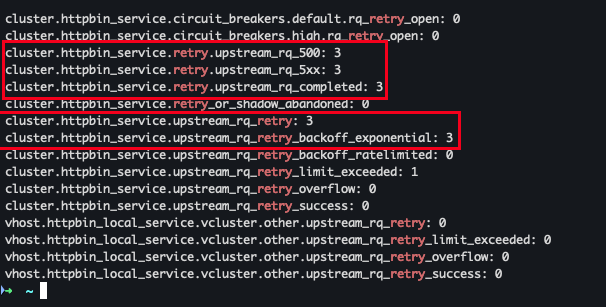

Retrying requests with Envoy

- Inspect the file containing the retry_policy

cat ch3/simple_retry.yaml
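The file is not reproduced here. As a sketch, a retry policy that matches the behavior observed below would look like this on the httpbin route:

```yaml
routes:
- match: { prefix: "/" }
  route:
    auto_host_rewrite: true
    cluster: httpbin_service
    retry_policy:
      retry_on: 5xx        # retry on 5xx responses, connection resets and connect failures
      num_retries: 3       # up to 3 retries after the original attempt
```

With num_retries: 3, a request that keeps failing is sent four times in total (the original attempt plus three retries). This is consistent with upstream_rq_retry reaching 3 in the statistics below.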

- Run the Envoy proxy

docker run -p 15000:15000 --name proxy --link httpbin envoyproxy/envoy:v1.19.0 --config-yaml "$(cat ch3/simple_retry.yaml)"

- Trigger a 500 error

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15001/status/500

- Check through the Admin API

docker run -it --rm --link proxy curlimages/curl curl -X GET http://proxy:15000/stats | grep retry

- The client got a 500 response, and cluster.httpbin_service.upstream_rq_retry shows that Envoy retried the request 3 times.

- Stop Envoy and httpbin before the next exercise

docker rm -f proxy && docker rm -f httpbin

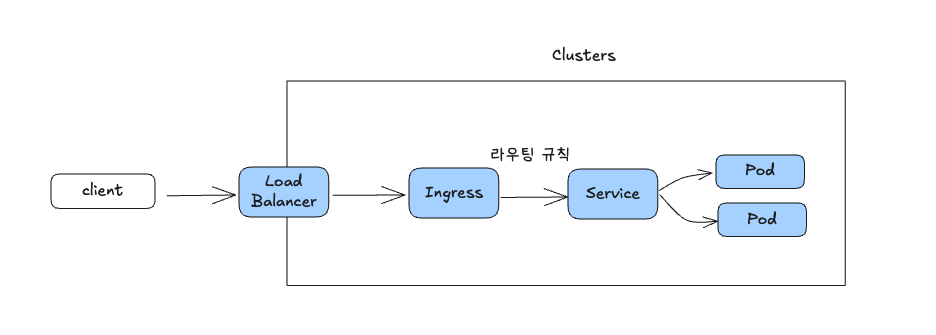

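Chapter 4. Istio gateways: Getting traffic into a cluster

1) Traffic ingress

Ingress

- Traffic that originates outside a network and is destined for an endpoint inside it

- Kubernetes provides the Ingress object as a resource for routing such external traffic to internal services

Ingress Points

- From a networking perspective, an ingress point is the entry point that first receives traffic headed into the network.

- All traffic coming from outside is first handled by this "gatekeeper."

- The ingress point applies rules and policies that decide which traffic is allowed into the local network.

- Allowed traffic is then proxied to the correct endpoint inside the network, and traffic that violates the policies is rejected.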

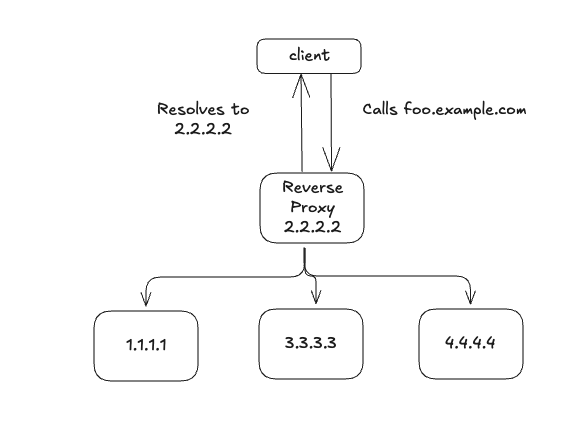

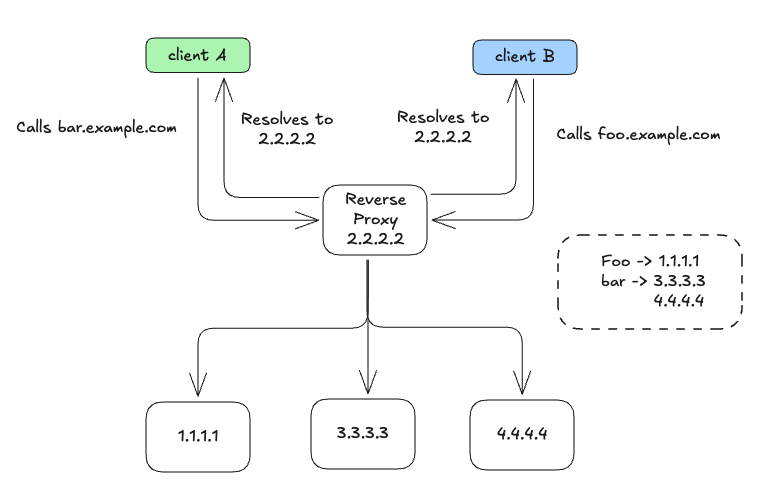

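Virtual IPs

How a service is exposed externally

- For an external system to call a service (api.istioinaction.io/v1/products), the domain name (api.istioinaction.io) must first be resolved to an IP address.
  - The client's networking stack does this by asking a DNS server for the domain's IP address.

Caveats when mapping a domain to an IP address in DNS

- Mapping a domain directly to the real IP of a single service instance is very fragile.

- If that instance goes down, clients keep getting errors until DNS is updated.

- Recovery is slow, and availability and reliability suffer.

Exposing a service reliably

- The recommended approach is to map the domain to a virtual IP.

- The virtual IP is bound not to a specific service instance but to an intermediate reverse proxy.

- The reverse proxy spreads traffic across multiple service instances and load-balances it, so that no single backend is overloaded.

- This provides high availability and a reliable service.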

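Virtual Hosting

Services and virtual IPs

- Each service instance may have its own IP, but external clients use only the single virtual IP.

- This also lets multiple services share the same virtual IP.

- Example: the domains prod.istioinaction.io and api.istioinaction.io can both map to the same virtual IP.

How the reverse proxy handles requests

- Requests that clients send to the shared virtual IP all arrive at a single reverse proxy.

- The reverse proxy inspects each request and routes it to the appropriate service.

- For HTTP requests, the reverse proxy uses request headers to decide which service each request is meant for.

Virtual hosting

- Serving multiple different services from a single entry point (ingress point).

- HTTP/1.1 uses the Host header and HTTP/2 uses the :authority header to determine which service a request targets.

- For TLS-encrypted TCP connections, requests are routed using SNI (Server Name Indication).

Ingress and virtual hosting in Istio

- Istio combines virtual-IP routing and virtual hosting to route external requests to internal services efficiently.

- Istio's edge ingress uses these techniques to forward requests from outside the cluster to services inside it.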
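In Envoy terms, virtual hosting is a route configuration with several virtual_hosts entries. Envoy picks the entry whose domains match the Host (HTTP/1.1) or :authority (HTTP/2) header. Here is a sketch for the two domains above; the route configuration name and the clusters prod_service and api_service are hypothetical.

```yaml
route_config:
  name: shared_vip_routes                # hypothetical name
  virtual_hosts:
  - name: prod
    domains: ["prod.istioinaction.io"]   # matched against Host / :authority
    routes:
    - match: { prefix: "/" }
      route: { cluster: prod_service }   # hypothetical cluster
  - name: api
    domains: ["api.istioinaction.io"]
    routes:
    - match: { prefix: "/" }
      route: { cluster: api_service }    # hypothetical cluster
```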

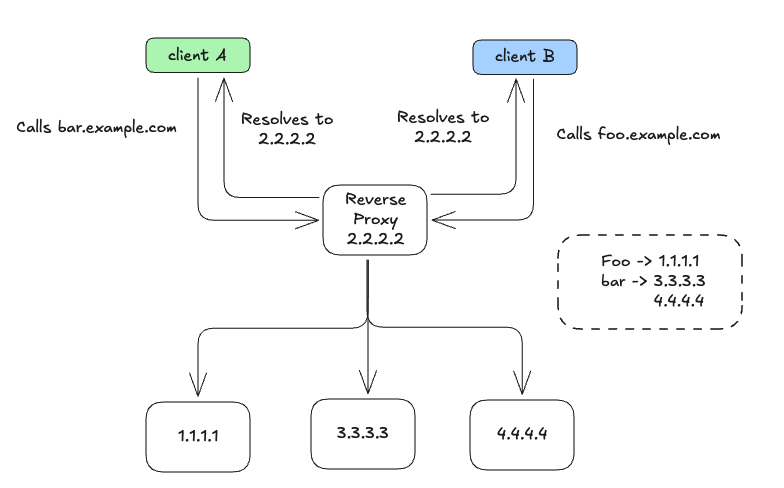

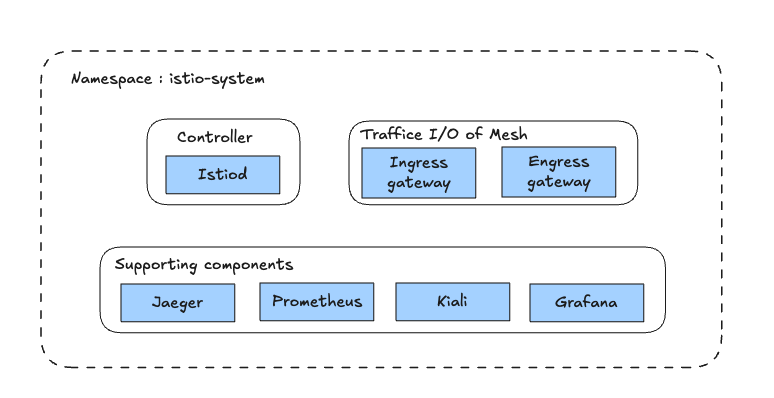

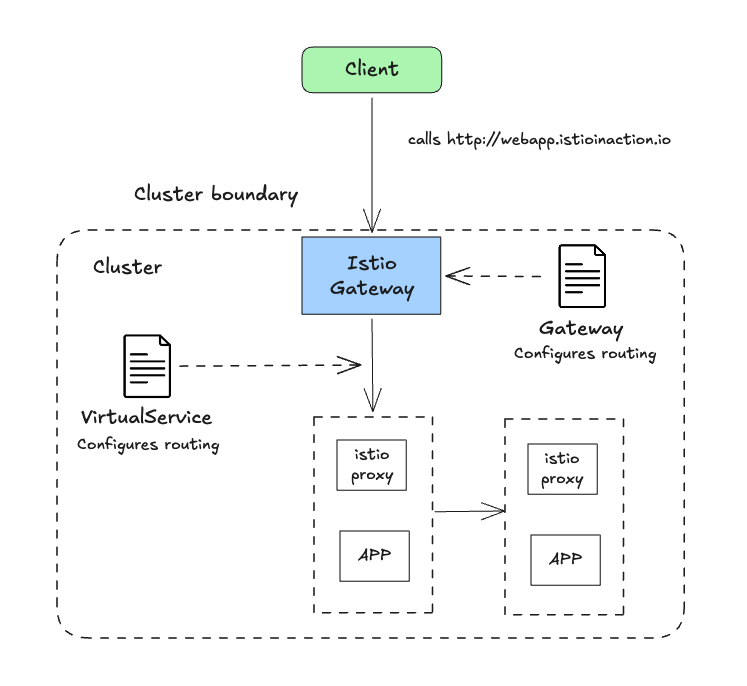

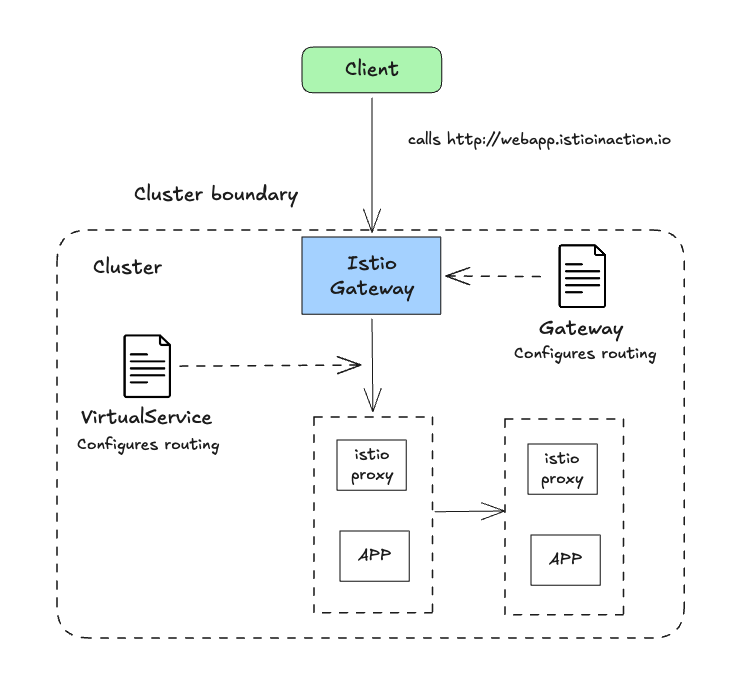

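2) Istio Ingress Gateways

Concepts

[Role of the ingress gateway]

- The Istio ingress gateway is the entry point for traffic coming into the cluster from outside.

- It performs the following functions:
  - Security control (traffic filtering)
  - Load balancing
  - Virtual-host-based routing (dispatching to internal services by domain)

[Acting as a reverse proxy]

- The ingress gateway uses an Envoy proxy and acts as a reverse proxy.

- In other words, it receives traffic from outside the cluster and distributes it appropriately to internal services.

- Istio uses a single, standalone Envoy proxy (not a sidecar) as the ingress gateway.

[Components]

- The ingress gateway operates on configuration from Istio's control plane.

- The control plane pushes configuration to Envoy dynamically and manages security policies, routing rules, and so on.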

Hands-on

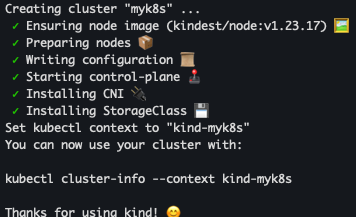

Deploying Kubernetes (version 1.23.17)

- NodePort settings: 30000 (HTTP), 30005 (HTTPS)

- Create the cluster

kind create cluster --name myk8s --image kindest/node:v1.23.17 --config - <<EOF
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  extraPortMappings:
  - containerPort: 30000 # Sample Application (istio-ingressgateway) HTTP
    hostPort: 30000
  - containerPort: 30001 # Prometheus
    hostPort: 30001
  - containerPort: 30002 # Grafana
    hostPort: 30002
  - containerPort: 30003 # Kiali
    hostPort: 30003
  - containerPort: 30004 # Tracing
    hostPort: 30004
  - containerPort: 30005 # Sample Application (istio-ingressgateway) HTTPS
    hostPort: 30005
  - containerPort: 30006 # TCP Route
    hostPort: 30006
  - containerPort: 30007 # New Gateway
    hostPort: 30007
  extraMounts: # optional
  - hostPath: /Users/bkshin/istio-in-action/book-source-code-master # set this to your own path (pwd)
    containerPath: /istiobook
networking:
  podSubnet: 10.10.0.0/16
  serviceSubnet: 10.200.1.0/24
EOF

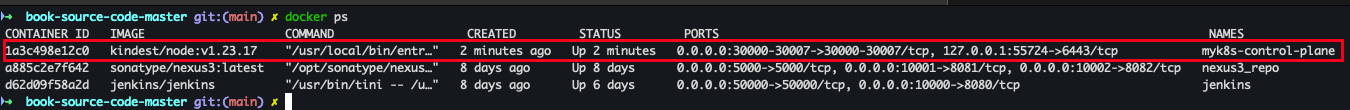

- Verify the installation

docker ps

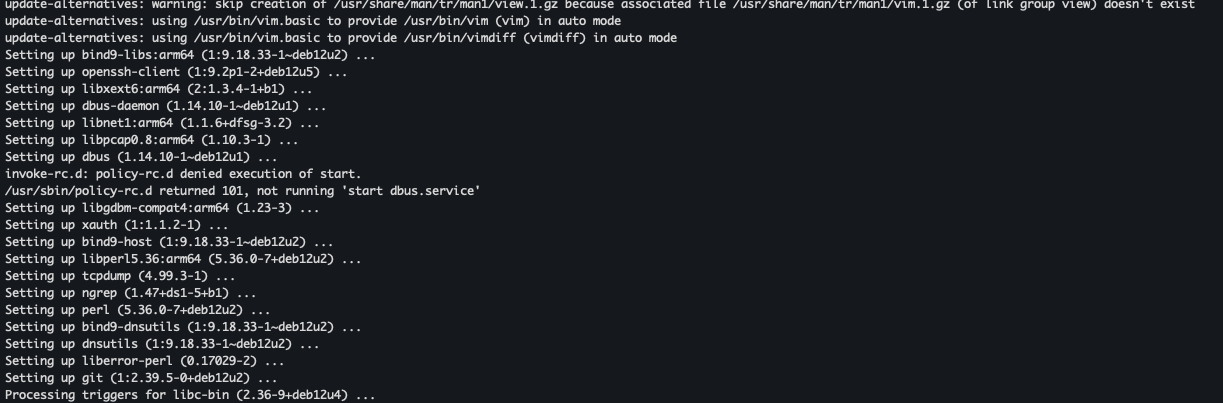

- Install basic tools on the node

docker exec -it myk8s-control-plane sh -c 'apt update && apt install tree psmisc lsof wget bridge-utils net-tools dnsutils tcpdump ngrep iputils-ping git vim -y'

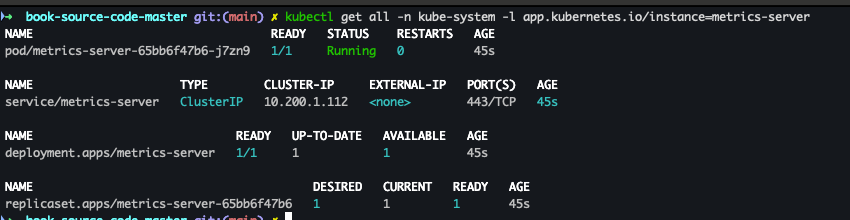

- Install and verify metrics-server

helm repo add metrics-server https://kubernetes-sigs.github.io/metrics-server/
helm install metrics-server metrics-server/metrics-server --set 'args[0]=--kubelet-insecure-tls' -n kube-system
kubectl get all -n kube-system -l app.kubernetes.io/instance=metrics-server

Installing Istio (version 1.17.8)

- Enter the myk8s-control-plane container

docker exec -it myk8s-control-plane bash

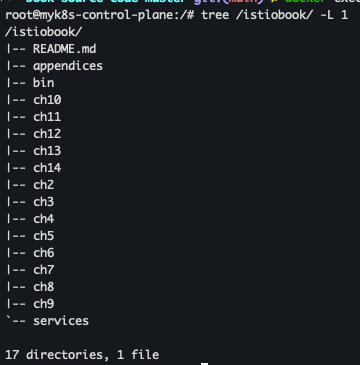

- Verify the /istiobook mount

tree /istiobook/ -L 1

- Install istioctl

export ISTIOV=1.17.8
echo 'export ISTIOV=1.17.8' >> /root/.bashrc
curl -s -L https://istio.io/downloadIstio | ISTIO_VERSION=$ISTIOV sh -

- Check the version

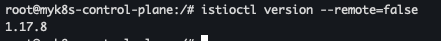

cp istio-$ISTIOV/bin/istioctl /usr/local/bin/istioctl
istioctl version --remote=false

- Deploy the control plane with the default profile

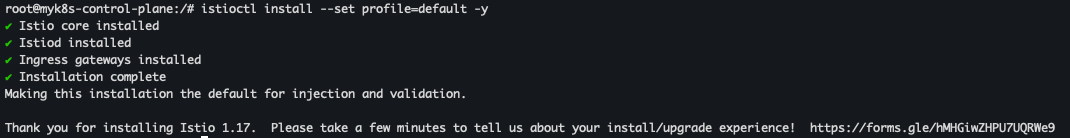

istioctl install --set profile=default -y

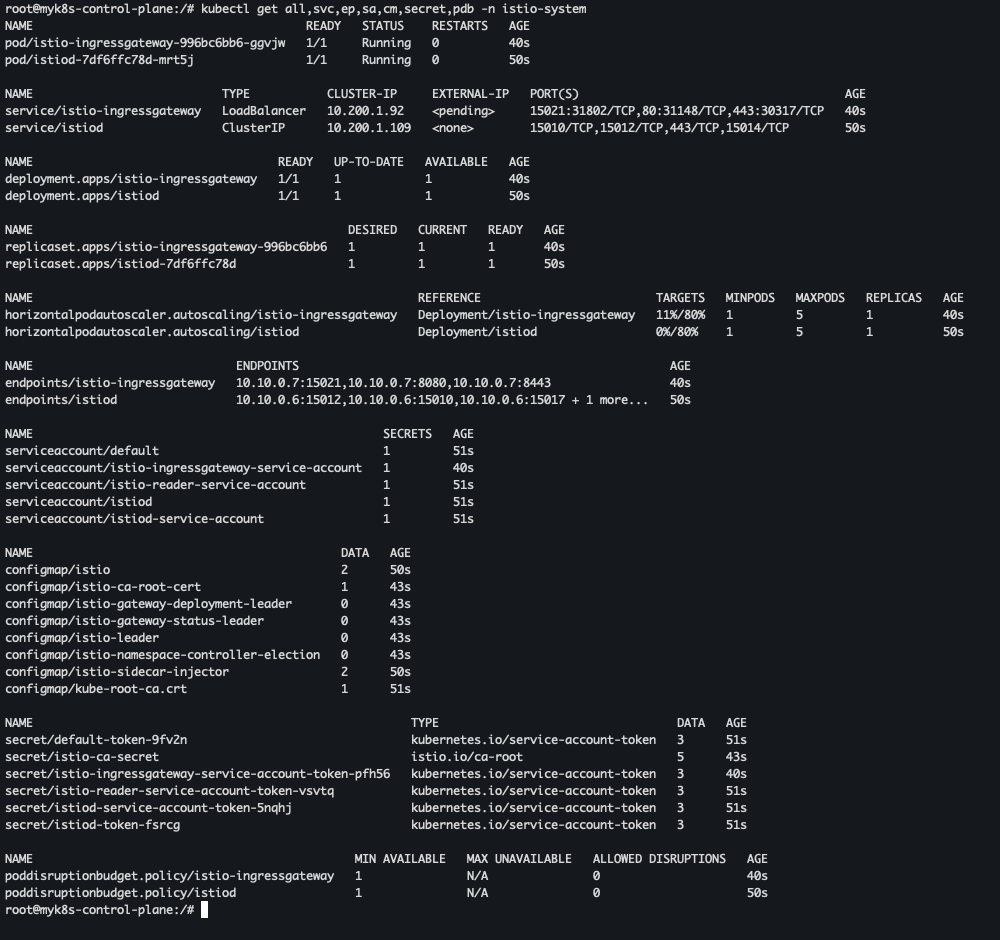

- Verify istiod, istio-ingressgateway, and the CRDs

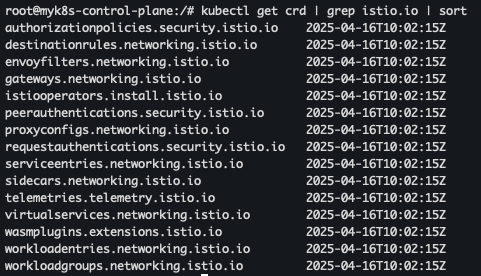

kubectl get istiooperators -n istio-system -o yaml
kubectl get all,svc,ep,sa,cm,secret,pdb -n istio-system
kubectl get cm -n istio-system istio -o yaml
kubectl get crd | grep istio.io | sort

- Install and check the add-ons

kubectl apply -f istio-$ISTIOV/samples/addons
kubectl get pod -n istio-system

- Exit the container

exit

- Create the namespace for the exercises and enable sidecar injection

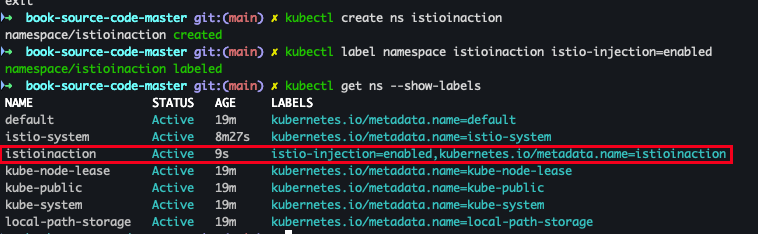

kubectl create ns istioinaction
kubectl label namespace istioinaction istio-injection=enabled
kubectl get ns --show-labels

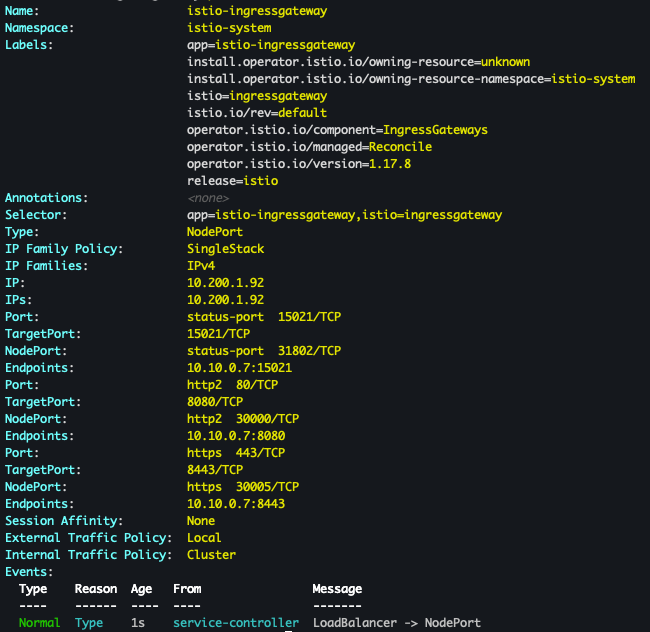

- istio-ingressgateway service
  - Change the type to NodePort and pin the node ports
  - Set externalTrafficPolicy to Local (to preserve the client IP)

kubectl patch svc -n istio-system istio-ingressgateway -p '{"spec": {"type": "NodePort", "ports": [{"port": 80, "targetPort": 8080, "nodePort": 30000}]}}'
kubectl patch svc -n istio-system istio-ingressgateway -p '{"spec": {"type": "NodePort", "ports": [{"port": 443, "targetPort": 8443, "nodePort": 30005}]}}'
kubectl patch svc -n istio-system istio-ingressgateway -p '{"spec":{"externalTrafficPolicy": "Local"}}'
kubectl describe svc -n istio-system istio-ingressgateway

- Change the add-on services to NodePort
  - prometheus (30001), grafana (30002), kiali (30003), tracing (30004)

kubectl patch svc -n istio-system prometheus -p '{"spec": {"type": "NodePort", "ports": [{"port": 9090, "targetPort": 9090, "nodePort": 30001}]}}'
kubectl patch svc -n istio-system grafana -p '{"spec": {"type": "NodePort", "ports": [{"port": 3000, "targetPort": 3000, "nodePort": 30002}]}}'
kubectl patch svc -n istio-system kiali -p '{"spec": {"type": "NodePort", "ports": [{"port": 20001, "targetPort": 20001, "nodePort": 30003}]}}'
kubectl patch svc -n istio-system tracing -p '{"spec": {"type": "NodePort", "ports": [{"port": 80, "targetPort": 16686, "nodePort": 30004}]}}'

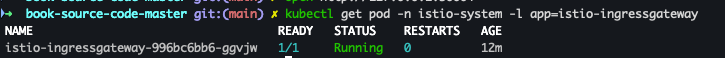

Inspecting the Istio ingress gateway

- Check the pod

kubectl get pod -n istio-system -l app=istio-ingressgateway

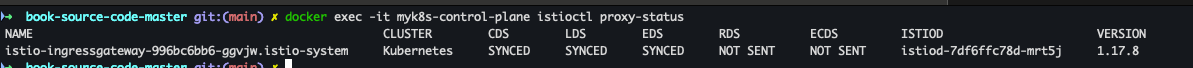

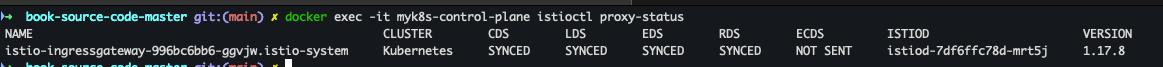

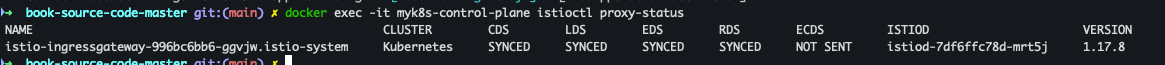

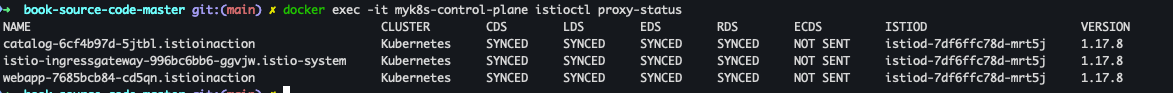

- Check the proxy status

docker exec -it myk8s-control-plane istioctl proxy-status

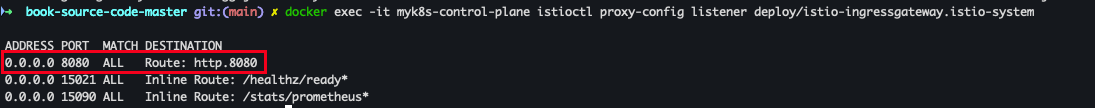

- Check the proxy configuration

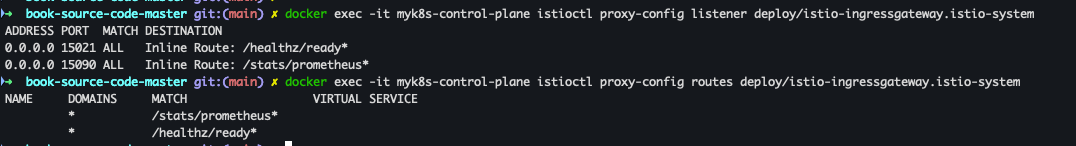

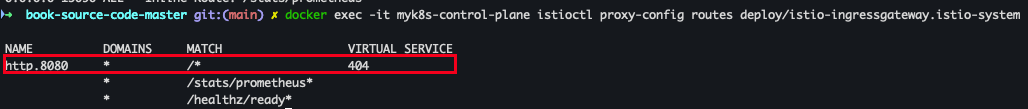

docker exec -it myk8s-control-plane istioctl proxy-config all deploy/istio-ingressgateway.istio-system
docker exec -it myk8s-control-plane istioctl proxy-config listener deploy/istio-ingressgateway.istio-system
docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/istio-ingressgateway.istio-system

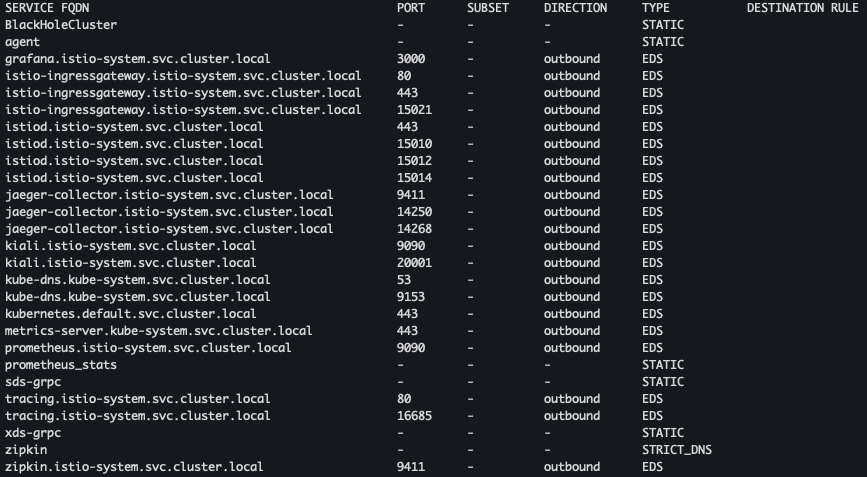

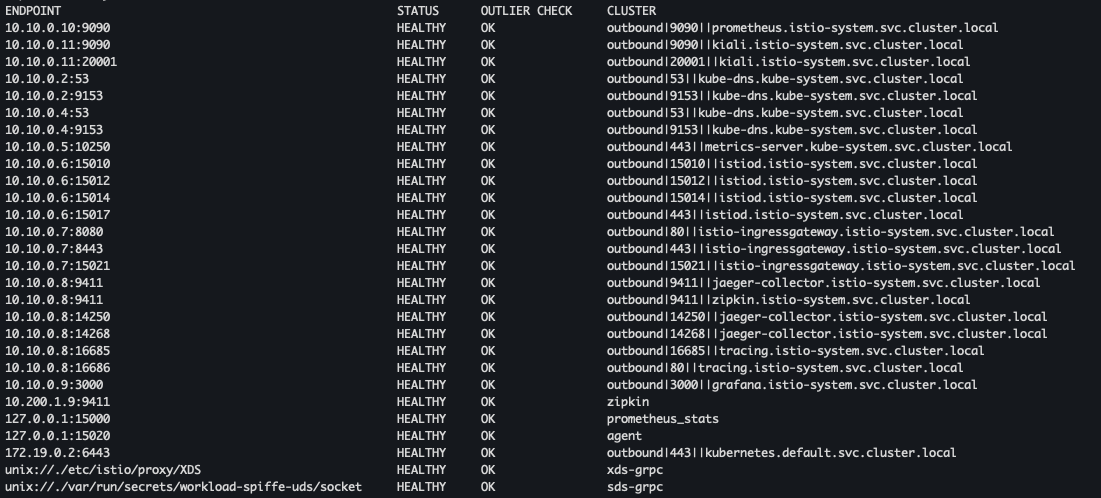

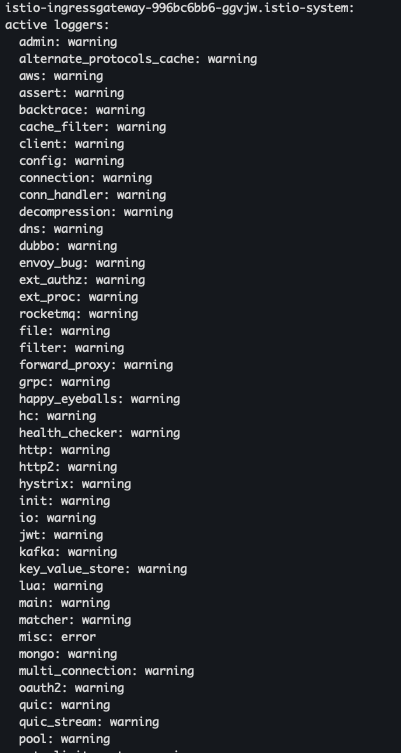

- Check the proxy configuration: clusters, endpoints, log levels, and secrets

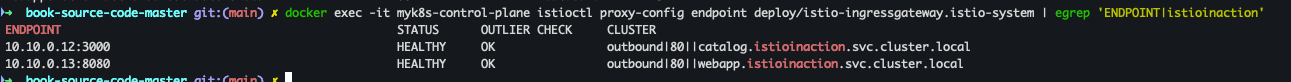

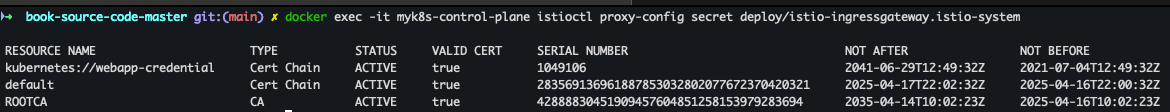

docker exec -it myk8s-control-plane istioctl proxy-config cluster deploy/istio-ingressgateway.istio-system
docker exec -it myk8s-control-plane istioctl proxy-config endpoint deploy/istio-ingressgateway.istio-system
docker exec -it myk8s-control-plane istioctl proxy-config log deploy/istio-ingressgateway.istio-system
docker exec -it myk8s-control-plane istioctl proxy-config secret deploy/istio-ingressgateway.istio-system

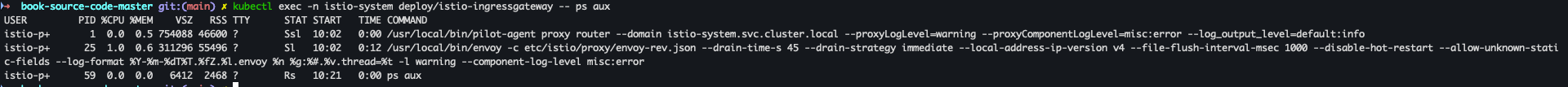

- Confirm that the pilot-agent process bootstraps Envoy

kubectl exec -n istio-system deploy/istio-ingressgateway -- ps
kubectl exec -n istio-system deploy/istio-ingressgateway -- ps aux

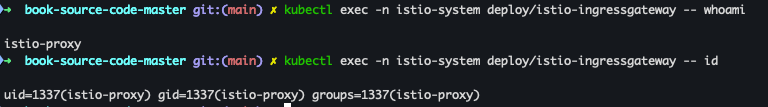

- Check which user the processes run as

kubectl exec -n istio-system deploy/istio-ingressgateway -- whoami
kubectl exec -n istio-system deploy/istio-ingressgateway -- id

Specifying Gateway resources

Overview

- To configure the Istio ingress gateway, you use a Gateway resource to specify the ports to open and the virtual hosts allowed on those ports.

- The example Gateway resource is very simple: it opens an HTTP port on port 80 that accepts traffic for the virtual host webapp.istioinaction.io.
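Here is a sketch of such a Gateway. Names such as coolstore-gateway are assumptions, and the actual ch4/coolstore-gw.yaml may differ. The selector binds the Gateway to the default ingress gateway pods, and the single server entry opens port 80 for webapp.istioinaction.io.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: coolstore-gateway          # assumed name
spec:
  selector:
    istio: ingressgateway          # label on the default istio-ingressgateway pods
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "webapp.istioinaction.io"    # only this virtual host is accepted on port 80
```

Port 80 is the Service port. The istio-ingressgateway Service maps it to targetPort 8080, so the listener and route on the gateway appear as 0.0.0.0_8080 and http.8080 in the proxy-config output.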

Hands-on

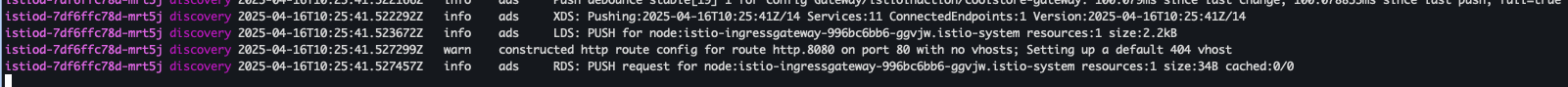

- Watch the istiod logs

kubectl stern -n istio-system -l app=istiod

- Apply the Gateway resource

cat ch4/coolstore-gw.yaml
kubectl -n istioinaction apply -f ch4/coolstore-gw.yaml

- Verify the Gateway resource

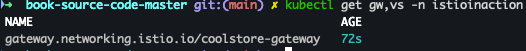

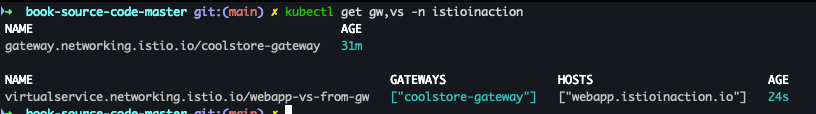

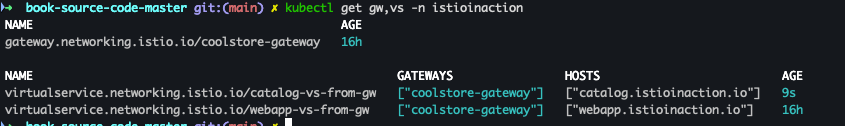

kubectl get gw,vs -n istioinaction

- Check the proxy status

docker exec -it myk8s-control-plane istioctl proxy-status

- Check the listeners (proxy-config listener)

docker exec -it myk8s-control-plane istioctl proxy-config listener deploy/istio-ingressgateway.istio-system

- Check the routes (proxy-config routes)

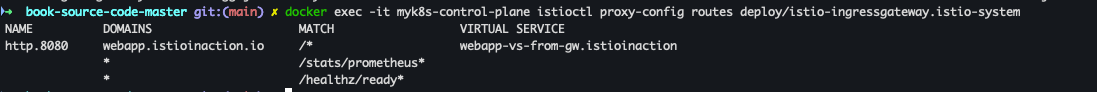

docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/istio-ingressgateway.istio-system

- Check the service ports in detail

kubectl get svc -n istio-system istio-ingressgateway -o jsonpath="{.spec.ports}" | jq

- Inspect the http.8080 route in detail

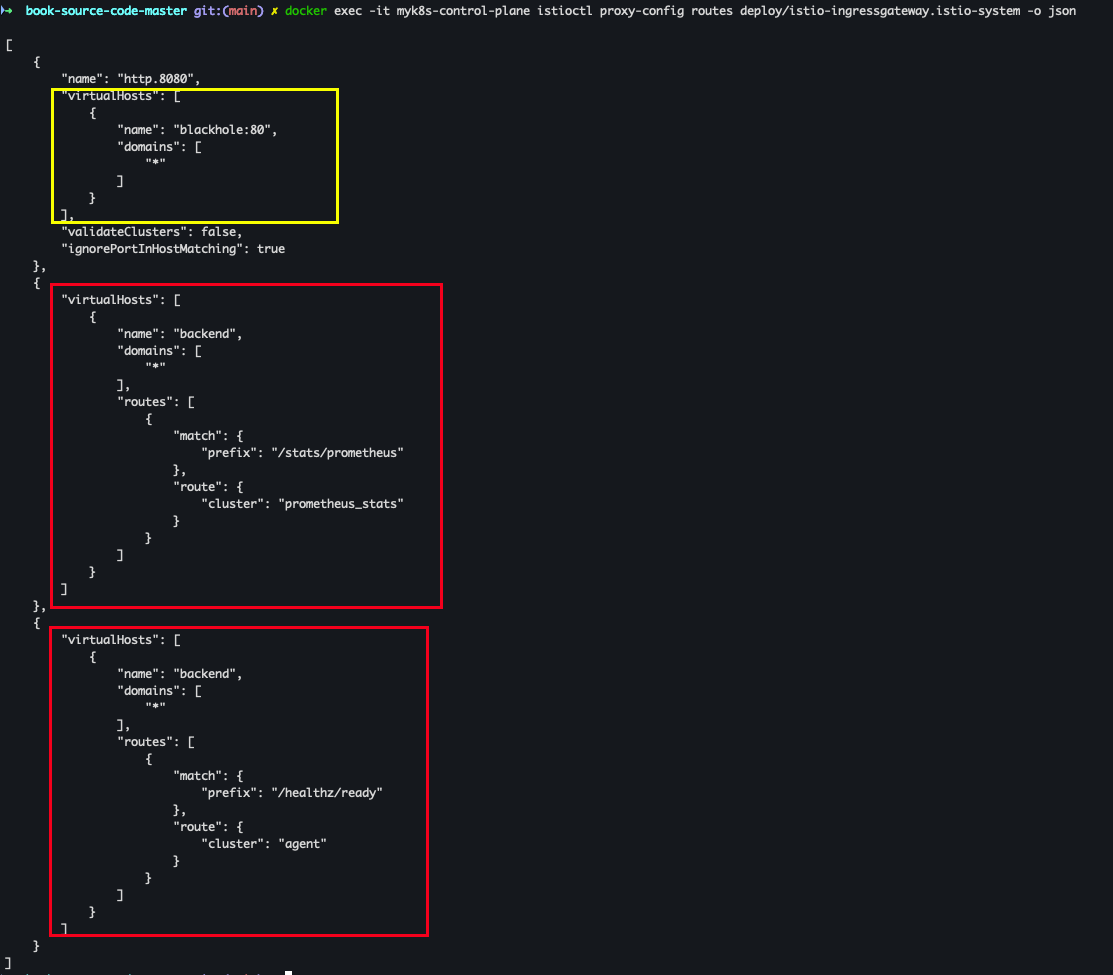

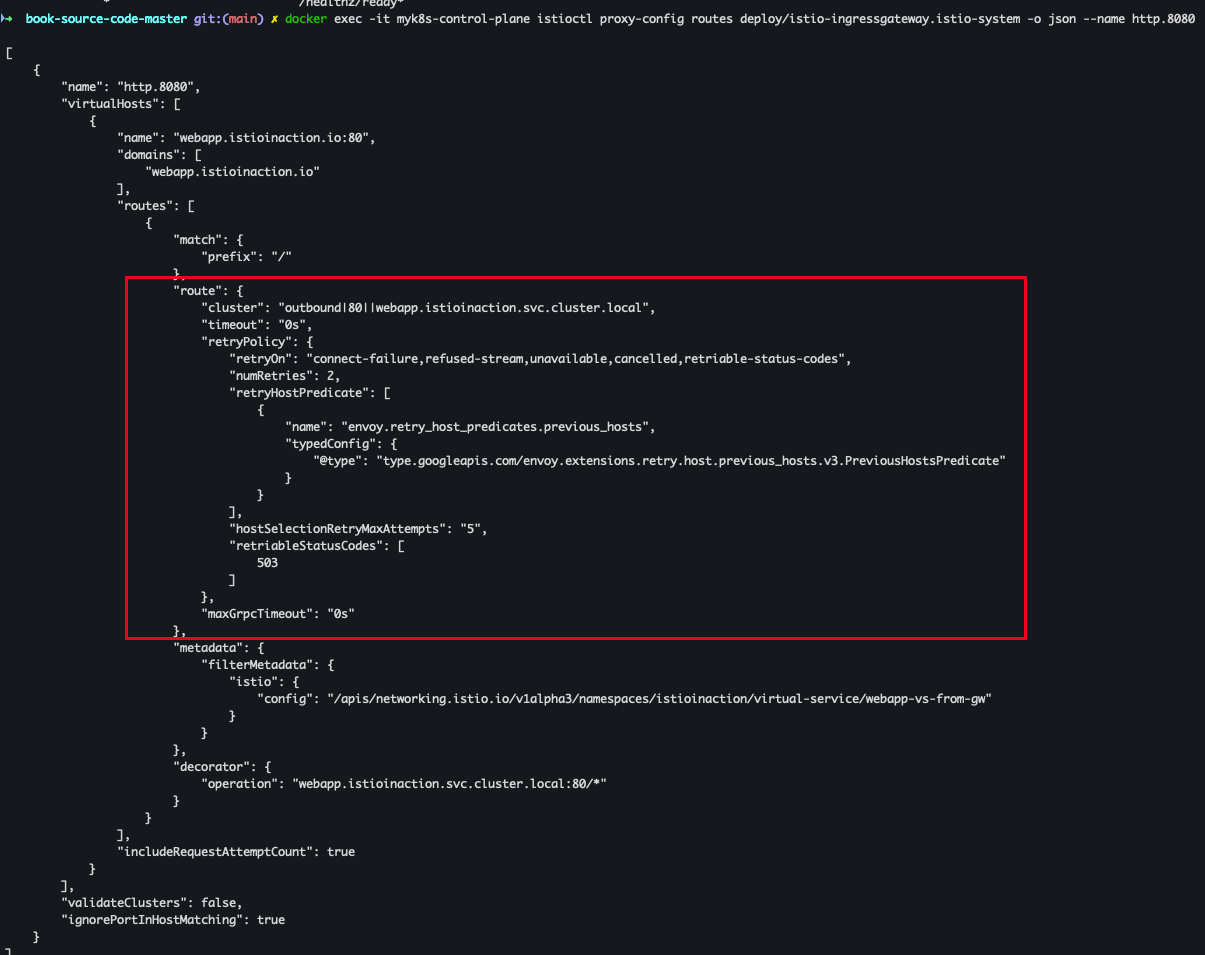

docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/istio-ingressgateway.istio-system -o json
docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/istio-ingressgateway.istio-system -o json --name http.8080

- The http.8080 route has no routes to a service yet. They must be added with a VirtualService.

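Routing gateway traffic with a VirtualService

Overview

- A VirtualService resource defines what to do with traffic that arrives at the gateway.

- It must be configured so that it applies only to traffic destined for the virtual host webapp.istioinaction.io.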
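Here is a sketch of the VirtualService. The name is an assumption, and the webapp Service is assumed to listen on port 80. hosts limits the VirtualService to webapp.istioinaction.io, and gateways attaches it to the Gateway so that it applies to traffic arriving there.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: webapp-vs-from-gw          # assumed name
spec:
  hosts:
  - "webapp.istioinaction.io"      # only traffic for this virtual host
  gateways:
  - coolstore-gateway              # must match the Gateway's metadata.name (assumed)
  http:
  - route:
    - destination:
        host: webapp               # Kubernetes Service in the same namespace
        port:
          number: 80               # assumed Service port
```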

Hands-on

- Apply the VirtualService

cat ch4/coolstore-vs.yaml
kubectl apply -n istioinaction -f ch4/coolstore-vs.yaml

- Verify the deployment

kubectl get gw,vs -n istioinaction

- Check the proxy status, listeners, and routes

docker exec -it myk8s-control-plane istioctl proxy-status
docker exec -it myk8s-control-plane istioctl proxy-config listener deploy/istio-ingressgateway.istio-system
docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/istio-ingressgateway.istio-system

- Inspect the routes in detail

docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/istio-ingressgateway.istio-system -o json --name http.8080

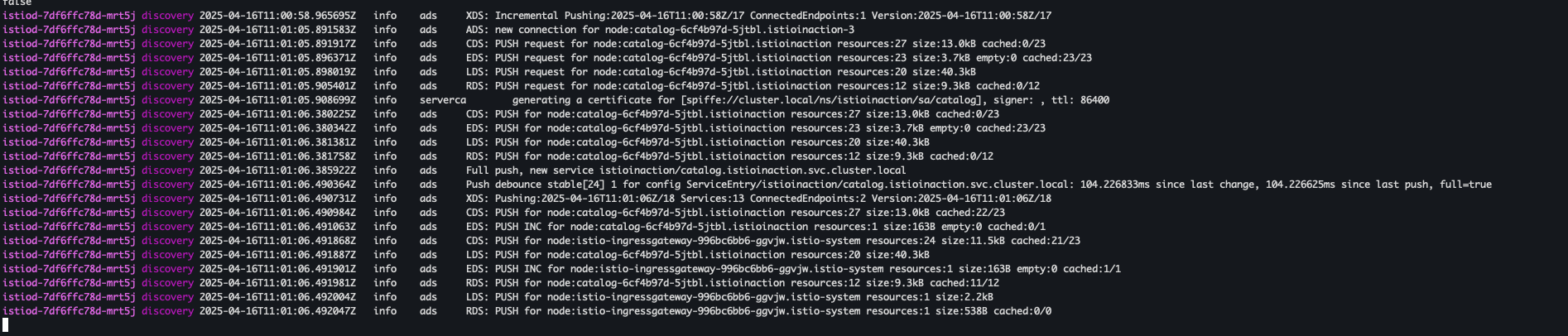

- Watch the istiod logs before deploying the application

kubectl stern -n istio-system -l app=istiod

- Deploy the application

kubectl apply -f services/catalog/kubernetes/catalog.yaml -n istioinaction
kubectl apply -f services/webapp/kubernetes/webapp.yaml -n istioinaction

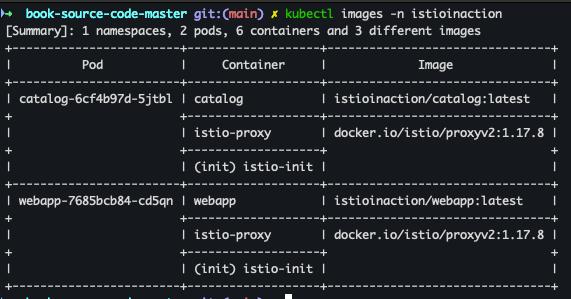

- List the images used in the istioinaction namespace (requires the krew images plugin)

kubectl krew install images
kubectl images -n istioinaction

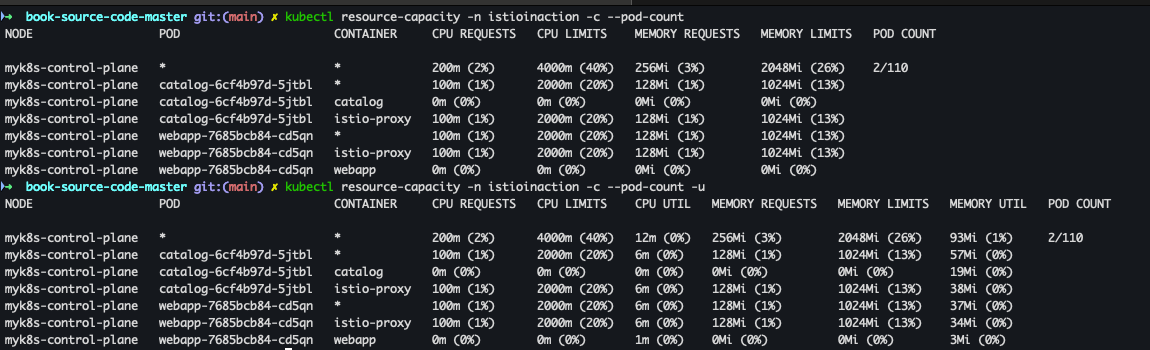

- Check CPU/memory requests and limits per container for the pods in the istioinaction namespace

kubectl krew install resource-capacity
kubectl resource-capacity -n istioinaction -c --pod-count
kubectl resource-capacity -n istioinaction -c --pod-count -u

- Check the proxy status

docker exec -it myk8s-control-plane istioctl proxy-status

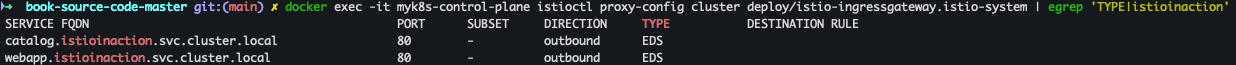

- Check the listeners and clusters

docker exec -it myk8s-control-plane istioctl proxy-config listener deploy/istio-ingressgateway.istio-system
docker exec -it myk8s-control-plane istioctl proxy-config cluster deploy/istio-ingressgateway.istio-system | egrep 'TYPE|istioinaction'

- Check the endpoints
  - istio-ingressgateway does not send traffic to the catalog/webapp Service (ClusterIP). It sends it directly to the endpoints, that is, the pod IPs.
  - Without Istio, reaching a Service (ClusterIP) relies on iptables/conntrack rules on the node (kube-proxy). Because the gateway sends traffic straight to pod IPs, that node-level translation is skipped, which makes this hop more efficient.

docker exec -it myk8s-control-plane istioctl proxy-config endpoint deploy/istio-ingressgateway.istio-system | egrep 'ENDPOINT|istioinaction'

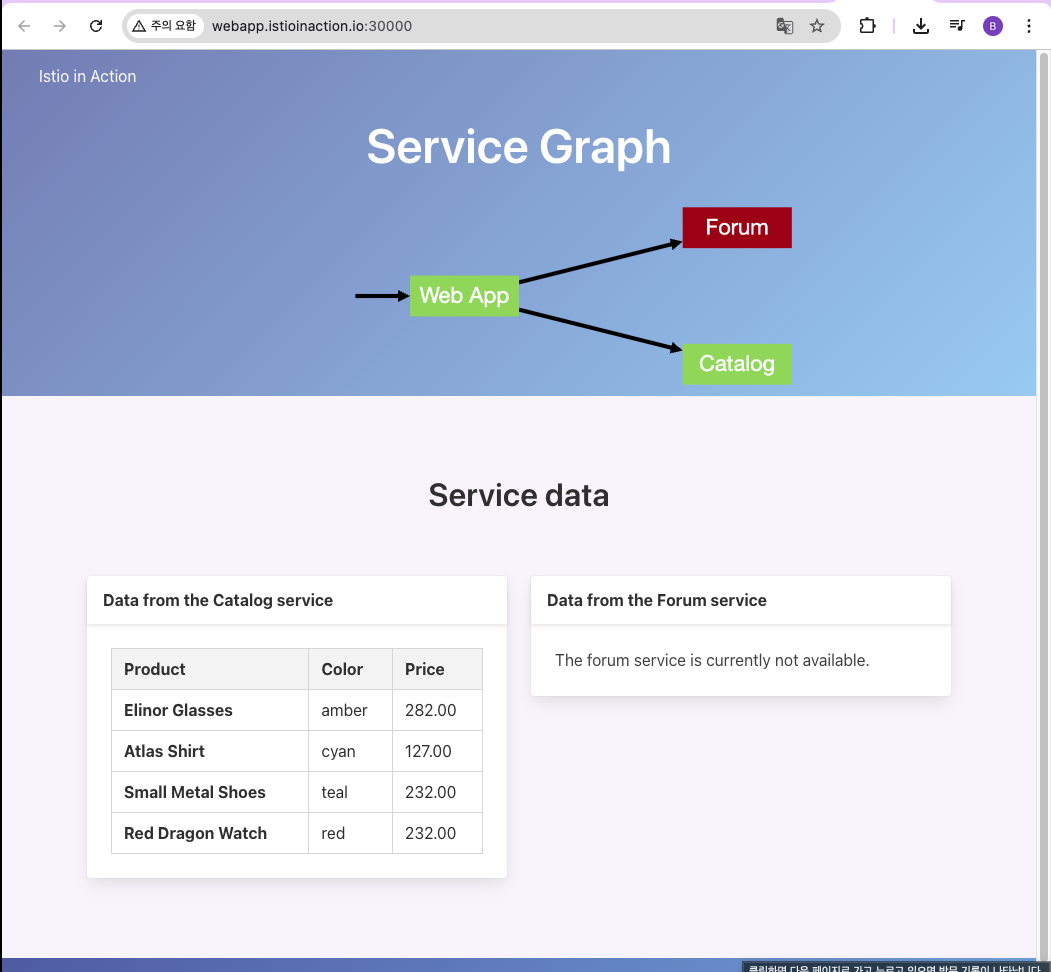

Inspecting webapp

- Check the listeners

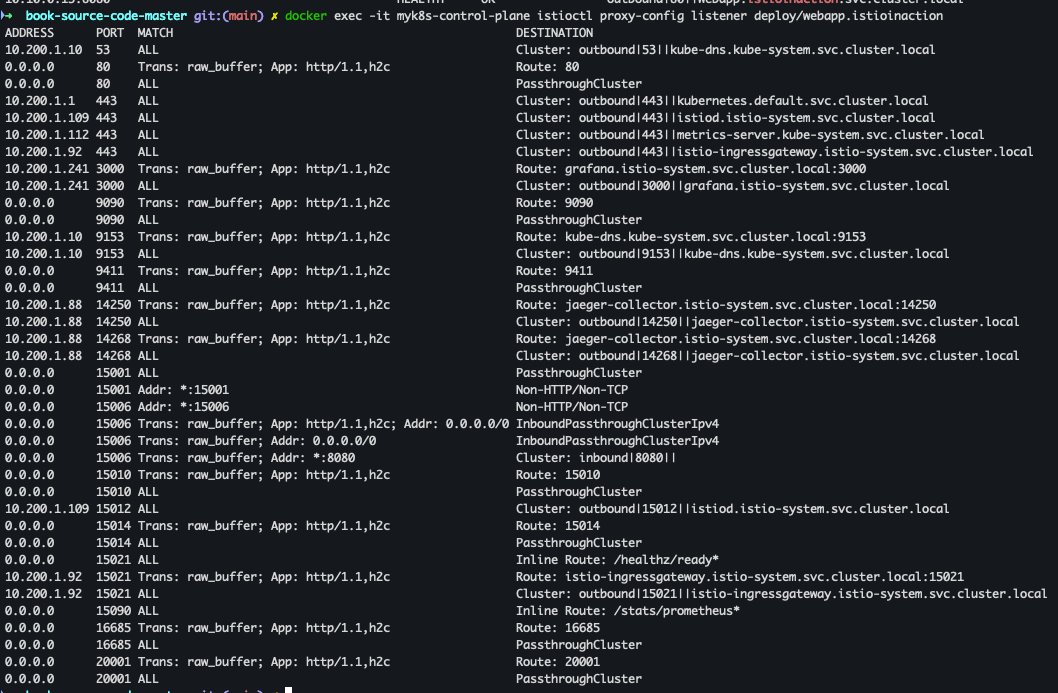

docker exec -it myk8s-control-plane istioctl proxy-config listener deploy/webapp.istioinaction

- Check the routes

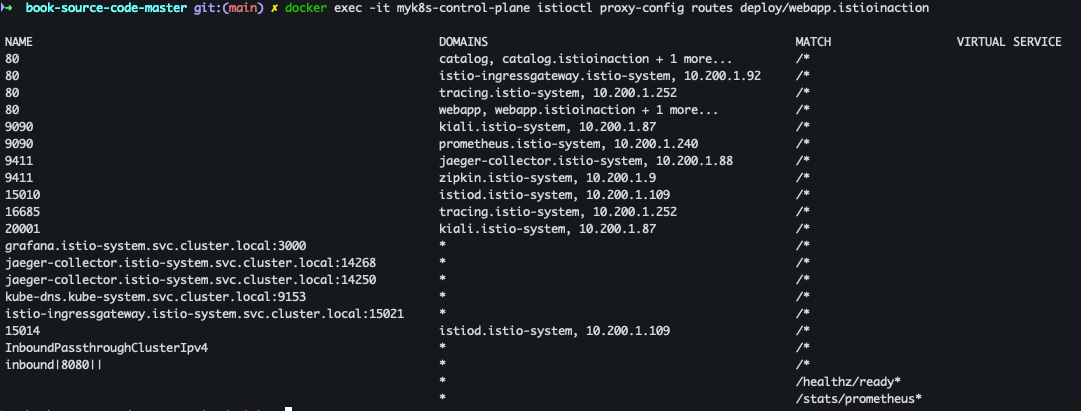

docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/webapp.istioinaction

- Check the clusters

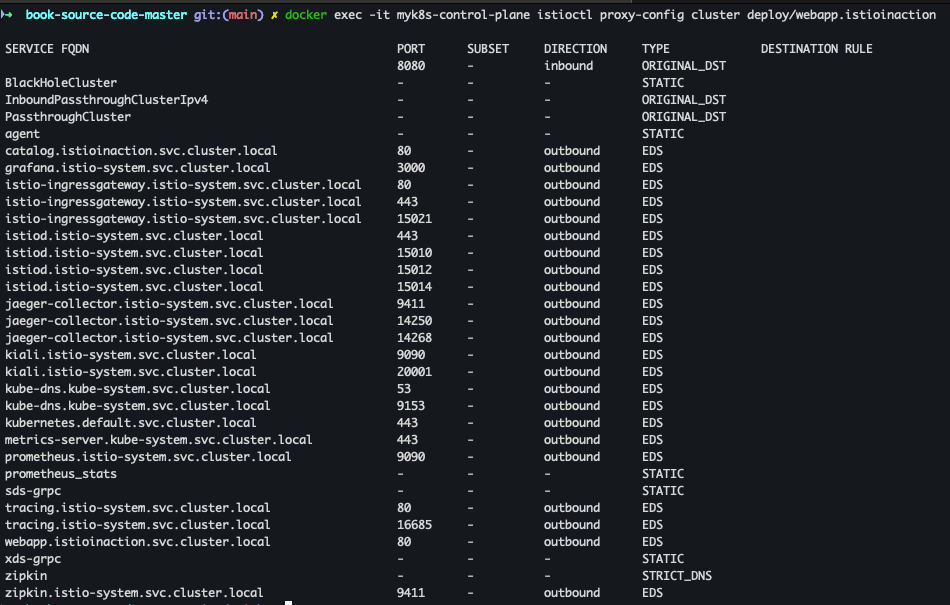

docker exec -it myk8s-control-plane istioctl proxy-config cluster deploy/webapp.istioinaction

- Check the endpoints

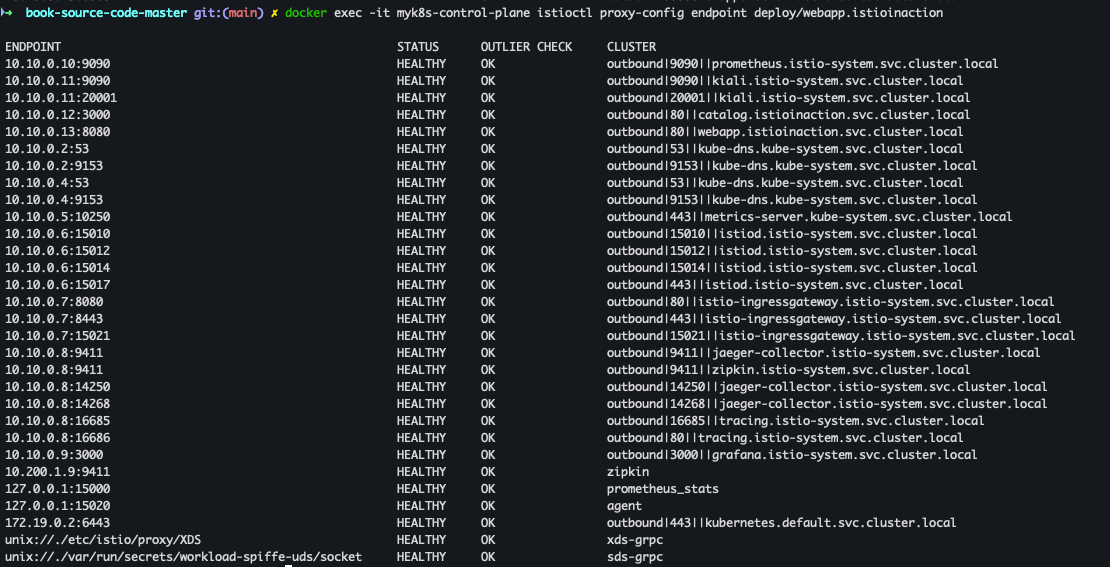

docker exec -it myk8s-control-plane istioctl proxy-config endpoint deploy/webapp.istioinaction

- Check the secrets

docker exec -it myk8s-control-plane istioctl proxy-config secret deploy/webapp.istioinaction

Inspecting catalog

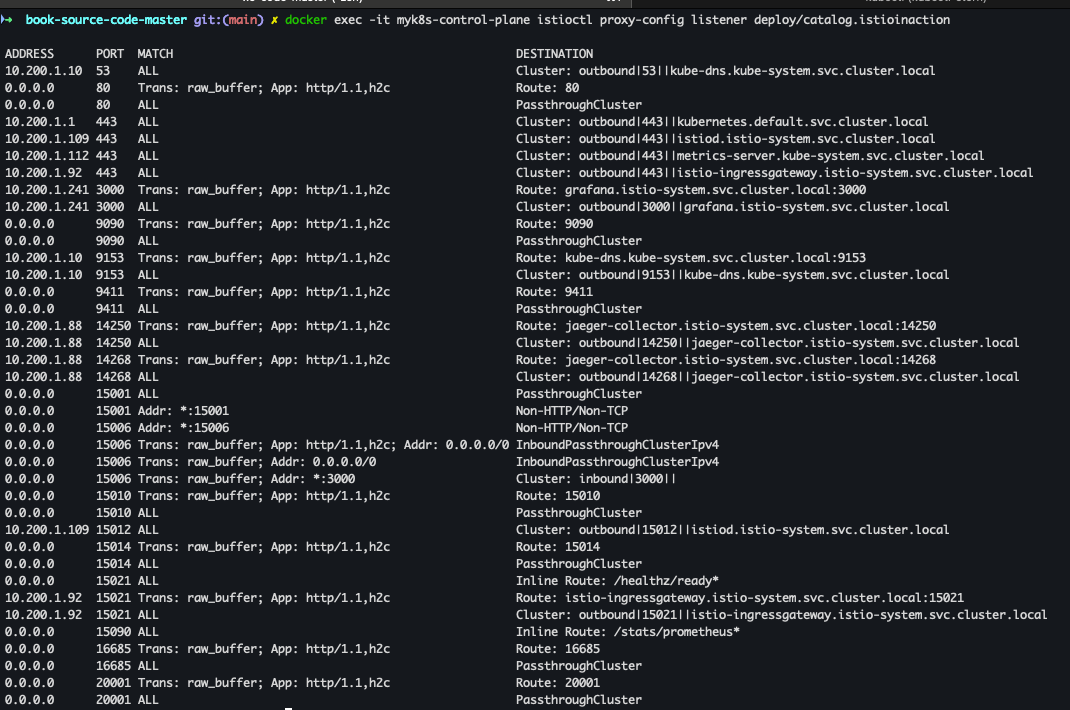

- Check the listeners

docker exec -it myk8s-control-plane istioctl proxy-config listener deploy/catalog.istioinaction

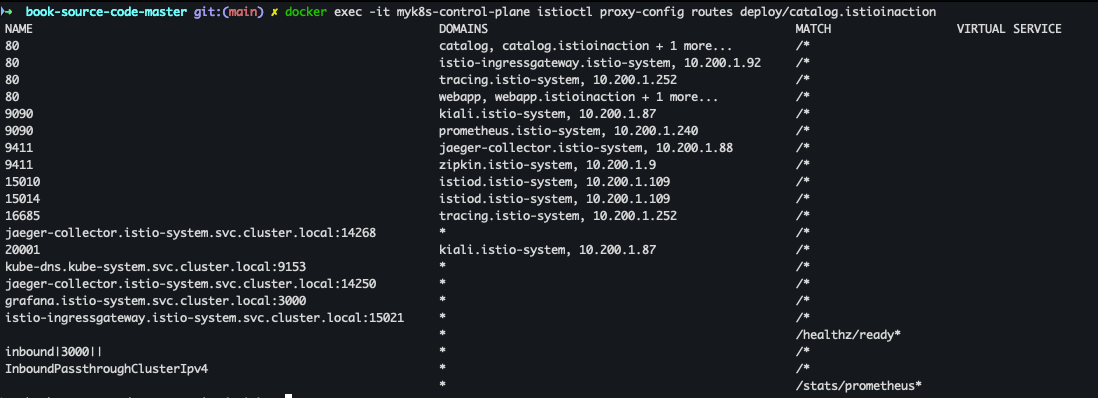

- Check the routes

docker exec -it myk8s-control-plane istioctl proxy-config routes deploy/catalog.istioinaction

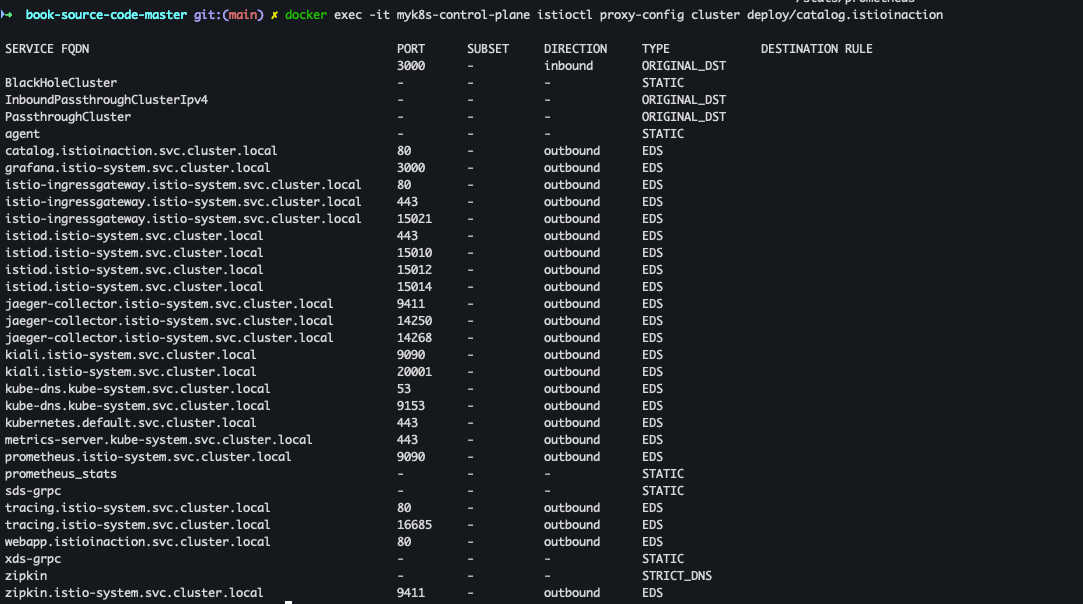

- Check the clusters

docker exec -it myk8s-control-plane istioctl proxy-config cluster deploy/catalog.istioinaction


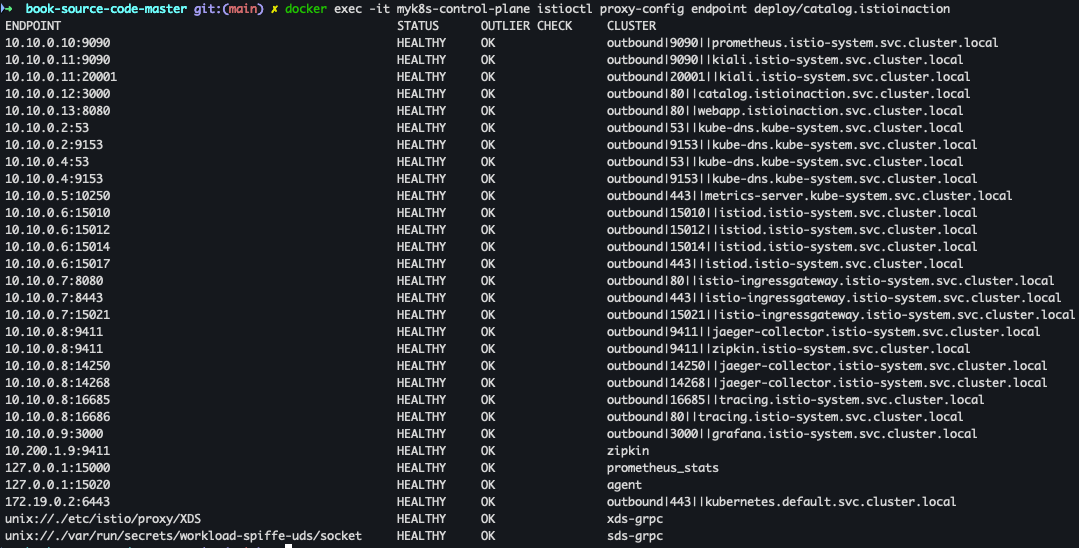

- Check the endpoints

docker exec -it myk8s-control-plane istioctl proxy-config endpoint deploy/catalog.istioinaction

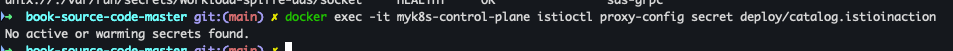

- Check the secrets

docker exec -it myk8s-control-plane istioctl proxy-config secret deploy/catalog.istioinaction

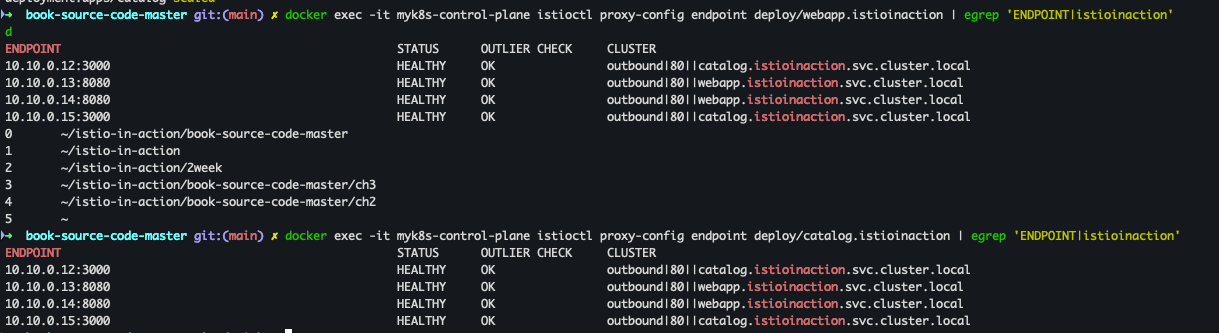

- Scale catalog and webapp from 1 to 2 replicas

kubectl scale deployment -n istioinaction webapp --replicas 2
kubectl scale deployment -n istioinaction catalog --replicas 2

- Check the endpoint lists of the Kubernetes Services as seen by each proxy

docker exec -it myk8s-control-plane istioctl proxy-config endpoint deploy/webapp.istioinaction | egrep 'ENDPOINT|istioinaction'
docker exec -it myk8s-control-plane istioctl proxy-config endpoint deploy/catalog.istioinaction | egrep 'ENDPOINT|istioinaction'

- Scale back to 1 replica

kubectl scale deployment -n istioinaction webapp --replicas 1
kubectl scale deployment -n istioinaction catalog --replicas 1

- (Extra) Check certificate information

kubectl exec -it deploy/webapp -n istioinaction -c istio-proxy -- curl http://localhost:15000/certs | jq

3) Securing gateway traffic

HTTP traffic with TLS

Concepts

[Overview]

- TLS protects traffic against man-in-the-middle (MITM) attacks.

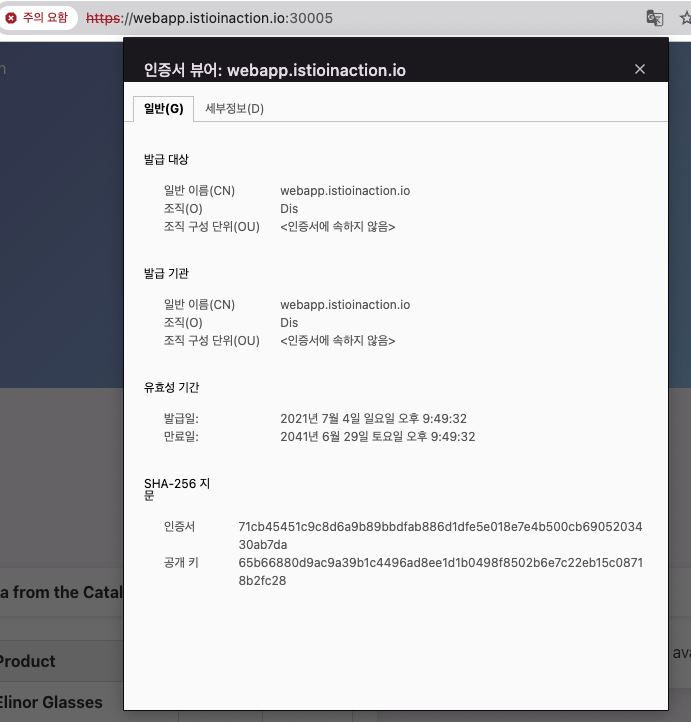

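- A certificate contains the server's public key and is signed by a CA, which is what makes it trustworthy.

- The client verifies the server's authenticity against CA certificates and then communicates securely.

- On an Istio gateway, this TLS process lets all incoming traffic be encrypted with HTTPS.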

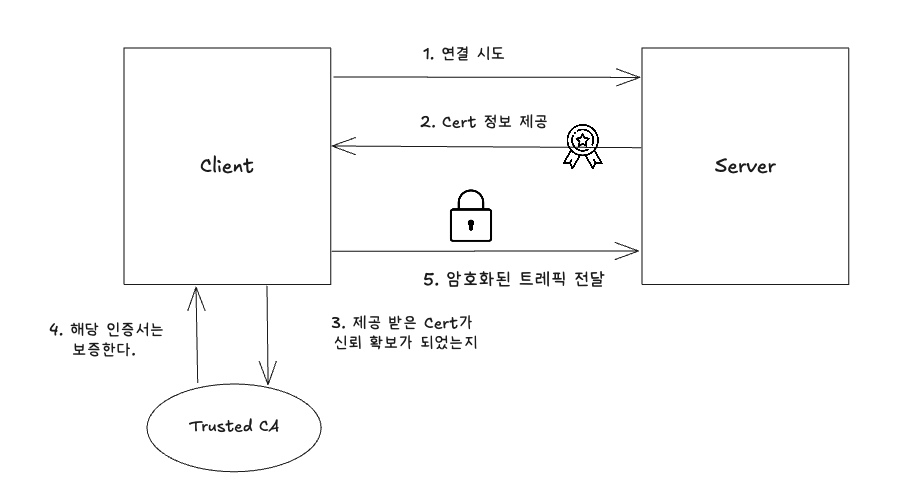

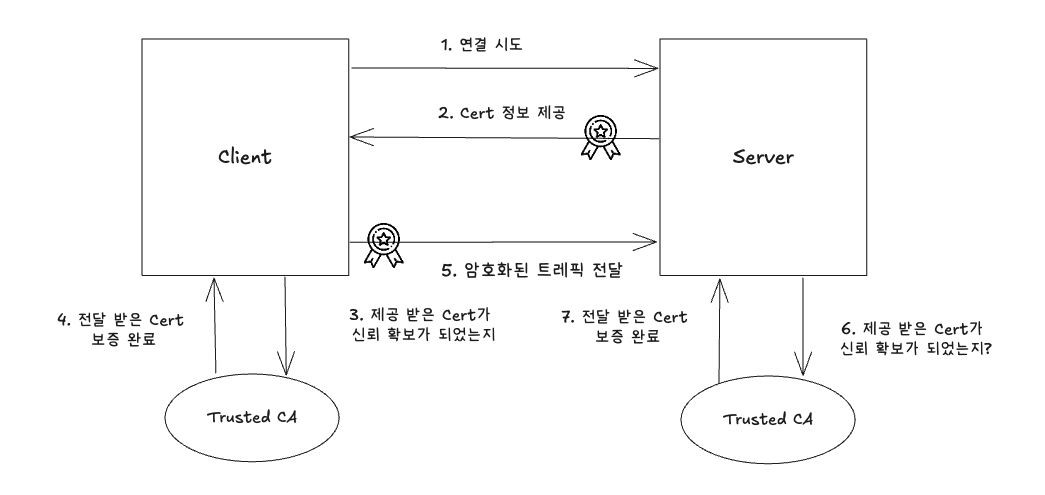

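[Communication flow with TLS certificates]

- Connection attempt: the client sends a connection request to the server.

- Server presents its certificate: the server sends its certificate (cert) to the client.

- Client checks whether to trust the certificate: the client checks whether it was signed by a trusted certificate authority (CA).

- Trust via the CA: the client consults its locally installed list of trusted CAs and trusts the certificate if it chains to one of them.

- Encrypted traffic: during the handshake, the certificate's public key is used to authenticate the server and to establish shared session keys. (In the older RSA key exchange, the client encrypts a secret with the public key and the server decrypts it with its private key.) Application data is then encrypted with those session keys.

[Hands-on]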

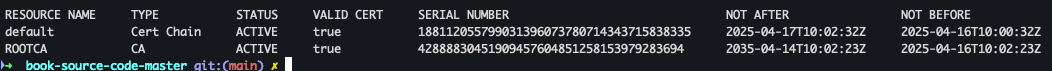

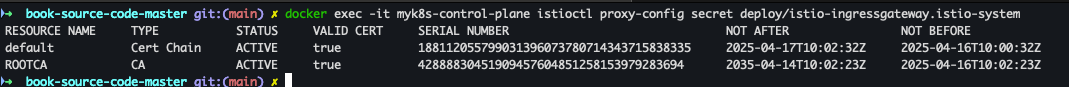

- Check the cert chain and CA

docker exec -it myk8s-control-plane istioctl proxy-config secret deploy/istio-ingressgateway.istio-system

- Inspect the certificate files

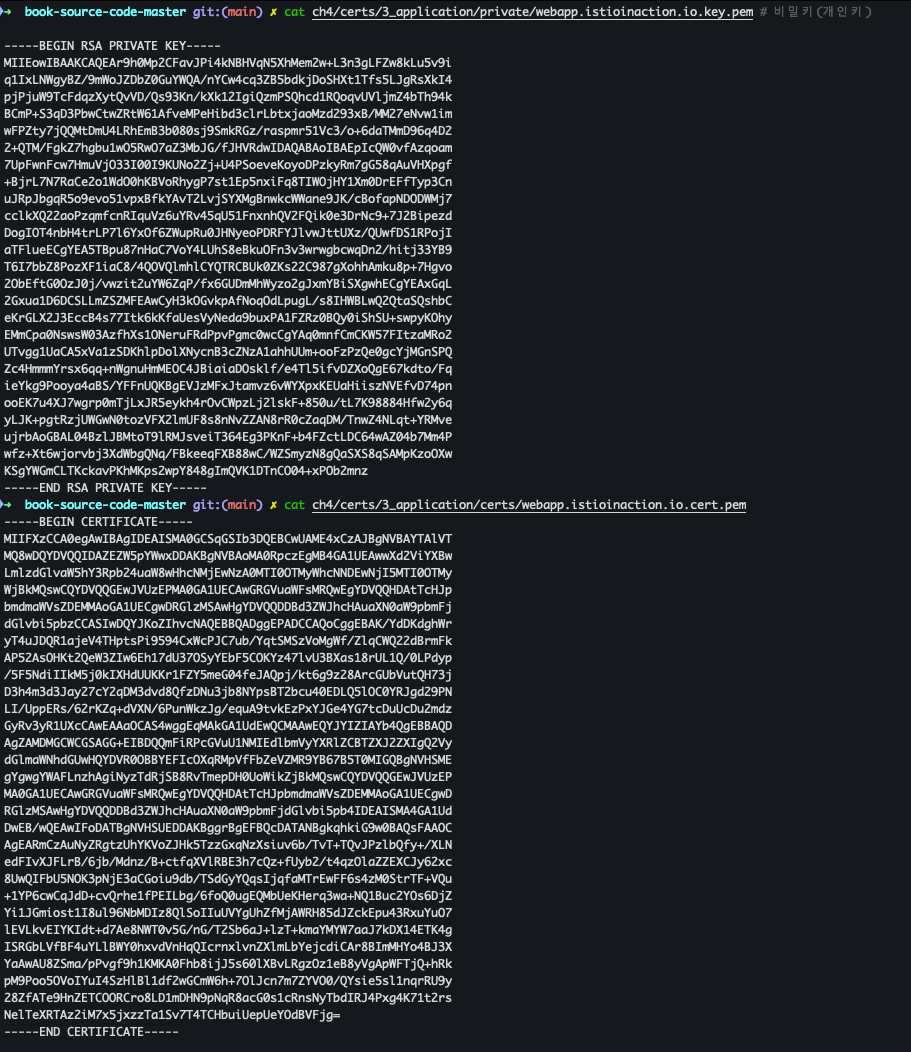

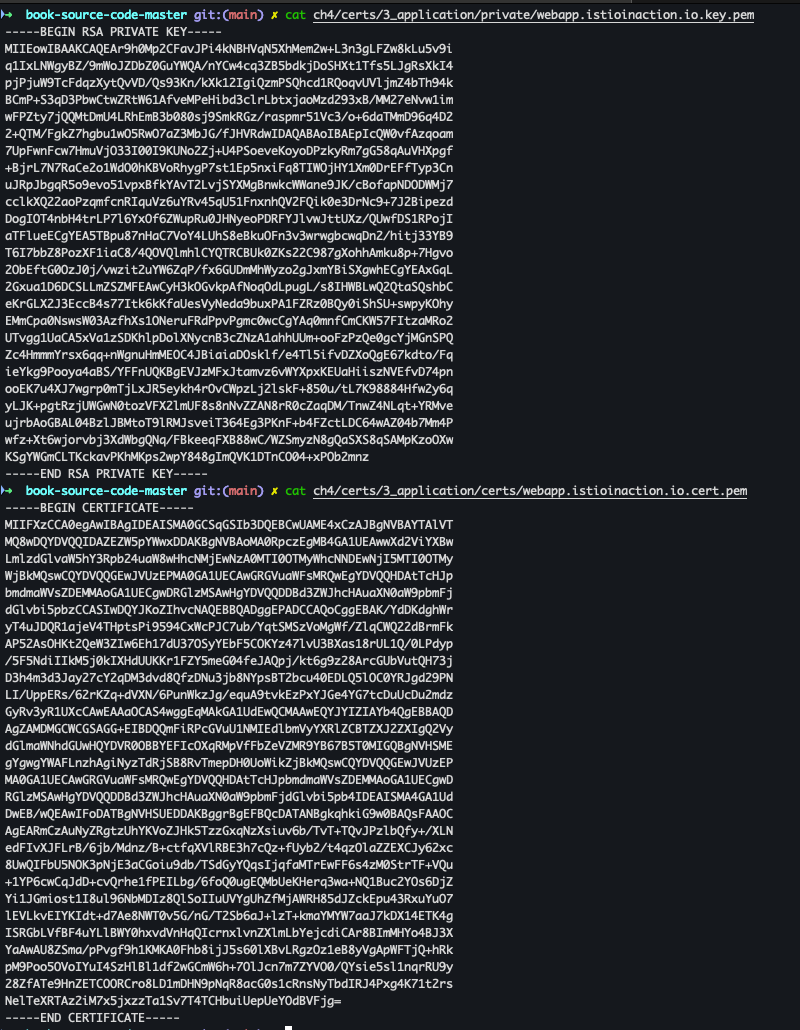

cat ch4/certs/3_application/private/webapp.istioinaction.io.key.pem # private key
cat ch4/certs/3_application/certs/webapp.istioinaction.io.cert.pem # certificate

- Create the webapp-credential secret

kubectl create -n istio-system secret tls webapp-credential \
  --key ch4/certs/3_application/private/webapp.istioinaction.io.key.pem \
  --cert ch4/certs/3_application/certs/webapp.istioinaction.io.cert.pem

- Verify that it was created

kubectl view-secret -n istio-system webapp-credential --all

- Apply the Istio Gateway resource

cat ch4/coolstore-gw-tls.yaml
kubectl apply -f ch4/coolstore-gw-tls.yaml -n istioinaction
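Here is a sketch of what the TLS-enabled Gateway likely contains (names assumed). A second server entry on port 443 terminates TLS with the webapp-credential secret created above. credentialName refers to a Secret in the namespace where the gateway pods run, which is why the secret was created in istio-system.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: coolstore-gateway               # assumed name
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "webapp.istioinaction.io"
  - port:
      number: 443
      name: https
      protocol: HTTPS
    tls:
      mode: SIMPLE                      # one-way TLS: only the server presents a certificate
      credentialName: webapp-credential # Secret in the gateway's namespace (istio-system)
    hosts:
    - "webapp.istioinaction.io"
```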

- Verify

docker exec -it myk8s-control-plane istioctl proxy-config secret deploy/istio-ingressgateway.istio-system

- Test the call

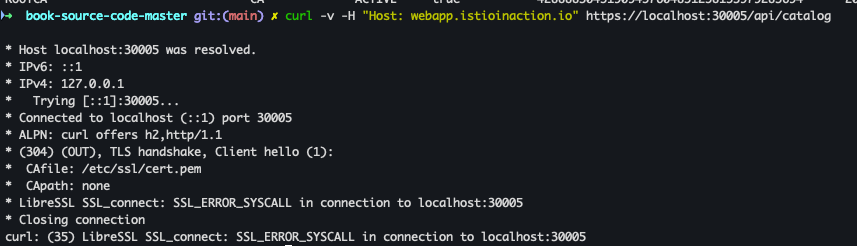

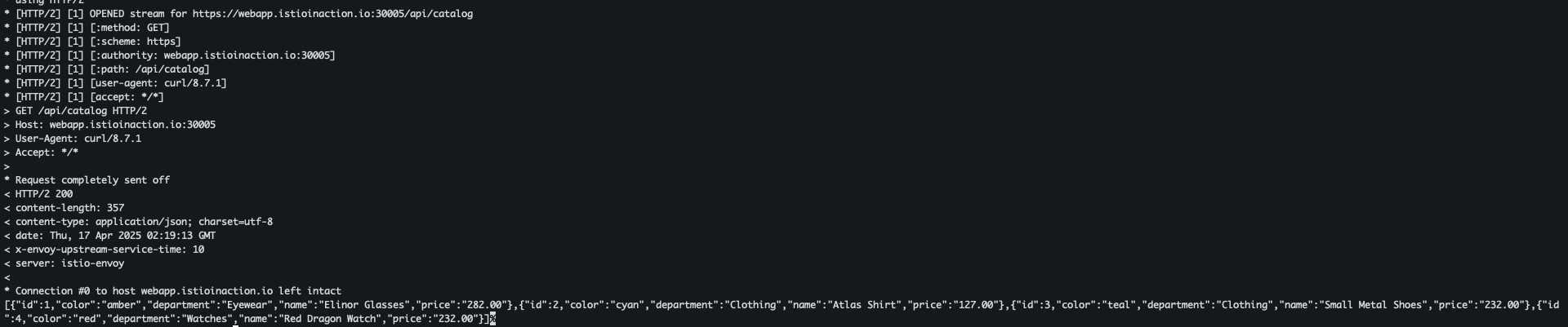

curl -v -H "Host: webapp.istioinaction.io" https://localhost:30005/api/catalog

- The call fails because curl cannot verify the server's certificate against its default CA certificates → the private CA certificate is needed.

- Use the private CA certificate

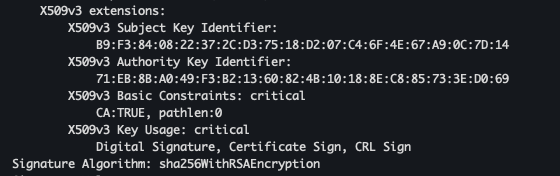

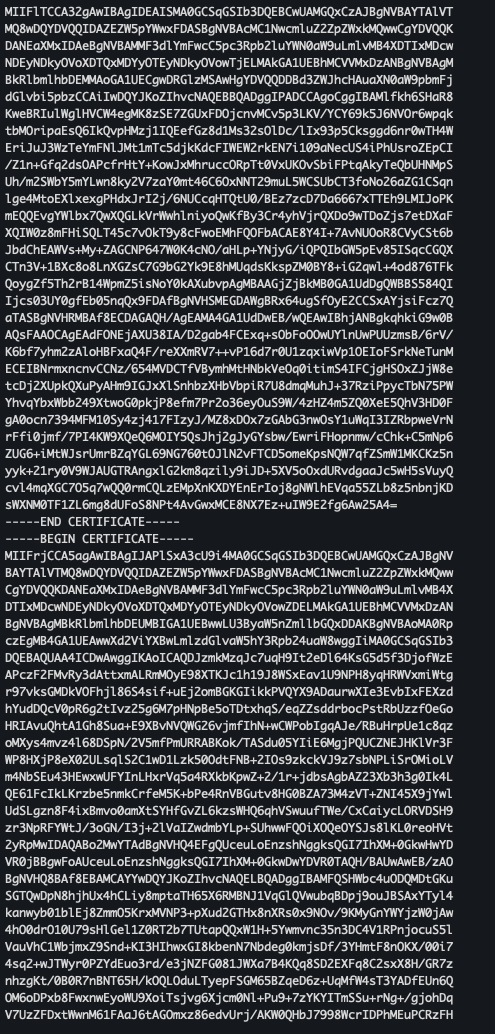

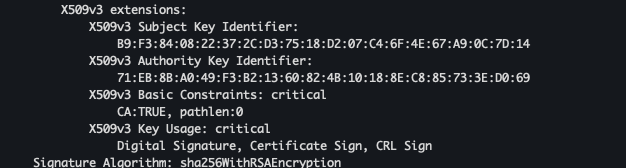

cat ch4/certs/2_intermediate/certs/ca-chain.cert.pem
openssl x509 -in ch4/certs/2_intermediate/certs/ca-chain.cert.pem -noout -text

- Call test 2

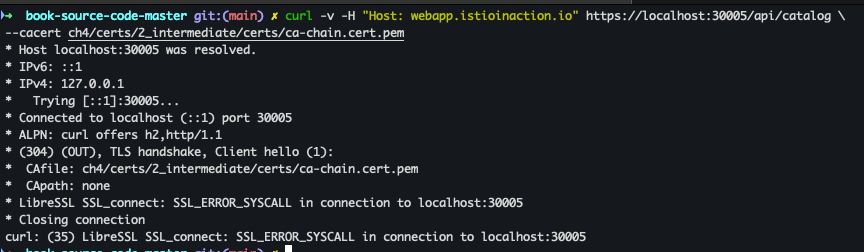

curl -v -H "Host: webapp.istioinaction.io" https://localhost:30005/api/catalog \
  --cacert ch4/certs/2_intermediate/certs/ca-chain.cert.pem

- Verification still fails → the server certificate was issued for the domain "webapp.istioinaction.io", but we called localhost.

- Temporarily map the domain name locally

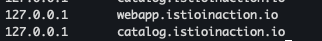

echo "127.0.0.1 webapp.istioinaction.io" | sudo tee -a /etc/hosts
cat /etc/hosts | tail -n 1

- Call test 3: the connection succeeds

curl -v https://webapp.istioinaction.io:30005/api/catalog \
  --cacert ch4/certs/2_intermediate/certs/ca-chain.cert.pem

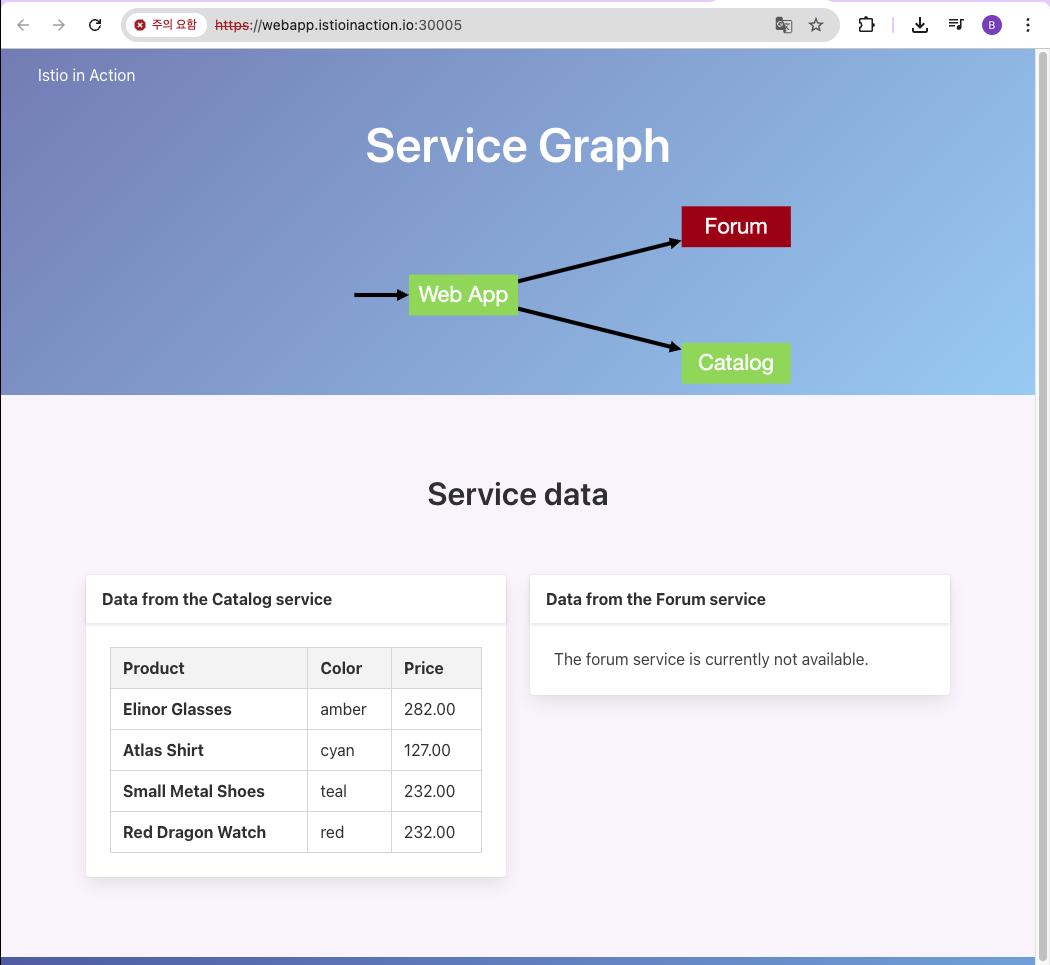

- Open the site

open https://webapp.istioinaction.io:30005

- Because the CA is not publicly trusted, the browser shows a warning like the one below

- Check access over HTTP

open http://webapp.istioinaction.io:30000

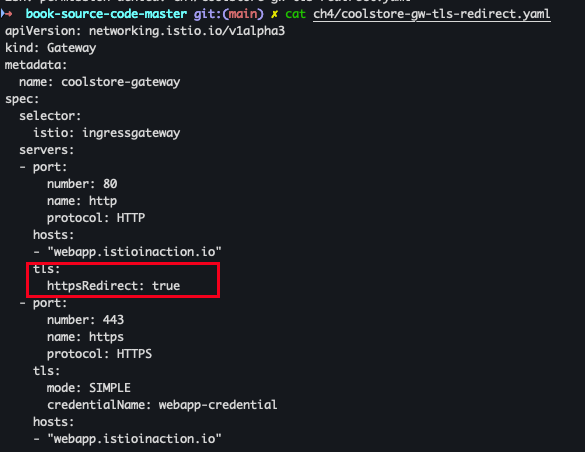

HTTP redirect to HTTPS

- Inspect the file

cat ch4/coolstore-gw-tls-redirect.yaml
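Here is a sketch of the relevant part of such a Gateway; the file's exact contents are an assumption. Setting httpsRedirect on the port-80 server makes the gateway answer plain HTTP requests with a redirect to HTTPS instead of routing them.

```yaml
# Fragment of the Gateway spec: only the port-80 server changes
servers:
- port:
    number: 80
    name: http
    protocol: HTTP
  hosts:
  - "webapp.istioinaction.io"
  tls:
    httpsRedirect: true   # reply to plain HTTP with a 301 redirect to HTTPS
```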

- Apply the configuration

kubectl apply -f ch4/coolstore-gw-tls-redirect.yaml -n istioinaction

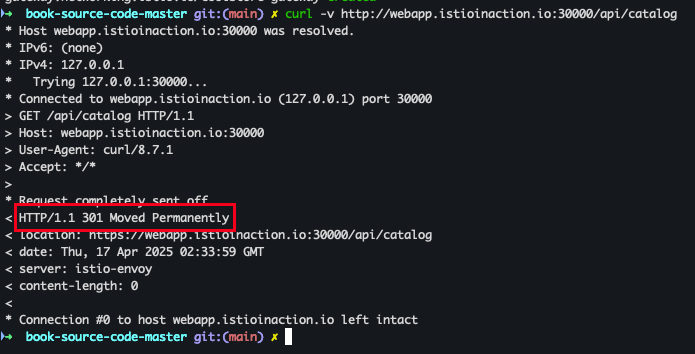

- Test (the gateway should answer with a 301 redirect to HTTPS)

curl -v http://webapp.istioinaction.io:30000/api/catalog

HTTP traffic with mutual TLS

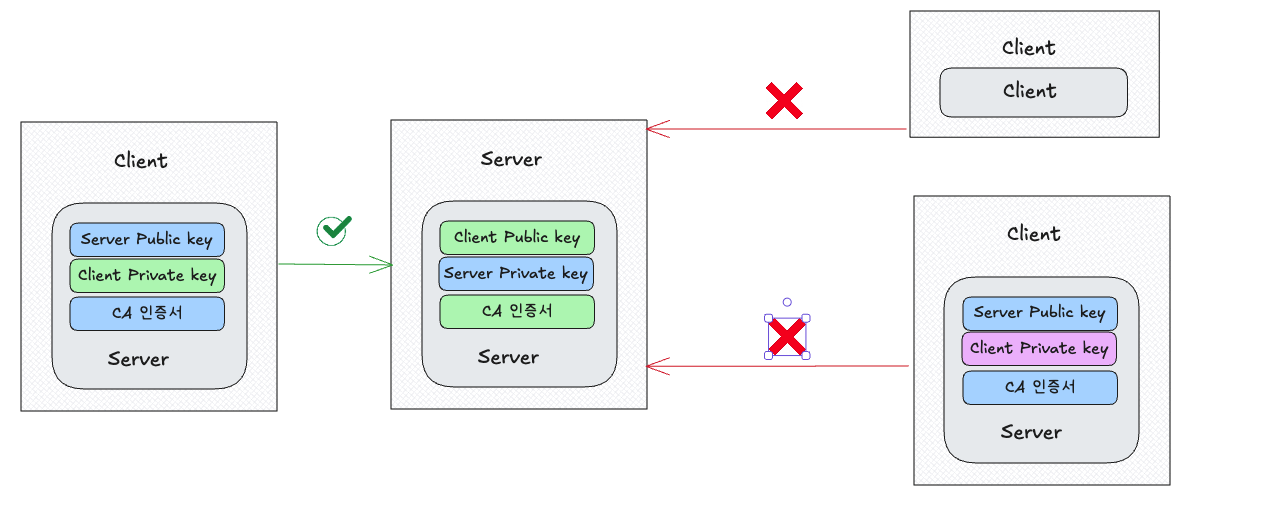

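Concepts

- In regular TLS, only the server presents a certificate and the client verifies it (one-way authentication).

- In security-sensitive environments, the client must be authenticated too. For this, mTLS (mutual TLS) is used, where the server also verifies a certificate presented by the client.

- With mTLS:
  - The client presents its own certificate to the server.
  - The server checks that the certificate was signed by a trusted CA.
  - Client and server have authenticated each other, and traffic is then exchanged over an encrypted channel.

Hands-on

- Inspect the certificate files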

cat ch4/certs/3_application/private/webapp.istioinaction.io.key.pem
cat ch4/certs/3_application/certs/webapp.istioinaction.io.cert.pem
cat ch4/certs/2_intermediate/certs/ca-chain.cert.pem
openssl x509 -in ch4/certs/2_intermediate/certs/ca-chain.cert.pem -noout -text

- Create the secret

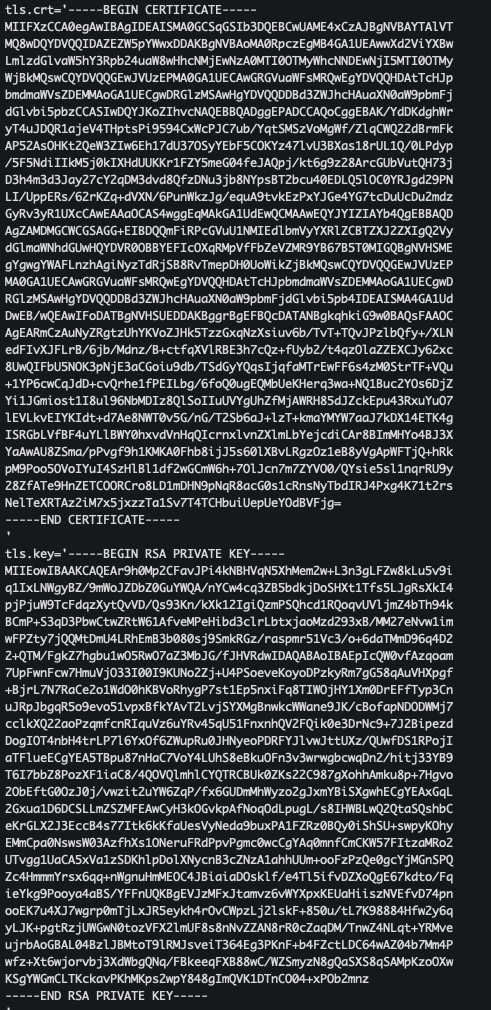

kubectl create -n istio-system secret generic webapp-credential-mtls \
  --from-file=tls.key=ch4/certs/3_application/private/webapp.istioinaction.io.key.pem \
  --from-file=tls.crt=ch4/certs/3_application/certs/webapp.istioinaction.io.cert.pem \
  --from-file=ca.crt=ch4/certs/2_intermediate/certs/ca-chain.cert.pem

- Verify that it was created

kubectl view-secret -n istio-system webapp-credential-mtls --all

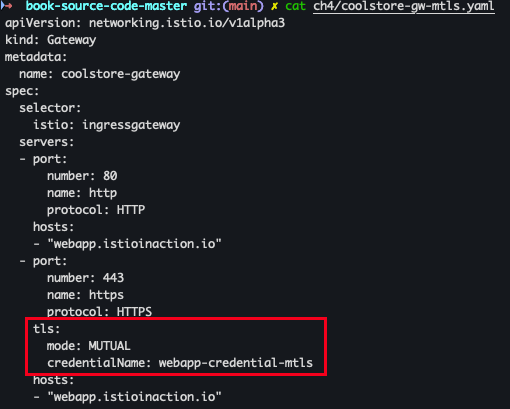

- Update the Istio Gateway resource to configure mTLS

cat ch4/coolstore-gw-mtls.yaml
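Here is a sketch of the relevant server entry; the file's exact contents are an assumption. The TLS mode changes from SIMPLE to MUTUAL, and credentialName points at the generic secret created above. The gateway uses that secret's ca.crt key to verify client certificates.

```yaml
# Fragment of the Gateway spec: the HTTPS server switched to mutual TLS
servers:
- port:
    number: 443
    name: https
    protocol: HTTPS
  tls:
    mode: MUTUAL                            # the client must present a certificate as well
    credentialName: webapp-credential-mtls  # tls.crt, tls.key and ca.crt (for verifying clients)
  hosts:
  - "webapp.istioinaction.io"
```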

- Apply

kubectl apply -f ch4/coolstore-gw-mtls.yaml -n istioinaction

- Check the logs

kubectl stern -n istio-system -l app=istiod

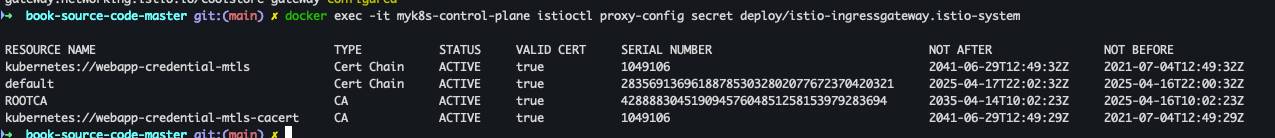

- Check the secrets

docker exec -it myk8s-control-plane istioctl proxy-config secret deploy/istio-ingressgateway.istio-system

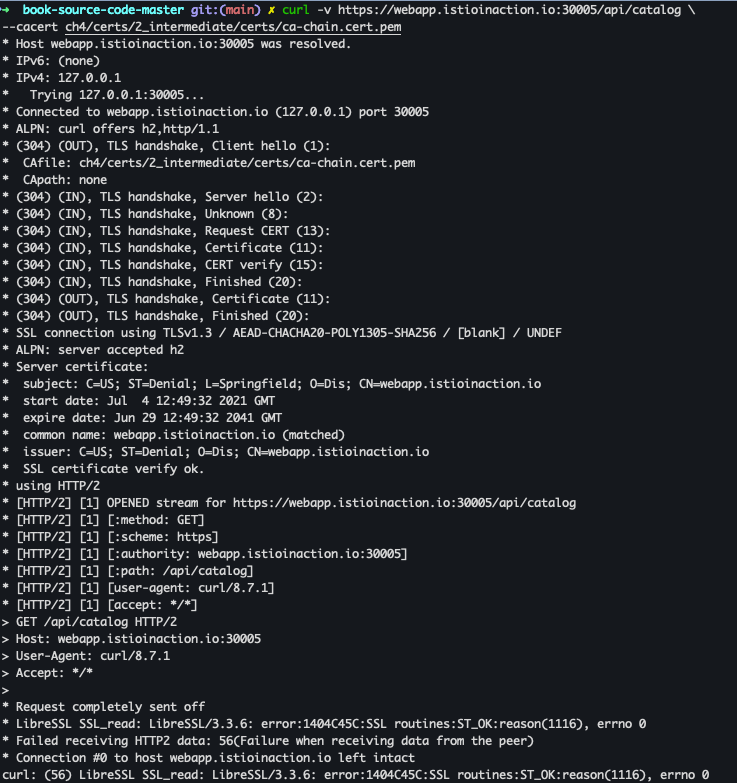

- Call test 1

curl -v https://webapp.istioinaction.io:30005/api/catalog \
  --cacert ch4/certs/2_intermediate/certs/ca-chain.cert.pem

- The SSL handshake fails and the request is rejected, because the client did not present a certificate

- Call test 2: add the client certificate and key

curl -v https://webapp.istioinaction.io:30005/api/catalog \
  --cacert ch4/certs/2_intermediate/certs/ca-chain.cert.pem \
  --cert ch4/certs/4_client/certs/webapp.istioinaction.io.cert.pem \
  --key ch4/certs/4_client/private/webapp.istioinaction.io.key.pem

- The response is 200 OK

Serving multiple virtual hosts with TLS

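Concepts

- The Istio ingress gateway can serve multiple virtual hosts, each with its own certificate and private key, on the same HTTPS port (443).

- To do this, add multiple server entries for the same port and protocol.

- For example, you can add both webapp.istioinaction.io and catalog.istioinaction.io, each with its own certificate and key.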
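Here is a sketch of the two server entries (port names assumed). Both use port 443. During the TLS handshake, the gateway uses SNI to select the server entry, and therefore the certificate, that matches the requested host. catalog-credential is the secret created in the hands-on steps below.

```yaml
# Fragment of the Gateway spec: two HTTPS virtual hosts on the same port
servers:
- port:
    number: 443
    name: https-webapp               # assumed port name
    protocol: HTTPS
  tls:
    mode: SIMPLE
    credentialName: webapp-credential
  hosts:
  - "webapp.istioinaction.io"
- port:
    number: 443
    name: https-catalog              # assumed port name
    protocol: HTTPS
  tls:
    mode: SIMPLE
    credentialName: catalog-credential
  hosts:
  - "catalog.istioinaction.io"
```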

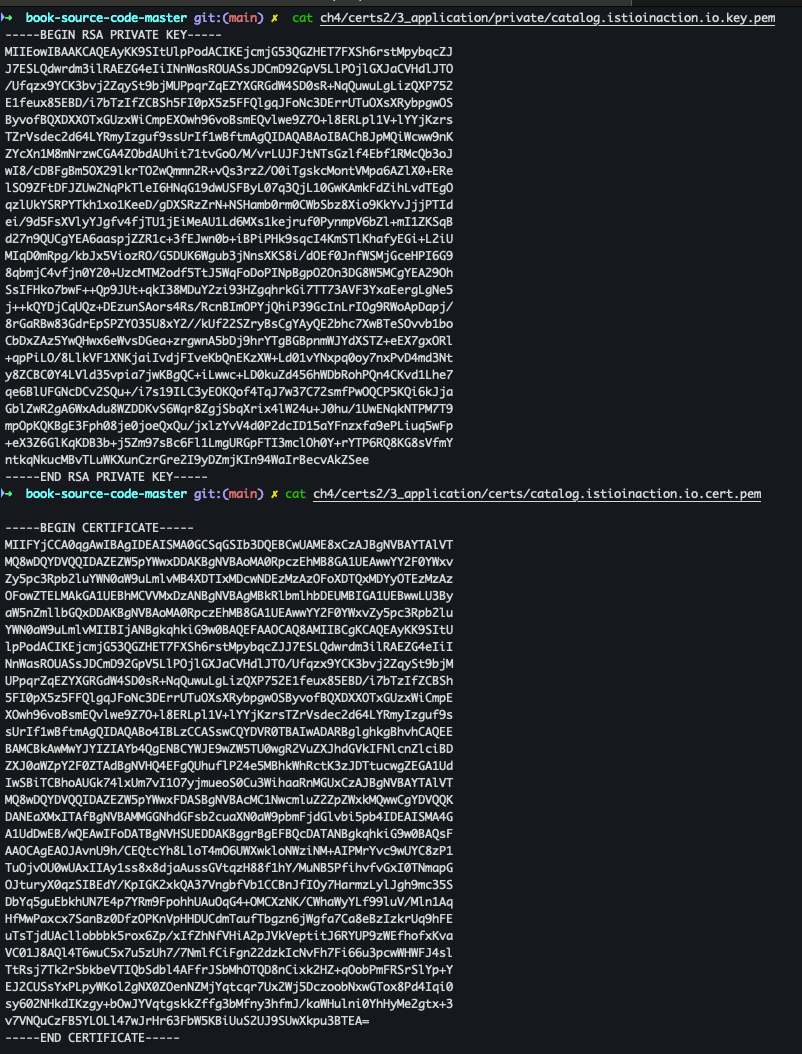

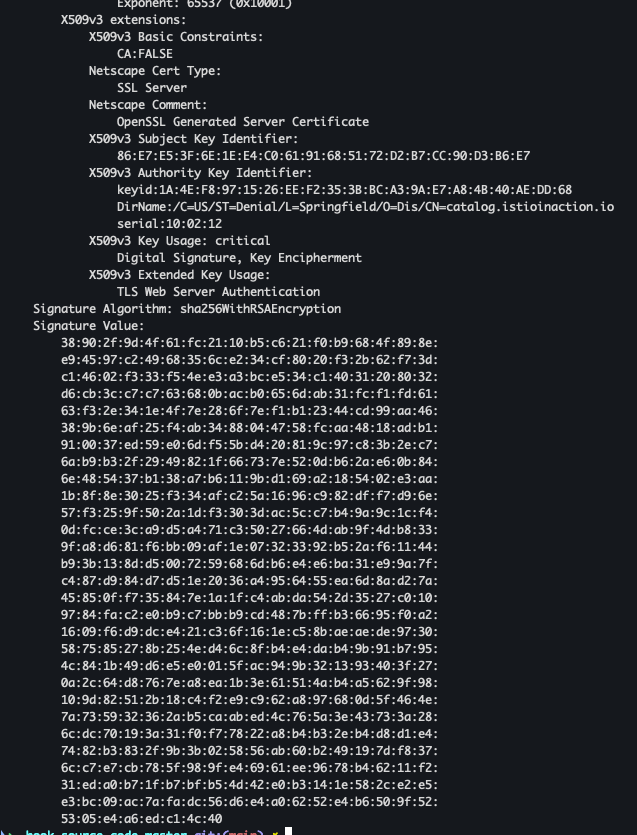

Hands-on

- Inspect the certificates

cat ch4/certs2/3_application/private/catalog.istioinaction.io.key.pem
cat ch4/certs2/3_application/certs/catalog.istioinaction.io.cert.pem
openssl x509 -in ch4/certs2/3_application/certs/catalog.istioinaction.io.cert.pem -noout -text

- Create the secret

kubectl create -n istio-system secret tls catalog-credential \
  --key ch4/certs2/3_application/private/catalog.istioinaction.io.key.pem \
  --cert ch4/certs2/3_application/certs/catalog.istioinaction.io.cert.pem

- Update the gateway configuration

kubectl apply -f ch4/coolstore-gw-multi-tls.yaml -n istioinaction

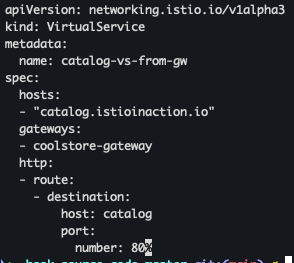

- Create a VirtualService for the catalog service exposed through the Gateway

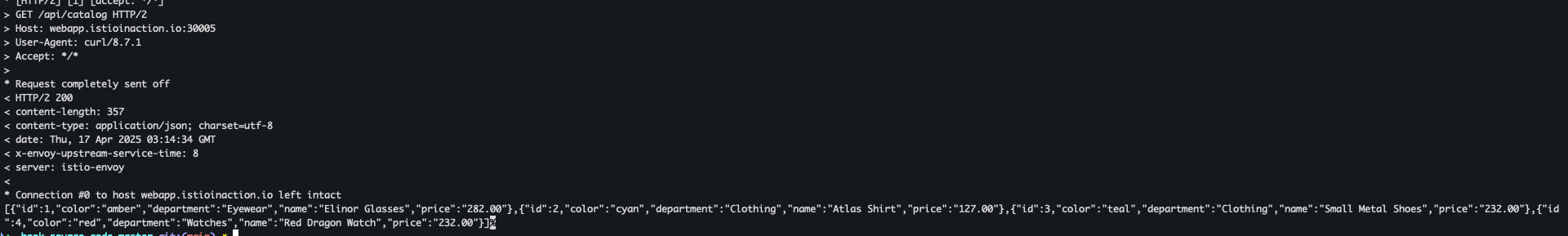

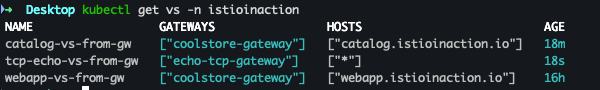

cat ch4/catalog-vs.yaml
kubectl apply -f ch4/catalog-vs.yaml -n istioinaction
kubectl get gw,vs -n istioinaction

- Temporarily map the domain name locally

echo "127.0.0.1 catalog.istioinaction.io" | sudo tee -a /etc/hosts
cat /etc/hosts | tail -n 2

- Call test 1: webapp.istioinaction.io

curl -v https://webapp.istioinaction.io:30005/api/catalog \
  --cacert ch4/certs/2_intermediate/certs/ca-chain.cert.pem

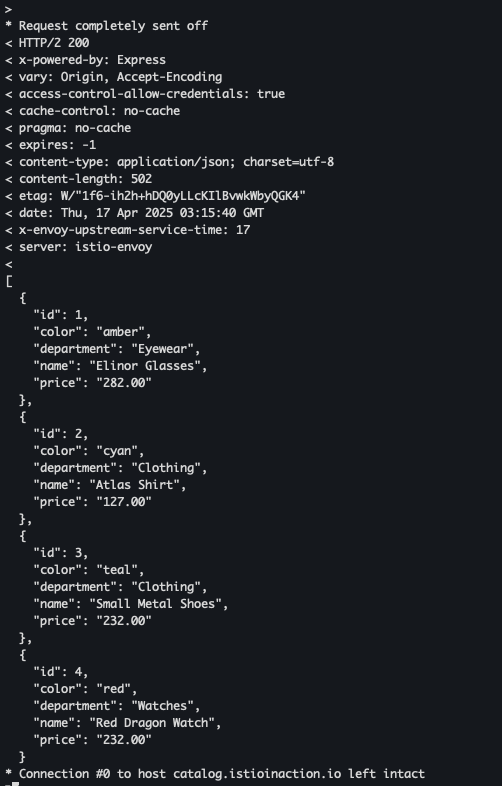

- Call test 2: catalog.istioinaction.io

curl -v https://catalog.istioinaction.io:30005/items \
  --cacert ch4/certs2/2_intermediate/certs/ca-chain.cert.pem

4) TCP traffic

Exposing TCP ports on an Istio gateway

- Deploy a TCP-based service in the service mesh

kubectl apply -f ch4/echo.yaml -n istioinaction

- Verify the service

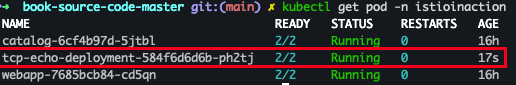

kubectl get pod -n istioinaction

- Add a TCP serving port to the istio-ingressgateway service

KUBE_EDITOR="vi" kubectl edit svc istio-ingressgateway -n istio-system
# Add the following entry under spec.ports:
- name: tcp
  nodePort: 30006
  port: 31400
  protocol: TCP
  targetPort: 31400

- Verify the port

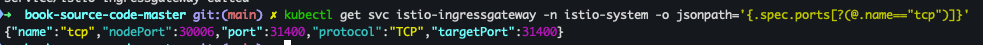

kubectl get svc istio-ingressgateway -n istio-system -o jsonpath='{.spec.ports[?(@.name=="tcp")]}'

- Create the Gateway

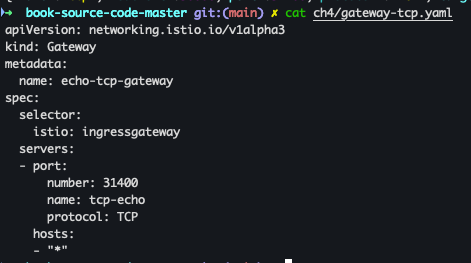

cat ch4/gateway-tcp.yaml
kubectl apply -f ch4/gateway-tcp.yaml -n istioinaction
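Here is a sketch of a TCP Gateway (the name is an assumption). The server entry opens port 31400, the port added to the istio-ingressgateway Service above, with protocol TCP. Plain TCP carries no Host header or SNI to match on, so hosts is set to "*".

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: echo-tcp-gateway            # assumed name
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 31400                 # port added to the istio-ingressgateway Service
      name: tcp-echo                # assumed port name
      protocol: TCP
    hosts:
    - "*"                           # no Host header or SNI to match on plain TCP
```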

- Verify the Gateway

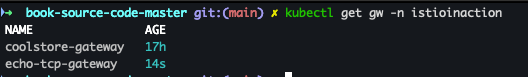

kubectl get gw -n istioinaction

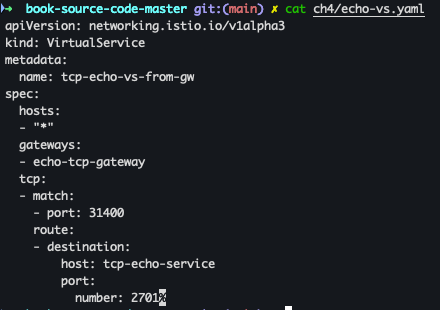

- Create the VirtualService resource

cat ch4/echo-vs.yaml
kubectl apply -f ch4/echo-vs.yaml -n istioinaction
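Here is a sketch of the matching VirtualService. All names are assumptions: the Gateway name echo-tcp-gateway, the echo Service name tcp-echo-service, and its port 2701 from ch4/echo.yaml. The tcp section matches connections arriving on port 31400 and forwards them to the echo service.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: tcp-echo-vs-from-gw          # assumed name
spec:
  hosts:
  - "*"
  gateways:
  - echo-tcp-gateway                 # must match the Gateway's metadata.name (assumed)
  tcp:
  - match:
    - port: 31400                    # connections arriving on the gateway's TCP port
    route:
    - destination:
        host: tcp-echo-service       # assumed Service name
        port:
          number: 2701               # assumed Service port
```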

- Verify the VirtualService

kubectl get vs -n istioinaction

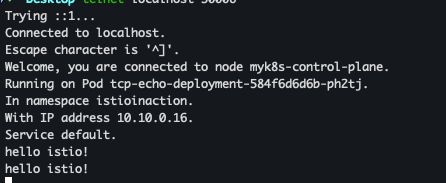

- Test with telnet

telnet localhost 30006