ERROR: Could not build wheels for llama-cpp-python, hnswlib, lxml, which is required to install pyproject.toml-based project: how to fix it

Error details

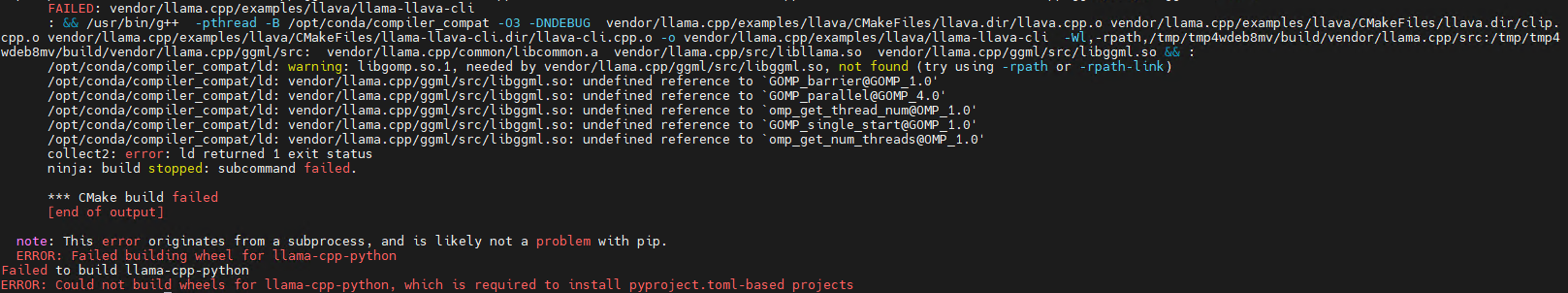

FAILED: vendor/llama.cpp/examples/llava/llama-llava-cli

: && /usr/bin/g++ -pthread -B /opt/conda/compiler_compat -O3 -DNDEBUG vendor/llama.cpp/examples/llava/CMakeFiles/llava.dir/llava.cpp.o vendor/llama.cpp/examples/llava/CMakeFiles/llava.dir/clip.cpp.o vendor/llama.cpp/examples/llava/CMakeFiles/llama-llava-cli.dir/llava-cli.cpp.o -o vendor/llama.cpp/examples/llava/llama-llava-cli -Wl,-rpath,/tmp/tmp4wdeb8mv/build/vendor/llama.cpp/src:/tmp/tmp4wdeb8mv/build/vendor/llama.cpp/ggml/src: vendor/llama.cpp/common/libcommon.a vendor/llama.cpp/src/libllama.so vendor/llama.cpp/ggml/src/libggml.so && :

/opt/conda/compiler_compat/ld: warning: libgomp.so.1, needed by vendor/llama.cpp/ggml/src/libggml.so, not found (try using -rpath or -rpath-link)

/opt/conda/compiler_compat/ld: vendor/llama.cpp/ggml/src/libggml.so: undefined reference to `GOMP_barrier@GOMP_1.0'

/opt/conda/compiler_compat/ld: vendor/llama.cpp/ggml/src/libggml.so: undefined reference to `GOMP_parallel@GOMP_4.0'

/opt/conda/compiler_compat/ld: vendor/llama.cpp/ggml/src/libggml.so: undefined reference to `omp_get_thread_num@OMP_1.0'

/opt/conda/compiler_compat/ld: vendor/llama.cpp/ggml/src/libggml.so: undefined reference to `GOMP_single_start@GOMP_1.0'

/opt/conda/compiler_compat/ld: vendor/llama.cpp/ggml/src/libggml.so: undefined reference to `omp_get_num_threads@OMP_1.0'

collect2: error: ld returned 1 exit status

ninja: build stopped: subcommand failed.

*** CMake build failed

[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.

ERROR: Failed building wheel for llama-cpp-python

Failed to build llama-cpp-python

ERROR: Could not build wheels for llama-cpp-python, which is required to install pyproject.toml-based projects

Solution

export PATH="/usr/local/cuda-12.1/bin:$PATH"

export LD_LIBRARY_PATH="/usr/local/cuda-12.1/lib64:$LD_LIBRARY_PATH"

source ~/.bashrc

CMAKE_ARGS="-DLLAMA_CUDA=on" FORCE_CMAKE=1 pip install llama-cpp-python --force-reinstall --upgrade --no-cache-dir --verbose
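
The environment setup above can also be sketched as a small pre-check to run before the pip command. This is only an illustration under stated assumptions: `prepend_path` is a hypothetical helper, `CUDA_HOME` is a variable name chosen here for the same `/usr/local/cuda-12.1` prefix used above, and the `libgomp` lookup is an added diagnostic (the linker warning in the log mentions `libgomp.so.1`), not part of the original fix.

```shell
# Hypothetical helper: prepend a directory to a colon-separated path value.
# It avoids a trailing colon when the current value is empty, because an
# empty entry in LD_LIBRARY_PATH is treated as the current directory.
prepend_path() {
    # $1 = directory to add, $2 = current value (may be empty)
    if [ -z "$2" ]; then
        printf '%s\n' "$1"
    else
        printf '%s:%s\n' "$1" "$2"
    fi
}

# Assumption: CUDA 12.1 is installed under the same prefix as in the commands above.
CUDA_HOME="/usr/local/cuda-12.1"
export PATH="$(prepend_path "$CUDA_HOME/bin" "$PATH")"
export LD_LIBRARY_PATH="$(prepend_path "$CUDA_HOME/lib64" "${LD_LIBRARY_PATH:-}")"

# Confirm the CUDA compiler is visible before starting the long build.
if command -v nvcc >/dev/null 2>&1; then
    nvcc --version
else
    echo "nvcc not found; check that $CUDA_HOME/bin exists" >&2
fi

# Added diagnostic: see whether the system linker cache knows about libgomp,
# the library the failing link step reported as missing.
if command -v ldconfig >/dev/null 2>&1; then
    ldconfig -p | grep libgomp || echo "libgomp not listed by ldconfig" >&2
fi
```

If `nvcc --version` prints the expected toolkit version, the `pip install` command above can be run in the same shell session so that it inherits these variables.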