📒 PyTorch Basic Tensor Manipulation

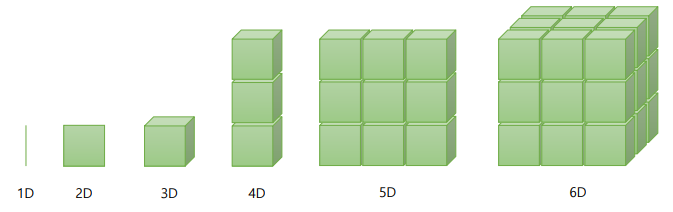

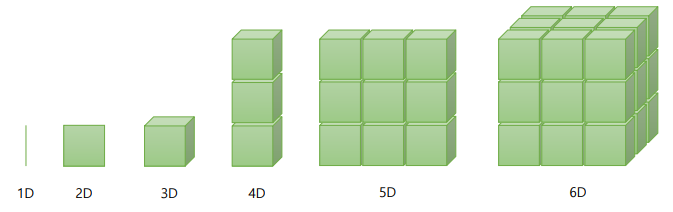

📝 Vector, Matrix and Tensor

- 1D: Vector, 2D: Matrix, 3D: Tensor; nD extends the tensor to more dimensions.

✏️ Tensor size

- 2D Tensor: |t| = (batch size, dim)

- 3D Tensor: |t| = (batch size, width, height) in vision, or (batch size, length, dim) in NLP
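To make the shape notation concrete, here is a minimal sketch that allocates zero-filled tensors with these layouts. The sizes (batch of 4, width and height of 32, length of 10, dim of 8) and the variable names are arbitrary, chosen only for illustration.

```python
import torch

# Illustrative sizes only; real models choose their own values.
batch_size, dim = 4, 8
width, height = 32, 32   # vision-style 3D tensor
length = 10              # NLP-style: sequence length

t2 = torch.zeros(batch_size, dim)                  # |t| = (batch size, dim)
t_vision = torch.zeros(batch_size, width, height)  # |t| = (batch size, width, height)
t_nlp = torch.zeros(batch_size, length, dim)       # |t| = (batch size, length, dim)

for name, t in [("2D", t2), ("vision 3D", t_vision), ("NLP 3D", t_nlp)]:
    print(name, t.ndim, tuple(t.shape))
```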

📝 NumPy Review

import numpy as np

>>> t = np.array([0., 1., 2., 3., 4., 5., 6.])

>>> print(t)

[0. 1. 2. 3. 4. 5. 6.]

>>> print(t.ndim)

1

>>> print(t.shape)

(7,)

>>> print(t[0], t[1], t[-1])

0.0 1.0 6.0

>>> print(t[2:5], t[4:-1])

[2. 3. 4.] [4. 5.]

>>> print(t[:2], t[3:])

[0. 1.] [3. 4. 5. 6.]

>>> t = np.array([[1., 2., 3.], [4., 5., 6.], [7., 8., 9.], [10., 11., 12.]])

>>> print(t)

[[1. 2. 3.]

[4. 5. 6.]

[7. 8. 9.]

[10. 11. 12.]]

>>> print(t.ndim)

2

>>> print(t.shape)

(4, 3)

📝 PyTorch Tensor Allocation

import torch

>>> t = torch.FloatTensor([0., 1., 2., 3., 4., 5., 6.])

>>> print(t)

tensor([0., 1., 2., 3., 4., 5., 6.])

>>> print(t.ndim)

1

>>> print(t.shape)

torch.Size([7])

>>> print(t.size())

torch.Size([7])

>>> print(t[0], t[1], t[-1])

tensor(0.) tensor(1.) tensor(6.)

>>> print(t[2:5], t[4:-1])

tensor([2., 3., 4.]) tensor([4., 5.])

>>> print(t[:2], t[3:])

tensor([0., 1.]) tensor([3., 4., 5., 6.])

>>> t = torch.FloatTensor([[1., 2., 3.], [4., 5., 6.], [7., 8., 9.], [10., 11., 12.]])

>>> print(t)

tensor([[ 1.,  2.,  3.],

[ 4.,  5.,  6.],

[ 7.,  8.,  9.],

[10., 11., 12.]])

>>> print(t.ndim)

2

>>> print(t.size())

torch.Size([4, 3])

>>> print(t[:, 1])

tensor([2., 5., 8., 11.])

>>> print(t[:, 1].size())

torch.Size([4])
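Indexing a dimension with a single integer removes that dimension, which is why `t[:, 1]` has size `[4]`. A small sketch (variable names are illustrative) showing that a length-1 slice keeps the dimension instead:

```python
import torch

t = torch.FloatTensor([[1., 2., 3.], [4., 5., 6.], [7., 8., 9.], [10., 11., 12.]])

col_int = t[:, 1]      # integer index drops dim 1 -> size [4]
col_slice = t[:, 1:2]  # length-1 slice keeps dim 1 -> size [4, 1]

print(col_int.size())    # torch.Size([4])
print(col_slice.size())  # torch.Size([4, 1])
print(torch.equal(col_slice.squeeze(1), col_int))  # True: same values
```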

📝 Matrix Multiplication

- PyTorch supports broadcasting automatically, so element-wise operations work on operands with different but compatible shapes.

- Tensor types include LongTensor, FloatTensor, and ByteTensor, and type conversion is supported through methods such as .float().
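A minimal sketch of those tensor types and conversions, checking the resulting `dtype` of each. The values are arbitrary; `.to(torch.float32)` is shown as an equivalent of `.float()`.

```python
import torch

lt = torch.LongTensor([1, 2, 3, 4])   # int64
ft = lt.float()                       # converted copy, float32
bt = torch.ByteTensor([1, 0, 0, 1])   # uint8
bl = bt.long()                        # uint8 -> int64

print(lt.dtype, ft.dtype, bt.dtype, bl.dtype)
# torch.int64 torch.float32 torch.uint8 torch.int64
print(ft)                             # tensor([1., 2., 3., 4.])
print(lt.to(torch.float32).dtype)     # torch.float32: same as .float()
```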

import torch

>>> m1 = torch.FloatTensor([[3, 3]])

>>> m2 = torch.FloatTensor([[2, 2]])

>>> print(m1 + m2)

tensor([[5., 5.]])

>>> m1 = torch.FloatTensor([[1, 2]])

>>> m2 = torch.FloatTensor([3])

>>> print(m1 + m2)

tensor([[4., 5.]])

>>> m1 = torch.FloatTensor([[1, 2]])

>>> m2 = torch.FloatTensor([[3], [4]])

>>> print(m1 + m2)

tensor([[4., 5.],

[5., 6.]])
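The results above follow NumPy-style broadcasting: shapes are compared from the last dimension backwards, and each pair of sizes must be equal or one of them must be 1 (missing leading dimensions count as 1). A sketch checking the resulting shapes, plus a pair of shapes that cannot be broadcast:

```python
import torch

m1 = torch.FloatTensor([[1, 2]])      # shape (1, 2)
m2 = torch.FloatTensor([[3], [4]])    # shape (2, 1)
print((m1 + m2).shape)                # torch.Size([2, 2]): both size-1 dims are stretched

v = torch.FloatTensor([3])            # shape (1,), treated like (1, 1) here
print((m1 + v).shape)                 # torch.Size([1, 2])

# Shapes (2,) and (3,) are incompatible: the sizes differ and neither is 1.
failed = False
try:
    torch.FloatTensor([1, 2]) + torch.FloatTensor([1, 2, 3])
except RuntimeError as err:
    failed = True
    print("RuntimeError:", err)
```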

- Matrix multiplication is done with matmul(). This differs from mul(), which multiplies element by element (with broadcasting).

>>> m1 = torch.FloatTensor([[1, 2], [3, 4]])

>>> m2 = torch.FloatTensor([[1],[2]])

>>> print(m1.matmul(m2))

tensor([[5.],

[11.]])

>>> print(m1.mul(m2))

tensor([[1., 2.],

[6., 8.]])
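To contrast the two operations, here is a short sketch comparing result shapes. It also shows that the `@` operator is equivalent to `matmul`, and that the trailing-underscore variant `mul_` works in place (applied to a clone here so the original stays intact):

```python
import torch

m1 = torch.FloatTensor([[1, 2], [3, 4]])  # (2, 2)
m2 = torch.FloatTensor([[1], [2]])        # (2, 1)

prod = m1.matmul(m2)   # (2, 2) @ (2, 1) -> (2, 1): row-by-column dot products
same = m1 @ m2         # the @ operator performs the same matrix product
elem = m1.mul(m2)      # element-wise; m2 is broadcast to (2, 2), m1 is unchanged
print(prod.shape, elem.shape)    # torch.Size([2, 1]) torch.Size([2, 2])
print(torch.equal(prod, same))   # True

m1_copy = m1.clone()
m1_copy.mul_(m2)       # trailing underscore: in-place, overwrites m1_copy
print(torch.equal(m1_copy, elem))  # True
```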

📝 Other Basic Ops

>>> t = torch.FloatTensor([1, 2])

>>> print(t.mean())

tensor(1.5000)

>>> t = torch.FloatTensor([[1, 2], [3, 4]])

>>> print(t.mean())

tensor(2.5000)

>>> print(t.mean(dim=0))

tensor([2., 3.])

>>> print(t.mean(dim=1))

tensor([1.5000, 3.5000])

>>> print(t.sum())

tensor(10.)

>>> print(t.sum(dim=0))

tensor([4., 6.])

>>> print(t.max())

tensor(4.)

>>> print(t.max(dim=0))

torch.return_types.max(

values=tensor([3., 4.]),

indices=tensor([1, 1]))
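The `dim` argument names the dimension that gets reduced away. For a (2, 2) tensor, `dim=0` collapses the rows, giving one result per column, and `dim=1` collapses the columns. The sketch below also uses `keepdim=True` to keep the reduced dimension with size 1, and unpacks the (values, indices) pair that `max(dim=...)` returns:

```python
import torch

t = torch.FloatTensor([[1, 2], [3, 4]])

# dim picks the dimension that is reduced away.
print(t.sum(dim=0))                      # tensor([4., 6.]): column sums
print(t.sum(dim=1))                      # tensor([3., 7.]): row sums
print(t.mean(dim=-1))                    # tensor([1.5000, 3.5000]): -1 is the last dim
print(t.sum(dim=1, keepdim=True).shape)  # torch.Size([2, 1]): reduced dim kept as size 1

# max(dim=...) returns a (values, indices) pair that can be unpacked.
values, indices = t.max(dim=0)
print(values)               # tensor([3., 4.])
print(indices)              # tensor([1, 1]): row 1 holds each column's maximum
print(t.argmax(dim=0))      # tensor([1, 1]): indices only
print(t.max(dim=1).values)  # tensor([2., 4.]): access by field name
```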

📒 Tensor Utilization

📝 View (Reshape)

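- Returns the same data with a new shape, much like NumPy's reshape. One difference: view never copies, so the result shares memory with the original tensor and the new shape must be compatible with its memory layout.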

>>> t = np.array([[[0, 1, 2],

... [3, 4, 5]],

... [[6, 7, 8],

... [9, 10, 11]]])

>>> ft = torch.FloatTensor(t)

>>> print(ft.shape)

torch.Size([2, 2, 3])

>>> print(ft.view([-1, 3]))

tensor([[0., 1., 2.],

[3., 4., 5.],

[6., 7., 8.],

[9., 10., 11.]])

>>> print(ft.view([-1, 3]).shape)

torch.Size([4, 3])
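A sketch of two properties of `view` worth knowing: the `-1` entry is inferred from the total number of elements, and the result shares storage with the source. It also shows a non-contiguous tensor (from `transpose`) where `reshape`, which copies when needed, succeeds; calling `view(-1, 3)` on that tensor would raise a RuntimeError instead. The tensor is rebuilt with `torch.arange` so the block is self-contained.

```python
import torch

ft = torch.arange(12, dtype=torch.float32).view(2, 2, 3)  # same values as above

v = ft.view(-1, 3)              # -1 is inferred: 12 / 3 = 4 rows
print(v.shape)                  # torch.Size([4, 3])
print(ft.view(-1, 1, 3).shape)  # torch.Size([4, 1, 3])

v[0, 0] = 100.                  # v shares storage with ft ...
print(ft[0, 0, 0])              # tensor(100.): ... so ft sees the change

tt = ft.transpose(0, 1)         # transposing makes the tensor non-contiguous
print(tt.is_contiguous())       # False
r = tt.reshape(-1, 3)           # reshape copies when it must; tt.view(-1, 3) would raise
print(r.shape)                  # torch.Size([4, 3])
```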

📝 Squeeze and Unsqueeze

>>> ft = torch.FloatTensor([[0], [1], [2]])

>>> print(ft.shape)

torch.Size([3, 1])

>>> print(ft.squeeze())

tensor([0., 1., 2.])

>>> print(ft.squeeze().shape)

torch.Size([3])

>>> print(ft.unsqueeze(1))

tensor([[0.],

[1.],

[2.]])
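`squeeze()` removes dimensions of size 1, and `unsqueeze(dim)` inserts a new size-1 dimension at position `dim`. A short sketch of the shapes each call produces, including `squeeze(dim)`, which only touches the named dimension and leaves it alone if its size is not 1:

```python
import torch

ft = torch.FloatTensor([0, 1, 2])     # shape (3,)

print(ft.unsqueeze(0).shape)          # torch.Size([1, 3])
print(ft.unsqueeze(1).shape)          # torch.Size([3, 1])
print(ft.unsqueeze(-1).shape)         # torch.Size([3, 1]): -1 appends at the end
print(ft.view(1, -1).shape)           # torch.Size([1, 3]): same as unsqueeze(0)

x = torch.zeros(1, 3, 1)
print(x.squeeze().shape)              # torch.Size([3]): every size-1 dim removed
print(x.squeeze(0).shape)             # torch.Size([3, 1]): only dim 0 removed
print(torch.zeros(2, 3).squeeze(0).shape)  # torch.Size([2, 3]): dim 0 has size 2, no change
```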

📝 Concatenate

>>> x = torch.FloatTensor([[1, 2], [3, 4]])

>>> y = torch.FloatTensor([[5, 6], [7, 8]])

>>> print(torch.cat([x, y], dim=0))

tensor([[1., 2.],

[3., 4.],

[5., 6.],

[7., 8.]])

>>> print(torch.cat([x, y], dim=1))

tensor([[1., 2., 5., 6.],

[3., 4., 7., 8.]])
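`torch.cat` joins tensors along an existing dimension, so every other dimension must match while the sizes along `dim` may differ. A sketch checking the shapes; the extra tensor `z` is illustrative:

```python
import torch

x = torch.FloatTensor([[1, 2], [3, 4]])   # (2, 2)
y = torch.FloatTensor([[5, 6], [7, 8]])   # (2, 2)
z = torch.FloatTensor([[9, 10]])          # (1, 2)

print(torch.cat([x, y], dim=0).shape)     # torch.Size([4, 2])
print(torch.cat([x, y], dim=1).shape)     # torch.Size([2, 4])
print(torch.cat([x, z], dim=0).shape)     # torch.Size([3, 2]): sizes along dim 0 may differ
# torch.cat([x, z], dim=1) would fail: dim 0 sizes (2 vs 1) do not match.
```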

📝 Stacking

>>> x = torch.FloatTensor([1, 4])

>>> y = torch.FloatTensor([2, 5])

>>> z = torch.FloatTensor([3, 6])

>>> print(torch.stack([x, y, z]))

tensor([[1., 4.],

[2., 5.],

[3., 6.]])

>>> print(torch.stack([x, y, z], dim=1))

tensor([[1., 2., 3.],

[4., 5., 6.]])
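Unlike `cat`, `stack` creates a new dimension. The sketch below checks that stacking along `dim` gives the same result as unsqueezing each input at `dim` and then concatenating along it:

```python
import torch

x = torch.FloatTensor([1, 4])
y = torch.FloatTensor([2, 5])
z = torch.FloatTensor([3, 6])

s0 = torch.stack([x, y, z])           # shape (3, 2)
c0 = torch.cat([x.unsqueeze(0), y.unsqueeze(0), z.unsqueeze(0)], dim=0)
s1 = torch.stack([x, y, z], dim=1)    # shape (2, 3)
c1 = torch.cat([x.unsqueeze(1), y.unsqueeze(1), z.unsqueeze(1)], dim=1)

print(torch.equal(s0, c0), torch.equal(s1, c1))  # True True
```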

📝 Ones and Zeros

>>> x = torch.FloatTensor([[0, 1, 2], [2, 1, 0]])

>>> print(x)

tensor([[0., 1., 2.],

[2., 1., 0.]])

>>> print(torch.ones_like(x))

tensor([[1., 1., 1.],

[1., 1., 1.]])

>>> print(torch.zeros_like(x))

tensor([[0., 0., 0.],

[0., 0., 0.]])
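`ones_like` and `zeros_like` copy the shape, dtype, and device of their argument, whereas `torch.ones` and `torch.zeros` take the shape explicitly. A sketch; the CUDA fallback is an assumption added only so the example runs on machines without a GPU:

```python
import torch

x = torch.FloatTensor([[0, 1, 2], [2, 1, 0]])

o = torch.ones_like(x)          # same shape, dtype and device as x
z = torch.zeros(2, 3)           # shape given explicitly instead
print(o.shape, o.dtype)         # torch.Size([2, 3]) torch.float32
print(torch.equal(torch.zeros_like(x), z))  # True

# The *_like result is allocated on the same device as its input.
device = "cuda" if torch.cuda.is_available() else "cpu"
xd = x.to(device)
print(torch.ones_like(xd).device == xd.device)  # True
```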