해당 게시물은 DeepLearningAI의 LangChain Chat with Your Data 강의를 듣고 개인적으로 정리한 내용입니다.

LangChain Chat with Your Data

(3) vectorstores_and_embeddings

◼︎ embedding

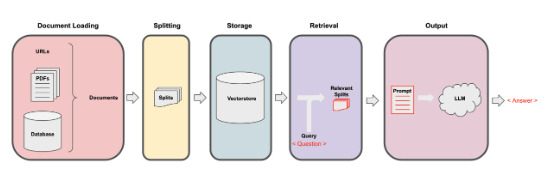

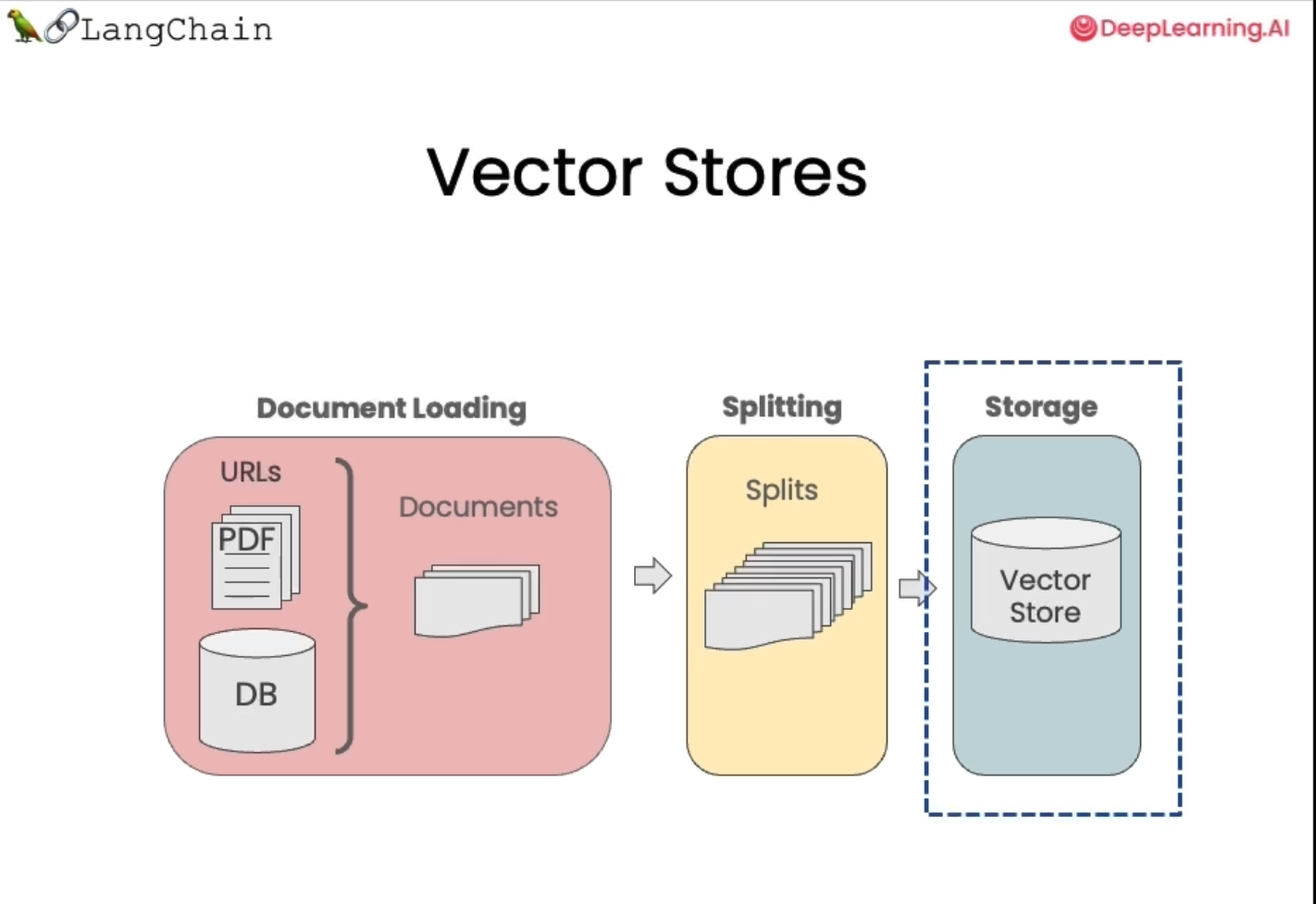

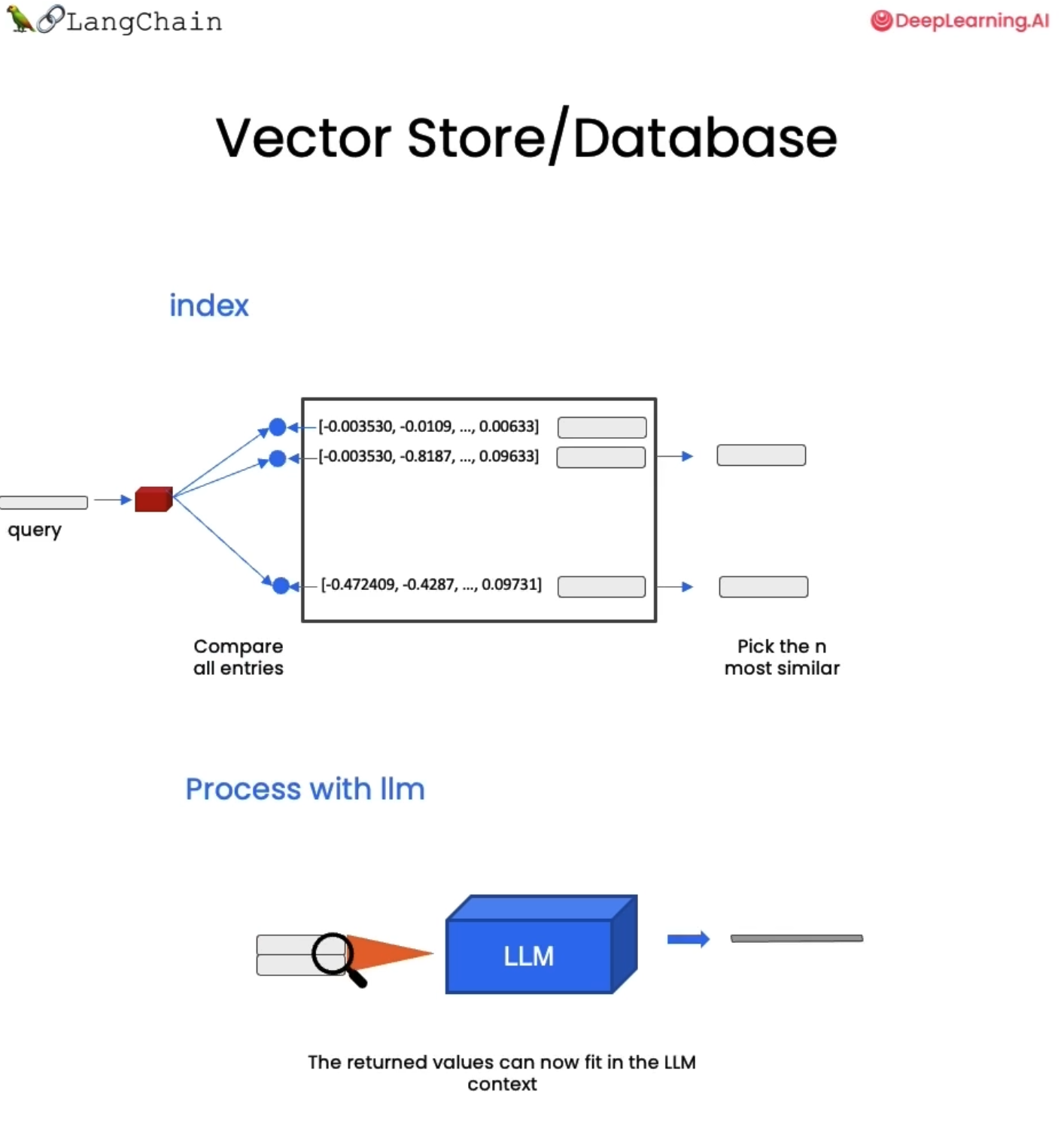

필요한 문서를 로드하고, 작고 의미상 의미 있는 청크로 분할했다. 이제 이러한 청크를 인덱스에 넣어서 이 데이터 모음에 대한 질문에 답할 때가 되면 쉽게 검색할 수 있게된다.

이를 위해 임베딩과 벡터 스토어를 활용한다.

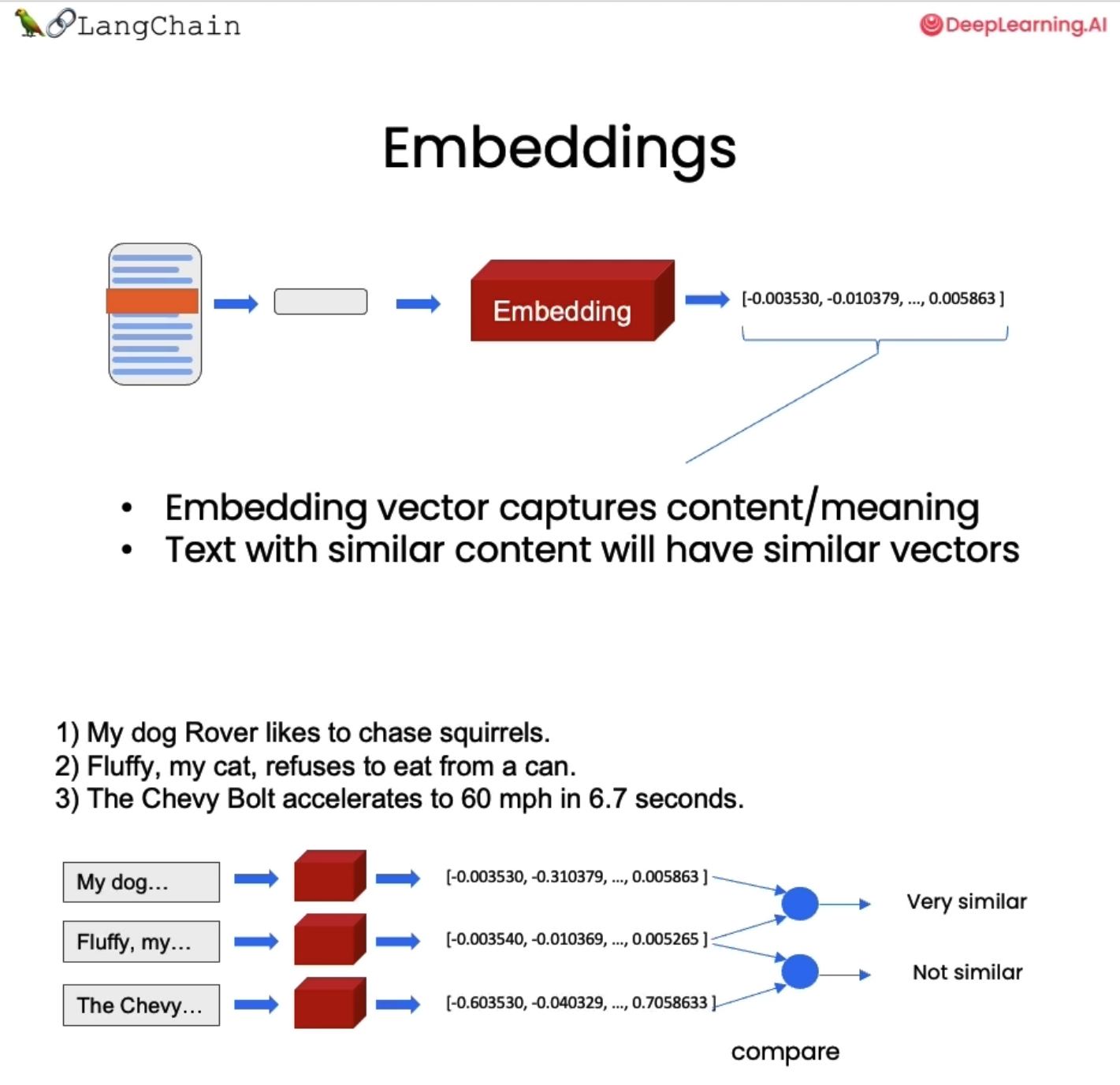

임베딩이란 텍스트를 가져와서 해당 텍스트를 숫자로 표현하는 것이다. 유사한 내용을 가진 텍스트는 이 숫자 공간에서 유사한 벡터를 갖는다. 이것이 의미하는 바는 해당 벡터를 비교하고 유사한 텍스트 조각을 찾을 수 있다는 것이다.

예시에서 보듯이 애완동물에 관한 두 문장은 매우 유사한 반면, 애완동물에 관한 문장과 자동차에 관한 문장은 그다지 유사하지 않다.

전체 엔드투엔드 워크플로우를 다시 한 번 언급하자면,

문서로 시작한 다음 해당 문서의 작은 분할을 생성하고 해당 문서의 임베딩을 생성한 다음 모든 것을 벡터 저장소에 저장하는 것이다.

◼︎ vector store

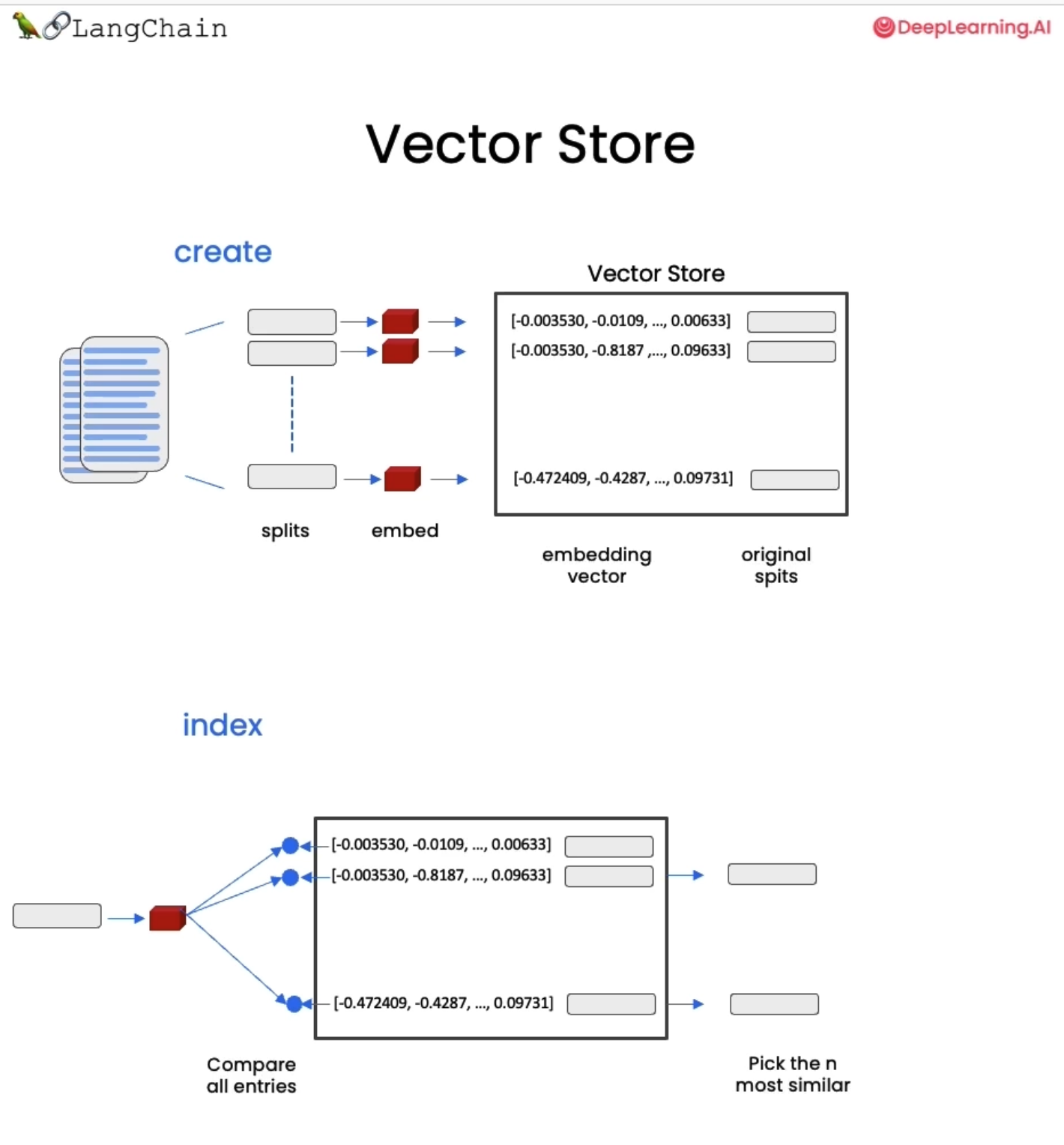

벡터 스토어는 유사한 벡터들을 쉽게 검색할 수 있는 데이터베이스이다.

어떤 질문이 들어오게 되면 해당 질문과 관련된 문서를 찾을 때 유용하다.

질문을 받고 임베딩을 만든 다음 벡터 저장소에 있는 모든 다른 벡터를 비교한 다음 가장 유사한 n개를 선택할 수 있게 된다. 그런 다음 가장 유사한 n개 청크를 질문과 함께 LLM에 전달하고 답변을 얻는다.

◼︎ example code - success case

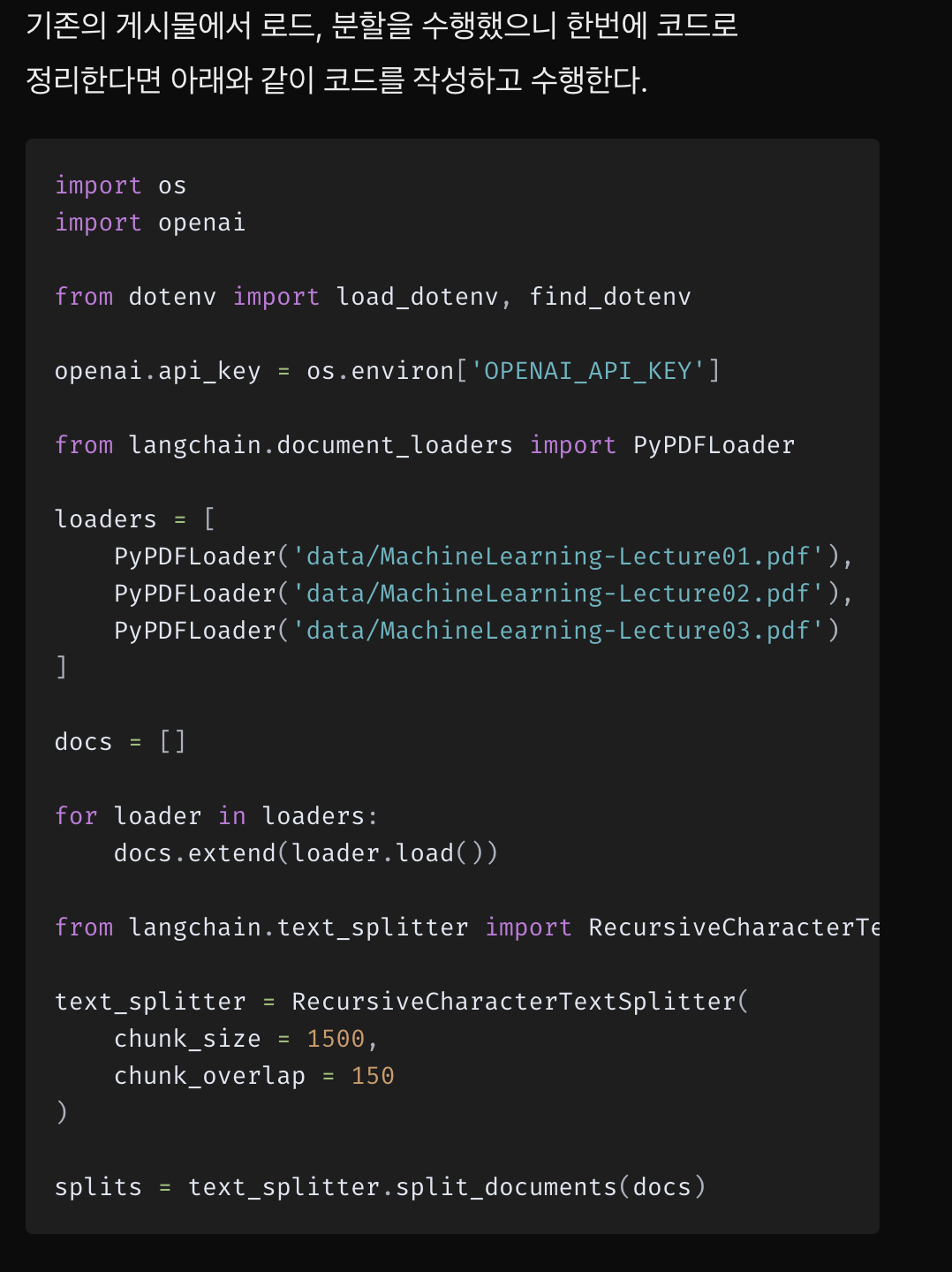

기존의 게시물에서 로드, 분할을 수행했으니 한번에 코드로 정리한다면 아래와 같이 코드를 작성하고 수행한다.

import os

import openai

from dotenv import load_dotenv, find_dotenv

openai.api_key = os.environ['OPENAI_API_KEY']

from langchain.document_loaders import PyPDFLoader

loaders = [

PyPDFLoader('data/MachineLearning-Lecture01.pdf'),

PyPDFLoader('data/MachineLearning-Lecture02.pdf'),

PyPDFLoader('data/MachineLearning-Lecture03.pdf')

]

docs = []

for loader in loaders:

docs.extend(loader.load())

from langchain.text_splitter import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(

chunk_size = 1500,

chunk_overlap = 150

)

splits = text_splitter.split_documents(docs)시작하기 위해 환경 변수를 설정한다.

해당 문서는 CS229 강의 파일로 진행한다.

len(docs), len(splits)

## output

56, 152

문서가 로드된 후 재귀 문자 텍스트를 사용하고, 문서 청크를 생성하는 splitter를 사용해, docs는 56이었지만 152개의 서로 다른 청크가 생성된 것을 확인할 수 있다.

해당 문장을 embeddingOpenAI를 하여 이러한 임베딩을 생성한다.

from langchain.embeddings.openai import OpenAIEmbeddings

embedding = OpenAIEmbeddings()sentence1 = 'i like dogs'

sentence2 = 'i like canines'

sentence3 = 'the weather is ugly outside'

라는 단어가 있다.

문장 2에서의 i like canines는

canines => 견공의 개, 암튼 개를 좋아한다는 문장이다.

문장 1,2는 서로 유사하고, 문장 3은 관련이 없는 예시 문장이다.

그런 다음 임베딩 클래스를 사용하여 각 문장에 대한 임베딩을 생성할 수 있다.

sentence1 = 'i like dogs'

sentence2 = 'i like canines'

sentence3 = 'the weather is ugly outside'

embedding1 = embedding.embed_query(sentence1)

embedding2 = embedding.embed_query(sentence2)

embedding3 = embedding.embed_query(sentence3)그런 다음 NumPy를 사용하여 그것들을 비교하고 어떤 것이 가장 유사한지 확인할 수 있다.

import numpy as np

print(np.dot(embedding1, embedding2))

print(np.dot(embedding1, embedding3))

print(np.dot(embedding2, embedding3))처음 두 문장이 매우 유사해야 하고, 세 번째 문장과 비교했을 때 첫 번째와 두 번째 문장은 거의 비슷하지 않을 것으로 예상할 수 있는데, 내적을 사용하여 두 임베딩을 비교해본다.

print(np.dot(embedding1, embedding2))

print(np.dot(embedding1, embedding3))

print(np.dot(embedding2, embedding3))

##output

0.9631675619330513

0.7710630976675918

0.7596682675219103서로 내적의 값이 높을 수록 유사하다는 의미를 내포하는데, 여기서 처음 두 임베딩의 점수가 0.96으로 상당히 높은 것을 볼 수 있다. 첫 번째 임베딩과 세 번째 임베딩을 비교 0.77로 상대적으로 낮은 수치이다.

그리고 두 번째와 세 번째를 비교해 보면 0.75 정도로 두 문장 또한 상대적으로 낮은 수치이다.

이제 모든 PDF 청크에 대한 임베딩을 생성한 다음 이를 벡터 저장소에 저장할 차례이다.

from langchain.vectorstores import Chroma

여기서 사용하는 vector store는 Chroma이다.

Chroma는 가볍고 메모리에 저장되어 있어 시작하고 시작하기가 매우 쉽ㄴ다.

호스팅 솔루션을 제공하는 다른 벡터 저장소가 있는데, 이는 대량의 데이터를 유지하거나 어딘가의 클라우드 저장소에 유지하려고 할 때 유용하다.

persist_directory = 'data/chroma/'

!rm -rf ./data/chromadocs slash Chroma에서 사용할 persist 디렉터리라는 변수를 만들고, 벡터 저장소에 저장된 파일이 있는지 없는지를 확인한다.

그 후 벡터 저장소를 만든 후에, 따라서 문서에서 Chroma를 호출하여 분할된 청크를 전달한다. 이는 이전에 데이터를 로드하고 청크로 분할한 데이터이다.

vectordb = Chroma.from_documents(

documents=splits,

embedding=embedding,

persist_directory = persist_directory

)print(vectordb._collection.count())

##output

152AI 임베딩 모델에, 디렉터리를 디스크에 저장할 수 있게 해주는 Chroma 특정 키워드 인수인 persist 디렉터리를 전달한다. 이렇게 한 후 수집 개수를 살펴보면 152개라는 것을 알 수 있는데, 이는 이전에 했던 분할 개수와 동일함을 확인할 수 있다.

"""

참고 위에서 불러온 후 splitter한 splits

"""

# similarity search

question = 'is there an email i can ask for help'

docs = vectordb.similarity_search(question, k=3)이제 이렇게 저장한 데이터를 사용하기위해서,

해당 문서는 수업 강의에 관한 내용이므로, 강좌나 자료 등에 대해 도움이 필요할 때 도움을 요청할 수 있는 이메일이 있는지 물어볼 수 있다.

물어볼 문제는 "is there an email i can ask for help"로 도움을 요청할 수 있는 이메일이 있냐는 질문이다.

similarity_search를 사용하여 질문과 함께 k=3 인자도도 전달한다. 해당 k는 이는 반환하려는 문서 수를 의미한다.

docs

##output

[Document(page_content="cs229-qa@cs.stanford.edu. This goes to an acc ount that's read by all the TAs and me. So \nrather than sending us email individually, if you send email to this account, it will \nactually let us get back to you maximally quickly with answers to your questions. \nIf you're asking questions about homework probl ems, please say in the subject line which \nassignment and which question the email refers to, since that will also help us to route \nyour question to the appropriate TA or to me appropriately and get the response back to \nyou quickly. \nLet's see. Skipping ahead — let's see — for homework, one midterm, one open and term \nproject. Notice on the honor code. So one thi ng that I think will help you to succeed and \ndo well in this class and even help you to enjoy this cla ss more is if you form a study \ngroup. \nSo start looking around where you' re sitting now or at the end of class today, mingle a \nlittle bit and get to know your classmates. I strongly encourage you to form study groups \nand sort of have a group of people to study with and have a group of your fellow students \nto talk over these concepts with. You can also post on the class news group if you want to \nuse that to try to form a study group. \nBut some of the problems sets in this cla ss are reasonably difficult. People that have \ntaken the class before may tell you they were very difficult. And just I bet it would be \nmore fun for you, and you'd probably have a be tter learning experience if you form a", metadata={'page': 5, 'source': 'data/MachineLearning-Lecture01.pdf'}),

Document(page_content="So all right, online resources. The class has a home page, so it's in on the handouts. I \nwon't write on the chalkboard — http:// cs229.stanford.edu. And so when there are \nhomework assignments or things like that, we usually won't sort of — in the mission of \nsaving trees, we will usually not give out many handouts in class. So homework \nassignments, homework solutions will be posted online at the course home page. \nAs far as this class, I've also written, a nd I guess I've also revised every year a set of \nfairly detailed lecture notes that cover the te chnical content of this class. And so if you \nvisit the course homepage, you'll also find the detailed lecture notes that go over in detail \nall the math and equations and so on that I'll be doing in class. \nThere's also a newsgroup, su.class.cs229, also written on the handout. This is a \nnewsgroup that's sort of a forum for people in the class to get to know each other and \nhave whatever discussions you want to ha ve amongst yourselves. So the class newsgroup \nwill not be monitored by the TAs and me. But this is a place for you to form study groups \nor find project partners or discuss homework problems and so on, and it's not monitored \nby the TAs and me. So feel free to ta lk trash about this class there. \nIf you want to contact the teaching staff, pl ease use the email address written down here, \ncs229-qa@cs.stanford.edu. This goes to an acc ount that's read by all the TAs and me. So", metadata={'page': 5, 'source': 'data/MachineLearning-Lecture01.pdf'}),

Document(page_content="over the last 15, 20 years in an electronic format. \nTurns out that most of you probably use learning algorithms — I don't know — I think \nhalf a dozen times a day or maybe a dozen times a day or more, and often without \nknowing it. So, for example, every time you se nd mail via the US Postal System, turns \nout there's an algorithm that tries to automa tically read the zip code you wrote on your \nenvelope, and that's done by a learning al gorithm. So every time you send US mail, you \nare using a learning algorithm, perhap s without even being aware of it.", metadata={'page': 2, 'source': 'data/MachineLearning-Lecture01.pdf'})]그래서 이를 실행해서 문서의 길이를 보면 우리가 지정한 대로 3개라는 것을 알 수 있다.

첫 번째 문서의 내용을 살펴보면

Document(page_content="cs229-qa@cs.stanford.edu. This goes to an acc ount that's read by all the TAs and me. So \nrather than sending us email individually, if you send email to this account, it will \nactually let us get back to you maximally quickly with answers to your questions. \nIf you're asking questions about homework probl ems, please say in the subject line which \nassignment and which question the email refers to, since that will also help us to route \nyour question to the appropriate TA or to me appropriately and get the response back to \nyou quickly. \nLet's see. Skipping ahead — let's see — for homework, one midterm, one open and term \nproject. Notice on the honor code. So one thi ng that I think will help you to succeed and \ndo well in this class and even help you to enjoy this cla ss more is if you form a study \ngroup. \nSo start looking around where you' re sitting now or at the end of class today, mingle a \nlittle bit and get to know your classmates. I strongly encourage you to form study groups \nand sort of have a group of people to study with and have a group of your fellow students \nto talk over these concepts with. You can also post on the class news group if you want to \nuse that to try to form a study group. \nBut some of the problems sets in this cla ss are reasonably difficult. People that have \ntaken the class before may tell you they were very difficult. And just I bet it would be \nmore fun for you, and you'd probably have a be tter learning experience if you form a", metadata={'page': 5, 'source': 'data/MachineLearning-Lecture01.pdf'})

실제로는 cs229-qa.cs.stanford.edu라는 이메일 주소에 관한 것임을 알 수 있다. 해당 이메일은 우리가 질문을 보낼 수 있고 모든 TA가 읽을 수 있는 이메일이다.

두번째는

Document(page_content="So all right, online resources. The class has a home page, so it's in on the handouts. I \nwon't write on the chalkboard — http:// cs229.stanford.edu. And so when there are \nhomework assignments or things like that, we usually won't sort of — in the mission of \nsaving trees, we will usually not give out many handouts in class. So homework \nassignments, homework solutions will be posted online at the course home page. \nAs far as this class, I've also written, a nd I guess I've also revised every year a set of \nfairly detailed lecture notes that cover the te chnical content of this class. And so if you \nvisit the course homepage, you'll also find the detailed lecture notes that go over in detail \nall the math and equations and so on that I'll be doing in class. \nThere's also a newsgroup, su.class.cs229, also written on the handout. This is a \nnewsgroup that's sort of a forum for people in the class to get to know each other and \nhave whatever discussions you want to ha ve amongst yourselves. So the class newsgroup \nwill not be monitored by the TAs and me. But this is a place for you to form study groups \nor find project partners or discuss homework problems and so on, and it's not monitored \nby the TAs and me. So feel free to ta lk trash about this class there. \nIf you want to contact the teaching staff, pl ease use the email address written down here, \ncs229-qa@cs.stanford.edu. This goes to an acc ount that's read by all the TAs and me. So", metadata={'page': 5, 'source': 'data/MachineLearning-Lecture01.pdf'})

세번째는

Document(page_content="over the last 15, 20 years in an electronic format. \nTurns out that most of you probably use learning algorithms — I don't know — I think \nhalf a dozen times a day or maybe a dozen times a day or more, and often without \nknowing it. So, for example, every time you se nd mail via the US Postal System, turns \nout there's an algorithm that tries to automa tically read the zip code you wrote on your \nenvelope, and that's done by a learning al gorithm. So every time you send US mail, you \nare using a learning algorithm, perhap s without even being aware of it.", metadata={'page': 2, 'source': 'data/MachineLearning-Lecture01.pdf'})

이다.

세번째는 이메일이 언급되어 있을 뿐이지 해당 답에 대한 답변이 나올 수 있는 청크가 아니지만, 첫번째와 두번째는 해당 내용의 청크인 것을 볼 수 있다.

vectordb.persist()그런 다음 Vectordb.persist를 실행하여 향후 학습에서 사용할 수 있도록 벡터 데이터베이스를 유지해본다. 이는 의미론적 검색의 기본 사항을 다루었으며 임베딩만으로도 꽤 좋은 결과를 얻을 수 있음을 보여주었다.

하지만 여기까지 완벽하다고 볼 수 없다.

이건 아주 잘 나온 케이스이고,

실패 사례가 나올 수 있다.

◼︎ example code - fail case (1)

아래의 사례를 보면 실패할 수 있는 사례가 발생하는데,

question = "what did they say about matlab?"

matlab에 대해 무엇이라고 말하고 있는지에 대해 질문해보자.

k는 값을 좀 올려서 3 대신 5라고 지정해본다.

question = "what did they say about matlab?"

docs = vectordb.similarity_search(question,k=5)K가 5와 같다고 지정하여 이를 실행하고 몇 가지 결과를 얻어본다.

docs

##output

[Document(page_content='those homeworks will be done in either MATLA B or in Octave, which is sort of — I \nknow some people call it a free ve rsion of MATLAB, which it sort of is, sort of isn\'t. \nSo I guess for those of you that haven\'t s een MATLAB before, and I know most of you \nhave, MATLAB is I guess part of the programming language that makes it very easy to write codes using matrices, to write code for numerical routines, to move data around, to \nplot data. And it\'s sort of an extremely easy to learn tool to use for implementing a lot of \nlearning algorithms. \nAnd in case some of you want to work on your own home computer or something if you \ndon\'t have a MATLAB license, for the purposes of this class, there\'s also — [inaudible] \nwrite that down [inaudible] MATLAB — there\' s also a software package called Octave \nthat you can download for free off the Internet. And it has somewhat fewer features than MATLAB, but it\'s free, and for the purposes of this class, it will work for just about \neverything. \nSo actually I, well, so yeah, just a side comment for those of you that haven\'t seen \nMATLAB before I guess, once a colleague of mine at a different university, not at \nStanford, actually teaches another machine l earning course. He\'s taught it for many years. \nSo one day, he was in his office, and an old student of his from, lik e, ten years ago came \ninto his office and he said, "Oh, professo r, professor, thank you so much for your', metadata={'page': 8, 'source': 'data/MachineLearning-Lecture01.pdf'}),

Document(page_content='into his office and he said, "Oh, professo r, professor, thank you so much for your \nmachine learning class. I learned so much from it. There\'s this stuff that I learned in your \nclass, and I now use every day. And it\'s help ed me make lots of money, and here\'s a \npicture of my big house." \nSo my friend was very excited. He said, "W ow. That\'s great. I\'m glad to hear this \nmachine learning stuff was actually useful. So what was it that you learned? Was it \nlogistic regression? Was it the PCA? Was it the data ne tworks? What was it that you \nlearned that was so helpful?" And the student said, "Oh, it was the MATLAB." \nSo for those of you that don\'t know MATLAB yet, I hope you do learn it. It\'s not hard, \nand we\'ll actually have a short MATLAB tutori al in one of the discussion sections for \nthose of you that don\'t know it. \nOkay. The very last piece of logistical th ing is the discussion s ections. So discussion \nsections will be taught by the TAs, and atte ndance at discussion sections is optional, \nalthough they\'ll also be recorded and televi sed. And we\'ll use the discussion sections \nmainly for two things. For the next two or th ree weeks, we\'ll use the discussion sections \nto go over the prerequisites to this class or if some of you haven\'t seen probability or \nstatistics for a while or maybe algebra, we\'ll go over those in the discussion sections as a \nrefresher for those of you that want one.', metadata={'page': 8, 'source': 'data/MachineLearning-Lecture01.pdf'}),

Document(page_content="same regardless of the group size, so with a larger group, you probably — I recommend \ntrying to form a team, but it's actually totally fine to do it in a sma ller group if you want. \nStudent : [Inaudible] what language [inaudible]? \nInstructor (Andrew Ng): So let's see. There is no C programming in this class other \nthan any that you may choose to do yourself in your project. So all the homeworks can be \ndone in MATLAB or Octave, and let's see. A nd I guess the program prerequisites is more \nthe ability to understand big?O notation and know ledge of what a data structure, like a \nlinked list or a queue or bina ry treatments, more so than your knowledge of C or Java \nspecifically. Yeah? \nStudent : Looking at the end semester project, I mean, what exactly will you be testing \nover there? [Inaudible]? \nInstructor (Andrew Ng) : Of the project? \nStudent : Yeah. \nInstructor (Andrew Ng) : Yeah, let me answer that later. In a couple of weeks, I shall \ngive out a handout with guidelines for the pr oject. But for now, we should think of the \ngoal as being to do a cool piec e of machine learning work that will let you experience the", metadata={'page': 9, 'source': 'data/MachineLearning-Lecture01.pdf'}),

Document(page_content="So later this quarter, we'll use the discussion sections to talk about things like convex \noptimization, to talk a little bit about hidde n Markov models, which is a type of machine \nlearning algorithm for modeling time series and a few other things, so extensions to the \nmaterials that I'll be covering in the main lectures. And attend ance at the discussion \nsections is optional, okay? \nSo that was all I had from l ogistics. Before we move on to start talking a bit about \nmachine learning, let me check what questions you have. Yeah? \nStudent : [Inaudible] R or something like that? \nInstructor (Andrew Ng) : Oh, yeah, let's see, right. So our policy has been that you're \nwelcome to use R, but I would strongly advi se against it, mainly because in the last \nproblem set, we actually supply some code th at will run in Octave but that would be \nsomewhat painful for you to translate into R yourself. So for your other assignments, if \nyou wanna submit a solution in R, that's fi ne. But I think MATLAB is actually totally \nworth learning. I know R and MATLAB, and I personally end up using MATLAB quite a \nbit more often for various reasons. Yeah? \nStudent : For the [inaudible] pr oject [inaudible]? \nInstructor (Andrew Ng) : So for the term project, you're welcome to do it in smaller \ngroups of three, or you're welcome to do it by yo urself or in groups of two. Grading is the \nsame regardless of the group size, so with a larger group, you probably — I recommend", metadata={'page': 9, 'source': 'data/MachineLearning-Lecture01.pdf'}),

Document(page_content='algorithm then? So what’s different? How come I was making all that noise earlier about \nleast squares regression being a bad idea for classification problems and then I did a \nbunch of math and I skipped some steps, but I’m, sort of, claiming at the end they’re \nreally the same learning algorithm? \nStudent: [Inaudible] constants? \nInstructor (Andrew Ng) :Say that again. \nStudent: [Inaudible] \nInstructor (Andrew Ng) :Oh, right. Okay, cool.', metadata={'page': 13, 'source': 'data/MachineLearning-Lecture03.pdf'})]첫번째는

page_content='those homeworks will be done in either MATLA B or in Octave, which is sort of — I \nknow some people call it a free ve rsion of MATLAB, which it sort of is, sort of isn\'t. \nSo I guess for those of you that haven\'t s een MATLAB before, and I know most of you \nhave, MATLAB is I guess part of the programming language that makes it very easy to write codes using matrices, to write code for numerical routines, to move data around, to \nplot data. And it\'s sort of an extremely easy to learn tool to use for implementing a lot of \nlearning algorithms. \nAnd in case some of you want to work on your own home computer or something if you \ndon\'t have a MATLAB license, for the purposes of this class, there\'s also — [inaudible] \nwrite that down [inaudible] MATLAB — there\' s also a software package called Octave \nthat you can download for free off the Internet. And it has somewhat fewer features than MATLAB, but it\'s free, and for the purposes of this class, it will work for just about \neverything. \nSo actually I, well, so yeah, just a side comment for those of you that haven\'t seen \nMATLAB before I guess, once a colleague of mine at a different university, not at \nStanford, actually teaches another machine l earning course. He\'s taught it for many years. \nSo one day, he was in his office, and an old student of his from, lik e, ten years ago came \ninto his office and he said, "Oh, professo r, professor, thank you so much for your' metadata={'page': 8, 'source': 'data/MachineLearning-Lecture01.pdf'}

두번째는

page_content='into his office and he said, "Oh, professo r, professor, thank you so much for your \nmachine learning class. I learned so much from it. There\'s this stuff that I learned in your \nclass, and I now use every day. And it\'s help ed me make lots of money, and here\'s a \npicture of my big house." \nSo my friend was very excited. He said, "W ow. That\'s great. I\'m glad to hear this \nmachine learning stuff was actually useful. So what was it that you learned? Was it \nlogistic regression? Was it the PCA? Was it the data ne tworks? What was it that you \nlearned that was so helpful?" And the student said, "Oh, it was the MATLAB." \nSo for those of you that don\'t know MATLAB yet, I hope you do learn it. It\'s not hard, \nand we\'ll actually have a short MATLAB tutori al in one of the discussion sections for \nthose of you that don\'t know it. \nOkay. The very last piece of logistical th ing is the discussion s ections. So discussion \nsections will be taught by the TAs, and atte ndance at discussion sections is optional, \nalthough they\'ll also be recorded and televi sed. And we\'ll use the discussion sections \nmainly for two things. For the next two or th ree weeks, we\'ll use the discussion sections \nto go over the prerequisites to this class or if some of you haven\'t seen probability or \nstatistics for a while or maybe algebra, we\'ll go over those in the discussion sections as a \nrefresher for those of you that want one.' metadata={'page': 8, 'source': 'data/MachineLearning-Lecture01.pdf'}

세번째는

page_content="same regardless of the group size, so with a larger group, you probably — I recommend \ntrying to form a team, but it's actually totally fine to do it in a sma ller group if you want. \nStudent : [Inaudible] what language [inaudible]? \nInstructor (Andrew Ng): So let's see. There is no C programming in this class other \nthan any that you may choose to do yourself in your project. So all the homeworks can be \ndone in MATLAB or Octave, and let's see. A nd I guess the program prerequisites is more \nthe ability to understand big?O notation and know ledge of what a data structure, like a \nlinked list or a queue or bina ry treatments, more so than your knowledge of C or Java \nspecifically. Yeah? \nStudent : Looking at the end semester project, I mean, what exactly will you be testing \nover there? [Inaudible]? \nInstructor (Andrew Ng) : Of the project? \nStudent : Yeah. \nInstructor (Andrew Ng) : Yeah, let me answer that later. In a couple of weeks, I shall \ngive out a handout with guidelines for the pr oject. But for now, we should think of the \ngoal as being to do a cool piec e of machine learning work that will let you experience the" metadata={'page': 9, 'source': 'data/MachineLearning-Lecture01.pdf'}

네번째는

page_content="So later this quarter, we'll use the discussion sections to talk about things like convex \noptimization, to talk a little bit about hidde n Markov models, which is a type of machine \nlearning algorithm for modeling time series and a few other things, so extensions to the \nmaterials that I'll be covering in the main lectures. And attend ance at the discussion \nsections is optional, okay? \nSo that was all I had from l ogistics. Before we move on to start talking a bit about \nmachine learning, let me check what questions you have. Yeah? \nStudent : [Inaudible] R or something like that? \nInstructor (Andrew Ng) : Oh, yeah, let's see, right. So our policy has been that you're \nwelcome to use R, but I would strongly advi se against it, mainly because in the last \nproblem set, we actually supply some code th at will run in Octave but that would be \nsomewhat painful for you to translate into R yourself. So for your other assignments, if \nyou wanna submit a solution in R, that's fi ne. But I think MATLAB is actually totally \nworth learning. I know R and MATLAB, and I personally end up using MATLAB quite a \nbit more often for various reasons. Yeah? \nStudent : For the [inaudible] pr oject [inaudible]? \nInstructor (Andrew Ng) : So for the term project, you're welcome to do it in smaller \ngroups of three, or you're welcome to do it by yo urself or in groups of two. Grading is the \nsame regardless of the group size, so with a larger group, you probably — I recommend" metadata={'page': 9, 'source': 'data/MachineLearning-Lecture01.pdf'}

다섯번째는

page_content='algorithm then? So what’s different? How come I was making all that noise earlier about \nleast squares regression being a bad idea for classification problems and then I did a \nbunch of math and I skipped some steps, but I’m, sort of, claiming at the end they’re \nreally the same learning algorithm? \nStudent: [Inaudible] constants? \nInstructor (Andrew Ng) :Say that again. \nStudent: [Inaudible] \nInstructor (Andrew Ng) :Oh, right. Okay, cool.' metadata={'page': 13, 'source': 'data/MachineLearning-Lecture03.pdf'}

로, 각각 결과로 해당 청크를 가져온다.

PDF를 로드할 때 의도적으로 중복 항목을 지정했기 때문에 두 개의 다른 청크에 동일한 정보가 있고 이 두 청크를 모두 언어 모델에 전달할 것이기 때문에 첫번째와 좋지 않은 결과가 나타난 것이다.

두 번째 청크안에 담긴 정보에는 실제 가치가 없으며 언어 모델이 학습할 수 있는 다른 별개의 청크가 있다면 훨씬 더 좋을 것이다.

◼︎ example code - fail case (2)

발생할 수 있는 또 다른 유형의 실패 유형이 있다.

만약 세 번째 강의에서 회귀(regression)에 대해 무엇이라고 설명했는지에 대해서 질문해보자.

question = "what did they say about regression in the third lecture?"

question = "what did they say about regression in the third lecture?"

docs = vectordb.similarity_search(question,k=5)이에 대한 문서를 얻을 때 직관적으로 우리는 그것들이 모두 세 번째 강의의 일부일 것이라고 기대한다.

어떤 강의에서 나온 것인지 메타데이터에 정보가 있기 때문에 이를 확인할 수 있기 때문이다.

for doc in docs:

print(doc.metadata)

###output

{'page': 0, 'source': 'data/MachineLearning-Lecture03.pdf'}

{'page': 2, 'source': 'data/MachineLearning-Lecture02.pdf'}

{'page': 14, 'source': 'data/MachineLearning-Lecture03.pdf'}

{'page': 4, 'source': 'data/MachineLearning-Lecture03.pdf'}

{'page': 0, 'source': 'data/MachineLearning-Lecture02.pdf'}이제 모든 문서를 반복하여 메타데이터를 출력해보자.

실제로 세 번째 강의와 두번째 강의가 조합되어 있음을 볼 수 있다.

이것이 실패하는 이유는 우리가 세 번째 강의에서만 문서를 원한다는 사실이 구조화된 정보 조각이지만 의미론적 임베딩(semantic lookup based on embeddings)을 기반으로 검색을 수행하고 있기 때문이다.

전체 문장에 대한 임베딩을 생성했다면, 아마도 회귀에 좀 더 중점을 둘 것이고, 회귀와 상당히 관련이 있는 결과를 얻고 있을 것이다.

결과로 나온 docs들을 확인해보면

docs1

'MachineLearning-Lecture03 \nInstructor (Andrew Ng) :Okay. Good morning and welcome b ack to the third lecture of \nthis class. So here’s what I want to do t oday, and some of the topics I do today may seem \na little bit like I’m jumping, sort of, from topic to topic, but here’s, sort of, the outline for \ntoday and the illogical flow of ideas. In the last lecture, we talked about linear regression \nand today I want to talk about sort of an adaptation of that called locally weighted \nregression. It’s very a popular algorithm that’s actually one of my former mentors \nprobably favorite machine learning algorithm. \nWe’ll then talk about a probabl e second interpretation of linear regression and use that to \nmove onto our first classification algorithm, which is logistic regr ession; take a brief \ndigression to tell you about something cal led the perceptron algorithm, which is \nsomething we’ll come back to, again, later this quarter; and time allowing I hope to get to \nNewton’s method, which is an algorithm fo r fitting logistic regression models. \nSo this is recap where we’re talking about in the previous lecture, remember the notation \nI defined was that I used this X superscrip t I, Y superscript I to denote the I training \nexample. And when we’re talking about linear regression or linear l east squares, we use \nthis to denote the predicted value of “by my hypothesis H” on the input XI. And my'

docs2

"Instructor (Andrew Ng) :All right, so who thought driving could be that dramatic, right? \nSwitch back to the chalkboard, please. I s hould say, this work was done about 15 years \nago and autonomous driving has come a long way. So many of you will have heard of the \nDARPA Grand Challenge, where one of my colleagues, Sebastian Thrun, the winning \nteam's drive a car across a desert by itself. \nSo Alvin was, I think, absolutely amazing wo rk for its time, but autonomous driving has \nobviously come a long way since then. So what you just saw was an example, again, of \nsupervised learning, and in particular it was an example of what they call the regression \nproblem, because the vehicle is trying to predict a continuous value variables of a \ncontinuous value steering directions , we call the regression problem. \nAnd what I want to do today is talk about our first supervised learning algorithm, and it \nwill also be to a regression task. So for the running example that I'm going to use \nthroughout today's lecture, you're going to retu rn to the example of trying to predict \nhousing prices. So here's actually a data set collected by TA, Dan Ramage, on housing \nprices in Portland, Oregon. \nSo here's a dataset of a number of houses of different sizes, and here are their asking \nprices in thousands of dollars, $200,000. And so we can take this data and plot it, square \nfeet, best price, and so you make your other dataset like that. And the question is, given a"

docs3

'Student: It’s the lowest it – \nInstructor (Andrew Ng) :No, exactly. Right. So zero to the same, this is not the same, \nright? And the reason is, in logi stic regression this is diffe rent from before, right? The \ndefinition of this H subscript theta of XI is not the same as the definition I was using in \nthe previous lecture. And in pa rticular this is no longer thet a transpose XI. This is not a \nlinear function anymore. This is a logistic function of theta transpose XI. Okay? So even \nthough this looks cosmetically similar, even though this is similar on the surface, to the \nBastrian descent rule I derive d last time for least squares regression this is actually a \ntotally different learning algorithm. Okay? And it turns out that there’s actually no \ncoincidence that you ended up with the same l earning rule. We’ll actually talk a bit more \nabout this later when we talk about generalized linear models. But this is one of the most \nelegant generalized learning models that we’l l see later. That even though we’re using a \ndifferent model, you actually ended up with wh at looks like the sa me learning algorithm \nand it’s actually no coincidence. Cool. \nOne last comment as part of a sort of l earning process, over here I said I take the \nderivatives and I ended up with this line . I didn’t want to make you sit through a long \nalgebraic derivation, but later t oday or later this week, pleas e, do go home and look at our'

docs4

'when you had a Q’s tow. Like you make it too small in your – \nInstructor (Andrew Ng) :Yes, absolutely. Yes. So local ly weighted regression can run \ninto – locally weighted regression is not a penancier for the problem of overfitting or \nunderfitting. You can still run into the same problems with locally weighted regression. \nWhat you just said about – and so some of these things I’ll leave you to discover for \nyourself in the homework problem. You’ll actu ally see what you just mentioned. Yeah? \nStudent: It almost seems like you’re not even th oroughly [inaudible] w ith this locally \nweighted, you had all the data th at you originally had anyway.'

docs5

"really makes a difference between a good so lution and amazing solution. And to give \neveryone to just how we do points assignments, or what is it that causes a solution to get \nfull marks, or just how to write amazing so lutions. Becoming a grad er is usually a good \nway to do that. \nGraders are paid positions and you also get free food, and it's usually fun for us to sort of \nhang out for an evening and grade all the a ssignments. Okay, so I will send email. So \ndon't email me yet if you want to be a grader. I'll send email to the entire class later with \nthe administrative details and to solicit app lications. So you can email us back then, to \napply, if you'd be interested in being a grader. \nOkay, any questions about that? All right, okay, so let's get started with today's material. \nSo welcome back to the second lecture. What I want to do today is talk about linear \nregression, gradient descent, and the norma l equations. And I should also say, lecture \nnotes have been posted online and so if some of the math I go over today, I go over rather \nquickly, if you want to see every equation wr itten out and work through the details more \nslowly yourself, go to the course homepage and download detailed lecture notes that \npretty much describe all the mathematical, te chnical contents I'm going to go over today. \nToday, I'm also going to delve into a fair amount – some amount of linear algebra, and so"

첫 번째 강의에서 나온 다섯 번째 문서를 살펴보면 실제로 회귀에 대해 언급하고 있음을 알 수 있다. 세 번째 강의의 문서만 쿼리해야 한다는 사실을 인지하지 못하고 있는데, 우리가 만든 의미론적 임베딩에서는 완벽하게 포착되지 않은 구조화된 정보이기 때문이다.

검색하는 문서 수인 k를 변경할 때에도 너무 큰 k는 많은 문서를 검색할 수 있지만 끝 부분에 있는 문서는 처음에 있는 문서만큼 관련성이 없을 수 있다.

그러나 이러한 실패 케이스들은 Retrieval을 통해서 검색을 강화하는 방법으로 개선할 수 있다.

2개의 댓글

This is the wisdom I spent my thirties searching for! https://henrystickmin.io If only I could have learned it sooner!

Worldle offers daily geography puzzles that help you test your knowledge of countries and map locations while learning about new regions.