Paper Review: <AN INVESTIGATION OF INCORPORATING MAMBA FOR SPEECH ENHANCEMENT>

This post reviews the paper "AN INVESTIGATION OF INCORPORATING MAMBA FOR SPEECH ENHANCEMENT" (Chao et al., SLT 2024).

Research topic

- Applying Mamba to speech enhancement (the proposed model is called SEMamba)

Methods

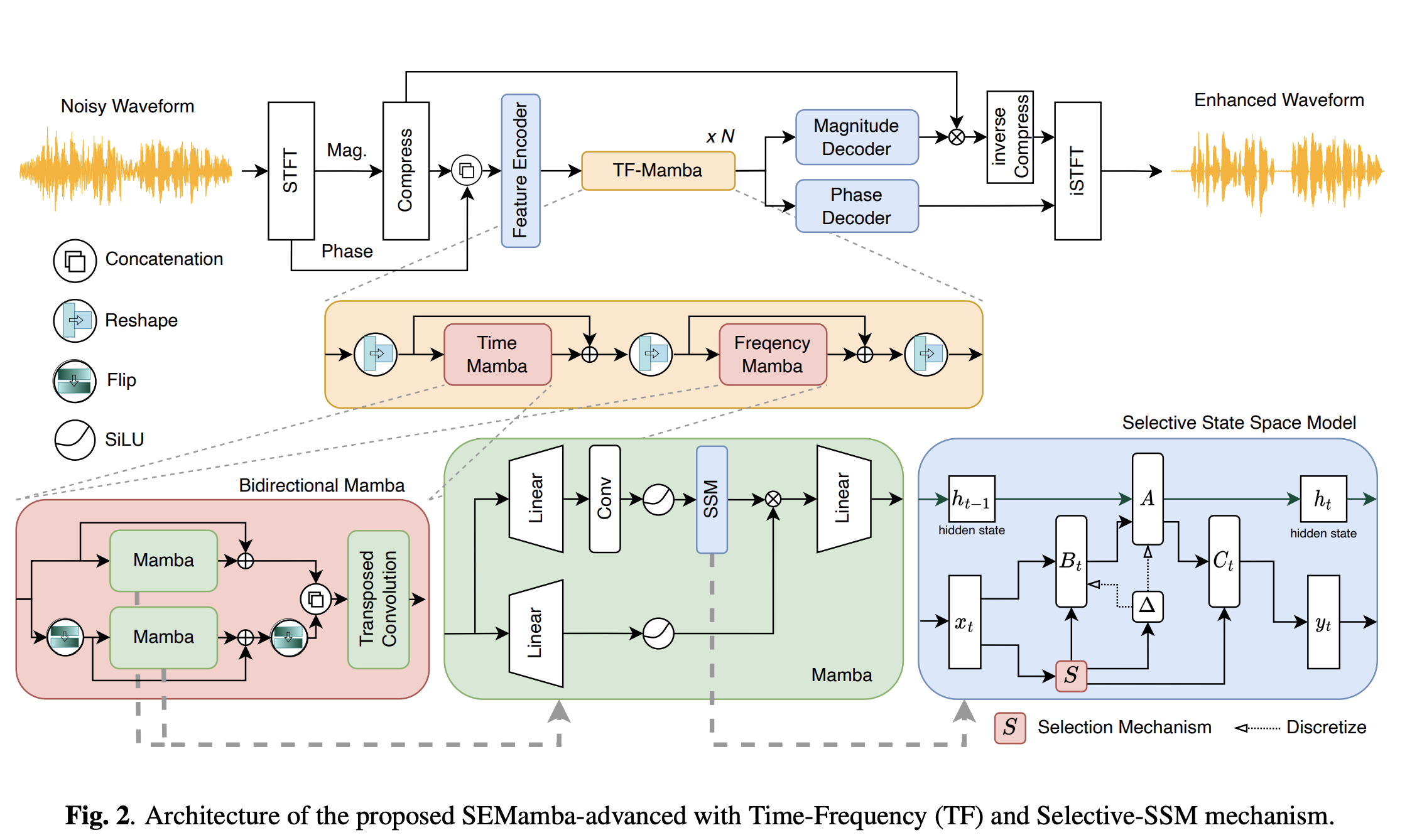

- Encoder-decoder framework following MP-SENet (Lu et al., Interspeech 2023), reusing the same encoder and decoder setup

- N stacked bidirectional Mamba blocks as the main feature-mixing operators

- Consistency loss (CL) (Zadorozhnyy et al., Interspeech 2023)

- Perceptual contrast stretching (PCS) (Chao et al., Interspeech 2022)
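To make the "bidirectional Mamba" idea concrete, here is a minimal toy sketch, assuming a single-channel selective state-space recurrence with hand-picked, hypothetical weights (`a`, `w_delta`, `w_b`, `w_c`). It is not SEMamba's implementation: it omits Mamba's convolution, gating, multi-channel state, and hardware-aware parallel scan. The bidirectional wrapper runs the same causal scan over the reversed sequence and averages the two directions; averaging is an assumption for illustration, and the paper's block may merge the directions differently (e.g. concatenation followed by a projection).

```python
import math
from typing import List


def selective_scan(x: List[float], a: float = -1.0, w_delta: float = 1.0,
                   w_b: float = 1.0, w_c: float = 1.0) -> List[float]:
    """Toy single-channel selective SSM with hypothetical (untrained) weights.

    h_t = exp(delta_t * a) * h_{t-1} + delta_t * b_t * x_t
    y_t = c_t * h_t
    delta_t, b_t and c_t are computed from x_t, which makes the scan "selective".
    """
    h = 0.0
    ys = []
    for xt in x:
        delta = math.log1p(math.exp(w_delta * xt))  # softplus -> positive step size
        b = w_b * xt  # input-dependent input projection
        c = w_c * xt  # input-dependent output projection
        h = math.exp(delta * a) * h + delta * b * xt  # a < 0 keeps the state decaying
        ys.append(c * h)
    return ys


def bidirectional_scan(x: List[float]) -> List[float]:
    """Run the causal scan left-to-right and right-to-left, then average per step."""
    fwd = selective_scan(x)
    bwd = selective_scan(x[::-1])[::-1]  # reverse, scan, reverse back to align time steps
    return [(f + b) / 2 for f, b in zip(fwd, bwd)]


if __name__ == "__main__":
    frames = [0.5, -1.0, 2.0, 0.3]
    print(bidirectional_scan(frames))
```

Because the forward scan is causal, changing the last frame leaves the earlier forward outputs untouched, while the bidirectional output at the first frame does change. Every output step can therefore draw on context from the whole sequence, which suits offline enhancement where the full utterance is available.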

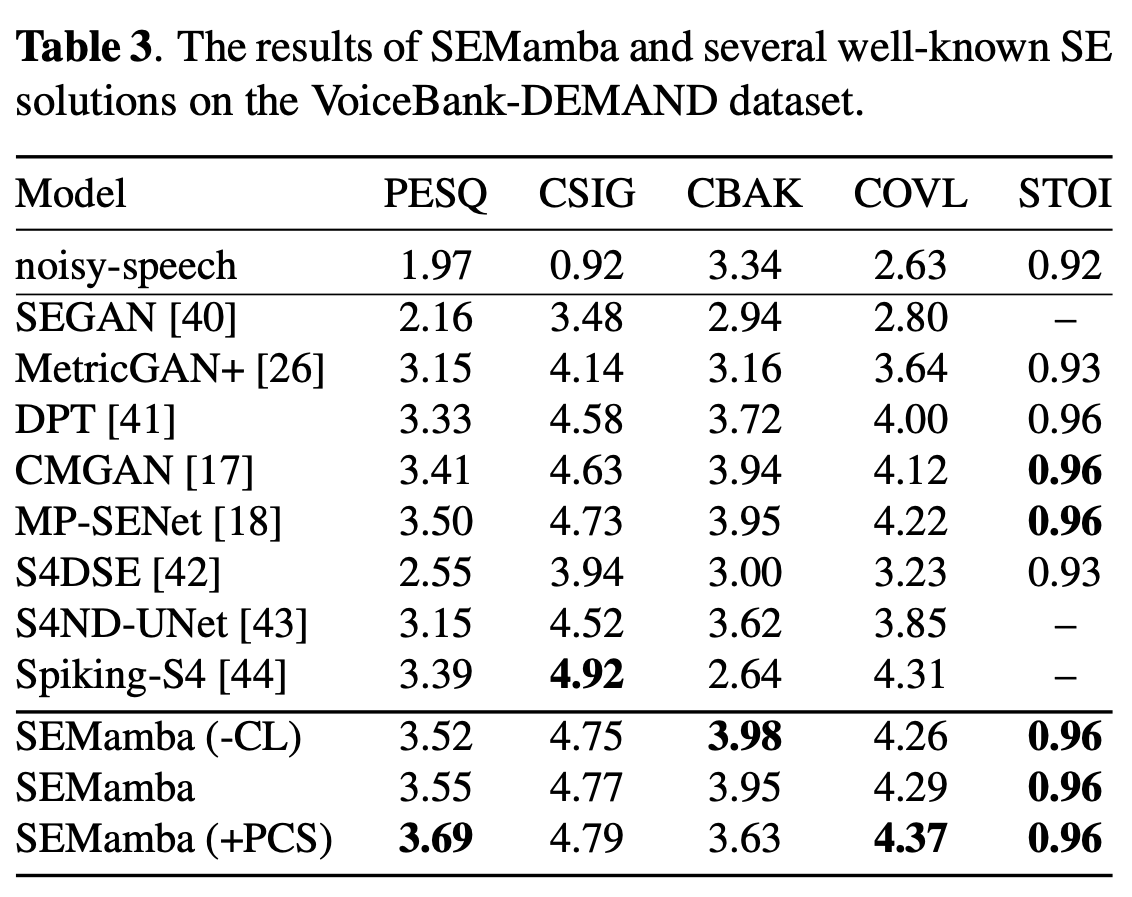
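For the consistency loss listed under Methods, the underlying notion of STFT consistency can be written as below, where $\hat{Y}$ denotes the enhanced complex spectrogram. This is a sketch of the generic formulation, assuming a squared L2 distance; the exact norm, weighting, and placement of the term in SEMamba's training objective may differ.

$$
\mathcal{L}_{\mathrm{CL}} = \left\lVert \hat{Y} - \mathrm{STFT}\big(\mathrm{iSTFT}(\hat{Y})\big) \right\rVert_2^2
$$

A spectrogram is consistent when it equals the STFT of its own inverse STFT, so this term is zero exactly when $\hat{Y}$ could have been produced by some time-domain signal, and it penalizes enhanced spectrograms that no waveform can realize.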

Experimental results

- Achieves state-of-the-art (SOTA) performance against all baseline models compared in the paper.

Thoughts

- A strong, easy-to-use, and easy-to-read method, which is reflected in its 102 citations (as of 2025/11/30).

- No out-of-distribution experiments? How well does the model generalize to unseen noise types or recording conditions?

- Achieving SOTA vs. investigating Mamba: PCS and CL are not novel contributions but techniques for reaching SOTA. Given the paper's title, why not focus more on Mamba itself? For example: how could Mamba be applied more effectively, and how does Mamba actually process speech?