Functions, Tools and Agents with LangChain - 2

These are my notes from the DeepLearning.AI course.

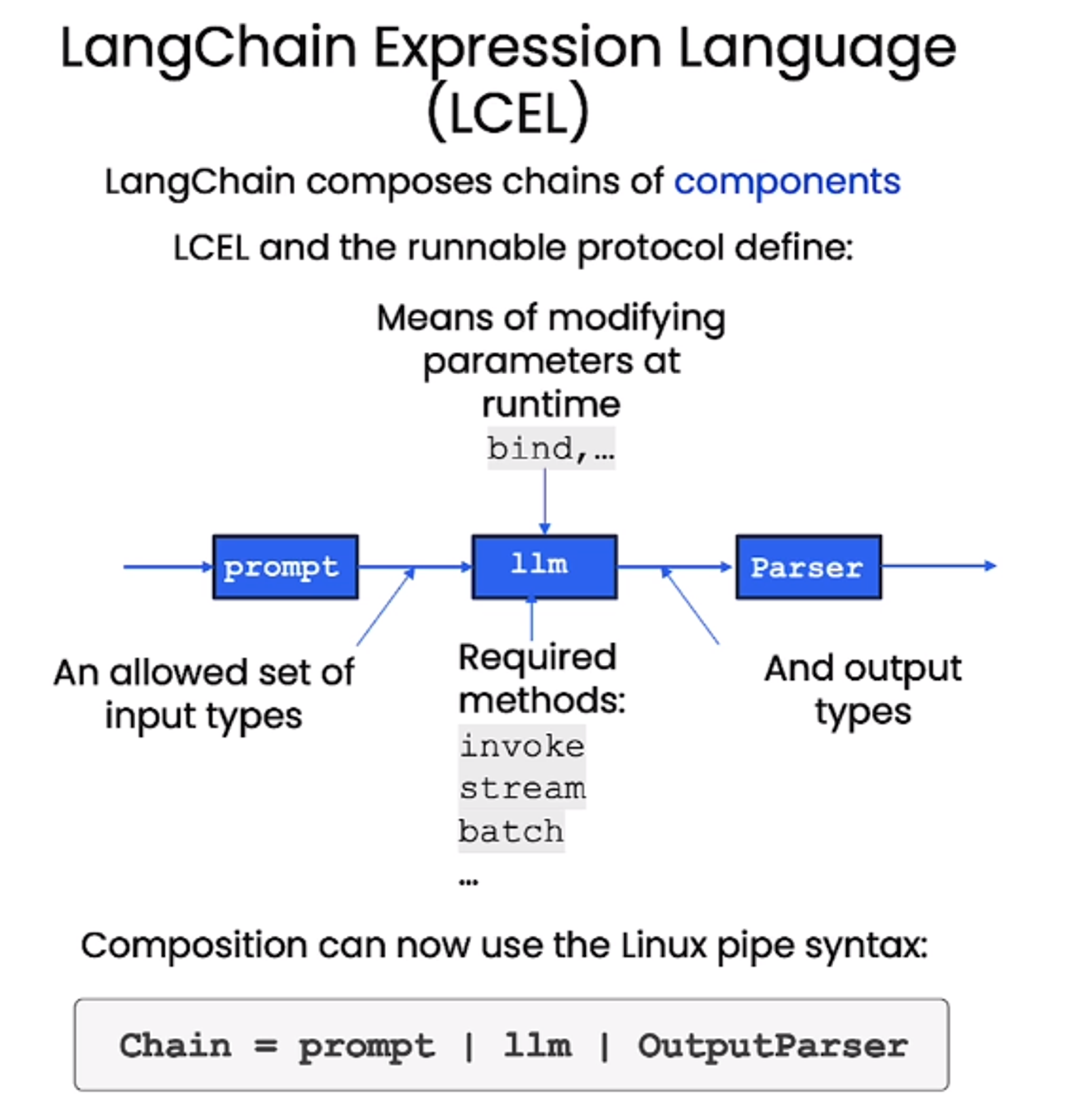

L2. LangChain Expression Language (LCEL)

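- Brings Linux-pipe (`|`) style composition to LangChain.
- Every component is a `Runnable` object, which makes chains easy to manage.
- Advantages of LCEL
  - Async, batch, and streaming support with almost no extra code
  - Fallbacks can be attached not only to an LLM but to an entire chain
  - Parallel execution
  - Built-in logging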
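The pipe idea above can be sketched without LangChain at all. This is a dependency-free toy, not LangChain's implementation: the `Step` class and the fake prompt/model/parser functions are hypothetical stand-ins. Each step wraps a function, and `a | b` (operator overloading via `__or__`) builds a new step that feeds `a`'s output into `b`. LangChain's real `Runnable` classes build a sequence with the same operator, with much more on top.

```python
from typing import Any, Callable, List


class Step:
    """Hypothetical minimal stand-in for a LangChain Runnable."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def invoke(self, value: Any) -> Any:
        return self.func(value)

    def batch(self, values: List[Any]) -> List[Any]:
        # Sequential in this toy; LangChain can run batch inputs in parallel
        return [self.invoke(v) for v in values]

    def __or__(self, other: "Step") -> "Step":
        # a | b -> a new Step that runs a, then passes its output to b
        return Step(lambda value: other.invoke(self.invoke(value)))


# Hypothetical components mimicking prompt | model | output_parser
prompt = Step(lambda d: f"tell me a short joke about {d['topic']}")
model = Step(lambda text: {"content": f"(fake model reply to: {text})"})
output_parser = Step(lambda message: message["content"])

chain = prompt | model | output_parser
print(chain.invoke({"topic": "bears"}))
# (fake model reply to: tell me a short joke about bears)
```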

Let's look at some examples.

Simple Chain

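```python
# Import paths as used in the course; newer releases move these
# to langchain_core / langchain_openai
from langchain.prompts import ChatPromptTemplate
from langchain.chat_models import ChatOpenAI
from langchain.schema.output_parser import StrOutputParser

prompt = ChatPromptTemplate.from_template(
    "tell me a short joke about {topic}"
)
model = ChatOpenAI()
output_parser = StrOutputParser()

# Compose the components with the pipe operator: prompt -> model -> output_parser
chain = prompt | model | output_parser

chain.invoke({"topic": "bears"})

# Output
"Why don't bears wear shoes?\n\nBecause they already have bear feet!"
```

More Complex Chain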

- Retrieval Augmented Generation (RAG) is just as easy to build.

```python
from langchain.embeddings import OpenAIEmbeddings
from langchain.vectorstores import DocArrayInMemorySearch

# Embed two short texts into an in-memory vector store
vectorstore = DocArrayInMemorySearch.from_texts(
    ["harrison worked at kensho", "bears like to eat honey"],
    embedding=OpenAIEmbeddings()
)
retriever = vectorstore.as_retriever()

retriever.get_relevant_documents("where did harrison work?")

# Output
[Document(page_content='harrison worked at kensho'),
 Document(page_content='bears like to eat honey')]
```
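Both documents come back because the retriever returns the top-k texts most similar to the query (the default k is 4, more than the two texts stored here), sorted by similarity. The sketch below mimics that ranking without any API calls. It swaps the OpenAI embeddings for a hypothetical bag-of-words vector and cosine similarity, so the scores are only illustrative, and `embed`, `cosine`, and `get_relevant` are hypothetical helpers.

```python
import math
import re
from collections import Counter
from typing import List

texts = ["harrison worked at kensho", "bears like to eat honey"]


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def get_relevant(query: str, k: int = 4) -> List[str]:
    q = embed(query)
    # Most similar first; sorted() is stable, so ties keep their stored order
    ranked = sorted(texts, key=lambda t: cosine(q, embed(t)), reverse=True)
    return ranked[:k]  # k=4 > 2 texts, so both are returned


print(get_relevant("where did harrison work?"))
print(get_relevant("what do bears like to eat"))
```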

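```python
retriever.get_relevant_documents("what do bears like to eat")

# Output
[Document(page_content='bears like to eat honey'),
 Document(page_content='harrison worked at kensho')]
```

- The prompt needs a context as well as the question, but the user only supplies the question. How do we fill in the context?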

```python
# RAG: the context is the Documents fetched by the retriever
template = """Answer the question based only on the following context:
{context}

Question: {question}
"""
prompt = ChatPromptTemplate.from_template(template)

# 1. Fetch relevant context
# 2. Pass 1. into the prompt
# 3. Pass 2. into a model
# 4. Pass 3. to the output parser

# First, write a Runnable that takes a single question and returns
# a dictionary with two keys: context and question
from langchain.schema.runnable import RunnableMap

chain = RunnableMap({
    "context": lambda x: retriever.get_relevant_documents(x["question"]),
    "question": lambda x: x["question"]
}) | prompt | model | output_parser

chain.invoke({"question": "where did harrison work?"})

# Output
'Harrison worked at Kensho.'
```
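`RunnableMap` runs every entry on the same input and gathers the results into a dict whose keys match the prompt's placeholders. A toy sketch of that data flow, using a hypothetical `run_map` helper and a hypothetical `fake_retriever` in place of the real ones (in LangChain the entries can also run in parallel; this toy runs them one after another):

```python
from typing import Any, Callable, Dict, List


def run_map(steps: Dict[str, Callable[[Any], Any]], value: Any) -> Dict[str, Any]:
    """Hypothetical stand-in for RunnableMap: the same input goes to every step."""
    return {key: step(value) for key, step in steps.items()}


def fake_retriever(question: str) -> List[str]:
    # Hypothetical retriever that always returns the two stored texts
    return ["harrison worked at kensho", "bears like to eat honey"]


template = """Answer the question based only on the following context:
{context}

Question: {question}
"""

inputs = run_map(
    {
        "context": lambda x: fake_retriever(x["question"]),
        "question": lambda x: x["question"],
    },
    {"question": "where did harrison work?"},
)
# The dict keys match the template's placeholders, so it fills in directly
print(template.format(**inputs))
```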

```python
# A closer look: run only the RunnableMap step
inputs = RunnableMap({
    "context": lambda x: retriever.get_relevant_documents(x["question"]),
    "question": lambda x: x["question"]
})

inputs.invoke({"question": "where did harrison work?"})

# Output: what gets passed into the prompt
{'context': [Document(page_content='harrison worked at kensho'),
             Document(page_content='bears like to eat honey')],
 'question': 'where did harrison work?'}
```

Bind

- With `bind`, you can attach functions to a model in a single step.

```python
# Example: attach a function called weather_search, backed by a weather API
functions = [
    {
        "name": "weather_search",
        "description": "Search for weather given an airport code",
        "parameters": {
            "type": "object",
            "properties": {
                "airport_code": {
                    "type": "string",
                    "description": "The airport code to get the weather for"
                },
            },
            "required": ["airport_code"]
        }
    }
]

prompt = ChatPromptTemplate.from_messages(
    [
        ("human", "{input}")
    ]
)

# .bind attaches the functions to every call the model makes
model = ChatOpenAI(temperature=0).bind(functions=functions)
runnable = prompt | model

runnable.invoke({"input": "what is the weather in sf"})

# Output
AIMessage(content='', additional_kwargs={'function_call': {'name': 'weather_search', 'arguments': '{\n "airport_code": "SFO"\n}'}})
```
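The model does not run `weather_search` itself: it only returns the function name and a JSON string of arguments in `additional_kwargs["function_call"]`, and executing the function is up to your code. A minimal dispatch sketch, where `weather_search` is a hypothetical local implementation, `TOOLS` is a hypothetical registry, and the message is a plain dict copied from the output above:

```python
import json
from typing import Any, Callable, Dict


def weather_search(airport_code: str) -> str:
    # Hypothetical implementation; a real one would call a weather API
    return f"Weather lookup for {airport_code}"


# Local functions the model may request, keyed by the schema's "name"
TOOLS: Dict[str, Callable[..., Any]] = {"weather_search": weather_search}

# Same shape as AIMessage.additional_kwargs in the output above
additional_kwargs = {
    "function_call": {
        "name": "weather_search",
        "arguments": '{\n "airport_code": "SFO"\n}',
    }
}

call = additional_kwargs["function_call"]
args = json.loads(call["arguments"])  # arguments arrive as a JSON string
result = TOOLS[call["name"]](**args)
print(result)  # Weather lookup for SFO
```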

```python
# To add a new function, just update `functions` and bind again
# Add a function that looks up news about recent sports events
functions = [
    {
        "name": "weather_search",
        "description": "Search for weather given an airport code",
        "parameters": {
            "type": "object",
            "properties": {
                "airport_code": {
                    "type": "string",
                    "description": "The airport code to get the weather for"
                },
            },
            "required": ["airport_code"]
        }
    },
    {
        "name": "sports_search",
        "description": "Search for news of recent sport events",
        "parameters": {
            "type": "object",
            "properties": {
                "team_name": {
                    "type": "string",
                    "description": "The sports team to search for"
                },
            },
            "required": ["team_name"]
        }
    }
]
```
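The next step calls `.bind` on a model that is already bound. Conceptually, `bind` stores keyword arguments and passes them along on every call, and binding again merges the new arguments over the old ones, so the updated `functions` list takes over. A toy sketch of that behavior; `FakeModel` and `Bound` are hypothetical classes, not LangChain's:

```python
from typing import Any, Dict


class FakeModel:
    """Hypothetical model that echoes back the kwargs it was called with."""

    def invoke(self, text: str, **kwargs: Any) -> Dict[str, Any]:
        return {"input": text, "kwargs": kwargs}

    def bind(self, **kwargs: Any) -> "Bound":
        return Bound(self, kwargs)


class Bound:
    """Hypothetical stand-in for a bound model: a model plus stored kwargs."""

    def __init__(self, model: FakeModel, kwargs: Dict[str, Any]) -> None:
        self.model = model
        self.kwargs = kwargs

    def invoke(self, text: str) -> Dict[str, Any]:
        # Stored kwargs are passed on every call
        return self.model.invoke(text, **self.kwargs)

    def bind(self, **kwargs: Any) -> "Bound":
        # Re-binding merges: new values override old ones with the same key
        return Bound(self.model, {**self.kwargs, **kwargs})


model = FakeModel().bind(functions=["weather_search"], temperature=0)
model = model.bind(functions=["weather_search", "sports_search"])
print(model.invoke("how did the patriots do yesterday?")["kwargs"])
# {'functions': ['weather_search', 'sports_search'], 'temperature': 0}
```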

```python
# Bind again with the updated list; it replaces the previous `functions`
model = model.bind(functions=functions)
runnable = prompt | model

runnable.invoke({"input": "how did the patriots do yesterday?"})

# Output
AIMessage(content='', additional_kwargs={'function_call': {'name': 'sports_search', 'arguments': '{\n "team_name": "patriots"\n}'}})
```

Fallbacks

- With LCEL you can attach a fallback not just to a single component but to an entire sequence.
- To force a failure, this example uses an older, weaker LLM that can't produce valid JSON.

```python
from langchain.llms import OpenAI
import json

# An older completion model that struggles to produce valid JSON
simple_model = OpenAI(
    temperature=0,
    max_tokens=1000,
    model="text-davinci-001"
)
# A plain function in a chain is wrapped into a Runnable automatically
simple_chain = simple_model | json.loads

challenge = "write three poems in a json blob, where each poem is a json blob of a title, author, and first line"

simple_model.invoke(challenge)

# Output
'\n\n["The Waste Land","T.S. Eliot","April is the cruelest month.... (rest omitted)"]'

simple_chain.invoke(challenge)  # raises an error because davinci's output is not valid JSON
```

```python
# A newer chat model, which does return valid JSON here
model = ChatOpenAI(temperature=0)
chain = model | StrOutputParser() | json.loads

chain.invoke(challenge)

# Output
{'poem1': {'title': 'Whispers of the Wind',
  'author': 'Emily Rivers',
  'first_line': 'Softly it comes, the whisper of the wind'},
 'poem2': {'title': 'Silent Serenade',
  'author': 'Jacob Moore',
  'first_line': 'In the stillness of night, a silent serenade'},
 'poem3': {'title': 'Dancing Shadows',
  'author': 'Sophia Anderson',
  'first_line': 'Shadows dance upon the moonlit floor'}}
```
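Before wiring this up with `.with_fallbacks`, here is the idea in plain Python: try the primary runnable, and if it raises, try each fallback in order, re-raising the first error only if every one fails. The sketch uses a hypothetical `with_fallbacks` helper and two fake chains (a broken one and a working one) in place of the real LLM calls:

```python
import json
from typing import Any, Callable, List


def with_fallbacks(primary: Callable[[Any], Any],
                   fallbacks: List[Callable[[Any], Any]]) -> Callable[[Any], Any]:
    """Hypothetical helper mimicking the try-in-order idea of .with_fallbacks."""
    def run(value: Any) -> Any:
        first_error = None
        for candidate in [primary, *fallbacks]:
            try:
                return candidate(value)
            except Exception as err:  # the real method's exceptions_to_handle can narrow this
                if first_error is None:
                    first_error = err
        raise first_error  # every candidate failed: surface the first error
    return run


def simple_chain(prompt: str) -> Any:
    # Fake weak model: truncated output, so json.loads raises
    return json.loads('["The Waste Land", "T.S. Eliot"')


def chain(prompt: str) -> Any:
    # Fake strong model: valid JSON
    return json.loads('{"poem1": {"title": "Example", "author": "Someone"}}')


final_chain = with_fallbacks(simple_chain, [chain])
print(final_chain("write three poems in a json blob"))
# {'poem1': {'title': 'Example', 'author': 'Someone'}}
```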

# .with_fallbacks를 이용하여 원래 runnable 즉, simple_chain(json 안되는모델) 실행

# 2. 에러가 난다면 리스트 내에 있는 runnable들(여기선 하나밖에 없음)을 차례대로 성공할 때까지 수행한다.

final_chain = simple_chain.with_fallbacks([chain])

final_chain.invoke(challenge)

# 결과

{'poem1': {'title': 'Whispers of the Wind',

'author': 'Emily Rivers',

'first_line': 'Softly it comes, the whisper of the wind'},

'poem2': {'title': 'Silent Serenade',

'author': 'Jacob Moore',

'first_line': 'In the stillness of night, a silent serenade'},

'poem3': {'title': 'Dancing Shadows',

'author': 'Sophia Anderson',

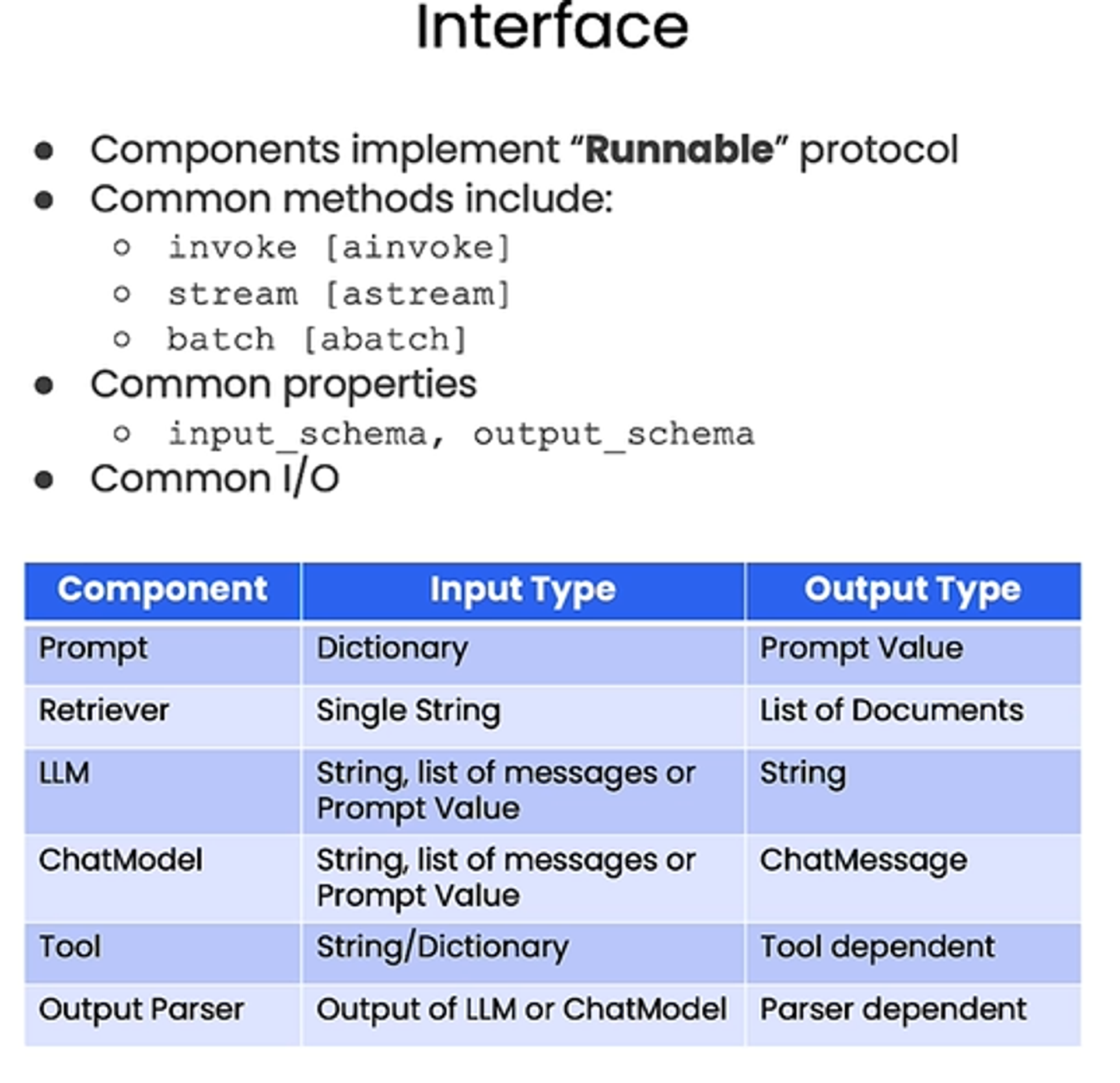

'first_line': 'Shadows dance upon the moonlit floor'}}Interface

- Every Runnable exposes the same interface: `invoke`, `batch`, `stream`, and async variants such as `ainvoke`.

```python
prompt = ChatPromptTemplate.from_template(
    "Tell me a short joke about {topic}"
)
model = ChatOpenAI()
output_parser = StrOutputParser()

chain = prompt | model | output_parser

# batch runs the chain over a list of inputs, in parallel where possible
chain.batch([{"topic": "bears"}, {"topic": "frogs"}])

# Output
["Why don't bears wear shoes?\n\nBecause they have bear feet!",
 'Why did the frog take the bus to work?\n\nBecause his car got toad away!']
```
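By default, LangChain's `batch` runs `invoke` for each input concurrently on a thread pool, so slow network calls overlap instead of queuing up. A standard-library sketch of that pattern; `fake_invoke` is a hypothetical stand-in for `chain.invoke` that just sleeps to simulate API latency, and `batch` here is a hypothetical helper:

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def fake_invoke(inputs: Dict[str, str]) -> str:
    """Hypothetical stand-in for chain.invoke: simulates a slow API call."""
    time.sleep(0.5)
    return f"a joke about {inputs['topic']}"


def batch(inputs: List[Dict[str, str]], max_concurrency: int = 4) -> List[str]:
    # executor.map returns results in the same order as the inputs
    with ThreadPoolExecutor(max_workers=max_concurrency) as executor:
        return list(executor.map(fake_invoke, inputs))


start = time.perf_counter()
results = batch([{"topic": "bears"}, {"topic": "frogs"}])
elapsed = time.perf_counter() - start
print(results)  # ['a joke about bears', 'a joke about frogs']
print(f"{elapsed:.1f}s")  # roughly 0.5s rather than 1.0s, since the calls overlap
```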

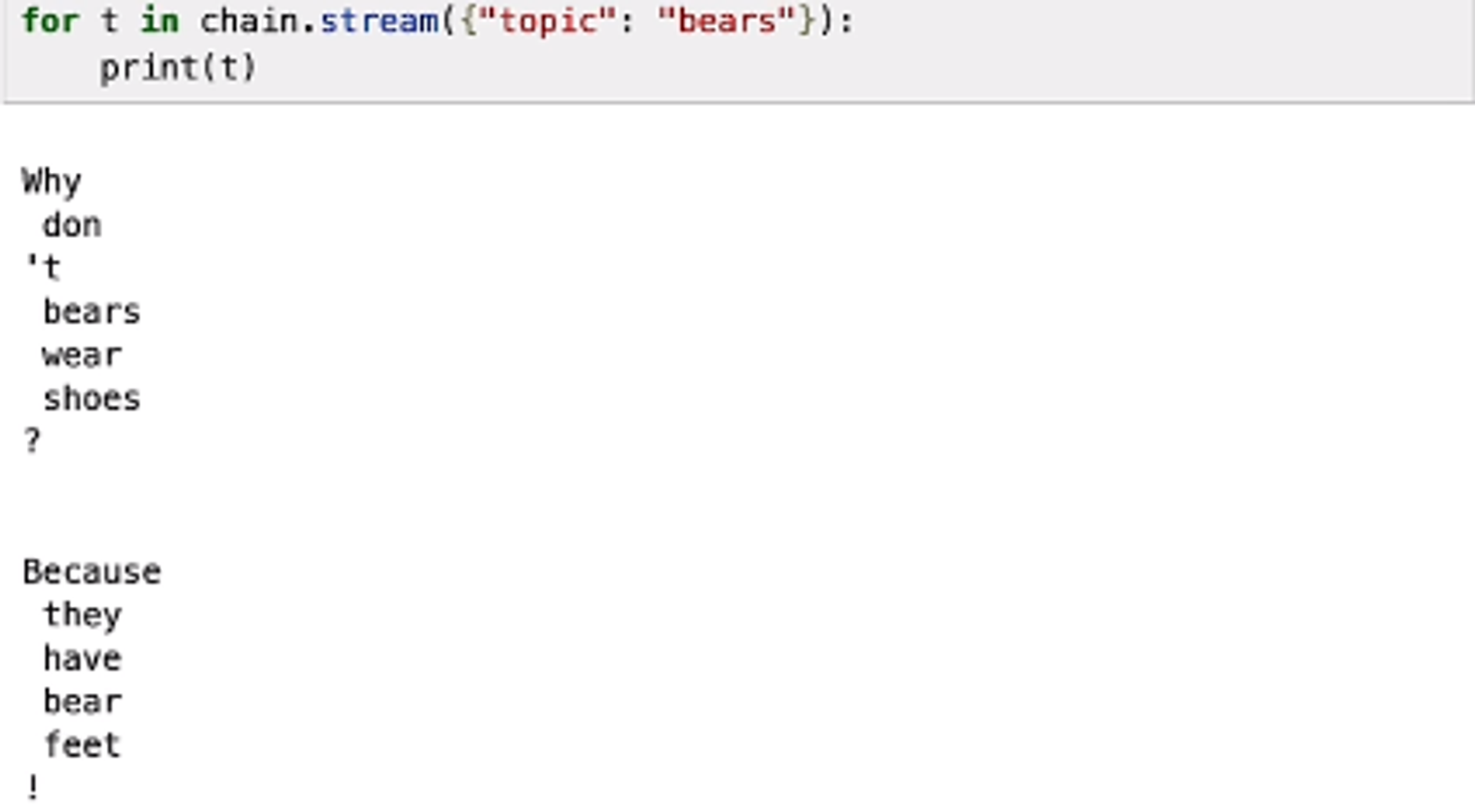

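- Streaming is easy to set up too. Previously you had to subclass a callback handler class and override its methods.
  - What is streaming? Sending the response out a few characters at a time as it is generated, the way ChatGPT does.
  - Showing the response as it is produced keeps the user from staring at a blank screen while waiting.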
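With LCEL, streaming amounts to looping over `chain.stream(...)`, which yields chunks as they arrive instead of returning one final string. The consuming side looks like the sketch below; `fake_stream` is a hypothetical generator standing in for `chain.stream`, so it runs without an API key:

```python
import time
from typing import Dict, Iterator


def fake_stream(inputs: Dict[str, str]) -> Iterator[str]:
    """Hypothetical stand-in for chain.stream: yields the reply in chunks."""
    reply = f"Here is a short joke about {inputs['topic']}."
    for i, word in enumerate(reply.split(" ")):
        time.sleep(0.05)  # simulate tokens arriving over the network
        yield word if i == 0 else " " + word


chunks = []
for chunk in fake_stream({"topic": "bears"}):
    print(chunk, end="", flush=True)  # show each piece as soon as it arrives
    chunks.append(chunk)
print()
```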

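```python
# Async is just as easy:
# instead of making each component async, await the Runnable's ainvoke
# (top-level await works in Jupyter; in a script, wrap it in asyncio.run)
response = await chain.ainvoke({"topic": "bears"})
response
```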
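Outside Jupyter, a top-level `await` is a syntax error, so the call goes inside an `async` function run by `asyncio.run`. Because `ainvoke` is awaitable, several calls can also overlap via `asyncio.gather`. A sketch with a hypothetical `fake_ainvoke` in place of `chain.ainvoke`:

```python
import asyncio
from typing import Dict, List


async def fake_ainvoke(inputs: Dict[str, str]) -> str:
    """Hypothetical stand-in for chain.ainvoke: simulates a slow API call."""
    await asyncio.sleep(0.5)
    return f"a joke about {inputs['topic']}"


async def main() -> List[str]:
    # A single call: the script equivalent of `await chain.ainvoke(...)`
    first = await fake_ainvoke({"topic": "bears"})
    # Several calls overlapped on one event loop; results keep argument order
    rest = await asyncio.gather(
        fake_ainvoke({"topic": "frogs"}),
        fake_ainvoke({"topic": "cats"}),
    )
    return [first, *rest]


responses = asyncio.run(main())
print(responses)  # ['a joke about bears', 'a joke about frogs', 'a joke about cats']
```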