Functions, Tools and Agents with LangChain - 6 (Final)

L6. Conversational Agent

- Let's combine everything covered so far to build a ChatGPT-like conversational agent.

- We'll create a custom tool and use LCEL to write a custom agent loop.

AgentExecutor: implements the agent loop

- Adds error handling, early stopping, tracing, etc

Agent Basics

Agents

- A combination of LLMs and code

- The LLM reasons about which steps to take and calls for actions

Agent loop

- Choose a tool to use

- Observe the output of the tool

- Repeat until a stopping condition is met

Stopping conditions

- Determined by the LLM

- Hardcoded rules (e.g., a maximum number of iterations)

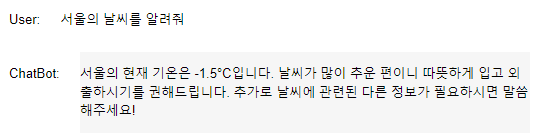
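The loop and both kinds of stopping conditions can be sketched in plain Python. This is a toy illustration, not LangChain code. `scripted_policy` and `stubborn_policy` are hypothetical stand-ins for the LLM. The names `run_loop` and `max_iterations` and the tuple-shaped decisions are assumptions made only for this sketch.

```python
def run_loop(policy, tools, user_input, max_iterations=5):
    """Toy agent loop: ask the policy for a decision, run tools, stop when done."""
    steps = []  # (action, observation) history passed back to the policy each turn
    for _ in range(max_iterations):  # hardcoded stopping rule
        decision = policy(user_input, steps)
        if decision[0] == "finish":  # stopping condition chosen by the "LLM"
            return decision[1], steps
        _, tool_name, tool_arg = decision
        observation = tools[tool_name](tool_arg)  # run the chosen tool
        steps.append(((tool_name, tool_arg), observation))  # observe and remember
    return "Stopped: hit max_iterations", steps


def scripted_policy(user_input, steps):
    # hypothetical stand-in for an LLM: call a tool once, then answer
    if not steps:
        return ("tool", "double", 21)
    return ("finish", f"The answer is {steps[-1][1]}")


def stubborn_policy(user_input, steps):
    # never decides to finish, so only the iteration cap stops it
    return ("tool", "double", 1)


tools = {"double": lambda x: x * 2}
answer, steps = run_loop(scripted_policy, tools, "double 21")
print(answer)  # The answer is 42
print(run_loop(stubborn_policy, tools, "loop", max_iterations=3)[0])  # Stopped: hit max_iterations
```

The first run ends because the policy chooses to finish. The second run ends only because of the iteration cap.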

Conversational agent example

- Reuses the examples from the previous lesson

- A tool that takes a latitude and longitude and returns the current temperature
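The key step inside the weather tool is to pick the hourly reading whose timestamp is closest to the current time. The sketch below isolates that step. It uses made-up data shaped roughly like Open-Meteo's `hourly` block, with ISO timestamps that have no timezone suffix. `closest_reading` is a hypothetical helper name, and a fixed `now` stands in for `utcnow()` so the result is reproducible.

```python
import datetime

# Made-up hourly forecast, roughly in the shape of Open-Meteo's "hourly" block
hourly = {
    "time": ["2024-01-01T00:00", "2024-01-01T01:00", "2024-01-01T02:00", "2024-01-01T03:00"],
    "temperature_2m": [10.1, 10.8, 11.3, 11.0],
}

def closest_reading(hourly, now):
    """Return the temperature whose timestamp is closest to `now` (naive UTC datetimes)."""
    times = [datetime.datetime.fromisoformat(t) for t in hourly["time"]]
    idx = min(range(len(times)), key=lambda i: abs(times[i] - now))
    return hourly["temperature_2m"][idx]

now = datetime.datetime(2024, 1, 1, 2, 20)  # fixed "current time" instead of utcnow()
print(closest_reading(hourly, now))  # 11.3
```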

```python
from langchain.tools import tool
import requests
from pydantic import BaseModel, Field
import datetime

# Define the input schema
class OpenMeteoInput(BaseModel):
    latitude: float = Field(..., description="Latitude of the location to fetch weather data for")
    longitude: float = Field(..., description="Longitude of the location to fetch weather data for")

@tool(args_schema=OpenMeteoInput)
def get_current_temperature(latitude: float, longitude: float) -> str:
    """Fetch current temperature for given coordinates."""
    BASE_URL = "https://api.open-meteo.com/v1/forecast"

    # Parameters for the request
    params = {
        'latitude': latitude,
        'longitude': longitude,
        'hourly': 'temperature_2m',
        'forecast_days': 1,
    }

    # Make the request
    response = requests.get(BASE_URL, params=params)
    if response.status_code == 200:
        results = response.json()
    else:
        raise Exception(f"API Request failed with status code: {response.status_code}")

    # Pick the hourly reading closest to the current UTC time
    current_utc_time = datetime.datetime.utcnow()
    time_list = [datetime.datetime.fromisoformat(time_str.replace('Z', '+00:00')) for time_str in results['hourly']['time']]
    temperature_list = results['hourly']['temperature_2m']
    closest_time_index = min(range(len(time_list)), key=lambda i: abs(time_list[i] - current_utc_time))
    current_temperature = temperature_list[closest_time_index]

    return f'The current temperature is {current_temperature}°C'
```

- A tool that searches Wikipedia and returns summaries of the top three results
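The search tool follows a simple pattern. It takes the top three search titles, fetches each page's summary, and skips any page that raises an error. The sketch below reproduces that pattern offline. `fake_search`, `fake_summary`, `PageError`, and the page data are all hypothetical stand-ins for the `wikipedia` package.

```python
class PageError(Exception):
    """Hypothetical stand-in for the wikipedia package's page errors."""

# Made-up search index: None simulates a page that fails to load
FAKE_PAGES = {
    "LangChain": "LangChain is a framework for building LLM applications.",
    "Chain": None,
    "Chain (unit)": "A chain is a unit of length.",
    "Chainsaw": "A chainsaw is a portable saw.",
}

def fake_search(query):
    # titles containing the query, in index order
    return [title for title in FAKE_PAGES if query.lower() in title.lower()]

def fake_summary(title):
    if FAKE_PAGES[title] is None:
        raise PageError(title)
    return FAKE_PAGES[title]

def summarize_top_results(query, k=3):
    summaries = []
    for title in fake_search(query)[:k]:  # only the top k titles are tried
        try:
            summaries.append(f"Page: {title}\nSummary: {fake_summary(title)}")
        except PageError:
            pass  # skip failing pages instead of aborting the whole search
    if not summaries:
        return "No good Wikipedia Search Result was found"
    return "\n\n".join(summaries)

print(summarize_top_results("chain"))
```

A failed page is skipped, not replaced, so fewer than three summaries can come back. The real tool slices `[:3]` before fetching, so it behaves the same way.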

```python
import wikipedia

@tool
def search_wikipedia(query: str) -> str:
    """Run Wikipedia search and get page summaries."""
    page_titles = wikipedia.search(query)
    summaries = []
    for page_title in page_titles[:3]:
        try:
            wiki_page = wikipedia.page(title=page_title, auto_suggest=False)
            summaries.append(f"Page: {page_title}\nSummary: {wiki_page.summary}")
        except (
            wikipedia.exceptions.PageError,
            wikipedia.exceptions.DisambiguationError,
        ):
            # Skip pages that don't exist or are ambiguous
            pass
    if not summaries:
        return "No good Wikipedia Search Result was found"
    return "\n\n".join(summaries)
```

- Put both tools in a list.

```python
tools = [get_current_temperature, search_wikipedia]
```

- Write a simple chain

```python
from langchain.chat_models import ChatOpenAI
from langchain.prompts import ChatPromptTemplate
from langchain.tools.render import format_tool_to_openai_function
from langchain.agents.output_parsers import OpenAIFunctionsAgentOutputParser

functions = [format_tool_to_openai_function(f) for f in tools]
model = ChatOpenAI(temperature=0).bind(functions=functions)
prompt = ChatPromptTemplate.from_messages([
    ("system", "You are helpful but sassy assistant"),
    ("user", "{input}"),
])
chain = prompt | model | OpenAIFunctionsAgentOutputParser()

result = chain.invoke({"input": "what is the weather in sf?"})

result.tool  # name of the tool the model chose
# 'get_current_temperature'

result.tool_input  # arguments suggested by the model
# {'latitude': 37.7749, 'longitude': -122.4194}
```

- What we want is a loop: the model decides which tool to use, and the process repeats until a stopping condition is met.

- To do that, the prompt needs a slot that receives the history of tool calls, so the model can produce an appropriate output.
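The loop has to tell the two kinds of model replies apart, which is the job of `OpenAIFunctionsAgentOutputParser` in the chain above. A reply carrying a `function_call` becomes an action to run, and a plain-text reply becomes the final answer. Below is a simplified sketch of that decision over plain dicts. `Action`, `Finish`, and `parse_reply` are hypothetical stand-ins, not the parser's real code.

```python
import json
from dataclasses import dataclass

@dataclass
class Action:  # hypothetical stand-in for AgentAction
    tool: str
    tool_input: dict

@dataclass
class Finish:  # hypothetical stand-in for AgentFinish
    output: str

def parse_reply(message: dict):
    """Map a model reply (as a plain dict) to either an Action or a Finish."""
    call = message.get("additional_kwargs", {}).get("function_call")
    if call:
        # The model asked for a tool: arguments arrive as a JSON string
        return Action(tool=call["name"], tool_input=json.loads(call["arguments"]))
    # No function call: the text content is the final answer
    return Finish(output=message["content"])

tool_reply = {
    "content": "",
    "additional_kwargs": {"function_call": {
        "name": "get_current_temperature",
        "arguments": '{"latitude": 37.7749, "longitude": -122.4194}',
    }},
}
print(parse_reply(tool_reply))
# Action(tool='get_current_temperature', tool_input={'latitude': 37.7749, 'longitude': -122.4194})
print(parse_reply({"content": "Hello!", "additional_kwargs": {}}))
# Finish(output='Hello!')
```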

```python
from langchain.prompts import MessagesPlaceholder

prompt = ChatPromptTemplate.from_messages([
    ("system", "You are helpful but sassy assistant"),
    ("user", "{input}"),
    MessagesPlaceholder(variable_name="agent_scratchpad")  # slot that receives a list of messages
])
chain = prompt | model | OpenAIFunctionsAgentOutputParser()

result1 = chain.invoke({
    "input": "what is the weather in sf?",
    "agent_scratchpad": []
})

result1.tool
# 'get_current_temperature'

observation = get_current_temperature.run(result1.tool_input)
observation
# 'The current temperature is 11.3°C'

type(result1)
# langchain.schema.agent.AgentActionMessageLog
```

- How do we convert this observation so it can be passed into agent_scratchpad?

```python
from langchain.agents.format_scratchpad import format_to_openai_functions

result1.message_log  # the messages showing how the model arrived at this agent action
# [AIMessage(content='', additional_kwargs={'function_call': {'name': 'get_current_temperature', 'arguments': '{\n "latitude": 37.7749,\n "longitude": -122.4194\n}'}})]

# Pass this message log and the observation to the scratchpad.
# The argument is a list of (action, observation) tuples, because the loop accumulates several steps.
format_to_openai_functions([(result1, observation)])
# [AIMessage(content='', additional_kwargs={'function_call': {'name': 'get_current_temperature', 'arguments': '{\n "latitude": 37.7749,\n "longitude": -122.4194\n}'}}),
#  FunctionMessage(content='The current temperature is 11.3°C', name='get_current_temperature')]
```
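The conversion can be imitated with plain dicts. Each (action, observation) pair becomes two messages: an assistant message that requests the function call, followed by a function message carrying the result. The real function returns LangChain message objects, like the `AIMessage`/`FunctionMessage` pair above. `format_steps` and the dict layout here are assumptions for illustration only.

```python
import json

def format_steps(steps):
    """Turn (action, observation) pairs into the message list the scratchpad expects."""
    messages = []
    for (tool_name, tool_input), observation in steps:
        # 1) the assistant message that requested the tool call
        messages.append({
            "role": "assistant",
            "content": "",
            "function_call": {"name": tool_name, "arguments": json.dumps(tool_input)},
        })
        # 2) the tool's result, tagged with the tool name
        messages.append({"role": "function", "name": tool_name, "content": str(observation)})
    return messages

steps = [(("get_current_temperature", {"latitude": 37.7749, "longitude": -122.4194}),
          "The current temperature is 11.3°C")]
for m in format_steps(steps):
    print(m["role"], m.get("name", ""), m["content"])
```

With no steps yet, the result is an empty list, which matches passing `"agent_scratchpad": []` on the first call.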

- Pass it in when invoking the chain:

```python
result2 = chain.invoke({
    "input": "what is the weather in sf?",
    "agent_scratchpad": format_to_openai_functions([(result1, observation)])
})
result2
# AgentFinish(return_values={'output': 'The current temperature in San Francisco is 11.3°C.'}, log='The current temperature in San Francisco is 11.3°C.')
```

- The loop completes normally: with the tool result in the scratchpad, the model returns its answer as an AgentFinish.

- Let's use this to build a chat agent.

```python
from langchain.schema.agent import AgentFinish

def run_agent(user_input):
    intermediate_steps = []
    while True:
        result = chain.invoke({
            "input": user_input,
            "agent_scratchpad": format_to_openai_functions(intermediate_steps)
        })
        # The model gave a final answer: stop looping
        if isinstance(result, AgentFinish):
            return result
        # Otherwise look up the requested tool by name, run it, and record the step
        tool = {
            "search_wikipedia": search_wikipedia,
            "get_current_temperature": get_current_temperature,
        }[result.tool]
        observation = tool.run(result.tool_input)
        intermediate_steps.append((result, observation))
```

- We make a small change so the chain accepts the intermediate steps directly:

- RunnablePassthrough takes its input and passes it along unchanged.

- Its assign method adds new keys to the input dictionary.
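The effect of `assign` on a dict input can be mimicked in plain Python. Every input key is kept, and each keyword argument adds a new key computed from that same input. The `assign` function below is a hypothetical analogue, not LangChain's implementation.

```python
def assign(**computed):
    """Hypothetical plain-Python analogue of RunnablePassthrough.assign."""
    def run(inputs: dict) -> dict:
        # keep every original key, then add each computed key
        return {**inputs, **{key: fn(inputs) for key, fn in computed.items()}}
    return run

add_scratchpad = assign(
    agent_scratchpad=lambda x: [f"step: {s}" for s in x["intermediate_steps"]]
)
out = add_scratchpad({"input": "hi", "intermediate_steps": ["a", "b"]})
print(out)
# {'input': 'hi', 'intermediate_steps': ['a', 'b'], 'agent_scratchpad': ['step: a', 'step: b']}
```

Because the original keys survive, the downstream prompt still receives `input`, while `agent_scratchpad` is derived from `intermediate_steps` on every call.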

from langchain.schema.runnable import RunnablePassthrough

agent_chain = RunnablePassthrough.assign(

agent_scratchpad= lambda x: format_to_openai_functions(x["intermediate_steps"])

) | chaindef run_agent(user_input):

intermediate_steps = []

while True:

# 변경된 부분 - 새로 정의한 chain에 intermediate_steps라는 Passthrough넘겨줌

result = agent_chain.invoke({

"input": user_input,

"intermediate_steps": intermediate_steps

})

if isinstance(result, AgentFinish):

return result

tool = {

"search_wikipedia": search_wikipedia,

"get_current_temperature": get_current_temperature,

}[result.tool]

observation = tool.run(result.tool_input)

intermediate_steps.append((result, observation))run_agent("what is the weather is sf?")

#결과

AgentFinish(return_values={'output': 'The current temperature in San Francisco is 11.3°C.'}, log='The current temperature in San Francisco is 11.3°C.')

run_agent("what is langchain?")

# 결과

AgentFinish(return_values={'output': 'I couldn\'t find specific information about "LangChain" in my search results. It\'s possible that LangChain is a term or co...

run_agent("hi!")

# 결과

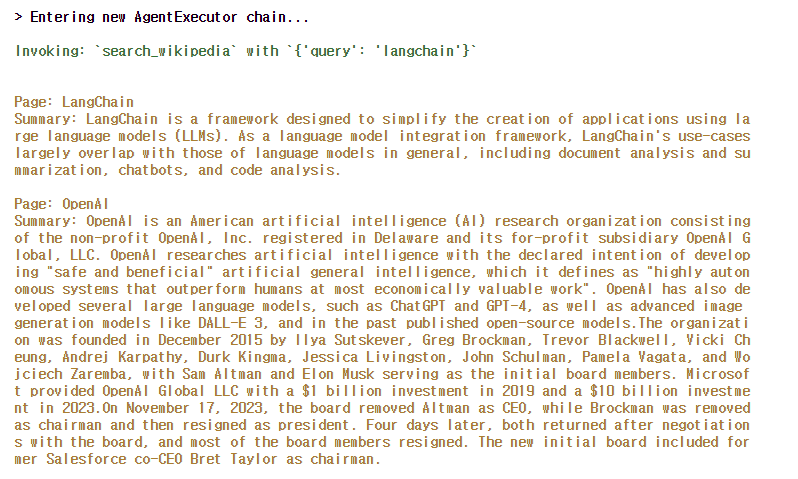

AgentFinish(return_values={'output': 'Hello! How can I assist you today?'}, log='Hello! How can I assist you today?')- agent_executor는 위 run_agent 함수의 업그레이드 버전으로써 간단하게 사용 가능하다.

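- It adds logging and error handling.

- As in the earlier lesson, it handles cases where the model's output is not valid JSON.

- Even when a tool raises an error, the LLM gets to decide what to do next.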
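Much of this error handling comes down to one idea: a failure becomes an observation instead of an exception, so the model can see what went wrong and try again. The sketch below is a hypothetical helper, not AgentExecutor's code; AgentExecutor is configured through options such as `max_iterations` and `handle_parsing_errors`. The sketch covers two cases. The first is an unknown tool name, which would raise a `KeyError` in `run_agent` above. The second is a tool that raises an exception.

```python
def safe_step(tools, name, arg):
    """Run one tool call, turning failures into observations instead of crashing."""
    if name not in tools:
        # unknown tool: tell the model which tools exist
        return f"{name} is not a valid tool, try one of {sorted(tools)}."
    try:
        return str(tools[name](arg))
    except Exception as exc:
        # feed the error text back so the model can react to it
        return f"Error: {exc}"

tools = {"reverse": lambda s: s[::-1], "divide_100_by": lambda n: 100 / n}
print(safe_step(tools, "reverse", "abc"))    # cba
print(safe_step(tools, "divide_100_by", 0))  # Error: division by zero
print(safe_step(tools, "search", "x"))       # search is not a valid tool, try one of ['divide_100_by', 'reverse'].
```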

```python
from langchain.agents import AgentExecutor

agent_executor = AgentExecutor(agent=agent_chain, tools=tools, verbose=True)
agent_executor.invoke({"input": "what is langchain?"})
```

For some reason, the agent finds the relevant information but fails to return it in its answer.

```
> Finished chain.

{'input': 'what is langchain?', 'output': 'I couldn\'t find specific information about "LangChain" in my search results. It\'s possible that LangChain is a term or concept that is not widely known or documented. If you have any additional information or context about LangChain, I may be able to provide more assistance.'}
```

- We have now implemented the loop an agent runs until it produces a result. This resembles how a service like ChatGPT works.

- Next, let's reproduce ChatGPT's back-and-forth conversation.

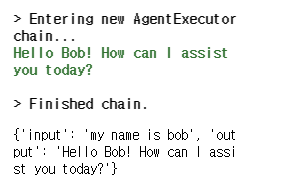

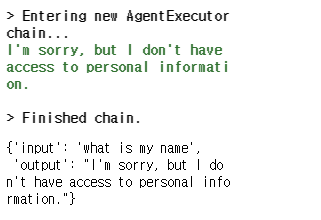

```python
agent_executor.invoke({"input": "My name is Bob"})
agent_executor.invoke({"input": "what is my name"})
```

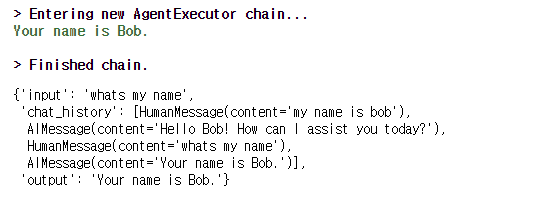

- To make the agent remember earlier turns, we need to add a slot in the prompt for the previous messages.
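Conceptually, the memory stores every past exchange. The prompt then splices that history in between the system message and the new user input. Below is a hypothetical minimal buffer that shows both return forms: a message list and a single prefixed string. `ChatBuffer`, `build_prompt`, and the message tuples are assumptions for this sketch, not LangChain's `ConversationBufferMemory`.

```python
class ChatBuffer:
    """Hypothetical minimal conversation buffer, not LangChain's ConversationBufferMemory."""

    PREFIXES = {"human": "Human", "ai": "AI"}

    def __init__(self):
        self.messages = []  # (role, text) pairs, oldest first

    def save_turn(self, user_text, ai_text):
        # store one full exchange after each agent call
        self.messages.append(("human", user_text))
        self.messages.append(("ai", ai_text))

    def as_messages(self):
        # analogous to return_messages=True: a list of messages
        return list(self.messages)

    def as_string(self):
        # analogous to return_messages=False: one string with role prefixes
        return "\n".join(f"{self.PREFIXES[role]}: {text}" for role, text in self.messages)


def build_prompt(system, history, user_input, scratchpad):
    # same slot order as the prompt: system, chat_history, user input, agent_scratchpad
    return [("system", system), *history, ("human", user_input), *scratchpad]


memory = ChatBuffer()
memory.save_turn("my name is bob", "Nice to meet you, Bob!")  # made-up reply
messages = build_prompt("You are helpful but sassy assistant",
                        memory.as_messages(), "whats my name", [])
print(len(messages))  # 4
print(memory.as_string())
```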

```python
from langchain.memory import ConversationBufferMemory

prompt = ChatPromptTemplate.from_messages([
    ("system", "You are helpful but sassy assistant"),
    MessagesPlaceholder(variable_name="chat_history"),  # declared before the user input; the variable name links it to the memory
    ("user", "{input}"),
    MessagesPlaceholder(variable_name="agent_scratchpad")
])

agent_chain = RunnablePassthrough.assign(
    agent_scratchpad=lambda x: format_to_openai_functions(x["intermediate_steps"])
) | prompt | model | OpenAIFunctionsAgentOutputParser()

# Set return_messages=False to receive the history as a single string instead of a list of messages
memory = ConversationBufferMemory(return_messages=True, memory_key="chat_history")
```

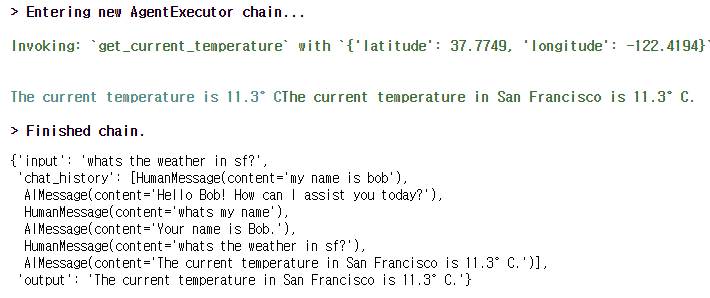

```python
agent_executor = AgentExecutor(agent=agent_chain, tools=tools, verbose=True, memory=memory)
agent_executor.invoke({"input": "my name is bob"})
agent_executor.invoke({"input": "whats my name"})
agent_executor.invoke({"input": "whats the weather in sf?"})
```

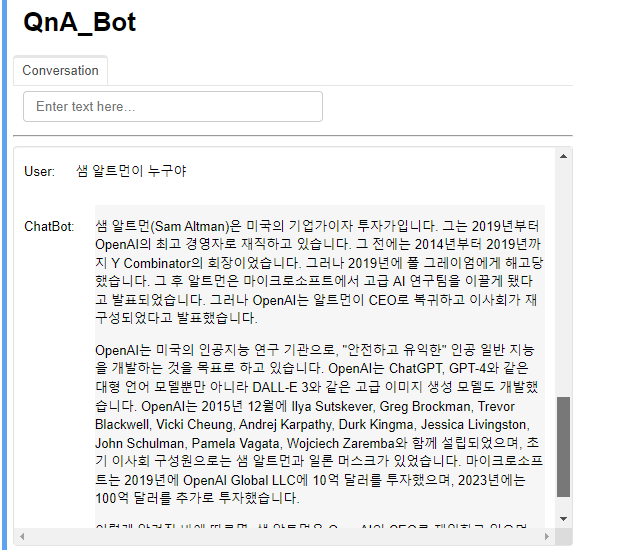

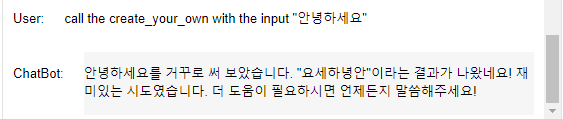

Create a chatbot

```python
# A tool that returns its input reversed
@tool
def create_your_own(query: str) -> str:
    """Return the input text reversed."""  # the docstring becomes the tool description the LLM sees
    return query[::-1]

tools = [get_current_temperature, search_wikipedia, create_your_own]

import panel as pn  # GUI
import param

pn.extension()
```

```python
class cbfs(param.Parameterized):

    def __init__(self, tools, **params):
        super().__init__(**params)
        self.panels = []
        self.functions = [format_tool_to_openai_function(f) for f in tools]
        self.model = ChatOpenAI(temperature=0).bind(functions=self.functions)
        self.memory = ConversationBufferMemory(return_messages=True, memory_key="chat_history")
        self.prompt = ChatPromptTemplate.from_messages([
            ("system", "You are helpful but sassy assistant"),
            MessagesPlaceholder(variable_name="chat_history"),
            ("user", "{input}"),
            MessagesPlaceholder(variable_name="agent_scratchpad")
        ])
        self.chain = RunnablePassthrough.assign(
            agent_scratchpad=lambda x: format_to_openai_functions(x["intermediate_steps"])
        ) | self.prompt | self.model | OpenAIFunctionsAgentOutputParser()
        self.qa = AgentExecutor(agent=self.chain, tools=tools, verbose=False, memory=self.memory)

    def convchain(self, query):
        if not query:
            return
        inp.value = ''  # clear the global input widget defined below
        result = self.qa.invoke({"input": query})
        self.answer = result['output']
        self.panels.extend([
            pn.Row('User:', pn.pane.Markdown(query, width=450)),
            pn.Row('ChatBot:', pn.pane.Markdown(self.answer, width=450, styles={'background-color': '#F6F6F6'}))
        ])
        return pn.WidgetBox(*self.panels, scroll=True)

    def clr_history(self, count=0):
        # Clear the agent's conversation memory
        self.memory.clear()
        return

cb = cbfs(tools)
```

```python
inp = pn.widgets.TextInput(placeholder='Enter text here…')

conversation = pn.bind(cb.convchain, inp)

tab1 = pn.Column(
    pn.Row(inp),
    pn.layout.Divider(),
    pn.panel(conversation, loading_indicator=True, height=400),
    pn.layout.Divider(),
)

dashboard = pn.Column(
    pn.Row(pn.pane.Markdown('# QnA_Bot')),
    pn.Tabs(('Conversation', tab1))
)
dashboard
```