Understanding Sound Source Direction: From Human Hearing to Digital Implementation

How Do Humans Locate Sound?

Our ability to determine where a sound comes from is an example of biological signal processing. When a sound occurs, our brain processes subtle differences in how that sound reaches each of our ears. It relies on two main cues.

Consider what happens when someone calls your name from your left side. The sound reaches your left ear slightly before it reaches your right ear, a difference known as the Interaural Time Difference (ITD). The sound is also slightly louder in your left ear because your head casts an acoustic shadow over your right ear, producing an Interaural Level Difference (ILD).

Your brain combines these time and level differences to estimate where the sound originated. For sources in front of the listener, this lets us tell apart directions that are only a few degrees apart.
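To get a feel for how small the timing cue is, the ITD can be approximated with a simple path-difference model, ITD ≈ d · sin(θ) / c. The sketch below is illustrative only: the ear spacing (0.18 m), the speed of sound (343 m/s), and the function name are assumptions, and the model ignores how sound bends around the head.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: air at roughly 20 degrees Celsius
EAR_SPACING_M = 0.18        # assumed: rough adult ear-to-ear distance


def approx_itd_seconds(azimuth_deg, spacing_m=EAR_SPACING_M):
    """Path-difference model: ITD = d * sin(azimuth) / c.

    azimuth_deg is 0 for straight ahead and 90 for directly to one side.
    """
    return spacing_m * math.sin(math.radians(azimuth_deg)) / SPEED_OF_SOUND_M_S


for angle in (0, 30, 90):
    itd_us = approx_itd_seconds(angle) * 1e6
    print(f"{angle:>2} degrees -> {itd_us:.0f} microseconds")
```

Under these assumptions, a source directly to one side reaches the nearer ear roughly half a millisecond before the farther one.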

Digital Implementation: Mimicking Human Hearing

In our implementation, we've created a simplified version of this biological system using the stereo recording capability of a Samsung Galaxy Z Flip 3. It relies only on the level difference between the two channels and ignores the timing cue. Here's how it works:

Recording Setup

The Samsung Galaxy Z Flip 3 has microphones positioned around the device, with one at the top and another at the bottom. With stereo recording enabled in the Voice Recorder app, we can capture sound using two microphones simultaneously, much as our two ears do. While recording, we keep the phone in a fixed sideways position and treat the top microphone as 'left' and the bottom microphone as 'right'. Each microphone's input is saved to a separate audio channel (left and right).

Understanding Sound Source Detection: A Deep Dive into the Implementation

Here's the complete code and how we identify the sound source.

import soundfile as sf
import numpy as np
from pydub import AudioSegment

def convert_to_wav(audio_file, output_file):
    """
    Converts an audio file (like M4A) to WAV format for easier processing.

    Args:
        audio_file (str): Path to the input audio file
        output_file (str): Path where the WAV file will be saved
    """
    # Load the audio file using pydub, which supports various formats
    audio = AudioSegment.from_file(audio_file)

    # Export as WAV format - this preserves the stereo channels
    audio.export(output_file, format="wav")

def separate_interview_audio(audio_file):
    """
    Reads a stereo WAV file and separates it into two mono channels.

    Args:
        audio_file (str): Path to the WAV file

    Returns:
        tuple: (top_mic array, bottom_mic array, sample rate)

    Raises:
        ValueError: If the file does not contain at least two channels
    """
    # sf.read returns a tuple: (audio_data, sample_rate)
    # For stereo audio, audio_data is a 2D array where each row is a sample
    # and each column is a channel
    y, sr = sf.read(audio_file)

    # A mono file yields a 1D array, so y[:, 0] would raise an IndexError
    if y.ndim != 2 or y.shape[1] < 2:
        raise ValueError("Expected a stereo recording with two channels")

    # Extract individual channels
    # y[:, 0] takes all rows (:) and the first column (0) - left channel
    # y[:, 1] takes all rows (:) and the second column (1) - right channel
    top_mic = y[:, 0]     # Left channel data
    bottom_mic = y[:, 1]  # Right channel data

    # Save each channel as a separate mono WAV file
    # This is useful for debugging or further analysis
    sf.write('top_mic.wav', top_mic, sr)
    sf.write('bottom_mic.wav', bottom_mic, sr)

    return top_mic, bottom_mic, sr

def calculate_sound_direction(top_mic, bottom_mic):
    """
    Determines the sound source direction by comparing energy levels
    between the two microphone channels.

    Args:
        top_mic (np.array): Audio data from the top microphone
        bottom_mic (np.array): Audio data from the bottom microphone

    Returns:
        str: Direction of the sound source ("left" or "right")
    """
    # Calculate energy by squaring each sample and summing
    # np.square squares each value in the array
    # np.sum adds up all the squared values
    top_energy = np.sum(np.square(top_mic))
    bottom_energy = np.sum(np.square(bottom_mic))

    # Compare energies to determine direction
    # Higher energy in the top mic means sound is coming from the left
    if top_energy > bottom_energy:
        return "left"
    else:
        return "right"

# Example usage showing the complete workflow

def main():

# File paths for input and output

input_file_path = "/path/to/your/recording.m4a"

wav_file_path = "/path/to/your/recording.wav"

# Step 1: Convert M4A to WAV

print("Converting audio file to WAV format...")

convert_to_wav(input_file_path, wav_file_path)

# Step 2: Separate stereo channels

print("Separating stereo channels...")

top, bottom, sample_rate = separate_interview_audio(wav_file_path)

# Step 3: Calculate and display direction

print("Analyzing sound direction...")

direction = calculate_sound_direction(top, bottom)

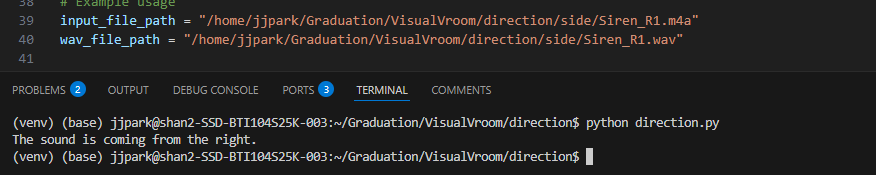

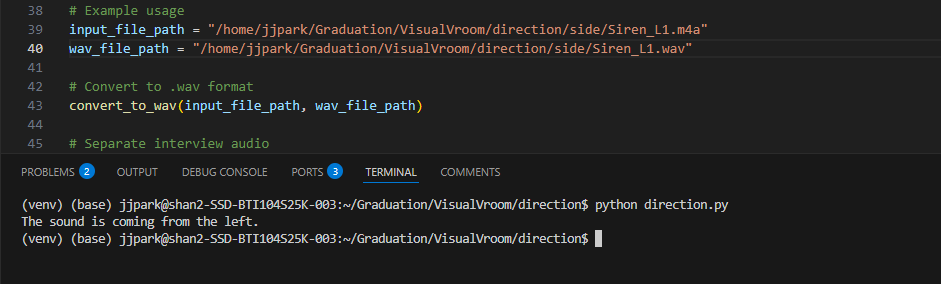

print(f"The sound is coming from the {direction}.")

if __name__ == "__main__":

main()Let's break down each major component:

1. File Conversion (convert_to_wav)

This function handles the initial file conversion. We need WAV format because:

- WAV normally stores uncompressed PCM samples, making it easier to process

- It maintains the original stereo channel separation

- soundfile, which we use to read the samples, cannot open M4A files directly, whereas pydub can decode them (it relies on ffmpeg being installed)
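Since everything downstream assumes two channels, it can be worth confirming that the converted file really is stereo before analysing it. The following is a minimal sketch using Python's standard wave module; the helper names wav_channel_count and require_stereo are hypothetical and not part of the original code.

```python
import wave


def wav_channel_count(wav_path):
    """Return the number of channels stored in a PCM WAV file."""
    with wave.open(wav_path, "rb") as wav_file:
        return wav_file.getnchannels()


def require_stereo(wav_path):
    """Raise ValueError unless the file has exactly two channels."""
    channels = wav_channel_count(wav_path)
    if channels != 2:
        raise ValueError(f"expected 2 channels, found {channels}")
```

Calling require_stereo(wav_file_path) right after the conversion step would surface a mono recording early, before the channel split fails.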

2. Channel Separation (separate_interview_audio)

This function does the crucial work of splitting the stereo recording into its component channels. When you record in stereo mode:

- The left channel (top_mic) captures audio from one microphone

- The right channel (bottom_mic) captures audio from the other microphone

The line y, sr = sf.read(audio_file) gives us:

- y: A 2D numpy array where each row is a sample and each column is a channel

- sr: The sample rate (typically 44100 Hz)
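The array layout is easier to see on synthetic data than on a real recording. This sketch builds a two-column array by hand, with rows as samples and columns as channels, and slices it the same way the function does. The 440 Hz tone and the 44100 Hz rate are arbitrary choices for illustration.

```python
import numpy as np

sample_rate = 44100  # assumed for illustration
t = np.arange(sample_rate) / sample_rate  # one second of sample times
tone = np.sin(2 * np.pi * 440 * t)

# Stack two mono signals column-wise: rows are samples, columns are channels
y = np.column_stack([tone, 0.5 * tone])

left = y[:, 0]   # first column: left channel (top mic in this setup)
right = y[:, 1]  # second column: right channel (bottom mic in this setup)

print(y.shape)                  # (44100, 2)
print(left.shape, right.shape)  # (44100,) (44100,)
```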

3. Direction Calculation (calculate_sound_direction)

This is where the actual direction detection happens. The function:

1. Calculates energy in each channel by:

- Squaring each sample (to get positive values)

- Summing all squared values (to get total energy)

2. Compares the energies to determine direction:

- More energy in top mic = sound from left

- More energy in bottom mic = sound from right

The energy comparison mirrors the level-difference cue (ILD) our ears use: the microphone closer to the sound source will generally record a higher energy level.
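The same two energies can also be expressed as a level difference in decibels, which shows how strongly one channel dominates rather than only which one wins. This is a sketch, not part of the original code: the function name level_difference_db and the eps guard against silent input are assumptions.

```python
import numpy as np


def level_difference_db(top_mic, bottom_mic, eps=1e-12):
    """Return 10 * log10(top_energy / bottom_energy).

    Positive values mean the top (left) channel carries more energy.
    eps is a small hypothetical guard against division by zero on silence.
    """
    top_energy = np.sum(np.square(top_mic))
    bottom_energy = np.sum(np.square(bottom_mic))
    return 10.0 * np.log10((top_energy + eps) / (bottom_energy + eps))


# Synthetic check: the "bottom" channel is the same tone at half amplitude,
# so it carries a quarter of the energy.
t = np.arange(48000) / 48000
tone = np.sin(2 * np.pi * 440 * t)
print(round(float(level_difference_db(tone, 0.5 * tone)), 2))  # 6.02
```

A value near 0 dB means the two channels are almost equally loud, which is a case where the left/right answer should be treated with caution.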

Usage Considerations

When using this code, remember:

1. The accuracy depends on:

- Microphone quality and positioning

- Environmental factors (echoes, background noise)

- Distance from sound source

2. The direction detection is binary (left/right) - it doesn't detect intermediate angles

3. The stereo recording must be properly configured on your device
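One possible way past the binary limitation is to use the timing cue (ITD) described at the start, by measuring how many samples one channel lags the other. The sketch below is an assumption-laden illustration rather than part of the original implementation: the function name and the max_lag search window are hypothetical, both channels are assumed to have the same length, and a phone may process its microphone signals in ways that distort the true delay.

```python
import numpy as np


def estimate_delay_samples(top_mic, bottom_mic, max_lag=32):
    """Return the lag (in samples) at which the two channels align best.

    A positive result means the bottom channel lags the top channel,
    i.e. the sound reached the top microphone first.
    """
    n = len(top_mic)
    best_lag, best_score = 0, -np.inf
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = top_mic[: n - lag], bottom_mic[lag:]
        else:
            a, b = top_mic[-lag:], bottom_mic[: n + lag]
        score = float(np.dot(a, b))  # cross-correlation at this lag
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


# Synthetic check: delay a noise signal by 5 samples
rng = np.random.default_rng(0)
signal = rng.standard_normal(2000)
delayed = np.concatenate([np.zeros(5), signal[:-5]])
print(estimate_delay_samples(signal, delayed))  # 5
```

Dividing the lag by the sample rate gives a delay in seconds. Turning that into an angle would additionally require the distance between the two microphones, which is not stated here.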