Training Direction-Aware Sound Recognition: A Journey from 12 to 8 Classes

While building a sound recognition system for emergency vehicles, I ran into a problem that pushed me to change the model's output classes.

The Initial Approach: 12-Class Model

Our first simpleViT model was trained to recognize 12 distinct classes, representing four vehicle types (Ambulance, Police Car, Fire Truck, and Car Horn) each with three directional variants (Left, Middle, and Right). The test results were impressive:

Test Accuracy: 95.31%

With individual class accuracies ranging from 93% to 100% for most classes, the model seemed highly capable. However, when implemented in our Android application, we encountered a crucial issue: the model consistently classified sounds as coming from the "Middle" direction, regardless of their actual source location.
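To make the 12-class layout concrete, here is a minimal sketch of how the label set could be built from the four vehicle types and three directions. The class ordering and the names `CLASS_NAMES`, `CLASS_TO_INDEX`, and `split_label` are assumptions for illustration, not the ones used in the actual training code.

```python
from itertools import product

VEHICLES = ["Ambulance", "Police Car", "Fire Truck", "Car Horn"]
DIRECTIONS = ["Left", "Middle", "Right"]

# One class per (vehicle, direction) pair; the index order is an assumption.
CLASS_NAMES = [f"{vehicle} {direction}" for vehicle, direction in product(VEHICLES, DIRECTIONS)]
CLASS_TO_INDEX = {name: index for index, name in enumerate(CLASS_NAMES)}


def split_label(name):
    """Split a label such as 'Fire Truck Left' into ('Fire Truck', 'Left')."""
    vehicle, direction = name.rsplit(" ", 1)
    return vehicle, direction


print(len(CLASS_NAMES))  # 12
```

Keeping vehicle and direction recoverable from the label makes it easy to score the two parts of a prediction separately.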

The Problem

This behavior revealed a fundamental challenge in directional sound recognition: while the model performed well in controlled test conditions, it struggled with real-world directional discrimination. The "Middle" classification became a sort of default prediction, compromising the system's ability to provide accurate directional awareness.
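One way to confirm this kind of bias is to tally the direction part of the predictions logged on the device. The sketch below assumes predictions are available as label strings like "Ambulance Middle"; the helper `direction_share` and the sample log are hypothetical.

```python
from collections import Counter


def direction_share(predicted_labels):
    """Return the fraction of predictions that fall on each direction."""
    directions = [label.rsplit(" ", 1)[1] for label in predicted_labels]
    counts = Counter(directions)
    total = len(directions)
    return {direction: count / total for direction, count in counts.items()}


# Hypothetical predictions logged from the app, not real measurements.
logged = ["Ambulance Middle", "Police Car Middle", "Ambulance Middle", "Car Horn Left"]
share = direction_share(logged)
print(share)
```

If one direction dominates regardless of where the sound actually came from, the model is falling back on a default rather than discriminating.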

The Solution: 8-Class Model

To address this issue, I simplified the model by removing the "Middle" direction entirely, leaving us with 8 classes (four vehicle types × two directions). This decision was based on two considerations:

- In real-world scenarios, precise left/right discrimination is more valuable than detecting a middle position

- Reducing the number of directional options could help the model make more decisive directional predictions
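The move from 12 to 8 classes can be sketched as a filter plus an index remap. This assumes "Middle" recordings are simply dropped from the dataset and that samples are stored as `(features, label_index)` pairs; both are assumptions, as are the names `OLD_TO_NEW` and `drop_middle`.

```python
VEHICLES = ["Ambulance", "Police Car", "Fire Truck", "Car Horn"]

# Original 12-class layout (ordering is an assumption).
OLD_CLASSES = [f"{v} {d}" for v in VEHICLES for d in ("Left", "Middle", "Right")]

# New 8-class layout: same order with every "Middle" class removed.
NEW_CLASSES = [name for name in OLD_CLASSES if not name.endswith(" Middle")]

# Map each surviving old index to its new index.
OLD_TO_NEW = {OLD_CLASSES.index(name): new_index for new_index, name in enumerate(NEW_CLASSES)}


def drop_middle(samples):
    """Drop samples labelled 'Middle' and remap the remaining label indices."""
    return [(features, OLD_TO_NEW[label]) for features, label in samples if label in OLD_TO_NEW]


print(len(NEW_CLASSES))  # 8
```

The model's output layer then needs 8 units instead of 12, and it has to be retrained on the filtered data.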

Results of the New Approach

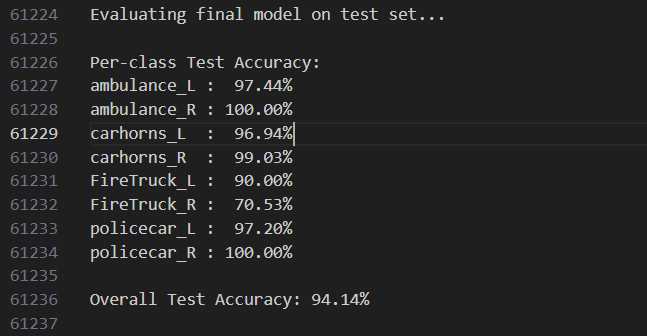

The 8-class model showed promising results:

Overall Test Accuracy: 94.14%

Per-class Test Accuracy:

Ambulance Left: 97.44%

Ambulance Right: 100.00%

Car Horn Left: 96.94%

Car Horn Right: 99.03%

Fire Truck Left: 90.00%

Fire Truck Right: 70.53%

Police Car Left: 97.20%

Police Car Right: 100.00%

While the overall accuracy was slightly lower than the 12-class model (94.14% vs 95.31%), the real-world performance in our Android implementation showed marked improvement in directional discrimination. The model became much better at distinguishing between left and right sound sources, which was our primary goal.
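For reference, per-class accuracy is the fraction of samples of each true class that were predicted correctly. The sketch below shows one way to compute it and also takes the unweighted mean of the eight reported figures. The function name `per_class_accuracy` is illustrative and not taken from the project's code.

```python
from collections import defaultdict


def per_class_accuracy(y_true, y_pred):
    """Return {class: fraction of that class's samples predicted correctly}."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, prediction in zip(y_true, y_pred):
        total[truth] += 1
        correct[truth] += int(truth == prediction)
    return {label: correct[label] / total[label] for label in total}


# Per-class test accuracies reported above, in percent.
REPORTED = {
    "Ambulance Left": 97.44, "Ambulance Right": 100.00,
    "Car Horn Left": 96.94, "Car Horn Right": 99.03,
    "Fire Truck Left": 90.00, "Fire Truck Right": 70.53,
    "Police Car Left": 97.20, "Police Car Right": 100.00,
}

macro_average = sum(REPORTED.values()) / len(REPORTED)
print(round(macro_average, 2))  # 93.89
```

The unweighted mean (93.89%) sits slightly below the overall accuracy (94.14%) because overall accuracy weights each class by its number of test samples. Both figures hide how far Fire Truck Right (70.53%) trails the other classes.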

Future Developments

Looking ahead, we're considering adopting the data preprocessing methodology described in Chin et al.'s 2024 paper on directional vehicle alert sounds. The main difference is that my approach would use Android phones to record the sound sources, splitting the audio into three channels:

- Left channel

- Right channel

- Stereo channel

This multi-channel approach could potentially provide even more robust directional awareness while maintaining the simplified 8-class architecture that has proven effective in practice.
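As a rough sketch of the three-way split, the function below takes interleaved stereo samples and returns left, right, and stereo views. It assumes the recording is interleaved PCM (`[L0, R0, L1, R1, ...]`) and that "stereo" means keeping both channels as paired samples. This is an illustration only, not the preprocessing from Chin et al., and `split_channels` is a hypothetical name.

```python
def split_channels(interleaved):
    """Split interleaved stereo samples [L0, R0, L1, R1, ...] into three views."""
    if len(interleaved) % 2 != 0:
        raise ValueError("expected an even number of interleaved samples")
    left = interleaved[0::2]   # even positions hold the left channel
    right = interleaved[1::2]  # odd positions hold the right channel
    stereo = list(zip(left, right))  # both channels kept together, frame by frame
    return {"left": left, "right": right, "stereo": stereo}


# Tiny made-up buffer: two stereo frames.
views = split_channels([10, -10, 20, -20])
print(views["left"], views["right"])
```

Each view could then be turned into its own spectrogram before being fed to the model, though how the three inputs are combined is left open here.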

Takeaway

This experience highlights an important lesson in machine learning: higher test accuracy doesn't necessarily translate to better real-world performance. By simplifying our model from 12 to 8 classes, we gave up about 1.2 percentage points of test accuracy (95.31% to 94.14%) but gained a clear improvement in practical left/right discrimination.