Transformer

A transformer model is a neural network that learns context and meaning by tracking relationships in sequential data, such as the words in a sentence.

It applies an evolving set of mathematical techniques, called attention or self-attention, to detect the subtle ways in which even distant data elements influence one another and change each other's meaning.
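The core of self-attention is scaled dot-product attention, softmax(QKᵀ/√d_k)V. The NumPy snippet below is a minimal sketch of it. It assumes a single head, no masking, and randomly initialized projection matrices `W_q`, `W_k`, `W_v`. These are illustrative names with untrained weights, not a real model's parameters.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over X of shape (seq_len, d_model)."""
    Q = X @ W_q  # queries: what each position is looking for
    K = X @ W_k  # keys: what each position offers
    V = X @ W_v  # values: the content that gets mixed together
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # (seq_len, seq_len) pairwise relevance
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8
X = rng.normal(size=(seq_len, d_model))  # stand-in for 4 token embeddings
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
output, weights = self_attention(X, W_q, W_k, W_v)
print(output.shape, weights.shape)  # (4, 8) (4, 4)
```

Row i of `weights` shows how strongly token i attends to every token in the sequence, including distant ones. This is what lets the model relate words that are far apart.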

Transformers are now replacing convolutional and recurrent neural networks (CNNs and RNNs), which until recently were the most popular deep learning models.

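Before transformers, neural networks had to be trained on large labeled datasets. Transformers instead find patterns between elements mathematically and can learn from unlabeled data, so much of that costly labeling step is no longer needed.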

Like other neural networks, transformer models are basically large encoder/decoder blocks that process data.

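The encoder compresses the information in the source sequence and passes it to the decoder. This compression step is called encoding. The decoder receives the source-sequence information from the encoder and generates the target sequence.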

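The decoder's generation of the target sequence is called decoding. In machine translation, for example, the encoder compresses a Korean sentence and sends it to the decoder, and the decoder uses it to produce the English translation.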
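The toy NumPy sketch below makes the encoder-to-decoder hand-off concrete. The encoder turns the source sequence into a "memory" matrix. The decoder's target positions then query that memory through cross-attention. This is a deliberately simplified illustration with several assumptions:

- random, untrained embeddings;
- one attention step per side;
- no learned Q/K/V projections;
- no feed-forward sublayers, residual connections, or layer normalization, all of which real transformers use.

The 100-word output vocabulary is hypothetical.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def attention(Q, K, V, mask=None):
    # Scaled dot-product attention; mask=True marks positions that must not be attended to
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    if mask is not None:
        scores = np.where(mask, -1e9, scores)
    return softmax(scores) @ V

rng = np.random.default_rng(42)
d = 16
src = rng.normal(size=(5, d))  # e.g. embeddings of a 5-token Korean sentence
tgt = rng.normal(size=(3, d))  # embeddings of the 3 English tokens generated so far

# Encoder: self-attention over the source compresses it into a "memory"
memory = attention(src, src, src)

# Decoder, step 1: masked self-attention, so target position i only sees positions <= i
causal_mask = np.triu(np.ones((3, 3), dtype=bool), k=1)
h = attention(tgt, tgt, tgt, mask=causal_mask)

# Decoder, step 2: cross-attention; queries come from the target, keys/values from the encoder memory
h = attention(h, memory, memory)

# Project to (hypothetical) vocabulary logits used to predict the next target token
W_out = rng.normal(size=(d, 100))
logits = h @ W_out
print(memory.shape, logits.shape)  # (5, 16) (3, 100)
```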

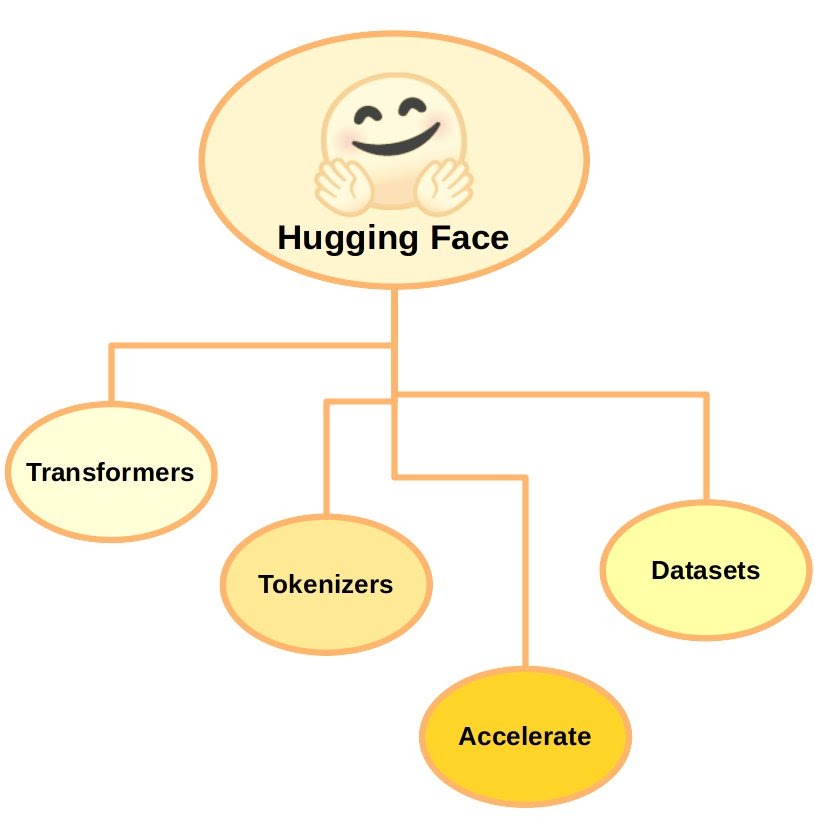

Hugging Face

Image by Getting Started with Hugging Face Transformers for NLP

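Hugging Face is a natural language processing startup whose library provides a wide range of transformer models (transformers.models) and training scripts (transformers.Trainer). With it, you can easily download and reuse models that users around the world have already pretrained.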

Hugging Face's package is called 'transformers'. Compared with a typical PyTorch project, the layer.py and model.py files correspond to transformers.models, and train.py corresponds to transformers.Trainer.

transformers.models

A module that implements a wide range of transformer-based models in both PyTorch and TensorFlow. It also includes the matching tokenizer for each model.

transformers.Trainer

Handles everything deep learning training and evaluation requires: the optimizer, weight updates, learning rate scheduling, checkpointing, TensorBoard logging, evaluation, and so on.

Calling Trainer.train() runs this entire process according to the arguments the user specifies.

As with PyTorch Lightning, the main advantage is convenience: commonly used training code is packaged into a reusable module.
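Learning-rate scheduling is one example of what Trainer automates. `TrainingArguments` defaults to a linear schedule (`lr_scheduler_type="linear"`), which optionally warms up over `warmup_steps` and then decays linearly to zero. The pure-Python function below reimplements that multiplier for illustration. It follows the shape of `transformers.get_linear_schedule_with_warmup` but is not the library's code. The step counts used here are arbitrary.

```python
def linear_warmup_decay(step: int, warmup_steps: int, total_steps: int) -> float:
    """Learning-rate multiplier: ramps 0 -> 1 during warmup, then decays linearly to 0."""
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    return max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))

base_lr = 5e-5  # TrainingArguments' default learning_rate
for step in [0, 50, 100, 550, 1000]:
    lr = base_lr * linear_warmup_decay(step, warmup_steps=100, total_steps=1000)
    print(step, lr)
```

With Trainer you would not call this by hand. Setting `warmup_steps` or `lr_scheduler_type` in `TrainingArguments` is enough.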

Trying out Hugging Face

Installing transformers

```
!pip install transformers
```

Using pipelines (pipeline)

```python
from transformers import pipeline
```

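When text is passed to a pipeline, three main steps run internally:

- The text is preprocessed into a format the model can understand (preprocessing).
- The preprocessed input is passed to the model.
- The model's predictions are postprocessed into a form we can understand (postprocessing).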
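A deliberately tiny pure-Python stand-in can illustrate these three stages. Everything in it is hypothetical: a whitespace tokenizer, a five-word vocabulary, and hand-set word weights take the place of a real tokenizer and a trained transformer. Only the flow mirrors what a sentiment-analysis pipeline does internally: text → token IDs → logits → softmax → label and score.

```python
import math

# Hypothetical vocabulary and per-token (negative, positive) weights standing in for a trained model
VOCAB = {"[UNK]": 0, "i": 1, "love": 2, "hate": 3, "this": 4}
WEIGHTS = {0: (0.0, 0.0), 1: (0.0, 0.0), 2: (-1.5, 2.0), 3: (2.5, -1.0), 4: (0.0, 0.0)}
ID2LABEL = {0: "NEGATIVE", 1: "POSITIVE"}

def preprocess(text):
    # 1) Tokenize: lowercase, split on whitespace, map each token to an ID
    return [VOCAB.get(tok, VOCAB["[UNK]"]) for tok in text.lower().split()]

def model(input_ids):
    # 2) "Model": sum the per-token weights into two raw logits
    neg = sum(WEIGHTS[i][0] for i in input_ids)
    pos = sum(WEIGHTS[i][1] for i in input_ids)
    return [neg, pos]

def postprocess(logits):
    # 3) Softmax the logits into probabilities and report the top label
    exps = [math.exp(x) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)
    return [{"label": ID2LABEL[best], "score": probs[best]}]

def toy_pipeline(text):
    return postprocess(model(preprocess(text)))

print(toy_pipeline("I love this"))
print(toy_pipeline("I hate this"))
```

The real pipeline returns results in the same shape: a list of `{'label': ..., 'score': ...}` dicts.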

Sentiment analysis (sentiment-analysis)

```python
classifier = pipeline('sentiment-analysis')
classifier("But I'm a creep. I'm a weirdo. What the hell am I doin' here?")
```

```
[{'label': 'NEGATIVE', 'score': 0.9873543381690979}]
```

Zero-shot classification (zero-shot-classification)

Classifies a new sentence into the given candidate labels, even though the model was never trained on those class labels.
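Under the hood, the zero-shot pipeline reuses a model trained on natural language inference (NLI). It works in three steps:

1. Each candidate label becomes a hypothesis such as "This example is education." (the default `hypothesis_template` is `"This example is {}."`).
2. The model scores how strongly the input sentence entails each hypothesis.
3. When only one label can be correct, those entailment scores are softmaxed across the candidates.

The sketch below reproduces only that bookkeeping. The `FAKE_LOGITS` values are invented placeholders for what an NLI model would output.

```python
import math

def zero_shot_scores(sequence, candidate_labels, entailment_logit, template="This example is {}."):
    """Rank labels by softmaxing one entailment logit per (sequence, hypothesis) pair."""
    hypotheses = [template.format(label) for label in candidate_labels]
    logits = [entailment_logit(sequence, h) for h in hypotheses]
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]  # shift by max for numerical stability
    total = sum(exps)
    ranked = sorted(zip(candidate_labels, (e / total for e in exps)),
                    key=lambda pair: pair[1], reverse=True)
    return {
        "sequence": sequence,
        "labels": [label for label, _ in ranked],
        "scores": [score for _, score in ranked],
    }

# Hypothetical stand-in for an NLI model: fixed entailment logits per hypothesis
FAKE_LOGITS = {
    "This example is education.": 3.0,
    "This example is politics.": -0.5,
    "This example is business.": 0.5,
}
result = zero_shot_scores(
    "this is a course about the transformers library",
    ["education", "politics", "business"],
    lambda seq, hyp: FAKE_LOGITS[hyp],
)
print(result["labels"])  # ['education', 'business', 'politics']
```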

```python
classifier = pipeline('zero-shot-classification')
classifier(
    'this is a course about the transformers library',
    candidate_labels=['education', 'politics', 'business']
)
```

```
{'sequence': 'this is a course about the transformers library',
 'labels': ['education', 'business', 'politics'],
 'scores': [0.9224742650985718, 0.05610811710357666, 0.02141762152314186]}
```

Text generation

Generates text by predicting the words or sentences that follow the given text.
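Text generation is autoregressive. The model predicts one next token, appends it to the input, and repeats until it reaches a length limit or an end-of-sequence token. In the example below, `max_length` is that limit, counted in tokens with the prompt included.

The toy sketch that follows shows this loop under two assumptions. First, a hand-written bigram table (hypothetical) replaces a trained model. Second, it uses greedy decoding, always taking the most likely next word. The ten varied outputs in the pipeline example come from sampling instead.

```python
# Hypothetical next-word probabilities standing in for a trained language model
BIGRAMS = {
    "how": {"to": 0.9, "we": 0.1},
    "to": {"build": 0.6, "write": 0.4},
    "build": {"a": 0.7, "models": 0.3},
    "a": {"model": 0.8, "<eos>": 0.2},
    "model": {"<eos>": 1.0},
}

def generate_greedy(prompt, max_length=10):
    """Append the most likely next word until max_length words or an end token."""
    tokens = prompt.split()
    while len(tokens) < max_length:
        next_probs = BIGRAMS.get(tokens[-1])
        if not next_probs:
            break  # no known continuation for the last word
        next_token = max(next_probs, key=next_probs.get)  # greedy choice
        if next_token == "<eos>":
            break  # the "model" signalled end of sequence
        tokens.append(next_token)
    return " ".join(tokens)

print(generate_greedy("we will teach you how"))
print(generate_greedy("we will teach you how", max_length=7))
```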

```python
generator = pipeline('text-generation')
generator('In this course, we will teach you how to', num_return_sequences=10, max_length=30)
```

```
Setting `pad_token_id` to `eos_token_id`:50256 for open-end generation.
[{'generated_text': 'In this course, we will teach you how to achieve the success of your project and learn from your experience in the company (and its many competitors).'},
 {'generated_text': 'In this course, we will teach you how to apply the skills of the modern world to create a revolutionary web framework for digital art that will transform digital'},
 {'generated_text': 'In this course, we will teach you how to connect your iPhone and iPad to the Internet for online or offline use. We will use your email and'},
 {'generated_text': 'In this course, we will teach you how to program a virtual machine to build out your own custom operating system on a computer.\n\nYou will'},
 {'generated_text': "In this course, we will teach you how to use Java EE to manage your applications' user interfaces with JAX-RS and Java EE. We"},
 {'generated_text': 'In this course, we will teach you how to make a life sentence or a life parole for your child.\n\n1. Teach a child:'},
 {'generated_text': 'In this course, we will teach you how to create a simple but effective application for all of the following projects. You will learn how to have data'},
 {'generated_text': 'In this course, we will teach you how to generate and use 3D animation in your application. You will take this course to create a custom 3'},
 {'generated_text': 'In this course, we will teach you how to write simple, clean, and easy to read software from scratch and how to implement your design into a'},
 {'generated_text': 'In this course, we will teach you how to navigate our world. For instance, we will be exploring the notion of personal responsibility and how you can'}]
```

Mask filling

Predicts the word hidden behind the `<mask>` token in a sentence and fills it in.

The argument is the sentence whose mask should be filled. The top_k parameter sets how many predictions to return.
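Conceptually, the model outputs a score for every vocabulary word at the `<mask>` position. Those scores are softmaxed into probabilities, and the `top_k` highest candidates are returned, each substituted back into the sentence. The sketch below uses a made-up five-word vocabulary with invented logits, not real model outputs.

```python
import math

def fill_mask_top_k(template, vocab_logits, top_k=2):
    """Return the top_k candidates for <mask>, mimicking the fill-mask result format."""
    m = max(vocab_logits.values())
    exps = {word: math.exp(logit - m) for word, logit in vocab_logits.items()}
    total = sum(exps.values())
    best = sorted(exps, key=exps.get, reverse=True)[:top_k]
    return [
        {"score": exps[w] / total, "token_str": w, "sequence": template.replace("<mask>", w)}
        for w in best
    ]

# Invented logits for the masked slot; a real model scores its entire vocabulary
logits = {"mathematical": 2.0, "building": 0.5, "fashion": -1.0, "the": -2.0, "role": 0.0}
for candidate in fill_mask_top_k("This course will teach you all about <mask> models", logits, top_k=2):
    print(candidate)
```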

```python
unmasker = pipeline('fill-mask')
unmasker('This course will teach you all about <mask> models', top_k=2)
```

```
[{'score': 0.19631513953208923,
  'token': 30412,
  'token_str': ' mathematical',
  'sequence': 'This course will teach you all about mathematical models'},
 {'score': 0.04449228197336197,
  'token': 745,
  'token_str': ' building',
  'sequence': 'This course will teach you all about building models'}]
```

Named entity recognition (NER)

Recognizes "Sylvain" as "PER" (person), "Hugging Face" as "ORG" (organization), and "Brooklyn" as "LOC" (location).

```python
ner = pipeline("ner", grouped_entities=True)
ner("My name is Sylvain and I work at Hugging Face in Brooklyn.")
```

```
[{'entity_group': 'PER',
  'score': 0.9981694,
  'word': 'Sylvain',
  'start': 11,
  'end': 18},
 {'entity_group': 'ORG',
  'score': 0.9796019,
  'word': 'Hugging Face',
  'start': 33,
  'end': 45},
 {'entity_group': 'LOC',
  'score': 0.9932106,
  'word': 'Brooklyn',
  'start': 49,
  'end': 57}]
```
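Without grouping, an NER model labels each (sub)word token separately. It uses tags such as `B-ORG` (beginning of an organization) and `I-ORG` (inside one). `grouped_entities=True` merges consecutive tokens of the same entity into a single span. Recent transformers versions express the same option as `aggregation_strategy="simple"`.

The simplified sketch below shows that merge. It assumes whole-word tokens with character offsets and averages the token scores in each group. The per-token tags and scores are hypothetical; the offsets match the example sentence.

```python
TEXT = "My name is Sylvain and I work at Hugging Face in Brooklyn."

# Hypothetical per-token predictions (tags and scores invented; offsets match TEXT)
TOKENS = [
    {"entity": "O", "score": 0.99, "start": 0, "end": 2},        # "My"
    {"entity": "B-PER", "score": 0.99, "start": 11, "end": 18},  # "Sylvain"
    {"entity": "B-ORG", "score": 0.98, "start": 33, "end": 40},  # "Hugging"
    {"entity": "I-ORG", "score": 0.96, "start": 41, "end": 45},  # "Face"
    {"entity": "B-LOC", "score": 0.99, "start": 49, "end": 57},  # "Brooklyn"
]

def group_entities(text, tokens):
    """Merge consecutive B-/I- tokens of the same entity type into one span."""
    groups = []
    for tok in tokens:
        if tok["entity"] == "O":
            continue  # outside any entity; the pipeline drops these by default
        prefix, ent_type = tok["entity"].split("-", 1)
        if groups and prefix == "I" and groups[-1]["entity_group"] == ent_type:
            # Continuation of the previous entity: extend its span
            groups[-1]["scores"].append(tok["score"])
            groups[-1]["end"] = tok["end"]
        else:
            groups.append({"entity_group": ent_type, "scores": [tok["score"]],
                           "start": tok["start"], "end": tok["end"]})
    return [
        {
            "entity_group": g["entity_group"],
            "score": sum(g["scores"]) / len(g["scores"]),  # average token score
            "word": text[g["start"]:g["end"]],
            "start": g["start"],
            "end": g["end"],
        }
        for g in groups
    ]

entities = group_entities(TEXT, TOKENS)
for entity in entities:
    print(entity)
```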

Question answering (QA)

```python
qa = pipeline('question-answering')
qa(
    question='Where do I work?',
    context="My name is Sylvain and I work at Hugging Face in Brooklyn"
)
```

```
{'score': 0.6949767470359802, 'start': 33, 'end': 45, 'answer': 'Hugging Face'}
```
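The `start` and `end` in the answer are character offsets into `context`, so `context[33:45]` is exactly `'Hugging Face'`. An extractive QA model produces a start score and an end score for every context token. The answer is the span with the best combined score where start ≤ end, limited in length; the pipeline's `max_answer_len` parameter plays this role.

The simplified sketch below applies that rule to whole-word tokens. The start/end scores are invented stand-ins for the model's logits.

```python
import re

def best_span(context, start_logits, end_logits, max_answer_len=15):
    """Pick the token span maximizing start_logit + end_logit, with start <= end."""
    # Whole-word tokens with their character offsets in the context
    spans = [(m.start(), m.end()) for m in re.finditer(r"\S+", context)]
    best, best_score = None, float("-inf")
    for i in range(len(spans)):
        for j in range(i, min(i + max_answer_len, len(spans))):
            score = start_logits[i] + end_logits[j]
            if score > best_score:
                best, best_score = (i, j), score
    start, end = spans[best[0]][0], spans[best[1]][1]
    return {"start": start, "end": end, "answer": context[start:end]}

context = "My name is Sylvain and I work at Hugging Face in Brooklyn"
# Invented per-token logits (12 whole-word tokens): high start on "Hugging", high end on "Face"
start_logits = [0.0] * 12
end_logits = [0.0] * 12
start_logits[8] = 5.0  # "Hugging"
end_logits[9] = 5.0    # "Face"
print(best_span(context, start_logits, end_logits))
```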

Summarization

```python
summarizer = pipeline("summarization")
summarizer("""
Once upon a time, in a quaint little village nestled amidst rolling green hills, there lived a young girl named Amelia. She had an insatiable curiosity and a heart full of dreams that extended far beyond the borders of her small world.

Amelia's days were filled with endless exploration and boundless imagination. From a tender age, she would venture into the nearby forests, enthralled by the secrets they held. Every tree, every creature, seemed to whisper stories of enchantment and wonder. The villagers regarded her as a peculiar child, forever lost in her own world.

One sunny morning, as the dew-kissed grass glistened under the gentle caress of the golden rays, Amelia stumbled upon an ancient book tucked away beneath a moss-covered stone. Its pages were weathered and fragile, but its words beckoned to her, promising grand adventures.

She sat beneath the shade of a towering oak tree and began to read. The book revealed tales of mythical creatures, forgotten civilizations, and far-off lands where magic thrived. With each turn of the page, Amelia's imagination soared, and her desire to see these wondrous places grew stronger.

Determined to embark on her own extraordinary journey, Amelia packed a small bag with provisions, bid farewell to her worried parents, and set off into the unknown. She followed the clues within the book, traversing treacherous mountains, crossing raging rivers, and braving dense forests.

Along her odyssey, Amelia encountered fantastical beings she had only read about—majestic unicorns, mischievous sprites, and wise old wizards. They guided her, shared their wisdom, and became her faithful companions. With their help, she overcame daunting challenges and unraveled the mysteries that lay before her.

As her travels unfolded, Amelia discovered that true magic existed not only in the realms she visited but also within herself. She realized that the power to create, to inspire, and to change the world lay dormant within her heart. Each encounter, each experience, awakened a new facet of her own potential.

After months of incredible exploits, Amelia found herself standing at the edge of a shimmering lake, the final destination of her extraordinary quest. The reflection on the water's surface revealed a transformed young girl, brimming with confidence, compassion, and a boundless sense of purpose.

Returning to her village, Amelia shared her tales of wonder and the lessons she had learned. The once-skeptical villagers, captivated by her words and the light that radiated from her soul, were inspired to embrace their own dreams and embark on their own personal journeys.

And so, the village blossomed into a place of boundless imagination, where every child and adult alike dared to dream and pursue their passions. All because a young girl named Amelia had dared to believe in the magic within herself and had embarked on a remarkable adventure that changed not only her own life but also the lives of those around her.

From that day forward, the village became a testament to the extraordinary power of dreams, the indomitable spirit of adventure, and the everlasting magic that resides within each and every one of us. And Amelia, the girl with stars in her eyes and an untamed heart, continued to explore the wonders of the world, forever reminding us that the greatest stories are the ones we dare to live.
""")
```

```
[{'summary_text': ' A young girl named Amelia discovered that true magic existed not only in the realms she visited but also within herself . The village became a testament to the extraordinary power of dreams, the indomitable spirit of adventure, and the everlasting magic that resides within each and every one of us .'}]
```

Machine translation

Translating from French to English

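```python
translator = pipeline("translation", model="Helsinki-NLP/opus-mt-fr-en")
translator("Ce course produit par Hugging Face ")
```

```
[{'translation_text': 'This race produced by Hugging Face'}]
```

In French, "course" means "race"; the word for a class is "cours", which is why the model produced "race".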