Review-Estimation

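- Parameter: we wish to estimate the parameter $\theta$ from the observation(s) $\mathbf{x}$. These can be vectors, $\boldsymbol{\theta} = [\theta_1, \theta_2, \ldots, \theta_p]^T$ and $\mathbf{x} = [x[0], x[1], \ldots, x[N-1]]^T$, or scalars.
- Parametrized PDF: the unknown parameter $\theta$ is to be estimated. $\theta$ parametrizes the probability density function of the received data, $p(\mathbf{x}; \theta)$.
- Estimator: rule or function that assigns a value $\hat{\theta}$ to $\theta$ for each realization of $\mathbf{x}$.
- Estimate: value of $\hat{\theta}$ obtained for a given realization of $\mathbf{x}$. $\hat{\theta}$ will be used for the estimate, while $\theta$ will represent the true value of the unknown parameter.
- Mean and variance of the estimator: $E(\hat{\theta})$ and $\mathrm{var}(\hat{\theta}) = E\{[\hat{\theta} - E(\hat{\theta})]^2\}$.

Expectations are taken over $\mathbf{x}$ (meaning $\hat{\theta}$ is random, not $\theta$).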

Unbiased Estimators

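- An estimator is called unbiased if $E(\hat{\theta}) = \theta$ for all possible $\theta$.
- If $E(\hat{\theta}) \neq \theta$, the bias is $b(\theta) = E(\hat{\theta}) - \theta$.

(Expectation is taken with respect to $\mathbf{x}$ or $p(\mathbf{x}; \theta)$.)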

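Revisit the example of the DC level in noise, $x[n] = A + w[n]$ for $n = 0, 1, \ldots, N-1$, with zero-mean noise $w[n]$. The two candidate estimators

$\hat{A} = \frac{1}{N} \sum_{n=0}^{N-1} x[n]$ (sample mean) and $\check{A} = x[0]$ (first sample value)

are unbiased since $E(\hat{A}) = A$ and $E(\check{A}) = A$.

- $E(\hat{\theta}) = \theta$ may hold for some values of $\theta$ for biased estimators, e.g., the modified sample mean estimator $\check{A} = \frac{1}{2N} \sum_{n=0}^{N-1} x[n]$, for which $E(\check{A}) = A/2$. This equals $A$ only if $A = 0$.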
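The bias behaviour of the three estimators can be checked numerically. The following is a minimal Monte Carlo sketch, assuming the noise is white Gaussian with standard deviation `sigma`; the function name `simulate_estimators` and all parameter values are hypothetical choices made for illustration only.

```python
import random
import statistics


def simulate_estimators(A=1.0, sigma=1.0, N=10, trials=20000, seed=0):
    """Monte Carlo means of three estimators of a DC level A in Gaussian noise."""
    rng = random.Random(seed)
    sample_mean, first_sample, half_mean = [], [], []
    for _ in range(trials):
        # One realization of x[n] = A + w[n], n = 0, ..., N-1
        x = [A + rng.gauss(0.0, sigma) for _ in range(N)]
        sample_mean.append(sum(x) / N)        # (1/N) * sum of x[n]
        first_sample.append(x[0])             # x[0]
        half_mean.append(sum(x) / (2 * N))    # (1/(2N)) * sum of x[n]
    # Averaging over realizations approximates the expectation over x
    return {
        "sample_mean": statistics.fmean(sample_mean),
        "first_sample": statistics.fmean(first_sample),
        "half_mean": statistics.fmean(half_mean),
    }


if __name__ == "__main__":
    for A in (0.0, 1.0, 3.0):
        print(A, simulate_estimators(A=A))
```

For each value of `A`, the first two averages should land close to `A`, while the modified sample mean should land close to `A / 2`, matching `A` only when `A = 0`.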

An unbiased estimator is not necessarily a good estimator, but a biased estimator is a poor estimator.

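Mean-Squared Error (MSE) Criterion

- The MSE measures the average squared deviation of the estimator from the true value: $\mathrm{mse}(\hat{\theta}) = E[(\hat{\theta} - \theta)^2]$.
- Decomposition: $\mathrm{mse}(\hat{\theta}) = E\{[(\hat{\theta} - E(\hat{\theta})) + (E(\hat{\theta}) - \theta)]^2\} = \mathrm{var}(\hat{\theta}) + [E(\hat{\theta}) - \theta]^2 = \mathrm{var}(\hat{\theta}) + b^2(\theta)$.
- The MSE is therefore composed of the variance of the estimator plus the squared bias.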

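Note that, in many cases, the minimum MSE criterion leads to an unrealizable estimator, one that cannot be written solely as a function of the data. For example, consider

$\check{A} = a \frac{1}{N} \sum_{n=0}^{N-1} x[n]$, where $a$ is chosen to minimize the MSE.

Then, for white noise of variance $\sigma^2$, $a_{\text{opt}} = \frac{A^2}{A^2 + \sigma^2/N}$. This is unrealizable, since it is a function of the unknown $A$.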
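The dependence on the unknown $A$ can be seen by working through the minimization. The following is a sketch of the derivation, assuming $x[n] = A + w[n]$ with $w[n]$ zero-mean white noise of variance $\sigma^2$ and the scaled sample mean $\check{A} = a \frac{1}{N} \sum_{n=0}^{N-1} x[n]$:

$$
\begin{aligned}
E(\check{A}) &= aA, \qquad \mathrm{var}(\check{A}) = \frac{a^2 \sigma^2}{N} \\
\mathrm{mse}(\check{A}) &= \mathrm{var}(\check{A}) + [E(\check{A}) - A]^2 = \frac{a^2 \sigma^2}{N} + (a - 1)^2 A^2 \\
\frac{d\,\mathrm{mse}(\check{A})}{da} &= \frac{2 a \sigma^2}{N} + 2 (a - 1) A^2 = 0 \\
a_{\text{opt}} &= \frac{A^2}{A^2 + \sigma^2 / N}
\end{aligned}
$$

The bias term $(a - 1)^2 A^2$ is what brings $A$ into the optimal scaling, while the variance term alone does not depend on $A$.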

Unbiased Estimator...?

Minimum Variance Unbiased Estimation (MVUE)

- Any criterion that depends on the bias is likely to be unrealizable.
- In practice, the minimum MSE estimator needs to be abandoned.

Minimum variance unbiased (MVU) estimator

- Alternatively, constrain the bias to be zero.
- Find the estimator that minimizes the variance (this also minimizes the MSE in the unbiased case).
- The result is the minimum variance unbiased (MVU) estimator.
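Among unbiased estimators, the variance is what separates a good candidate from a poor one. As a rough illustration, the sketch below compares the empirical variances of two unbiased estimators of a DC level, the sample mean and the first sample value, assuming white Gaussian noise; the function name `estimator_variances` and the parameter values are hypothetical.

```python
import random
import statistics


def estimator_variances(A=1.0, sigma=1.0, N=10, trials=20000, seed=1):
    """Empirical variances of the sample mean and of x[0] as estimators of A."""
    rng = random.Random(seed)
    means, firsts = [], []
    for _ in range(trials):
        # One realization of x[n] = A + w[n], n = 0, ..., N-1
        x = [A + rng.gauss(0.0, sigma) for _ in range(N)]
        means.append(sum(x) / N)   # sample mean estimator
        firsts.append(x[0])        # first-sample estimator
    # Both estimators are unbiased, so their variance equals their MSE
    return statistics.pvariance(means), statistics.pvariance(firsts)


if __name__ == "__main__":
    var_mean, var_first = estimator_variances()
    print("sample mean variance :", var_mean)    # theory: sigma^2 / N
    print("first sample variance:", var_first)   # theory: sigma^2
```

Under these assumptions the sample mean variance should be close to `sigma**2 / N` and the first-sample variance close to `sigma**2`, so the sample mean is the better of the two unbiased candidates.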

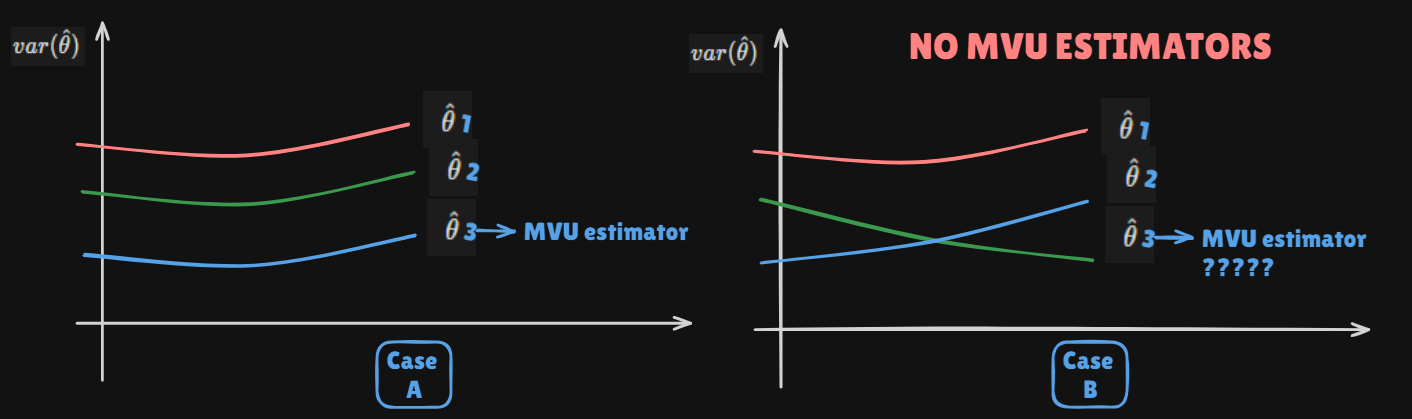

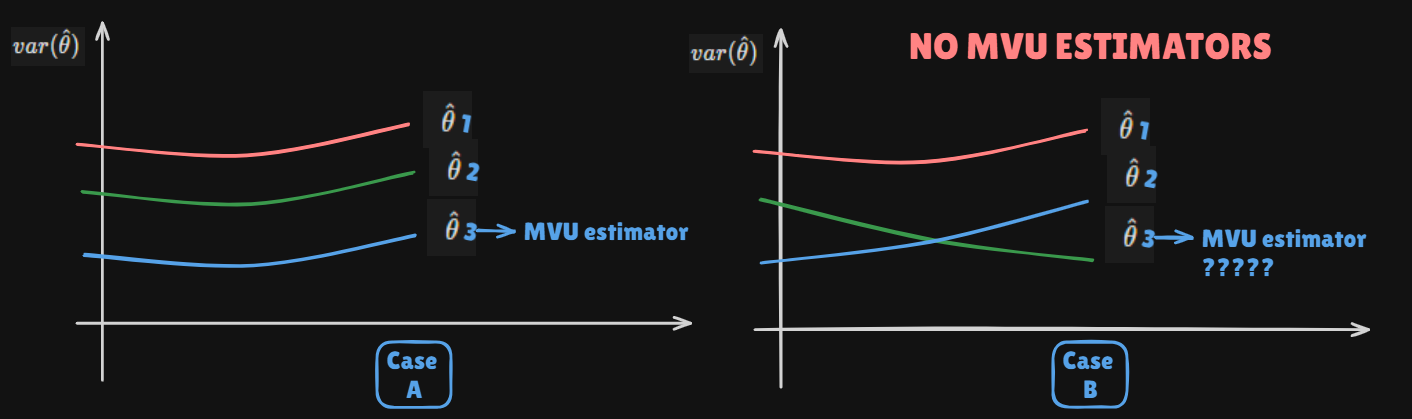

Existence of the MVU Estimator

- The MVU estimator is an unbiased estimator with minimum variance for all $\theta$.
- Examples
- There might be some problems:
  - Problem 1: We cannot try all functions to find the MVUE.
  - Problem 2: We cannot try all $\theta$ intervals to confirm whether an estimator minimizes the variance or not.

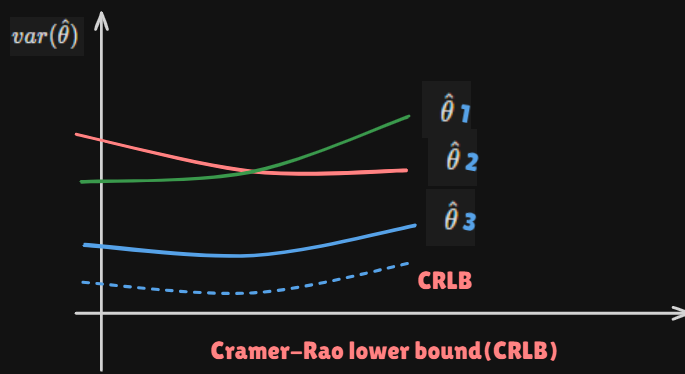

Finding the MVU Estimator

- There is no general framework to find the MVU estimator, even if it exists. Possible approaches:
  - Determine the Cramer-Rao lower bound (CRLB) and check whether some estimator satisfies it.
  - Apply the Rao-Blackwell-Lehmann-Scheffe (RBLS) theorem.
  - Find the unbiased linear estimator with minimum variance (best linear unbiased estimator, BLUE).
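The first approach can be illustrated on the DC level example. The sketch below assumes white Gaussian noise with known variance `sigma**2`, for which the score is $\partial \ln p(\mathbf{x}; A) / \partial A = \sum_n (x[n] - A) / \sigma^2$ and the CRLB is $\sigma^2 / N$. It estimates the Fisher information by Monte Carlo and compares the resulting bound with the empirical variance of the sample mean. The function name `crlb_check` and the parameter values are hypothetical.

```python
import random
import statistics


def crlb_check(A=1.0, sigma=2.0, N=20, trials=20000, seed=2):
    """Compare a Monte Carlo CRLB with the variance of the sample mean."""
    rng = random.Random(seed)
    scores_sq, estimates = [], []
    for _ in range(trials):
        x = [A + rng.gauss(0.0, sigma) for _ in range(N)]
        # Score: derivative of ln p(x; A) with respect to A (Gaussian noise)
        score = sum(xn - A for xn in x) / sigma**2
        scores_sq.append(score**2)
        estimates.append(sum(x) / N)   # sample mean estimator
    fisher = statistics.fmean(scores_sq)   # approximates N / sigma^2
    crlb_mc = 1.0 / fisher                 # Monte Carlo estimate of the bound
    var_mean = statistics.pvariance(estimates)
    crlb_theory = sigma**2 / N             # closed form under these assumptions
    return crlb_mc, var_mean, crlb_theory


if __name__ == "__main__":
    crlb_mc, var_mean, crlb_theory = crlb_check()
    print("CRLB (Monte Carlo):", crlb_mc)
    print("var(sample mean)  :", var_mean)
    print("CRLB (closed form):", crlb_theory)
```

If the three numbers agree to within simulation error, the sample mean attains the bound in this setting, which is the kind of check the CRLB approach relies on.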