Review

Cramer-Rao Lower Bound(CRLB)

- The

CRLBgive a lower bound on the variance of anyunbiased estimatorDoes not guarantee bound can be obtained

- If find an unbiased estimator whose variance =

CRLBthen it'sMVUE - Otherwise can use

Ch.5tools(Rao-Blackwell-Lehmann-Scheffe Theorem and Neyman-Fisher Factorization Theorem) to construct a better estimator from any unbiased one - possibly theMVUEif conditions are met

CRLB -Scalar parameter

- Let satisfy the

regularitycondition, - Then, the

varianceof anyunbiased estimatormust satisfy - Where the derivative is evaluated at the true value of and the expectation is taken w.r.t. $p(x;\theta).

Furthermore, an

unbiased estimatormay be found that attains the bound for all iffFor some functions and . That

estimator, is theMVUEwithvarianceis

General CRLB for Signals in WGN

Vector Form of the CRLB

- Assuming satisfies the

regularitycondition - The

covariance matrixof anyunbiased estimatorsatisfies - Where means the matrix is

positive semidefiniteFurthermore, an

unbiased estimatormay be found that attains the bound

if and only if - In that case, is the

MVU estimatorwithvariance.

Linear Model 으악

- The determination of the

MVUEis difficult in general. Many estimation problems can be represented by thelinear modelfor which theMVUEis easily determined - Line fitting example

- In Matrix notation,

- In Matrix notation,

MVUE for the Linear Model

- Determine the

MVUEfrom the equality condition ofCRLBtheorem - will be the

MVUEif - For the

linear model, - Assume that is

invertible - For

linear model, anefficient MVUEcan be found if isinvertible. isinvertibleiff(if and only iff) the columns of are linearlyindependent.(seeProb. 4.2) - If the columns of are not

linearly independent, even in the absence of noise, the model parameters will not beidentificable(The number of equations is less than the number of variables.)

MVUE for the Linear Model - Theorem

- If the observed data can be modeled as , then the

MVUEis - And the

covariance matrixof is - Where is an vector of observations, is

knownobservation matrix(with ) with rank - is a vector of parameters to be estimated, and is an noise vector with PDF

- For the

linear modeltheMVUEisefficientin that it attains theCRLB. - Also, the statistical performance of is completely specified, because is a

linear transformationof a Gaussian vecot and hence

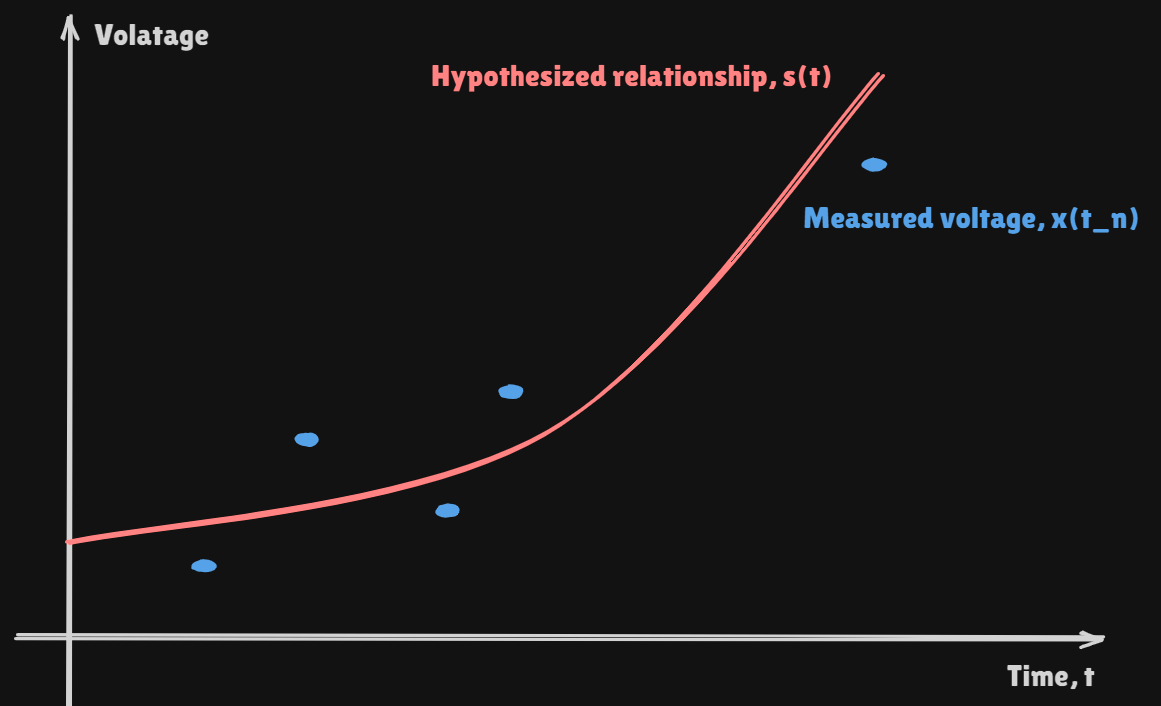

Example - Curve Fitting

The

LinearinLinear Modeldoes not come from fitting straight lines to data. It is more general than that!!

Example - Fourier Analysis

- Data model

- Parameters(Fourier coefficients)

- Observation matrix

- Apply

MVUETheorem forLinear Model,

Example - System Identification

-

Goal : Determine a model for the system

- Wireless Communications(idenfy & equalize multi-path)

- Geophysical Sensing(oil exploration)

- Speakerphone(echo cancellation)

-

In many applications : assume that the system is

FIR(FiniteImpulseResponse)(length p)(or TDL) -

where :

Pilot Signalisknown, for , :WGNIt's

Linearthan, We can applyMVUE -

MVU estimatorof the impulse responseWhat signal is best to use?

-

The that gives the

smallest estimated variances!! -

Choosing s.t. is

diagonalwill minimize variance -

Choose to be

pseudo-random noise(PRN)PRNhas approximatelyflat spectrum(From a frequency-domain view aPRNsignal equally probes at all frequencies)

-

Designing the

probing signal -

Equality holds when or

-

The conditions achieving the

minimum possible variancesare -

To minimize the variance of

MVUE, should be chosen to makediagonalFor large , we have

-

Under these conditions,

-

Then,

MVU estimatorwith yieldswiener filter : has been cross corelations and autocorelations function so far about WGN

Linear Model with Colored Noise

- General

Linear Modelwithcolored noiseWhitening approach