📌 All about Partitions

Three kinds of partitions (life cycle of partitions)

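- Number and size of partitions when loading input data

- Number and size of partitions created after shuffling

- Number and size of partitions when the data is finally saved

- A partition size of 128MB to 1GB is a good target.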

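1. Number and size of partitions when loading input data

- By default, they are determined by the maximum partition size.

-> spark.sql.files.maxPartitionBytes (default 128MB)

- Ultimately, they depend heavily on how the data was stored.

- What is the file format, and is it compressed? If so, with which algorithm?

-> In short: is it splittable, i.e., can one large file be split and loaded into multiple partitions?

- Other related Spark configuration variables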

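Variables that determine the number and size of input data partitions

- When reading data saved with bucketBy

- The number of buckets, the bucket key columns, and the sort columns

- Whether the data files being read are splittable

- This is why formats such as PARQUET/AVRO are a good choice: they are always splittable.

- For JSON/CSV, if a single record spans multiple lines, the file is not splittable.

-> Even with single-line records, a compressed file is splittable only when bzip2 is used (gzip, for example, is not).

- Total size of the input data (sum of all file sizes)

- Number of files that make up the input data

- Number of CPUs requested from the resource manager

-> Number of executors × CPUs per executor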

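How the number and size of input data partitions are determined

- First, maxSplitBytes is determined with the formulas below.

- bytesPerCore = (total size of the data files + number of files × openCostInBytes) / spark.default.parallelism (spark.sql.files.minPartitionNum is used instead, if it is set)

- maxSplitBytes = Min(maxPartitionBytes, bytesPerCore)

-> More precisely, maxSplitBytes = Min(maxPartitionBytes, Max(bytesPerCore, openCostInBytes))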
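The formula can be checked with a small pure-Python sketch. It mirrors the Scala `maxSplitBytes` code quoted later in this section, but it is a model, not Spark. `min_partition_num` stands in for `spark.sql.files.minPartitionNum` or the default parallelism, and it is assumed to be 10. Sizes are in bytes, with 1MB assumed to be 1024 × 1024 bytes.

```python
MB = 1024 * 1024


def max_split_bytes(file_sizes, max_partition_bytes=128 * MB,
                    open_cost_in_bytes=4 * MB, min_partition_num=10):
    """Pure-Python model of Spark's maxSplitBytes formula (not Spark itself).

    file_sizes: sizes in bytes of the files being read.
    min_partition_num: stands in for spark.sql.files.minPartitionNum or the
    default parallelism (assumed to be 10 here).
    """
    # Every file is charged its size plus the open cost.
    total_bytes = sum(size + open_cost_in_bytes for size in file_sizes)
    bytes_per_core = total_bytes // min_partition_num
    # Clamp between openCostInBytes (lower bound) and maxPartitionBytes (upper bound).
    return min(max_partition_bytes, max(open_cost_in_bytes, bytes_per_core))


# 50 files of 65MB: bytesPerCore = 345MB, so maxPartitionBytes (128MB) wins.
print(max_split_bytes([65 * MB] * 50) // MB)  # 128
# 10 files of 1MB: bytesPerCore = 5MB, which is below 128MB.
print(max_split_bytes([1 * MB] * 10) // MB)  # 5
# 2 files of 1MB: bytesPerCore = 1MB, so the 4MB openCostInBytes floor applies.
print(max_split_bytes([1 * MB] * 2) // MB)  # 4
```

The lower bound matters for tiny inputs. Without it, a handful of small files would produce splits smaller than the cost of opening one file.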

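- For each file that makes up the input data, the following is done.

- If the file is splittable, it is cut into file chunks of maxSplitBytes each.

- If it is not splittable, or is smaller than maxSplitBytes, it becomes a single file chunk.

- Next, partitions are built from the file chunks created above, taking the chunks in descending order of size.

- By default, a partition consists of one or more file chunks.

- The next file chunk keeps being merged into the current partition as long as the partition's running size plus that chunk's size does not exceed maxSplitBytes. Every chunk already in the partition counts as its size plus openCostInBytes.

-> In this way, several files are packed into one partition.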
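The two steps (cutting files into chunks, then packing the chunks with Next Fit Decreasing) can be sketched in pure Python. The sketch follows the Scala loop quoted below. The function names are hypothetical, and `max_split_bytes` is passed in rather than computed.

```python
MB = 1024 * 1024


def split_files(file_sizes, max_split_bytes, splittable=True):
    """Cut each file into chunks of at most max_split_bytes.

    A non-splittable file always becomes exactly one chunk.
    """
    chunks = []
    for size in file_sizes:
        if splittable:
            # Chunk offsets 0, max_split_bytes, 2 * max_split_bytes, ...
            for offset in range(0, size, max_split_bytes):
                chunks.append(min(max_split_bytes, size - offset))
        else:
            chunks.append(size)
    return chunks


def pack_chunks(chunks, max_split_bytes, open_cost_in_bytes=4 * MB):
    """Group chunks into partitions with Next Fit Decreasing."""
    partitions, current, current_size = [], [], 0
    for chunk in sorted(chunks, reverse=True):  # largest chunks first
        # Close the current partition if this chunk would overflow it.
        if current and current_size + chunk > max_split_bytes:
            partitions.append(current)
            current, current_size = [], 0
        # Each chunk is charged its size plus the open cost.
        current_size += chunk + open_cost_in_bytes
        current.append(chunk)
    if current:
        partitions.append(current)
    return partitions


# One 300MB splittable file -> chunks of 128MB, 128MB, 44MB -> 3 partitions.
print(len(pack_chunks(split_files([300 * MB], 128 * MB), 128 * MB)))  # 3
# The same file, not splittable -> one 300MB chunk -> 1 partition.
print(len(pack_chunks(split_files([300 * MB], 128 * MB, splittable=False), 128 * MB)))  # 1
# 100 files of 1MB -> 26 files fit per partition -> 4 partitions.
print(len(pack_chunks(split_files([1 * MB] * 100, 128 * MB), 128 * MB)))  # 4
```

The last case shows the effect of openCostInBytes. A 128MB partition holds only 26 files of 1MB each, because every file is also charged 4MB.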

Spark Scala code: maxSplitBytes

```scala
// Excerpt from Spark's FilePartition.maxSplitBytes
def maxSplitBytes(
    sparkSession: SparkSession,
    selectedPartitions: Seq[PartitionDirectory]): Long = {
  // spark.sql.files.maxPartitionBytes (default 128MB)
  val defaultMaxSplitBytes = sparkSession.sessionState.conf.filesMaxPartitionBytes
  // spark.sql.files.openCostInBytes (default 4MB)
  val openCostInBytes = sparkSession.sessionState.conf.filesOpenCostInBytes
  // spark.sql.files.minPartitionNum if set, otherwise the default parallelism
  val minPartitionNum = sparkSession.sessionState.conf.filesMinPartitionNum
    .getOrElse(sparkSession.leafNodeDefaultParallelism)
  // Every file is charged its length plus the open cost
  val totalBytes = selectedPartitions.flatMap(_.files.map(_.getLen + openCostInBytes)).sum
  val bytesPerCore = totalBytes / minPartitionNum

  Math.min(defaultMaxSplitBytes, Math.max(openCostInBytes, bytesPerCore))
}
```

Spark Scala code: partition creation

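```scala
// Excerpt from FilePartition.getFilePartitions; partitionedFiles are sorted by size, largest first
val partitions = new ArrayBuffer[FilePartition]
val currentFiles = new ArrayBuffer[PartitionedFile]
var currentSize = 0L
def closePartition(): Unit = { // emit the current files as one FilePartition
  if (currentFiles.nonEmpty) {
    partitions += FilePartition(partitions.size, currentFiles.toArray)
  }
  currentFiles.clear()
  currentSize = 0
}
val openCostInBytes = sparkSession.sessionState.conf.filesOpenCostInBytes
// Assign files to partitions using "Next Fit Decreasing"
partitionedFiles.foreach { file =>
  if (currentSize + file.length > maxSplitBytes) {
    closePartition()
  }
  // Add the given file to the current partition.
  currentSize += file.length + openCostInBytes
  currentFiles += file
}
closePartition()
```

Example: how the number and size of input data partitions are determined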

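- Number of files: 50

- File format: PARQUET

- File size: 65MB each

- Cores allocated to the Spark application: 10

- spark.sql.files.maxPartitionBytes: 128MB

- spark.default.parallelism: 10

- spark.sql.files.openCostInBytes: 4MB

- bytesPerCore = (50 × 65MB + 50 × 4MB) / 10 = 345MB

- maxSplitBytes = Min(128MB, Max(345MB, 4MB)) = 128MB

- Final number of partitions: 50 (each 65MB file is a single chunk, and adding a second file would exceed maxSplitBytes: 65MB + 4MB + 65MB = 134MB > 128MB)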

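Introduction to bucketing (bucketBy)

- Data is stored in advance, organized by frequently used columns, and reused later.

- This enables various optimizations.

- If the tables being joined are bucketed on the join key, a shuffle-free join is possible.

- If only one side is bucketed, a one-side shuffle-free join is possible (this depends on the bucket size).

- Bucket pruning can be used as an optimization.

- Shuffle-free aggregation

- Bucket information is stored in the metastore, and the Spark compiler (query optimizer) uses it.

- In this case, sortBy is sometimes used to fix the row order in advance.

- This information is only available when the data is saved and loaded as a Spark table.

-> saveAsTable, spark.table()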
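A pure-Python toy can illustrate why bucketing both join sides on the same key removes the shuffle. The `bucket_id` function here is a stand-in (integer key modulo the bucket count). Spark itself uses a Murmur3-based hash, so real bucket numbers differ, but the property is the same: equal keys always land in the same bucket number. As a result, bucket i of one table only needs to be joined with bucket i of the other. The table contents are made up for illustration.

```python
from collections import defaultdict

NUM_BUCKETS = 4


def bucket_id(key, num_buckets=NUM_BUCKETS):
    # Stand-in for Spark's hash-based bucketing (Spark uses Murmur3, not this).
    return key % num_buckets


def bucketize(rows, num_buckets=NUM_BUCKETS):
    """Group (key, value) rows into buckets by key."""
    buckets = defaultdict(list)
    for key, value in rows:
        buckets[bucket_id(key, num_buckets)].append((key, value))
    return buckets


def join_rows(left_rows, right_rows):
    """Plain hash join of two row lists on key."""
    right_by_key = defaultdict(list)
    for key, value in right_rows:
        right_by_key[key].append(value)
    return [(key, lv, rv) for key, lv in left_rows for rv in right_by_key[key]]


orders = [(1, "order-a"), (2, "order-b"), (5, "order-c"), (7, "order-d")]
users = [(1, "kim"), (2, "lee"), (5, "park"), (6, "choi")]

left, right = bucketize(orders), bucketize(users)
# Join bucket i with bucket i only: no row ever has to move to another bucket.
bucketed = sorted(row for i in range(NUM_BUCKETS)
                  for row in join_rows(left[i], right[i]))
full = sorted(join_rows(orders, users))
print(bucketed)  # [(1, 'order-a', 'kim'), (2, 'order-b', 'lee'), (5, 'order-c', 'park')]
print(bucketed == full)  # True
```

This only works because both sides use the same hash function and the same number of buckets. With different bucket counts, matching keys could land in different bucket numbers.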

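Bucketing (bucketBy) storage layout

- Up to (number of buckets) × (number of partitions) files are created.

- e.g., if the DataFrame has 10 partitions and 4 buckets, up to 40 files are created (each write task writes one file for every bucket it has rows for).

- When the table is read back (saveAsTable, then spark.table()) and Spark uses a bucketed scan, it creates one partition per bucket (4 here), regardless of the original 10 partitions.

- Operations based on the bucketing key no longer need a shuffle.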
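The "up to" in the file count can be seen in a pure-Python simulation of the write side. The model assumes one write task per DataFrame partition, with each task emitting one file per bucket it holds rows for. Files are modeled as (task, bucket) pairs. The modulo `bucket_id` is again a stand-in for Spark's Murmur3-based hash, and the function names are hypothetical. This is a model, not Spark's writer.

```python
from collections import defaultdict


def bucket_id(key, num_buckets):
    # Stand-in for Spark's Murmur3-based bucket hash.
    return key % num_buckets


def write_bucketed(partitions, num_buckets):
    """Return {(task_id, bucket): [keys]}: one file per non-empty bucket per task."""
    files = defaultdict(list)
    for task_id, keys in enumerate(partitions):
        for key in keys:
            files[(task_id, bucket_id(key, num_buckets))].append(key)
    return dict(files)


# 10 DataFrame partitions, each holding keys 0..99, so every task hits every bucket.
files = write_bucketed([list(range(100)) for _ in range(10)], num_buckets=4)
print(len(files))  # 40 = 10 partitions x 4 buckets

# If every task only holds keys of bucket 0, only 10 files are written.
print(len(write_bucketed([[0, 4, 8]] * 10, num_buckets=4)))  # 10
```

Because the file count scales with partitions × buckets, repartitioning by the bucket key before a bucketed write is one common way to keep the number of files down.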

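Small files syndrome

- Why are many small files a problem?

- Reading one 64MB file vs. reading a million 64-byte files

- File system access overhead

- Accessing a single file takes several API calls.

- These API calls are all network RPC calls.

- That is why the openCostInBytes overhead seen earlier is charged for each file.

- The number of partitions can be reduced while reading, but the overhead is still large.

-> Files need to be consolidated to a reasonable degree when they are written.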

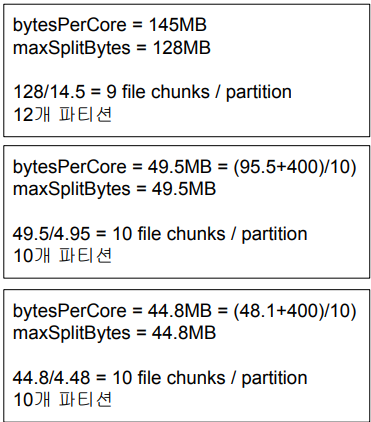
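Some simple arithmetic, sketched in Python, makes the per-file charge concrete. It models only the `openCostInBytes` bookkeeping used when packing files into partitions (4MB per file, the value used in the examples above). It does not model actual RPC latency, which depends on the file system.

```python
MB = 1024 * 1024
OPEN_COST_IN_BYTES = 4 * MB  # spark.sql.files.openCostInBytes


def planned_bytes(num_files, file_size, open_cost=OPEN_COST_IN_BYTES):
    """Bytes the file-packing step accounts for: data plus one open cost per file."""
    return num_files * (file_size + open_cost)


one_large = planned_bytes(1, 64 * MB)       # one 64MB file
many_small = planned_bytes(1_000_000, 64)   # a million 64-byte files (~61MB of data)

print(one_large // MB)                # 68
print(many_small // MB)               # 4000061
print(round(many_small / one_large))  # 58824
```

The amount of data is roughly the same in both cases. However, the million small files are accounted as roughly 58,000 times more work, almost all of it open cost.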

Reading JSON data stored in three ways

- Number of files: 100

- File format: JSON

-> Compression: none, gzip, bzip2

- Size of each file

-> 10.5MB, 955KB, 481KB, respectively

- Cores allocated to the Spark application: 10

- spark.sql.files.maxPartitionBytes: 128MB

- spark.default.parallelism: 10

- spark.sql.files.openCostInBytes: 4MB
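The three cases can be worked through in Python using the model described earlier in this section (maxSplitBytes, then file chunks, then Next Fit Decreasing packing). Assumptions:

- 1MB = 1024 × 1024 bytes and 1KB = 1024 bytes.
- The JSON records are single-line.
- gzip files are not splittable, while uncompressed and bzip2 files are.
- Every file has exactly the listed size.

The printed numbers are what this model predicts, not measured Spark output.

```python
MB, KB = 1024 * 1024, 1024


def predict_num_partitions(file_sizes, splittable, max_partition_bytes=128 * MB,
                           open_cost=4 * MB, parallelism=10):
    """Model of Spark's input partitioning (maxSplitBytes + Next Fit Decreasing)."""
    # Step 1: maxSplitBytes
    total = sum(size + open_cost for size in file_sizes)
    max_split = min(max_partition_bytes, max(open_cost, total // parallelism))
    # Step 2: file chunks (one chunk per file if not splittable)
    chunks = []
    for size in file_sizes:
        if splittable:
            chunks += [min(max_split, size - off) for off in range(0, size, max_split)]
        else:
            chunks.append(size)
    # Step 3: pack chunks, largest first; each chunk also costs open_cost
    num_partitions, current_size = 0, 0
    for chunk in sorted(chunks, reverse=True):
        if current_size > 0 and current_size + chunk > max_split:
            num_partitions += 1  # close the current partition
            current_size = 0
        current_size += chunk + open_cost
    return num_partitions + (1 if current_size > 0 else 0)


cases = {
    "no compression": ([int(10.5 * MB)] * 100, True),
    "gzip": ([955 * KB] * 100, False),
    "bzip2": ([481 * KB] * 100, True),
}
results = {name: predict_num_partitions(sizes, split)
           for name, (sizes, split) in cases.items()}
print(results)  # {'no compression': 12, 'gzip': 10, 'bzip2': 10}
```

In the two compressed cases, the 4MB open cost per file outweighs the data itself. Splittability also makes no difference here, because every file is already smaller than maxSplitBytes.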

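2. Number and size of partitions created after shuffling

- spark.sql.shuffle.partitions (default 200)

- Always use AQE (Adaptive Query Execution, spark.sql.adaptive.enabled; on by default since Spark 3.2), which can coalesce small shuffle partitions at runtime.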

3. Number and size of partitions when saving data

- There are three approaches.

- default

- bucketBy

- partitionBy

- File size can also be controlled by the number of records.

- spark.sql.files.maxRecordsPerFile

-> The default is 0, which means the number of records per file is not limited.
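The effect of `spark.sql.files.maxRecordsPerFile` on a single write task can be modeled in a few lines of Python. The helper is hypothetical. It assumes a task writes its records sequentially and starts a new file whenever the limit is reached. The same limit can also be set for a single write with `df.write.option("maxRecordsPerFile", n)`.

```python
import math


def files_per_task(num_records, max_records_per_file=0):
    """Number of files one write task produces (assumes num_records > 0).

    max_records_per_file <= 0 mirrors the default of 0: no per-file record limit.
    """
    if max_records_per_file <= 0:
        return 1
    return math.ceil(num_records / max_records_per_file)


# A task holding 2.5 million records:
print(files_per_task(2_500_000))             # 1 (default 0: no limit)
print(files_per_task(2_500_000, 1_000_000))  # 3 (1M + 1M + 0.5M records)
```

This limit only splits files; it never merges them. It caps overly large files but does not help with small files.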

3-1. Default

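- When neither bucketBy nor partitionBy is used

- Each partition is written as one file.

- saveAsTable vs. save

- Finding an appropriate size and number of partitions is important.

- Many small partitions are a problem.

- A few very large partitions are a problem too.

-> especially when they are saved in a non-splittable format

- Use repartition or coalesce as appropriate.

-> In this case, AQE's coalescing can help when repartition is used.

- Use the PARQUET format

-> with Snappy compression (Spark's default Parquet codec)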
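Choosing a partition count before a default-mode write is mostly arithmetic. The helpers below are hypothetical. They assume you already have an estimate of the written (compressed) output size in bytes, which can differ substantially from the in-memory size. The 256MB target is an arbitrary choice within the 128MB to 1GB range recommended at the top.

```python
import math

MB = 1024 * 1024


def target_partitions(estimated_output_bytes, target_file_bytes=256 * MB):
    """How many partitions (= files, in the default write mode) to aim for."""
    return max(1, math.ceil(estimated_output_bytes / target_file_bytes))


def resize_method(current_partitions, target):
    """coalesce only merges partitions (no shuffle); repartition can go either way."""
    return "coalesce" if target < current_partitions else "repartition"


n = target_partitions(10 * 1024 * MB)  # ~10GB of output at 256MB per file
print(n, resize_method(200, n))        # 40 coalesce
```

The result would be applied as `df.coalesce(n)` or `df.repartition(n)` right before the write. coalesce avoids a shuffle, but a drastic coalesce can also shrink the parallelism of the stage that computes the data. For that reason, repartition is sometimes preferred even when reducing the partition count.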

3-2. bucketBy

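- When you know the data's characteristics well, split it by a specific ID and save it as a table.

- Loading this table in later runs shortens the time spent on repeated processing.

-> Specify the number of buckets and the key ID; this is only practical when you know the data's characteristics well.

- The number of buckets and the key must be specified.

- df.write.mode("overwrite").bucketBy(3, key).saveAsTable(table)

- sortBy is sometimes added to fix the order within each bucket, e.g. .bucketBy(3, key).sortBy(key)

- This information is stored in the metastore as well.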

```sql
CREATE TABLE bucketed_table (
  id INT,
  name STRING,
  age INT
)
USING PARQUET
CLUSTERED BY (id)
INTO 4 BUCKETS;
```

3-3. partitionBy

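- What if a very large log dataset is mostly read by the time the data was generated?

- Store the data itself in a year/month/day folder structure.

- This optimizes reads (scanning is reduced or eliminated entirely).

- Data management also becomes easier (e.g., when applying a retention policy).

- However, choosing the wrong partition key creates an enormous number of files.

-> Do not use a high-cardinality column as the key.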
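The folder layout that partitionBy produces can be modeled in Python. Spark writes Hive-style directories named `column=value` (e.g. `year=2024/month=1/day=5`). The helper names below are hypothetical, and the log rows are made-up sample data. The model also shows why a high-cardinality key is a problem: the number of directories grows with the number of distinct key values.

```python
from collections import defaultdict


def partition_dirs(rows, keys):
    """Map each Hive-style directory path to the rows written under it."""
    dirs = defaultdict(list)
    for row in rows:
        path = "/".join(f"{k}={row[k]}" for k in keys)
        dirs[path].append(row)
    return dict(dirs)


def prune(dirs, prefix):
    """Directories that a filter on the leading partition columns still has to scan."""
    return sorted(path for path in dirs if path.startswith(prefix))


logs = [
    {"year": 2024, "month": 1, "day": 5, "msg": "a"},
    {"year": 2024, "month": 1, "day": 6, "msg": "b"},
    {"year": 2024, "month": 2, "day": 1, "msg": "c"},
]
dirs = partition_dirs(logs, ["year", "month", "day"])
print(sorted(dirs))
# ['year=2024/month=1/day=5', 'year=2024/month=1/day=6', 'year=2024/month=2/day=1']
print(prune(dirs, "year=2024/month=1/"))  # a filter on year=2024 AND month=1
# ['year=2024/month=1/day=5', 'year=2024/month=1/day=6']

# Partitioning by a unique id instead creates one directory per row.
print(len(partition_dirs([{"id": i} for i in range(10_000)], ["id"])))  # 10000
```

Each write task can also add a file to every directory it has rows for. The real file count can therefore be even larger than the directory count.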

- The key(s) to partition by must be specified.

- df.write.mode("overwrite").partitionBy("order_month").saveAsTable("order")

- df.write.mode("overwrite").partitionBy("year", "month", "day").saveAsTable("appl_stock")

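```sql
-- id is used here only to show the syntax; a high-cardinality column like this is a poor partition key.
CREATE TABLE partitioned_table (
  id INT,
  value STRING
)
USING parquet
PARTITIONED BY (id);
```

bucketBy & partitionBy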

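- Apply bucketBy after partitionBy.

- Use partitionBy for the filtering pattern, then bucketBy for the grouping/join pattern.

```python
df.write.mode("overwrite").partitionBy("dept").bucketBy(5, "employeeid").saveAsTable(table)
```

Checking the information (including statistics) of Spark persistent tables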

- spark.sql("DESCRIBE EXTENDED Table_Name")

-> This shows the bucket/partition information of the table.

```python
spark.sql("DESCRIBE EXTENDED appl_stock").show()
```