This guide assumes the Hadoop cluster is already set up.

https://velog.io/@kidae92/Hadoop-Cluster

🚩 Setting environment variables

- ~/.bashrc

export SPARK_HOME=/usr/local/spark

export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

- Apply the environment variables

source ~/.bashrc

🚩 Installing and configuring Spark
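Before downloading anything, it may be worth confirming that the new variables are visible in the current shell. The snippet below is a minimal sketch; `path_contains` and `check_spark_env` are hypothetical helper names made up for this post, not part of Spark or Hadoop. It only compares strings, so it works even though $SPARK_HOME/bin does not exist yet.

```shell
#!/usr/bin/env bash
# Sketch: confirm that the ~/.bashrc changes are visible in the current shell.

# path_contains DIR [PATH_STRING]
# Returns 0 if DIR is one of the colon-separated entries of PATH_STRING
# (defaults to the current $PATH), 1 otherwise.
path_contains() {
  local dir="$1"
  local path_string="${2:-$PATH}"
  case ":${path_string}:" in
    *":${dir}:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# check_spark_env (hypothetical helper)
# Prints one line per check and returns non-zero if anything is missing.
check_spark_env() {
  local status=0
  local sub
  if [ -z "${SPARK_HOME:-}" ]; then
    echo "SPARK_HOME is not set"
    return 1
  fi
  for sub in bin sbin; do
    if path_contains "${SPARK_HOME}/${sub}"; then
      echo "ok: ${SPARK_HOME}/${sub} is on PATH"
    else
      echo "missing: ${SPARK_HOME}/${sub} is not on PATH"
      status=1
    fi
  done
  return "$status"
}

# Usage (after `source ~/.bashrc`):
#   check_spark_env
```

If either line reports "missing", the export lines in ~/.bashrc are the first place to look.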

1. Download (3.0.3)

wget https://downloads.apache.org/spark/spark-3.0.3/spark-3.0.3-bin-hadoop3.2.tgz

sudo mkdir -p $SPARK_HOME && sudo tar -zvxf spark-3.0.3-bin-hadoop3.2.tgz -C $SPARK_HOME --strip-components 1

sudo chown -R $USER:$USER $SPARK_HOME

2. Configure slaves

- $SPARK_HOME/conf/slaves

- In $SPARK_HOME/conf, every file, including slaves, ships with a .template suffix, so copy it to its real name first.

cp slaves.template slaves

- Then open the slaves file, delete localhost, and list the worker hostnames:

worker1

worker2

worker3

worker4

3. spark-env.sh

- $SPARK_HOME/conf/spark-env.sh

- Copy the template in the same way

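cp spark-env.sh.template spark-env.sh

- For JAVA_HOME, check the value on your own machine by running echo $JAVA_HOME in a terminal and enter that. I had a few different ones installed, so I checked each one before proceeding.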

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

export SPARK_MASTER_HOST=master

export HADOOP_HOME=/usr/local/hadoop

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

4. spark-defaults.conf

- $SPARK_HOME/conf/spark-defaults.conf

spark.master yarn

spark.eventLog.enabled true

spark.eventLog.dir file:///usr/local/spark/eventLog

spark.history.fs.logDirectory file:///usr/local/spark/eventLog

- Create the eventLog directory

mkdir -p $SPARK_HOME/eventLog

🚩 Running Spark
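Before starting any daemons, it can help to check that the configuration from the previous section is actually in place. The following is a rough sketch rather than an official Spark tool: `preflight_spark_conf` is a hypothetical helper name, and the only things it checks are the three conf files, the eventLog directory, and whether conf/slaves still contains the template's localhost entry.

```shell
#!/usr/bin/env bash
# Sketch: pre-flight check of the files edited in the configuration steps.

# preflight_spark_conf SPARK_HOME_DIR (hypothetical helper)
# Prints each problem it finds and returns non-zero if there is at least one.
preflight_spark_conf() {
  local home="$1"
  local status=0
  local f
  if [ -z "$home" ]; then
    echo "usage: preflight_spark_conf SPARK_HOME_DIR" >&2
    return 2
  fi
  # The three files copied or created under conf/.
  for f in conf/slaves conf/spark-env.sh conf/spark-defaults.conf; do
    if [ ! -f "${home}/${f}" ]; then
      echo "missing file: ${home}/${f}"
      status=1
    fi
  done
  # spark.eventLog.dir points here, so the directory has to exist.
  if [ ! -d "${home}/eventLog" ]; then
    echo "missing directory: ${home}/eventLog"
    status=1
  fi
  # The slaves file should list worker hostnames, not the template's localhost.
  if [ -f "${home}/conf/slaves" ] && grep -qx 'localhost' "${home}/conf/slaves"; then
    echo "conf/slaves still contains localhost"
    status=1
  fi
  if [ "$status" -eq 0 ]; then
    echo "preflight ok"
  fi
  return "$status"
}

# Usage:
#   preflight_spark_conf "$SPARK_HOME"
```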

- Run on the master only

- hadoop

> $HADOOP_HOME/bin/hdfs namenode -format -force

> $HADOOP_HOME/sbin/start-dfs.sh

> $HADOOP_HOME/sbin/start-yarn.sh

> $HADOOP_HOME/bin/mapred --daemon start historyserver

- spark

> $SPARK_HOME/sbin/start-all.sh

> $SPARK_HOME/sbin/start-history-server.sh

- Spark UI

Spark Context: reachable only while a Spark application is running (http://master:4040)

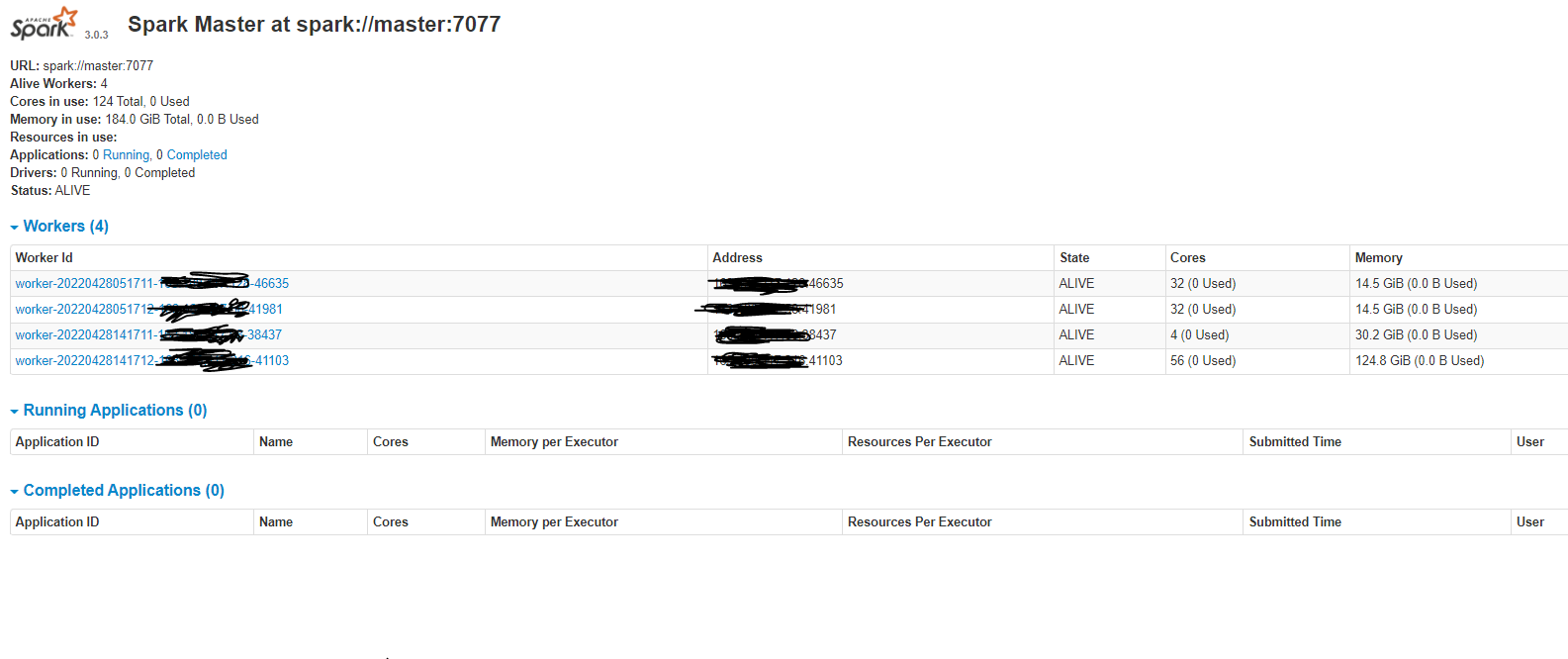

Spark Master (http://master:8080)

Spark Worker (http://master:8081, http://worker1:8081 ...)

Spark History Server (http://master:18080)

Spark Master (http://master:8080) screen
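The UI addresses listed above can also be probed from a terminal instead of a browser. This is only a sketch under a few assumptions: the hostnames are the master and worker names used in this post, `spark_ui_urls` and `check_spark_uis` are hypothetical helper names, and curl is installed. Port 4040 is left out because it only answers while an application is running.

```shell
#!/usr/bin/env bash
# Sketch: list and probe the Spark web UIs mentioned in this post.

# spark_ui_urls MASTER [WORKER...] (hypothetical helper)
# Prints the Master UI, the History Server UI, and one Worker UI per worker.
spark_ui_urls() {
  local master="$1"
  local w
  shift
  echo "http://${master}:8080"   # Spark Master
  echo "http://${master}:18080"  # Spark History Server
  for w in "$@"; do
    echo "http://${w}:8081"      # Spark Worker
  done
}

# check_spark_uis MASTER [WORKER...] (hypothetical helper)
# Prints "<http status> <url>" per UI; curl reports 000 when it cannot connect.
check_spark_uis() {
  local url code
  spark_ui_urls "$@" | while read -r url; do
    code=$(curl -s -o /dev/null --max-time 3 -w '%{http_code}' "$url")
    echo "${code} ${url}"
  done
}

# Usage:
#   check_spark_uis master worker1 worker2 worker3 worker4
```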

🚩 Stopping Spark

- spark

> $SPARK_HOME/sbin/stop-all.sh

> $SPARK_HOME/sbin/stop-history-server.sh

> rm -rf $SPARK_HOME/eventLog/*

- hadoop

> $HADOOP_HOME/sbin/stop-dfs.sh

> $HADOOP_HOME/sbin/stop-yarn.sh

> $HADOOP_HOME/bin/mapred --daemon stop historyserver

> rm -rf $HADOOP_HOME/data/namenode/*

- On every DataNode

rm -rf $HADOOP_HOME/data/datanode/*
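One thing to watch with the rm -rf lines above: if HADOOP_HOME or SPARK_HOME happens to be unset in the shell, the path collapses to something like /data/datanode/*. A guarded variant could look like the sketch below. `clean_dir_contents` is a hypothetical helper name, and unlike rm -rf dir/* it also removes hidden files inside the directory.

```shell
#!/usr/bin/env bash
# Sketch: empty a directory with a guard against empty or root paths.

# clean_dir_contents DIR (hypothetical helper)
# Deletes everything inside DIR but keeps DIR itself.
clean_dir_contents() {
  local dir="$1"
  # Refuse obviously dangerous arguments.
  if [ -z "$dir" ] || [ "$dir" = "/" ]; then
    echo "refusing to clean '${dir}'" >&2
    return 1
  fi
  # Nothing to do if the directory was never created.
  if [ ! -d "$dir" ]; then
    echo "skip: ${dir} does not exist" >&2
    return 0
  fi
  find "$dir" -mindepth 1 -delete
}

# Usage: ${VAR:?} makes the shell stop with an error if VAR is unset or empty.
#   clean_dir_contents "${SPARK_HOME:?}/eventLog"
#   clean_dir_contents "${HADOOP_HOME:?}/data/namenode"
#   clean_dir_contents "${HADOOP_HOME:?}/data/datanode"   # on every DataNode
```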