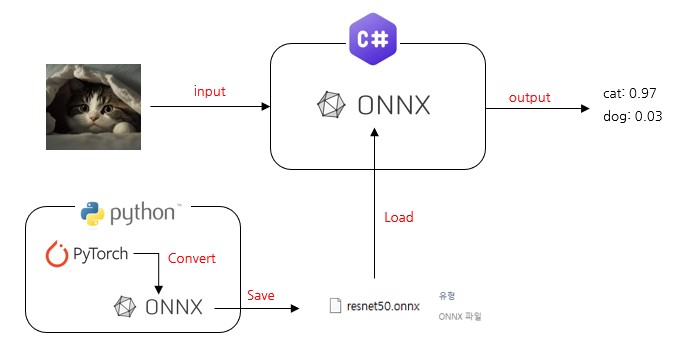

Purpose

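Convert a PyTorch model to ONNX, then run the converted ONNX model from C#.

This assumes the model has already been trained in Python and saved as a `.pt` file. The file should contain the whole model object (saved with `torch.save(model, ...)`), because the code below calls `torch.load` and uses the result directly as a model.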

Environment

Python

- Python 3.8.16

- PyTorch 2.1.0

- onnx 1.15.0

- onnxruntime 1.16.3

C#

- .NET 8.0

- Microsoft.ML.OnnxRuntime.Gpu (1.16.3)

- SixLabors.ImageSharp (3.0.2)

Code

1. Python

```python
import os
import torch
import onnx
import onnxruntime
import numpy as np

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
```

(1) PyTorch -> ONNX
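The dummy input created in this step has shape `INPUT_SIZE = (1, 3, 224, 224)`, which is NCHW (batch, channels, height, width). PyTorch's contiguous tensors and ONNX Runtime's `DenseTensor` in C# (with its default strides) both store such a tensor in row-major order. That means element `[n, c, y, x]` sits at flat offset `((n * C + c) * H + y) * W + x`.

Here is a minimal sketch of that arithmetic. The helper name `flat_offset` is hypothetical and is only for illustration:

```python
from math import prod


def flat_offset(shape, index):
    """Row-major (C-order) flat offset of `index` in a tensor of `shape` (hypothetical helper)."""
    if len(shape) != len(index):
        raise ValueError("shape and index must have the same rank")
    offset = 0
    for dim, i in zip(shape, index):
        if not 0 <= i < dim:
            raise IndexError(f"index {i} out of range for dimension of size {dim}")
        # Horner-style accumulation: offset = offset * size_of_this_dim + index_in_this_dim
        offset = offset * dim + i
    return offset


INPUT_SIZE = (1, 3, 224, 224)  # (N, C, H, W), as in the export script

# First pixel of channel 1 comes after one full 224 x 224 plane of channel 0
print(flat_offset(INPUT_SIZE, (0, 1, 0, 0)))      # 50176
# Last element of the tensor
print(flat_offset(INPUT_SIZE, (0, 2, 223, 223)))  # 150527
print(prod(INPUT_SIZE))                           # 150528
```

This layout explains why the C# code can fill the tensor with `input[0, c, y, x]`. The channel index varies slower than the pixel position, so each channel forms one contiguous 224 x 224 plane.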

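```python
INPUT_SIZE = (1, 3, 224, 224)  # (batch, channels, height, width)
MODEL_PATH = "./checkpoint/resnet50.pt"
ONNX_PATH = os.path.splitext(MODEL_PATH)[0] + ".onnx"

# Load model (the .pt file holds the whole model) and put it on the same device as the input
model = torch.load(MODEL_PATH, map_location=device)
model.to(device)
model.eval()

# Define a random tensor with the expected input shape (used to trace the graph)
x = torch.randn(size=INPUT_SIZE, requires_grad=True).to(device)

# Export to ONNX
torch.onnx.export(
    model,
    x,
    ONNX_PATH,
    export_params=True,
    opset_version=17,
    input_names=["input"],
    output_names=["output"],
)
```

(2) Load ONNX & Confirm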
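This step ends with `np.testing.assert_allclose(actual, desired, rtol=1e-03, atol=1e-05)`. That call passes only if every element satisfies `|actual - desired| <= atol + rtol * |desired|`. PyTorch and ONNX Runtime kernels are expected to differ by small floating-point amounts, so these tolerances decide pass or fail.

Here is a minimal sketch of that rule. The values are made up for illustration and are not results from the post:

```python
import numpy as np

RTOL, ATOL = 1e-03, 1e-05  # same tolerances as the comparison in this step


def within_tolerance(actual, desired, rtol=RTOL, atol=ATOL):
    """Elementwise pass/fail rule applied by np.testing.assert_allclose."""
    actual, desired = np.asarray(actual), np.asarray(desired)
    return np.abs(actual - desired) <= atol + rtol * np.abs(desired)


desired = np.array([5.0, -0.5])   # illustrative "ONNX Runtime" outputs
actual = desired + 0.001          # same absolute error on both elements

# The allowed error scales with |desired|: 0.00501 for 5.0 but only 0.00051 for -0.5
mask = within_tolerance(actual, desired).tolist()
print(mask)  # [True, False]

try:
    np.testing.assert_allclose(actual, desired, rtol=RTOL, atol=ATOL)
    passed = True
except AssertionError:
    passed = False
print(passed)  # False
```

Logits close to zero therefore get a much tighter absolute bound than large ones.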

```python
# Load the ONNX model
onnx_model = onnx.load(ONNX_PATH)

# Confirm that the model follows the ONNX standard
# (check_model returns None and raises an exception if the model is invalid)
onnx.checker.check_model(onnx_model)


def to_numpy(tensor):
    return tensor.detach().cpu().numpy() if tensor.requires_grad else tensor.cpu().numpy()


# Compare the PyTorch result with the ONNX Runtime result
## PyTorch result
torch_out = model(x)

## ONNX Runtime result (CPU package; the provider is listed explicitly)
ort_session = onnxruntime.InferenceSession(ONNX_PATH, providers=["CPUExecutionProvider"])
ort_inputs = {ort_session.get_inputs()[0].name: to_numpy(x)}
ort_outs = ort_session.run(None, ort_inputs)
ort_out = ort_outs[0]

## Compare
np.testing.assert_allclose(to_numpy(torch_out), ort_out, rtol=1e-03, atol=1e-05)
```

2. C#
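The C# program in this part must feed the model a float32 tensor named `input` with shape `(1, 3, 224, 224)`. After resizing and padding to 224 x 224, its preprocessing does three things:

- It converts each pixel to gray with the BT.601 weights `0.299 R + 0.587 G + 0.114 B`.
- It copies that value into all three channels.
- It scales the result by `1/255`.

Below is a numpy sketch of the same steps. It can be handy for feeding an identical tensor to `ort_session.run` in Python when the C# and Python results disagree. It assumes an already-resized `uint8` HWC RGB array and skips the resize/pad step. `to_model_input` is a hypothetical name.

```python
import numpy as np


def to_model_input(rgb_hwc: np.ndarray) -> np.ndarray:
    """Mirror of the C# preprocessing after resize/pad (hypothetical helper).

    rgb_hwc: uint8 array of shape (H, W, 3) in RGB order (224 x 224 in the post).
    Returns a float32 array of shape (1, 3, H, W).
    """
    if rgb_hwc.dtype != np.uint8 or rgb_hwc.ndim != 3 or rgb_hwc.shape[2] != 3:
        raise ValueError("expected a uint8 HxWx3 RGB array")
    rgb = rgb_hwc.astype(np.float64)
    # BT.601 luma, truncated to an integer like the (byte) cast in C#
    gray = (0.299 * rgb[..., 0] + 0.587 * rgb[..., 1] + 0.114 * rgb[..., 2]).astype(np.uint8)
    # Same gray value in R, G and B; HWC -> CHW; scale to [0, 1]; add the batch axis
    chw = np.stack([gray, gray, gray], axis=0).astype(np.float32) / 255.0
    return chw[np.newaxis, ...]


# Example: a synthetic 224 x 224 image that is pure red everywhere
image = np.zeros((224, 224, 3), dtype=np.uint8)
image[..., 0] = 255
x = to_model_input(image)
print(x.shape, x.dtype)  # (1, 3, 224, 224) float32
print(x[0, :, 0, 0])     # every channel holds 76 / 255 (0.299 * 255 = 76.245, truncated)
```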

Program.cs

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using Microsoft.ML.OnnxRuntime;
using Microsoft.ML.OnnxRuntime.Tensors;
using SixLabors.ImageSharp;
using SixLabors.ImageSharp.PixelFormats;
using SixLabors.ImageSharp.Processing;

namespace Predict
{
    class Program
    {
        public static void Main(string[] args)
        {
            // Read paths
            string modelFilePath = args[0];
            string imageFilePath = args[1];

            // Read image
            using Image<Rgb24> image = Image.Load<Rgb24>(imageFilePath);

            // Resize & Padding (keeps the aspect ratio and pads to 224x224)
            image.Mutate(x =>
            {
                x.Resize(new ResizeOptions
                {
                    Size = new Size(224, 224),
                    Mode = ResizeMode.Pad
                });
            });

            // RGB image to Gray image (BT.601 weights), gray value written to all three channels
            using Image<Rgb24> grayscaleImage = image.Clone();
            grayscaleImage.ProcessPixelRows(accessor =>
            {
                for (int y = 0; y < accessor.Height; y++)
                {
                    Span<Rgb24> row = accessor.GetRowSpan(y);
                    for (int x = 0; x < row.Length; x++)
                    {
                        // Calculate gray pixel
                        byte brightness = (byte)(0.299 * row[x].R + 0.587 * row[x].G + 0.114 * row[x].B);
                        row[x] = new Rgb24(brightness, brightness, brightness);
                    }
                }
            });

            // Image to Tensor (NCHW, values scaled to [0, 1])
            Tensor<float> input = new DenseTensor<float>(new[] { 1, 3, 224, 224 });
            grayscaleImage.ProcessPixelRows(accessor =>
            {
                for (int y = 0; y < accessor.Height; y++)
                {
                    Span<Rgb24> pixelSpan = accessor.GetRowSpan(y);
                    for (int x = 0; x < accessor.Width; x++)
                    {
                        input[0, 0, y, x] = pixelSpan[x].R / 255.0f;
                        input[0, 1, y, x] = pixelSpan[x].G / 255.0f;
                        input[0, 2, y, x] = pixelSpan[x].B / 255.0f;
                    }
                }
            });

            // Setup inputs ("input" must match input_names in torch.onnx.export)
            var inputs = new List<NamedOnnxValue>
            {
                NamedOnnxValue.CreateFromTensor("input", input)
            };

            // Run inference
            using var session = new InferenceSession(modelFilePath, SessionOptions.MakeSessionOptionWithCudaProvider()); // GPU
            // using var session = new InferenceSession(modelFilePath); // CPU
            using IDisposableReadOnlyCollection<DisposableNamedOnnxValue> results = session.Run(inputs);

            // Postprocess to get the softmax vector
            // (materialize the logits once; subtracting the max logit avoids overflow in Exp)
            float[] output = results.First().AsEnumerable<float>().ToArray();
            float maxLogit = output.Max();
            float sum = output.Sum(x => MathF.Exp(x - maxLogit));
            float[] softmax = output.Select(x => MathF.Exp(x - maxLogit) / sum).ToArray();

            // Extract predicted classes, highest confidence first
            IEnumerable<Prediction> predictions = softmax
                .Select((x, i) => new Prediction { Label = LabelMap.Labels[i], Confidence = x })
                .OrderByDescending(x => x.Confidence);

            // Print results to console
            Console.WriteLine("Model Result...");
            Console.WriteLine("--------------------------------------------------------------");
            foreach (var prediction in predictions)
            {
                Console.WriteLine($"Label: {prediction.Label}, Confidence: {prediction.Confidence}");
            }
        }
    }
}
```
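The post-processing at the end of `Main` turns the raw `output` logits into probabilities with a softmax, then sorts the labels by confidence. Subtracting the largest logit before exponentiating gives mathematically identical probabilities, and it keeps `exp` from overflowing when logits are large.

Here is a stdlib Python sketch of the same computation. The logits are made-up values, and `LABELS` mirrors `LabelMap.Labels`:

```python
import math

LABELS = ["cat", "dog"]  # same order as LabelMap.Labels


def softmax(logits):
    """Numerically stable softmax: exp(z - max(z)) / sum(exp(z - max(z)))."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def rank(logits, labels=LABELS):
    """(label, confidence) pairs, highest confidence first."""
    return sorted(zip(labels, softmax(logits)), key=lambda p: p[1], reverse=True)


logits = [1.0, 3.0]  # illustrative values, not taken from the post
for label, confidence in rank(logits):
    print(f"Label: {label}, Confidence: {confidence:.4f}")

# exp(1000.0) alone would overflow; the shifted version is fine
print(softmax([1000.0, 1000.0]))  # [0.5, 0.5]
```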

Prediction.cs

```csharp
namespace Predict
{
    internal class Prediction
    {
        public string Label { get; set; } = string.Empty;
        public float Confidence { get; set; }
    }
}
```

LabelMap.cs
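`LabelMap.Labels` is indexed by output position, so its order must match the class indices the model was trained with. The post does not say how the training data was loaded. If it was torchvision's `ImageFolder` (an assumption), class indices come from the sub-directory names in sorted order.

Here is a stdlib sketch of that rule. `class_to_idx` is a hypothetical reimplementation, not the torchvision function itself:

```python
import os
import tempfile


def class_to_idx(root):
    """Map class folder names to indices the way ImageFolder does: sorted directory names."""
    classes = sorted(entry.name for entry in os.scandir(root) if entry.is_dir())
    return {name: i for i, name in enumerate(classes)}


with tempfile.TemporaryDirectory() as root:
    # Hypothetical training layout: one sub-folder per class, created in arbitrary order
    for name in ("dog", "cat"):
        os.mkdir(os.path.join(root, name))
    mapping = class_to_idx(root)

print(mapping)  # {'cat': 0, 'dog': 1}
```

Under that assumption, `{ "cat", "dog" }` is the matching order for the two-class model.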

```csharp
namespace Predict
{
    public class LabelMap
    {
        // Order must match the model's output indices (index 0 = "cat", index 1 = "dog")
        public static readonly string[] Labels = new[] { "cat", "dog" };
    }
}
```

Run

```
dotnet run {onnx model path} {image path}
```

Example:

```
dotnet run .\model.onnx .\test_image.jpg
```

```
#### OUTPUT ####
Model Result...
--------------------------------------------------------------
Label: cat, Confidence: 0.9967283
Label: dog, Confidence: 0.0032717406
```
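With only two classes, the softmax reduces to a sigmoid of the logit difference, `p_cat = 1 / (1 + exp(-(z_cat - z_dog)))`. The printed confidences therefore pin down only that difference, not the individual logits. The stdlib check below uses the output above; the two confidences sum to 1 up to float32 rounding.

```python
import math

p_cat, p_dog = 0.9967283, 0.0032717406  # confidences printed by the C# program

# Softmax outputs sum to 1 (up to float32 rounding)
print(p_cat + p_dog)

# For two classes, softmax == sigmoid of the logit difference
logit_gap = math.log(p_cat / p_dog)  # z_cat - z_dog
p_cat_again = 1.0 / (1.0 + math.exp(-logit_gap))
print(round(logit_gap, 3))
print(abs(p_cat_again - p_cat) < 1e-6)  # True
```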