The Bellman equation expresses the state value function and the action value function recursively, in terms of values at the next time step.

Bellman equation for state value function

Let's rewrite the state value function using the product rule of probability (the identity behind Bayes' rule).

Recall the rule, with and without an extra conditioning variable:

$$p(x,y) = p(x \mid y)\,p(y)$$

$$p(x,y \mid z) = p(x \mid y,z)\,p(y \mid z)$$
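The conditioned form of the rule can be checked numerically on a small discrete example. This is a minimal sketch: the joint distribution `p_xyz` is random and purely hypothetical, and NumPy broadcasting does the division by the marginals.

```python
import numpy as np

# Hypothetical joint distribution p(x, y, z) over three discrete variables,
# stored as an array indexed [x, y, z] (random numbers, for illustration only).
rng = np.random.default_rng(0)
p_xyz = rng.random((2, 3, 4))
p_xyz /= p_xyz.sum()

p_z = p_xyz.sum(axis=(0, 1))   # p(z), shape (4,)
p_yz = p_xyz.sum(axis=0)       # p(y, z), shape (3, 4)

p_xy_given_z = p_xyz / p_z     # p(x, y | z), divides along the z axis
p_x_given_yz = p_xyz / p_yz    # p(x | y, z)
p_y_given_z = p_yz / p_z       # p(y | z)

# p(x, y | z) = p(x | y, z) p(y | z); p_y_given_z broadcasts over the x axis
rhs = p_x_given_yz * p_y_given_z
print(np.allclose(p_xy_given_z, rhs))  # True
```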

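Writing the state value function as the expected return $G_t$ over all future actions and states, and then splitting off $a_t$ with the product rule, it becomes: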

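$$
\begin{aligned}
V(s_t) &= \int_{a_t:a_\infty} G_t\, p(a_t, s_{t+1}, a_{t+1}, \ldots \mid s_t)\, d(a_t{:}a_\infty) \\
&= \int_{a_t} \left[ \int_{s_{t+1}:a_\infty} G_t\, p(s_{t+1}, a_{t+1}, \ldots \mid s_t, a_t)\, d(s_{t+1}{:}a_\infty) \right] p(a_t \mid s_t)\, da_t
\end{aligned}
$$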

The inner integral (the blue box) is exactly the action value function $Q(s_t, a_t)$: the expected return after taking action $a_t$ in state $s_t$.

So the state value function becomes:

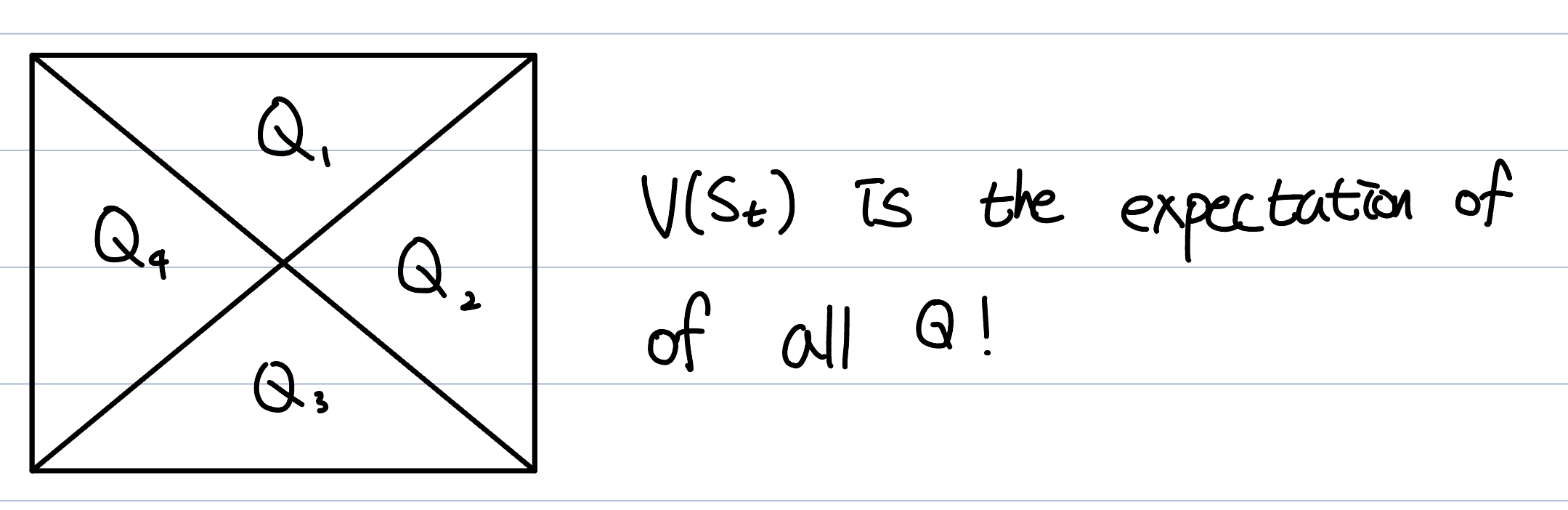

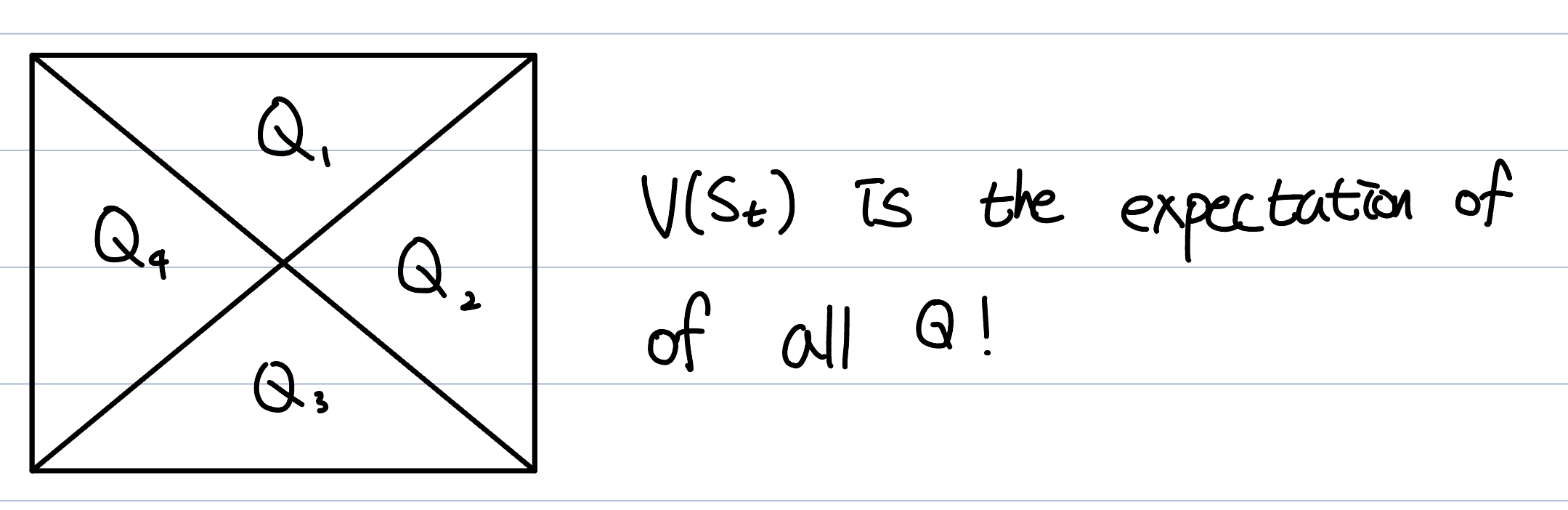

$$V(s_t) = \int_{a_t} Q(s_t, a_t)\, p(a_t \mid s_t)\, da_t$$

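In other words, the state value is the expectation of the action value over the actions the policy $p(a_t \mid s_t)$ may take in the current state. For example, in Q-learning the target policy is greedy, so this expectation reduces to the largest Q-value in the current state, $V(s_t) = \max_a Q(s_t, a)$.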
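With finitely many actions, the integral over $a_t$ becomes a policy-weighted sum over a row of a Q-table. A minimal sketch, assuming a hypothetical 2-state, 3-action table `Q` and stochastic policy `pi` (all numbers invented for illustration):

```python
import numpy as np

# Hypothetical Q-table: rows are states, columns are actions.
Q = np.array([[1.0, 2.0, 4.0],
              [0.5, 0.0, 1.5]])

# Hypothetical stochastic policy pi[s, a] = p(a | s); each row sums to 1.
pi = np.array([[0.2, 0.3, 0.5],
               [1/3, 1/3, 1/3]])

# V(s) = sum_a p(a | s) Q(s, a): the discrete version of the integral over a_t.
V = (pi * Q).sum(axis=1)            # V ≈ [2.8, 0.667]

# A greedy policy puts all its probability on the best action,
# so the same expectation becomes a max over actions.
greedy = np.zeros_like(Q)
greedy[np.arange(Q.shape[0]), Q.argmax(axis=1)] = 1.0
V_greedy = (greedy * Q).sum(axis=1)  # equals Q.max(axis=1) = [4.0, 1.5]
```

The greedy case is included only to show how the expectation collapses to a max; the stochastic case is the general form of the equation above.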

Now let's rewrite the same equation in a different way, splitting off both $a_t$ and $s_{t+1}$.

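$$
\begin{aligned}
V(s_t) &= \int_{a_t:a_\infty} G_t\, p(a_t, s_{t+1}, a_{t+1}, \ldots \mid s_t)\, d(a_t{:}a_\infty) \\
&= \int_{a_t, s_{t+1}} \left[ \int_{a_{t+1}:a_\infty} G_t\, p(a_{t+1}, \ldots \mid s_t, a_t, s_{t+1})\, d(a_{t+1}{:}a_\infty) \right] p(a_t, s_{t+1} \mid s_t)\, d(a_t, s_{t+1})
\end{aligned}
$$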

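By the Markov property, once $s_{t+1}$ is known, $s_t$ and $a_t$ carry no further information about what happens afterwards, so $p(a_{t+1}, \ldots \mid s_t, a_t, s_{t+1}) = p(a_{t+1}, \ldots \mid s_{t+1})$.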

The return can also be written recursively as $G_t = R_t + \gamma G_{t+1}$. Therefore, the equation becomes:

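$$V(s_t) = \int_{a_t, s_{t+1}} \left[ \int_{a_{t+1}:a_\infty} \big(R_t + \gamma G_{t+1}\big)\, p(a_{t+1}, \ldots \mid s_{t+1})\, d(a_{t+1}{:}a_\infty) \right] p(a_t, s_{t+1} \mid s_t)\, d(a_t, s_{t+1})$$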

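Look at the inner integral. $R_t$ does not depend on $a_{t+1}, s_{t+2}, \ldots$, so it comes out of the integral, and the remaining density integrates to 1, leaving just $R_t$. The $G_{t+1}$ term is the expected return from $s_{t+1}$ onward, which is the definition of the state value at $s_{t+1}$: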

$$\int_{a_{t+1}:a_\infty} G_{t+1}\, p(a_{t+1}, \ldots \mid s_{t+1})\, d(a_{t+1}{:}a_\infty) = V(s_{t+1})$$
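The recursion $G_t = R_t + \gamma G_{t+1}$ is what makes this step possible, and it is easy to check on a single episode. A minimal sketch, assuming a finite episode with hypothetical rewards and a return of 0 after the last step; averaging such returns over many episodes that start in $s_{t+1}$ is what the integral above computes.

```python
import numpy as np

def discounted_returns(rewards, gamma):
    """Compute G_t for every step of a finite episode with the backward
    recursion G_t = R_t + gamma * G_{t+1}, taking the return after the
    last step to be 0."""
    returns = np.zeros(len(rewards))
    g = 0.0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        returns[t] = g
    return returns

rewards = [1.0, 0.0, 2.0, 1.0]   # hypothetical rewards R_0..R_3 of one episode
gamma = 0.9
G = discounted_returns(rewards, gamma)   # G ≈ [3.349, 2.61, 2.9, 1.0]

# The same G_0 from the direct definition G_0 = sum_k gamma^k R_k.
direct = sum(gamma**k * r for k, r in enumerate(rewards))
```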

The final equation is:

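$$
\begin{aligned}
V(s_t) &= \int_{a_t, s_{t+1}} \big(R_t + \gamma V(s_{t+1})\big)\, p(a_t, s_{t+1} \mid s_t)\, d(a_t, s_{t+1}) \\
&= \int_{a_t, s_{t+1}} \big(R_t + \gamma V(s_{t+1})\big)\, p(s_{t+1} \mid s_t, a_t)\, p(a_t \mid s_t)\, d(a_t, s_{t+1})
\end{aligned}
$$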

The advantage of this form is that the policy $p(a_t \mid s_t)$ (the blue box) appears explicitly. The other factor, $p(s_{t+1} \mid s_t, a_t)$ (the green box), is called the transition probability.
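For a finite MDP the integrals become sums, and this equation can be solved by repeatedly applying its right-hand side (iterative policy evaluation). A minimal sketch, assuming a hypothetical 2-state, 2-action MDP whose arrays `P` (transition probability), `R` and `pi` (policy) are invented for illustration, with an expected reward `R[s, a]` that does not depend on $s_{t+1}$. Because the equation is linear in $V$, the result is cross-checked with a direct linear solve.

```python
import numpy as np

# Hypothetical 2-state, 2-action MDP (illustrative numbers only).
# P[s, a, s2] = p(s_{t+1}=s2 | s_t=s, a_t=a): the transition probability
P = np.array([[[0.9, 0.1], [0.2, 0.8]],
              [[0.0, 1.0], [0.5, 0.5]]])
# R[s, a] = expected reward R_t for action a in state s (assumed independent of s2)
R = np.array([[1.0, 0.0],
              [0.0, 2.0]])
# pi[s, a] = p(a_t=a | s_t=s): the policy
pi = np.array([[0.5, 0.5],
               [0.3, 0.7]])
gamma = 0.9

def evaluate_policy(P, R, pi, gamma, tol=1e-10):
    """Iterate V(s) <- sum_a pi(a|s) sum_s2 P(s2|s,a) (R(s,a) + gamma V(s2))
    until successive estimates differ by less than tol."""
    V = np.zeros(P.shape[0])
    while True:
        # target[s, a, s2] = R(s, a) + gamma * V(s2)
        target = R[:, :, None] + gamma * V[None, None, :]
        V_new = np.einsum("sa,sab,sab->s", pi, P, target)
        if np.max(np.abs(V_new - V)) < tol:
            return V_new
        V = V_new

V = evaluate_policy(P, R, pi, gamma)

# Cross-check: V = r_pi + gamma * P_pi V, i.e. (I - gamma * P_pi) V = r_pi.
r_pi = (pi * R).sum(axis=1)             # expected reward under the policy
P_pi = np.einsum("sa,sab->sb", pi, P)   # state-to-state transition under the policy
V_exact = np.linalg.solve(np.eye(2) - gamma * P_pi, r_pi)
```

The iteration converges because, for $\gamma < 1$, each update is a contraction; the direct solve is only practical when the state space is small.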

Bellman equation for action value function

Now we rewrite the action value function in the same way.

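$$
\begin{aligned}
Q(s_t, a_t) &= \int_{s_{t+1}:a_\infty} G_t\, p(s_{t+1}, a_{t+1}, \ldots \mid s_t, a_t)\, d(s_{t+1}{:}a_\infty) \\
&= \int_{s_{t+1}} \left[ \int_{a_{t+1}:a_\infty} G_t\, p(a_{t+1}, \ldots \mid s_t, a_t, s_{t+1})\, d(a_{t+1}{:}a_\infty) \right] p(s_{t+1} \mid s_t, a_t)\, ds_{t+1} \\
&= \int_{s_{t+1}} \left[ \int_{a_{t+1}:a_\infty} \big(R_t + \gamma G_{t+1}\big)\, p(a_{t+1}, \ldots \mid s_{t+1})\, d(a_{t+1}{:}a_\infty) \right] p(s_{t+1} \mid s_t, a_t)\, ds_{t+1} \\
&= \int_{s_{t+1}} \big(R_t + \gamma V(s_{t+1})\big)\, p(s_{t+1} \mid s_t, a_t)\, ds_{t+1}
\end{aligned}
$$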

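We applied the same steps as before: the product rule, the Markov property, and the recursive return $G_t = R_t + \gamma G_{t+1}$. The result expresses $Q(s_t, a_t)$ in terms of the next state value $V(s_{t+1})$.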
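In the discrete case, $Q$ follows from $V$ with one sum over next states, and the two results fit together: averaging this $Q$ over the policy gives back $V$. A minimal sketch, reusing the same hypothetical 2-state, 2-action MDP (`P`, `R`, `pi` are illustrative, and `R[s, a]` is again assumed not to depend on $s_{t+1}$); $V$ is obtained from the linear form of its Bellman equation.

```python
import numpy as np

# Hypothetical 2-state, 2-action MDP (illustrative numbers only).
P = np.array([[[0.9, 0.1], [0.2, 0.8]],
              [[0.0, 1.0], [0.5, 0.5]]])   # P[s, a, s2] = p(s2 | s, a)
R = np.array([[1.0, 0.0],
              [0.0, 2.0]])                 # R[s, a], assumed independent of s2
pi = np.array([[0.5, 0.5],
               [0.3, 0.7]])                # pi[s, a] = p(a | s)
gamma = 0.9

# Exact V for this policy from (I - gamma * P_pi) V = r_pi.
r_pi = (pi * R).sum(axis=1)
P_pi = np.einsum("sa,sab->sb", pi, P)
V = np.linalg.solve(np.eye(2) - gamma * P_pi, r_pi)

# Q(s, a) = sum_s2 p(s2 | s, a) (R(s, a) + gamma V(s2)).
# The transition probabilities sum to 1, so R(s, a) comes out of the sum.
Q = R + gamma * (P @ V)   # P @ V contracts over s2, giving shape (s, a)

# Consistency with V(s) = sum_a p(a | s) Q(s, a) from the first section.
V_from_Q = (pi * Q).sum(axis=1)
```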

Let's rewrite the action value function once more, this time splitting off both $s_{t+1}$ and $a_{t+1}$.

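$$
\begin{aligned}
Q(s_t, a_t) &= \int_{s_{t+1}:a_\infty} G_t\, p(s_{t+1}, a_{t+1}, \ldots \mid s_t, a_t)\, d(s_{t+1}{:}a_\infty) \\
&= \int_{s_{t+1}, a_{t+1}} \left[ \int_{s_{t+2}:a_\infty} G_t\, p(s_{t+2}, \ldots \mid s_t, a_t, s_{t+1}, a_{t+1})\, d(s_{t+2}{:}a_\infty) \right] p(s_{t+1}, a_{t+1} \mid s_t, a_t)\, d(s_{t+1}, a_{t+1}) \\
&= \int_{s_{t+1}, a_{t+1}} \left[ \int_{s_{t+2}:a_\infty} \big(R_t + \gamma G_{t+1}\big)\, p(s_{t+2}, \ldots \mid s_{t+1}, a_{t+1})\, d(s_{t+2}{:}a_\infty) \right] p(s_{t+1}, a_{t+1} \mid s_t, a_t)\, d(s_{t+1}, a_{t+1}) \\
&= \int_{s_{t+1}, a_{t+1}} \big(R_t + \gamma Q(s_{t+1}, a_{t+1})\big)\, p(s_{t+1}, a_{t+1} \mid s_t, a_t)\, d(s_{t+1}, a_{t+1}) \\
&= \int_{s_{t+1}, a_{t+1}} \big(R_t + \gamma Q(s_{t+1}, a_{t+1})\big)\, p(a_{t+1} \mid s_t, a_t, s_{t+1})\, p(s_{t+1} \mid s_t, a_t)\, d(s_{t+1}, a_{t+1}) \\
&= \int_{s_{t+1}, a_{t+1}} \big(R_t + \gamma Q(s_{t+1}, a_{t+1})\big)\, p(a_{t+1} \mid s_{t+1})\, p(s_{t+1} \mid s_t, a_t)\, d(s_{t+1}, a_{t+1})
\end{aligned}
$$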

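Again, the rewrite replaces the integral over the whole future trajectory with an integral over a single step $(s_{t+1}, a_{t+1})$, and it exposes both the policy $p(a_{t+1} \mid s_{t+1})$ (the blue box) and the transition probability $p(s_{t+1} \mid s_t, a_t)$ (the green box).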
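This last form can be evaluated directly on action values, without going through $V$. A minimal sketch, assuming the same hypothetical 2-state, 2-action MDP (`P`, `R`, `pi` invented for illustration, `R[s, a]` independent of $s_{t+1}$): it iterates the equation until $Q$ stops changing, then checks the result against the $V$-based form $Q = R + \gamma \sum_{s_{t+1}} p(s_{t+1} \mid s_t, a_t)\, V(s_{t+1})$.

```python
import numpy as np

# Hypothetical 2-state, 2-action MDP (illustrative numbers only).
P = np.array([[[0.9, 0.1], [0.2, 0.8]],
              [[0.0, 1.0], [0.5, 0.5]]])   # P[s, a, s2] = p(s2 | s, a)
R = np.array([[1.0, 0.0],
              [0.0, 2.0]])                 # R[s, a], assumed independent of s2
pi = np.array([[0.5, 0.5],
               [0.3, 0.7]])                # pi[s2, a2] = p(a2 | s2)
gamma = 0.9

def evaluate_q(P, R, pi, gamma, tol=1e-10):
    """Iterate Q(s,a) <- sum_s2 p(s2|s,a) sum_a2 p(a2|s2) (R(s,a) + gamma Q(s2,a2)).
    Both sets of weights sum to 1, so R(s,a) can be pulled out of the sums."""
    Q = np.zeros_like(R)
    while True:
        next_q = (pi * Q).sum(axis=1)      # sum_a2 p(a2|s2) Q(s2, a2), shape (s2,)
        Q_new = R + gamma * (P @ next_q)   # P @ next_q sums over s2, shape (s, a)
        if np.max(np.abs(Q_new - Q)) < tol:
            return Q_new
        Q = Q_new

Q = evaluate_q(P, R, pi, gamma)

# Cross-check against the V-based form, with V from the linear Bellman equation.
r_pi = (pi * R).sum(axis=1)
P_pi = np.einsum("sa,sab->sb", pi, P)
V = np.linalg.solve(np.eye(2) - gamma * P_pi, r_pi)
Q_from_V = R + gamma * (P @ V)
print(np.allclose(Q, Q_from_V))  # True
```

Replacing the two sums with a single sampled $(s_{t+1}, a_{t+1})$ gives the target $R_t + \gamma Q(s_{t+1}, a_{t+1})$ used by SARSA; that link is offered as context rather than as part of the derivation above.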