Computer Networks

1.2 Network Hardware

2 types of transmission technology that are in widespread

broadcast links

- the communication channel is shard by all the machines on the network

- packets sent by any machine are received by all the others

- upon receiving a packet, a machine checks the address field

- if the packet is intended for some other machine, it is just ignored

- some broadcast systems also support transmission to a subset of the machines, multicasting

point to point links

- connect individual pairs of machines

- short message = packets

- one sender and exactly one receiver is called unicasting

1.2.1. Personal Area Networks

- PaNs let devices communicate over the range of a person

- design a short-range wireless network called Bluetooth to connect these components without wires

1.2.2. Local Area Networks

- privately owned network that operates within and nearby a single building like a home

- called enterprise networks

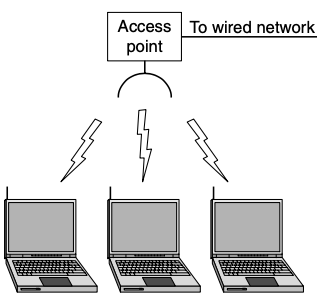

- in most cases, each computer talks to a device in the ceiling

- This device, called an AP (Access Point), wireless router, base station

- standard for wireless LANs called IEEE 802.11 known as WiFi

- Wire LANs use a range of different transmission technologies

- compared to wireless networks, wired LANs exceed them in all dimensions of performance

- just easier to send signals over a wire or through a fiber than through the air

- the topology of many wired LANs is built from point-to-point links

- IEEE 802.3 called Ethernet

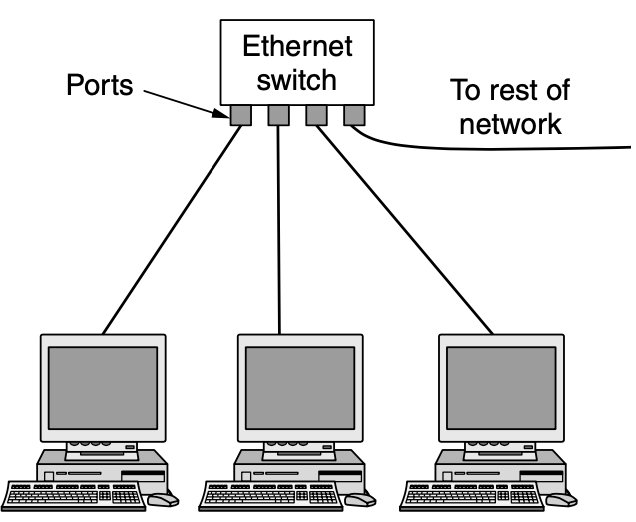

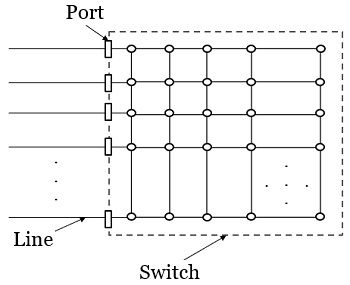

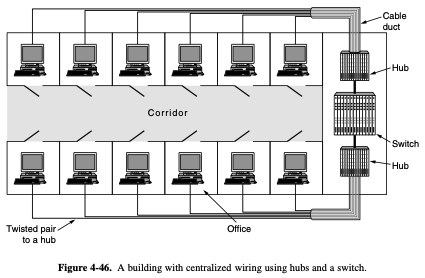

- Each computer speaks the thernet protocol and connects to a box called switch

- Switch has multiple ports, each of whih can connect to one computer

- To build larger LANs, switches can be plugged into each other using thier ports

What happens if you plug them together in a loop?

- job of the protocol to sort out what paths packets should travel to safely reach the intended computer

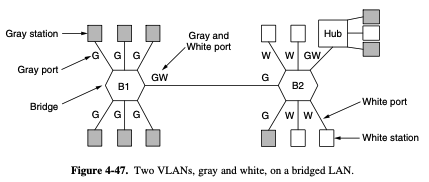

- also possible to divide one large physical LAN into two smaller logical LANs

- easier to manage the system if engineering and finance logically each had it's own network Virtual LAN , VLAN

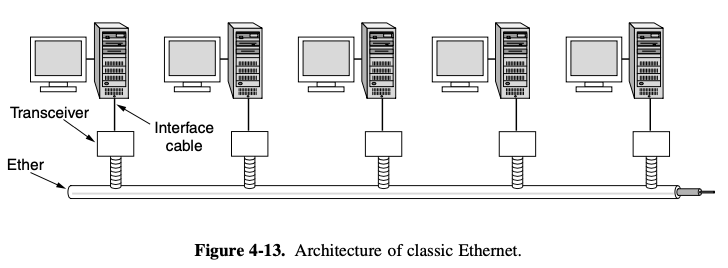

classic Ethernet

- at most one machine could successfully transmit at a time

- computers could transmit whenever the cable was idle

- two or more packets collided, each computer just waited a random time and tried later

- wireless and wired broadcast networks can be divided into static, dynamic degisn

static allocation

- devide time into discrete intervals

- use a round-robin algorithm

- wastes channel capacity when a machine has nothing to say during its allocated slot

dynamic allocation

- common channel are either centralized or decentralized

- centralized channel allocation, there are single entry

- ex) base station in cellular networks, which determins who goes next

- decentralized channel allocation,

- each machine must decide for itself whether to transmit

1.2.3. Metropolitan Area Networks

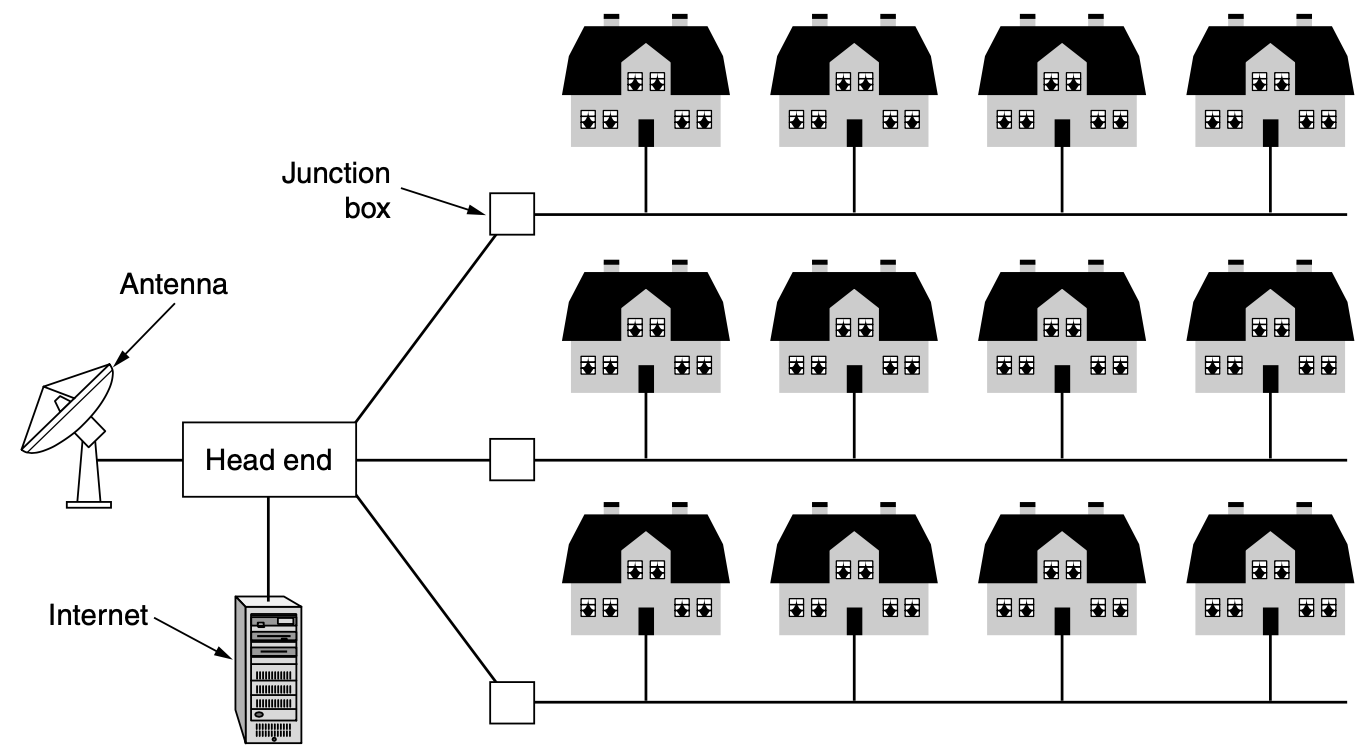

- ex) cable television networks available in many cities

- cable television is not the only MAN

- recent developments int high speed wireless Internet access have resulted in another MAN

1.2.4. Wide Area Networks

- large geographical area (ex) country or continent)

- rest of the network that connects these hosts is then called the communication subnet, just subnet for short

- Transmission lines move bits between machines

- Switching elements, are specialized computers that connect two or more transmission lines

- router : switching computers have been called by various name in the past

1.3. Network Software

1.3.1. Protocol Hierarchies

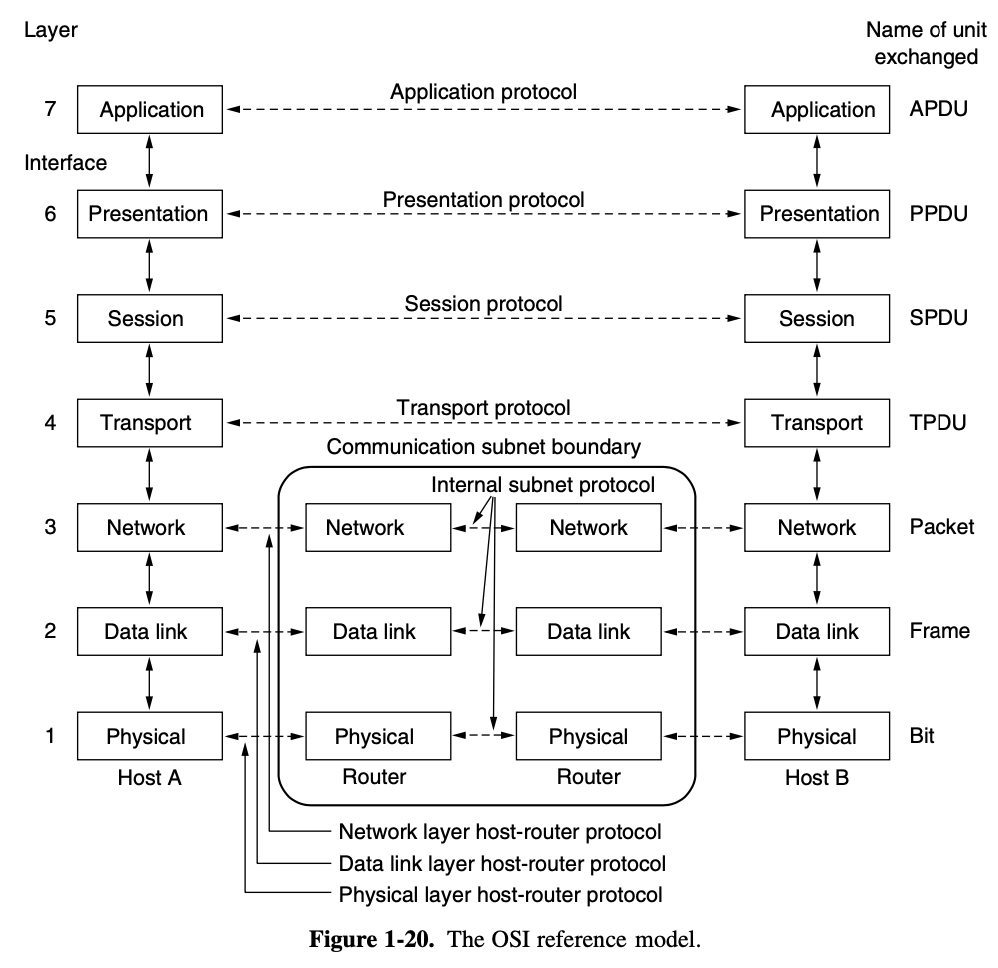

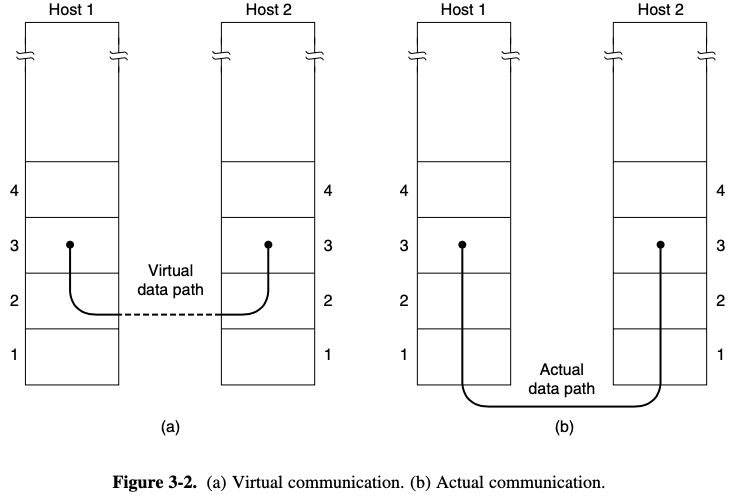

- to reduce their design complexity, most networks are organized as a stack of layers or levels

- protocol : agreement between the communicating parties

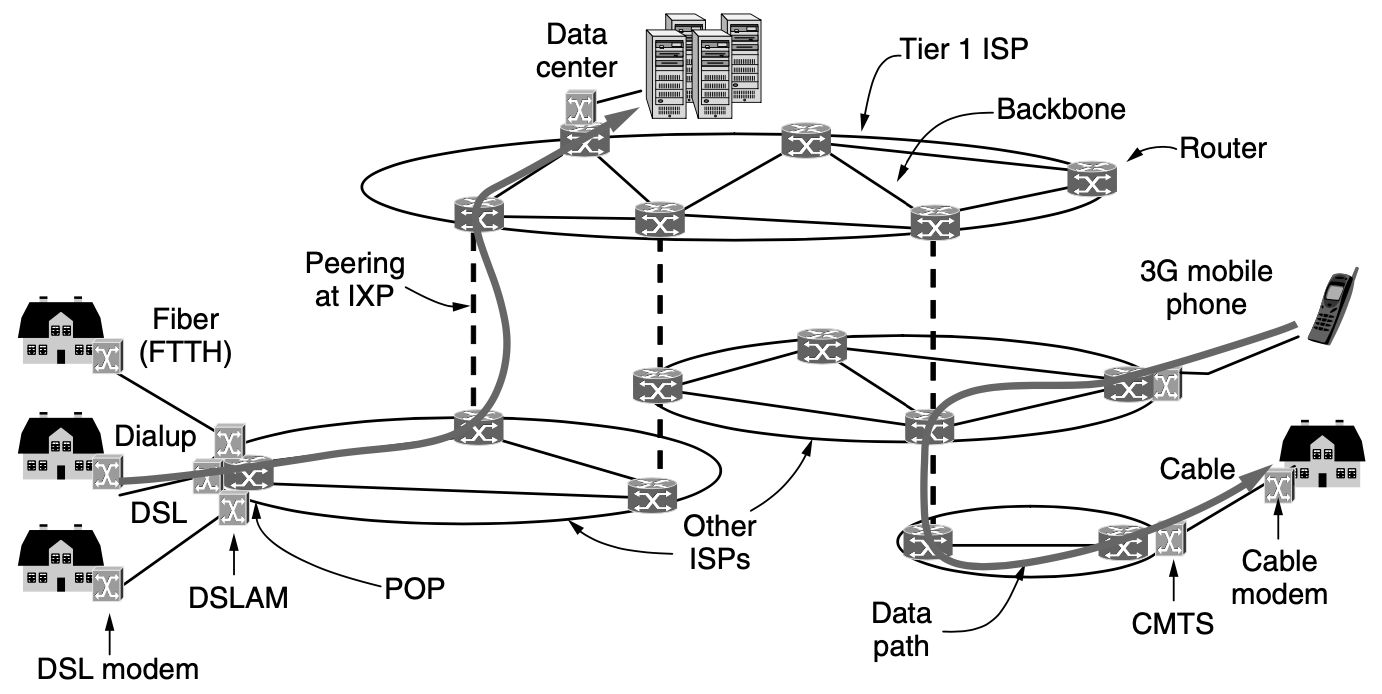

Architecture of the Internet

- DSL (Digital Subscriber Line) : reuses the telephone line that connects to your house for digital data transmission

- DSL model : convert digital packet to signal

- DSLAM (Digital Subscriber Line Access Multiplexer) : convert betwwen signals and packets

+) modem = modulator demodulator

- The device at the home and is called cable modem

- cable headend = CMTS (Cable Modem Termination System)

- internet access at much greater than dial-up speeds is called broadband

- name refers to access at much greater than dial-up used for faster networks, rather than any particular speed

- fatser internet access can be provided at rates on the order of 10 to 100 Mbps, called FTTH (Fiber to the Home)

- location at which customer packets enter the ISP network for service the ISP's POP (Point of Presence)

- long-distance transmission lines that interconnect routers at POPs in the different cities that the ISPs serve

- this equipment is called the backbone of the ISP

- ISPs connect their networks to exchange traffic at IXPs (Internet eXchange Points)

- The connected ISPs are said to peer with each other

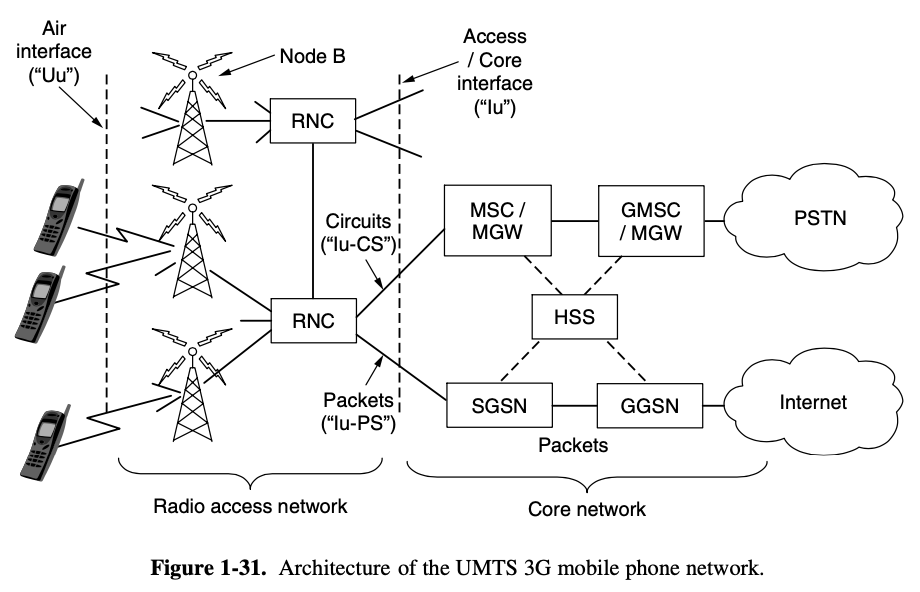

1.5.2. Third-Generation Mobile Phone Networks

- AMPS (Advanced Mobile Phone System) first generation system

- GSM (Global System for Mobile communications) : become the most widely used mobile phone syestem in the world, 2G system

- UMTS (Universal Mobile Telecommunications Systems), WCDMA (Wideband Code Division Multiple Access) : main 3G system, being rapidly deployed world wide

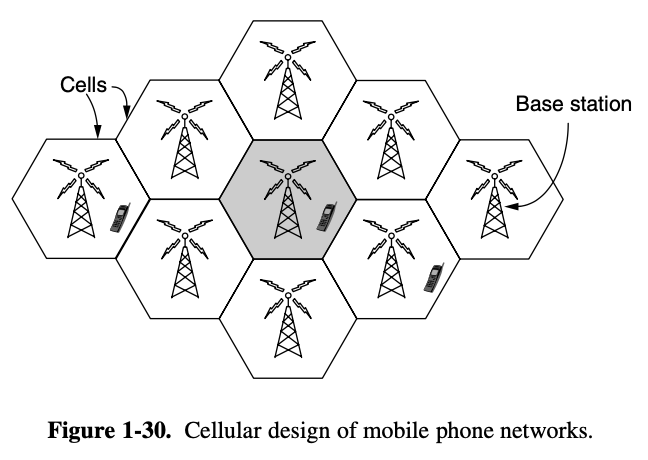

- scarcity of spectrum that led to the cellular network

- within a cell, users are assigned channels that do not interfere with each other and do not cause too much interference for adjacent cells

- this allows for good reuse of the spectrum or frequency reuse in te neighboring cells which increase the capacity of the network

- air interface, cellular base station : fancy name for the radio communication protocol, is used over the air between the mobile device

- **RNC (Radio Network Controlller)v : controls how the epectrum is used

- Node B : base station implements the air interface

- core network : rest of mobile phone network carries the traffic for the radio access network

- there is both packet and circuit switched equipment in the core network

- older mobile phone networks used a circuit-switched core in the style of the traditional phone network to carry voice calls

[legacy]

- MSC (Mobile Switching Center), GMSC (Gate-Mobile Switching Center), MGW (Media Gateway) elements that set up connections over a circuit-switched core network such as PSTN (Public Switched Telephone Network)

[data service]

- **GPRS (General Packet Radio Service)

- newer mobile phone networks carry packet data at rates of multiple Mbps

- to carry all this data, UTMS core network nodes connect directly to packet-switched network

- SGSN (Serving GPRS Support Node), GGSN (Gateway GPRS Support Node) deliver data packets to and from mobiles and interface to external packet networks such as the internet

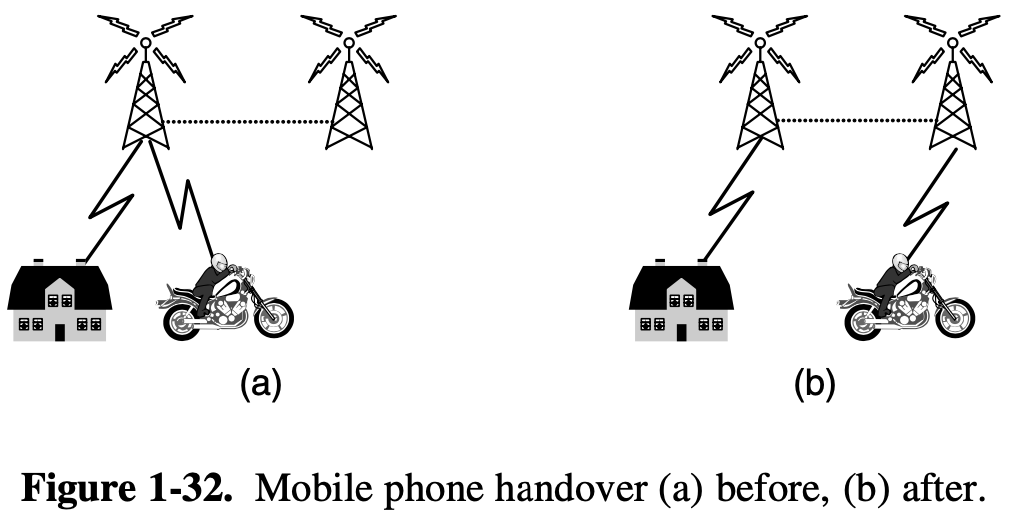

[handover, handoff]

- CDMA is possible to connect to new base station before disconnection from the old base station

- soft handover, hard handover

- hos to find mobile in the first place when there is an incomming call

- Each mobile phone network has HSS (Home Subscriber Server) in the core network

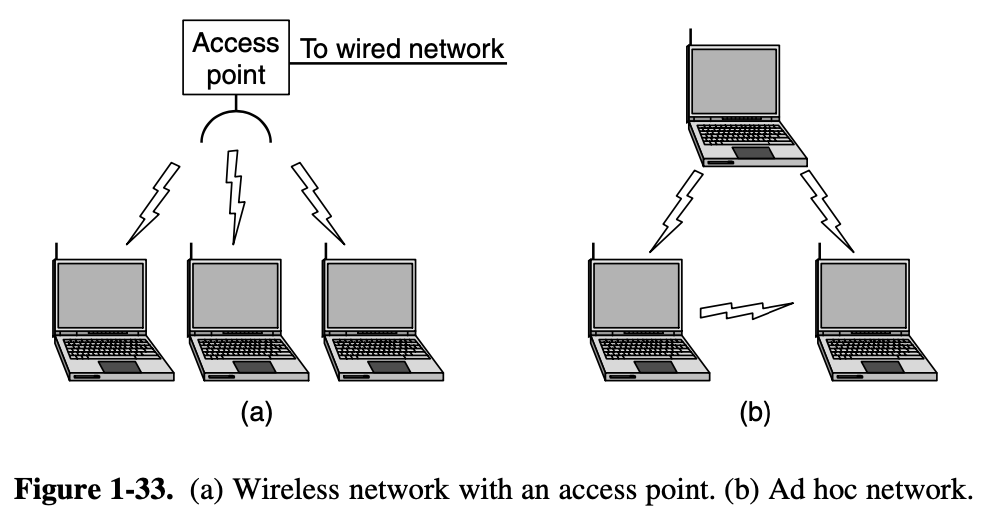

1.5.3. Wireless LANs: 802.11

- ISM (Indutrial Scientific and Medical) bands defined by ITU-R (e.g., 902-928 MHz, 2.4-2.5 GHz, 5.725-5.825 GHz)

- 802.11 networks are made up of clients called APs (access points) = base stations

- 802.11 uses a CSMA (Carrier Sense Multiple Access) scheme that draws on ideas from classic wired Ethernet

- computers wait for a short random interval before transmitting

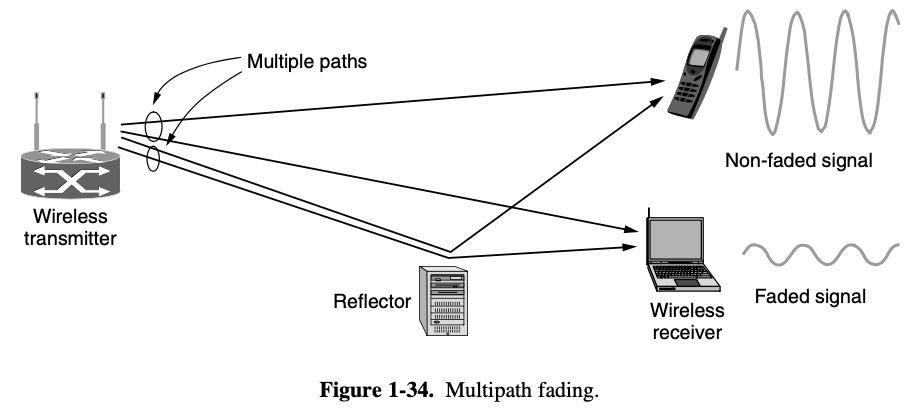

- wireless transmissions are broadcast, it is easy for nearby computers to receive packets of information that were not intended for them

- 802.11 standard included an encryption scheme known as WEP (Wired Equivalent Privacy), WiFi Protected Access, WPA, WPA2

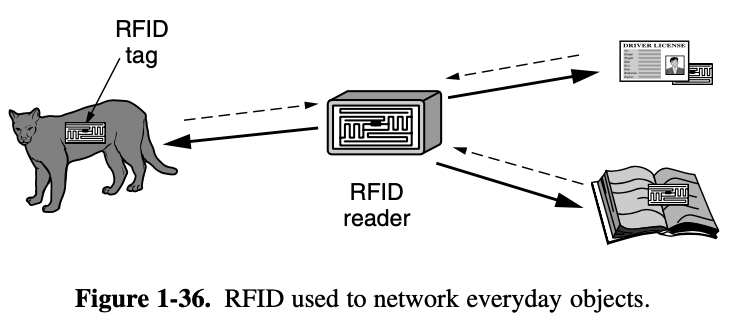

1.5.4. RFID and Sensor Networks

- RFID (Radio Frequency IDentification)

tag consists of a small microchip with a unique identifier and an antenna that receives radio transmissions - RFID readers installed at tracking points find tags when they come into range and interrogate them for their information

- passive RFID : form of radio waves by RFID readers

- active RFID : electric power source on the tag

- common form of RFID = UHF RFID (Ultra-High Frequency RFID)

- shipping pallets an some driver licenses

- tag communicate at distance of several meters by changing the way they relfect the reader signals

- the reader is able to pick up these reflections

- another popular kind of RFID = HF RFID (High Frequency RFID)

- ex) passport, credit cards, books etc

- short range, meter or less

- other frequencity = LF RFID (Low Frequency RFID)

- developed before HF RFID and used for animal tracking

- tags wait for a short random interval before responding with their identification

- which allows the reader to narrow down individual tags and interrogate them further

- RFID readers to easily track an object

- difficult to secure RFID tags

- weak measures like passwords are used

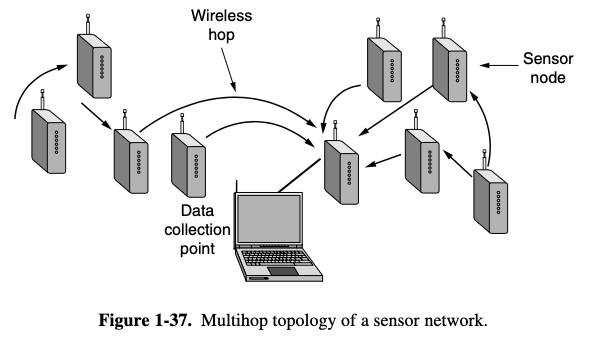

- RFID is sensor network

- deployed to monitor aspects of the physical world

- sensor nodes are small computers

- many nodes are place in the environment that is to be monitored

- nodes communicate carefully to be able to deliver thier sensor information to an external collection point

Physical layer? 왕어려움 안해

3. The data link layer

3.1. Data link layer design issues

- providing a well-defined service interface to the network layer

- dealing with transmission errors

- regulating the flow of data so that slow receivers are not swamped by fast senders

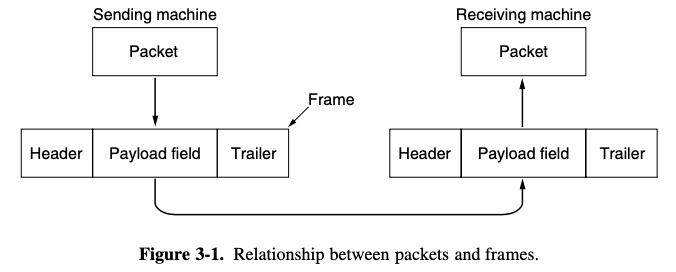

- data link layer takes the packets it gets from the network layer and encapsulates them into frames for transmission

- Each frame contains a frame header, a payload field for holding the packet

3.1.1. Services Provided to the Network Layer

- on the source machine is an entity, call it a process, in the network layer that hands some bits to the data link layer for transmission to the destination

3 reasonable possibilities that we will consider in turn are:

1. Unacknowledged connectionless service

- consists of having the source machine send independent frames to the destination machine without having the destination machine acknowledge them

- if frame is lost due to noise on the line, no attempt is made to detect the loss or recover from it in the data link layer

ex) Ethernet

- No logical connection is established beforehand or released afterward

2. Acknowledge connectionless service

- service is offered, there are still no logical connections used, but each frame sent is individually acknoledged

- sender knows whether a frame has arrived correctly or been lost

- this service is useful over unreliable channels, such as wireless systems, 802.11 (Wifi)

3. Acknowledge connection-oriented service

- the most sophisticated service the datalink layer : provide to the network layer is connection-oriented service

- each frame sent over the connection is numbered

- data link layer gurantees that each frame sent is indeed received

- appropriate over long, unreliable links (ex) stellite channel, long-distnace telephone circuit)

3.1.2. Framing

- the data link layer must use the service provided to it by th phyisical layer

- what the physical layer does is accept a raw bit atream and attempt to deliver it to the destiation

- the bit stream receivved by the data link layer is not guaranteed to be error free

- the usual approach is for the data link layer to break up the bit stream into discrete frames

- compute a short token called a checksum for each frame, and include the checksum in the frame when it is transmitted

find the start of new frames

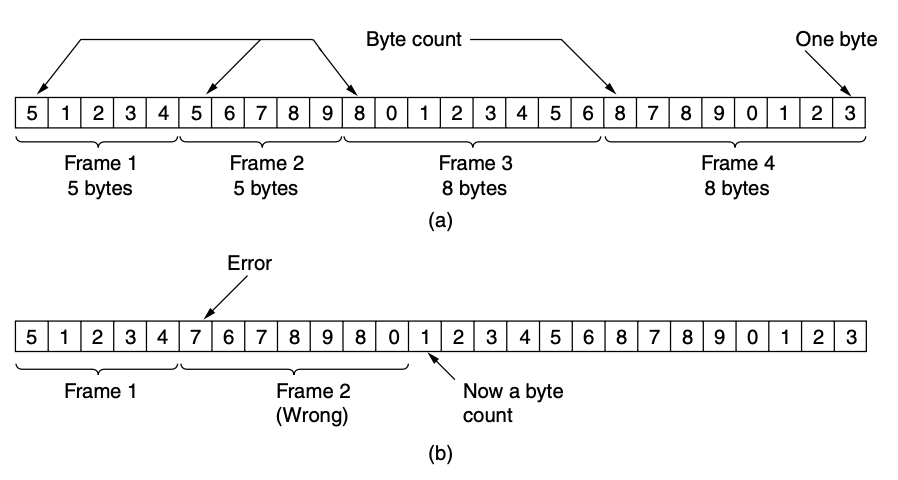

[1. Byte count]

- use a field in the header to specify the number of bytes in the frame

- count can be garbled by a transmission error

- the frame is bad, it still has no way to telling where the next frame starts

- sending a frame back to the source asking for a retransmission does not help either

- since the destination does not know how many bytes to skip over to get to the start of the retransmission

- this method is rarely used by itself

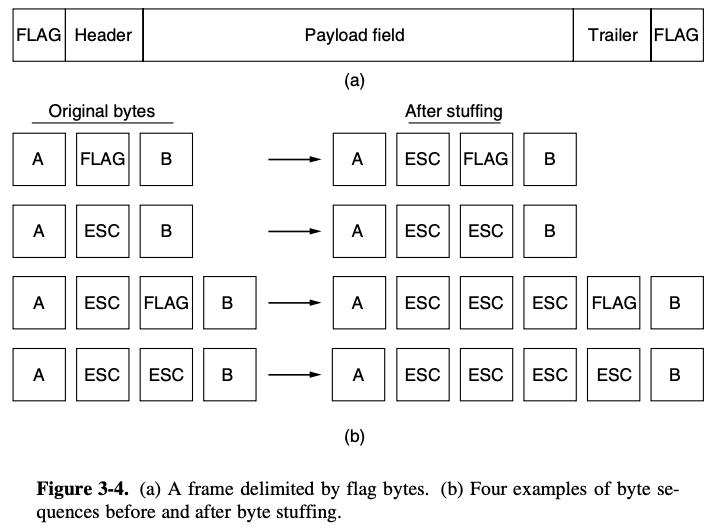

[2. Flag bytes with byte stuffing]

- resynchronization after an error by having each frame start and end with special delimiter

- the same byte, called a flag byte, is used as both the starting and ending delimiter

- the problem will be happend when the flag byte occurs in the data

- have to sender's data link layer insert a special escape byte (ESC) just before each "accidental" flag byte in the data

byte stuffing: the data link layer on the receiving end removes the escape bytes before giving the data to the network layer

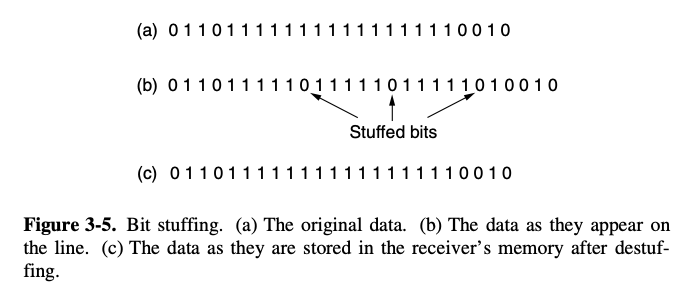

[3. Flag bits with bit stuffing]

- disadvantage of byte stuffing (tied to use of 8-bit)

- framing can be also be done at the bit level

- HDLC (High-level Data Link control) protocol

- Each frame begins and ends with a special bit pattern (0x7E)

- this pattern is flag byte

- when ever the sender's data link layer encounters five consecutive 1s in the data, it automatically stuffs a 0 bit into the outgoing bit stream

ex) USB

- when the receiver sees five consecutive incoming 1 bits followed by a 0 bit it automatically destufss the 0 bit

ex) flag pattern : 01111110

this flag is tranmitted as 011111010 but stored in the receiver's memory as 01111110

- with bit stuffing the boundary between two frames can be unambiguously recognized by the flag pattern

- with both bit and byte stuffing, a side effect is that the length of a frame now depends on the contents of the data is carries

- 100byte might be carried but with bit/byte stuffing the frame will become roughly 200 bytes long

[4. Physical layer coding violations]

- use a shortcut from the physical layer

coding violations: use some reserved signals to indicate the start and end of frames- they are reserved signals it is easy to find the start and end of frames and there is not need to stuff the data

- many data link protocol use a combination of these methods for safety

- common pattern used 802.11 called a preamble

- allow the receiver to prepare for an incoming packet

- followed by a length field in the header that is used to locate the end of the frame

3.1.3. Error control

- usual way to ensure reliable delivery is to provide the sender with some feedback about what is happening at the other end of the line

- receiver to send back sepcial control frames bearin gpositive or negative acknowledgements about the incoming frames

- hardware troubles may cause a frame to vanish completely

- the receive will not react at all, since it has no reason to react

- if the acknowledgement frame is lost, the sender will not know how to preceed

- timer is solution

- when the sender tranmit a frame it generally also starts a timer

- if either the frame or the acknowlegement is lost

- the timer will go off, alerting the sender to a potential problem

- the obvious solution is to just transmit the frame again

- when fames may be transmitted multiple times there is a danger that the receiver will accept the same frame two or more pening

- it is generally necessary to assign sequence numbers to outgoing frames, so that the receiver can distinguish retransmission from originals

whole issue of managing the timers and sequence numbers

3.1.4. Flow Control

- systematically wants to transmit frames faster than the receiver can accept them

[1. feedback-based flow control]

- the receiver sends back information to the sender giving it permission to send more data

- more common these days

- various schemes are known, but most of them use the same basic principle

- well-defined rules about when a sender may transmit the next frame

- these rules often prohibit frames from being sent until the receiver has granted permissions

[2. rate-based flow control]

- built-in mechanism that limits the rate at which senders may transmit data, without using feedback from the receiver

3.2. Error detection and correction

- redundant information to the data that is send

- including enough redundant information to enable the receiver to deduce what the transmitted data must have been

=> error-correcting code = FEC (Forward Error Correction)

- noisy channels : retransmissions are just as likely to be in error as the first transmission

- include only enough redundancy to allow the receiver to deduce that an error has occured and have it request a retransmission

=> error-detecting code

-

highly reliable, such as fiber

-

to avoid undetected errors the code must be strong enough to handle the expected errors

3.2.1. Error-Correcting Codes

- Hamming codes

- Binary convolutional codes

- Reed-Solomon codes

- Low-Density Parity Check codes

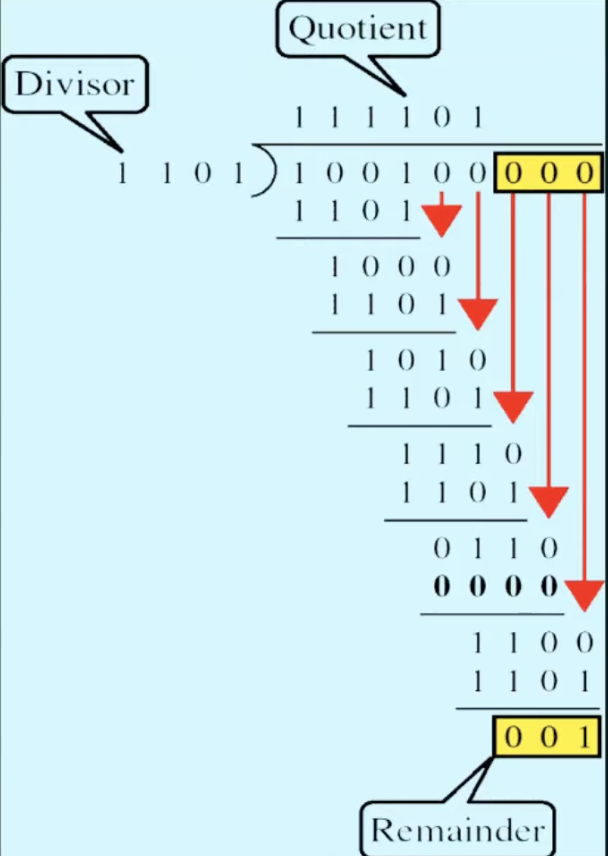

- A frame consists of m data bits and r redundant bits

block code: r check bits are computed solely as a function of the m data bitssystematic code: m data bits are sent directly along with the check bitslinear code: r check bits are computed as a linear function of the m data bits

- Let's assume total length of a block be n (n = m + r)

- we will describe this as an

(n, m)code

- An n bit unit containing data and check bits is referred to as an n-bit code word

- code rate : (# of message bits / # of transmission bits)

ex) 10001001, 10110001 (3 bit differ)

- how many bits differ : XOR the 2 codewords, count the 1 bits

Hamming distance

- number of bit positions in which 2 codewords differ

- 2 codewords are a hamming distance d apart

- it will require d single-bit errors to convert one into the other

- error-detecting and error-correcting properties of a block code depend on its Hamming distance

- to reliably detect d errors, you need a distance d + 1 code

- receiver sees an illegal codeword, it can tell that a tramsmission error has occured

- to correct d errors, you need a distance 2d + 1 code

- legal codewords are so far apart that even with d changes the original codeword is still closer than any other codeword

ex) valid codeword : 00, 11 (2 hamming distance)

00 : 2 bit error / correct

01 : 1 bit error / not know 00 or 11

10 : 1 bit error / not know 00 or 11

11 : 2 bit error / correct

ex) valid codeword : 000, 111 (3 hamming distance)

no error : 000 (correct)

1 bit error : 001, 010, 100 (error / assume 0 (correct))

2bit error : 011, 101, 110 (error / assume 1 (wrong))

3 bit error : 111 (no error / assume 1 (wrong))

So

2 hamming distance

- error detection : 1 bit error

- error correction : impossible

3 hamming distance

- error detection : 1-2 bit error

- error correction : 1 bit error

in summary

d hamming distance

- minimum hamming distance for error detection : d + 1

- minimum hamming distance for error correcton : 2d + 1

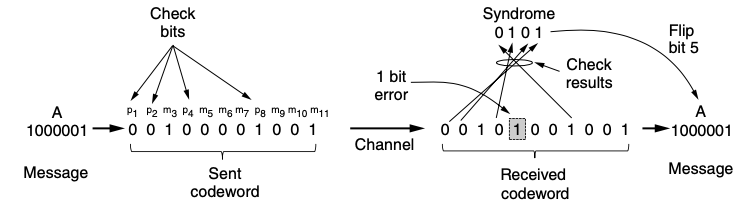

Hamming code

- the bit s of the codeword are numbered consecutively, starting with bit 1 at the left end, bit 2 to its immediate right and so on

- The bit that are powers of 2 are check bits

- the rest are filled up with the m data bits

- when the codeword arrives, the receiver redoes the check bit computations including the values of the received bits

parity check

- error detection but no error correction

- reserve one special bit + the rest carry a message

reserve one speical bit

- number of 1's (

even(0)orodd (1))

- do parity check not all the bits, do some part of bits

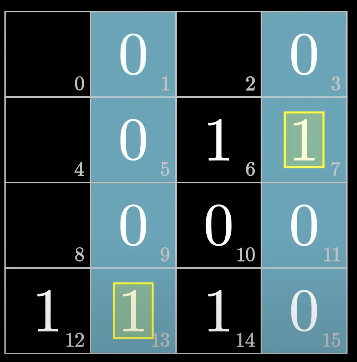

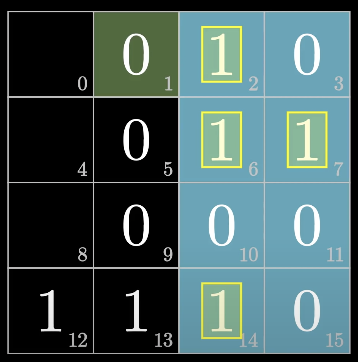

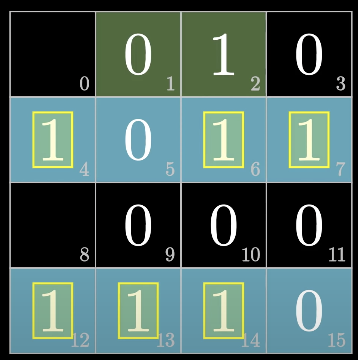

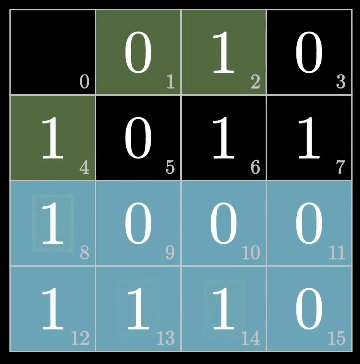

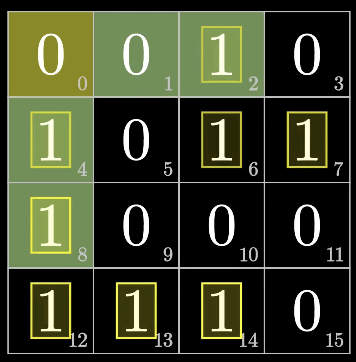

ex) do the hamming code

initial message

fill up the rest message

fill up the first bit (even)

fill up the second bit (odd)

fill up the fourth bit (odd)

fill up the eighth bit (odd)

fill up the 0th bit (even)

- if the check results are not all zero, an error has been detected

- the set of check results forms the error syndrome, is used to pinpoint and correct the error

- hamming distances are valuable for understanding block codes, and hamming codes are used in error-correcting memory

- most networks used stronger codes, the second code we will loock at is a convolutional code

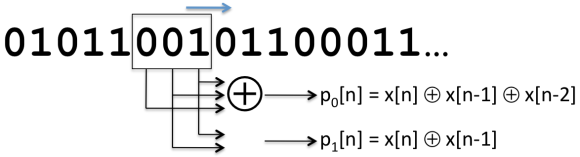

convolutional code

- this code is the only one we will cover that is not a block code

- encoder processes a sequence of input bits and generates a sequence of output bits

- the output depends on the current and previous input bits

- encoder has memory

- widely used in deployed networks, in 802.11

Reed-Solomon code

- linear block codes

- based on the fact that every n degree polynomial is uniquely deterined by n+1 points

ex) ax + b is determined by 2 points

- extra points on the same line are redundant, helpful for error correction

ex) CD, DVD, Barcode

- Reed-Solomon codes are often used in combination with other codes, such as convolutional code

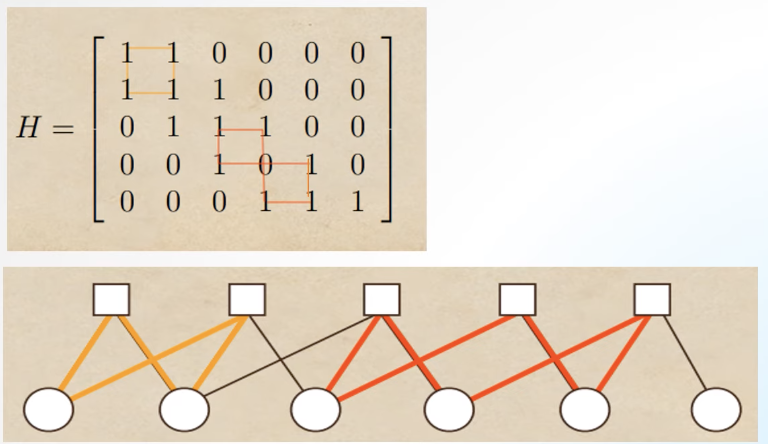

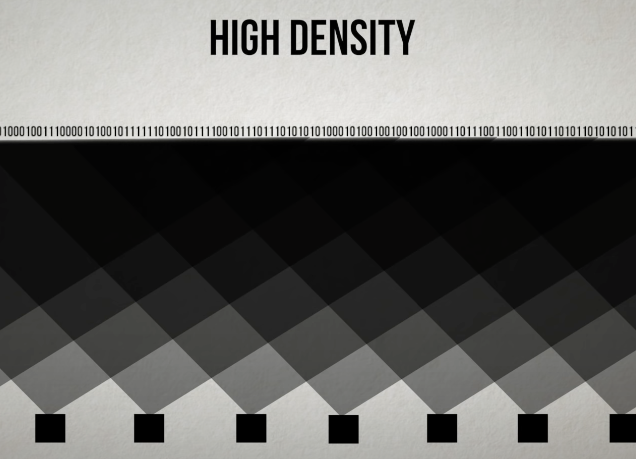

LDPC (Low-Density Parity Check)

+) Don't need to know everything in here (ㅎㅎ)

- linear block codes

- each output bit is formed from only a fraction of the input bits

- this leads to a matrix representation of the code that has a low density of 1s

+) definition

Density: maesursity amount of overlapped, how message bit is connect to the parity check bithigh density: each bits connected to many parity check bits

low density: each bits connected to few parity check bits

3.2.2. Error-Detecting Codes

- widely used on wireless links

- over fiber or high-quality copper, the error rate is much lower

- parity

- checksum

- cyclic redundancy check

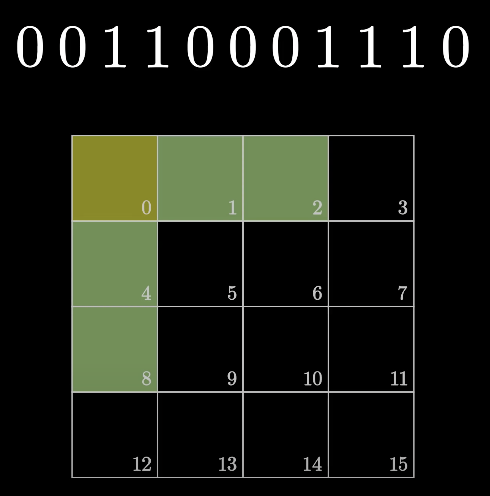

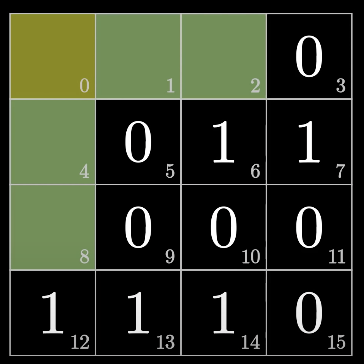

Parity

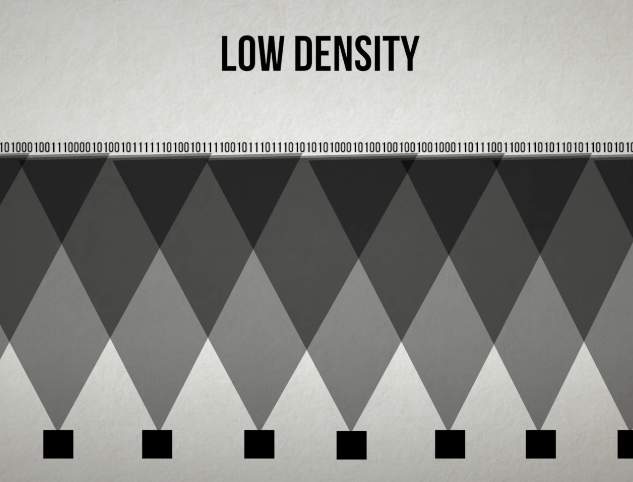

- i already explain about parity above so skip the description except image

Checksum

- closely related to groups of parity bits

- a group of parity bits is one example of a checksum

- checksum is usually placed at the end of the message

- divide bits on k blocks and sum of that

ex) 10011001, 11100010, 00100100, 10000100

10011001

11100010

00100100

+ 10000100

--------------

1000100011

00100011

+ 10

--------------

00100101

1's complement

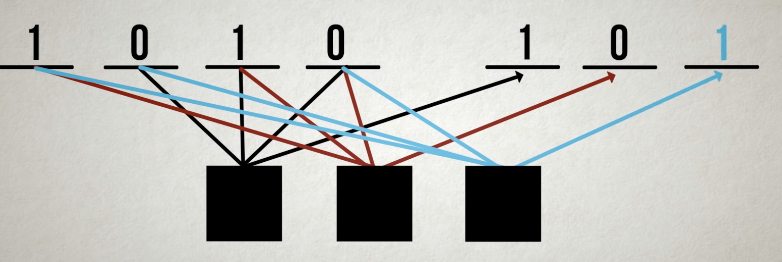

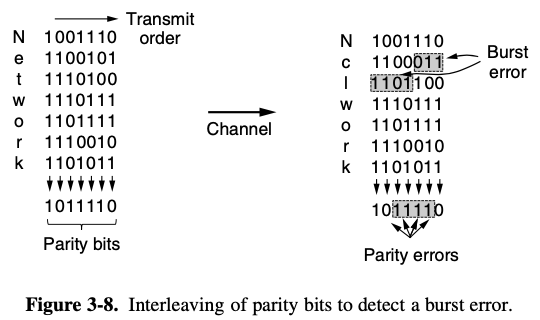

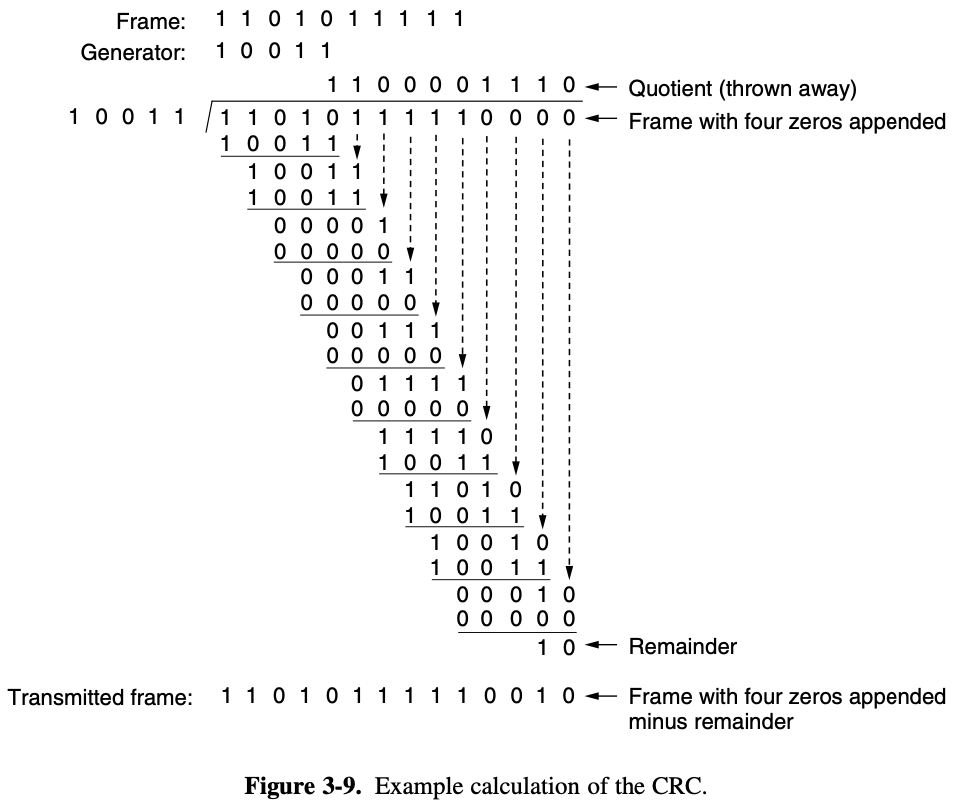

=> 11011010CRC (Cyclic Redundancy Check)

= polynomial code

- treating bit strings as representations of polynomials with coefficients of 0 and 1 only

- find the length of the divisor L

- append L-1 bits to the original message

- perform binary division operation

- remainder of the division = CRC

- results :

100100001

3.3. Elementary data link protocols

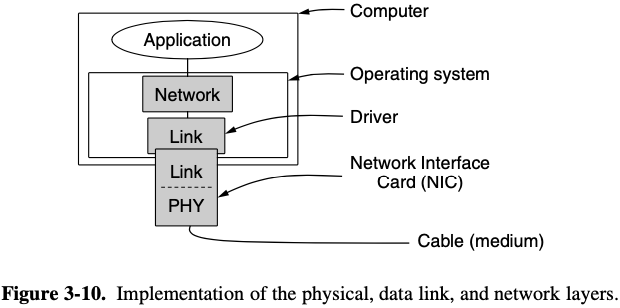

- The physical layer process and some of the data link layer process that communicate by passing messages back and forth

- The physical layer process and some of the data link layer process run on dedicate hardware called a NIC (Network Interface Card)

- th rest of the link layer process and the network layer process run on the main CPU as part of the operating system, with the software for the link layer proess often taking the form of a device driver

자세한 설명은 생략한다 (무슨 코드 설명임)

3.4. Sliding window protocols

- in most practical situation, there is a need to transmit data in both directions

- each link is then comprised of a "forward" channel (for data) and a "reverse" channel (for acknowledge)

- use same link for data in both directions

piggy backing

- The acknowledgement is attached to the outgoing data frame

- the technique of temporarily delaying outgoing acknowlegements so that they can be hooked onto the next outgoing data frame

- better use of the available channel bandwidth

- but how long data link layer wait for a packet onto piggyback the acknowledgement?

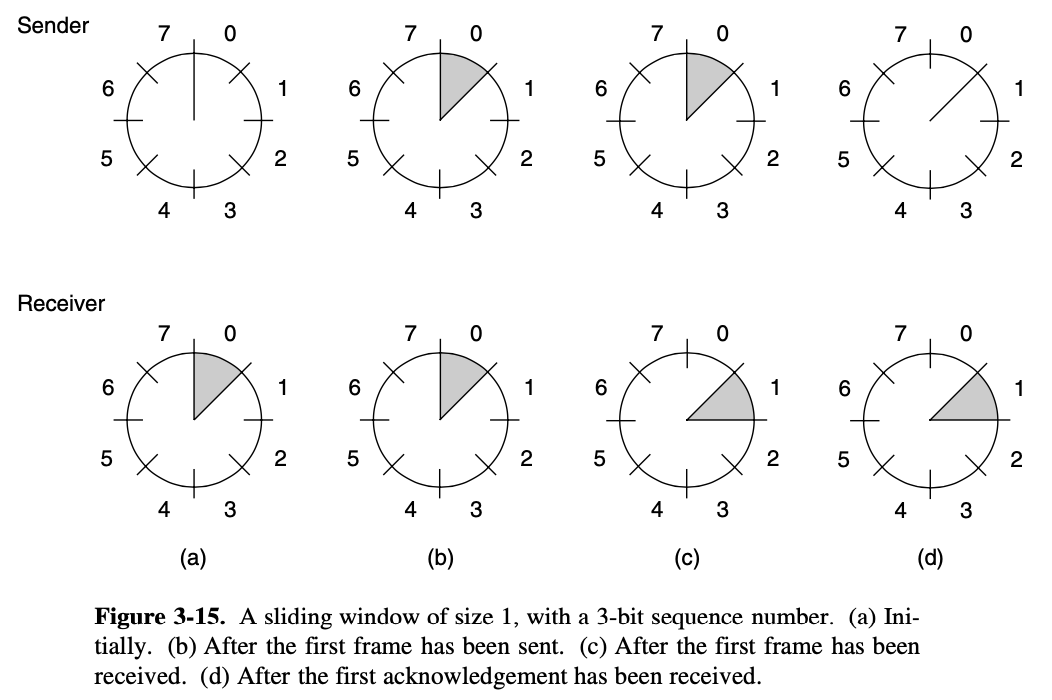

Sliding window

- bidirectional protocols

- any instant of time, the sender maintains a set of sequence numbers corresponding to frames it is permitted to send

- definitely not dropped the requirement that the protocol must deliver packets to the destination network layer in the same order

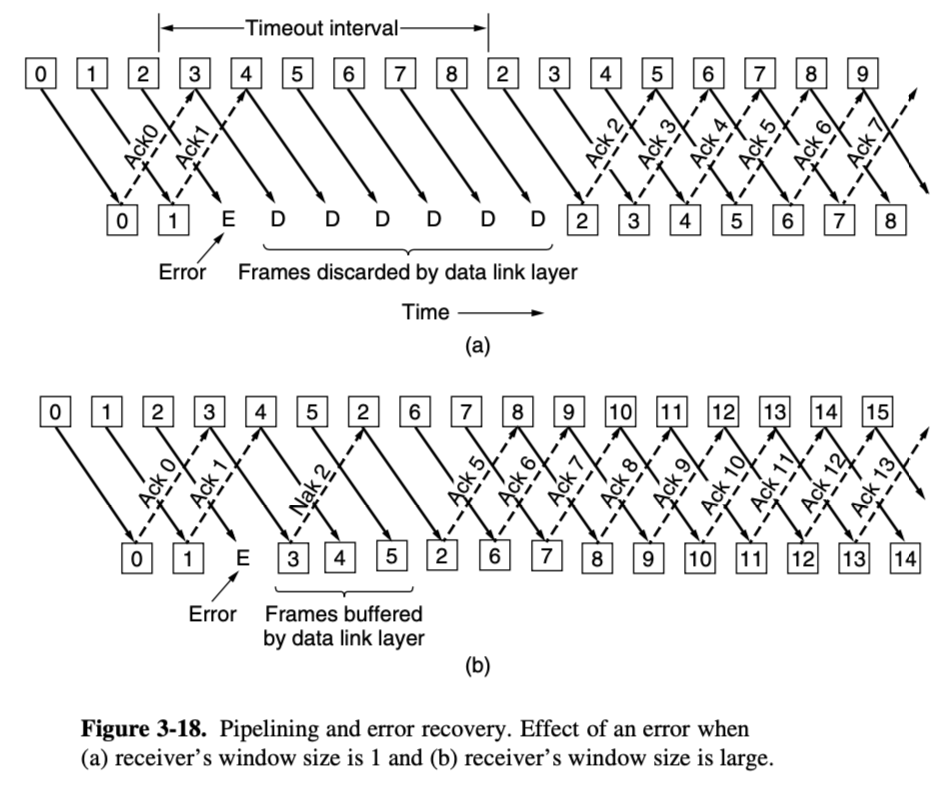

3.4.2. A Protocol Using Go-Back-N

- until now we didn't discuss about transmission time

- long transit time, high bandwidth, short frame length is disastrous in terms of efficiency

- problem described here can be viewed as a consequence of the rule

- requiring a sender to wait for an acknowledgement before sendig another frame

go-back-N

- the data link layer refuses to accept any frame except the next one it must give to the network layer

- frame 2 is damaged or lost

- the sender, unaware of this problem, continues to send frames until the timer for frame 2 expires

- then it backs up to frame 2 and start over with it, sending 2,3,4 etc

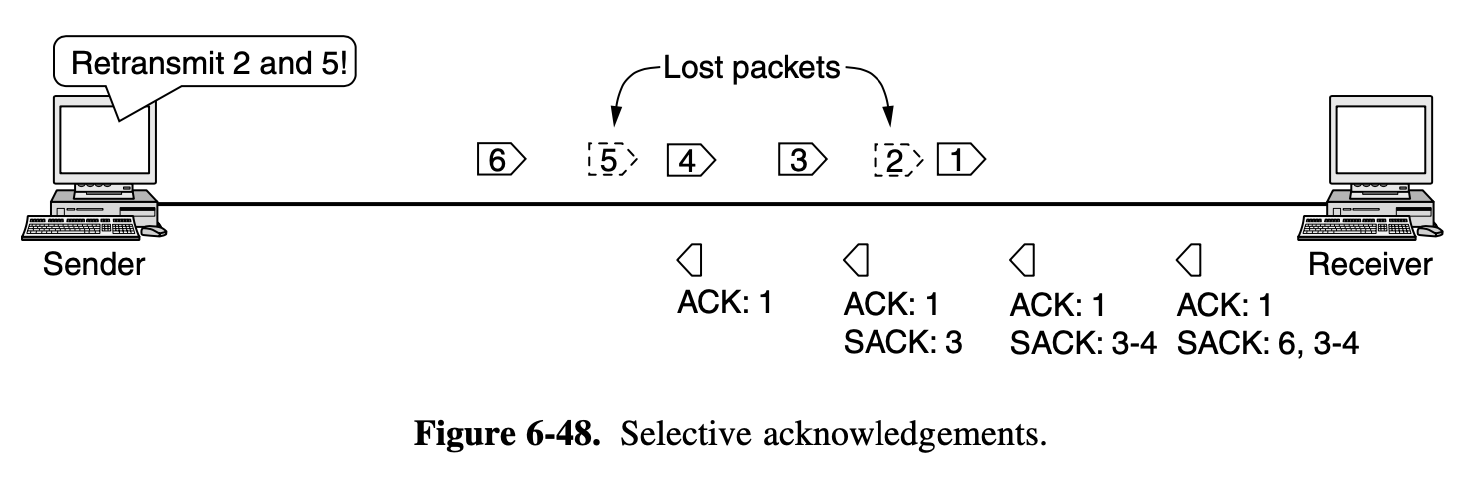

selective repeat

- when the sender times out, only the oldest unacknowledged frame is retransmitted

- is often combined with having the receiver send a negative acknowledgement when it detects an error

- NAKs simulate retransmission before the corresponding timer expires and thus improve performance

- if NAK should get lost, eventually the sender will time out for frame 2 and send it of its own accord, quite a while later

cummulative acknowledgement

- when an aknowlegement comes in for frame n (n-1, n-2 so on) are also automatically acknowledged

4. The medium access control sublayer

4.1. The channel allocation problem

- how to allocate a single braodcast channel among competing users

4.1.1. Static Channel Allocation

ex) telephone trunk

-

if there are N users, the bandwidth is divided into N equal-sized portions

-

-

however, when the number of senders is large and varying or the traffic is bursty, FDM (Frequency Division Multiplexing) presents some problems

4.1.2. Assumption for Dynamic Channel Allocation

- independent traffic

- single channel

- observable collisions

- continuous or slotted time

- carrier sense or no carrier sense

4.2. Multiple access protocol

4.2.1. ALOHA

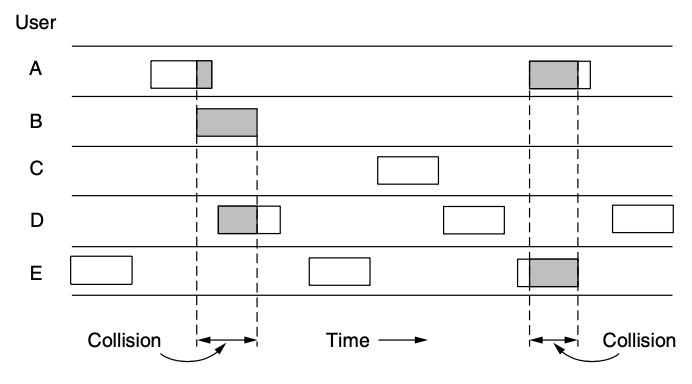

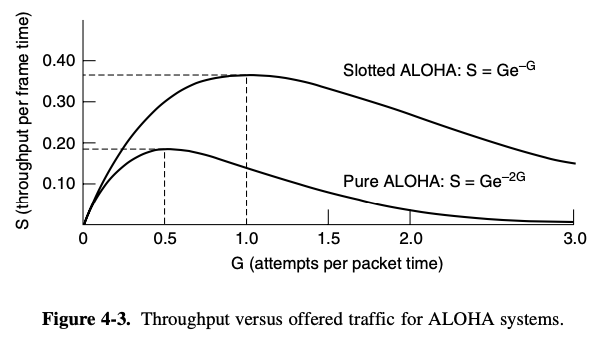

Pure ALOHA

- let users transmit whenever they have data to be sent

- if the frame was destroyed, the sender just waits a random amount of time and sends it again

- the waiting time must be random or the same frames will dollide over and over, lockstep

- systems in which multiple users share a common channel in a way that can lead to conflict are known as contention system

- whenever two frames try to occupy the channel at the same time, there will be collision and both wil be garbled

Slotted ALOHA

- divide time into discrete interval called slots

- each interval corresponding to one frame

- it is required to wait for the beginning of the next slot

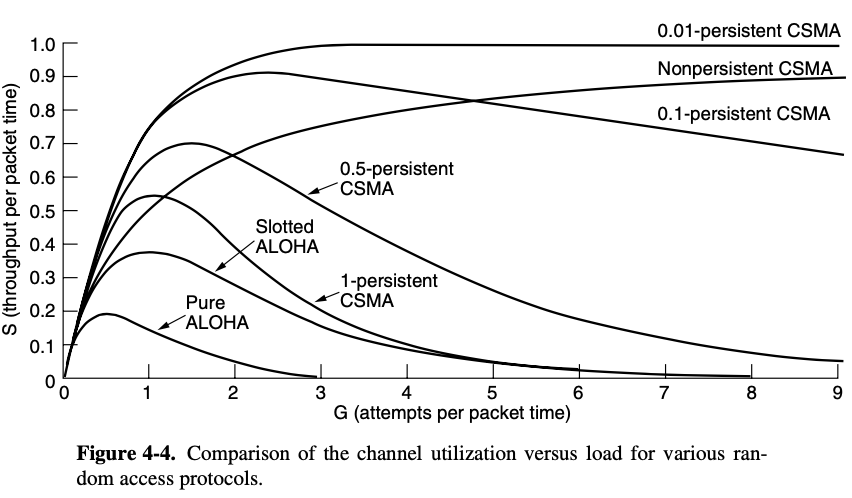

4.2.2. Carrier Sense Multiple Access Protocols

- improving performance than ALOHA

Persistent and Nonpersistent CSMA

- 1-persistent CSMA (Carrier Sense Multiple Access)

- simplest CSMA schema

- station transmits with a probability of 1 when it finds the channel idle

- when a station has data to send, it first listens to the channel to see if anyone else is transmitting at that moment

- if the chennel is idle, the stations sends its data

- if a collision occurs, the station waits a random amount of time and starts all over again

nonpersistent CSMA

- less greedy than in the previous one

- if the channel is already in use, the station does not continually sense it for the purpose of seizing it immediately upon detecting the end of the previous transmission

- instead it waits a random period of time and then repeats the algorithm

p-persistent CSMA

- when a station becomes ready to send, it sense the channel

- if it is idle, it transmits with a probability p

- important to realize that collision detection is an analog process

- worth devoting some time to looking as CSMA/CD in detail

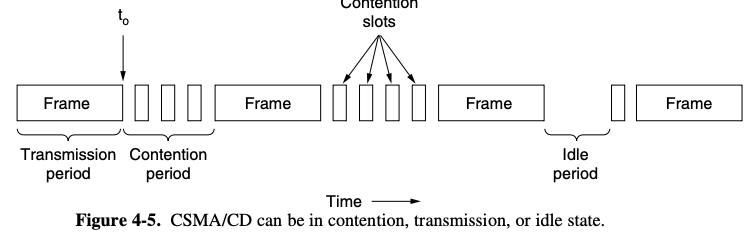

CSMA with Collision Detection (CSMA/CD)

- basis of the classic Ethernet LAN (data-link : switch, hub)

- if a station detects a collision, it aborts its transmission, waits a random period of time and then tries again

4.2.3. Collision-Free Protocols

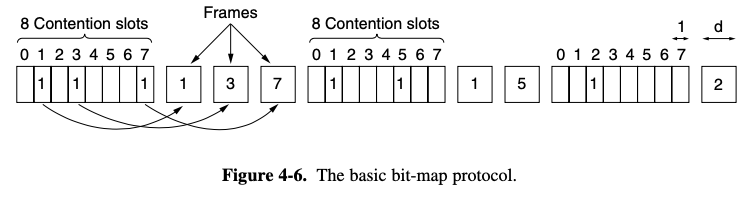

A Bit-Map Protocol

- basic bit-map method : each contention period consists of exactly N slots

- the essence of the bit-map protocol is that it lets every station transmit a frame in turn in a predefined order

- if station 0 has frame to send, it transmit a 1 bit during the slot 0

- no other station is allowed to transmit during this slot

- regardless of what station 0 does, station 1 gets the opportunity to transmit a 1 bit during slot 1

- the desire to transmit is braodcast before the actual transmission are called reservation protocol

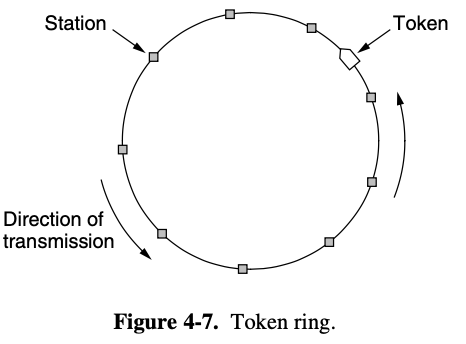

token passing

- small message called token from one station to the next in the same predefined order

- the token represents permission to send

token ring protocol

- network is used to define the order in which stations send

- this protocol is called token buse

- the performace of token passing is similar to that of the bit-map protocol

- after sending a frame each station must wait for all N stations

- Token rings have cropped up as MAC protocols with some consistency

- a much faster token ring called FDDI (Fiber Distributed Data Interface)

- standarize the mix of metropolitan area rings in use by ISPs RPR (resilient Packet Ring)

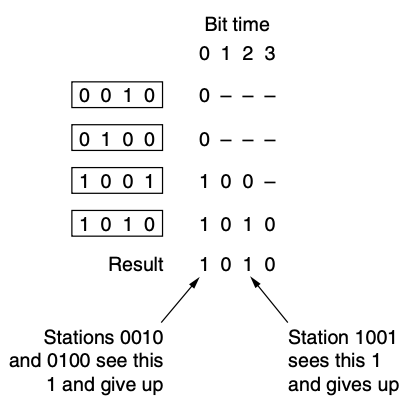

Binary Countdown

- a problem with the bit-map protocol and token passing, is that the overhead is 1 bit per station

- we can do better than by using binary station addresses

- to avoid conflicts, an arbitration rule must be applied

- a station sees that a high-order bit position that is 0 in its address has been overwritten with 1, it give up

ex) if stations 0010, 0100, 1001, 1010

- binary countdown is an example of a simple, elegant and efficient protocol that is waiting to be rediscovered

- hopefully it will find a new home some day

4.2.4. Limited-Contention Protocol

- used contention and collision-free protocols, arriving at a new protocol that used contention at low load to provice low delay

- but used a collison-free technique at high load to provide good channel efficiency

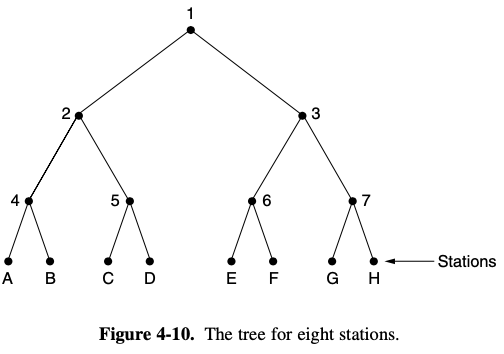

- the trick is how to assign stations to slots

- what we need is a way to assign stations to slots dynamically

the adpative tree walk protocol

- 그중 노드 0에서 성공적인 전송이 이루어지면 첫 번째 경쟁 슬롯 0에서 모든 스테이션들의 채널 점유가 시도가 허락된다.

- 모든 스테이션 중 하나의 전송이 성공하면 노드상에 문제가 없는 것이고 만약 충돌이 일어나면 트리구조에서 노드 1 밑으로 내려가는 스테이션들 즉 왼쪽 우선순위에 의해 경쟁이 일어난다.

- 노드 1에서만 경쟁이 일어나지 않고 2에서도 동시에 경쟁이 일어난다면 결국 충돌이 일어날 확률은 슬롯 0과 같아지게 된다.

- 이는 어느 스테이션에서 문제가 발생했는지 오류 검출이 어려워지며 노드 활용의 효율성도 낮아지는 결과를 가져온다.

- 이러한 문제를 해결하기 위한 방법으로 ATW Protocol을 사용하게 되었다.

4.2.5. Wireless LAN Protocols

- A system of laptop computers that communicate by radio can be regarded as a wireless LAN

- an office building with access points (APs) strategically placed around the building

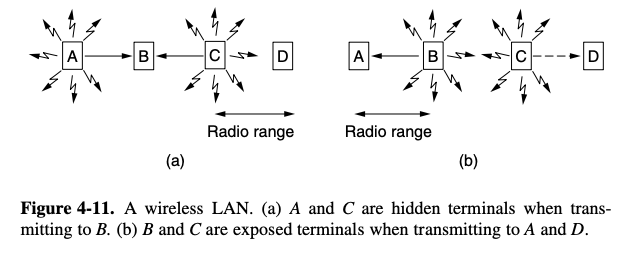

important difference between wireless LANs and wired LANs

- wireless LAN may not be able to transmit frames to or receive frames from all other stations

- limited radio range of the stations

- A naive approach to using a wireless LAN might be to try CSMA

- just listen for other transmission and only transmit if no one else is doing so

[first consider : A, C transmit to B]

- if A send and then C immediately sense the medium, it will not hear A because A is out of range

- C will falsely conclude that it can transmit to B

- if C dose start transmitting, it will interfere at B, wiping out the frame from A

- the problem of collision from happening because it competitor for the medium

- because the competitor is too far away is called the hidden terminal problem

[second consider : B transmitting to A, at the same time that C want to transmit to D]

- if C sense the medium , it will hear a transmission and falsely conclude that it may not send to D

- In fact, such a transmission would cause bad reception only in to zone between B, C, where neither of the intended receivers is located

- exposed terminal problem

- the difficulty is that

before starting a transmission, a station really want to know whether there is radion activity around the receiver

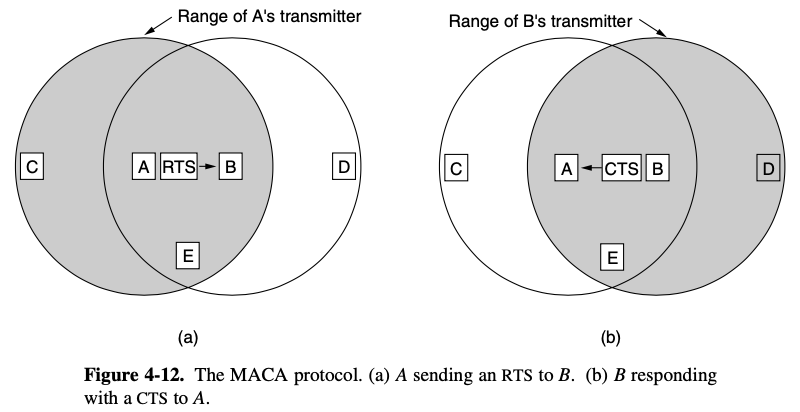

MACA (Multiple Access With Collision Avoidance)

- ealry influential protocol

[basic idea]

- sender to stimulate the receiver into outputting a short frame,

- so stations nearby can detect this transmission

- avoid transmitting for the duration of the upcoming data frame

- A starts by sending an RTS (Request To Send) frame to B

- B replies with a CTS (Clear To Send) frame

- receipt of the CTS frame, A begins transmission

- C is Within range of A but not within range of B

- it hears the RTS from A but not the CTS from B

- D is within range of B but not A

- it does not hear the RTS but does hear the CTS

- hearing CTS tips it off that it is close to a station that is about to receive a frame

- so it defers sending anything until that frame is expected to be finished

- E hears both control message and like D, must be silent until the data frame is complete

- Despite these precautions, collisions can stil occur

- in the event of a collision, an unsuccessful transmitter waits a random amount of time and tries again later

4.3. Ethernet

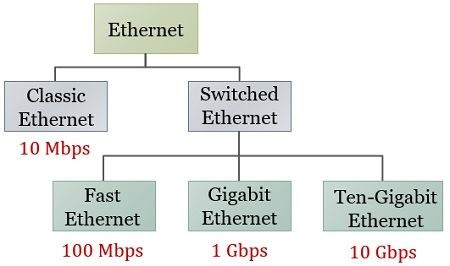

[2 kind of Ethernet exist]

classic Ethernet

- solves the multiple access problem using the techniques

- original form and ran at rates from 3 to 10 Mbps

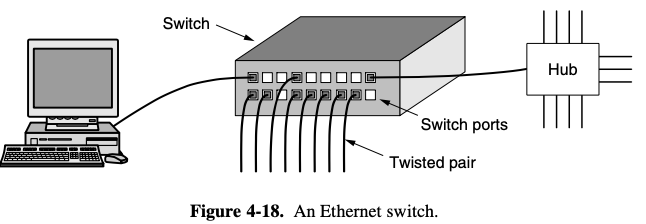

switched Ethernet

- devices called switches are used to connect different computers

- Ethernet has become and runs at 100, 1000, 10000 Mbps

only switched Ethernet is used nowadays

4.3.1. Classic Ethernet Physical Layer

- snaked around the building as a single long cable to which all the computers were attached

- to allow larger networks, multiple cables can be connected by

repeaters - physical layer device that receive, amplifies, and retransmit signals in both directions

4.3.2. Classic Ethernet MAC Sublayer Protocol

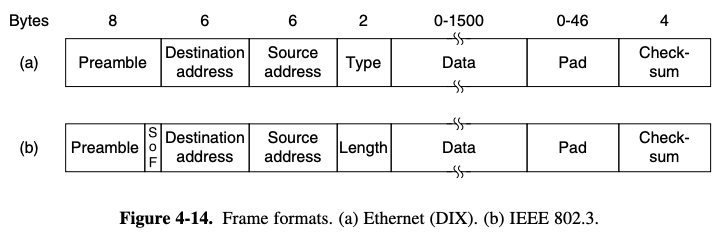

multicasting: sending to a group of stations, more selectivebraodcasting: 1 bits is reserved, accepted by all stations on the network

CSMA/CD with Binary Exponential Backoff

- use 1-presistent CSMA/CD algorithm

- binary exponential backoff : chosen to dynamically adapt (0 and 2^i -1 (i = collision number)) to the number of stations trying to send

- after 10 collision have been reached, the randomization interval is frozen at a maximum of 1023 slots

- average wait after a collision would be hundereds of slot times, introducing significant delay

4.3.4. Switched Ethernet

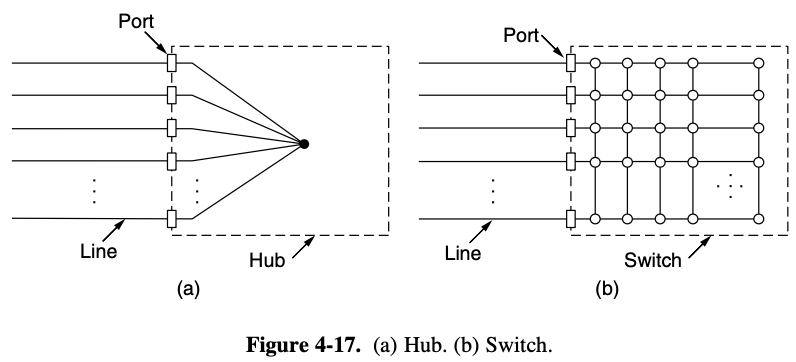

hubsdo not increase capacity because they are logically equivalent to the single long cable of classic Ethernet- As more and more stations are added, each station gets a decreasing share of the fixed capacity

- the heart of

switchsystem is a switch containing a high-speed backplane that connects all of the prots

- when a switch port receives an Ethernet frame from a station

- the switch checks the Ethernet addresses to see which port the frame is destined for

- switch knows the frame's destination port

what happens if more than one of the stations or ports wants to send a frame at the same time?

- in a hub, all stations are in the same collision domain

- the must use CSMA/CD algorithm to schedule their transmissions

- in a switch, each port is its own independent collision domain

- cable is a full duplex (station, port can send a frame at the same time)

- collision are now impossible and CSMA/CD is not needed

[Switch improves performance]

- no collisions

- multiple frames can be sent simultaneously

[Change in the ports]

- have security benefis

- most LAN interfaces have a promiscuous mode

- all frames are given to each computer, not just those addressed to it

- with a hub, every computer that is attached can see the traffic sent between all of the other computers

- Spies and hackers will love it

- with a switch, traffic is forwarded only to the ports where it is destined

- the restriction provides better isolation so that traffic will not easily escape and fall into the wrong hands

4.4. Wireless LANS

4.4.1. The 802.11 Architecture and Protocol Stack

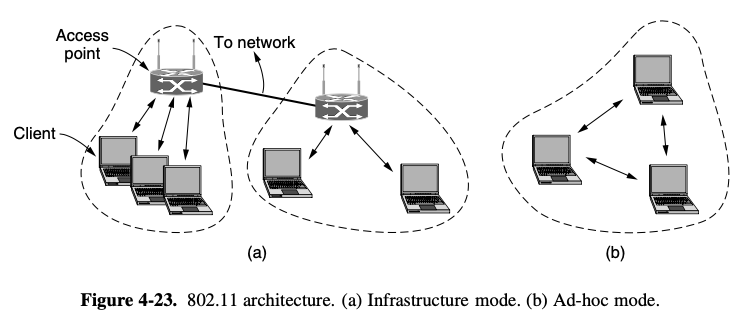

[2 mode]

- AP (Access Point)

- each client is associated with an AP (Access Point), is in turn connected to the other network

- the client sends and receives its packets via the AP

- serveral access points may be connected together, typically by a wired network called a distribution system

- ad hoc neetwork

- collection of computers that are associated so that they can directly send frames to each other

- no access point, not very popular

802.11 관련 설명이 정말 길게 나오지만 나는 알고 싶지도, 정리하고 싶지도 않음

4.4.5. Services

association service

- used by mobile station to connect themselves to APs

- it is used just after a station moves within radio range of the AP

- upon arrival, the station learns the identity and capabilities of the AP

- either from beacon frames or by directly asking the AP

reassociation service

- let a station change its preferred AP

- facility is useful for mobile stations moving from one AP to another AP

-in the same extended 802.11 LAN, like a handover in the cellular network

- stations must also authenticate before they can send frames via the AP

- but authentication is handled in different ways depending on the choice of security scheme

WPA2

- The recommended scheme, called WPA2 (Wifi Protected Access 2)

- with WPA2, the AP can talk to an authentication server that has a username and password database to determine if the station is allowed to access the network

WEP (Wired Equivalent Privacy)

- the schema that was used before WPA

- authentication with a preshared key happens before association

- software to crack WEP passwords is now freely available

- wireless is a broadcast signal

- for information sent over a wireless LAN to be kept confidential, it must be encrypted

- the encryption algorithm for WPA2 is based on AES (Advanced Encryption Standard)

- To handle traffic with different priorities, there is a QOS traffic scheduling service

- transit power control : gives stations the information they need to meet regulatory limits on transmit power that vary from region to region

- dynamic frequency selection : give stations the information they need to avoid transmitting on frequencies in 5-GHz band

4.5. Braodband wireless

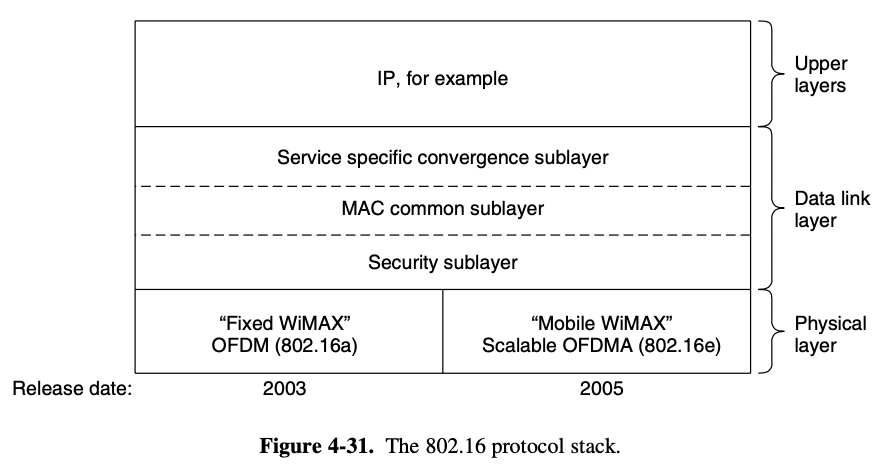

- IEEE 802.16, WiMAX (Worldwide Interoperability for Microwave Access)

4.5.1. Comparison of 802.16 with 802.11 and 3G

-

WiMax

= 802.11 (wirelessly connecting devices to the internet) + 3G

= 4G -

802.16 was designed to carry IP packets over the air and to connect to an IP-based wired network with a minimum of fuss

-

The packets may carry peer-to-peer traffic, VoIP calls, or streaming media to support a range of applications

- WiMAX is more like 3G

- WiMAX base stations are more powerful than 802.11 AP

- To handle weaker signals over larger distances

- 4G = LTE (Long Term Evolution)

3G cellular network: based on CDMA and suppor voice and data4G cellular network: based on OFDM with MIMO (target data, with voice as just one application)

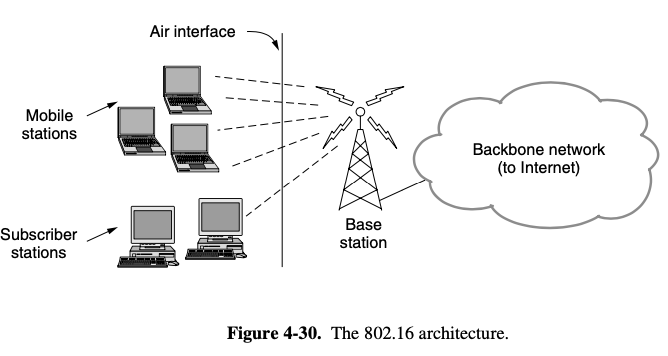

4.5.2. The 802.16 Architecture and Protocol Stack

- base stations connect directly to the provider's backbone network

- base stations communicate with stations over the wireless air interface

subscriber station: a fixed locatio (ex) broadband internet access for homes)mobile station: receive service while they are moving (ex) WiMAX)

여기도 마찬가지로 802.16 에 대해 최선을 다해 설명하고 있지만, 듣고 싶지 않음

4.6. Bluetooth

- Bluetooth protocols let these devices find and connect to eah other an act called pairing, and securely transfer data

4.6.1. Bluetooth Architecture

- piconet : consists of a master node and up to seven active slave nodes with in a distance of 10 meters

- multiple piconets can exist in the same room

- can event be connected via a bridge node that takes part in multiple pinconets

- interconnected connection of pinconets : scatternet

- 7 active slave nodes in pinconet can be up to 255 parked nodes in the net

- devices that master has switched to a low power state to reduce the drain on their batteries

- in parked state, a device cannot do anything except respond to an activation or beacon signal from the master

- 2 intermediate power state : hold & sniff

- the reason for the master/slave design : the designer intended to facilitate the implementation of complete Bluetooth chips for under $5

- slaves : fairly dumb, basically just doing whatever the master tell them do

- pinconet is a centralized TDM system : master controlling the clock and determining which device gets to communicate in which time slot

4.6.2. Bluetooth Applications

- Bluetooth SIG specifies particular applications to be supported and provides different protocol stacks for each one

- 25 applications, which are called profiles

- very large amount of complexity

profiles

- 6 of profiles : for different uses of audio and video

- other profiles : for streaming stero-quality audio and video (ex) portable music player to headphone)

- hume interface device profile : connecting keyboards and mice to computers

- other profiles : let a mobile phone or other computer receive images from a camera or send images to a printer

- for more interest is a profile to use a mobile phone as a remote control for a (Bluetooth-enabled) TV

- still other profiles (ㅋㅋㅋ) : enable networking (ad hoc network or remotely access another network, via access point)

- we will skip the rest of the profiles

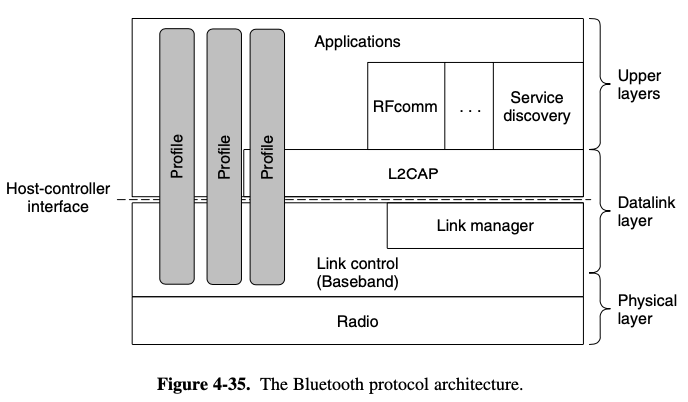

4.6.3. The Bluetooth Protocol Stack

- many protocols grouped loosely into the layer

bottom layer

- physical radio layer > radio transmission and modulation

link control

- analogous to the MAC sublayer, includes elements of the physical layer,

- how the master controls time slot and how these slots are grouped into frames

- 2 protocols that use the link control protocol

- the link manager handles the establishment of logical channels between devices

ex) power management, pairing, encryption etc - it lies below the host controller interface line

L2CAP (Logical Link Control Adaptation Protocol)

- link protocol above the line

- frames variable-length messages and provides reliability if needed

- many protocols use L2CAP

- the service discovery protocol is used to locate services within the network

RFcomm (Radio Frequency communication)

- emulates the standard serial port found on PCs for connecting the keyboard, mouse and modem among other devices

top layer

- applications are located

- profiles are represented by vertical boxes

- they each define a slice of the protocol stack for a particular purpose

4.6.4. The Bluetooth Radio Layer

- radio layer moves the bits from master to slave (vice versa)

- low-power system with a range of 10 meters operating

- the band is divided into 79 channels of 1 MHz each

- To coesist with other networks using the ISM band

adaptive frequency hopping

- bluetooth to adapt its hop sequence to exclude channels on which there are other RF signals

4.6.5. The Bluetooth Link Layers

- closest thing Bluetooth has to a MAC sublayer

- it turns the raw bit stream into frames, defines some key formats

secure simple pairing

- enables users to confirm the both devices are displaying th same passkey

- or to observe the passkey on one device and enter it into the second device

- once pairing is complete, the link manager protocol sets up the links

2 main kind of links exist to carry user data

- SCO (Synchronous Connection Oriented) link

- real-time data (ex) telephone connections)

- fixed slot in each direction

- ACL (Asynchronous ConnectionLess) link

- used for packet-switched data (available irregular intervals)

- frames can be lost and may have to retransmitted

the data sent over ACL links com from the L2CAP layer

L2CAP layer

- accept packets of up to 64KB from the upper layers and breaks them into frame for transmission

- handles the multiplexing and demultiplexing of multiple packet sources

- handles error control and retransmission

- enforce quality of service requirements between multiple links

4.7. RFID (Radio Frequency IDentification)

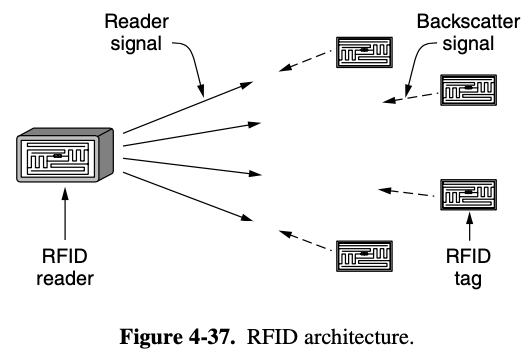

4.7.1. EPC Gen 2 Arcihtecture

- tags & readers

- RFID tags are small, inexpensive devices

- have unique 69-bit EPC identifier and a small amount of memory that can to read and written by the RFID reader

- memory might be used to record the location history of an item

- the tags look like stickers that can be placed on

- most of the sticker is taken up by an antenna that is printed onto it

- a tiny dot in the middle is the FRID intergrated circuit

- the RFID tags can be integrated into an object

- no battery and must gather power from the radio transmission of a nearby RFID reader to run

- the readers are the intelligence in the system

- analogous to base stations and access points in cellular and WiFi networks

- Readers are much more powerful than tags

- they have thier own power source, often have multiple antennas

- incharge of when tags send and receive messages

- main job of the reader is to inventory the tags in the neighborhood

- discover the identifers of the nearby tags

4.7.2. EPC Gen 2 Physical Layer

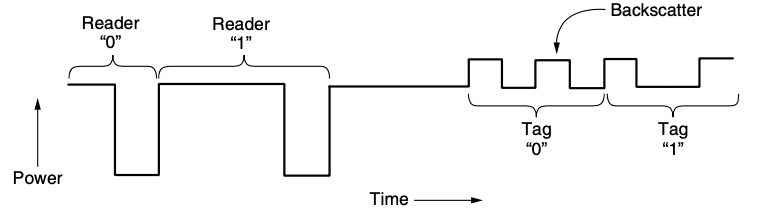

- reader is always transmitting a signal (regardless of whether it is the reader or tag that is communicating)

- for the tags to send bits to the reader

- the reader transmit a fixed carrier signal that carries no bits

- tag harvest this signal to get the power they need to run

- to send data, a tag changes whether it is reflecting the signal from the reader

- backscatter

backscatter

- sender and receiver never both transmit at the same time

- low-energy way for the tag to create a weak signal of its own, that shows up at the reader

- for the reader to decode the incoming signal

- it must filter out the outgoing signal that it is transmitting

- tag that runs on very little power and costs only a few cents to make

- to send data to the tags

- the reader uses 2 amplitude levels

- bits are determined to be either 0 or 1

- tag responses consist of the tag alternating its backscatter state at fixed intervals to create a series of pulses in the signal

- 1s have fewer transitions than 0s

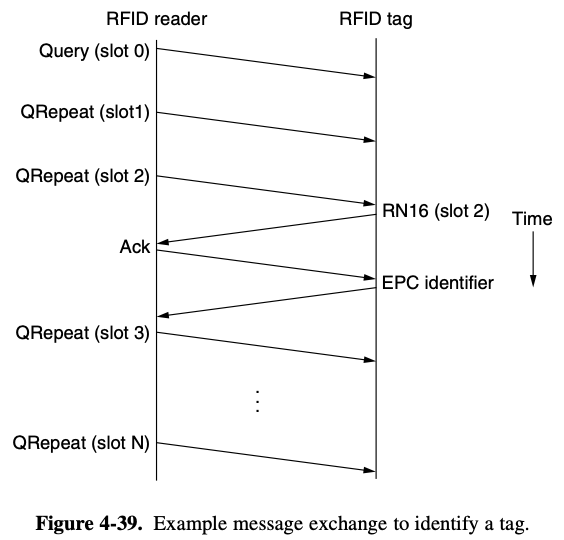

4.7.3. EPC Gen 2 Tag Identification Layer

- reader needs to receive a message from each tag that gives the identifier for the tag

- the reader might broadcast a query to ask all tags to send their identifiers

- tags that replied right away would then collide

- in which the tags cannot hear each other's transmissions

Gen 2 RFID

- reader send a query message to start the process

- each Qrepeat message advances to the next slot

- reader also tells the tags the range of slot over which to randomize transmission

- using a range is necessary because the reader synchronizes tags when it starts the process

- tag pick a random slot in which to reply

- tag replies in slot

- tags do not send their identifier when they first reply

- instead a tag send a short 16 bit random number in an RN16 message

- if there is no collisions, the reader receives this message and send an ACK message of its own

- at this stage the tag has acquired the slot and send its EPC identifier

+) Why send identification later?

- EPC identifier are long

- collision on these messages would be expensive

- identifier has been successfully transmitted

- tag temporarily stops responding to new Query messages so that all the remaining tags can be identified

key problem

- reader to adjust the number of slots to avoid collisions

- performance suffers

- if the reader sees too many slots with collisions

- it can send a QAdjust message to decrease or increase the range of slots over which the tags are responding

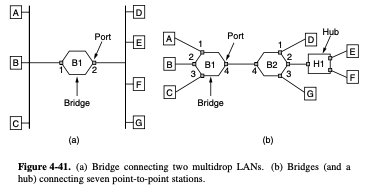

4.8. Data link layer switching

- connect multiple LANs

bridge

- operate data link layer

- examine the data link layer addresses to forward frames

4.8.1. Use of Bridges

- buy bridge, plug the LAN cables into the bridges and have everything work perfectly

2 algorithms are used

1. a backward learning algorithm : stop traffic being sent where it is not needed

2. a spanning tree algorithm : to break loops

4.8.2. Learning Bridge

- left hand side : classic Ethernets

- rgiht hand side : point-to-point cables

- if there is more than one station, as in a classic Ethernet, hub, or a half-duplex link, the CSMA/CD protocol is u sed to send frames

- Each bridge operates in promiscuous mode

- it accepts every frame transmitted by the stations attached to each of its ports

- the bridge must decided whether to forward or discard each frame

- this decision is made by using the destination address

- A simple way to implement this scheme is to have a big hash table inside the bridge

- table can list each possible destination and which output port it belongs on

backward learning

- when the bridges are first plugged in, all the hash tables are empty

- As time goes on, the bridge learn where destinations are

- Periodically, a process in the bridge scan the hash table and purges all entries more than a few minutes old

- if the port for the destination address is the same as the source port, discard the frame

- if the port for the destination address and the source port are different, forward the frame on to the destination port

- if the destination port is unknown, use flooding and send the frame on all ports except the source port

cut-through switching / wormhole routing

-

as each frame arrives, usually implemented with special-purpose VLSI chips

-

the chip do the lookup and update the table entry

-

bridge only look at the MAC addresses to decide how to forward frames

-

it is possible to start forwarding as soon as the destination header field has come in

-

this design reduces the latency of passing through the bridge

-

as well as the number of frames that the bridge must be able to buffer

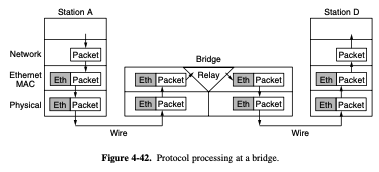

- relay at a given layer can rewrite the headers for that layer

4.8.3. Spanning Tree Bridges

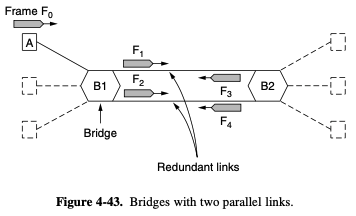

- to increase reliability, redundant links ca be used between bridges

- there are 2 links in parallel between a pair of bridges

- the network will not be partitioned into 2 sets of computers that cannot talk to each other

- however this redundancy introduces some additional problems, it create loops in the topology

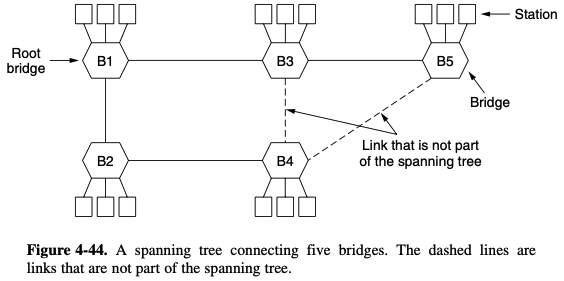

- some potential connections between bridges are ignored in the interest of construction a fictitious loop-free topology that is a subset of the actual topology

- to build the spanning tree, the bridges run a distributed algorithm

- each brdige periodically broadcasts a configuration message out all of its ports to its neighbors and processes the message it receives from other bridges

Spanning Tree algorithm 은 나중에 배우세요

4.8.4. Repeaters, Hubs, Bridges, Switches, Routers and Gateways

Physical Link Layer

Repeaters

- analog devices that work with signals on the cables to which they are connected

- do not understand frames, packets, or headers

- understand the symbols that encode bits as volts

- classic ethernet was designed to allow 4 repeaters

hubs

- a number of input lines that it join electrically

- arriving on any of the lines are sent out on all the others

- do not amplify the incomming signals

Data link layer

bridge

- intended to be able to join different kinds of LANs

- 2 or more LANs like a hub, a modern bridge has multiple ports, usually enough for 4 to 48 input lines of a certain type

- each port is isolated to be its own collision domain

- when a frame arrives, the bridge extracts the destination address from the frame header and looks it up in a table to see where to send the frame

- For ethernet this address is the 48 bit destination address (MAC)

switches

- modern bridges by another name

- bridge were developed when classic Ethernet was in use

- so they tend to join relatively few LANs and thus have relatively few ports

- mordern installations all use point-to-point links

4.8.5. Virtual LANs

- network administrators like to group users on LANs to relect the organizational structure rather than physical layout of the building

+) broadcast storm

- entire LAN capacity is occupied by these frames

- all the machines on all the interconnected LANs are cripples just processing and discarding all the frames being broadcast

VLAN (Virtual LAN)

- IEEE 802

+) Often the VLANs are informally named by colors

- to make the VLANs function correctly, configuration tables have to be set up in the bridges

- these tables tell which VLANs are accessible via which ports

The IEEE 802.1Q standard 읽어보시길

5. The Network Layer

5.1. Network Layer Design Issues

5.1.1. Store-and-Forward Packet Switching

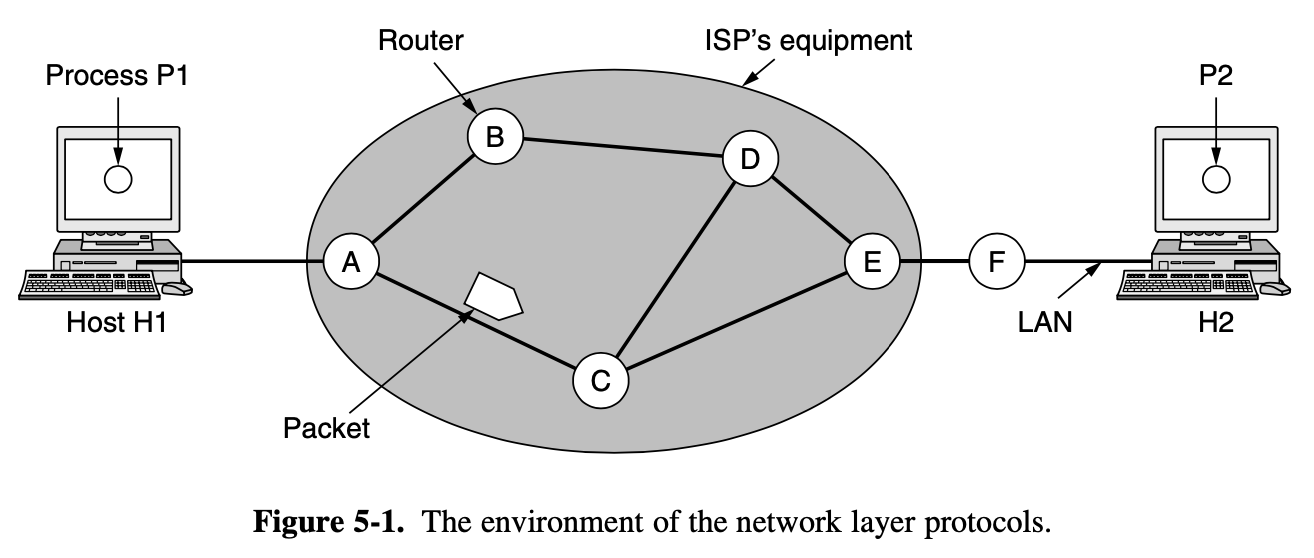

- major components of the network are the ISP's equipment (router connected by transmission lines)

- A host with a packet to send transmit it to the nearest router, either on its own LAN or over a point-to-point link to the ISP

- The packet is stored there until it has fully arrived and the link has finished its processing by verifying the checksum > stop

- Then it is forwarded to the next router along the path until it reaches the destination host, where it is delivered > forward

5.1.2. Services Provided to the Transport Layer

- The services should be independent of the router technology

- The transport layer should be shielded from the number, type and topology of the routers present

- The network addresses made available to the transport layer should use a uniform numbering plan, even across LANs ans WANs

5.1.3. Implementation of Connectionles Service

- packets are injected into the network individually and routed independently of each other

- The packets are frequently called datagrams

- The network called a datagram network

- If connection-oriented service is used

- a path from the source router all the way to the destination router must be established before any data packets can be sent

- This connection is called a VC (Virtual Circuit)

Virtual Circuit network

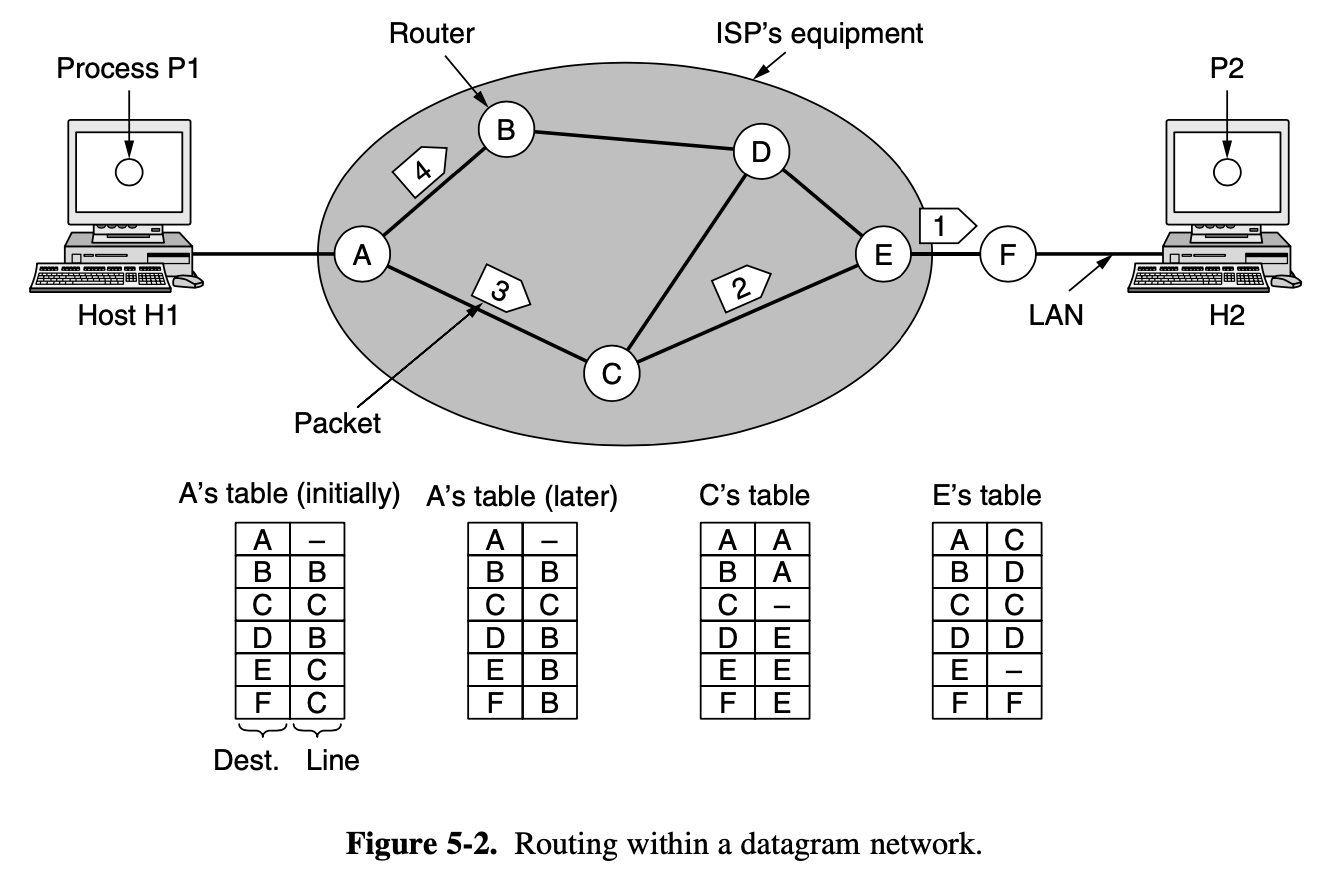

Let us assume) the message is 4 times longer than the maximum packet size

- the network layer haas to break it into 4 packets 1,2,3,4

- send each of them in turn to router A using some point to point protocol, PPP

- At this point the ISP takes over

- Every router has an internal table telling it where to send packets for each of the possible destinations

- Each table entry is a pair consisting of a destination and th outgoing line to use for that destination

- At A, packets 1, 2, 3 are stored briefly, having arrived on the incoming link and had their checksums verfieid (stop-and-forward)

- The each packet is forwarded according to A's table, onto the outgoing link to C within a new frame

- Packet 1 is then forwarded to E and then to F

- When it get to F, it is sent wighin a frame over the LAN to H2

- Packets 2 and 3 follow the same route

- However someting different happend to packet 4

- When it gets to A it is sent to router B, even though it is also destined for F

- For some reason, A decided to send packet 4 via a different route than that of the first 3 packets

- Perhaps it has learned for a traffic jam somewhere along the ACE path and updated its routing table, as shown under the label "later"

- The algorithm that manages the tables and makes the routing decisions is called the routing algorithm

- IP (Internet Protocol), which is the basis for the entire Internet, is the dominant example of a connectionless network service

- Each packet carries a destination IP address that routers use to individually forward each packet

- The address are 32 bits in IPv4 packets and 128 bits in IPv6 packet

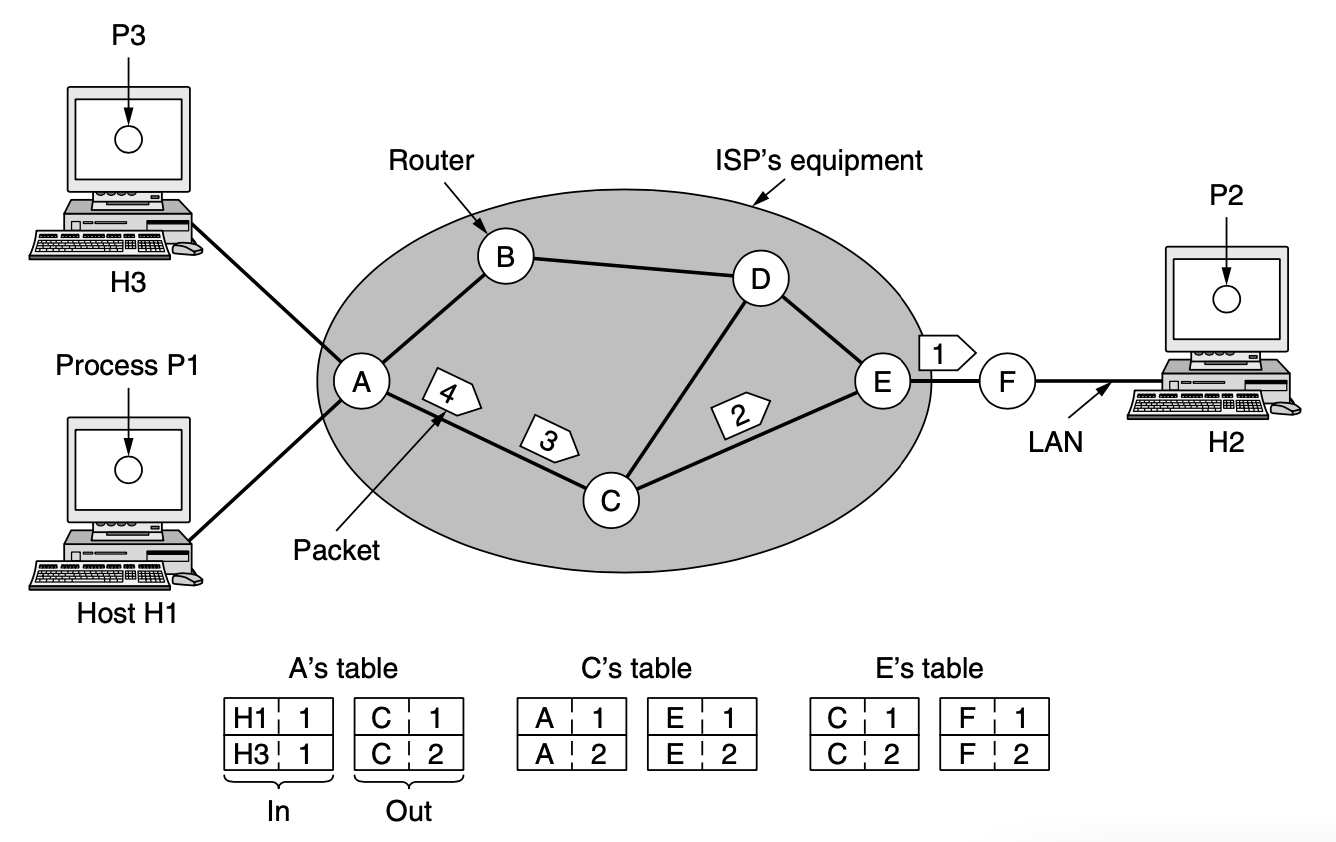

5.1.4. Implementation of Connection-Oriented Service

- For connection-oriented service, we need a virtual-circuit network

- The idea behind virtual circuits is to avoid having to choose a new route for every packet sent

- When a connection is established, a route from the source machine to the destination machine is chosen as part of the connection setup and stored in tables inside the router

- That route is used for all traffic flowing over the connection

- When the connection is released, the virtual circuit is also terminated

- each packet carries an identifier telling which virtual circuit it belongs to

[An example]

- host H1 has established connection 1 with host H2

- This connection is remembered as the first entry in each of the routing tables

[NEW!] Now let us consider what happends if H3 also wants to establish a connection to H2

- It choose connection identifier 1

- because it is initiating the connection and this is its only connection

- tell the network to establish the virtual circuit

- this leads to the second row in the tables

- we have a conflict here because although A can saily distinguish connection 1 packets from H1 from connection 1 packet from H3, C cannot do this

- For this reason, A assigns a different connection identifier to the outgoing traffic for the second connection

- this process is called Label Switching

ex) connection-oriented network service : MPLS (MultipProtocol Label Swtiching)

- it used within ISP networks in the internet, with IP packets wrapped in an MPLS header having a 20-bit connection idenfier or label

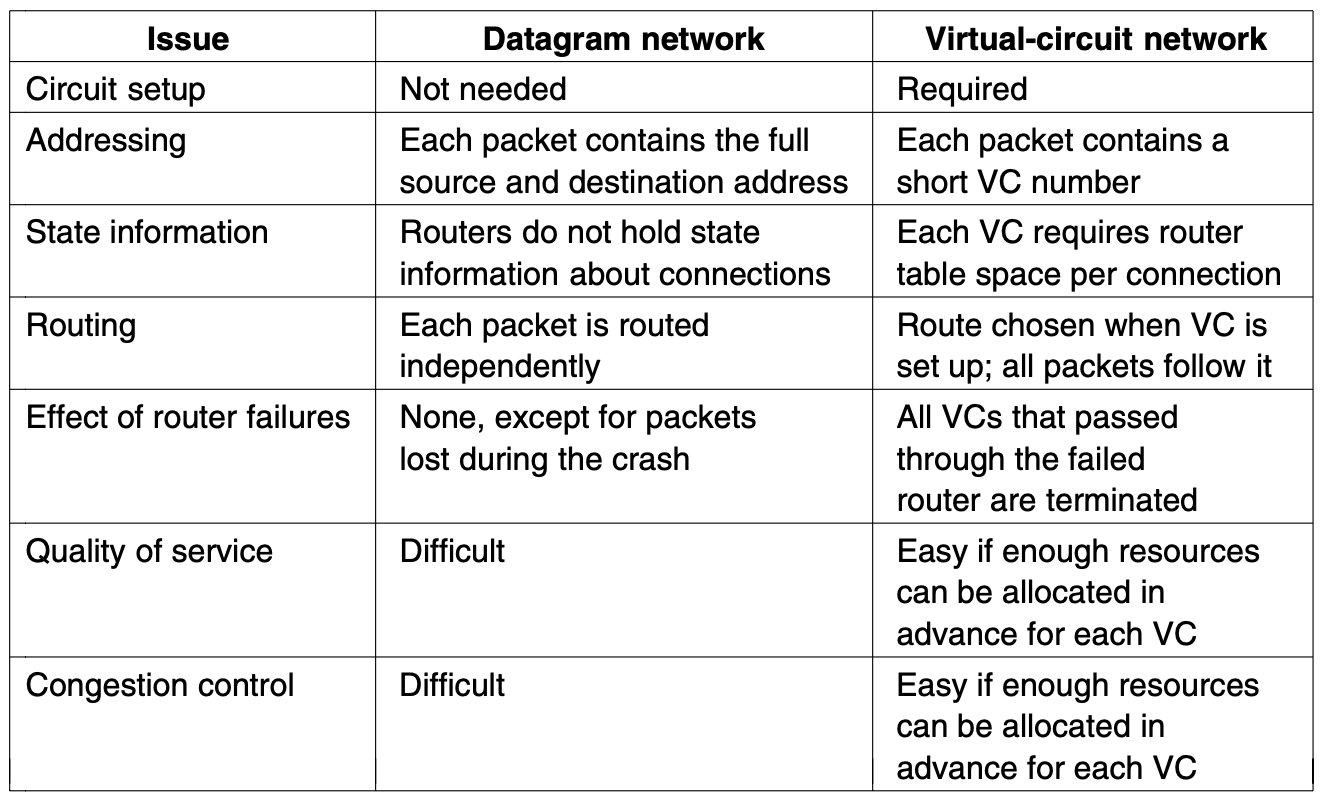

5.1.5. Comparision of Virtual-Circuit and Datagram Networks

- Inside the network, several trade-off exist between virtual circuit and datagram

- One trade-off is setup time versus address parsing time

- Using virtual circuits requires a setup phase, which takes time and consumes resources

- Once this price is paid figuring out what to do with a data packet in a virtual-circuit network is easy

- The router just uses the circuit number of index into a table to find out where the packet goes

- In a datagram network, no setup is needed but a more complicated lookup procedure is required to locate the entry for the destination

[Issue]

- the destination addresses used in datagram networks are longer than circuit number used in virtual-circuit networks

- destination address have a global meaning

- If packets tend to be fairly short, including a full destination address in every packet may represent a significant amount of overhead and hence a waste of bandwidth

- the amount of thable space required in router memory

- A datagram network needs to have an entry for every possible destination

[Virtual circuits have some advantages]

- guaranteeing quality of service and avoiding congestion within the network

- because resources can be reserved in advance, when the connection is establish

- Once the packets start arriving, the necessary bandwidth and router capaciy will be there

- With the datagram network, congestion avoidance is more difficult

- For transaction processing system (e.g. payment system), the overhead required to set up and clear a virtual circuit may easily dwarf the use of the circuit

- If the majority of the traffic is expected to be of this kind, the use of virtual circuits inside the network makes little sense - On the ther hand, for long-running uses such as **VPN traffic** between 2 corporate offices, permanent virtual circuits may be useful

[Virtual circuits vulnerability problem]

- If a router crashes and loses its memory, even if it comes back up a second later, all the virtual circuits passing through it will have to be aborted

- if a datagram router goes down, only those users wose packets were queued in the router at the time need suffer

- datagram also allow the router to balance the traffic throughout the network

5.2. Routing Algorithm

- routing packet from the source machine to the destination machine

- in most networks, packets will require multiple hops to make the journey

- The only notable exception is for broadcast networks

- If the network uses virtual circuits internally, routing decisions are made only when a new virtual circuit is being set up

- Threreafter, data packets just follow the already established route

- The latter case is sometimes called session routing

[router as having 2 processes inside it]

- forwarding : each packet as it arrives, looking up the outgoing line to use for it in the routing tables

- responsible for filling in and updating routing tables

[Routing algorithms grouped into 2 major classes]

- nonadaptive

- adaptive

[Nonadaptive algorithms]

- do not base their routing decisions on any measurements or estimates of the current topology and traffic

- offline and downloaded to the routers when the network is booted

- This procedure is sometimes called static routing

- because it does not respond to failures

- static routing is mostly useful for situations in which the routing choice is clear

[Adaptive algorithms]

- change their routing decisions to relfect changes in the topology

- sometimes changes in the traffic as well

- dynamic routing

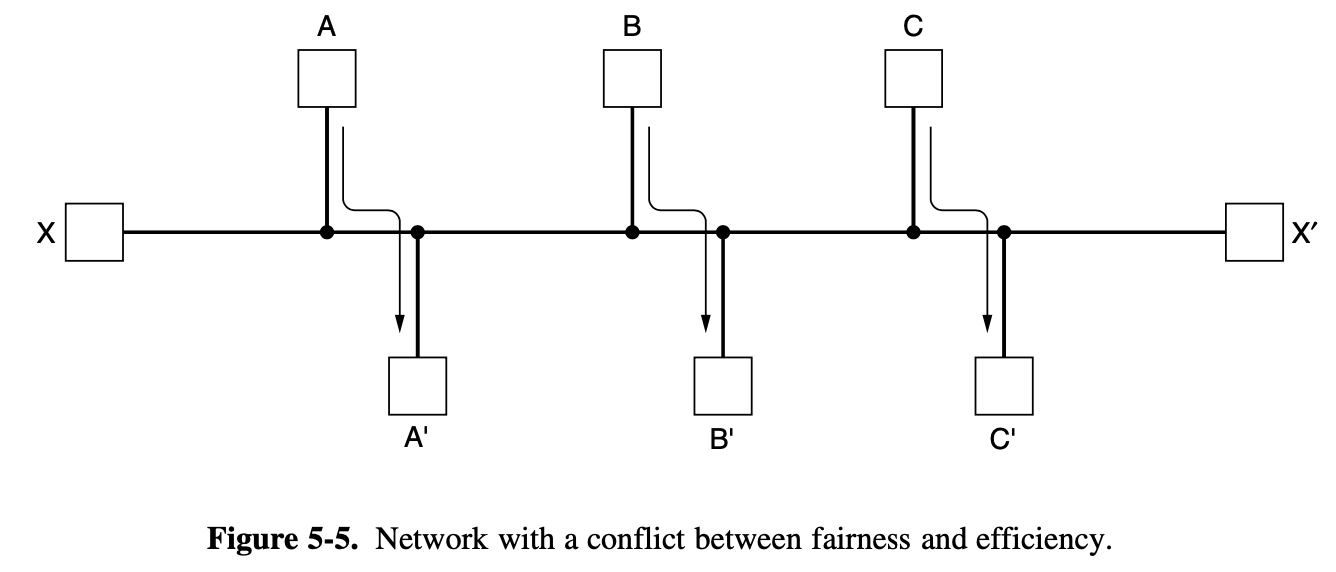

5.2.1. The Optimality Principle

- if router J is on the optimal path from router I to router K

- then the optimal path form J to K also falls along the same ways

- As a direct consequence of the optimality principle, we can see that the set of optimal routes from all sources to a given destination form a tree rooted at the destination (called a sink tree)

- where the distance metric is the number of hops

- the goal of all routing algorithms is to discover and use the sink trees for all routers

- sink tree is not necessarily unique

- other trees with the same path lengths may exist

- the tree becomes a more general structure called a DAG (Directed Acyclic Graph)

[DAGs]

- have no loops

- use sink trees as a convenient shorthand

- since a sink tree is indeed a tree, it does not contains any loops, so each packet will be delivered within a finite and bounded number of hops

- the optimality principle and the sink tree provide a benchmark against which other routing algorithms can be measured

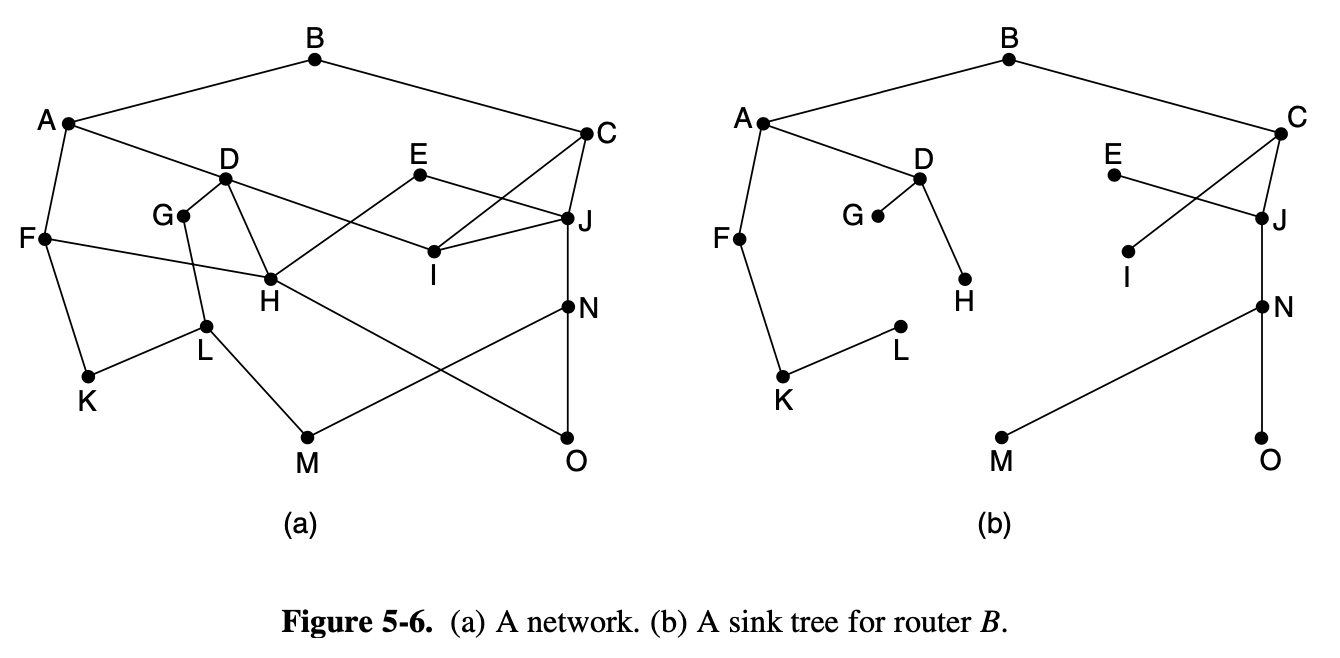

5.2.2. Shortest Path Algorithm

-

The concept of shortest path deserves some explanation

-

One way of measuring path length is the number of hops

-

but what if selected link runs hourly, then that line will not be selected by algorithm

[Dijkstra]

- finds the sortest paths between a source and all destinations in the network

- each node is labeled with its distance from the source node along the best known path

- initially no paths are know, so all nodes are labeld with infinity

- As the algorithm proceeds and paths are found, the labels may change, relecting better paths

- A label may be either tentative or permanent

- Whenever a node is relabeled, we also label it with the node from which the probe was made so that we can reconstruct the final path later

5.2.3. Flooding

- routing algorithm is implemented, each router must make decision based on local knowledge, not the complete picture of the network

- An incoming packet to A, will be sent to B, C and D.

- B will send the packet to C and E.

- C will send the packet to B, D and F.

- D will send the packet to C and F.

- E will send the packet to F.

- F will send the packet to C and E.

- Flooding obviously generates vast numbers of duplicate packets

- Flooding with a hop count can produce an exponential number of duplicate packets as the hop count grows and router duplicate packets they have seen before

- A better technique for damming the flood is to have routers keep

track of way to achieve this goal is to have to source router put a sequence number in each packet it receives from its hosts - Each router then needs a list per source router telling which sequence numbers orginating at that source have already been seen

- If an incoming packet is on the list, it is not flooded

- Flooding is not practical for sending most packets,

- but is does have some important uses

- it ensures that a packet is delivered to every node in the network

- flodding is trememdously robust

- Flooding always chooses the shortest path because it chooses every possible path in parallel

5.2.4. Distance Vector Routing

- Computer networks generally use dynamic routing algorithms that are more complex than flooding

- but more efficient because they find shortest paths for the current topology

[2 dynamic algorithms]

- distance vector routing

- link state routing

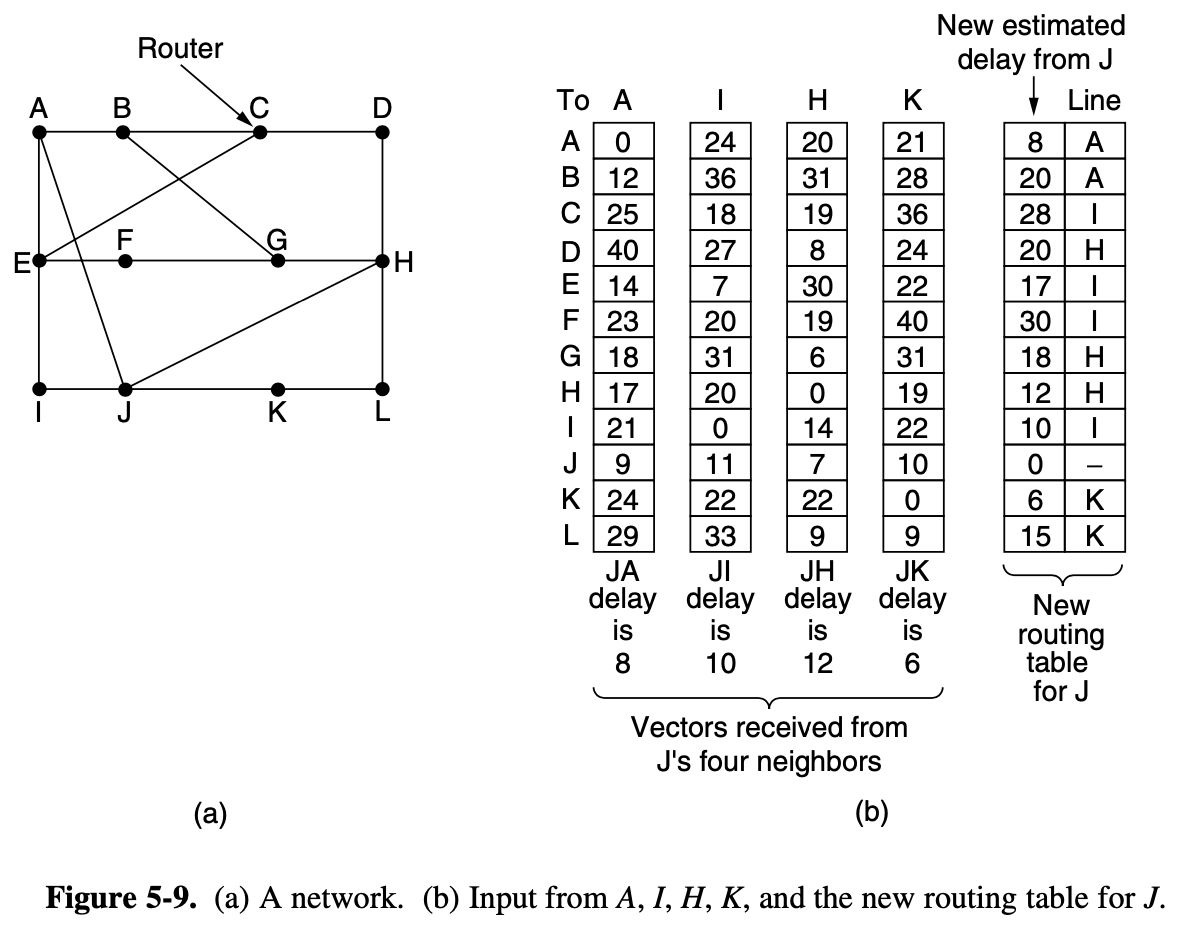

distance vector routing (Bellman-Ford Routing algorithm)

- operate by having each router maintain a table giving the best known distance to each destination and which link to use to get there

- These tables are updated by exchanging information with the neighbors

- Eventually every router knows the best link to reach each destination

- The router is assumed to know the distance to each of its neighbors

- If the metric is propagation delay, the router can measure it directly with special ECHO packets that the receiver just timestamps and sends back as fast as it can

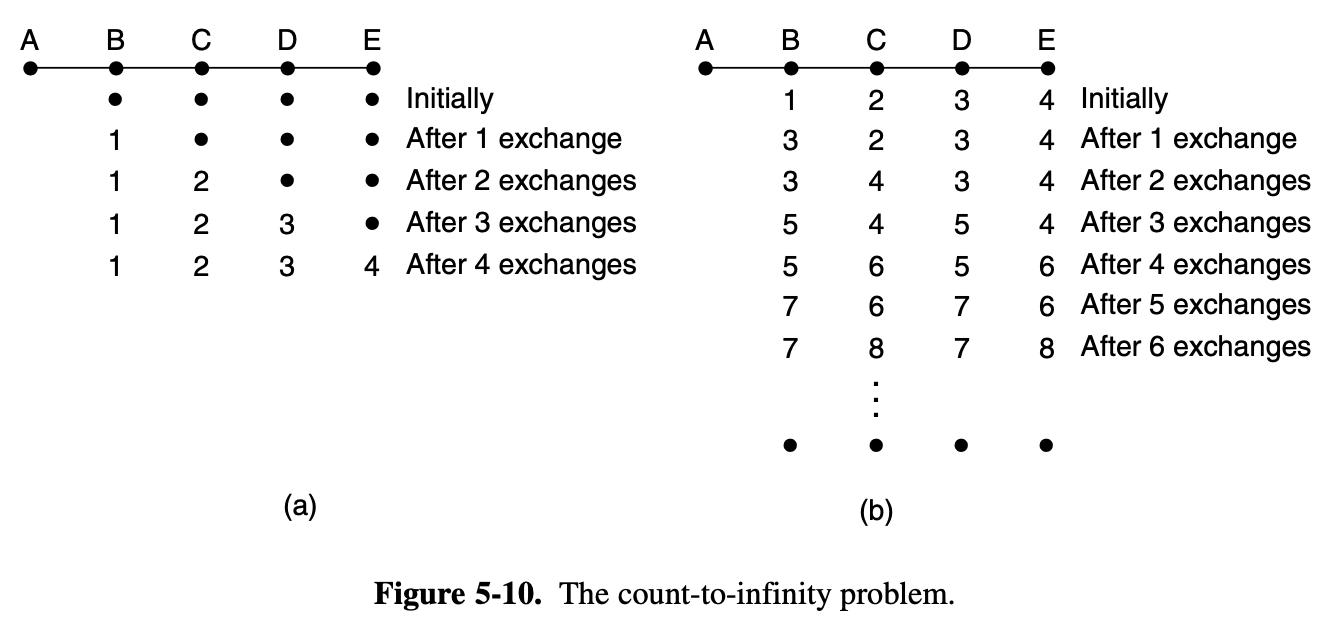

The Count-to-Infinity Problem

- The setting of routes to best path across the network is called convergence

- Distance vector routing is usedful as a simple technique by which routers can collectively compute shortest paths, but is has a serious drawback in practice

- Suppose [A is down] initially and all the other routers know this

- They have all recorded the delay to A as inifinity

- When [A comes up] the other router lean about it via the vector exchanges

- for simplicity, we will assume that there is gigantic gong somewhere that is struck periodically to initiate a vector exchange at all routers simultaneously

- At th time of the first exchange, B learn that is left-hand neighbor has zero delay to A

- B now makes an entry in its routing table indicating that A is one hop away to the left

- All the other routers still think that A is down

- At this point the routing table entries for A are as shown in the second row (Figure 5-10 a)

- On the next exchange, C learns that B has path of length 1 to A

- so it updates its routing table

- The good news is spreading at the rate of one hop per exchange

- Now let us consider the situation of Fig. 5-10(b)

- Router B, C, D and E have distance to A of 1, 2, 3, 4 hops

- Suddenly either [A goes down] or the link between A and B is cut

- At the [first exchange], B does not hear anything from A

- Fortunately C have path to A of length 2, so B thinks it can reach A via C, with a path length of 3

- [Second exchange] C notices that each of its neighbors claims to have a path to A of length 3

- It picks one of them at random and makes its new distance to A 4

- Subsequent exchanges produce the history

5.2.5. Link State Routing

- Discover its neighbors and learn their network addresses

- Set the distance or cost metric to each of its neighbors

- Construct a packet telling all it has just learned

- Send this packet to and receive packets from all other routers

- Compute the shortest path to every other router

1. Learning about the Neighbors

- Router is booted, its first task is to learn who its neighbors are

- sending a special HELLO packets on each point to point line

- Th router on the other end is expected to send back a reply giving its name

- These names must be globally unique

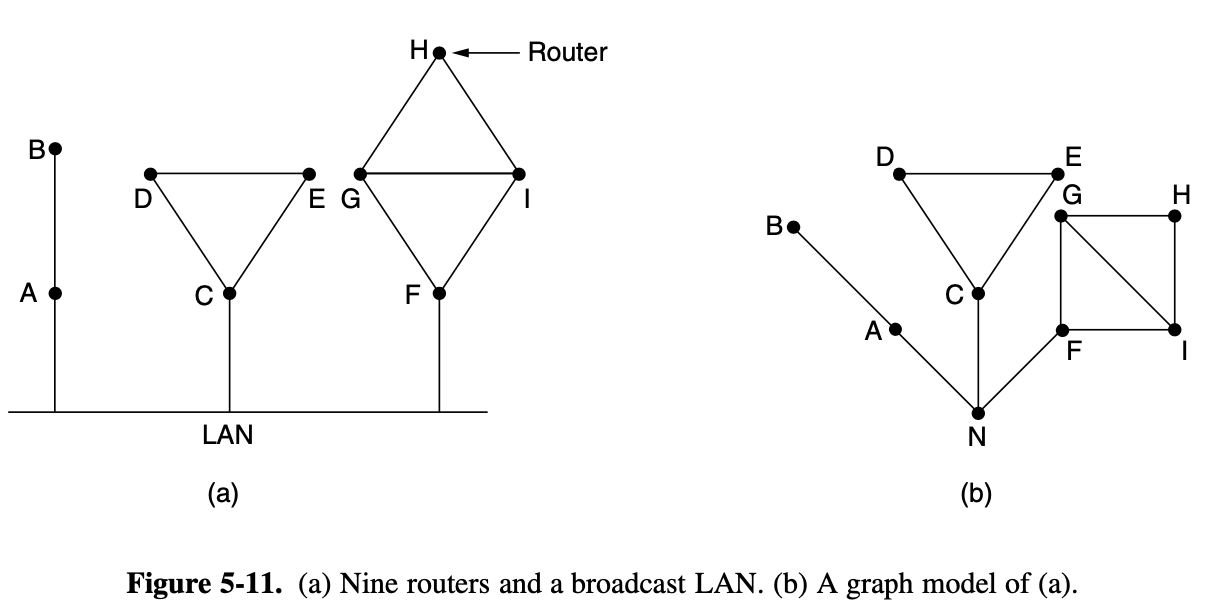

- The braodcast LAN provides connectivity between each pair of attached routers

2. Setting Link Costs

- each link to have a distance or cost metric for finding shortest paths

- The cost to reach neighbors can be set automatically or configured by the network operator

- The most direct way to determine this delay is to send over the line a special ECHO packet that the other side is required to send back immediately

- By measuring the round-trip time and dividing it by 2, the sending router can get a reasonable estimate of the day

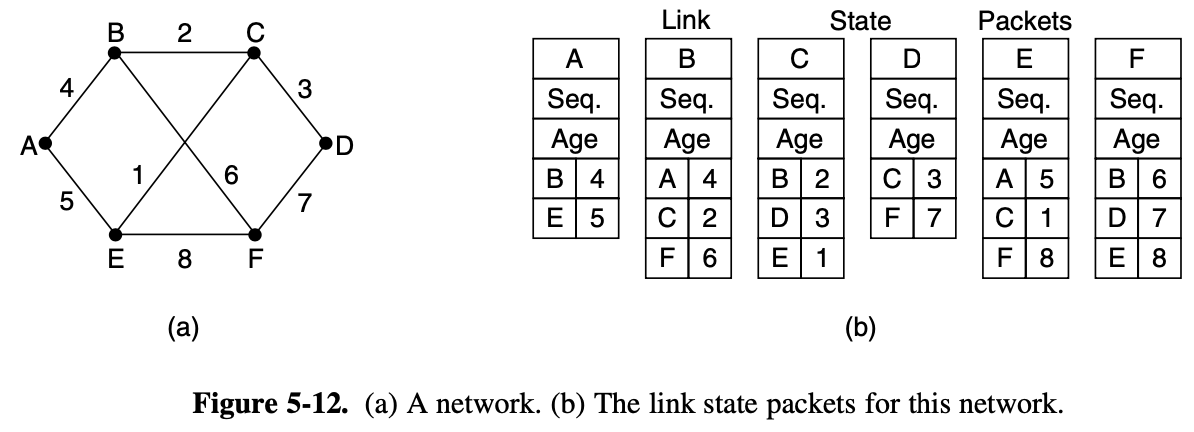

3. Building Link State Packets

- the next step is for each router to build a packet containing all the data

[determining when to build them]

- build them periodically

- build them when som significant event occurs

4. Distirbuting the Link State Packets

- If different router are using different versions of the topology

- the routes they compute can have inconsistencies such as loops, unreachable machines, and other problems

basic distribution algorithm

- use flooding to distribute the link state packets to all routers

- to keep the flood in check, each packet contains a sequence number that is incremented for each new packet sent

- [If it is new], it is forwarded on all lines except the one it arrived one

- [If it is duplicate], it is discarded

- [If a packet with a sequence number lower then the highest one seen so far ever arrives], it is rejected as being obsolete as the router has more recent data

5. Computing the New Routes

- Once a router has accumulated a full set of link state packet

- it can construct the entire network grap because every link is represented

- Now Dijkstra's algorithm can be run locally to construct the shortest paths to all possible destinations

- Compared to distance vector routing, link state routing requires more memory and computation

- Link state routing is widely used in actual networks (ex) OSPF (Open Shortest Path First))

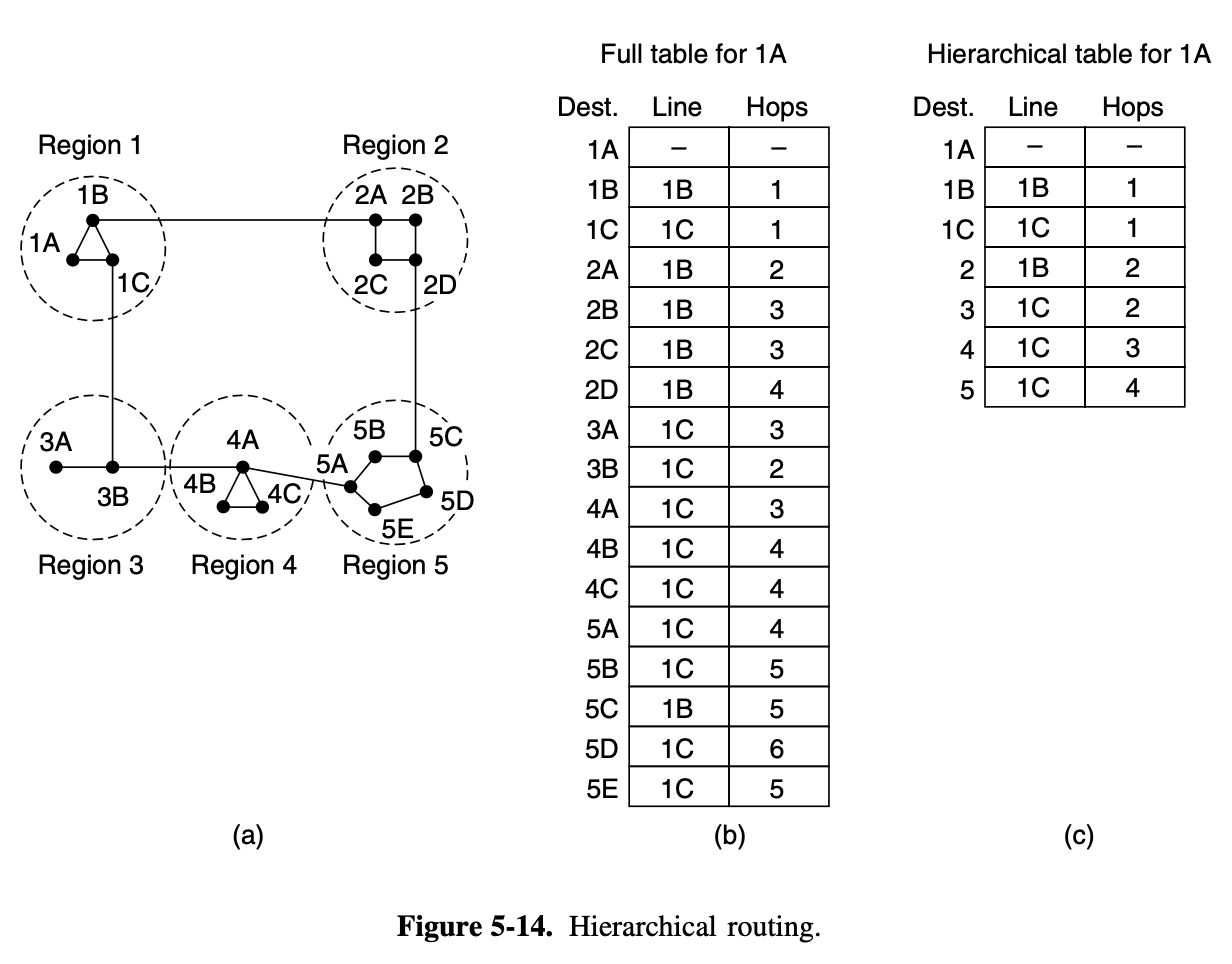

5.2.6. Hierarchical Routing

As a networks grow in size, the router routing tables grow proportionally

- Not only is router memory consumed by ever-increasing tables, but more CPU time is needed to scan them and more bandwidth is needed to send status reports about them

- When hierarchical routing is used, the routers are divided into what we will call regions

- Each router all the details about how to route packets to destinations within its own region but knows nothing about the internal structure of other region

- For huge networks, 2-level hierarchy may be insufficient

- it may be necessary to group the regions into clusters, the clusters into zones, the zones into groups and so on

- how many levels should the hierarchy have? e ln N entries per router

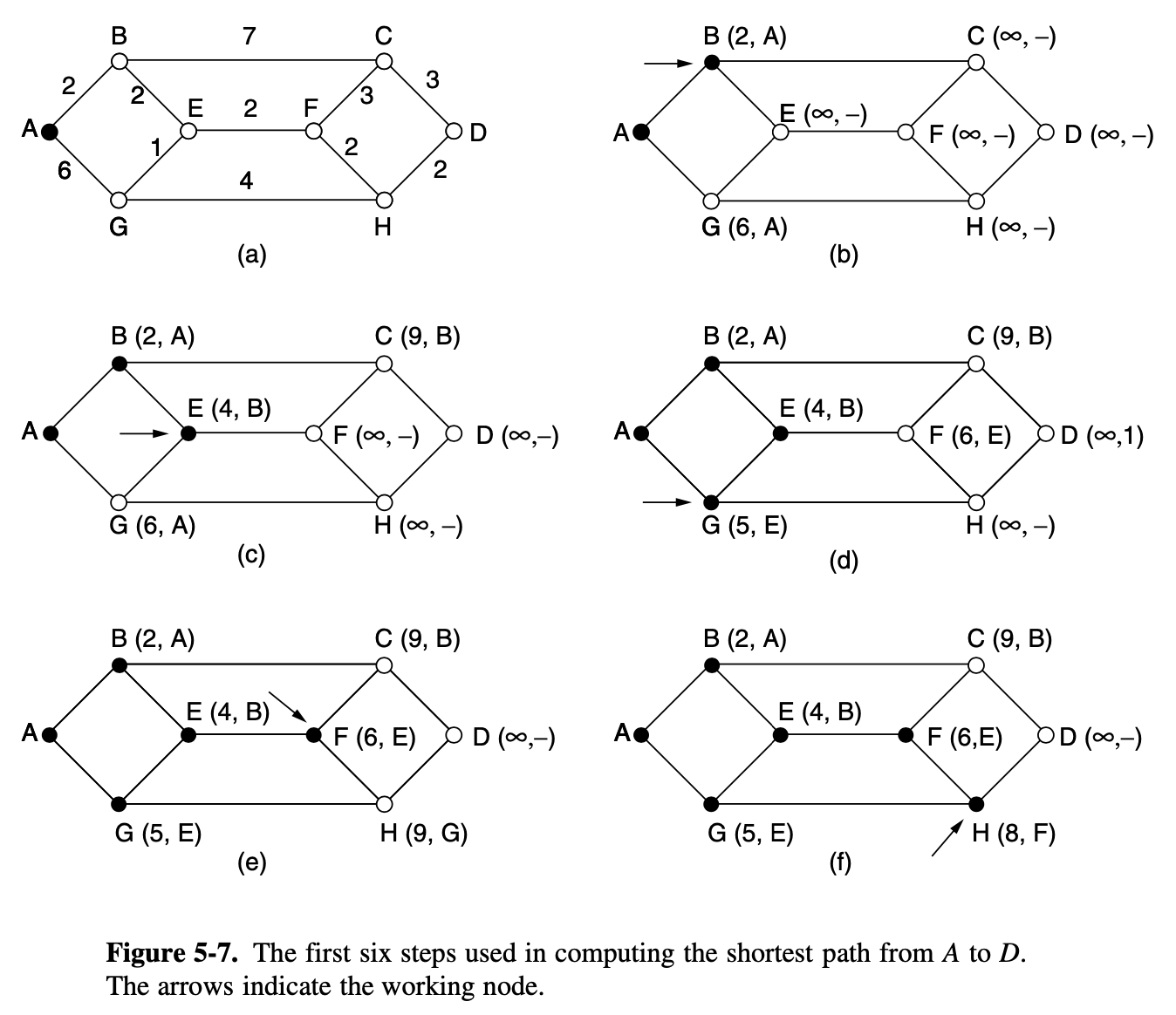

5.2.7. Broadcast Routing

- Sending a packet to all destinations simultneously is called braodcasting

- An improvement is multidestination routing, in which each packet contains either a list of destination or a bit map indicating the desired destinations

- When a packe arrives at a router, the router generates a new copy of the packet for each output line

- The schema still requires the source to know all the destinations, plus it is as much work for a router to determine where to send one multidestination packet as it is for multiple distinct packet

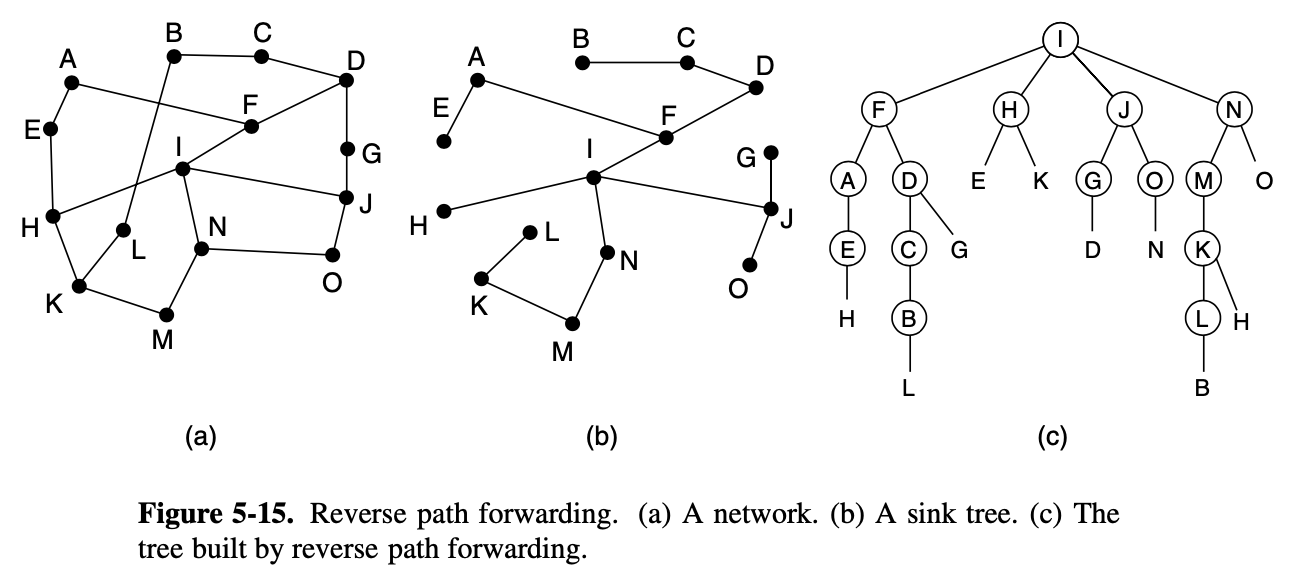

- we have already seen a better broadcast routing technique : flooding

- However, it turned out that we can do better still once the shortest path routes for regular packets have been computed

- reverse path forwarding is elegant and remarkably simple

- When a braodcast packet arrives at a router, the router checks to see if the packet arrived on the link that is normally used for sending packets toward the source of the broadcast

- if so, there is an excellent chance that the braodcast packet itself followed the best route from the router and is therefore the first copy to arrive at the router

- our last braodcast algorithm : spanning tree

- sink trees are spanning trees

- this method makes excelent used of bandwidth, generating the absolute minimum number of packets necessary to do the job

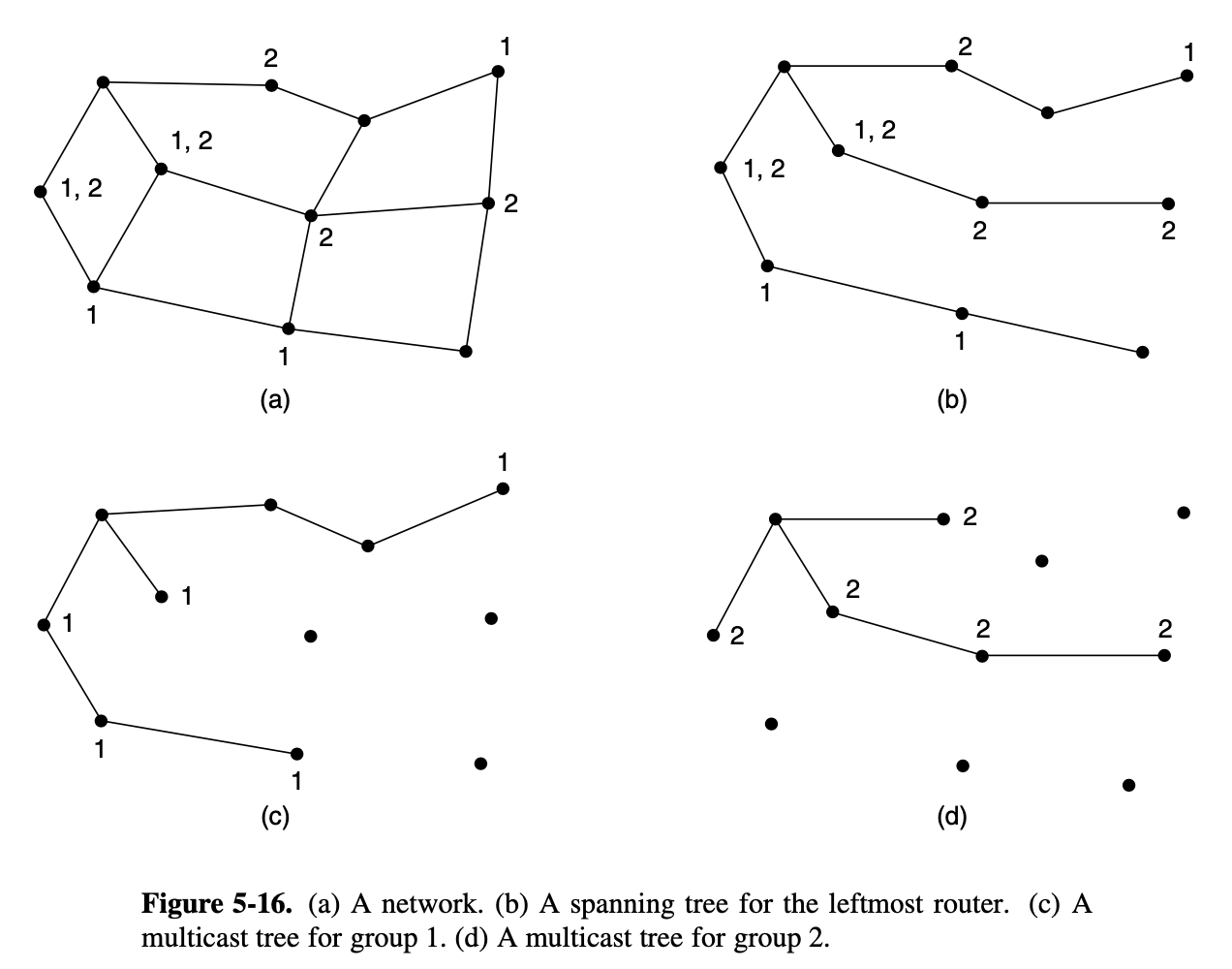

5.2.8. Multicast Routing

- we need a way to send messages to well-defined groups that are numerically large in size but small compared to network as a whole

- sending a message to such a group is called multicasting

- all multicasting schemes require some way to create and destroy groups and to identify which routers are members of a group

- prune the braodcast spanning tree by removing links that do not lead to members

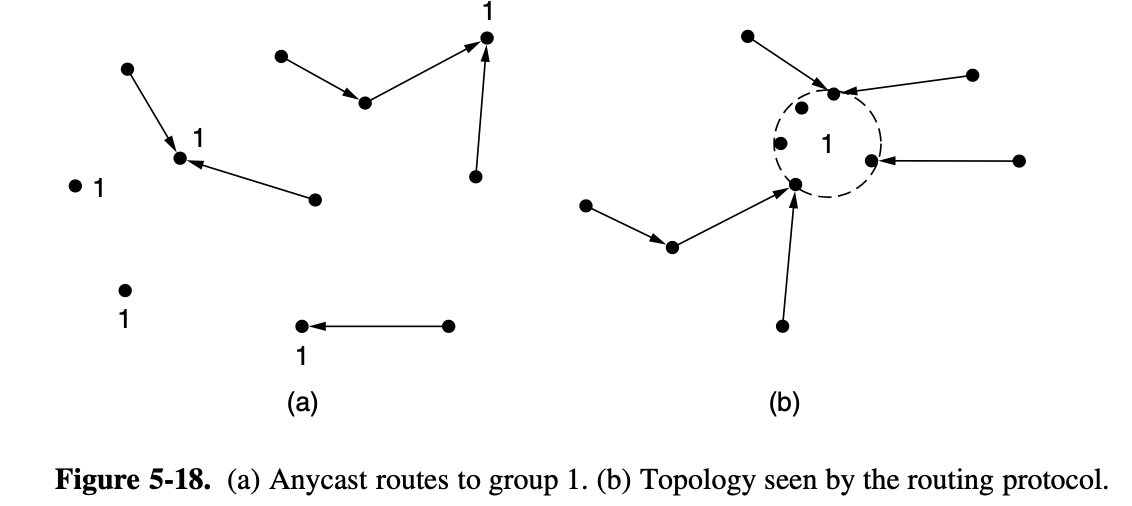

5.2.9. Anycast Routing

- packet is delivered to the nearest member of group

- sometimes nodes provide a service, not the node that is contacted, any node will do

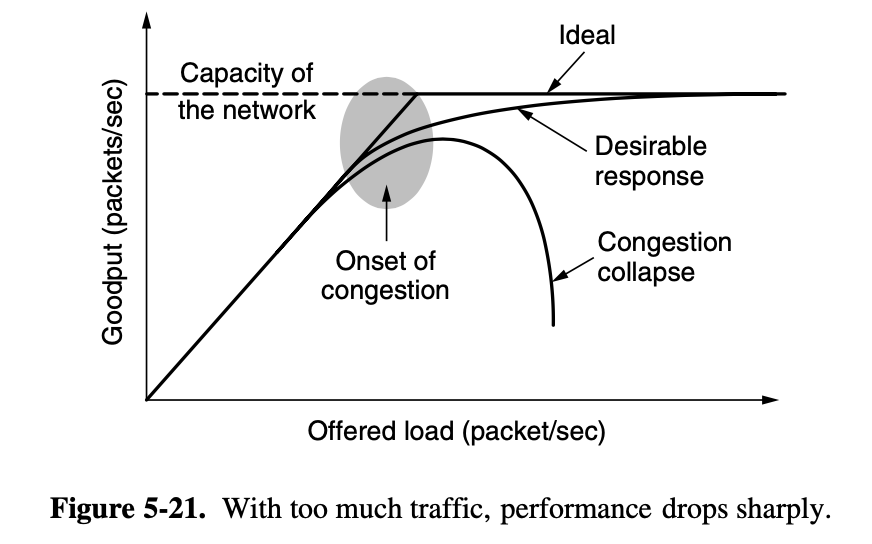

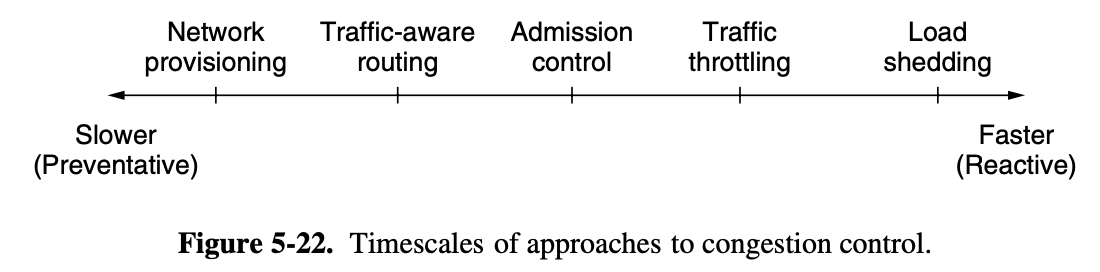

5.3. Congestion control algorithms

5.3.1. Approaches to Congestion Control

- provisioning : links and routers that are regularly heavily utilized are upgraded at the earliest opportunity

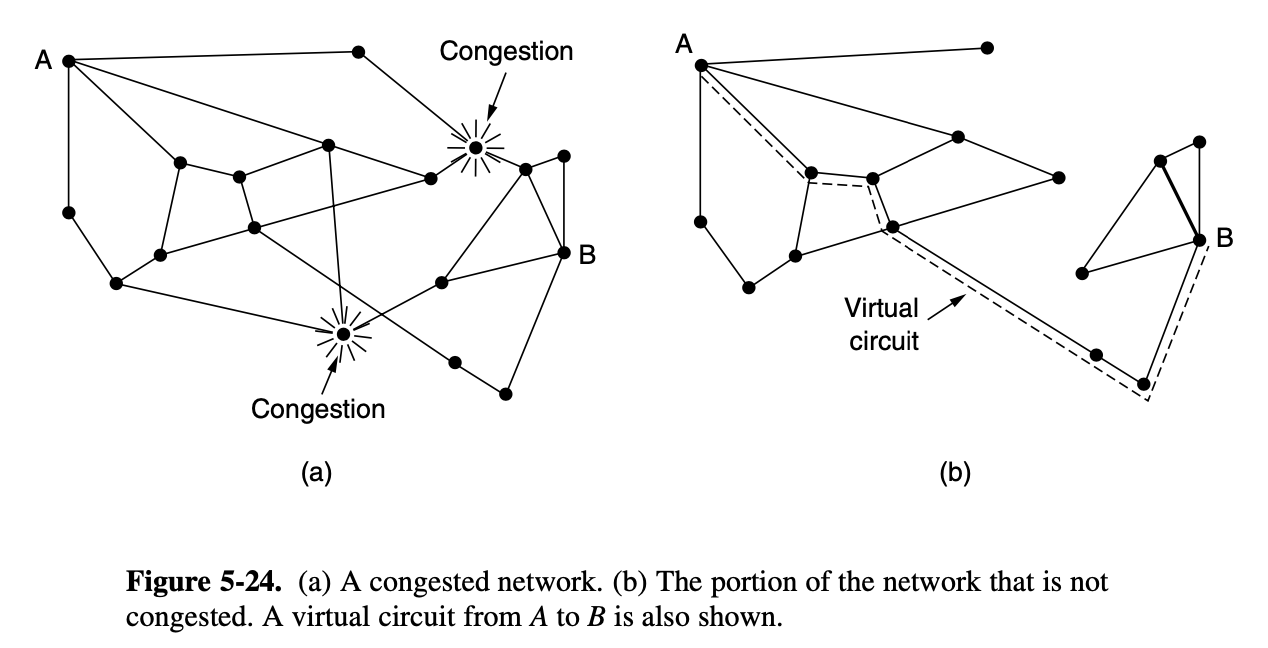

traffic-aware routing

ex) routers may be changed to shift traffic away from heavily used paths by changing the shortest path weight

admission control

- the only way then to beat back the congestion is to decrease the load

- In a virtual-circuit network, new connections can be refused if they would cause the network to become congested

load shedding

- when all else fails the network is forced to discard packets that is cannot deliver

5.3.3. Admission Control

- do no set up a new virtual circuit unless the network can carry the added traffic without becomming congested

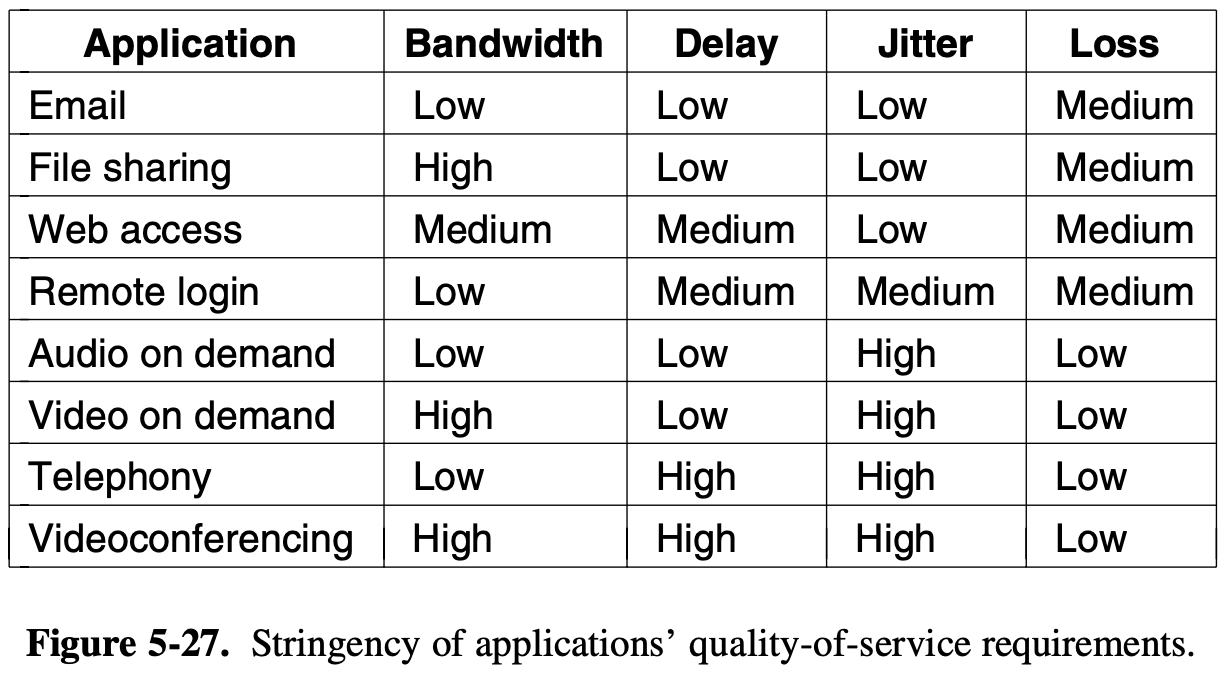

- Traffic is often described in terms of its rate and shape

ex) traffic that varies while browsing the Web is more difficult to handle than a streaming movie because the burst of Web traffic are more likeyl to congest routers in the network

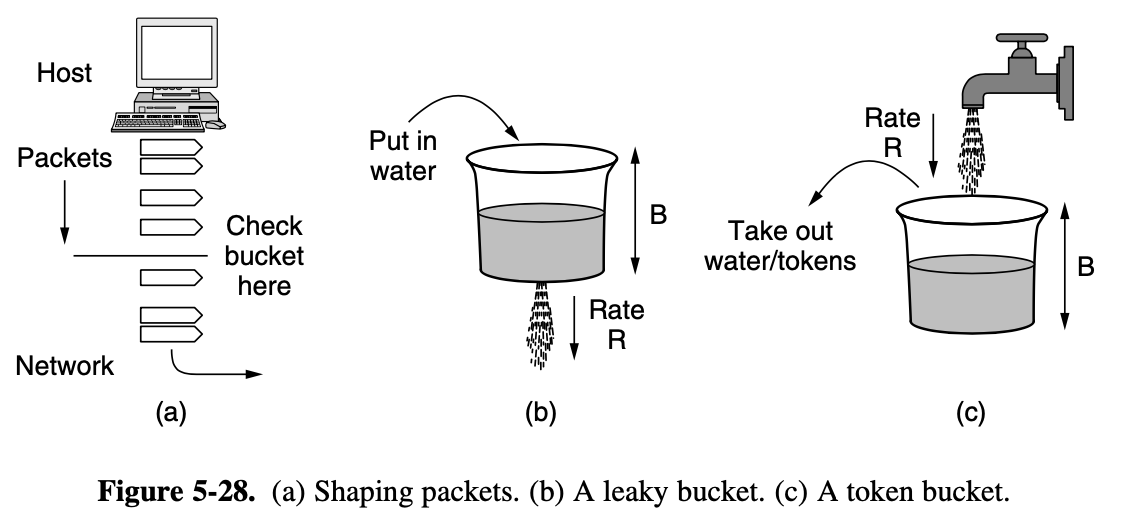

leaky bucket

- has 2 parameters that bound the average rate and the instantaneous burst size of traffic

5.3.4. Traffic Throttling

congestion avoidance

- When congestion is imminent, it must tell the senders to throttle back their transmission and slow down

- this feedback is business as usual rather than an exceptional situation

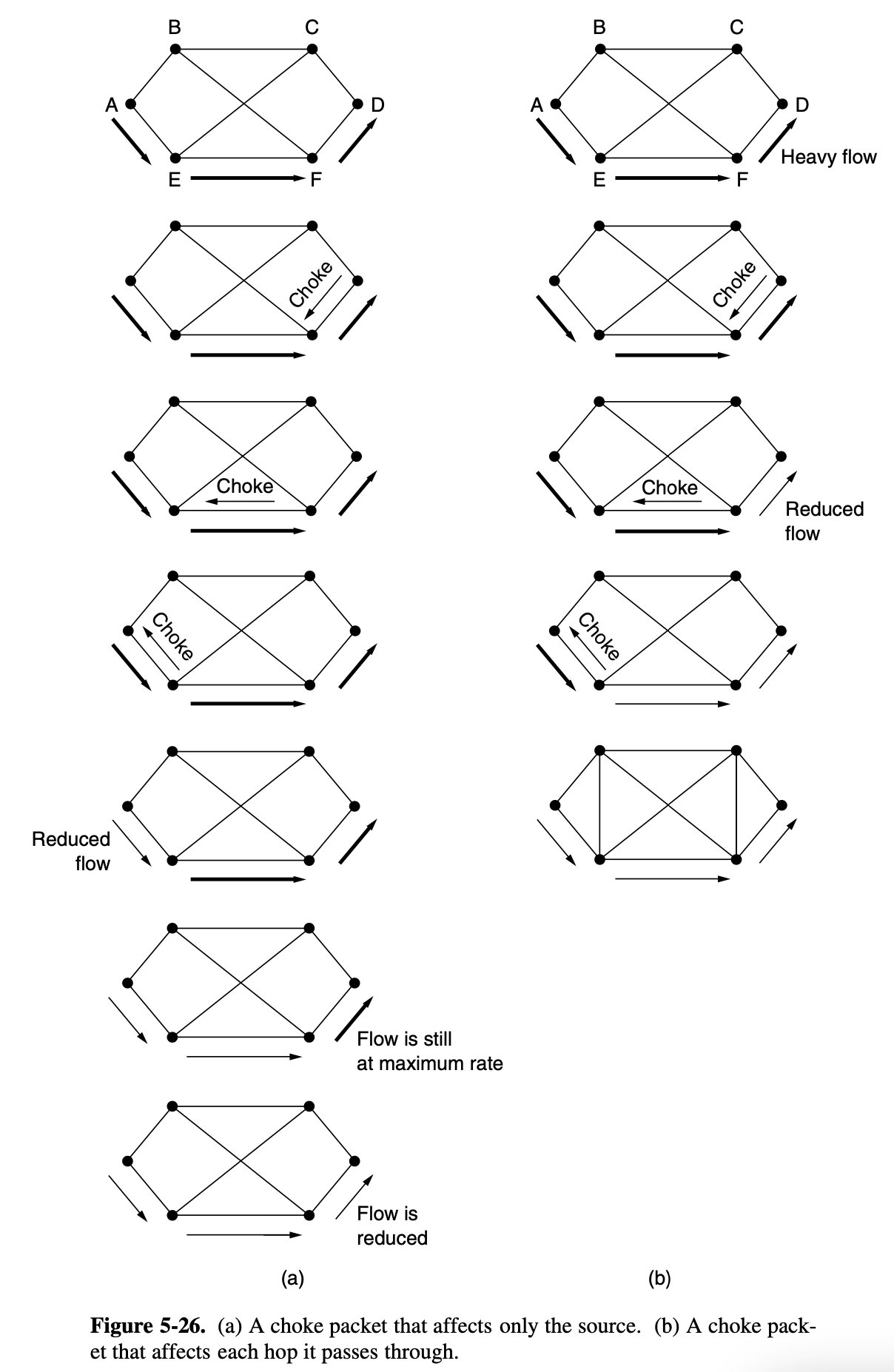

Choke Packets

- Th most direct way to notify a sender of congestion is to tell it directly

- To avoid increasing loadon the network during a time of congstion, the router may only send choke packets at a low rate

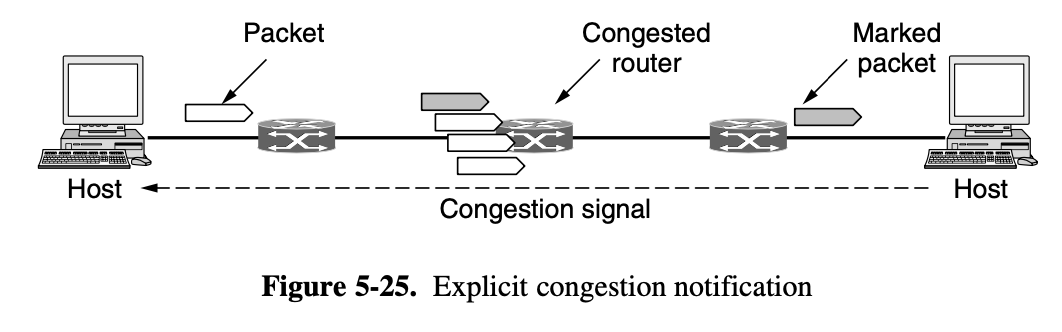

Explicit congestion Notification

- a router can tag any packet it forwards to signal that it is experiencing congestion

- When the network delivers the packet, the destination can note that there is congestion and infrom the sender when it sends a reply packet

Hop-by-Hop Backpressure

- the choke packet reaches E, which tells E to reduce the flow to F

- This action puts a greater demand on E's bufferes but give F immediate relief

5.3.5. Load Shedding

- router drowning in packets is which packets to drop

- The preferred choice may depend on the type of applications that use the network

ex) in file transfer, old packet is worth than new packet

but real time system, new packet is worth than old packet

- To implement an intelligent discard policy, applications must mark their packets to indicate to the network how important they are

- of course, unless there is some significant incentive to avoid marking every packet as VERY IMPORTANT - NEVER, EVER DISCARD

Random Early Detection

- Dealing with congestion when it first starts is more effective than letting it gum up the works and then trying to deal with it

- it is difficult to build a router that does not drop packets when it is overloaded

- TCP was designed for wired networks and wired networks are very reliable, so lost packets are mostly due to buffer overruns rather thatn transmission errors

- Wireless links must recover transmission erros at the link layer to work well with TCP

- By having routers drop packets early, before the situation has become hopeless, there is time for the source to take action before it is too late

- A popular algorithm for doing this is called RED (Random Early Detection)

- When the average queue length on some link exceed a threshold, the link is said to be congested and a small fraction of the packets are dropped at random

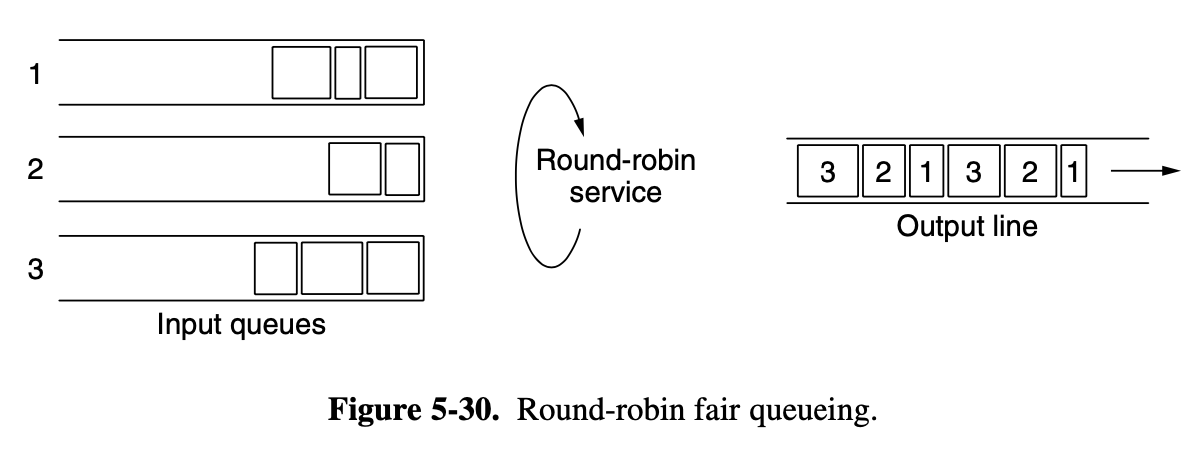

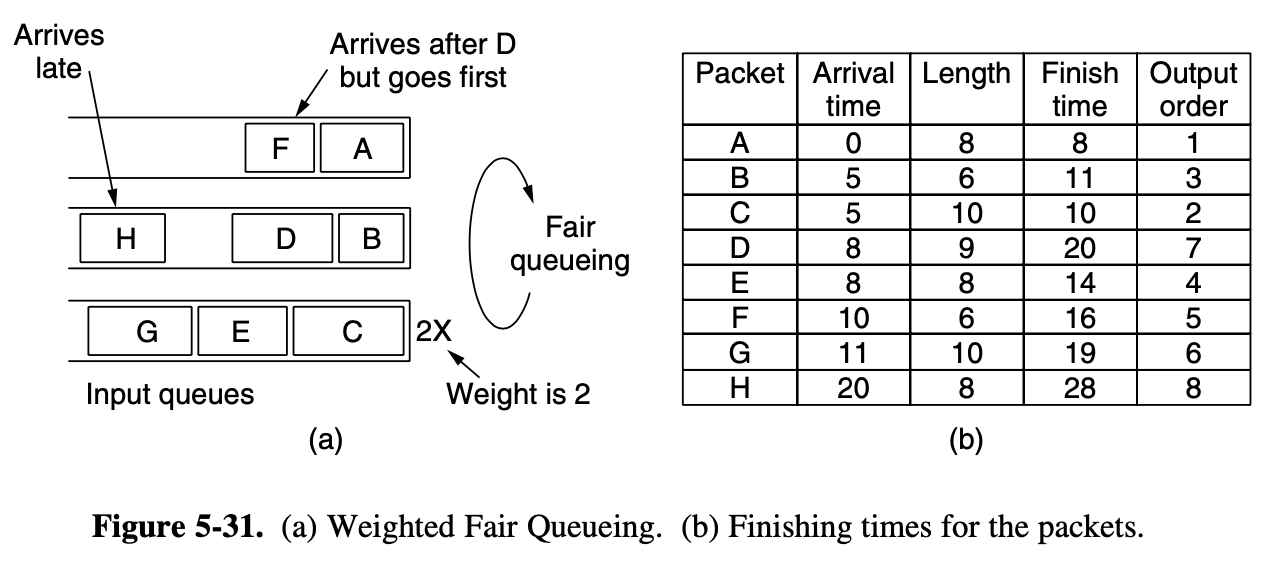

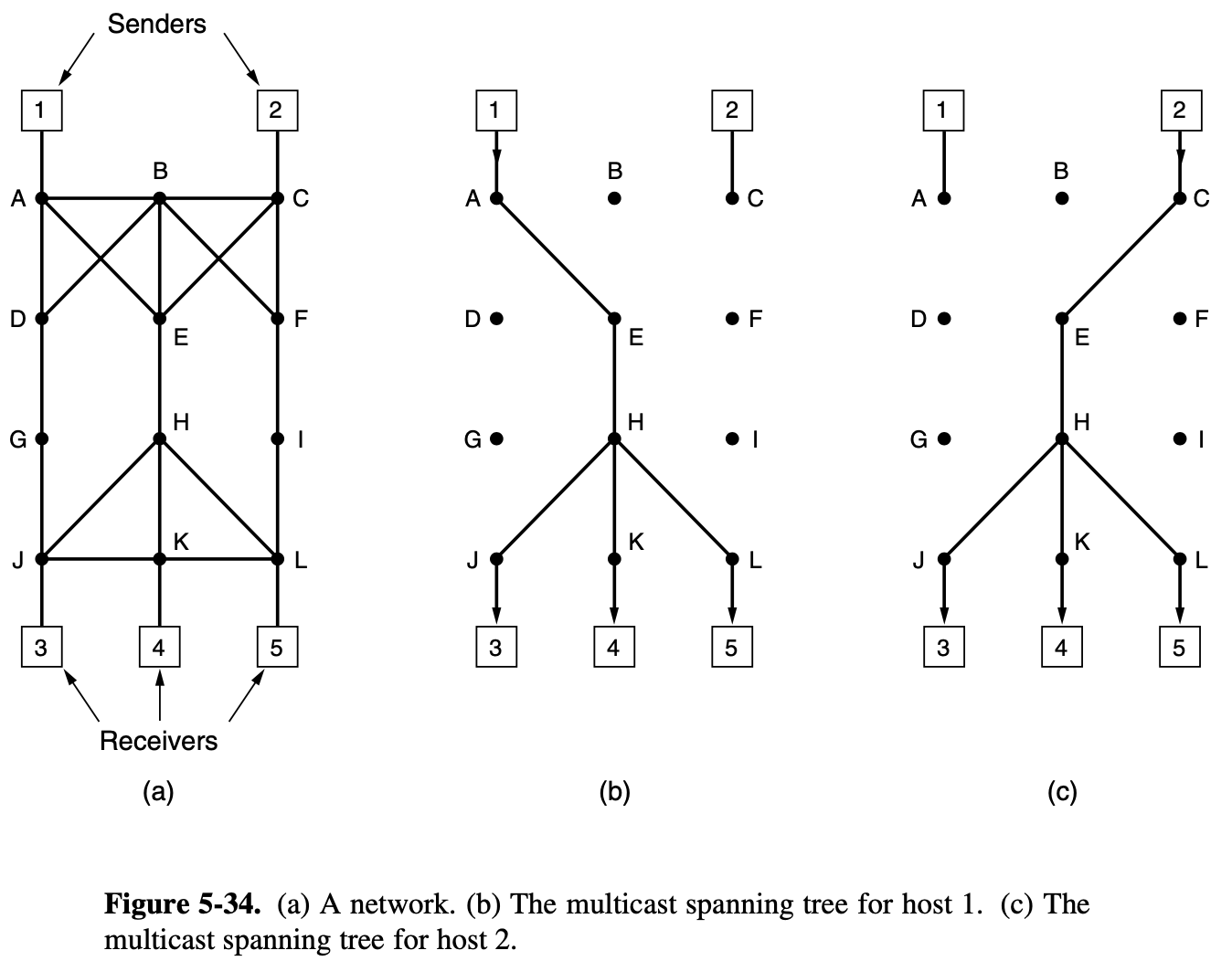

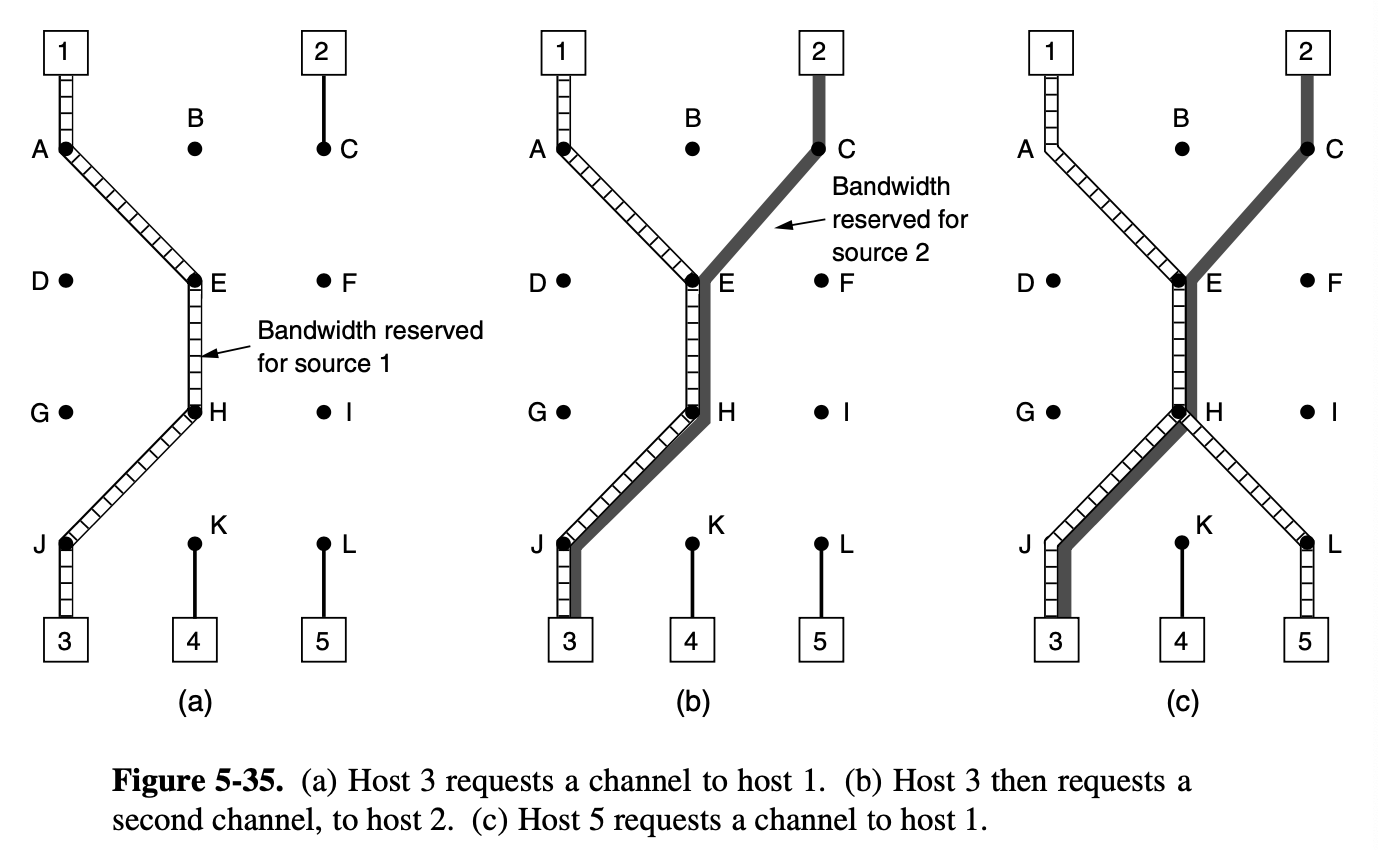

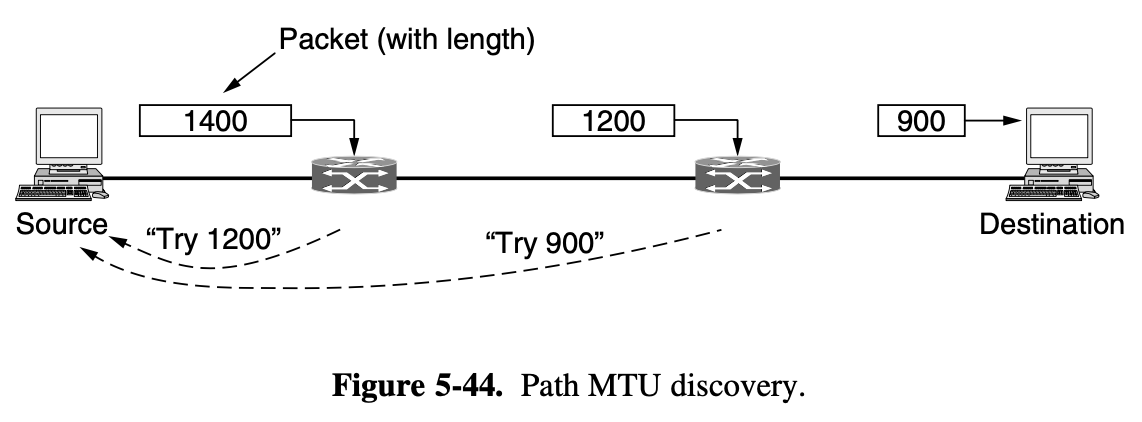

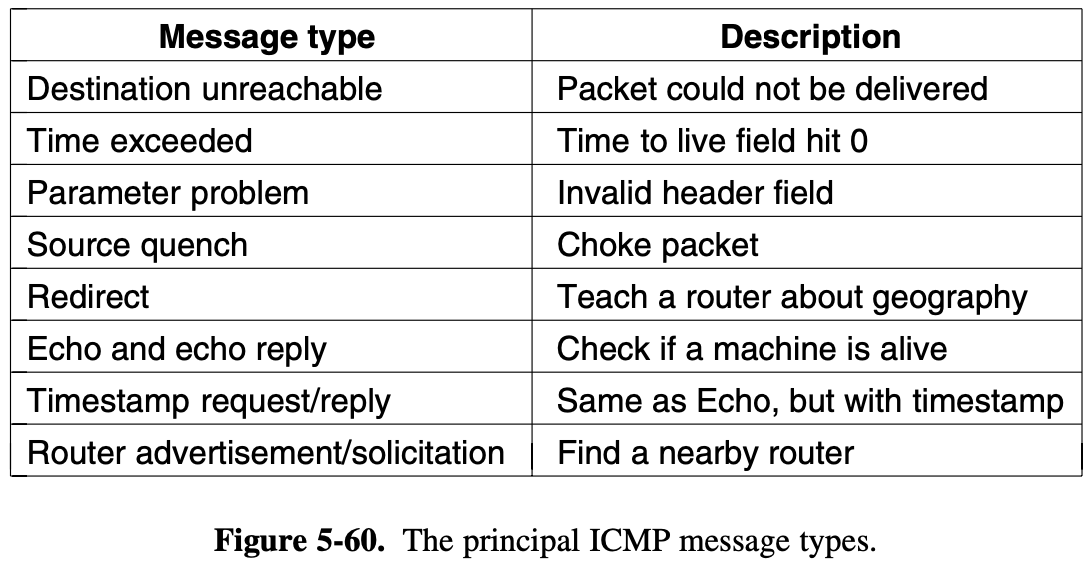

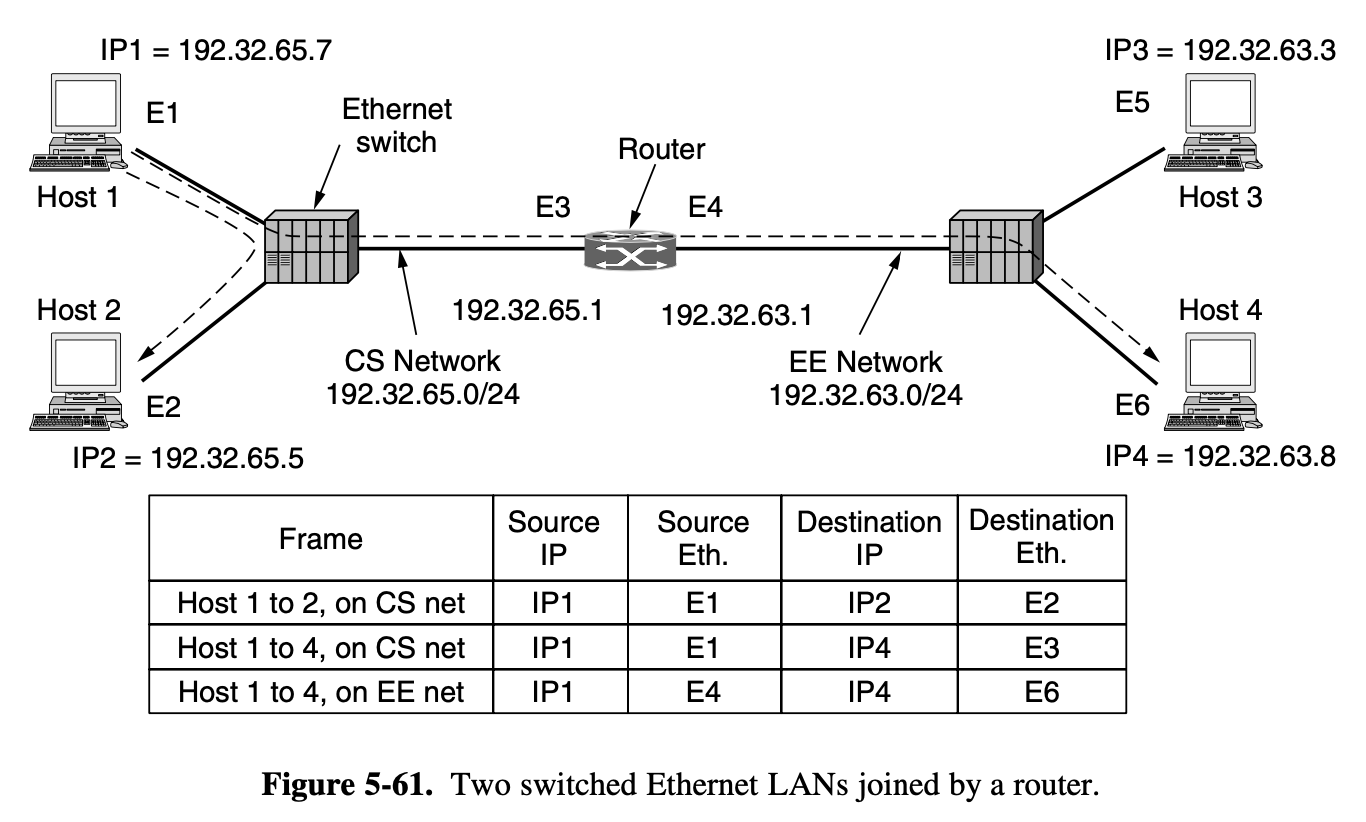

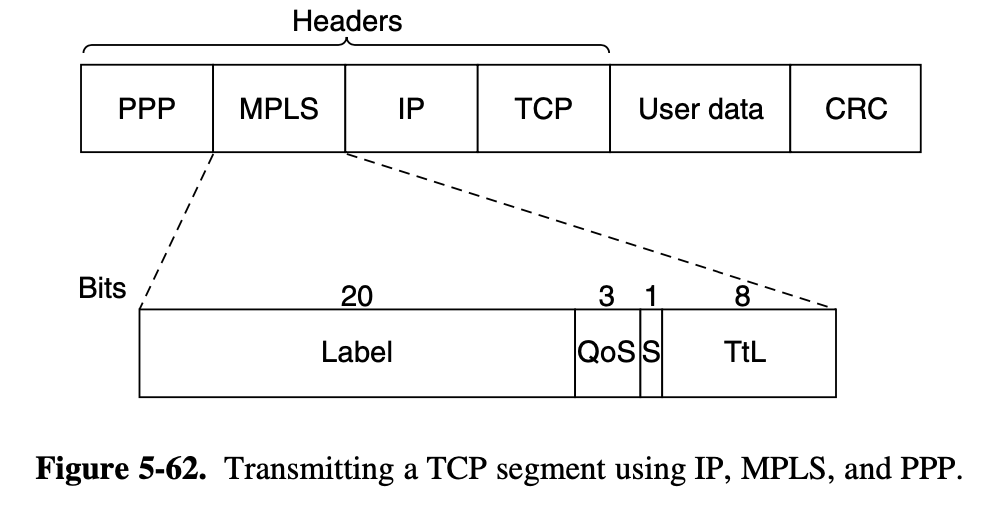

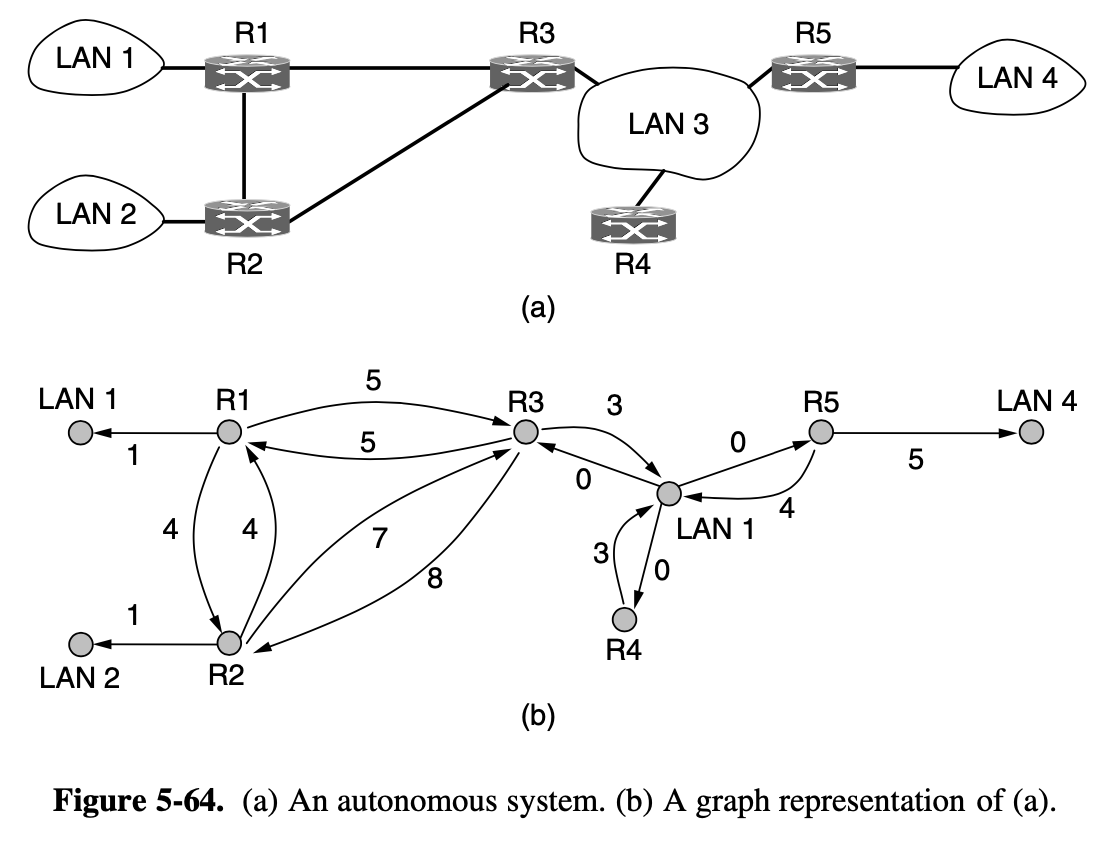

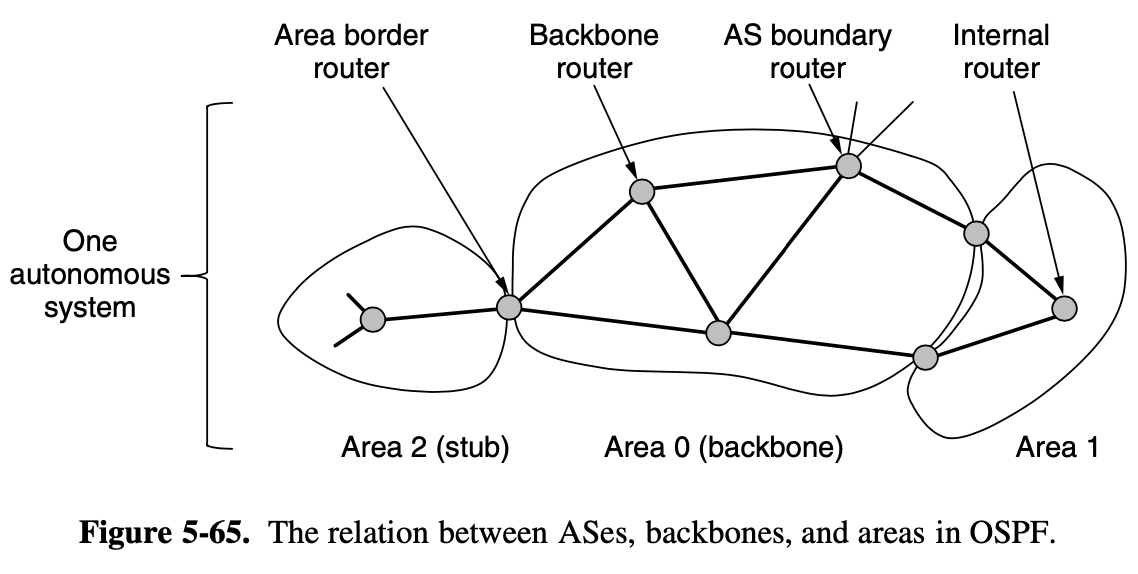

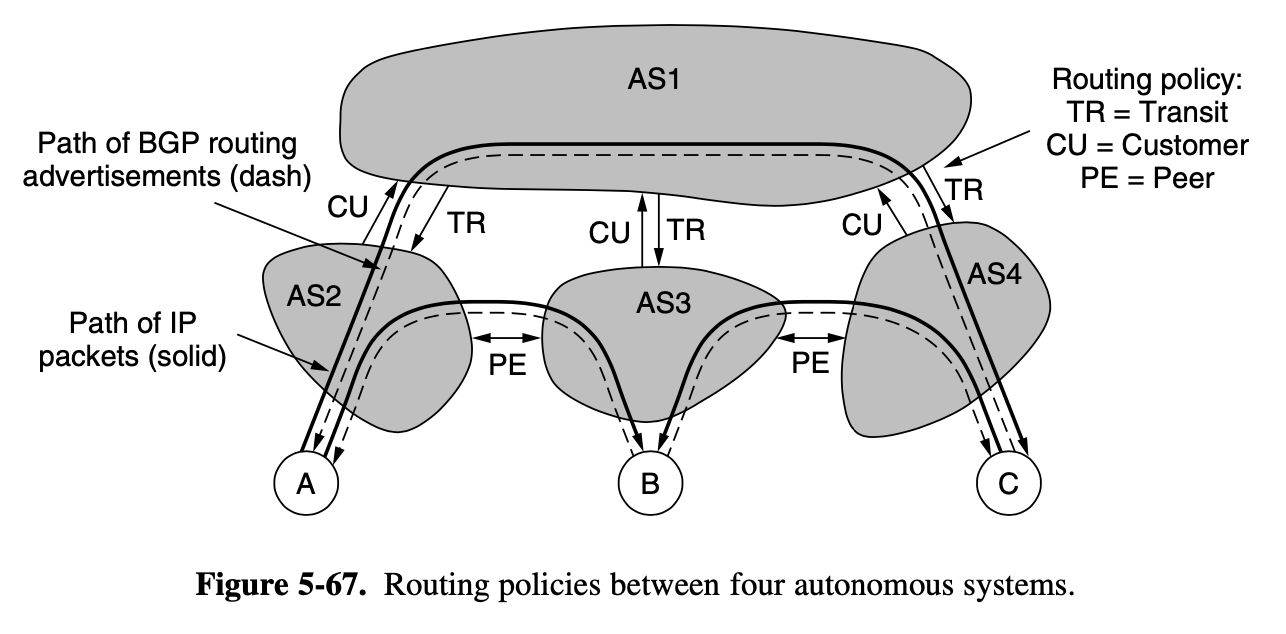

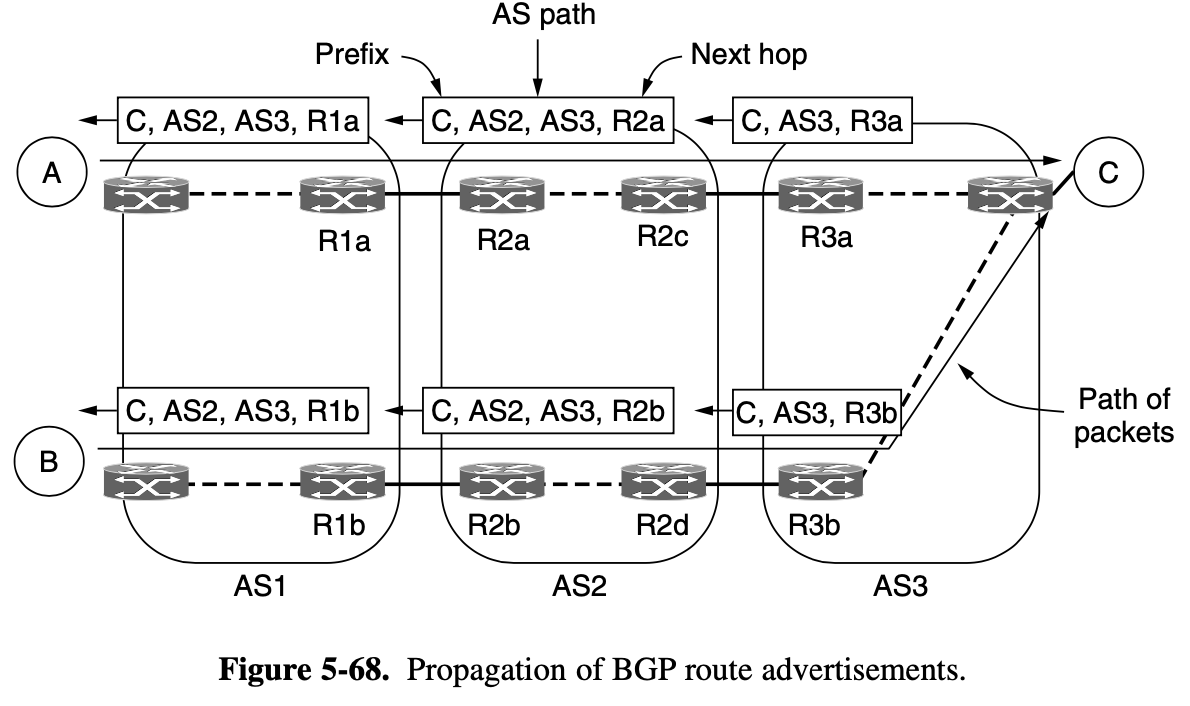

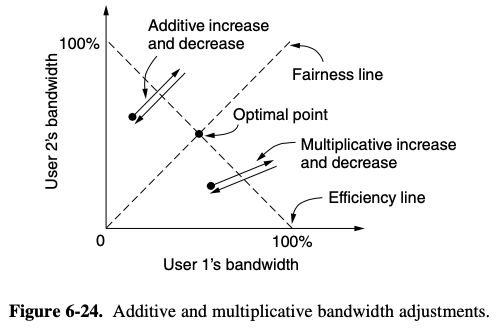

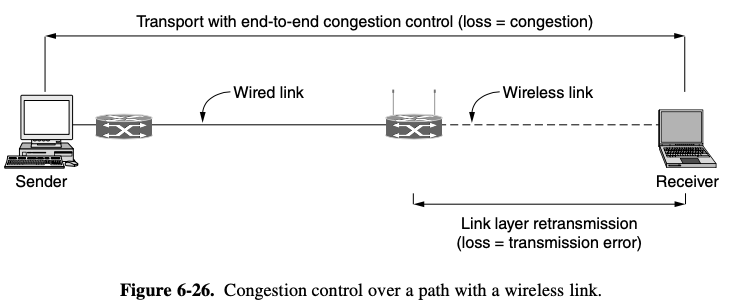

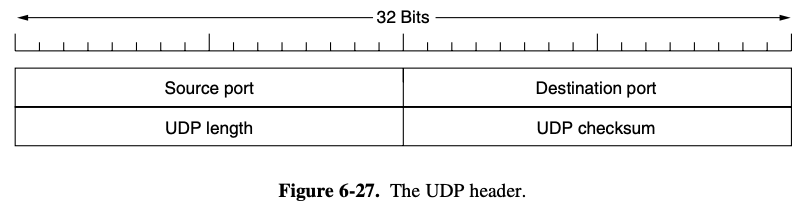

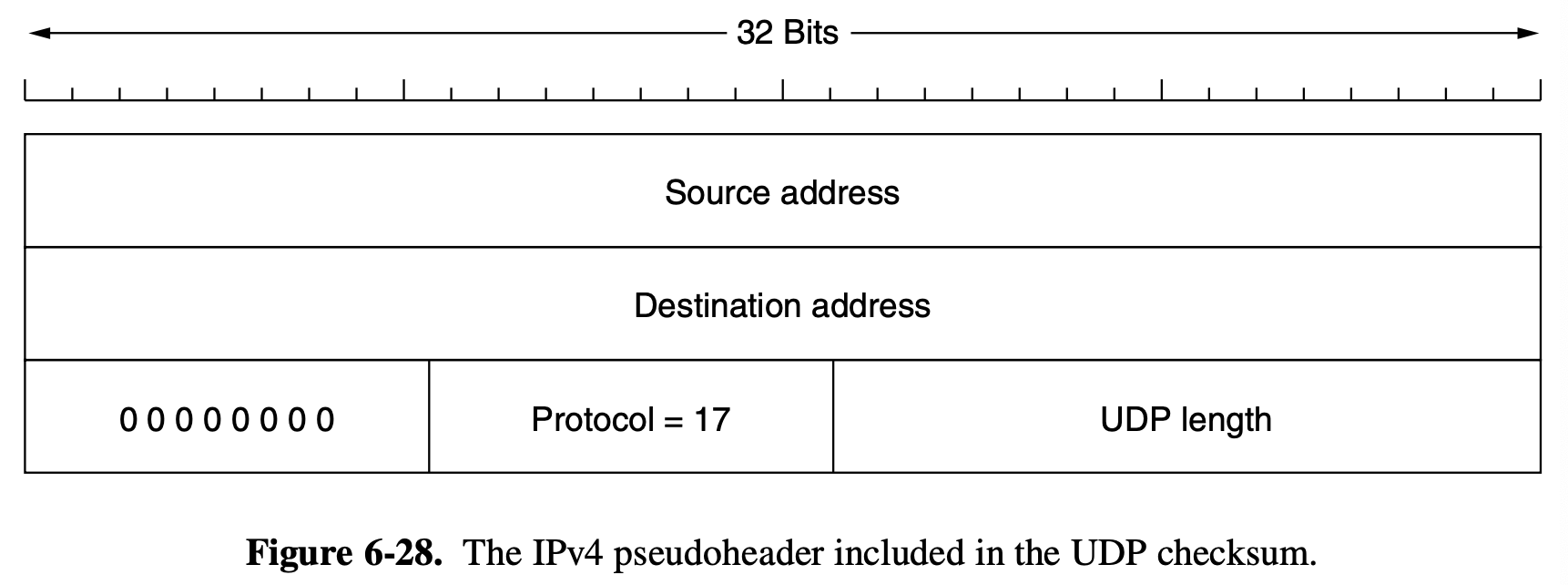

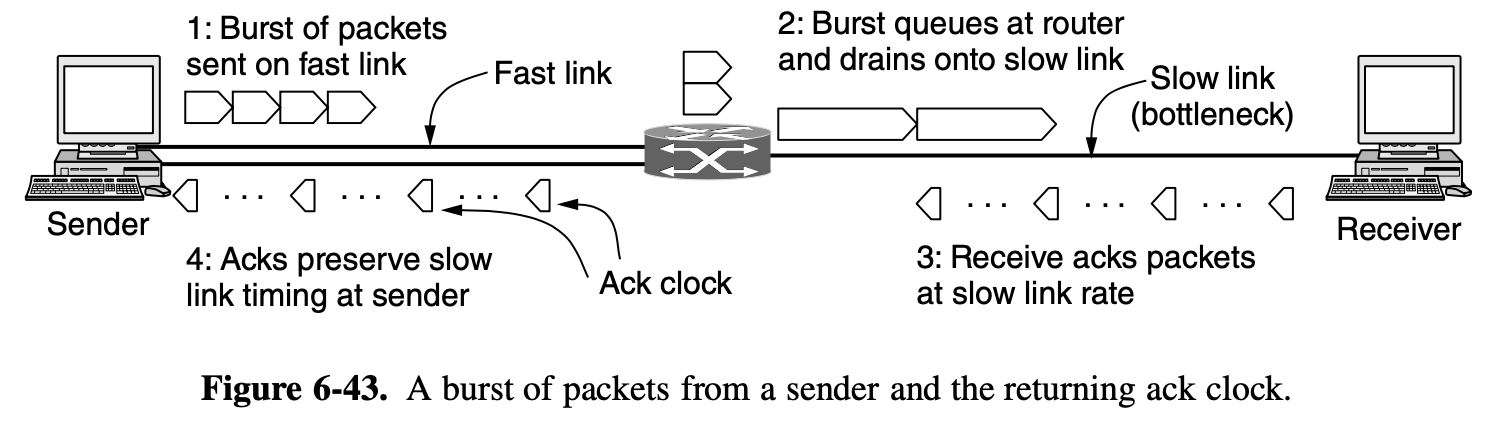

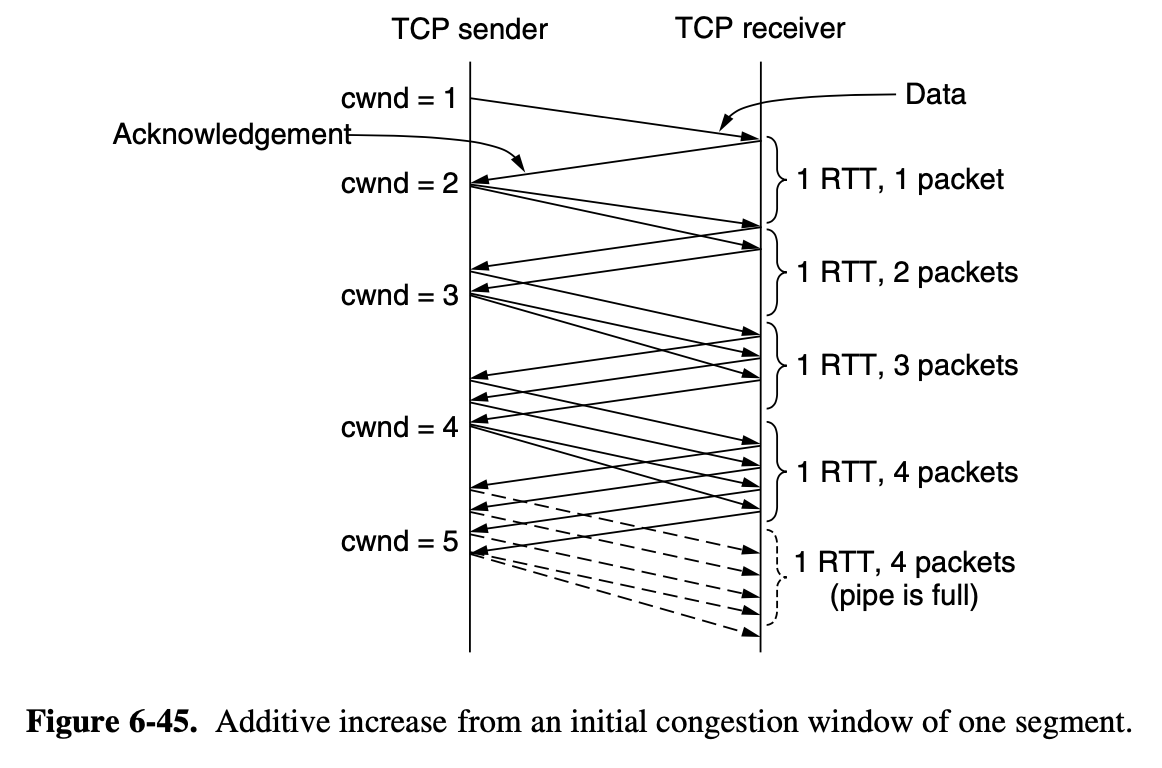

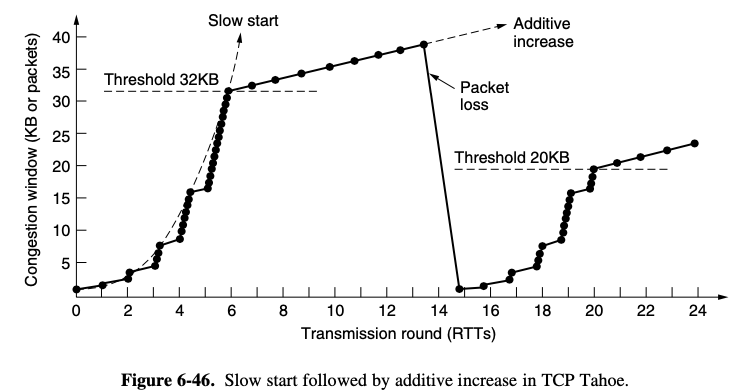

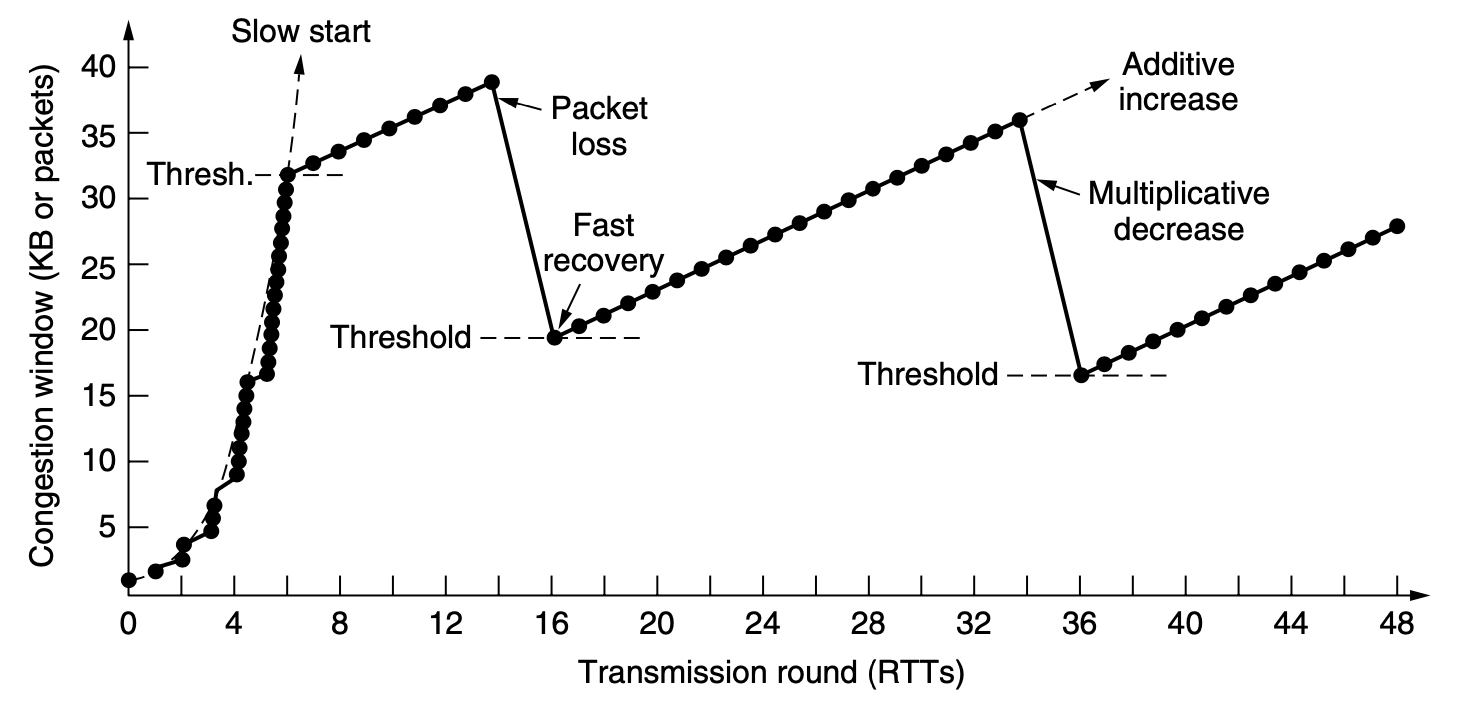

- The affected sender will notice the loss when there is no acknowledgement, and then the transport protocol will slow down