[ICML '17] Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks

- Problem addressed

Speeds up fine-tuning a trained model on a new task, so that fine-tuning on the new task does not require much new data. In other words, the method falls under few-shot learning (few-shot & rapid adaptation).

To borrow a line from the paper: "our artificial agents should be able to learn and adapt quickly from only a few examples, and continue to adapt as more data becomes available."

- Main contributions

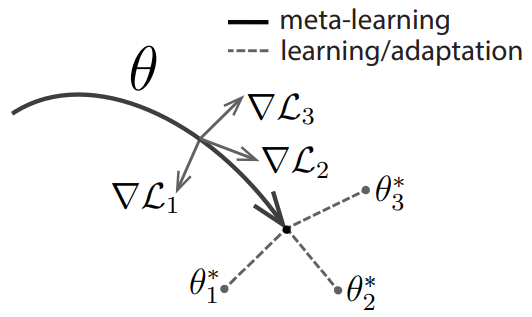

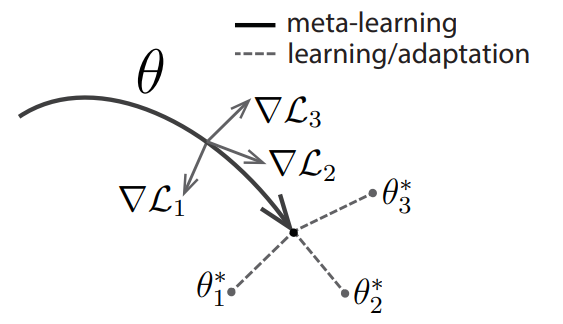

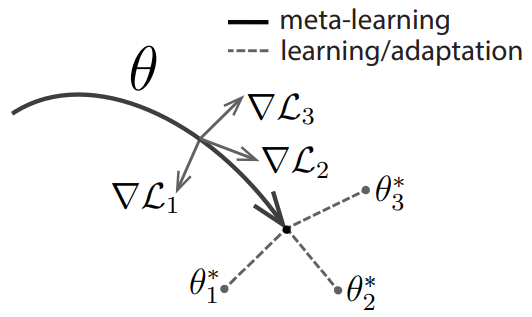

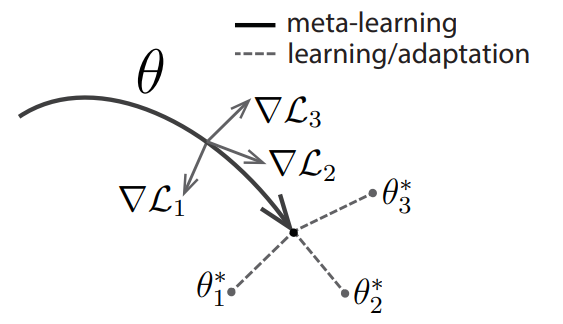

- Train the model's initial parameters such that the model has maximal performance on a new task after the parameters have been updated through one or more gradient steps computed with a small amount of data from the new task

- Maximize the sensitivity of new tasks' loss functions with respect to the parameters, so that small parameter changes produce large improvements in a new task's loss

- Model-agnostic & task-agnostic: compatible with any model trained with gradient descent, and applied to

  - Supervised regression

  - Supervised classification

  - Reinforcement learning (e.g., locomotion)

- Accelerates fine-tuning for policy gradient reinforcement learning with neural network policies

- A new task might involve achieving a new goal or succeeding on a previously trained goal in a new environment
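Written out for a single inner gradient step, the idea in the first two contribution bullets becomes the objective below (notation: model $f_\theta$, tasks $\mathcal{T}_i$ drawn from $p(\mathcal{T})$, inner step size $\alpha$, meta step size $\beta$).

$$
\theta_i' = \theta - \alpha \nabla_\theta \mathcal{L}_{\mathcal{T}_i}(f_\theta)
$$

$$
\min_\theta \sum_{\mathcal{T}_i \sim p(\mathcal{T})} \mathcal{L}_{\mathcal{T}_i}\big(f_{\theta_i'}\big)
= \sum_{\mathcal{T}_i \sim p(\mathcal{T})} \mathcal{L}_{\mathcal{T}_i}\big(f_{\theta - \alpha \nabla_\theta \mathcal{L}_{\mathcal{T}_i}(f_\theta)}\big)
$$

$$
\theta \leftarrow \theta - \beta \nabla_\theta \sum_{\mathcal{T}_i \sim p(\mathcal{T})} \mathcal{L}_{\mathcal{T}_i}\big(f_{\theta_i'}\big)
$$

Because $\theta_i'$ depends on $\theta$, the meta-gradient passes through the inner update and picks up a second-order term, $\nabla_\theta \mathcal{L}_{\mathcal{T}_i}(f_{\theta_i'}) = \big(I - \alpha \nabla^2_\theta \mathcal{L}_{\mathcal{T}_i}(f_\theta)\big)\nabla_{\theta'} \mathcal{L}_{\mathcal{T}_i}(f_{\theta_i'})$. One way to read the "sensitivity" bullet is that this term lets the meta-update shape how far one inner step moves each task's loss. In practice the inner step uses a few samples from each task and the outer loss is evaluated on fresh samples from the same task.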

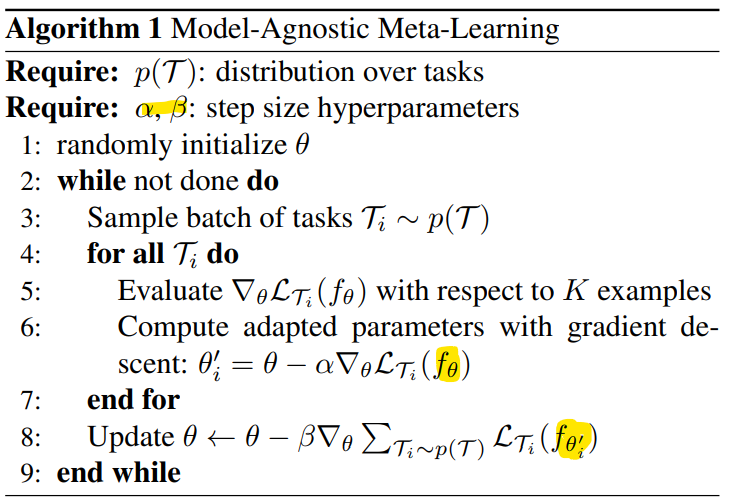

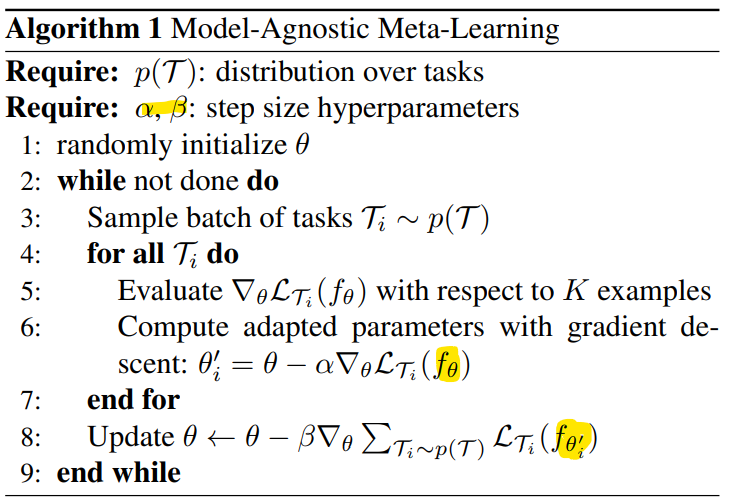

- MAML algorithm
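A minimal, stdlib-only sketch of the MAML inner/outer loop, assuming a hypothetical toy problem rather than anything from the paper or the official TensorFlow code: each task is a line $y = a x + b$, the model is $f_\theta(x) = w x + c$ with $\theta = (w, c)$, and the loss is MSE. With a linear model the Hessian is constant, so the exact second-order meta-gradient can be written by hand instead of using autodiff. All function names and hyperparameters are illustrative, and the meta-gradients are averaged over the task batch rather than summed (this only rescales $\beta$).

```python
import random

# Hypothetical toy setup (not from the paper): every task is a line y = a*x + b,
# the model is f_theta(x) = w*x + c with theta = (w, c), and the loss is MSE.


def sample_task(rng):
    """Draw task parameters (a, b); the ranges are arbitrary illustrative choices."""
    return rng.uniform(1.0, 3.0), rng.uniform(-1.0, 1.0)


def sample_points(rng, task, k):
    """Draw k (x, y) pairs from one task; returns (xs, ys)."""
    a, b = task
    xs = [rng.uniform(-1.0, 1.0) for _ in range(k)]
    return xs, [a * x + b for x in xs]


def loss_grad_hess(theta, xs, ys):
    """MSE loss, its gradient and its (theta-independent) Hessian for the linear model."""
    w, c = theta
    n = len(xs)
    res = [w * x + c - y for x, y in zip(xs, ys)]
    loss = sum(r * r for r in res) / n
    grad = (2.0 * sum(r * x for r, x in zip(res, xs)) / n, 2.0 * sum(res) / n)
    mx = sum(xs) / n
    mxx = sum(x * x for x in xs) / n
    hess = ((2.0 * mxx, 2.0 * mx), (2.0 * mx, 2.0))
    return loss, grad, hess


def adapt(theta, xs, ys, alpha):
    """Inner loop: one gradient step on the support set, theta' = theta - alpha * grad."""
    _, g, _ = loss_grad_hess(theta, xs, ys)
    return (theta[0] - alpha * g[0], theta[1] - alpha * g[1])


def meta_grad(theta, support, query, alpha):
    """Gradient of the query loss at theta' w.r.t. theta, keeping the second-order term:
    (I - alpha * H_support) @ grad L_query(theta'). H is symmetric, so no transpose is needed."""
    _, _, h = loss_grad_hess(theta, *support)
    q_loss, gq, _ = loss_grad_hess(adapt(theta, *support, alpha), *query)
    g0 = (1.0 - alpha * h[0][0]) * gq[0] - alpha * h[0][1] * gq[1]
    g1 = -alpha * h[1][0] * gq[0] + (1.0 - alpha * h[1][1]) * gq[1]
    return q_loss, (g0, g1)


def maml_train(steps=1000, meta_batch=5, k=10, alpha=0.1, beta=0.05, seed=0):
    """Outer loop: average meta-gradients over a batch of tasks, then step with beta."""
    rng = random.Random(seed)
    theta = (0.0, 0.0)
    for _ in range(steps):
        acc = [0.0, 0.0]
        for _ in range(meta_batch):
            task = sample_task(rng)
            support = sample_points(rng, task, k)  # used by the inner step
            query = sample_points(rng, task, k)    # fresh samples for the meta-loss
            _, g = meta_grad(theta, support, query, alpha)
            acc[0] += g[0]
            acc[1] += g[1]
        theta = (theta[0] - beta * acc[0] / meta_batch,
                 theta[1] - beta * acc[1] / meta_batch)
    return theta


def post_adaptation_loss(theta, n_tasks=50, k=10, alpha=0.1, seed=123):
    """Average query loss after one inner step, on a fixed set of held-out tasks."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_tasks):
        task = sample_task(rng)
        support = sample_points(rng, task, k)
        query = sample_points(rng, task, k)
        total += loss_grad_hess(adapt(theta, *support, alpha), *query)[0]
    return total / n_tasks


if __name__ == "__main__":
    theta = maml_train()
    print("meta-learned init:", theta)
    print("post-adaptation loss from (0, 0):", post_adaptation_loss((0.0, 0.0)))
    print("post-adaptation loss from MAML init:", post_adaptation_loss(theta))
```

Running it should show a lower post-adaptation loss from the meta-learned initialization than from $(0, 0)$. In this toy family the meta-objective is quadratic in $\theta$ and its minimizer is the mean task parameter $(2, 0)$, so the learned $\theta$ should land near there. With a neural network the Hessian is no longer constant, which is why real implementations rely on autodiff to differentiate through the inner update.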

- Code: official implementation available (TensorFlow)

github.com/cbfinn/maml

github.com/cbfinn/maml_rl