Unsupervised Learning and Ensembles: Key Code

Part 4. CART: Classification Tree

from sklearn.tree import DecisionTreeClassifier

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

X_train, X_test, y_train, y_test = train_test_split(
    X, y,
    test_size=0.2,
    stratify=y,
    random_state=1
)

dt = DecisionTreeClassifier(criterion='gini', random_state=1)

dt.fit(X_train, y_train)

y_pred = dt.predict(X_test)

print(accuracy_score(y_test, y_pred))
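
The snippet above assumes a feature matrix `X` and a label vector `y` are already loaded. A minimal self-contained sketch of the same steps follows, assuming scikit-learn's bundled breast cancer dataset as a stand-in. The dataset choice is an assumption for illustration and does not come from these notes.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Assumption: the breast cancer dataset stands in for the undefined X, y.
X, y = load_breast_cancer(return_X_y=True)

# Hold out 20% of the rows, keeping the class ratio the same in both splits.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=1
)

# Fit a classification tree that splits on Gini impurity.
dt = DecisionTreeClassifier(criterion='gini', random_state=1)
dt.fit(X_train, y_train)

acc = accuracy_score(y_test, dt.predict(X_test))
print(f'Test accuracy: {acc:.3f}')
```

The later parts reuse the names `X` and `y` in the same way, so any dataset loaded like this can be substituted there as well.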

Part 5. CART: Regression Tree

from sklearn.tree import DecisionTreeRegressor

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error as MSE

X_train, X_test, y_train, y_test = train_test_split(
    X, y,
    test_size=0.2,
    random_state=3
)

dt = DecisionTreeRegressor(max_depth=4, min_samples_leaf=0.1, random_state=3)

dt.fit(X_train, y_train)

y_pred = dt.predict(X_test)

rmse = MSE(y_test, y_pred) ** 0.5

print(rmse)

Part 6. Evaluating Model Generalization & Cross-Validation

from sklearn.tree import DecisionTreeRegressor

from sklearn.model_selection import train_test_split, cross_val_score

from sklearn.metrics import mean_squared_error as MSE

SEED = 123

X_train, X_test, y_train, y_test = train_test_split(
    X, y,
    test_size=0.3,
    random_state=SEED
)

dt = DecisionTreeRegressor(max_depth=4, min_samples_leaf=0.14, random_state=SEED)

mse_cv = -cross_val_score(
    dt, X_train, y_train,
    cv=10,
    scoring='neg_mean_squared_error',
    n_jobs=-1
)

dt.fit(X_train, y_train)

y_train_pred = dt.predict(X_train)

y_test_pred = dt.predict(X_test)

print('CV MSE:', mse_cv.mean())

print('Train MSE:', MSE(y_train, y_train_pred))

print('Test MSE:', MSE(y_test, y_test_pred))
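
One common way to read these numbers: a CV error far above the training error suggests high variance (overfitting), while CV and training errors that are both high and close together suggest high bias (underfitting). The sketch below illustrates the first pattern by comparing the constrained tree with a fully grown one. The synthetic data from `make_regression` and the helper `cv_and_train_mse` are assumptions introduced for illustration, not part of the original notes.

```python
from sklearn.datasets import make_regression
from sklearn.metrics import mean_squared_error as MSE
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.tree import DecisionTreeRegressor

SEED = 123

# Assumption: synthetic regression data stands in for the undefined X, y.
X, y = make_regression(n_samples=500, n_features=5, noise=20.0, random_state=SEED)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=SEED
)


def cv_and_train_mse(model):
    """Return (10-fold CV MSE, training MSE) for a regressor (hypothetical helper)."""
    cv_mse = -cross_val_score(
        model, X_train, y_train, cv=10, scoring='neg_mean_squared_error'
    ).mean()
    model.fit(X_train, y_train)
    train_mse = MSE(y_train, model.predict(X_train))
    return cv_mse, train_mse


constrained = DecisionTreeRegressor(max_depth=4, min_samples_leaf=0.14, random_state=SEED)
unconstrained = DecisionTreeRegressor(random_state=SEED)  # grows until leaves are pure

cv_c, train_c = cv_and_train_mse(constrained)
cv_u, train_u = cv_and_train_mse(unconstrained)

print(f'constrained:   CV MSE={cv_c:.1f}, train MSE={train_c:.1f}')
print(f'unconstrained: CV MSE={cv_u:.1f}, train MSE={train_u:.1f}')
```

A fully grown tree memorizes the training rows, so its training MSE collapses toward zero while its CV MSE stays large. That gap is the high-variance signature.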

Part 7. Bagging

from sklearn.ensemble import BaggingClassifier

from sklearn.tree import DecisionTreeClassifier

from sklearn.metrics import accuracy_score

from sklearn.model_selection import train_test_split

SEED = 1

X_train, X_test, y_train, y_test = train_test_split(
    X, y,
    test_size=0.3,
    stratify=y,
    random_state=SEED
)

dt = DecisionTreeClassifier(max_depth=4, min_samples_leaf=0.16, random_state=SEED)

bc = BaggingClassifier(
    estimator=dt,  # called base_estimator before scikit-learn 1.2
    n_estimators=300,
    n_jobs=-1
)

bc.fit(X_train, y_train)

y_pred = bc.predict(X_test)

print('Bagging Accuracy:', accuracy_score(y_test, y_pred))
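
The keyword that passes the base model to `BaggingClassifier` was renamed from `base_estimator` to `estimator` in scikit-learn 1.2, and the old name was removed in 1.4. Below is a hedged sketch that runs under either naming by inspecting the constructor signature. The synthetic data from `make_classification` and the variable `base_kw` are assumptions introduced for illustration.

```python
import inspect

from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

SEED = 1

# Assumption: synthetic data stands in for the undefined X, y.
X, y = make_classification(n_samples=600, n_features=10, random_state=SEED)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=SEED
)

dt = DecisionTreeClassifier(max_depth=4, min_samples_leaf=0.16, random_state=SEED)

# Pick whichever keyword this scikit-learn version accepts for the base model.
params = inspect.signature(BaggingClassifier.__init__).parameters
base_kw = 'estimator' if 'estimator' in params else 'base_estimator'

bc = BaggingClassifier(n_estimators=300, random_state=SEED, **{base_kw: dt})
bc.fit(X_train, y_train)

bag_acc = accuracy_score(y_test, bc.predict(X_test))
print('Keyword used:', base_kw)
print(f'Bagging accuracy: {bag_acc:.3f}')
```

The same rename applies to `AdaBoostClassifier`, so the check can be reused there if the code has to run on older installations.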

Part 8. AdaBoost

from sklearn.ensemble import AdaBoostClassifier

from sklearn.tree import DecisionTreeClassifier

from sklearn.metrics import roc_auc_score

from sklearn.model_selection import train_test_split

SEED = 1

X_train, X_test, y_train, y_test = train_test_split(
    X, y,
    test_size=0.3,
    stratify=y,
    random_state=SEED
)

dt = DecisionTreeClassifier(max_depth=1, random_state=SEED)

# The keyword was called base_estimator before scikit-learn 1.2.
adb_clf = AdaBoostClassifier(estimator=dt, n_estimators=100)

adb_clf.fit(X_train, y_train)

y_proba = adb_clf.predict_proba(X_test)[:, 1]

print('ROC AUC:', roc_auc_score(y_test, y_proba))

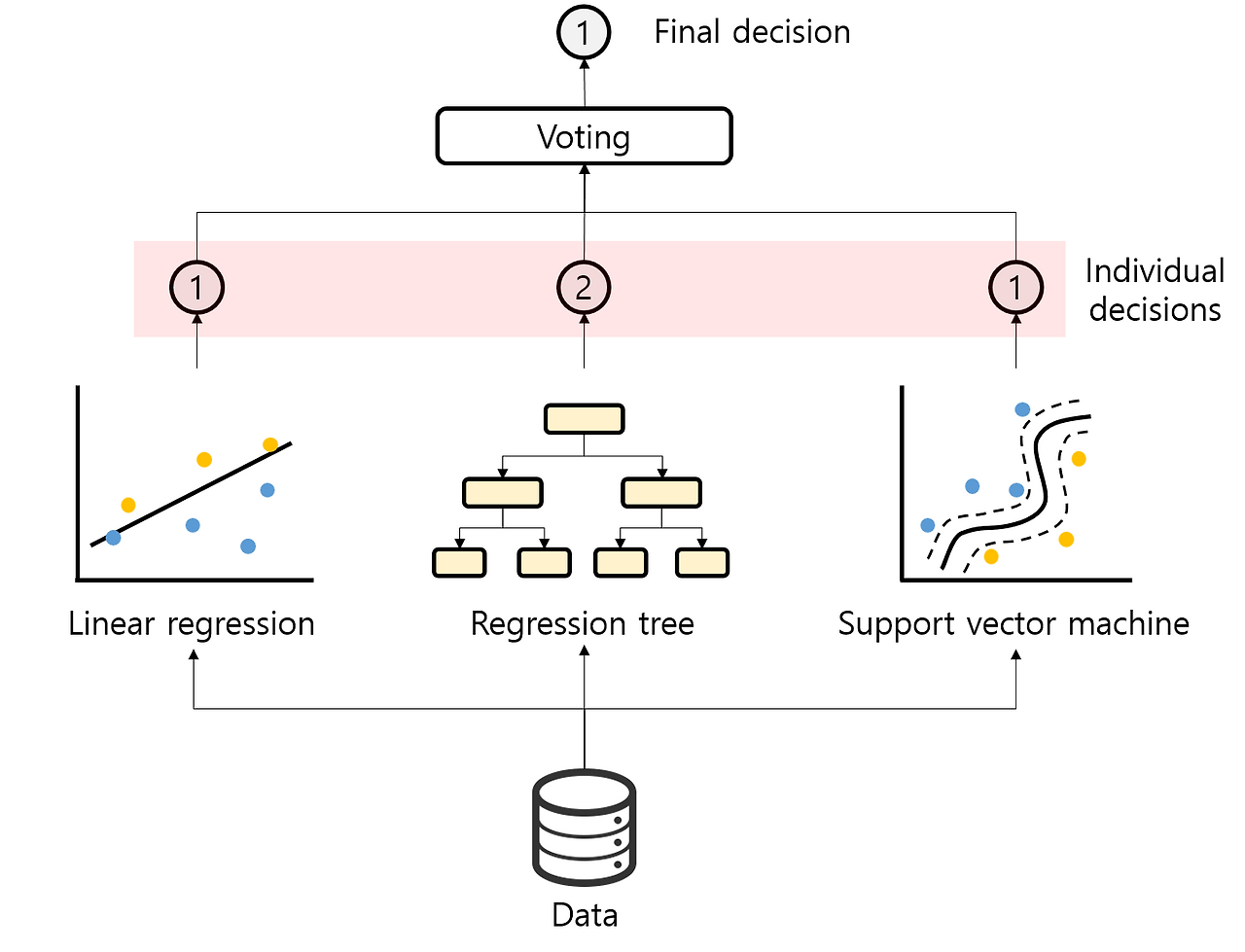

Part 9. Voting Classifier

from sklearn.metrics import accuracy_score

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LogisticRegression

from sklearn.neighbors import KNeighborsClassifier

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import VotingClassifier

SEED = 1

X_train, X_test, y_train, y_test = train_test_split(
    X, y,
    test_size=0.3,
    random_state=SEED
)

lr = LogisticRegression(random_state=SEED)

knn = KNeighborsClassifier()

dt = DecisionTreeClassifier(random_state=SEED)

voting_clf = VotingClassifier(
    estimators=[('lr', lr), ('knn', knn), ('dt', dt)],
    voting='hard'
)

voting_clf.fit(X_train, y_train)

y_pred = voting_clf.predict(X_test)

print(accuracy_score(y_test, y_pred))
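
To judge whether hard voting helps, it can be useful to score each base classifier on its own next to the ensemble. The following is a minimal sketch under assumptions: the synthetic data from `make_classification` and the `scores` dictionary are introduced for illustration only, and the ensemble is not guaranteed to beat every individual model.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

SEED = 1

# Assumption: synthetic data stands in for the undefined X, y.
X, y = make_classification(n_samples=600, n_features=10, random_state=SEED)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=SEED
)

classifiers = [
    ('lr', LogisticRegression(random_state=SEED)),
    ('knn', KNeighborsClassifier()),
    ('dt', DecisionTreeClassifier(random_state=SEED)),
]

# Score every base model individually on the held-out set.
scores = {}
for name, clf in classifiers:
    clf.fit(X_train, y_train)
    scores[name] = accuracy_score(y_test, clf.predict(X_test))

# VotingClassifier fits its own clones, so reusing the list is safe.
voting_clf = VotingClassifier(estimators=classifiers, voting='hard')
voting_clf.fit(X_train, y_train)
scores['voting'] = accuracy_score(y_test, voting_clf.predict(X_test))

for name, acc in scores.items():
    print(f'{name}: {acc:.3f}')
```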

Part 10. Random Forest Regressor

from sklearn.ensemble import RandomForestRegressor

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error as MSE

SEED = 1

X_train, X_test, y_train, y_test = train_test_split(

X, y,

test_size=0.3,

random_state=SEED

)

rf = RandomForestRegressor(
    n_estimators=400,
    min_samples_leaf=0.12,
    random_state=SEED
)

rf.fit(X_train, y_train)

y_pred = rf.predict(X_test)

rmse = MSE(y_test, y_pred) ** 0.5

print(f'Test set RMSE of rf: {rmse:.2f}')